TrueFoundry AI Gateway integration with LangSmith

Introduction

In this blog, we’ll walk through how to operationalize LLM systems by combining the TrueFoundry AI Gateway with LangChain’s LangSmith into a single, production-ready workflow. We’ll start by explaining the architecture: why routing all model and agent traffic through the gateway creates a clean execution boundary, and how LangSmith becomes the system of record for traces and evaluations. Then we’ll go step-by-step through the actual integration using OpenTelemetry, including what to configure in the AI Gateway, which LangSmith ingestion endpoints to use, and how authentication and project routing work.

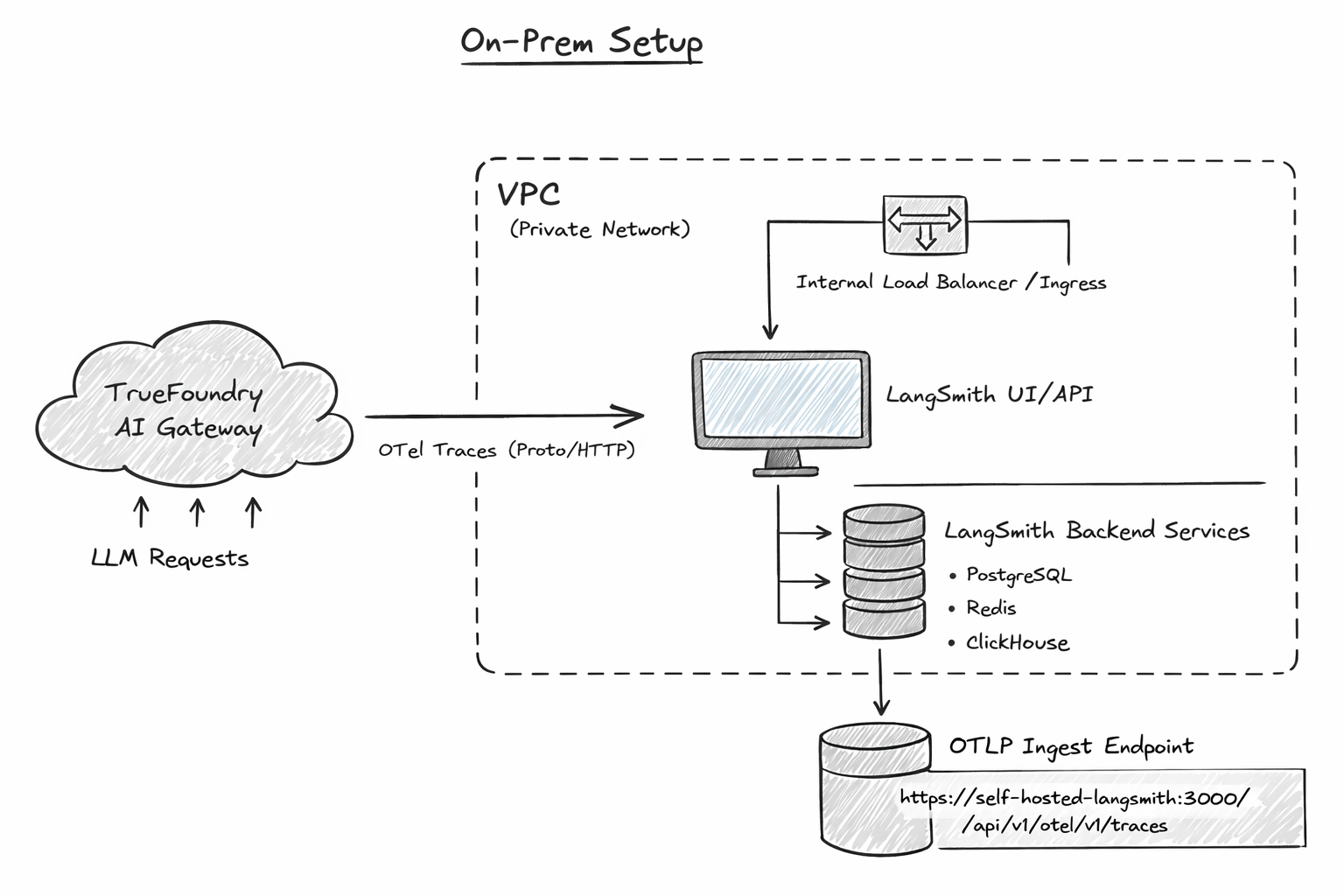

We’ll also cover the on-prem/VPC setup in detail: how to deploy self-hosted LangSmith, how to determine the correct OTLP ingestion URL in private networks, and how to validate end-to-end trace delivery from the gateway to LangSmith. By the end, you’ll have a clear blueprint for moving from “we have an LLM app” to “we can reliably operate, debug, and continuously evaluate it in production.”

The Missing Control Plane in AI Architectures

Modern AI systems are distributed. They use multiple model providers, multiple execution environments, and increasingly, multiple autonomous agents. Without a centralized execution layer, there is no natural place to enforce governance, apply policy, or capture consistent telemetry. Without observability built for LLMs, telemetry devolves into opaque logs that miss the very information engineers need to debug model behavior. Without continuous evaluation, quality becomes a subjective conversation rather than a measurable signal.

TrueFoundry’s AI Gateway addresses the first half of this problem by acting as a unified execution layer for all LLM traffic. LangSmith addresses the second half by providing observability and evaluation designed specifically for LLM systems. The integration between them is what turns these capabilities into a coherent control plane.

TrueFoundry AI Gateway

The TrueFoundry AI Gateway establishes a single, governed entry point for all model and agent requests. Applications and agents no longer talk directly to model providers. They talk to the gateway proxy. This architectural decision matters because it creates a consistent surface for policy enforcement, routing decisions, and telemetry generation. The gateway determines which model is used, under what constraints, in which environment, and with what safeguards. It also becomes the one place where production behavior can be observed comprehensively.

For platform leaders, this is the point where AI systems stop being a collection of Python scripts and start behaving like infrastructure.
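To make the "single entry point" idea concrete, here is a minimal Python sketch of what centralizing policy, routing, and telemetry in one component looks like. Everything in it (the `Gateway`, `Route`, and `TelemetryRecord` classes, the model names, the plain functions standing in for provider clients) is hypothetical and illustrative; it is not how TrueFoundry's gateway is implemented or configured.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical sketch: Gateway, Route, and TelemetryRecord are illustrative
# names, not TrueFoundry APIs. Providers are stubbed with plain functions.

@dataclass
class Route:
    provider: str
    call: Callable[[str], str]  # stand-in for a real provider client

@dataclass
class TelemetryRecord:
    model: str
    provider: str
    allowed: bool

@dataclass
class Gateway:
    routes: dict[str, Route]
    blocked_models: set[str] = field(default_factory=set)
    telemetry: list[TelemetryRecord] = field(default_factory=list)

    def handle(self, model: str, prompt: str) -> str:
        # Policy check happens once, here, before any provider is contacted.
        if model in self.blocked_models or model not in self.routes:
            self.telemetry.append(TelemetryRecord(model, "none", allowed=False))
            raise PermissionError(f"model {model!r} is not allowed")
        route = self.routes[model]
        # Every request, allowed or rejected, leaves a telemetry record.
        self.telemetry.append(TelemetryRecord(model, route.provider, allowed=True))
        return route.call(prompt)
```

Because applications only ever call `handle`, swapping a provider or tightening a policy becomes a change to one routing table rather than to every application, and the telemetry list is the natural thing to export.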

LangSmith

While the gateway governs where and how requests execute, LangSmith is where you reconstruct what actually happened, as structured trace data rather than scattered logs. In LangSmith’s terminology, a trace captures the end-to-end sequence of steps for a single request (from input to final output), and each step inside that trace is a run: a single unit of work such as an LLM call, a chain step, prompt formatting, or any other operation you want visibility into. Traces are organized into projects (a container for everything related to a given application or service), and multi-turn conversations can be linked as threads so you can inspect behavior across an entire dialogue rather than one isolated request. For a deeper dive, see LangSmith’s Observability concepts documentation.
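The relationships between these four concepts can be sketched as a small data model. This is a deliberately simplified, hypothetical illustration of how projects, traces, runs, and threads nest; the class and field names are assumptions for this sketch and are not the LangSmith SDK's schema.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical, simplified model of LangSmith's concepts; not the SDK schema.

@dataclass
class Run:
    name: str
    run_type: str  # e.g. "chain", "prompt", "llm"
    parent: Optional["Run"] = None  # runs nest inside a trace

@dataclass
class Trace:
    trace_id: str
    runs: list[Run] = field(default_factory=list)
    thread_id: Optional[str] = None  # links turns of one conversation

@dataclass
class Project:
    name: str
    traces: list[Trace] = field(default_factory=list)

    def thread(self, thread_id: str) -> list[Trace]:
        # Every trace belonging to one conversation, in insertion order.
        return [t for t in self.traces if t.thread_id == thread_id]

# One project, two conversation turns linked by the same thread id.
root = Run("answer_question", "chain")
first_turn = Trace("trace-1", [root, Run("format_prompt", "prompt", root), Run("chat_model", "llm", root)], thread_id="conv-42")
second_turn = Trace("trace-2", [Run("answer_question", "chain")], thread_id="conv-42")
project = Project("support-bot", [first_turn, second_turn])
```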

LangSmith also treats feedback as a first-class concept, letting you attach scores and criteria to runs, whether that feedback comes from humans, automated evaluators, or online evaluators running on production traffic. This is what makes it more than “monitoring”: it supports an evaluation loop where you run offline evaluations on curated datasets before shipping, and online evaluations on real user interactions in production to detect regressions and track quality in real time.
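The offline half of that loop boils down to: run the application over a curated dataset, score each output, attach the score as feedback, and aggregate. Below is a minimal sketch assuming a dataset of `(example_id, input, reference)` tuples and a simple exact-match evaluator. The names `Feedback`, `exact_match`, and `run_offline_eval` are hypothetical; this is not LangSmith's own evaluation API.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable

# Hypothetical sketch of an offline evaluation loop; not LangSmith's API.

@dataclass
class Feedback:
    example_id: str
    key: str
    score: float

def exact_match(output: str, reference: str) -> float:
    # Case- and whitespace-insensitive equality, scored 1.0 or 0.0.
    return 1.0 if output.strip().lower() == reference.strip().lower() else 0.0

def run_offline_eval(
    dataset: list[tuple[str, str, str]],
    target: Callable[[str], str],
    evaluator: Callable[[str, str], float],
    key: str = "exact_match",
) -> tuple[list[Feedback], float]:
    feedback = []
    for example_id, inputs, reference in dataset:
        output = target(inputs)
        feedback.append(Feedback(example_id, key, evaluator(output, reference)))
    # Aggregate score for the whole dataset (raises on an empty dataset).
    return feedback, mean(f.score for f in feedback)
```

For example, with `dataset = [("ex-1", "2+2", "4"), ("ex-2", "capital of France", "Paris")]` and a target that only knows arithmetic, the aggregate score is 0.5. Online evaluation applies the same scoring idea to sampled production traces instead of a fixed dataset.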

OpenTelemetry

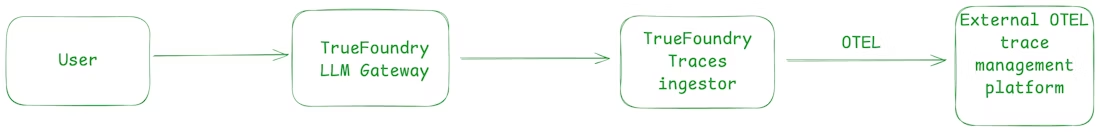

The TrueFoundry–LangSmith integration is built on OpenTelemetry. The AI Gateway exports traces using standard OpenTelemetry protocols, and LangSmith ingests those traces as an OpenTelemetry-compliant backend. This design choice avoids tight coupling: organizations can adopt LangSmith without changing how they deploy or route models. It also accommodates enterprise requirements that early-stage AI tooling often ignores, such as region-specific endpoints, self-hosted LangSmith deployments, and VPC-isolated environments.

Overview of the Integration

On the TrueFoundry side, you enable the AI Gateway’s OpenTelemetry traces exporter. The gateway still generates and stores traces that you can view in the TrueFoundry Monitor UI; exporting them is additive and doesn’t change TrueFoundry’s own storage behavior. See TrueFoundry’s OTEL export documentation for details.

On the LangSmith side, you provide an API key for authentication and, optionally, a project name so traces land in a predictable project rather than the default one. LangSmith’s OpenTelemetry guide in the LangChain docs documents the OTLP headers used for authentication and project routing.
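Both headers are plain key/value pairs. A minimal sketch of assembling them, plus formatting them for the standard `OTEL_EXPORTER_OTLP_HEADERS` environment variable (comma-separated `key=value` pairs with percent-encoded values) in case you also test with an OpenTelemetry SDK outside the gateway. The function names are hypothetical; the header names `x-api-key` and `Langsmith-Project` are the ones LangSmith documents.

```python
from typing import Optional
from urllib.parse import quote

def langsmith_otlp_headers(api_key: str, project: Optional[str] = None) -> dict[str, str]:
    """Hypothetical helper: the OTLP headers LangSmith uses for auth and project routing."""
    if not api_key:
        raise ValueError("a LangSmith API key is required")
    headers = {"x-api-key": api_key}
    if project:
        # Without this header, traces land in the "default" project.
        headers["Langsmith-Project"] = project
    return headers

def as_otel_env_value(headers: dict[str, str]) -> str:
    """Hypothetical helper: format headers for OTEL_EXPORTER_OTLP_HEADERS."""
    # Values are percent-encoded so spaces or commas cannot break the list.
    return ",".join(f"{k}={quote(v, safe='')}" for k, v in headers.items())
```

In the gateway, the same pairs go into the exporter's header fields directly; the environment-variable form only matters for SDK-based exporters.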

Integrating with managed LangSmith (SaaS)

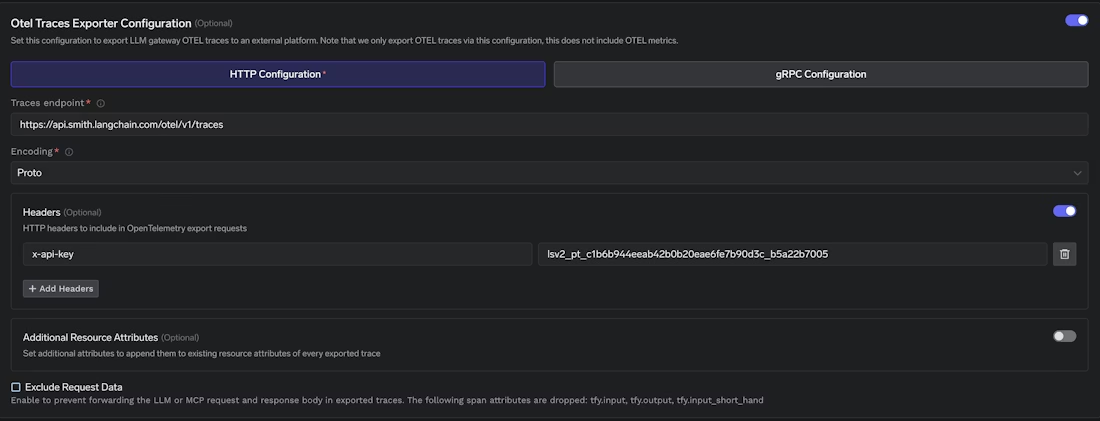

Start by generating a LangSmith API key from the LangSmith dashboard settings. TrueFoundry’s integration guide then has you configure the AI Gateway OTEL exporter from the TrueFoundry UI by going to the AI Gateway “Configs” section, opening “OTEL Config”, enabling “OTEL Traces Exporter Configuration”, choosing the HTTP exporter, and setting the endpoint and encoding.

For managed LangSmith, the traces ingestion endpoint is:

https://api.smith.langchain.com/otel/v1/traces

TrueFoundry’s doc calls out HTTP with Proto encoding for the exporter configuration, and it also notes that LangSmith uses a single OTEL ingestion endpoint and supports Proto or JSON encoding.
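Since the endpoint accepts JSON as well as Proto, you can smoke-test the LangSmith side on its own, independent of the gateway, by posting one hand-built OTLP/JSON span with the standard library. This is a minimal sketch under stated assumptions: the span and service names are arbitrary, and `build_otlp_json_payload`, `build_request`, and `send` are hypothetical helpers, not part of either product.

```python
import json
import os
import time
import urllib.request
from typing import Optional

# Sketch: send one hand-built span to LangSmith using OTLP/JSON, bypassing
# the gateway, to check the endpoint URL and API key in isolation.

def build_otlp_json_payload(span_name: str, service_name: str) -> dict:
    now_ns = time.time_ns()
    span = {
        # OTLP/JSON encodes trace and span IDs as hex strings, not base64.
        "traceId": os.urandom(16).hex(),
        "spanId": os.urandom(8).hex(),
        "name": span_name,
        "kind": 1,  # SPAN_KIND_INTERNAL
        # 64-bit integers are sent as strings, per the proto3 JSON mapping.
        "startTimeUnixNano": str(now_ns - 1_000_000),
        "endTimeUnixNano": str(now_ns),
    }
    resource = {"attributes": [{"key": "service.name", "value": {"stringValue": service_name}}]}
    scope_spans = [{"scope": {"name": "manual-smoke-test"}, "spans": [span]}]
    return {"resourceSpans": [{"resource": resource, "scopeSpans": scope_spans}]}

def build_request(endpoint: str, api_key: str, payload: dict,
                  project: Optional[str] = None) -> urllib.request.Request:
    headers = {"Content-Type": "application/json", "x-api-key": api_key}
    if project:
        headers["Langsmith-Project"] = project
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(endpoint, data=body, headers=headers, method="POST")

def send(request: urllib.request.Request, timeout: float = 10.0) -> int:
    # urlopen raises HTTPError on 4xx/5xx, e.g. if the API key is rejected.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

For example, `send(build_request("https://api.smith.langchain.com/otel/v1/traces", os.environ["LANGSMITH_API_KEY"], build_otlp_json_payload("gateway-smoke-test", "smoke-test")))`, where the `LANGSMITH_API_KEY` variable name is this sketch's assumption. A successful status means the endpoint accepted the request; confirm in the LangSmith UI that the span appears where you expect.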

Finally, add the authentication header in the exporter configuration. TrueFoundry documents the required header as x-api-key with your LangSmith API key value.

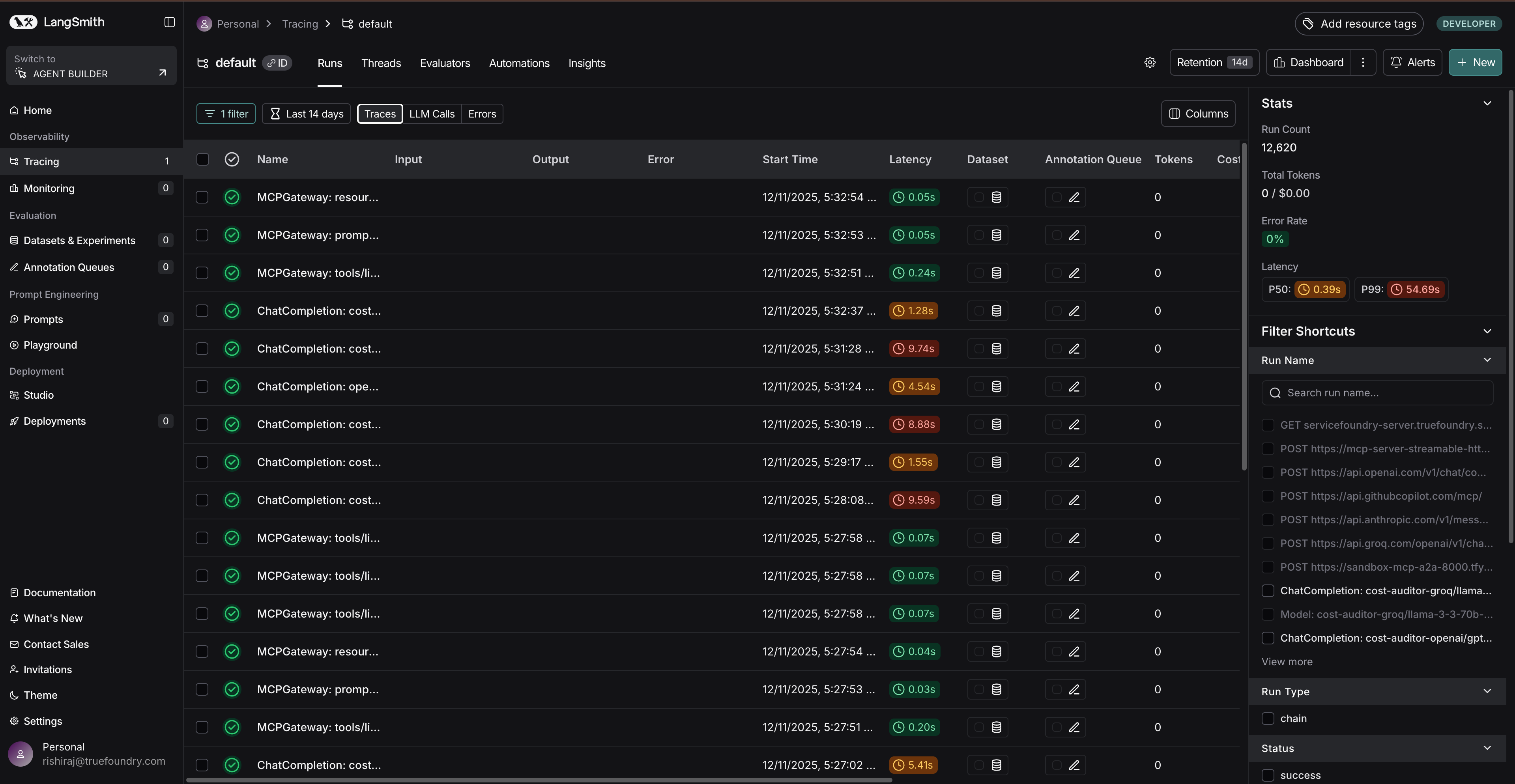

At this point, verification is intentionally boring: send a few requests through the AI Gateway, confirm traces are visible in the TrueFoundry Monitor section, and then confirm those traces appear in LangSmith under Projects. The screenshots above show the relevant configuration screens.

Self-hosting LangSmith in a VPC and exporting traces from the AI Gateway

Self-hosted LangSmith is designed for environments where traces and evaluation artifacts must remain inside controlled network boundaries. You will have to self-host the LangSmith UI/API plus backend services and datastores (PostgreSQL, Redis, ClickHouse, and optional blob storage).

If you’re deploying to Kubernetes, the official “Self-host LangSmith on Kubernetes” guide is Helm-based and is explicit about what you must provide upfront: a LangSmith license key, an API key salt, and (if using basic auth) a JWT secret. It also recommends using external managed Postgres, Redis, and ClickHouse for production rather than the in-cluster defaults, because trace volume can grow quickly. For more in-depth reading, I recommend going through LangSmith’s “Self-host on Kubernetes” docs.

A minimal Helm values file looks like the following:

```yaml
config:
  langsmithLicenseKey: "<your license key>"
  apiKeySalt: "<your api key salt>"
  authType: mixed
  basicAuth:
    enabled: true
    initialOrgAdminEmail: "admin@your-company.com"
    initialOrgAdminPassword: "a-strong-password-with-12+-chars"
    jwtSecret: "<your jwt secret>"
```
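The values file needs a few secrets you generate yourself (the license key, by contrast, comes from LangChain). A minimal sketch for producing the `apiKeySalt` and `jwtSecret` values with Python's `secrets` module, assuming 32 random bytes encoded as base64 (comparable to `openssl rand -base64 32`) is acceptable; check LangSmith's self-hosting docs for any stricter format requirements.

```python
import base64
import secrets

# Sketch: random values for the Helm apiKeySalt and jwtSecret fields.
# Assumption: 32 random bytes, base64-encoded, satisfies LangSmith's requirements.

def random_secret(num_bytes: int = 32) -> str:
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")

def helm_secret_values() -> dict[str, str]:
    # Generate once and store in a secret manager. The salt is used when
    # hashing API keys, so changing it later would likely invalidate them.
    return {"apiKeySalt": random_secret(), "jwtSecret": random_secret()}
```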

The Kubernetes guide also calls out an operational constraint that surprises many locked-down networks: LangSmith requires egress to https://beacon.langchain.com for license verification and usage reporting unless you are running in an offline mode. You will have to plan for this explicitly in VPC egress policy reviews.

Once deployed, you expose the LangSmith frontend service behind an internal load balancer or private ingress so the UI and APIs are reachable only from approved services. The docs strongly nudge you toward configuring DNS and SSL for encrypted trace submission.

Determining the correct OTLP ingestion URL for self-hosted LangSmith

This is the detail that tends to get muddled in “on-prem” setups: the OTLP endpoint path differs between managed and self-hosted because the self-hosted API is typically served under an /api/v1 prefix.

LangSmith’s “Trace with OpenTelemetry” guide (LangChain docs) states that for self-hosted LangSmith you should replace the base endpoint with your LangSmith API endpoint and append /api/v1, giving an example base OTEL endpoint like https://ai-company.com/api/v1/otel. It also notes that some OTLP exporters require you to append /v1/traces when sending traces only.

TrueFoundry’s AI Gateway exporter configuration expects the complete traces endpoint (as in the managed LangSmith example, .../otel/v1/traces).

Putting those together, the most common self-hosted ingestion URL you’ll configure in the AI Gateway is:

https://<your-langsmith-host>/api/v1/otel/v1/traces

Authentication is still done with an API key header. For project routing, LangSmith documents an optional Langsmith-Project header that you can include alongside x-api-key so traces land in a named project rather than “default”.

If your LangSmith is behind path-based routing

Some enterprises expose internal platforms under a shared hostname with path prefixes (for example, https://platform.company.com/langsmith/dev/...). LangChain’s support guidance for path-based routing shows how to set config.basePath/config.subdomain and two environment variables so URLs are generated correctly throughout the application when a basePath is used.

In that setup, your OTLP traces URL should include the base path prefix as well. Conceptually it becomes:

https://<hostname>/<basePath>/api/v1/otel/v1/traces

and the rest of the TrueFoundry AI Gateway configuration (HTTP exporter, Proto encoding, headers) remains unchanged.
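Because the managed, self-hosted, and path-prefixed cases differ only in what comes before `/otel/v1/traces`, the rule can be captured in a small helper. A minimal sketch, assuming the input is the base URL your LangSmith UI is served from (including any basePath) and that the `/api/v1` convention described above applies; `otlp_traces_url` is a hypothetical helper, not part of either product.

```python
from typing import Optional
from urllib.parse import urlsplit

# Managed endpoint, as given earlier in this article.
MANAGED_TRACES_URL = "https://api.smith.langchain.com/otel/v1/traces"

def otlp_traces_url(self_hosted_base: Optional[str] = None) -> str:
    """Return the full OTLP traces URL for the gateway exporter config.

    self_hosted_base is where LangSmith is served, including any basePath
    (e.g. "https://platform.company.com/langsmith/dev"); None means managed.
    """
    if self_hosted_base is None:
        return MANAGED_TRACES_URL
    parts = urlsplit(self_hosted_base)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"expected an absolute http(s) URL, got {self_hosted_base!r}")
    # Normalize slashes so "/langsmith/dev/" and "langsmith/dev" behave the same.
    prefix = parts.path.strip("/")
    prefix = f"/{prefix}" if prefix else ""
    return f"{parts.scheme}://{parts.netloc}{prefix}/api/v1/otel/v1/traces"
```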

Operational validation

After you wire the endpoint, validate in three places, because each one isolates a different failure domain.

- First, confirm the gateway is producing traces locally in TrueFoundry’s Monitor UI; this tells you your gateway-side telemetry is functioning.

- Second, confirm the gateway can reach LangSmith over the network by looking for successful export behavior; in locked-down VPCs, the most common failures are DNS resolution, missing private routing, or TLS trust chain issues when internal CAs are used.

- Third, confirm traces are appearing in the intended LangSmith project; if they’re landing in “default” unexpectedly, it’s usually because the project header wasn’t set, and LangSmith’s OTEL guide documents the exact header name to use.
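The second check is the easiest to get stuck on, because the visible symptom (traces missing in LangSmith) is the same whatever the cause. A minimal stdlib-only sketch that tests DNS, TCP, and TLS separately for the OTLP endpoint, so you can tell which failure domain you are in. Run it from a host or pod on the same network path as the gateway; the `diagnose` function, its stage names, and the `cafile` parameter for an internal CA bundle are this sketch's own conventions, not part of TrueFoundry or LangSmith.

```python
import socket
import ssl
from typing import Optional
from urllib.parse import urlsplit

def diagnose(url: str, timeout: float = 5.0, cafile: Optional[str] = None) -> tuple[str, str]:
    """Return (stage, detail); stage is "url", "dns", "tcp", "tls", or "ok"."""
    parts = urlsplit(url)
    host = parts.hostname
    if not host:
        return "url", f"no hostname in {url!r}"
    port = parts.port or (443 if parts.scheme == "https" else 80)
    # 1. DNS: failures here often mean a missing private zone or resolver config.
    try:
        infos = socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror as exc:
        return "dns", str(exc)
    address = infos[0][4]
    # 2. TCP: failures here often mean missing private routing or a firewall rule.
    try:
        sock = socket.create_connection((host, port), timeout=timeout)
    except OSError as exc:
        return "tcp", f"{address}: {exc}"
    with sock:
        if parts.scheme != "https":
            return "ok", "TCP connect succeeded; plain HTTP, so TLS was not checked"
        # 3. TLS: pass cafile when the endpoint uses an internal CA.
        context = ssl.create_default_context(cafile=cafile)
        try:
            with context.wrap_socket(sock, server_hostname=host) as tls:
                return "ok", f"TLS handshake succeeded ({tls.version()})"
        except OSError as exc:  # ssl.SSLError and handshake timeouts are OSErrors
            return "tls", str(exc)
```

For example, `diagnose("https://<your-langsmith-host>/api/v1/otel/v1/traces")` returns a stage of `"ok"` only when all three layers pass. It sends no telemetry and does not check the API key, so a passing result still leaves the first and third checks to confirm.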

Why this architecture holds up as you scale

The key design choice here is that the TrueFoundry AI Gateway exports traces using OpenTelemetry and LangSmith accepts OpenTelemetry traces directly. LangChain’s announcement of OTEL ingestion emphasizes that LangSmith can ingest OTEL traces from standard exporters, which is what makes the “gateway emits, LangSmith records” split work without custom integration code.

Conclusion

For AI leaders, the TrueFoundry–LangSmith integration provides a shared foundation where execution, observability, and evaluation stay aligned as systems scale. It lets teams manage LLM applications with the same rigor as other distributed services, meeting enterprise requirements without slowing development. Production AI needs production-grade infrastructure.

The partnership is intentionally composable: TrueFoundry governs and routes execution, LangSmith records and evaluates behavior, and OpenTelemetry connects them. Together, they function as a practical control plane that moves organizations from promising demos to dependable, accountable AI in production.
