In 2026, AI Gateways Will Need to Become a Board-Level Priority

By 2026, AI will be making decisions that directly affect customers, revenue, and whether businesses stay operational. As AI’s influence grows, responsibility for its success will move out of AI/ML teams and squarely into the hands of executive leadership and boards.

What’s driving this shift? As AI systems mature heading into 2026, early bets will start to show tangible results, and clear winners and losers will emerge in the AI race. For leadership, this means AI can no longer be relegated to experimental projects or isolated Centers of Excellence. Governing and scaling AI will become a top priority for the C-suite and boards, because it could mean the difference between survival and obsolescence.

Here, we explore why AI transformation now demands direct leadership oversight, why a governance layer becomes foundational rather than optional, and how an AI Gateway can give the C-suite and boards the observability they need to scale AI responsibly and reliably.

Ready or Not, AI Has Entered the Boardroom

Early AI projects enjoyed relative flexibility, operating at the margins of the enterprise. Many AI initiatives originated bottom-up, with data science and engineering teams identifying a specific function that AI could improve and running localized experiments. But AI cannot deliver real value as a surface-level add-on. You can’t put new paint on an old car and expect the engine to run faster. AI requires change at the process and infrastructure level.

Gartner’s report ‘Innovation Guide for Generative AI Engineering’ states that enterprises are moving past the experimentation phase and are now embedding GenAI into core business processes. As the report puts it, “Over the past two years, incumbent and new AI engineering vendors have raced to provide tooling and services to support GenAI pipelines beyond simplistic prompting of GenAI models.”

All of this means AI initiatives need to be set at the leadership level, in alignment with broader organization-wide objectives. Only then can AI initiatives receive the mandate, investment, and cross-functional coordination required to rewire infrastructure, redesign processes, and drive true transformation.

Why Now: From Assistive Tools to Autonomous Operations

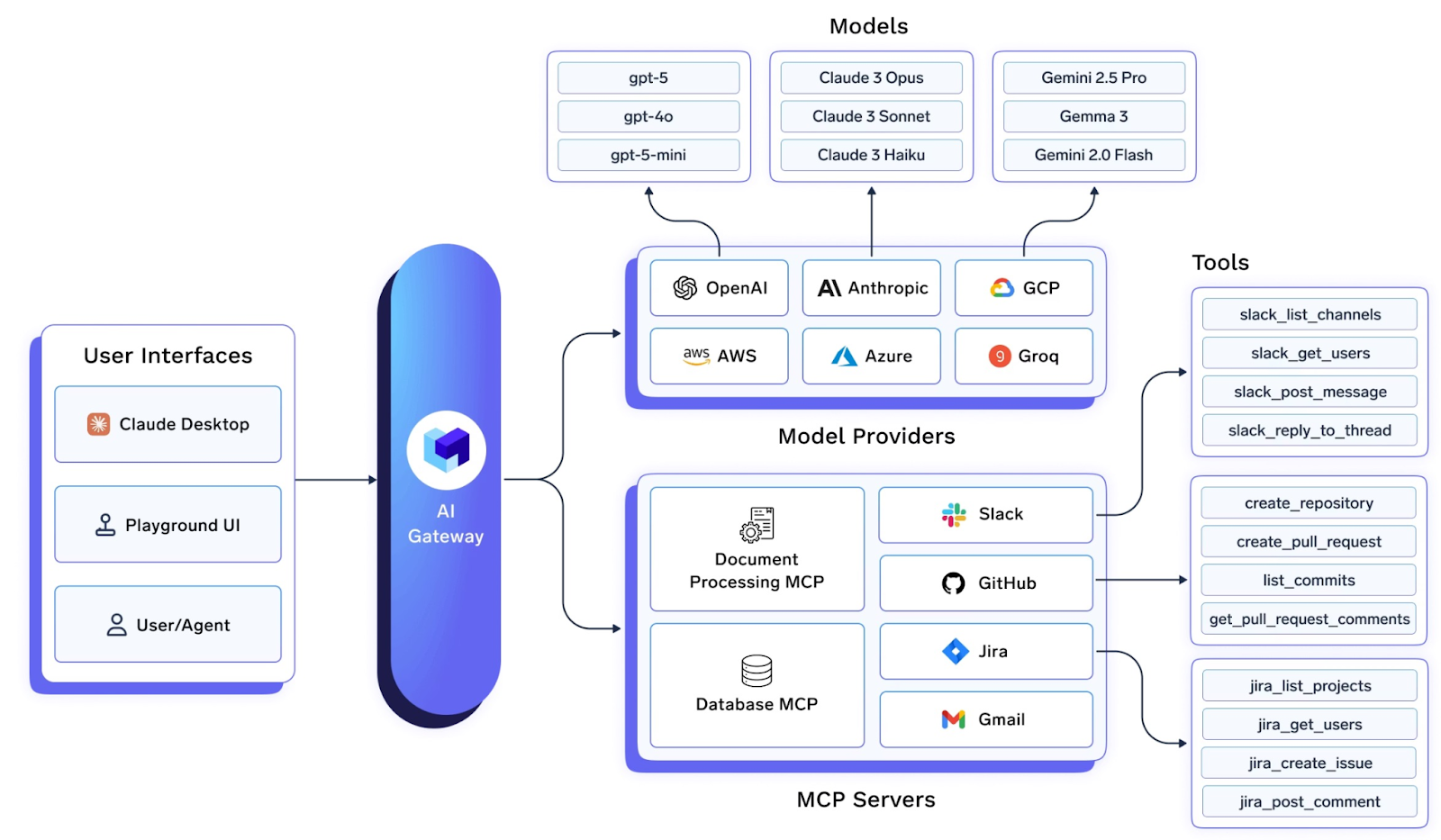

The rise of agentic AI in the enterprise is arguably one of the most transformative applications of GenAI to date. To work effectively in an enterprise setting, an agent ecosystem, along with the MCP servers and integrations that support it, must operate across data silos, team boundaries, and infrastructure layers.

However, the same autonomy that makes agentic AI so powerful also makes it a substantial risk to enterprises.

- Consider a financial services company using AI agents to assist with loan approvals or fraud detection. If those agents behave unpredictably, incur runaway processing costs, or make inconsistent decisions, the company faces not only compliance issues, but also direct financial loss and erosion of customer trust.

- Or take a consumer brand rolling out AI-powered customer engagement across digital channels. When those systems fail during peak demand, generate incorrect information, or escalate costs unexpectedly, executives are forced to explain not just a technical outage, but a breakdown in customer experience strategy.

These are not edge cases. They are becoming common as organizations move from AI tools to AI-driven operations. Yet many organizations are scaling AI without the same level of centralized control, financial discipline, and accountability that they apply to other mission-critical platforms.

That gap is where risk accumulates.

AI Investments Will Be Held to Measurable KPIs

AI requires substantial investment. We predict that AI costs will become the new cloud computing costs; some reports suggest GenAI is already driving cloud costs up by as much as 30%. Unlike traditional software, AI costs don’t follow clean, linear patterns, and with LLM-based systems they often rise in unexpected ways. A setup that costs roughly $40 a day to handle a few hundred user requests will not necessarily cost a proportional $4,000 a day to support tens of thousands; it can cost considerably more. As usage grows, prompts get longer, responses get richer, retries become more common, and agent workflows introduce additional background calls, all of which increase token usage. At the same time, constraints around compute, memory, and data movement can force teams onto higher-cost infrastructure sooner than planned.
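To make the non-linearity concrete, here is a back-of-the-envelope cost model. It is only a sketch: the per-token prices, token counts, agent calls per request, and retry rates are hypothetical placeholders, not measured values or any provider's actual pricing, and it ignores the infrastructure-tier effects described above. It simply multiplies request volume by model calls per request (agent steps), retries, and per-call token cost. The pilot numbers are chosen so the pilot lands near the $40 a day mentioned above.

```python
from dataclasses import dataclass

# Hypothetical prices in dollars per 1,000 tokens; not any provider's real rates.
PRICE_PER_1K_INPUT = 0.003
PRICE_PER_1K_OUTPUT = 0.015


@dataclass
class UsageProfile:
    requests_per_day: int
    prompt_tokens: int        # average input tokens per model call
    completion_tokens: int    # average output tokens per model call
    calls_per_request: int    # model calls per user request (agent steps, tool use)
    retry_rate: float         # fraction of calls that are retried once


def daily_cost(p: UsageProfile) -> float:
    """Estimated daily model spend in dollars for one usage profile."""
    calls = p.requests_per_day * p.calls_per_request * (1 + p.retry_rate)
    cost_per_call = (p.prompt_tokens / 1000) * PRICE_PER_1K_INPUT + (
        p.completion_tokens / 1000
    ) * PRICE_PER_1K_OUTPUT
    return calls * cost_per_call


# Illustrative pilot: a few hundred requests a day.
pilot = UsageProfile(requests_per_day=300, prompt_tokens=8_000, completion_tokens=1_200,
                     calls_per_request=3, retry_rate=0.05)
# Same product at 100x the traffic, with longer prompts, richer responses,
# more agent steps, and more retries (all assumed, not measured).
scaled = UsageProfile(requests_per_day=30_000, prompt_tokens=12_000, completion_tokens=2_000,
                      calls_per_request=5, retry_rate=0.10)

linear_projection = 100 * daily_cost(pilot)
print(f"pilot:             ${daily_cost(pilot):,.2f}/day")
print(f"linear projection: ${linear_projection:,.2f}/day")
print(f"scaled estimate:   ${daily_cost(scaled):,.2f}/day")
```

With these made-up inputs, the scaled estimate comes out well above the 100x linear projection, because every factor in the product grows at once. Which factor dominates in a real system depends entirely on the workload.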

As AI grows in scope and scale, organizations will need to maintain oversight of LLM costs to ensure that innovation doesn’t come at the cost of uncontrolled expenses. Boards will begin to hold leadership accountable for clear revenue, efficiency gains, and savings from AI transformation projects, making clear cost tracking critical.
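A first step toward that kind of cost tracking is attributing spend to the teams that generate it. The sketch below assumes that every model call produces a usage record (team, model, input and output tokens) and that per-model prices are known; the team names, model names, prices, and budgets are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class UsageRecord:
    team: str
    model: str
    input_tokens: int
    output_tokens: int


# Hypothetical prices in dollars per 1,000 tokens: (input, output). Not real provider rates.
PRICES: Dict[str, Tuple[float, float]] = {
    "model-a": (0.003, 0.015),
    "model-b": (0.0005, 0.0015),
}


def cost_of(record: UsageRecord) -> float:
    """Dollar cost of one call, priced by its model's input and output rates."""
    in_price, out_price = PRICES[record.model]
    return (record.input_tokens / 1000) * in_price + (record.output_tokens / 1000) * out_price


def spend_by_team(records: Iterable[UsageRecord]) -> Dict[str, float]:
    """Sum cost per team so spend can be compared with approved budgets."""
    totals: Dict[str, float] = defaultdict(float)
    for r in records:
        totals[r.team] += cost_of(r)
    return dict(totals)


def over_budget(totals: Dict[str, float], budgets: Dict[str, float]) -> List[str]:
    """Teams whose spend exceeds their budget; a team with no budget counts as 0."""
    return sorted(team for team, spent in totals.items() if spent > budgets.get(team, 0.0))


records = [
    UsageRecord("fraud-detection", "model-a", 10_000, 2_000),
    UsageRecord("support", "model-b", 40_000, 10_000),
    UsageRecord("fraud-detection", "model-b", 2_000, 0),
]
totals = spend_by_team(records)
print(totals)
print(over_budget(totals, {"fraud-detection": 0.05, "support": 0.10}))
```

The same records, aggregated by project or product line instead of team, would support the revenue and savings comparisons boards are likely to ask for.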

The AI Gateway is Now a Non-Negotiable Infrastructure Requirement

The easiest way to understand the criticality of an AI Gateway is to take a look at what happens in the absence of one.

In many enterprises, AI agents are built independently by different teams, each connecting directly to LLMs, tools, MCP servers, and internal data sources. What does this result in?

- Routing logic, access controls, retries, prompts, and guardrails are implemented inconsistently.

- The agentic stack is fragmented, with no central visibility.

- Costs are duplicated, security gaps emerge, and leadership lacks a clear view of how AI systems behave in production.

How does an AI Gateway solve for this?

An AI Gateway addresses this by acting as a central control plane for all AI traffic. It sits between agents and the components they rely on (models, tools or MCP servers, prompts, and guardrails) and governs how these components interact with each other. The gateway routes requests to the appropriate models or regions, enforces team-level access permissions, applies content and safety filters, and continuously monitors usage. This centralized layer provides resilience, reliability, and governance, allowing enterprises to scale AI with confidence.
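The control flow described above can be sketched in a few lines. This is a minimal illustration, not any particular product's implementation: the team names, model names, provider and region labels, and the card-number filter are all hypothetical, and instead of calling a real model the gateway just returns its decision. What it shows is the ordering: permission check, then content filter, then routing, with every request logged regardless of outcome.

```python
import re
import time
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical team-level permissions: which logical models each team may call.
TEAM_PERMISSIONS: Dict[str, Set[str]] = {
    "payments": {"model-a-eu"},
    "support": {"model-a-eu", "model-b-us"},
}

# Hypothetical routing table: logical model name -> (provider, region).
ROUTES: Dict[str, Tuple[str, str]] = {
    "model-a-eu": ("provider-x", "eu-west"),
    "model-b-us": ("provider-y", "us-east"),
}

# Deliberately crude content filter: 13 to 16 digits, optionally separated
# by spaces or hyphens, is treated as a possible payment card number.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,16}\b")


@dataclass
class Gateway:
    usage_log: List[dict] = field(default_factory=list)

    def handle(self, team: str, model: str, prompt: str) -> str:
        """Return the gateway's decision for one request and log it."""
        if model not in TEAM_PERMISSIONS.get(team, set()):
            decision = "denied: team not permitted to use this model"
        elif CARD_PATTERN.search(prompt):
            decision = "blocked: prompt matched content filter"
        else:
            provider, region = ROUTES[model]
            decision = f"routed to {provider} in {region}"
        # Every request is recorded, allowed or not, for monitoring and audit.
        self.usage_log.append(
            {"ts": time.time(), "team": team, "model": model, "decision": decision}
        )
        return decision


gateway = Gateway()
print(gateway.handle("payments", "model-b-us", "Summarize this dispute"))
print(gateway.handle("support", "model-b-us", "My card is 4111 1111 1111 1111"))
print(gateway.handle("support", "model-b-us", "Where is my order?"))
print(len(gateway.usage_log))
```

Because agents only ever talk to `handle`, changing a permission, a filter, or a route is a change in one place rather than in every team's codebase.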

This control becomes essential in production, especially for customer-facing systems.

Consider a natural-language booking interface where users issue complex requests that trigger multiple agent actions across pricing systems, booking APIs, and customer data. To operate reliably at scale, such systems must deliver accurate results, respond quickly, handle large volumes of traffic, protect sensitive information, and keep costs under control. The AI Gateway enables this by supporting rapid model and prompt iteration, automatic failover and load balancing across models and regions, rate and budget limits to prevent abuse, and end-to-end audit trails for issue resolution and regulatory review.
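Failover, load balancing, and budget limits can be combined in a single request path. The sketch below assumes hypothetical backends (each a model in a given region, represented by a plain Python callable standing in for a real provider client), a hypothetical per-team daily budget in dollars, and a caller-supplied cost estimate per request. It rotates the starting backend on each request (round-robin load balancing), moves on to the next backend when one raises an error (failover), and refuses requests that would push a team over its budget.

```python
import itertools
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterator, List


class BackendError(Exception):
    """Raised by a backend call that fails (timeout, 5xx, quota, and so on)."""


class BudgetExceeded(Exception):
    """Raised when a request would push a team past its budget."""


@dataclass
class Backend:
    name: str                   # hypothetical label, e.g. "model-a@eu-west"
    call: Callable[[str], str]  # stand-in for a real provider client


@dataclass
class ResilientRouter:
    backends: List[Backend]
    team_budgets: Dict[str, float]  # hypothetical daily budgets in dollars
    spent: Dict[str, float] = field(default_factory=dict)
    _counter: Iterator[int] = field(default_factory=itertools.count)

    def complete(self, team: str, prompt: str, est_cost: float) -> str:
        # Budget limit: refuse before any backend is called.
        if self.spent.get(team, 0.0) + est_cost > self.team_budgets.get(team, 0.0):
            raise BudgetExceeded(f"{team} would exceed its budget")
        # Load balancing: rotate the starting backend on every request.
        start = next(self._counter) % len(self.backends)
        order = self.backends[start:] + self.backends[:start]
        errors = []
        # Failover: on error, move on to the next backend in the rotation.
        for backend in order:
            try:
                result = backend.call(prompt)
            except BackendError as exc:
                errors.append(f"{backend.name}: {exc}")
                continue
            # Charges the estimate; a real gateway would reconcile actual token usage.
            self.spent[team] = self.spent.get(team, 0.0) + est_cost
            return result
        raise BackendError("all backends failed: " + "; ".join(errors))


def healthy(prompt: str) -> str:
    return f"answer to: {prompt}"


def unavailable(prompt: str) -> str:
    raise BackendError("timeout")


router = ResilientRouter(
    backends=[Backend("model-a@eu-west", unavailable), Backend("model-a@us-east", healthy)],
    team_budgets={"support": 1.00},
)
print(router.complete("support", "reset my password", est_cost=0.40))  # fails over to us-east
print(router.complete("support", "track my parcel", est_cost=0.40))
```

A third $0.40 request from the same team would raise `BudgetExceeded`, since it would take spend to $1.20 against a $1.00 budget. Production gateways typically add health checks, weighted routing, and per-minute rate limits on top of this basic shape.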

Industry adoption is already moving in this direction. According to a Gartner report, by 2028, 70% of software engineering teams building multimodal applications will use AI Gateways to improve reliability and optimize costs. Gartner also predicts that, even sooner, by 2027, 40% of enterprises will have deployed two or more AI Gateways to control and monitor heterogeneous multi-agent systems (MAS).

Why Boards Should View the AI Gateway as Strategic Infrastructure

AI Gateways have risen in importance over the last year, but most discussions about them have remained within the scope of engineering. While AI and ML practitioners are the primary users and beneficiaries of an AI Gateway, its importance extends well beyond the technical organization. Boards cannot afford to treat AI Gateways as purely an engineering concern. AI innovation has moved to the top of board agendas, but innovation cannot be separated from reliability: the two are ultimately two sides of the same coin.

Beyond their technical value, AI Gateways provide strategic capabilities that are directly relevant to board-level priorities.

- Faster technological innovation

To bring AI applications to market faster, teams need the freedom to experiment, iterate, and make decentralized decisions. At the same time, enterprises must ensure that this innovation aligns with organizational policies and risk tolerance. An AI Gateway enables this balance by allowing teams to deploy and operate AI systems independently, while maintaining centralized oversight of how those systems access models, data, and tools. We think of this as federated execution with centralized control.

- Enforceable compliance guardrails

AI systems increasingly handle personal data, financial information, and customer interactions. Without enforced controls, this creates direct exposure to privacy violations, regulatory penalties, and legal claims. An AI Gateway enforces data access rules, privacy protections, and usage policies at runtime, reducing the risk that AI systems operate outside approved regulatory or legal boundaries.

- Financial controls and transparency

AI usage can grow faster than forecasted, leading to unexpected increases in cloud and model costs. An AI Gateway provides consolidated visibility into AI usage across teams and enforces limits that prevent unplanned or abusive consumption. This allows boards to maintain predictable budgets and assess whether AI spending is aligned with approved investment plans.

- Auditable trails

When issues arise, such as customer complaints, regulatory inquiries, or internal reviews, boards must be able to reconstruct what happened. An AI Gateway maintains a centralized record of AI activity, including who initiated requests, which systems were involved, and what actions were taken. This traceability supports audits, investigations, and clear accountability at the leadership level.

- Protection against vendor lock-in

A centralized AI Gateway gives leadership leverage by reducing dependency on any single model provider or platform. By separating AI applications from underlying vendors, the organization avoids long-term lock-in and preserves the ability to shift providers as pricing, performance, regulatory requirements, or geopolitical considerations change. For boards, this flexibility is strategic: it lowers concentration risk, strengthens negotiating position with vendors, and ensures the company can adapt its AI strategy without costly rewrites or operational disruption.
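In code, separating applications from vendors usually means application logic depends on a narrow interface while vendor-specific adapters sit behind it. The sketch below uses Python's `typing.Protocol`; the adapter classes, registry keys, and the `active_provider` setting are hypothetical stand-ins that return canned strings rather than wrapping any real provider SDK.

```python
from typing import Dict, Protocol


class ChatModel(Protocol):
    """The only model interface application code is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


class ProviderXAdapter:
    """Hypothetical adapter; a real one would wrap provider X's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[provider-x] {prompt}"


class ProviderYAdapter:
    """Hypothetical adapter; a real one would wrap provider Y's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[provider-y] {prompt}"


# Changing vendors means selecting a different key, not rewriting callers.
REGISTRY: Dict[str, ChatModel] = {
    "provider-x": ProviderXAdapter(),
    "provider-y": ProviderYAdapter(),
}


def summarize_ticket(ticket: str, model: ChatModel) -> str:
    """Application code: knows nothing about which vendor is behind `model`."""
    return model.complete(f"Summarize: {ticket}")


active_provider = "provider-x"  # hypothetical config value
print(summarize_ticket("refund not received", REGISTRY[active_provider]))
```

Because both adapters satisfy `ChatModel` structurally, adding or swapping a provider touches only the adapter and the registry. In a gateway setup, that mapping would typically live in the gateway rather than in each application.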

Preparing Boards for the Next Phase of Enterprise AI

AI is moving from isolated use cases into the core of how enterprises operate, compete, and serve customers. As that shift accelerates, governance can no longer be treated as an afterthought or delegated solely to engineering teams.

As a centralized control plane, the AI Gateway gives enterprises a practical way to align innovation with accountability, providing visibility into how AI is used, enforcing guardrails at runtime, and maintaining control as systems become more autonomous and customer-facing.

Organizations that approach AI governance proactively will be better positioned to scale AI with confidence, manage risk, and tie investment to measurable outcomes. Those that delay will find themselves reacting to cost overruns, compliance issues, or loss of trust, often after damage has already been done.

As AI transformation becomes a standing item on board agendas in the years ahead, the decisions made today about foundational infrastructure will shape how effectively organizations can innovate tomorrow.
