LLMs

Deploy and serve open-source or proprietary LLMs with GPU acceleration and production-grade reliability.

Agents

Run long-running AI agents with memory, tool execution, and seamless integration with AI Gateway and MCP servers

MCP Servers

Deploy MCP servers to securely expose tools, APIs, and enterprise systems to AI agents.

Workflows

Orchestrate multi-step AI workflows across models, agents, and services from a single control plane.

Jobs

Run batch jobs, training workloads, and scheduled AI tasks on demand.

Classical ML Models

Deploy and serve traditional machine learning models alongside LLMs using the same platform.

.webp)

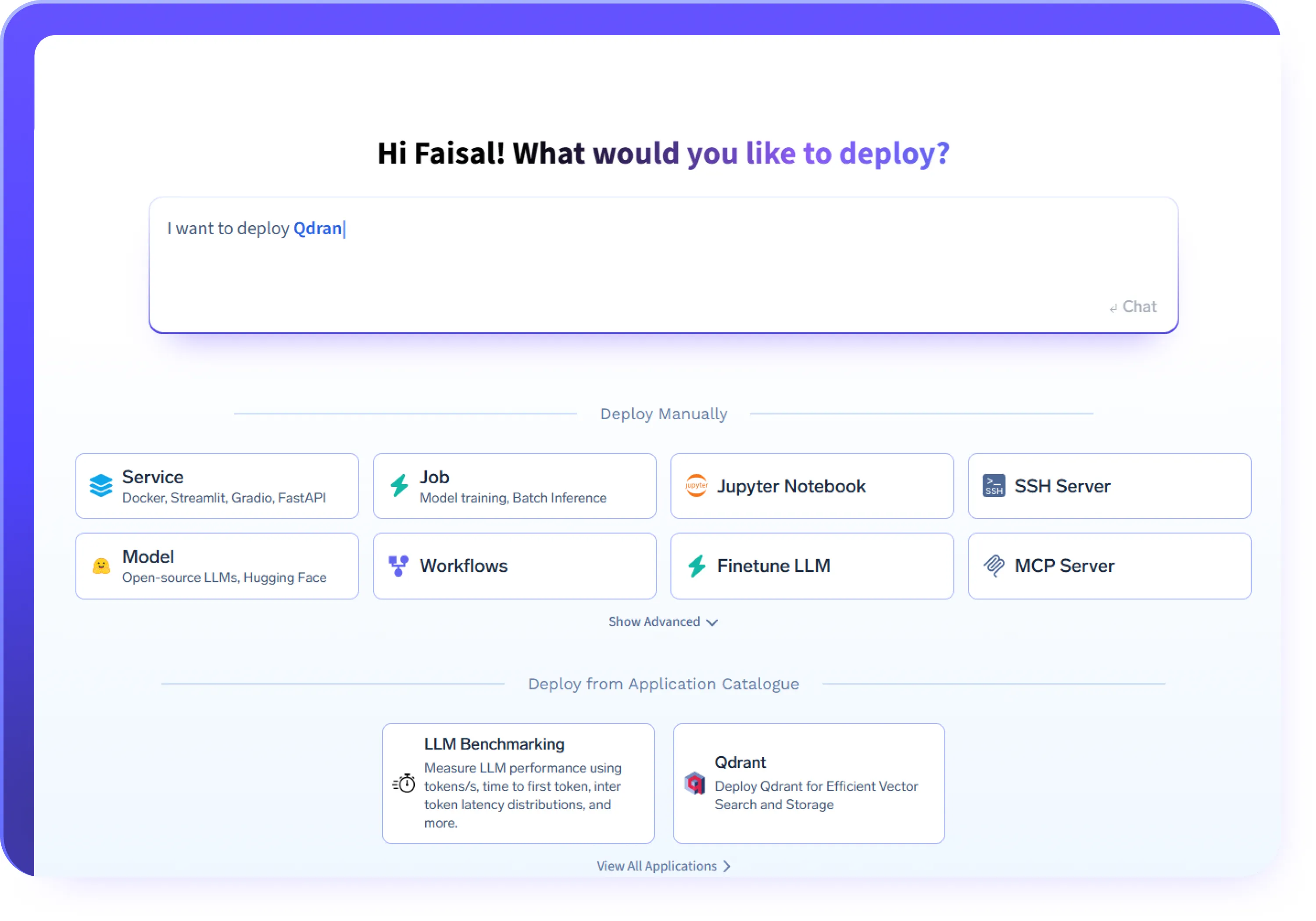

Deploy Any AI Workload

- Deploy LLMs and GPU-based inference workloads using frameworks like vLLM, Triton, KServe, or custom containers

- Deploy AI agents and agent services with consistent runtime and networking

- Deploy MCP servers to securely expose tools and internal systems

- Run batch jobs, APIs, and long-running AI services on the same platform

.webp)

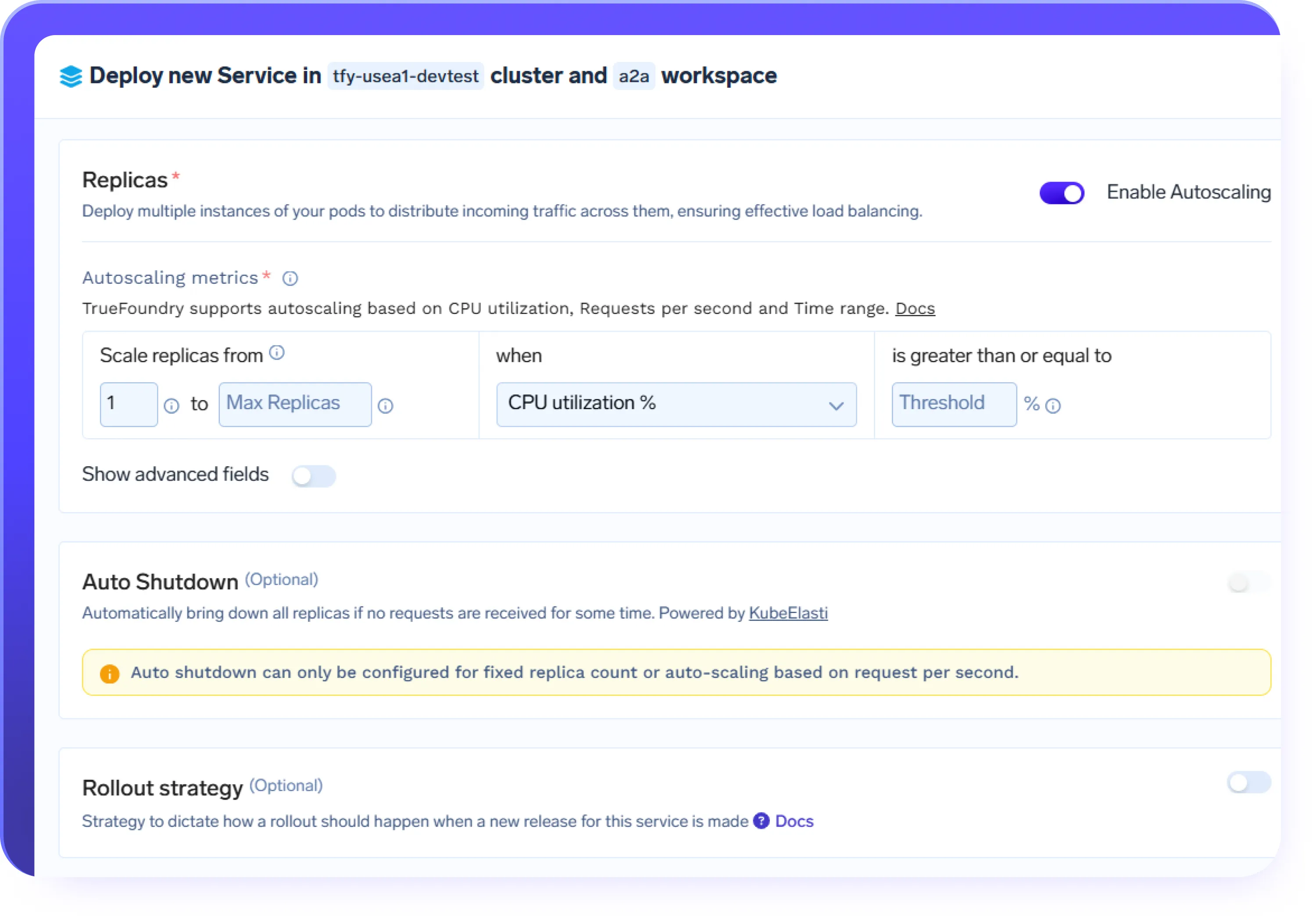

Autoscaling for AI Workloads

demand.

- Automatically scale inference endpoints and agent services based on request volume

- Scale GPU workloads up during peak demand and scale down when traffic drops

- Support bursty workloads such as chat, RAG, and agent-driven workflows

- Maintain predictable performance during traffic spikes

Auto-Shutdown to Control Costs

- Automatically shut down endpoints, agents, or services after configurable idle periods

- Reduce GPU waste during off-peak hours or experimentation

- Restart workloads on demand without manual intervention

- Enforce cost discipline across teams and

environments

.webp)

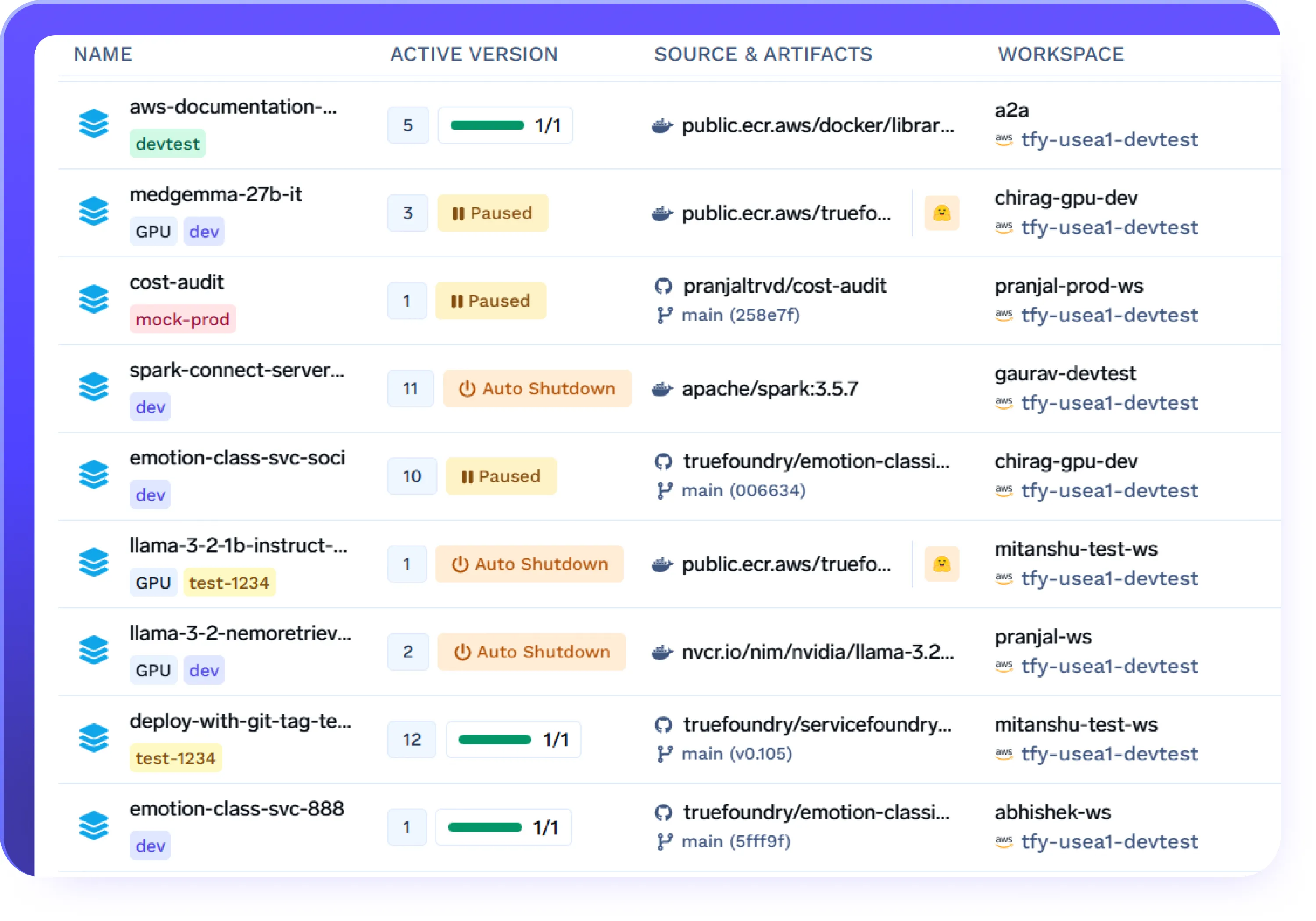

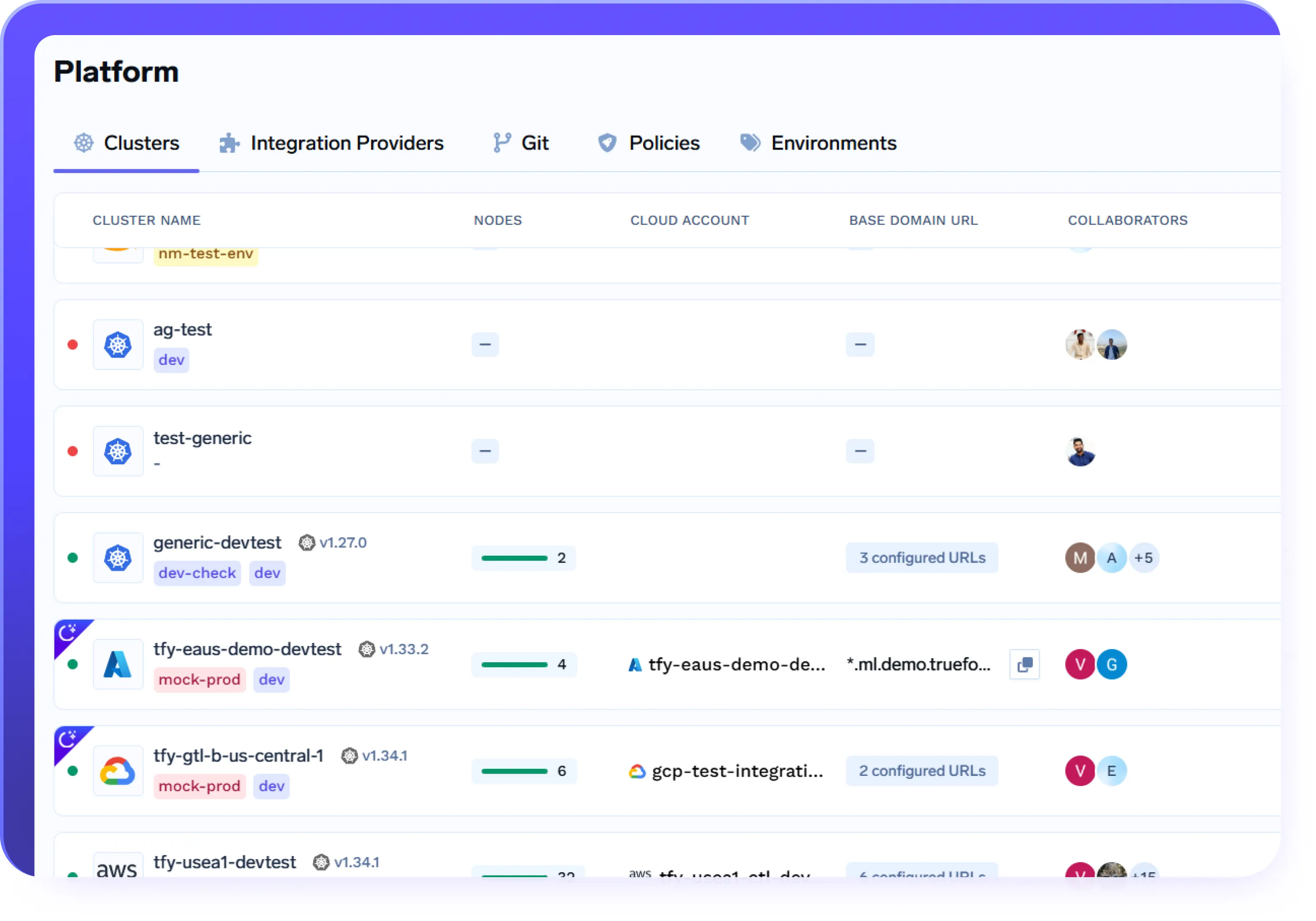

Unified Deployment Experience Across Cloud/Onprem

- Connect and manage AWS, Azure, GCP, and on-prem clusters from a single control plane

- Deploy the same workload to different environments using identical workflows and APIs

- Abstract away cloud-specific complexity while retaining full control and isolation

- Use the same deployment experience across dev, staging, and production, regardless of infrastructure

.webp)

Built for a First-Class Developer Experience

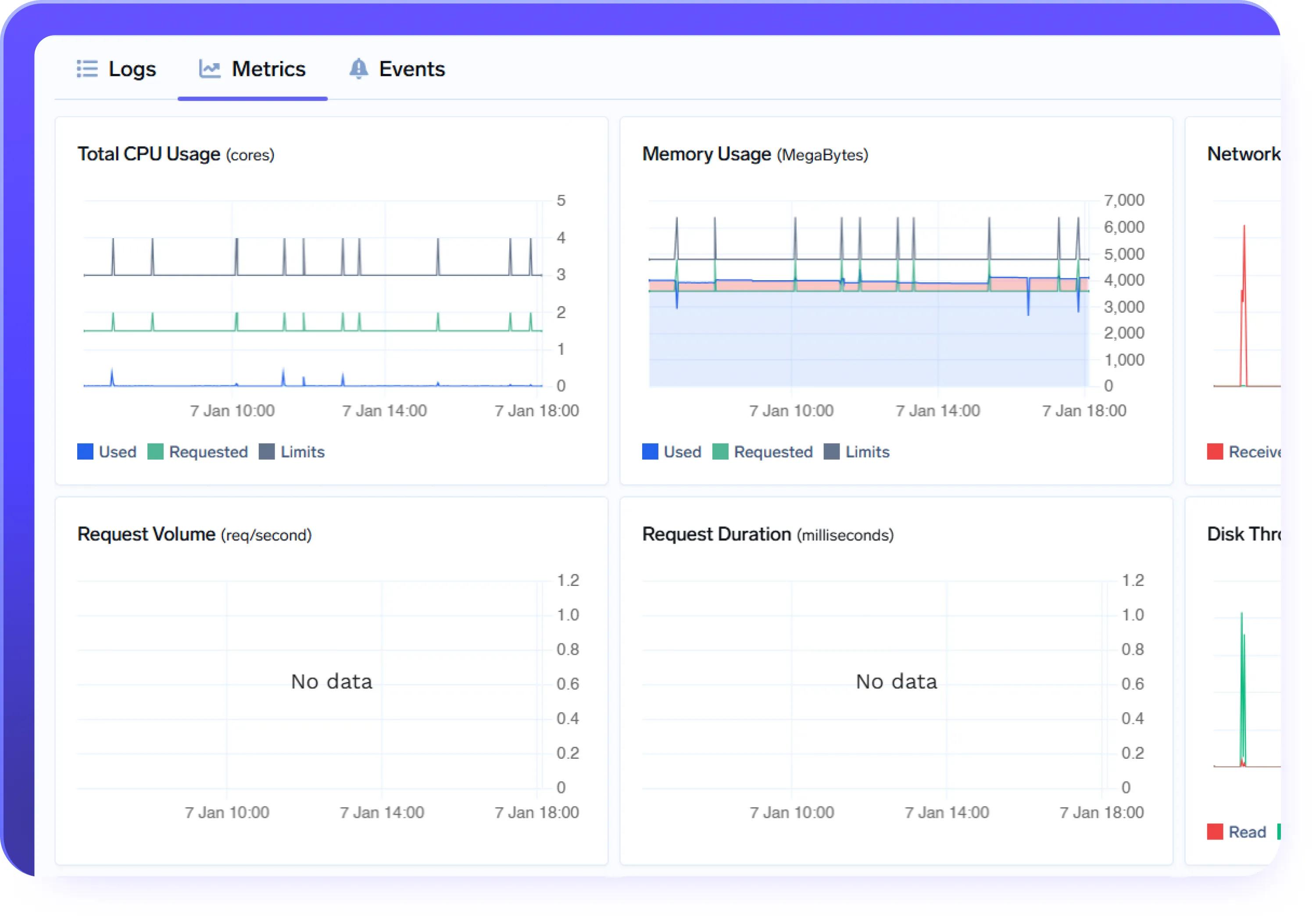

- Integrated logs, metrics, and events for every deployment

- Native monitoring and alerting to quickly detect and resolve issues

- Production-ready deployment features like health checks and rollout strategies

- Secure secret management and seamless CI/CD integrations

.webp)

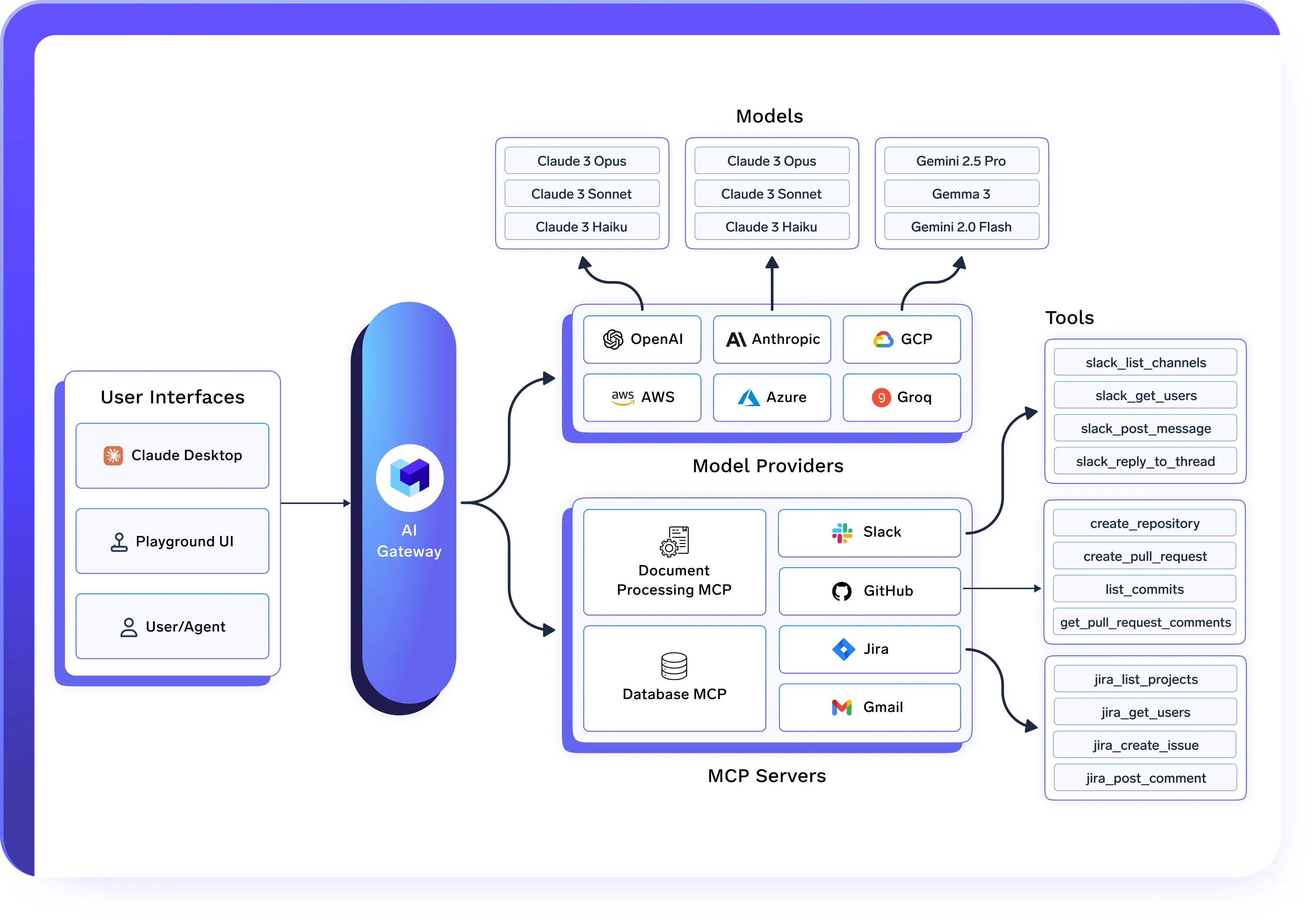

Works Seamlessly with AI Gateway & Agent Gateway

above it.

- AI Gateway governs model access, routing, and cost controls

- MCP Gateway governs tool access and execution

- Agent Gateway orchestrates and governs agent workflows

- Unified AI Deployments power the actual execution and infrastructure

.webp)

.webp)

Made for Real-World AI at Scale

Enterprise-Ready

Your data and models are securely housed within your cloud / on-prem infrastructure

Compliance & Security

SOC 2, HIPAA, and GDPR standards to ensure robust data protectionGovernance & Access Control

SSO + Role-Based Access Control (RBAC) & Audit LoggingEnterprise Support & Reliability

24/7 support with SLA-backed response SLAs

VPC, on-prem, air-gapped, or across multiple clouds.

No data leaves your domain. Enjoy complete sovereignty, isolation, and enterprise-grade compliance wherever TrueFoundry runs

Real Outcomes at TrueFoundry

Why Enterprises Choose TrueFoundry

3x

faster time to value with autonomous LLM agents

80%

higher GPU‑cluster utilization after automated agent optimization

Aaron Erickson

Founder, Applied AI Lab

TrueFoundry turned our GPU fleet into an autonomous, self‑optimizing engine - driving 80 % more utilization and saving us millions in idle compute.

5x

faster time to productionize internal AI/ML platform

50%

lower cloud spend after migrating workloads to TrueFoundry

Pratik Agrawal

Sr. Director, Data Science & AI Innovation

TrueFoundry helped us move from experimentation to production in record time. What would've taken over a year was done in months - with better dev adoption.

80%

reduction in time-to-production for models

35%

cloud cost savings compared to the previous SageMaker setup

.webp)

Vibhas Gejji

Staff ML Engineer

We cut DevOps burden and simplified production rollouts across teams. TrueFoundry accelerated ML delivery with infra that scales from experiments to robust services.

50%

faster RAG/Agent stack deployment

60%

reduction in maintenance overhead for RAG/agent pipelines

.webp)

Indroneel G.

Intelligent Process Leader

TrueFoundry helped us deploy a full RAG stack - including pipelines, vector DBs, APIs, and UI—twice as fast with full control over self-hosted infrastructure.

60%

faster AI deployments

~40-50%

Effective Cost reduction of across dev environments

.webp)

Nilav Ghosh

Senior Director, AI

With TrueFoundry, we reduced deployment timelines by over half and lowered infrastructure overhead through a unified MLOps interface—accelerating value delivery.

<2

weeks to migrate all production models

75%

reduction in data‑science coordination time, accelerating model updates and feature rollouts

.webp)

Rajat Bansal

CTO

We saved big on infra costs and cut DS coordination time by 75%. TrueFoundry boosted our model deployment velocity across teams.

Frequently asked questions

What types of AI workloads can I deploy with Unified AI Deployments?

Does Unified AI Deployments support autoscaling?

How does auto-shutdown work for AI workloads?

Can I deploy AI workloads in my own environment?

How does Unified AI Deployments integrate with AI Gateway?

GenAI infra- simple, faster, cheaper

Trusted by 30+ enterprises and Fortune 500 companies