What Is an MCP Server? A Brief Explanation

As AI agents become more capable, they require safe and structured methods for interacting with real-world tools, including APIs, databases, file systems, and more. That’s where the Model Context Protocol (MCP) comes in. Introduced by Anthropic, MCP is a standardized protocol that lets language models call external tools through a consistent interface. An MCP Server is the backend service that exposes these tools, making them accessible to AI clients in real time. From file readers to cloud apps, MCP Servers are the glue that connects LLMs to action. This post explains how MCP Servers work, why they matter, and how to build them.

What is an MCP Server?

An MCP Server is a backend service that exposes context and capabilities to large language model (LLM) applications through the Model Context Protocol. In LLM applications, especially those involving dynamic conversations, task-based agents, or retrieval-augmented generation (RAG), the model needs reliable access to data and actions that live outside it. The MCP Server plays a central role by decoupling that access from both the application and the model, enabling more modular and scalable AI systems.

At its core, an MCP Server offers three kinds of capabilities defined by the protocol: resources (read-only data such as documents, records, or files), prompts (reusable templates such as system instructions), and tools (executable functions such as queries or API calls). Instead of hardcoding these into each model call, developers let the client query the MCP Server to discover and fetch what it needs on demand. This allows consistent, reusable integrations and prompt structures across different applications and model invocations.

Because every client talks to the same server, agentic workflows and chained calls reference a single source of truth for tools and data, which keeps results accurate and coherent. How a server stores or caches data internally (for example, in an in-memory database such as Redis) is an implementation choice; the protocol itself does not prescribe one.

By standardizing how context and capabilities are exposed, MCP Servers enable cleaner abstractions, better debugging, and easier scaling for production-grade LLM applications.
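In MCP, read-only context such as documents or records is served as resources, and reusable prompt structures are served as prompts. Here is a minimal sketch in plain Python (no MCP SDK) of a server answering two methods the MCP specification defines, resources/read and prompts/get. The customer:// URI, the summarize_customer prompt, and the data are hypothetical, and a real server would also handle discovery and report errors for unknown names.

```python
import json

# Hypothetical in-memory data; a real server might read from a database or an API.
RESOURCES = {
    "customer://42": {"name": "Ada Lovelace", "plan": "enterprise"},
}
PROMPTS = {
    "summarize_customer": "Summarize the account for customer {customer_id} in two sentences.",
}


def handle(request: dict) -> dict:
    """Answer resources/read and prompts/get requests with JSON-RPC 2.0 responses."""
    method, params = request["method"], request.get("params", {})
    if method == "resources/read":
        uri = params["uri"]  # unknown URIs raise KeyError in this sketch
        result = {"contents": [{
            "uri": uri,
            "mimeType": "application/json",
            "text": json.dumps(RESOURCES[uri]),
        }]}
    elif method == "prompts/get":
        template = PROMPTS[params["name"]]
        text = template.format(**params.get("arguments", {}))
        result = {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    else:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": f"Method not found: {method}"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


reply = handle({"jsonrpc": "2.0", "id": 1, "method": "prompts/get",
                "params": {"name": "summarize_customer", "arguments": {"customer_id": "42"}}})
print(reply["result"]["messages"][0]["content"]["text"])
```

The client never needs to know where the customer record lives; it only asks the server for a URI or a prompt name it discovered earlier.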

How does the MCP Server work?

The Model Context Protocol (MCP) Server is a foundational component in modern AI architectures, designed to bridge the gap between large language models (LLMs) and external systems like databases, APIs, or internal tools. It standardizes how LLMs access tools, data, and contextual prompts, enabling seamless integration and modular scalability across applications. Instead of hardcoding tool invocations or API calls into each application, developers can delegate this logic to an MCP server, which exposes capabilities through a clean, interoperable protocol.

When an LLM-based application starts, its MCP client performs a handshake with the server: it sends an initialize request to agree on a protocol version and exchange capabilities, then lists the tools, resources, and prompt templates the server offers. These could include read-only data sources, such as customer records or documents; executable tools like SQL runners or file uploaders; or system prompts that shape the LLM’s tone and instructions. This discovery step lets the LLM select and invoke external functions as needed, without embedding integration logic in the model or the application.
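The handshake can be pictured as a short sequence of JSON-RPC messages. The sketch below only builds and prints them; it assumes protocol version 2025-06-18 (one published revision of the specification) and a hypothetical client name. In a real session the client waits for the initialize response before continuing, and sends list requests only for capabilities the server advertised.

```python
import itertools
import json
from typing import Optional

_next_id = itertools.count(1)  # request ids must be unique within a session


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, which expects a response."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str) -> dict:
    """Build a JSON-RPC 2.0 notification: no id, so no response is sent."""
    return {"jsonrpc": "2.0", "method": method}


handshake = [
    request("initialize", {
        "protocolVersion": "2025-06-18",  # assumption: one published spec revision
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    }),
    notification("notifications/initialized"),  # sent after the initialize response arrives
    request("tools/list"),
    request("resources/list"),
    request("prompts/list"),
]

for msg in handshake:
    print(json.dumps(msg))
```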

Crucially, MCP connections are stateful: the client and server keep a session open across multiple requests. This is particularly useful in agentic or multi-turn workflows, where a model may need to chain several actions together based on prior responses. Unlike a series of independent, stateless API calls, an MCP session supports coherent, context-aware interaction between the LLM and its environment.

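The MCP server communicates either locally over standard input/output (stdio) or remotely over HTTP. The current specification defines a Streamable HTTP transport, which can use Server-Sent Events (SSE) to stream messages; earlier revisions used a separate HTTP+SSE transport. Official SDKs exist for several programming languages, including Python and TypeScript, which makes implementations flexible. As the ecosystem evolves, major AI providers like Anthropic, OpenAI, and Microsoft are increasingly adopting or supporting MCP-based integrations.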
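For the stdio transport, messages are newline-delimited JSON-RPC objects: the client launches the server as a subprocess, writes requests to its stdin, and reads responses from its stdout, so any logging has to go to stderr. The loop below is a minimal sketch of that framing with a hypothetical ping_handler; production servers would normally use an official SDK, and this sketch does not handle JSON-RPC batches.

```python
import io
import json
import sys
from typing import Callable, Optional, TextIO


def serve_stdio(handler: Callable[[dict], Optional[dict]],
                stdin: TextIO = sys.stdin,
                stdout: TextIO = sys.stdout) -> None:
    """Read one JSON-RPC message per line and write one response per line."""
    for line in stdin:
        line = line.strip()
        if not line:
            continue
        try:
            message = json.loads(line)
        except json.JSONDecodeError:
            response = {"jsonrpc": "2.0", "id": None,
                        "error": {"code": -32700, "message": "Parse error"}}
        else:
            response = handler(message)
        if response is not None:  # notifications get no reply
            # json.dumps escapes newlines inside strings, so each message stays on one line.
            stdout.write(json.dumps(response) + "\n")
            stdout.flush()


def ping_handler(message: dict) -> Optional[dict]:
    """Hypothetical handler that only understands the ping method."""
    if "id" not in message:
        return None  # notification: no response
    if message.get("method") == "ping":
        return {"jsonrpc": "2.0", "id": message["id"], "result": {}}
    return {"jsonrpc": "2.0", "id": message["id"],
            "error": {"code": -32601, "message": "Method not found"}}


# Demo with in-memory streams instead of a real subprocess pipe.
demo_in = io.StringIO(
    '{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n'
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}\n'
)
demo_out = io.StringIO()
serve_stdio(ping_handler, demo_in, demo_out)
print(demo_out.getvalue(), end="")
```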

Security remains a critical concern. Because MCP servers give models access to sensitive tools and data, they must implement strict authentication, authorization, and context scoping. Once a client is authenticated, authorization determines which MCP servers, tools, and resources an AI agent can access at runtime, enforcing scoped, policy-driven tool access. Poor configuration can open the door to prompt injection (for example, malicious instructions hidden in tool outputs or tool descriptions) or unauthorized tool use, which makes governance and auditability essential.
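One way to picture scoped, policy-driven access is a check that runs after authentication and before any tool executes. The sketch assumes a hypothetical POLICY table mapping an already-authenticated subject to the tools it may call; it hides disallowed tools from tools/list results and rejects disallowed tools/call requests.

```python
from typing import Optional

# Hypothetical policy: authenticated subject -> tools it may call.
POLICY: dict[str, set[str]] = {
    "support-agent": {"search_docs", "create_ticket"},
    "analytics-agent": {"search_docs", "run_readonly_query"},
}


def visible_tools(subject: str, tools: list[dict]) -> list[dict]:
    """Filter a tools/list result down to what this subject may use."""
    allowed = POLICY.get(subject, set())
    return [t for t in tools if t["name"] in allowed]


def check_tool_call(subject: str, request: dict) -> Optional[dict]:
    """Return a JSON-RPC error response if the call is not permitted, else None."""
    if request.get("method") != "tools/call":
        return None  # this sketch only gates tool invocations
    tool = request.get("params", {}).get("name")
    if tool not in POLICY.get(subject, set()):
        # Reported like an unknown tool so callers cannot probe which tools exist.
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32602, "message": f"Unknown tool: {tool}"}}
    return None
```

Reusing the -32602 code for forbidden and unknown tools alike is a design choice of this sketch, not a protocol requirement; a real deployment would also log every denial for auditing.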

The MCP server transforms how AI applications interact with external capabilities. It introduces a universal, reusable protocol layer that simplifies tool access, maintains contextual integrity, and enables secure, scalable integration, essentially becoming the “USB-C for AI tools and data.”

MCP vs. MCP Server: The Difference

To understand what an MCP Server does, we first need to clarify what MCP actually is. MCP (Model Context Protocol) is a standardized communication protocol that allows AI models, particularly large language models (LLMs), to interact with external tools and data sources in a safe, consistent, and extensible way. Think of MCP as the API specification or “contract” that defines how AI clients (like Claude, ChatGPT, or any agent framework) can discover and invoke tools securely, using JSON-RPC 2.0 as the message format.

Now, an MCP Server is a specific implementation of this protocol. It wraps one or more tools (for example, a GitHub API, a database, a PDF reader, or a proprietary business service) and exposes them using the MCP specification. When an AI client connects to an MCP Server, it performs a discovery handshake, learns which tools are available (such as list_pull_requests), and then sends invocation requests over stdio or HTTP.
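Concretely, discovery gives the client tool names, and invocation is a tools/call request carrying the tool name and its arguments. The sketch builds such a request for list_pull_requests and extracts text from a result. The argument names (owner, repo, state) and the sample response are illustrative assumptions, not captured from the GitHub MCP Server.

```python
import json


def make_tool_call(request_id: int, name: str, arguments: dict) -> dict:
    """Build a tools/call request; the tool name comes from an earlier tools/list."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def result_text(response: dict) -> str:
    """Join the text items of a tools/call result, raising on protocol or tool errors."""
    if "error" in response:
        err = response["error"]
        raise RuntimeError(f"protocol error {err['code']}: {err['message']}")
    result = response["result"]
    text = "\n".join(item["text"] for item in result.get("content", [])
                     if item.get("type") == "text")
    if result.get("isError"):
        raise RuntimeError(f"tool error: {text}")
    return text


call = make_tool_call(3, "list_pull_requests", {"owner": "octo-org", "repo": "demo", "state": "open"})

# Illustrative response shape only; not output from a real server.
sample_response = {
    "jsonrpc": "2.0",
    "id": 3,
    "result": {"content": [{"type": "text", "text": "#12 Fix login redirect"}], "isError": False},
}
print(json.dumps(call))
print(result_text(sample_response))
```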

In simple terms:

- MCP is the language both sides speak

- MCP Client (like an agent or AI runtime) is the caller

- MCP Server is the tool provider

Why separate them? Because this modular design allows:

- Reusability: One server can power many clients

- Security: Servers can be sandboxed or permission-scoped

- Flexibility: You can build custom tools without modifying the AI system

This separation of concerns is what makes MCP powerful. It decouples the intelligence (AI agent) from the execution (tool access), leading to scalable, secure, and maintainable AI integrations.

To operationalize MCP Servers in production, teams often rely on managed MCP Gateway platforms. Examples include TrueFoundry and Composio, which help standardize tool access, security, and observability across agents.

In the next section, we’ll break down how an MCP Server fits into the overall architecture and how requests are processed under the hood.

The Core Architecture

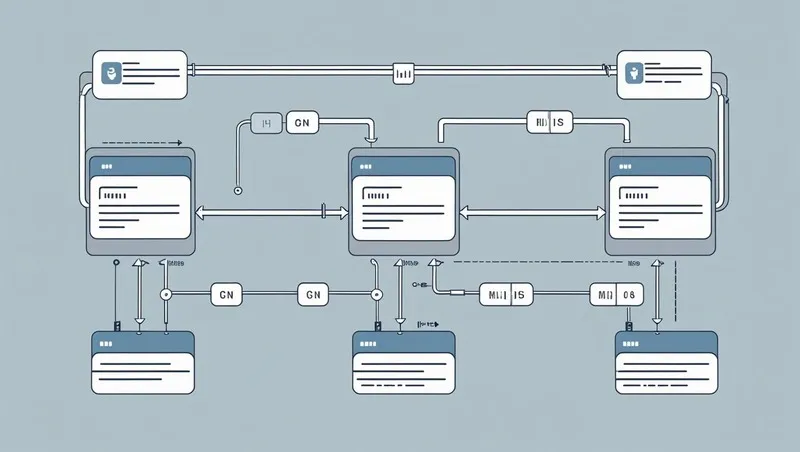

At the heart of the MCP ecosystem is a clean, modular architecture that separates AI reasoning from tool execution. This structure allows for flexibility, security, and maintainability. The interaction primarily involves three components: the MCP Client, the MCP Server, and the Tool itself.

- MCP Client: This is typically part of the AI runtime or agent framework. The client handles initiating connections to one or more MCP Servers. It performs a discovery process to understand what tools are available and what methods can be invoked. The MCP Client is responsible for sending method calls, handling responses, and managing tool availability during runtime.

- MCP Server: The server implements the MCP protocol and wraps one or more tools. It exposes them through a well-defined JSON-RPC 2.0 interface, registers its tools, and responds to discovery and invocation requests from clients. MCP Servers can run locally or remotely and communicate via two modes:

  - stdio (commonly used for local tools)

  - HTTP, via the Streamable HTTP transport, which can stream messages with Server-Sent Events (SSE) (used for remote, scalable services)

- Tools or Backends: These are the actual functions or services the server connects to. They can be REST APIs, databases, file systems, proprietary business tools, or external SaaS apps. The MCP Server abstracts these behind a standardized interface so the AI model does not need to know the implementation details.
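A server registers each tool by publishing a name, a description, and a JSON Schema for its inputs. The sketch below is one hypothetical way to do that in plain Python, deriving a simple inputSchema from a function's type hints. Official SDKs provide their own registration helpers, and get_order_status and its data are made up for illustration.

```python
import inspect
import json
from typing import Callable

TOOLS: dict[str, dict] = {}
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool(description: str) -> Callable:
    """Register a function as a tool, deriving a basic JSON Schema from its signature."""
    def decorator(fn: Callable) -> Callable:
        properties, required = {}, []
        for name, param in inspect.signature(fn).parameters.items():
            # Unannotated or unsupported types fall back to "string" in this sketch.
            properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
            if param.default is inspect.Parameter.empty:
                required.append(name)
        TOOLS[fn.__name__] = {
            "definition": {
                "name": fn.__name__,
                "description": description,
                "inputSchema": {"type": "object", "properties": properties, "required": required},
            },
            "handler": fn,
        }
        return fn
    return decorator


_ORDERS = {"A-100": "shipped", "B-200": "processing"}  # hypothetical backend data


@tool("Look up the fulfilment status of an order by its ID.")
def get_order_status(order_id: str, include_history: bool = False) -> str:
    status = _ORDERS.get(order_id, "unknown")
    if include_history:
        return f"{order_id}: {status} (no history stored in this demo)"
    return f"{order_id}: {status}"


print(json.dumps(TOOLS["get_order_status"]["definition"], indent=2))
```

The model only ever sees the definition; the backend behind the handler can change without touching the AI client.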

Request Flow

1. The client sends a discovery request to the server (after the initialize handshake, this is a tools/list call)

2. The server responds with the available tools and their metadata

3. The client invokes a tool with a JSON-RPC tools/call request

4. The server executes the tool and returns the result
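This request flow can be traced end to end with an in-process stand-in for a server. It is a minimal sketch under simplifying assumptions: messages are passed as JSON strings instead of over stdio or HTTP, the initialize handshake is skipped, and the single add tool is hypothetical.

```python
import json


class TinyServer:
    """In-process stand-in for an MCP server with one hypothetical tool."""

    def __init__(self) -> None:
        self.tools = {
            "add": {
                "definition": {
                    "name": "add",
                    "description": "Add two integers.",
                    "inputSchema": {
                        "type": "object",
                        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
                        "required": ["a", "b"],
                    },
                },
                "fn": lambda a, b: a + b,
            }
        }

    def handle(self, raw: str) -> str:
        req = json.loads(raw)
        if req["method"] == "tools/list":  # discovery
            result = {"tools": [t["definition"] for t in self.tools.values()]}
        elif req["method"] == "tools/call":  # invocation
            entry = self.tools[req["params"]["name"]]
            value = entry["fn"](**req["params"]["arguments"])
            result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
        else:
            return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                               "error": {"code": -32601, "message": "Method not found"}})
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


server = TinyServer()

# Discovery: the client asks which tools exist and receives their metadata.
listing = json.loads(server.handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
names = [t["name"] for t in listing["result"]["tools"]]

# Invocation: the client calls a discovered tool and reads the result.
reply = json.loads(server.handle(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
})))
print(names, reply["result"]["content"][0]["text"])
```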

This architecture ensures LLMs can interact with a wide range of tools without custom code for each integration. In the next section, we will explore what makes an MCP Server truly effective.

What Makes a Good MCP Server?

Not all MCP Servers are created equal. While any tool can be wrapped in an MCP interface, building a high-quality MCP Server requires thoughtful design and robust implementation. A good enterprise MCP server is not just functional: it is secure, efficient, easy to discover, and provides clear semantics for the AI client.

Here are the key traits of an effective MCP Server:

- Well-Defined Tool Interface: Every tool exposed by the server should have clear input and output schemas. MCP tools declare their inputs as JSON Schema in an inputSchema field, and recent revisions of the specification also allow an optional outputSchema. Clear schemas let AI models reason about the tool’s functionality with minimal hallucination or guesswork.

- Tool Metadata and Descriptions: Good servers include descriptive metadata for each tool: what it does, when to use it, and what parameters are expected. This helps with runtime tool discovery and improves the quality of model reasoning.

- Error Handling and Logging: A robust MCP Server returns meaningful error messages when things go wrong. It also logs inputs, outputs, and errors in a structured format to support observability and debugging.

- Security and Access Control: If the server connects to sensitive systems (like internal APIs or databases), it should enforce strict authentication and authorization controls. Rate limiting and sandboxing can also help prevent abuse.

- Performance and Scalability: For remote MCP Servers, low-latency responses and the ability to handle concurrent requests are essential. Caching, connection pooling, and efficient serialization all contribute to better performance.

- Composability: Servers that expose multiple related tools (e.g., a CRM API plus analytics endpoints) allow for more complex and valuable agent workflows.
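To show what a well-defined interface looks like in practice, here is a hypothetical search_tickets tool definition in the shape a tools/list result uses, plus a small argument validator. The validator covers only required fields, primitive types, and additionalProperties; it is a sketch, and a real server would more likely rely on a full JSON Schema library.

```python
# Hypothetical tool definition as it would appear in a tools/list result.
SEARCH_TICKETS = {
    "name": "search_tickets",
    "description": "Search support tickets by keyword. Use when the user asks about existing issues.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "Keywords to match in ticket titles."},
            "limit": {"type": "integer", "description": "Maximum number of results (1-50)."},
        },
        "required": ["query"],
        "additionalProperties": False,
    },
}

_TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),
}


def validate_arguments(schema: dict, arguments: dict) -> list[str]:
    """Return human-readable problems; an empty list means the arguments look valid."""
    problems = [f"missing required argument '{name}'"
                for name in schema.get("required", []) if name not in arguments]
    for name, value in arguments.items():
        spec = schema.get("properties", {}).get(name)
        if spec is None:
            if schema.get("additionalProperties") is False:
                problems.append(f"unexpected argument '{name}'")
        elif not _TYPE_CHECKS.get(spec.get("type"), lambda v: True)(value):
            problems.append(f"argument '{name}' should be of type {spec['type']}")
    return problems


print(validate_arguments(SEARCH_TICKETS["inputSchema"], {"limit": "ten"}))
```

Returning specific messages rather than a bare "invalid input" gives both developers and models something concrete to correct.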

When these qualities come together with strong governance and observability, teams can build production-grade AI systems on their MCP Servers with confidence. A well-structured MCP Server becomes a reusable, plug-and-play module that can serve multiple AI clients across various use cases. Next, let’s look at real-world examples already in use.

MCP Server Examples

The growing adoption of the Model Context Protocol has led to the development of a wide range of MCP Servers across industries. These servers act as adapters, wrapping existing tools and services so that AI models can interact with them securely and efficiently. One of the most widely used examples is the GitHub MCP Server, which allows AI agents to interact with GitHub repositories. It exposes tools such as list_pull_requests and create_issue, making it easy for agents to automate development workflows using a standardized interface.

Another common type is the File System Server. This is typically a local MCP Server that provides read and write access to files on disk. It exposes tools such as read_file, list_directory, and write_file and restricts them to a configured set of allowed directories, so AI agents can perform file operations without unrestricted access to the host system. Enterprise software vendors like Atlassian have also embraced the protocol by building MCP Servers for Jira and Confluence. These allow agents to create tasks, update issues, or search through documentation, all while respecting enterprise-grade permission systems and audit trails.
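The safe boundary around a File System Server can be approximated by resolving every requested path and refusing anything that lands outside an allowed root. The sketch below is a hypothetical implementation of read_file, write_file, and list_directory along those lines, not the code of any published server; it requires Python 3.9+ for Path.is_relative_to.

```python
import tempfile
from pathlib import Path


class Sandbox:
    """Resolve tool-supplied paths and refuse anything outside the allowed root."""

    def __init__(self, root: str) -> None:
        self.root = Path(root).resolve()

    def resolve(self, user_path: str) -> Path:
        # resolve() collapses ".." and follows symlinks before the containment check;
        # an absolute user_path replaces the root entirely and is then rejected.
        candidate = (self.root / user_path).resolve()
        if not candidate.is_relative_to(self.root):
            raise PermissionError(f"path outside sandbox: {user_path}")
        return candidate

    def read_file(self, user_path: str) -> str:
        return self.resolve(user_path).read_text(encoding="utf-8")

    def write_file(self, user_path: str, content: str) -> None:
        self.resolve(user_path).write_text(content, encoding="utf-8")

    def list_directory(self, user_path: str = ".") -> list[str]:
        return sorted(p.name for p in self.resolve(user_path).iterdir())


with tempfile.TemporaryDirectory() as tmp:
    box = Sandbox(tmp)
    box.write_file("notes.txt", "hello")
    contents = box.read_file("notes.txt")
    listing = box.list_directory()
    try:
        box.read_file("../outside.txt")
        escaped = True
    except PermissionError:
        escaped = False

print(contents, listing, escaped)
```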

MCP Servers are also being used to expose structured business data. For example, a database query server can wrap SQL or NoSQL databases and offer safe access through methods like get_customer_by_id or fetch_sales_summary. These servers handle parameter validation and protect against injection attacks (for example, by using parameterized queries), making them useful in data-sensitive environments. Beyond internal tools, many companies are building MCP wrappers for third-party SaaS platforms such as Slack, Notion, HubSpot, and Salesforce. These servers handle authentication, rate limiting, and data transformation so agents can seamlessly interact with cloud-based tools.
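Parameter validation and injection protection in a database query server can be sketched with Python's built-in sqlite3 module. The table, the data, and this get_customer_by_id are hypothetical; the key point is that the value travels through a ? placeholder rather than being pasted into the SQL string.

```python
import sqlite3

# Hypothetical in-memory database standing in for a real customer store.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, tier TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                 [(1, "Acme Corp", "gold"), (2, "Globex", "silver")])


def get_customer_by_id(customer_id: int) -> dict:
    """Hypothetical tool: validate the argument, then run a parameterized query."""
    if not isinstance(customer_id, int) or isinstance(customer_id, bool):
        raise ValueError("customer_id must be an integer")
    # The ? placeholder sends the value separately from the SQL text,
    # so input such as "1 OR 1=1" cannot change the query's structure.
    row = conn.execute("SELECT id, name, tier FROM customers WHERE id = ?",
                       (customer_id,)).fetchone()
    if row is None:
        return {"found": False}
    return {"found": True, "id": row[0], "name": row[1], "tier": row[2]}


print(get_customer_by_id(1))
try:
    get_customer_by_id("1 OR 1=1")
except ValueError as exc:
    print("rejected:", exc)
```

Validation and parameterization are complementary: the type check gives the model a clear error, while the placeholder protects the query even if validation is later loosened.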

Together, these examples illustrate how MCP Servers can bridge LLMs with operational systems, whether local or remote, simple or complex. In the next section, we will explore best practices and design tips for building effective MCP Servers.

Best Practices and Tips

Building an MCP Server involves more than just exposing functions over JSON-RPC. To ensure reliability, security, and usability, developers should follow a set of best practices that make the server robust and AI-friendly.

First, clarity is key. Each tool method should be well-documented with human-readable descriptions and clear input-output schemas. This allows AI models to reason more effectively about the tool's purpose and usage. For instance, include parameter names, data types, constraints, and examples within the server’s discovery metadata. Avoid exposing overly generic or ambiguous methods, as these can confuse the AI or lead to incorrect usage.

Second, implement solid error handling. Always return structured and meaningful error messages, including codes and descriptions. This helps both developers and AI agents understand what went wrong and how to recover gracefully. Consider logging every request and response, along with timestamps and metadata, for observability and debugging.
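The MCP specification distinguishes protocol errors, returned as JSON-RPC error objects, from tool execution errors, returned as a normal result with isError set to true so the model can read the failure and adapt. The sketch below shows both paths and writes one structured JSON log line per call to stderr; the divide tool and the log fields are assumptions made for illustration.

```python
import json
import logging
import sys
import time

# stderr keeps logs out of the protocol stream when running over stdio.
logging.basicConfig(stream=sys.stderr, level=logging.INFO, format="%(message)s")
log = logging.getLogger("mcp-server")


def _divide(a: float, b: float) -> float:  # hypothetical tool
    return a / b


TOOLS = {"divide": _divide}


def call_tool(request: dict) -> dict:
    params = request.get("params", {})
    name = params.get("name")
    started = time.monotonic()
    if name not in TOOLS:
        # Protocol error: the request itself is invalid.
        response = {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
    else:
        try:
            value = TOOLS[name](**params.get("arguments", {}))
            response = {"jsonrpc": "2.0", "id": request["id"],
                        "result": {"content": [{"type": "text", "text": str(value)}], "isError": False}}
        except Exception as exc:  # in this sketch, bad arguments also land here
            # Tool execution error: reported inside the result so the model can react.
            response = {"jsonrpc": "2.0", "id": request["id"],
                        "result": {"content": [{"type": "text", "text": f"{type(exc).__name__}: {exc}"}],
                                   "isError": True}}
    log.info(json.dumps({
        "ts": time.time(),
        "id": request["id"],
        "tool": name,
        "ok": "error" not in response and not response["result"]["isError"],
        "duration_ms": round((time.monotonic() - started) * 1000, 2),
    }))
    return response


print(call_tool({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                 "params": {"name": "divide", "arguments": {"a": 1, "b": 0}}}))
```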

Security should be a top priority. If the MCP Server interacts with sensitive systems, such as production databases, financial tools, or cloud APIs, use authentication and authorization mechanisms to limit access. For remote servers, secure the HTTP endpoints with HTTPS and use API keys, tokens, or OAuth flows. In local environments, consider process isolation or containerization to prevent privilege escalation.

Performance also matters. Use connection pooling, response caching, and efficient serialization to keep latency low. Servers should be responsive even under concurrent loads, especially if they are serving AI agents in real-time.

Finally, make your server composable and extensible. Group related tools into modular packages and allow dynamic registration of new tools if possible. This makes it easier to scale and reuse your server across multiple AI workflows.
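MCP lets a server announce that its tool list has changed: it advertises tools.listChanged in its capabilities and sends a notifications/tools/list_changed notification, after which clients can call tools/list again. The registry below is a minimal sketch of that pattern; the send callback stands in for a real transport, and the CRM tool definitions are hypothetical.

```python
from typing import Callable

LIST_CHANGED = {"jsonrpc": "2.0", "method": "notifications/tools/list_changed"}


class ToolRegistry:
    """Tools can be added or removed at runtime; clients are told to re-fetch tools/list."""

    def __init__(self, send: Callable[[dict], None]) -> None:
        self._tools: dict[str, dict] = {}
        self._send = send  # writes a message to the client over the active transport

    def capabilities(self) -> dict:
        # Returned in the initialize result so clients expect list_changed notifications.
        return {"tools": {"listChanged": True}}

    def register(self, definition: dict) -> None:
        self._tools[definition["name"]] = definition
        self._send(dict(LIST_CHANGED))

    def unregister(self, name: str) -> None:
        if self._tools.pop(name, None) is not None:
            self._send(dict(LIST_CHANGED))

    def list_tools(self) -> list[dict]:
        return list(self._tools.values())


outbox: list[dict] = []
registry = ToolRegistry(outbox.append)
registry.register({"name": "crm_lookup", "description": "Find a CRM contact.",
                   "inputSchema": {"type": "object"}})
registry.register({"name": "crm_pipeline_report", "description": "Summarize open deals.",
                   "inputSchema": {"type": "object"}})
registry.unregister("crm_lookup")
print([t["name"] for t in registry.list_tools()], len(outbox))
```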

Following these practices ensures that your MCP Server is not only functional but also safe, scalable, and ready for production use. Next, let’s look at how TrueFoundry fits into this ecosystem.

MCP Server with TrueFoundry

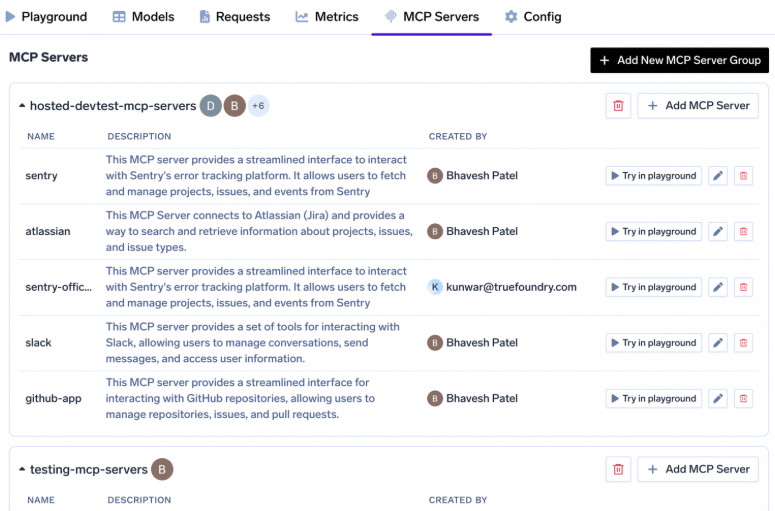

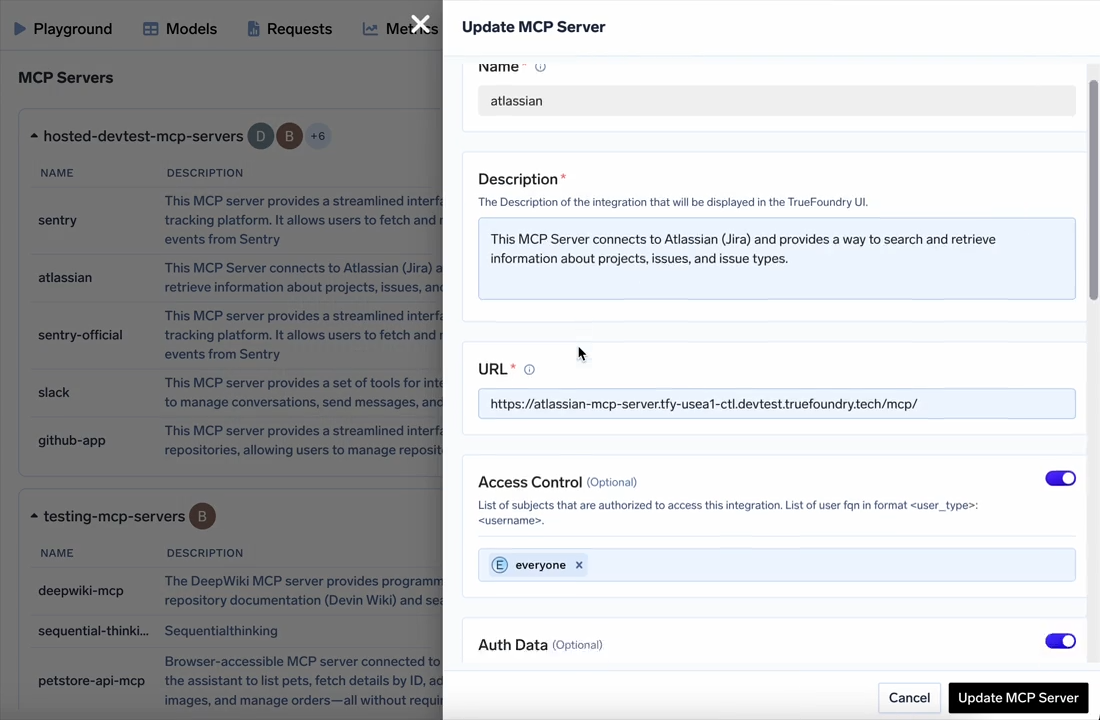

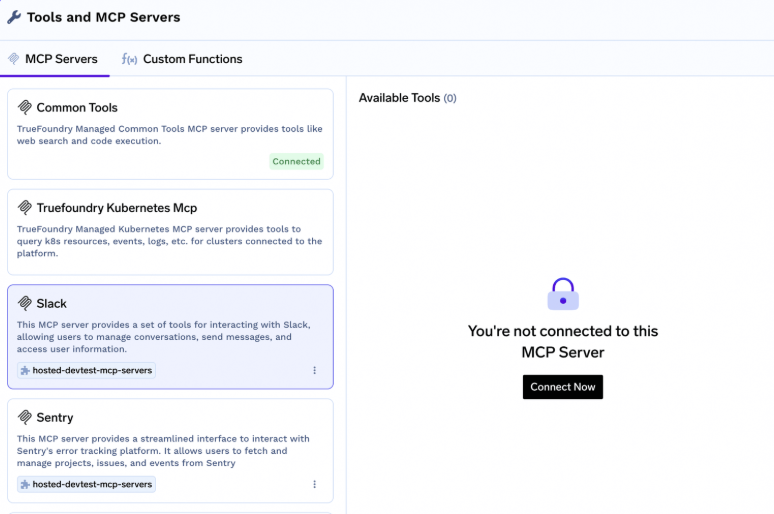

TrueFoundry provides a modern, scalable foundation for managing your entire MCP Server ecosystem, from deployment to discovery, from access control to observability. As enterprises adopt AI agents that rely on external tools, managing MCP Servers efficiently becomes critical. TrueFoundry offers a unified MCP Gateway that centralizes the lifecycle of all your MCP integrations, whether internal, third-party, cloud-hosted, or on-premises. Below, we explore how TrueFoundry elevates the MCP Server infrastructure with five core capabilities.

1. MCP Server Registry & Discovery

TrueFoundry offers a unified MCP Gateway that enables agent runtimes to discover and connect with all authorized MCP Servers, regardless of their origin. Internal tools, cloud services, or third-party SaaS integrations are all visible and searchable in one place. From a centralized dashboard, teams can register and catalog MCP Servers deployed across cloud, on-premises, or hybrid environments. Built-in approval flows allow organizations to define which roles or teams can access specific servers, ensuring secure and policy-driven access at scale.

2. Out of the Box Integrations

To accelerate agent adoption, TrueFoundry provides prebuilt MCP Server integrations for widely used enterprise tools like Slack, Confluence, Sentry, and Datadog. These plug-and-play connectors make it possible to integrate external services into LLM-powered workflows without writing code or modifying your AI stack. Using standardized schemas and auto-generated discovery metadata, these MCP Servers are ready for use in pipelines and autonomous agents instantly, with no SDK changes required.

3. Bring Your Own MCP Server

TrueFoundry gives you the flexibility to onboard any custom or proprietary service as an MCP Server within minutes. Whether you are wrapping an internal API, a microservice, or a legacy enterprise tool, you can register it with the MCP Gateway and make it discoverable to agents. This also enables seamless coordination between self-hosted and vendor-hosted MCP Servers, allowing teams to personalize LLM workflows based on unique business logic or data without needing additional engineering overhead.

4. Secure Auth & Access Control

Security is first-class in TrueFoundry’s MCP ecosystem. Teams can implement federated identity through providers like Okta, Azure AD, or Google Workspace, while role-based access control (RBAC) ensures fine-grained policy enforcement at the MCP Server level. TrueFoundry also supports OAuth 2.0 with dynamic discovery for token handling and session management. Centralized security policies applied at the gateway level help reduce the surface area of risk while improving regulatory compliance.

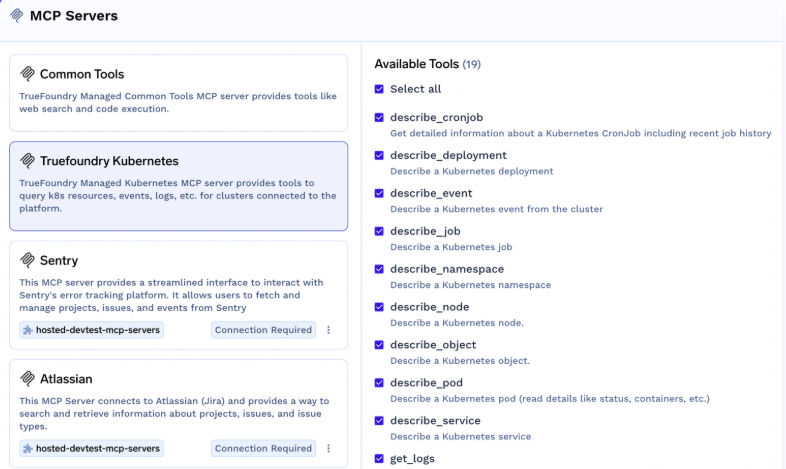

5. Built-In Observability

TrueFoundry includes native observability tools that let you trace every MCP interaction, from agent decisions to tool executions. You can collect structured telemetry including latency, error rates, request volume, and usage patterns, filtered by team, user, tool, or cost center. This makes it easy to troubleshoot performance issues, monitor health, and optimize usage across your entire MCP landscape.

TrueFoundry is not just a deployment platform. It is an enterprise control plane for your entire MCP Server architecture. It simplifies discovery, strengthens security, and enables real-world AI integrations at scale.

Conclusion

MCP Servers are becoming essential components in modern AI systems, bridging the gap between language models and real-world tools. By standardizing how tools are exposed and accessed, they enable scalable, secure, and modular AI workflows. Whether you're integrating third-party SaaS apps or internal APIs, MCP Servers provide a clean, reusable interface for LLMs to interact with external systems. Platforms like TrueFoundry take this a step further by offering centralized management, security, and observability across your MCP ecosystem. As AI agents become more capable, investing in a robust MCP Server strategy will be critical to unlocking their full operational potential.

Frequently Asked Questions

What is the MCP server?

At its core, it is a standardized bridge that exposes specific tools, data sources, or prompts to AI models through the Model Context Protocol. It allows AI applications to interact with diverse backend systems using a universal interface, eliminating the need for custom, brittle integrations for every new data source.

What is the difference between an MCP server and an API?

The primary difference between an MCP server and a traditional API is the layer of abstraction and standardization. While a raw API provides endpoints that require custom coding to integrate, an MCP server exposes a machine-readable schema that tells an AI model exactly what tools are available and how to use them. This enables dynamic tool discovery that standard APIs do not natively support.

Is an MCP server a microservice?

An MCP server can be implemented as a microservice, as it is a modular component designed to perform specific tasks or provide access to distinct data sets. In a modern AI stack, treating these servers as microservices allows teams to scale specific tool capabilities independently and maintain a clean separation between the AI's reasoning engine and the underlying data infrastructure.

How do you use an MCP server?

To use an MCP server, you typically run it as a local process or deploy it as a service, then configure an MCP-compatible host, such as a specialized IDE or an AI Gateway, to connect to it. For production environments, TrueFoundry provides a managed platform to deploy and scale these servers securely, ensuring that every tool invocation is monitored, authenticated, and governed within your private cloud environment.

What is the difference between MCP server and client?

The difference lies in who provides the data versus who uses it. The MCP server is the provider that hosts tools, resources, and prompts, while the client (often an AI agent or application) is the consumer that requests these capabilities. The client queries the server to "discover" what it can do and then sends commands to the server to execute specific actions.

Is an MCP server an actual server?

While the term implies a remote web server, an MCP server is technically any executable or process that implements the MCP communication standard. It can run as a local process using standard input/output (stdio) or as a remote service over HTTP. In enterprise architectures, these are usually deployed as remote services to allow multiple agents to share access to the same centralized tools and data.

What is the main purpose of an MCP Server?

An MCP Server exposes tools like APIs, databases, or services in a standard format that AI agents can understand and use. It acts as a secure bridge between language models and external systems, enabling AI to perform real-world tasks through structured, discoverable interfaces.

How is an MCP Server different from the MCP protocol itself?

MCP is the communication protocol that defines how AI models interact with tools. An MCP Server is an actual implementation of that protocol, wrapping specific tools or services and making them accessible to clients through the MCP interface. It’s the backend that serves the tools.

Can I build my own MCP Server for internal tools?

Yes. You can easily build and register your own MCP Server to expose internal APIs, services, or databases. With platforms like TrueFoundry, custom MCP Servers can be made discoverable to AI agents securely, allowing you to personalize agent workflows without major engineering effort.

What security measures should I consider for MCP Servers?

MCP Servers should implement authentication, authorization, and rate limiting. For sensitive tools, use OAuth 2.0, federated login (e.g., Okta, Azure AD), and RBAC policies. Platforms like TrueFoundry also offer centralized security controls and secret management to reduce risks across your MCP Server deployments.

How does TrueFoundry enhance the MCP Server experience?

TrueFoundry provides a unified gateway to register, manage, and monitor all MCP Servers across environments. It offers built-in observability, out-of-the-box integrations, secure access control, and scalability. This streamlines how teams deploy and scale MCP Servers for real-world AI agent workflows in production environments.
