Data Residency in the Age of Agentic AI: How AI Gateways Enable Sovereign Scale and Compliance

Introduction

The rise of Agentic AI, where AI systems operate semi-autonomously across workflows, APIs, and data sources, has fundamentally changed the data landscape. Every prompt, response, and contextual action in an AI system is data: data that may reveal intellectual property, customer interactions, or even sensitive corporate strategies. As organizations scale these AI systems globally, a question that once belonged to compliance teams has now become a boardroom concern: where does your data live, and who can access it?

This is the essence of data residency — ensuring that data remains within a defined geographic or legal boundary. What was once a regulatory checkbox has become a strategic necessity in an increasingly geopolitical AI landscape.

In this article, we explore why data residency is gaining urgency in the Agentic AI world, what Gartner calls the emerging trend of geopatriation, how organizations can build for sovereignty and scale using an AI Gateway architecture, and how TrueFoundry’s AI Gateway delivers flexible, region-aware data control that aligns with these evolving imperatives.

What Is Data Residency and Why It Matters More Than Ever in AI

Data residency refers to where organizational data is physically stored and legally governed. In the pre-AI era, this typically applied to structured data—databases, CRMs, analytics systems. Today, in the AI era, this extends far beyond storage. Every AI model interaction generates new forms of data: prompts, completions, embeddings, logs, and contextual memory.

Each of these elements can contain:

- Sensitive intellectual property or trade secrets.

- Personally identifiable or regulated information (PII).

- Confidential business strategies or customer details.

Even ephemeral data, stored only for milliseconds during inference, can fall under data-sovereignty rules if it crosses borders. AI therefore expands the definition of data residency from “where data rests” to “where it moves.”

For organizations leveraging third-party LLM APIs or global AI platforms, even transient data may pass through jurisdictions with different privacy laws. Under regulations such as the EU GDPR, India’s Digital Personal Data Protection Act (DPDP), or Australia’s Privacy Act, these cross-border transfers create compliance and reputational risks.

In short: AI isn’t just producing insights; it’s generating new data liabilities. Every inference request is a micro-transaction of sensitive data that needs to be treated with the same rigor as stored information.

Who Cares About Data Residency and Why the Trend Is Accelerating

Historically, only highly regulated sectors (financial services, healthcare, defense, and government) worried about data residency. But in 2025, the trend has gone mainstream. Gartner’s Understanding the Landscape of Cloud Repatriation and Geopatriation (Sept 2025) notes that non-U.S. organizations are increasingly cautious about hosting data with U.S. or China-based cloud hyperscalers. Legislative developments like the U.S. CLOUD Act have intensified these concerns by allowing U.S. authorities to compel access to data held by American providers, even if that data resides outside U.S. borders. Major hyperscalers have also responded with sovereign-cloud offerings, such as AWS European Sovereign Cloud, Google’s EU Sovereign Cloud, and Microsoft Cloud for Sovereignty, each designed to reassure enterprises facing rising regulatory fragmentation.

In parallel, global enterprises are facing fragmentation in data regulation:

- The EU enforces strict cross-border data transfer limits under GDPR.

- India mandates storage of critical personal data within the country.

- Australia and the Middle East are introducing region-specific AI governance frameworks.

According to Gartner, inquiries about cloud sovereignty and geopatriation rose 305% in the first half of 2025, signaling that this concern has moved from niche to critical. In other words, organizations no longer view data residency as compliance hygiene—they see it as strategic risk management.

This shift is particularly pronounced for AI-first companies and SaaS providers. Their products often rely on user prompts, inference logs, and AI model telemetry that might traverse global infrastructure. For them, ensuring jurisdictional control isn’t optional—it’s foundational to customer trust and regulatory continuity.

Gartner Calls It: Data Residency and Geopatriation as Top Tech Trends for 2026

In its Top Strategic Technology Trends for 2026 report (Oct 2025), Gartner introduced a pivotal concept: Geopatriation. Defined as the relocation of workloads from hosting environments perceived to carry geopolitical risks to those offering greater sovereignty, geopatriation is expected to reshape how enterprises design their digital stacks.

Gartner predicts that by 2030, more than 75% of European and Middle Eastern enterprises will geopatriate their workloads into solutions that mitigate geopolitical exposure—up from less than 5% in 2025. This is a staggering projection, underscoring that sovereignty is becoming as important as scalability.

Gartner also positions Geopatriation within its Vanguard theme—alongside Preemptive Cybersecurity, Digital Provenance, and AI Security Platforms—indicating that data sovereignty is now core to digital trust. In an AI-driven enterprise, digital trust directly correlates with adoption, customer confidence, and regulatory resilience.

In essence, data sovereignty has become a board-level technology strategy, not merely a compliance checkbox.

Designing for Data Residency in an AI-First Stack

As organizations embrace agentic AI systems, the AI stack must evolve to embed residency controls at every layer—from inference to observability. Here are five design principles for AI architectures that respect jurisdictional data boundaries:

- Regionalized Logging and Storage: Ensure that AI prompts, responses, and usage logs are stored within the same jurisdiction as the end-user or data source. This prevents inadvertent data export through centralized observability systems.

- Jurisdiction-Aware Routing: Implement intelligent gateways that route model calls through region-appropriate APIs and infrastructure. This ensures that data generated in the EU never leaves the EU, and similarly for other regions.

- Encryption and Key Sovereignty: Adopt an “encryption everywhere” philosophy with customer-managed keys. Even if data passes through foreign infrastructure, decryption should only occur within the customer’s control.

- Provider Flexibility: Design for modularity so that different regions can use different LLM providers or infrastructure without architectural rewrites. For example, an EU deployment might use Mistral or Aleph Alpha, while the U.S. instance might use OpenAI or Anthropic.

- Transparent Auditing and Control: Maintain a real-time audit trail of all data interactions — what data was sent, where, and for what purpose. This traceability is key to compliance reporting.

These design principles ensure not just compliance, but also operational agility. Sovereign-by-design systems are inherently more adaptable to evolving data laws.
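The jurisdiction-aware routing principle above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any vendor's implementation: the `Endpoint` type, the `ROUTES` table, the provider labels, and the `example.internal` URLs are all hypothetical placeholders. The point it shows is the "fail closed" behavior: if no in-region endpoint exists, the request is rejected rather than sent elsewhere.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    provider: str  # hypothetical provider label
    region: str    # jurisdiction where inference runs
    url: str       # placeholder URL, not a real service


# Hypothetical routing table: each jurisdiction lists only in-region endpoints.
ROUTES: dict[str, list[Endpoint]] = {
    "eu": [Endpoint("local-llm", "eu", "https://llm.eu.example.internal/v1")],
    "us": [Endpoint("hosted-llm", "us", "https://llm.us.example.internal/v1")],
}


class ResidencyError(Exception):
    """Raised when no in-region endpoint exists for a request."""


def route(user_region: str) -> Endpoint:
    """Return an endpoint in the caller's jurisdiction, or fail closed."""
    candidates = ROUTES.get(user_region.lower(), [])
    if not candidates:
        # Fail closed: never fall back to an out-of-region endpoint.
        raise ResidencyError(f"no in-region endpoint for {user_region!r}")
    return candidates[0]
```

Calling `route("EU")` returns the EU endpoint, while a region with no entry in the table raises `ResidencyError`. Rejecting the request, instead of silently falling back to a default global endpoint, is the design choice that keeps an outage or misconfiguration from turning into an accidental cross-border transfer.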

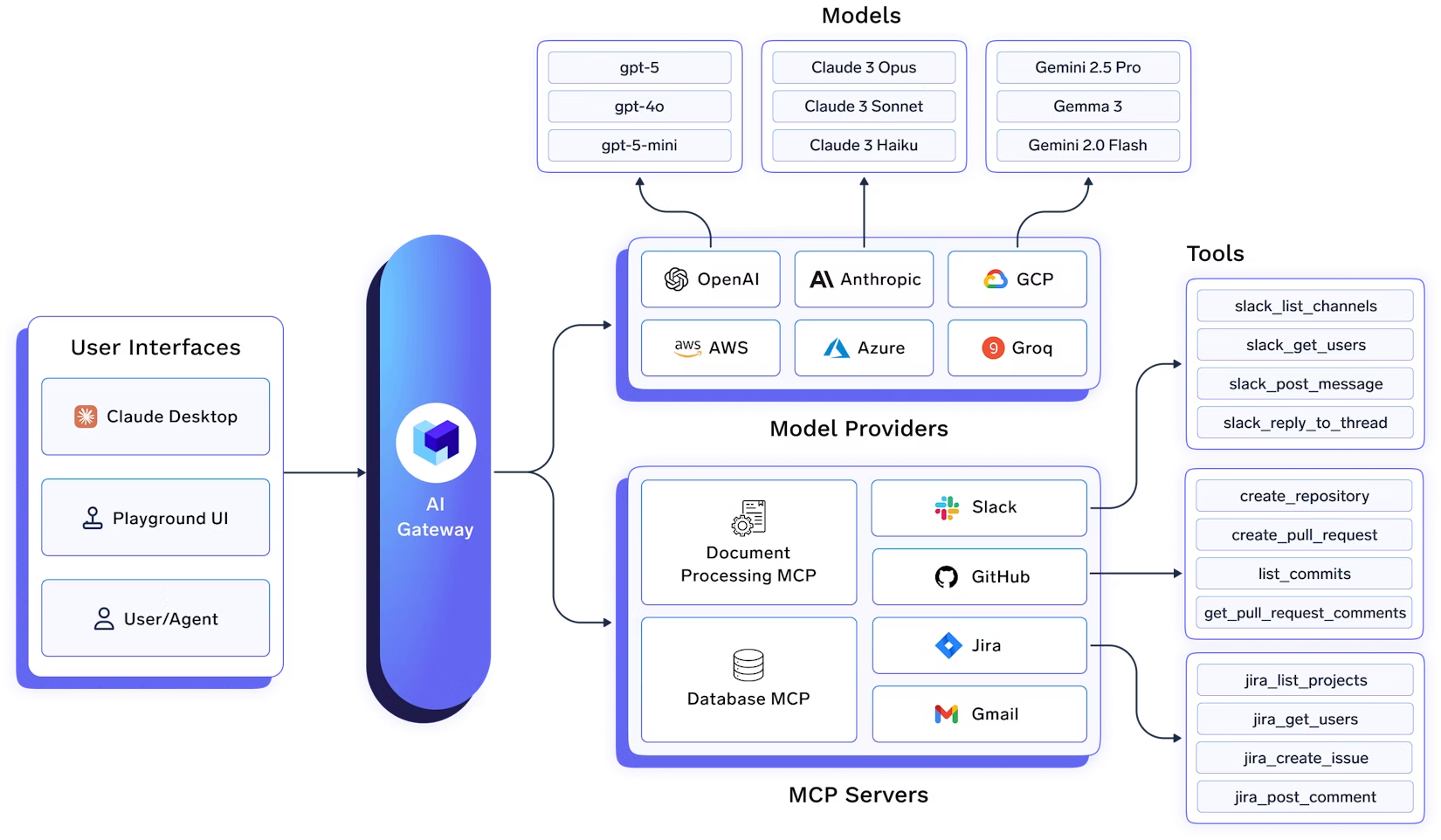

Data Residency in AI Gateways — The New Control Plane

In an AI-first enterprise, the AI Gateway becomes the nexus for enforcing sovereignty. Sitting between applications, users, and AI models, the gateway centralizes all AI traffic, providing a single point to apply residency and governance policies.

Key Capabilities of a Residency-Ready AI Gateway:

- Regional Log Isolation: Logging and analytics data is stored locally in-region.

- Routing Intelligence: Each API call is automatically directed to the correct regional endpoint based on user or data origin.

- Model Abstraction: The gateway allows switching between global and sovereign model providers without code changes.

- Privacy and Redaction Filters: Sensitive data is anonymized or masked before being sent to external models.

- Policy-Aware Observability: Monitoring and dashboards adhere to the same regional controls as the underlying data.

In essence, the AI Gateway acts as both traffic controller and compliance firewall, ensuring that innovation doesn’t come at the cost of sovereignty.
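As a rough illustration of the privacy and redaction capability listed above, the sketch below masks email addresses and phone-like numbers in a prompt before it would be forwarded to an external model. The two regular expressions are simplified assumptions for demonstration only; production redaction typically relies on much more thorough PII detection.

```python
import re

# Simplified, illustrative patterns; real PII detection needs far more coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(prompt: str) -> str:
    """Replace email addresses and phone-like numbers with placeholder tokens."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return PHONE.sub("[PHONE]", prompt)


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or +49 30 1234 5678 today"))
```

Running the filter at the gateway, rather than in each application, means every model call passes through the same masking rules regardless of which team wrote the calling code.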

How TrueFoundry’s AI Gateway Delivers Data Sovereignty and Scale

TrueFoundry’s AI Gateway was built with data residency and sovereignty at its core. It enables organizations to scale AI workloads globally while maintaining strict regional control.

Key Differentiators:

- Flexible Logging Architecture:

  - TrueFoundry’s Gateway stores all prompt and response logs within the user’s deployment region.

  - Organizations can configure log retention and masking policies independently per region (e.g., anonymize data in the EU, retain raw logs in the U.S.).

- Region-Aware Routing:

  - The Gateway automatically routes requests to model providers compliant with regional laws.

  - This enables hybrid deployments, for example sending EU data to a local LLM hosted on EU infrastructure while U.S. traffic leverages OpenAI APIs.

- Bring-Your-Own-Cloud and On-Prem Support:

  - Enterprises can deploy the Gateway on private cloud, VPC, or on-prem environments to meet internal compliance mandates.

- A global view of metrics without compromising residency:

  - TrueFoundry aggregates anonymized observability data while ensuring local log isolation.

- Encryption and Key Control:

  - All data in transit and at rest is encrypted, and organizations retain full control of decryption keys per region.

This architecture empowers enterprises to meet data sovereignty requirements without sacrificing scalability, latency, or developer productivity.
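To make the idea of independent per-region retention and masking policies concrete, here is a small sketch. It is not TrueFoundry's configuration schema or API: the `LogPolicy` type, the `POLICIES` table, the `build_log_record` helper, and the 30-day and 90-day retention values are hypothetical and chosen only to mirror the "anonymize in the EU, retain raw logs in the U.S." example above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class LogPolicy:
    retention_days: int  # how long a log record may be kept
    mask_content: bool   # whether prompt/response text is masked before storage


# Hypothetical per-region policies; the numbers are illustrative only.
POLICIES = {
    "eu": LogPolicy(retention_days=30, mask_content=True),
    "us": LogPolicy(retention_days=90, mask_content=False),
}


def build_log_record(region, prompt, response, now=None):
    """Build a log record that follows the policy of the region it is stored in."""
    policy = POLICIES[region]  # KeyError for unknown regions: no implicit default
    now = now or datetime.now(timezone.utc)
    return {
        "region": region,
        "prompt": "[MASKED]" if policy.mask_content else prompt,
        "response": "[MASKED]" if policy.mask_content else response,
        "expires_at": now + timedelta(days=policy.retention_days),
    }
```

Keeping the policy keyed by region means a change in one jurisdiction's rules is a one-line configuration update rather than a code change in every application that logs AI traffic.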

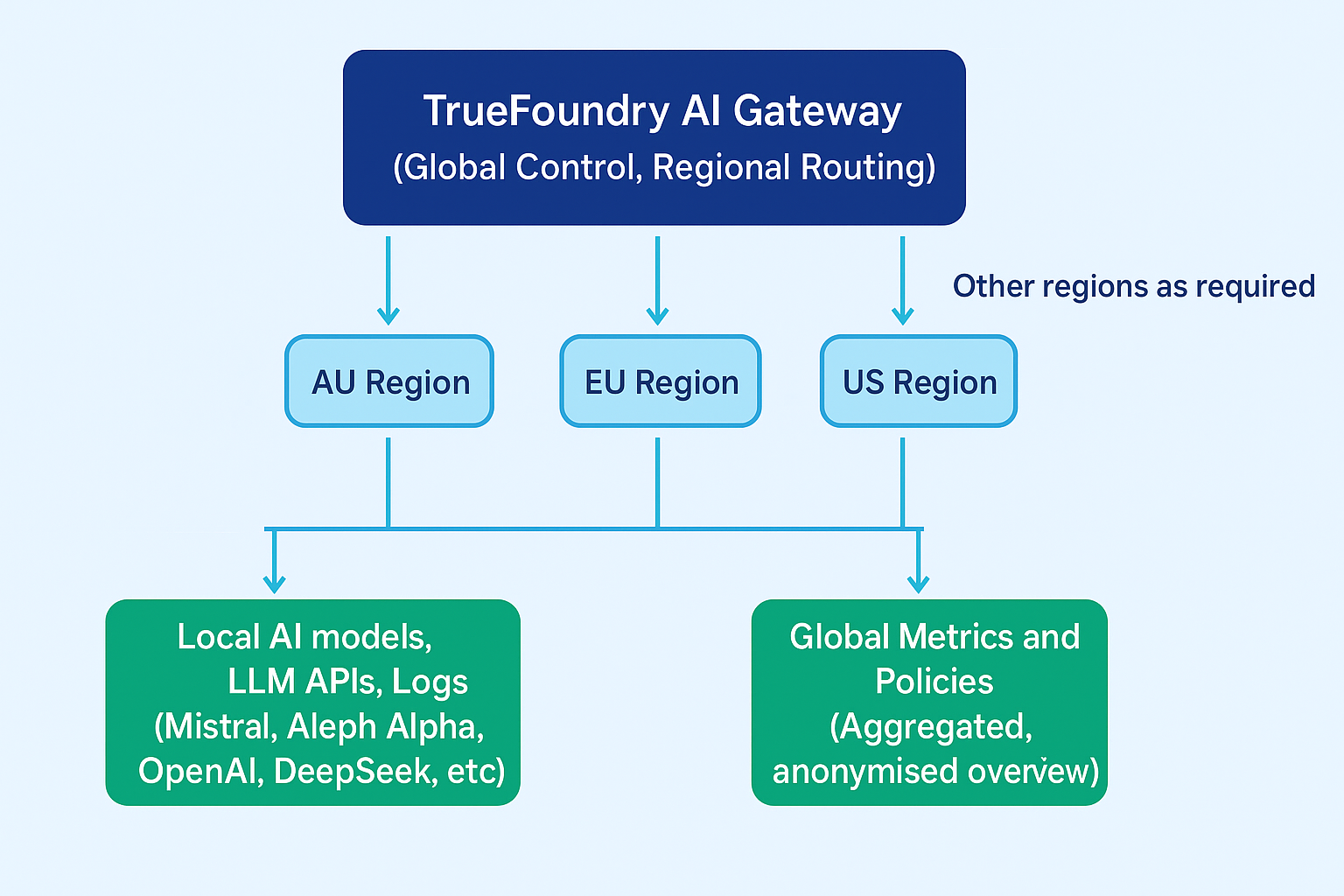

Multi-Region AI Data Residency Flow

Below is a conceptual representation of how a multi-region enterprise can manage AI data flows through TrueFoundry’s AI Gateway.

In this setup:

- Prompts and responses from users in each region are handled by their local AI Gateway endpoint.

- Logs are stored regionally and never cross jurisdictions.

- Global administrators retain visibility into performance and usage metrics, but without accessing raw data.

This architecture provides data sovereignty without fragmentation—a unified AI layer that respects borders while maintaining cohesion.
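One way to picture the split between regional raw logs and a global metrics view is the sketch below. It assumes a hypothetical log shape (dictionaries with `prompt`, `latency_ms`, and `tokens` fields) and hypothetical `summarize` and `global_view` helpers. Each region reduces its own logs to content-free counters, and only those counters are shared with global administrators.

```python
from statistics import mean


def summarize(region, logs):
    """Reduce raw regional logs to content-free metrics (runs inside the region)."""
    return {
        "region": region,
        "requests": len(logs),
        "avg_latency_ms": mean(log["latency_ms"] for log in logs) if logs else 0.0,
        "total_tokens": sum(log["tokens"] for log in logs),
    }


def global_view(regional_logs):
    """Collect per-region summaries; raw prompts and responses are never copied."""
    return [summarize(region, logs) for region, logs in regional_logs.items()]


if __name__ == "__main__":
    sample = {
        "eu": [
            {"prompt": "p1", "latency_ms": 100, "tokens": 10},
            {"prompt": "p2", "latency_ms": 200, "tokens": 30},
        ],
        "us": [],
    }
    for row in global_view(sample):
        print(row)
```

Because `summarize` builds a new dictionary from numeric fields only, prompt text cannot leak into the global view by accident, even if the raw log schema later gains additional content fields.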

Conclusion

As Agentic AI systems automate workflows and make increasingly autonomous decisions, the trust boundary between organizations and AI models is expanding. The organizations that win in this new era will be those that can balance agility with sovereignty.

Data residency is no longer a compliance checklist; it is a core pillar of AI infrastructure strategy. Gartner’s identification of geopatriation as a top technology trend for 2026 validates this trajectory—enterprises are recognizing that where their data lives directly impacts how securely and responsibly they can innovate.

AI Gateways like TrueFoundry’s represent the next evolution in enterprise AI infrastructure. They empower organizations to scale globally, operate locally, and stay compliant effortlessly. In a world where AI is everywhere, control over data location equals control over destiny.

References:

Gartner Top 10 Technology Trends for 2026

Understanding the Landscape of Cloud Repatriation and Geopatriation
