Secure Enterprise AI Gateway with Centralized MCP

Introduction

Large‑language models can now reason, code and generate with breathtaking fluency. Yet they remain disconnected from the data, workflows and APIs that make your business unique. The result: powerful models that still need humans to copy‑paste data between tools.

We have entered an age of agentic AI where LLM‑powered agents can autonomously call tools, trigger incidents or update CRMs. But to act safely and reliably, agents need a universal way to discover and invoke enterprise APIs.

That universal port is the Model Context Protocol (MCP). In this post we unpack MCP, why it matters for enterprises, and how TrueFoundry’s new AI Gateway with MCP support turns the vision into production reality.

We recently hosted an in-depth webinar on using Model Context Protocol (MCP) in enterprise settings.

What is Model Context Protocol (MCP)?

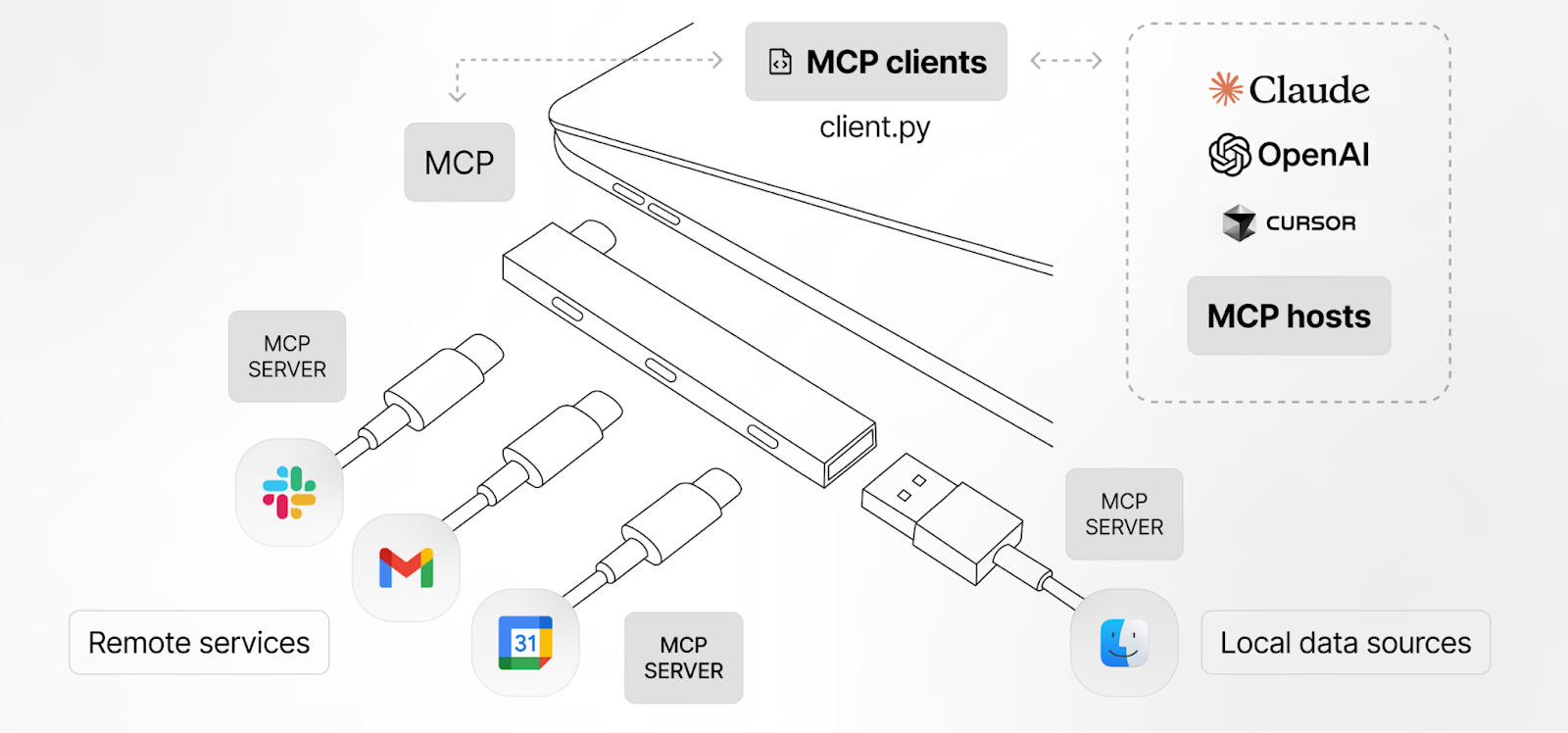

MCP is an open standard that lets software expose its API surface in an LLM‑friendly schema—think of it as USB‑C for AI applications. An MCP server publishes tool definitions, authentication requirements and usage descriptions that an LLM can read at runtime.
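To make "tool definition" concrete, here is a minimal sketch of what one published tool might look like when represented in Python. The `name`, `description` and `inputSchema` fields follow the shape MCP uses when listing tools; the tool's contents (the `days_overdue` argument and its limits) and the `missing_arguments` helper are invented for illustration.

```python
# Hypothetical descriptor for an illustrative tool. Only the three top-level
# field names come from the MCP tool-listing shape; the rest is assumed.
LIST_OVERDUE_INVOICES_TOOL = {
    "name": "list_overdue_invoices",
    "description": "Return invoices whose due date has passed and that remain unpaid.",
    "inputSchema": {  # a JSON Schema object describing the tool's arguments
        "type": "object",
        "properties": {
            "days_overdue": {"type": "integer", "minimum": 1},
        },
        "required": ["days_overdue"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required argument names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]
```

Because the schema is machine-readable, a client or gateway can run a cheap check like `missing_arguments` before forwarding a call that the model has proposed.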

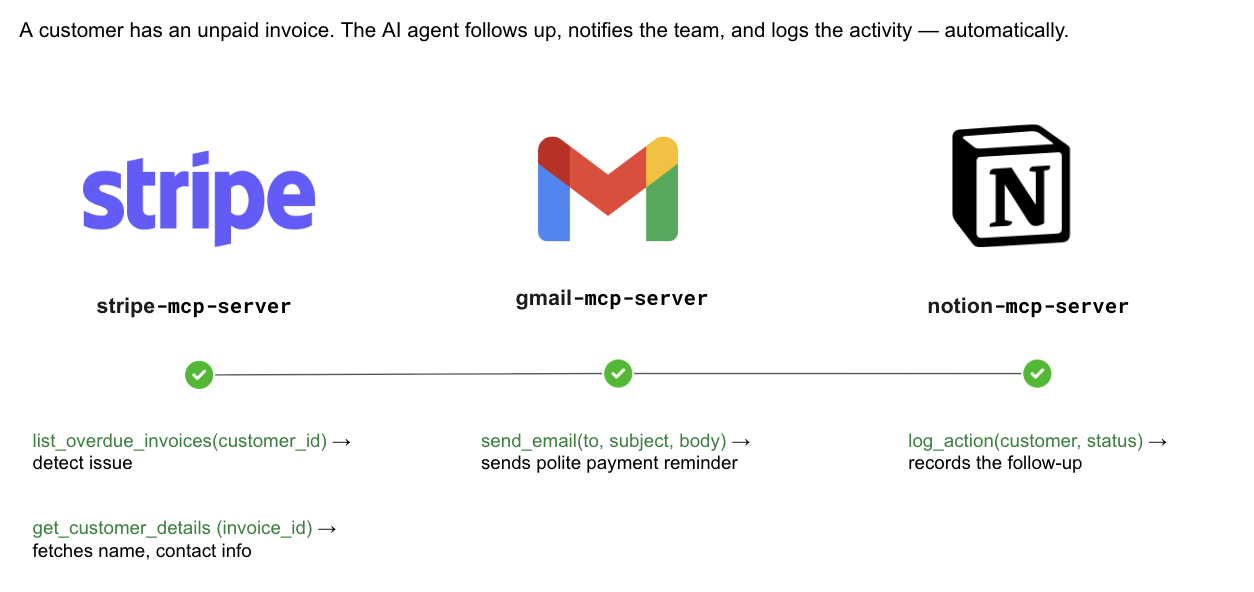

With MCP, you can build an invoice agent. When a customer’s invoice slips past its due date, the agent will use the following workflow:

- Stripe MCP: Calls list_overdue_invoices to identify unpaid bills, then get_customer_details to pull the customer’s name and contact information.

- Gmail MCP: Crafts and sends a courteous reminder email via send_email.

- Notion MCP: Records the outreach with log_action, so finance and account teams have an up-to-date audit trail.

The entire workflow (detect, notify, record) executes autonomously, eliminating manual context-switching and data entry. Because every step is encapsulated in a reusable MCP call, the same architecture extends to other finance operations, from subscription renewals to vendor disbursements, providing a consistent automation layer.
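The detect, notify and record sequence can be sketched as follows. This is a simplified illustration, not how an agent is actually wired: in a real agent the LLM chooses each call, whereas here the order is hard-coded. `FakeMCP` is a hypothetical in-memory stand-in for an MCP server, and the argument names and canned data are assumptions; only the four tool names come from the workflow above.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FakeMCP:
    """Hypothetical in-memory stand-in for an MCP server; records each call."""
    handlers: dict[str, Callable]
    calls: list = field(default_factory=list)

    def call(self, tool: str, **arguments):
        self.calls.append((tool, arguments))
        return self.handlers[tool](**arguments)


def run_invoice_reminders(stripe: FakeMCP, gmail: FakeMCP, notion: FakeMCP) -> int:
    """Detect overdue invoices, notify each customer, and record the outreach."""
    reminded = 0
    for invoice in stripe.call("list_overdue_invoices"):
        customer = stripe.call("get_customer_details", customer_id=invoice["customer_id"])
        gmail.call(
            "send_email",
            to=customer["email"],
            subject=f"Reminder: invoice {invoice['id']} is overdue",
            body=f"Hi {customer['name']}, invoice {invoice['id']} is past its due date.",
        )
        notion.call("log_action", action="reminder_sent", invoice_id=invoice["id"])
        reminded += 1
    return reminded


# Canned data for the stand-in servers (all values invented for illustration).
stripe = FakeMCP({
    "list_overdue_invoices": lambda: [{"id": "inv_1", "customer_id": "cus_1"}],
    "get_customer_details": lambda customer_id: {"name": "Ada", "email": "ada@example.com"},
})
gmail = FakeMCP({"send_email": lambda to, subject, body: "sent"})
notion = FakeMCP({"log_action": lambda action, invoice_id: "logged"})

reminders_sent = run_invoice_reminders(stripe, gmail, notion)
```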

Operational Challenges with MCP at Scale

Deploying one demo server is easy, but rolling MCP out organization-wide is not. Common hurdles include:

- Hosting & lifecycle – Custom servers must run close to sensitive data, sometimes air‑gapped.

- Central discovery – Developers need a registry of approved MCP servers.

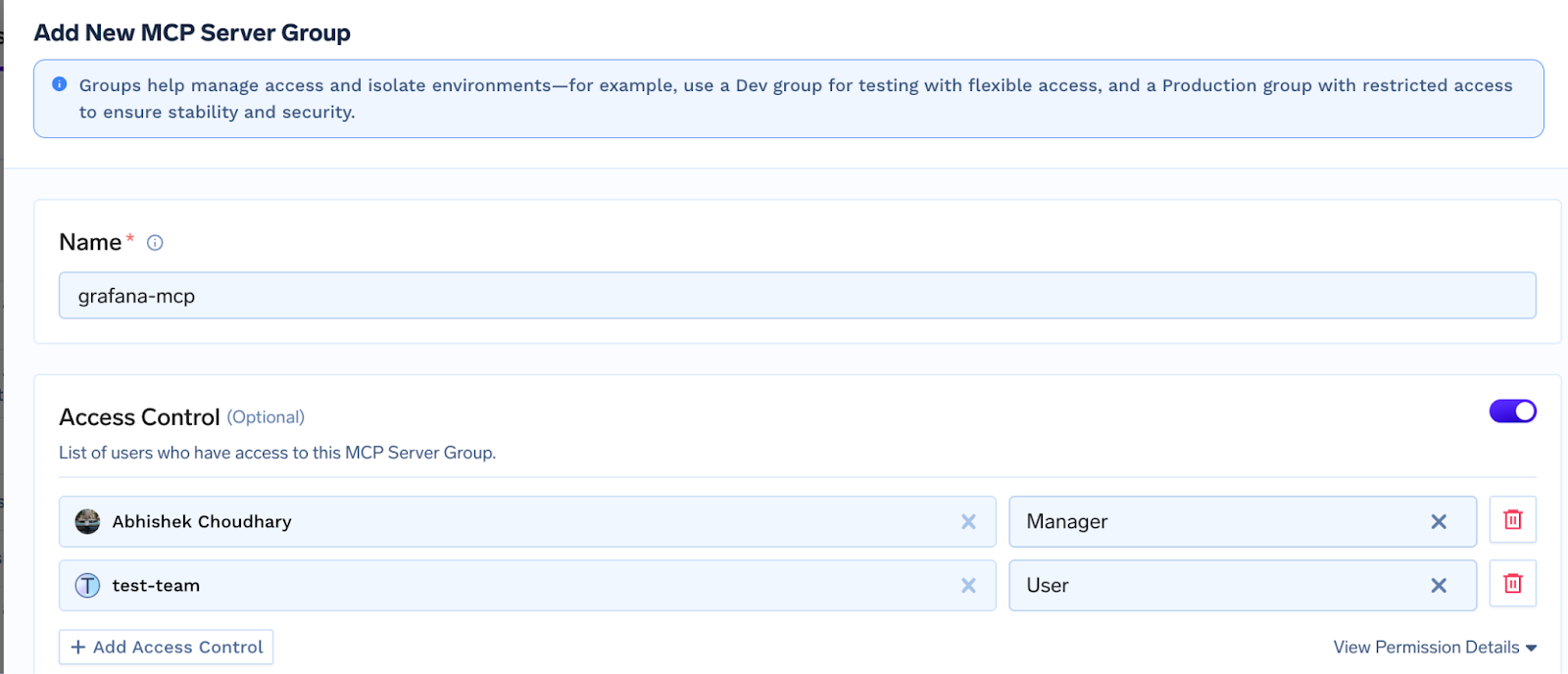

- Access control – Define which teams, users and applications can access which MCP servers or tools in the registry.

- Authentication & fine‑grained authorization – A user calling an MCP server must only be able to reach the data they are already entitled to access.

- Guardrails – Destructive tools (e.g., DELETE_CUSTOMER) should require human approval.

- Observability & cost controls – Without tracing, runaway agents can spam APIs or rack up bills.

TrueFoundry’s MCP‑Enabled AI Gateway

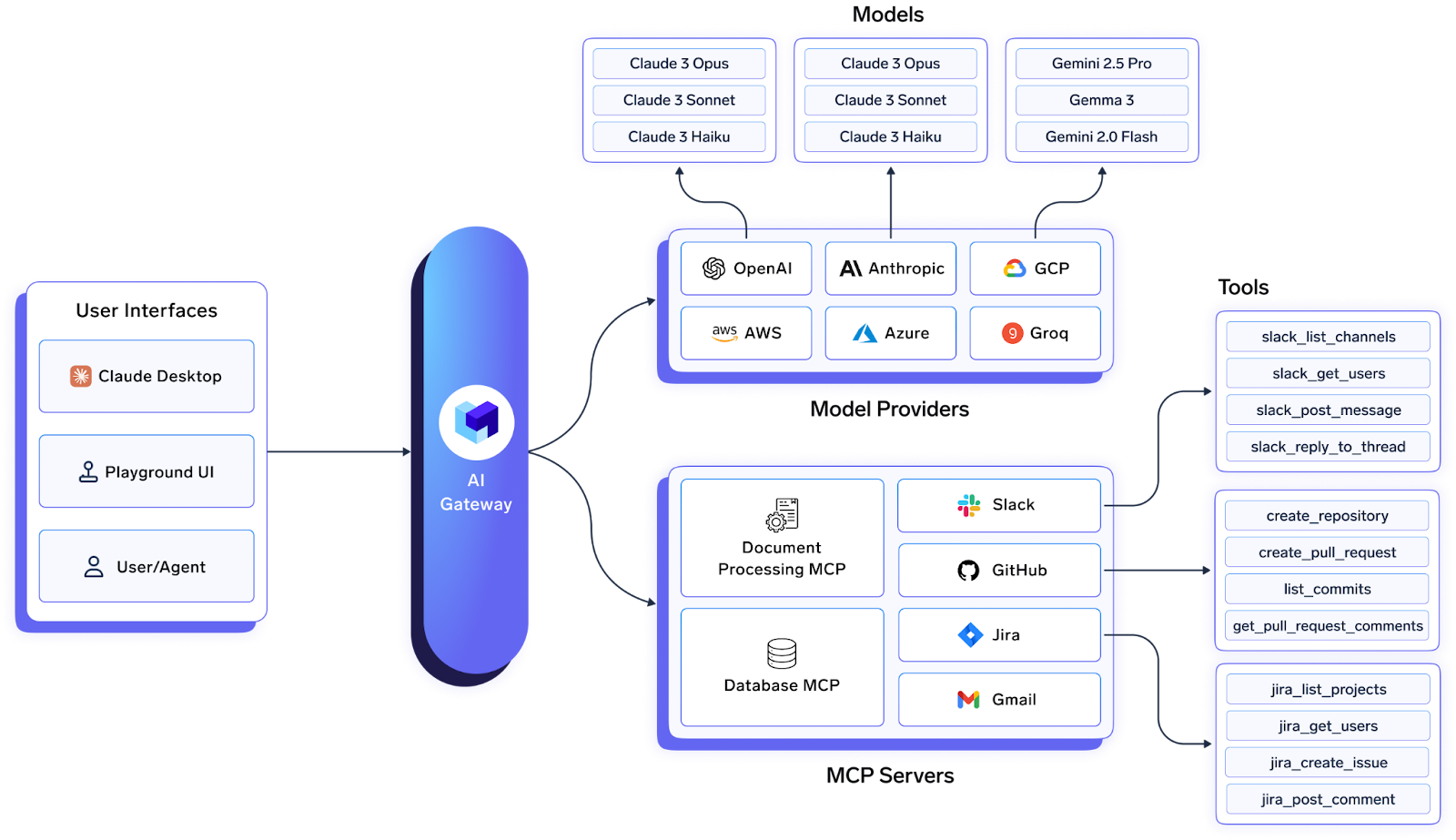

TrueFoundry’s AI Gateway already provided a unified, low‑latency proxy for multiple LLM providers. Today we’re extending it to become the central MCP control plane inside your VPC—on any cloud or on‑prem.

- Register public or self‑hosted MCP servers.

- Enforce OAuth‑backed auth so every tool call carries the user’s real identity.

- Apply org‑wide guardrails, rate limits and semantic caching.

- Trace every agent step for auditing and cost attribution.

Deep‑Dive: Key Gateway Capabilities

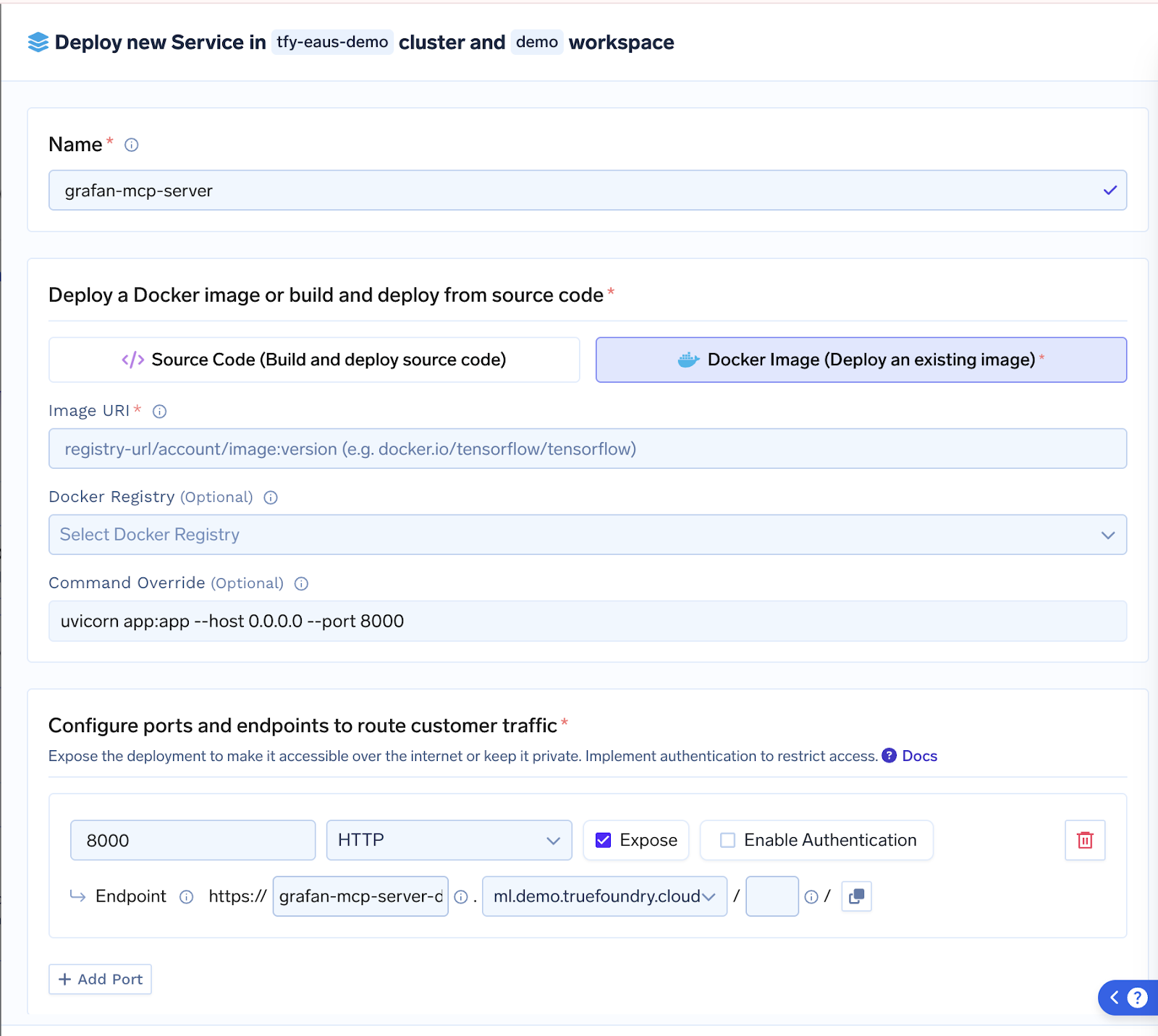

Effortless MCP Server Deployment: Ship servers as source code or Docker images; the Gateway handles container build, rollout and auto‑scaling with zero downtime.

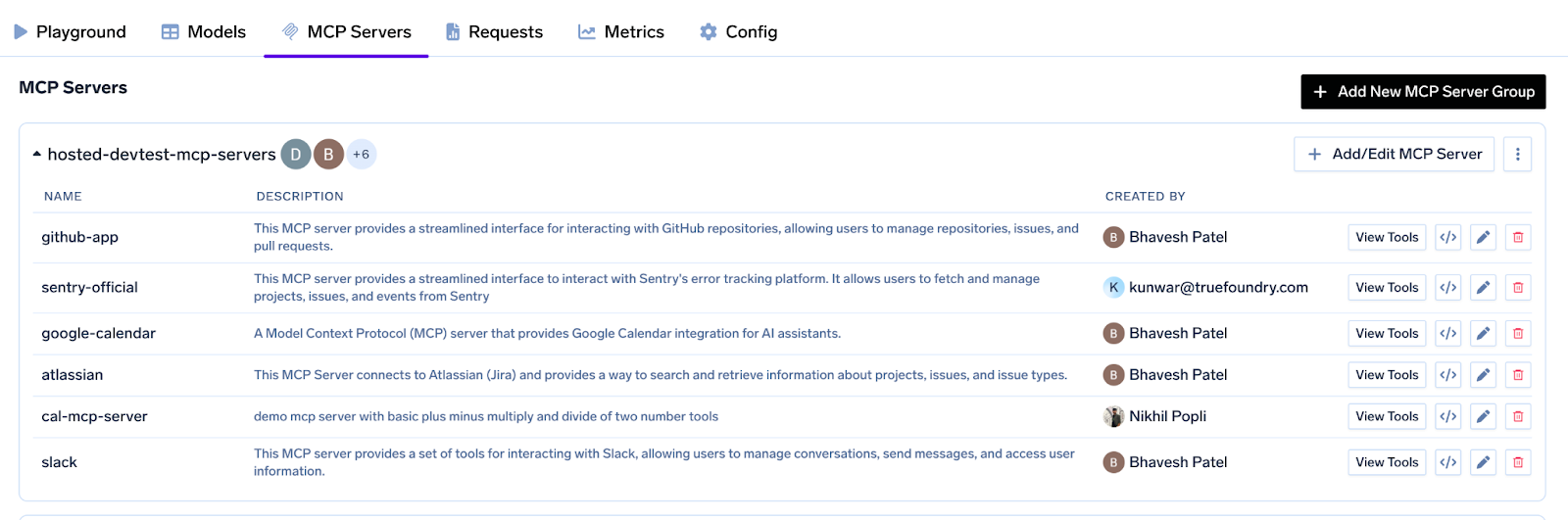

Central Registry & OAuth‑Backed Auth: A single pane lists Slack, GitHub, Salesforce—or your custom Postgres MCP—complete with token refresh logic and per‑user scopes.

Fine‑Grained Access Control: Assign servers (or individual tools) to specific teams, service‑accounts or environments so a staging agent can’t touch prod data.
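One way to picture this kind of policy is a lookup from a principal to the server and tool patterns it may use. The sketch below is an assumption about how such a check could work, not the Gateway's actual policy format; the `POLICIES` table, the principal names and the `server/tool` pattern syntax are all hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical policy table: principal -> allowed "server/tool" glob patterns.
POLICIES = {
    "team:finance": ["stripe/*", "notion/log_action"],
    "svc:staging-agent": ["postgres-staging/*"],
}


def is_allowed(principal: str, server: str, tool: str) -> bool:
    """Return True if any pattern granted to the principal matches server/tool."""
    target = f"{server}/{tool}"
    return any(fnmatchcase(target, pattern) for pattern in POLICIES.get(principal, []))
```

With this table, `is_allowed("svc:staging-agent", "postgres-prod", "query")` is false, which is the "staging agent can't touch prod data" case. Unknown principals get no access by default.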

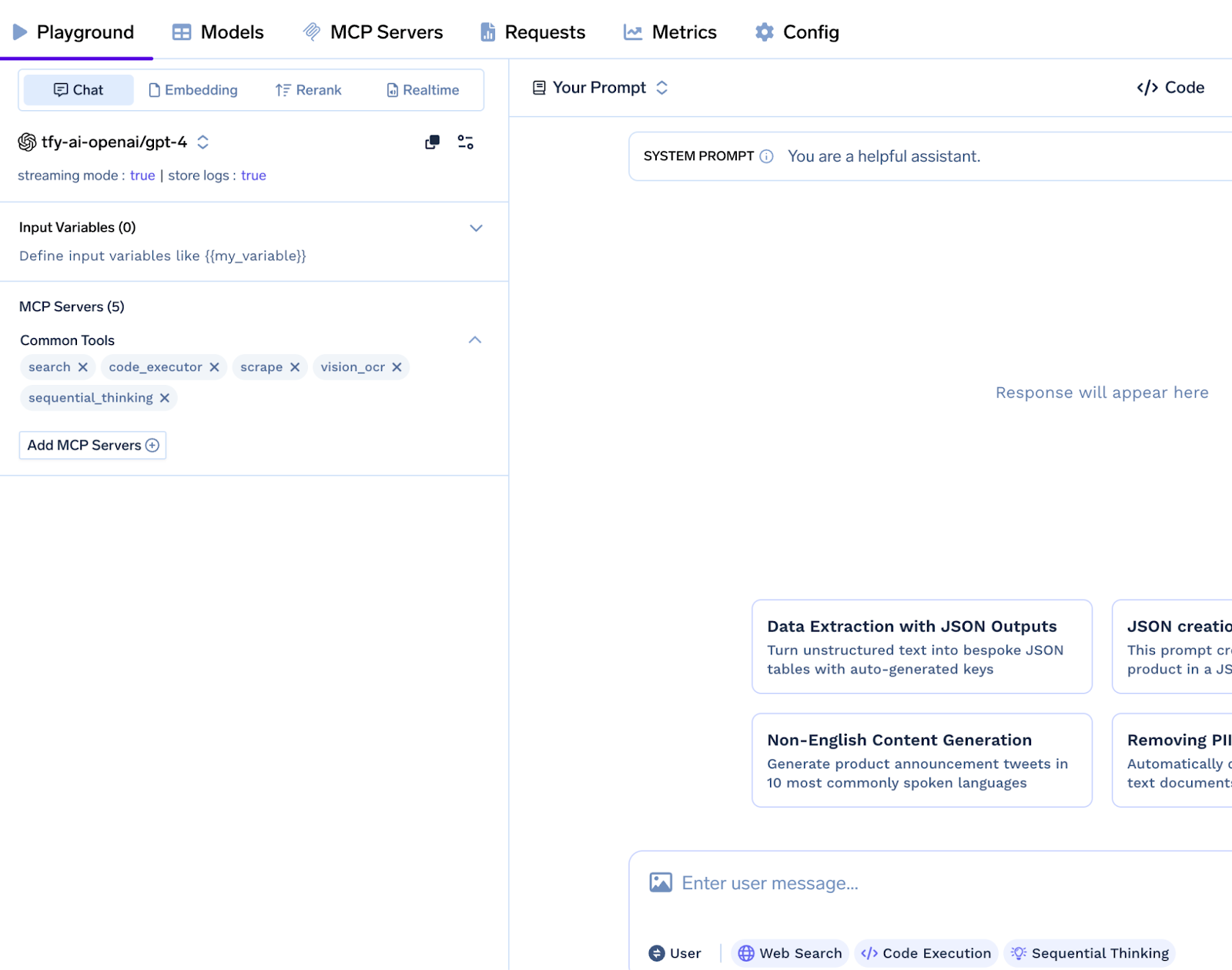

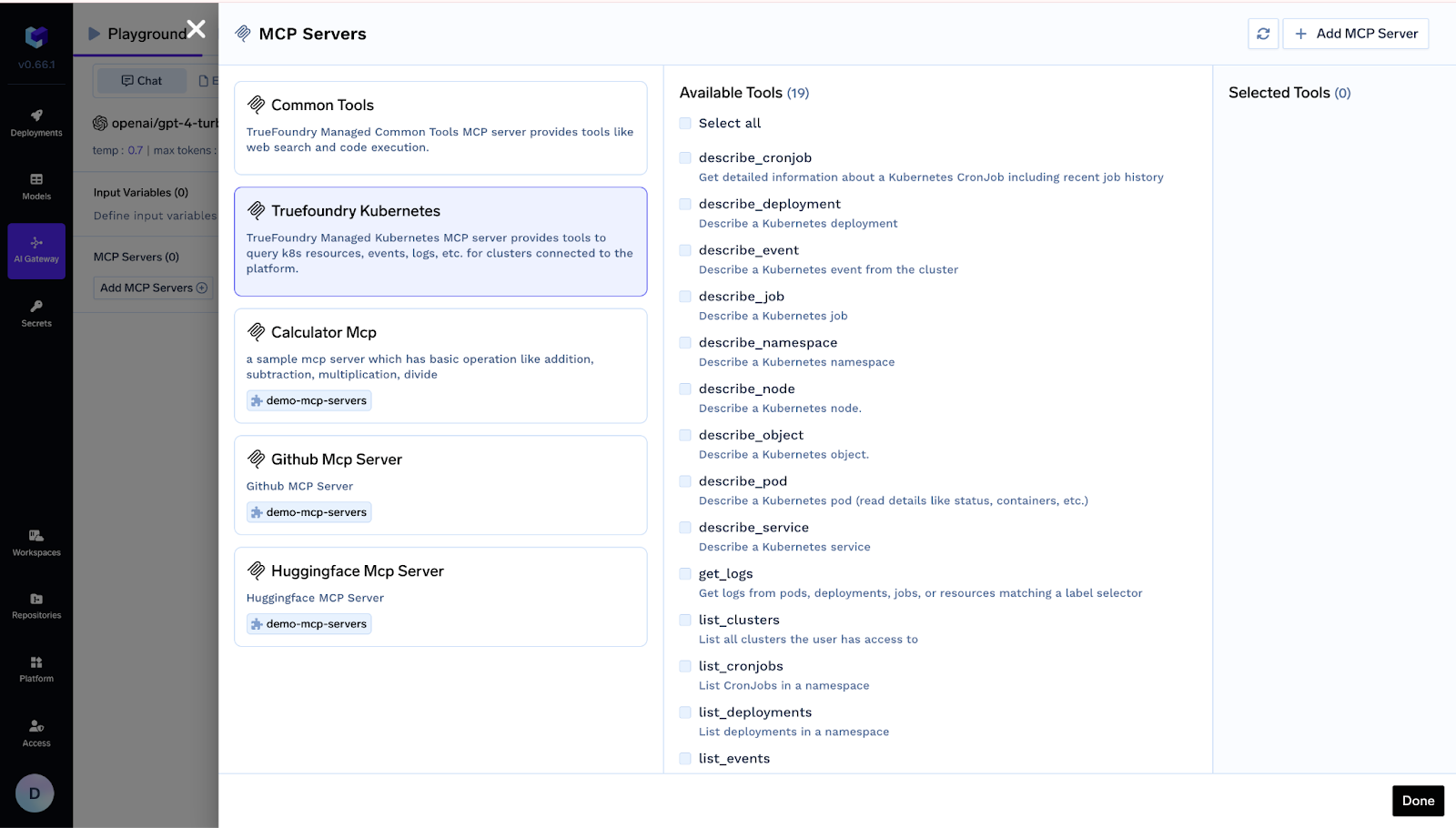

Agent Playground & Code Snippets: Spin up agents in minutes: pick tools, write a prompt, watch real‑time traces, then copy ready‑made Python snippets into your pipeline.

Guardrails, Observability & Rate‑Limiting: PII redaction, manual‑approval hooks, per‑tool quotas and full distributed traces.
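As a rough illustration of how a manual-approval hook and a per-tool quota might combine, the sketch below gates destructive tools behind an approval callback and applies a sliding-window limit per user and tool. The `ToolGuard` class, its parameters and the returned strings are hypothetical; only DELETE_CUSTOMER as an example of a destructive tool comes from this post.

```python
import time
from collections import defaultdict, deque

DESTRUCTIVE_TOOLS = {"DELETE_CUSTOMER"}  # example of a tool that needs approval


class ToolGuard:
    """Hypothetical guard: approval hook plus sliding-window quota per (user, tool)."""

    def __init__(self, max_calls: int, window_seconds: float, approve, clock=time.monotonic):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.approve = approve      # callable(user, tool) -> bool, e.g. a human review step
        self.clock = clock          # injectable so the window can be tested
        self._history = defaultdict(deque)

    def check(self, user: str, tool: str) -> str:
        if tool in DESTRUCTIVE_TOOLS and not self.approve(user, tool):
            return "denied: approval required"
        now = self.clock()
        calls = self._history[(user, tool)]
        # Drop timestamps that have aged out of the window.
        while calls and now - calls[0] >= self.window_seconds:
            calls.popleft()
        if len(calls) >= self.max_calls:
            return "denied: quota exceeded"
        calls.append(now)
        return "allowed"
```

Keeping the history in memory keeps the check cheap, at the cost of losing counts on restart. A shared store would be needed if several gateway replicas had to enforce one quota.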

Real‑World Use‑Cases & Demos

Below are two hands-on examples we showed during the webinar. Each one follows a clear, step‑by‑step flow so you can picture what the agent is doing under the hood.

1. Debugger Agent

Purpose — Automated triage and remediation of production failures.

Workflow:

- Accept a failing service URL from the user.

- Query the Kubernetes MCP to list active pods and stream real‑time logs.

- Parse the stack trace, then pivot to the GitHub MCP to pinpoint the exact file and line that triggered the error.

- Generate a fix on a new branch, push the commit, and open a Pull Request.

- Post the PR link to the designated Slack channel for rapid review.

2. Interview Assistant

Purpose — Deliver concise candidate briefs before interview panels meet.

Workflow:

- Scan tomorrow’s Google Calendar events whose titles contain “Interview.”

- Retrieve any attached resumes or linked Drive documents.

- Run each PDF through an OCR MCP to obtain clean text.

- Extract key details—current employer, total experience, and recent roles—while redacting personal identifiers.

- Publish a short summary in the private “Interview‑Prep” Slack channel so the panel walks in prepared.

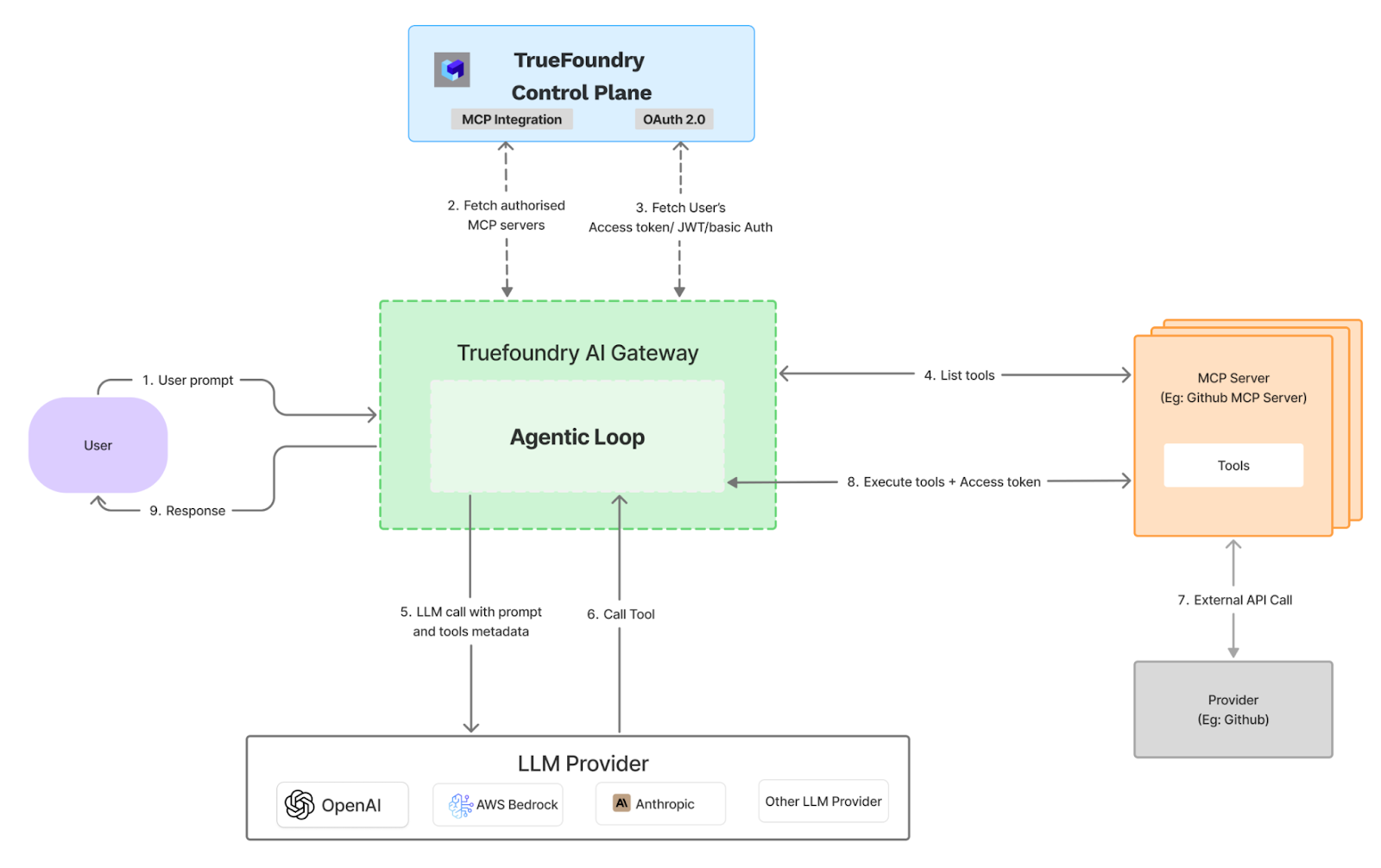

System Architecture Overview

The TrueFoundry AI Gateway is a high-performance proxy that sits between the end user's agentic loop and downstream services, including large language model (LLM) providers and MCP servers. To keep latency low, it executes critical functions such as authentication, rate limiting and guardrails in memory, and processes logging and analytics data asynchronously.

Request Lifecycle and Data Flow

The operational flow from user prompt to final response is executed in a precise, multi-step sequence:

1. Initial Request Ingestion

The Gateway receives an initial prompt from the user. Upon ingestion, the request is tagged with a unique identifier for tracing and immediately forwarded for processing.

2. Authentication and Authorization

The Gateway communicates with the TrueFoundry Control Plane to perform two critical security functions:

- It fetches a list of authorized MCP servers that the user has permission to access based on predefined policies.

- It requests and receives fresh, short-lived access tokens for each of these authorized servers, ensuring that credentials have a limited exposure time.
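The two lookups above can be sketched as a single "prepare session" step. Everything here is an assumption for illustration: `FakeControlPlane` is a stand-in and not the TrueFoundry Control Plane API, and the 300-second lifetime is an arbitrary example value.

```python
import secrets
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessToken:
    server: str
    value: str
    expires_at: float

    def is_valid(self, now: float) -> bool:
        return now < self.expires_at


class FakeControlPlane:
    """Hypothetical stand-in for a control plane that knows per-user grants."""

    def __init__(self, grants: dict[str, list[str]], ttl_seconds: float = 300.0, clock=time.time):
        self._grants = grants
        self._ttl = ttl_seconds
        self._clock = clock

    def authorized_servers(self, user: str) -> list[str]:
        return list(self._grants.get(user, []))

    def issue_token(self, user: str, server: str) -> AccessToken:
        if server not in self._grants.get(user, []):
            raise PermissionError(f"{user} may not access {server}")
        # Short lifetime limits how long a leaked token stays useful.
        return AccessToken(server, secrets.token_urlsafe(16), self._clock() + self._ttl)


def prepare_session(control_plane: FakeControlPlane, user: str) -> dict[str, AccessToken]:
    """Fetch the user's authorized servers, then one fresh token per server."""
    return {
        server: control_plane.issue_token(user, server)
        for server in control_plane.authorized_servers(user)
    }
```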

3. Dynamic Tool Discovery

Using the newly acquired tokens, the Gateway queries each authorized MCP server to discover the set of available tools or functions. This process occurs in real-time for every user request, and the results are cached for the duration of that request to optimize performance.
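A request-scoped cache of this kind can be very small. In the sketch below, `RequestToolCache` is a hypothetical name, and `list_tools` is an assumed callable that asks one MCP server for its tools. A new cache instance would be created for each user request, so nothing is shared across requests.

```python
class RequestToolCache:
    """Hypothetical cache that lives for one request and queries each server once."""

    def __init__(self, list_tools):
        self._list_tools = list_tools  # assumed callable(server, token) -> list of tool dicts
        self._tools = {}

    def tools_for(self, server: str, token: str) -> list:
        # Only the first lookup for a server reaches the MCP server itself.
        if server not in self._tools:
            self._tools[server] = self._list_tools(server, token)
        return self._tools[server]
```

Scoping the cache to a single request trades some repeated discovery work for freshness: a tool added or revoked on a server is picked up on the very next request.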

4. Unified LLM Invocation

The Gateway constructs a single, comprehensive prompt for the designated LLM provider (e.g., OpenAI, AWS Bedrock). This prompt includes the original user query along with the metadata and schemas of all the discovered tools, enabling the LLM to generate a complete, multi-step execution plan.
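As an illustration of what "query plus tool schemas" could look like on the wire, the sketch below converts MCP-style tool descriptors into an OpenAI-style chat request with a `tools` array. This assumes a provider that accepts that function-calling shape; other providers use different request formats. The `build_llm_request` helper and the `server__tool` naming convention are hypothetical, and the model name is left to the caller.

```python
def build_llm_request(user_prompt: str, tools_by_server: dict, model: str) -> dict:
    """Combine the user's query with every discovered tool schema in one request body."""
    tools = []
    for server, server_tools in tools_by_server.items():
        for tool in server_tools:
            tools.append({
                "type": "function",
                "function": {
                    # Prefix with the server name so identically named tools
                    # on different servers do not collide (assumed convention).
                    "name": f"{server}__{tool['name']}",
                    "description": tool.get("description", ""),
                    "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
                },
            })
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_prompt}],
        "tools": tools,
    }
```

The prefix also tells the caller which MCP server to route a call to when the model picks a tool.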

5. Iterative Tool Execution

The Gateway then executes the plan generated by the LLM in a sequential, iterative loop:

- The Gateway invokes the selected tool on the appropriate MCP server, passing the necessary user-specific access token with the call.

- The result from the tool execution is returned to the Gateway.

- This output is then fed back into the context of the LLM for the next step of the plan.

- This cycle repeats until the LLM determines that the task is complete.
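The loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Gateway's implementation: `llm_step` and `call_tool` are assumed callables, the decision dictionary format is invented, `list_pods` is a hypothetical tool name, and the `max_steps` cap is an added safety measure against runaway loops.

```python
def run_agent_loop(llm_step, call_tool, user_prompt: str, max_steps: int = 10):
    """Call tools chosen by the LLM until it reports that the task is complete."""
    context = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        decision = llm_step(context)
        if decision["type"] == "final":
            return decision["content"]
        result = call_tool(decision["server"], decision["tool"], decision["arguments"])
        # Feed the tool output back so the next LLM step can use it.
        context.append({"role": "tool", "name": decision["tool"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")


def scripted_llm(context):
    """Stand-in for a real LLM: request one tool call, then summarize its result."""
    tool_results = [m for m in context if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "server": "kubernetes", "tool": "list_pods", "arguments": {}}
    return {"type": "final", "content": f"Found pods: {tool_results[-1]['content']}"}


def fake_call_tool(server, tool, arguments):
    """Stand-in for invoking a tool on an MCP server with the user's token."""
    return ["api-7f9c"]


answer = run_agent_loop(scripted_llm, fake_call_tool, "Why is checkout failing?")
```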

6. Encapsulated External API Interaction

All external API calls (e.g., to GitHub, Stripe) are handled exclusively by the MCP servers, not the Gateway.

7. Response Delivery and Asynchronous Logging

Once the execution loop is complete, the final response is transmitted back to the user. Concurrently, all relevant operational data—including traces, performance timings, and cost metrics—is sent to an asynchronous queue for ingestion by observability and analytics platforms. This ensures that logging does not introduce any latency into the user-facing response time.
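The pattern of queueing records and draining them off the request path can be sketched with the standard library. `AsyncTraceLogger` and its `sink` callable are hypothetical names; a production system would more likely use a durable message queue than an in-process one.

```python
import queue
import threading


class AsyncTraceLogger:
    """Hypothetical logger: the request path only enqueues; a worker thread ships records."""

    def __init__(self, sink):
        self._sink = sink  # assumed callable(record), e.g. a write to an analytics store
        self._queue = queue.Queue()
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def log(self, record: dict) -> None:
        # An unbounded queue never blocks here, so the caller is not delayed.
        self._queue.put_nowait(record)

    def _drain(self) -> None:
        while True:
            record = self._queue.get()
            try:
                if record is None:  # sentinel posted by close()
                    return
                self._sink(record)
            finally:
                self._queue.task_done()

    def close(self) -> None:
        """Flush everything queued so far, then stop the worker."""
        self._queue.put(None)
        self._worker.join()
```

The request handler pays only for an in-memory enqueue; the cost of writing to the sink is paid on the worker thread.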

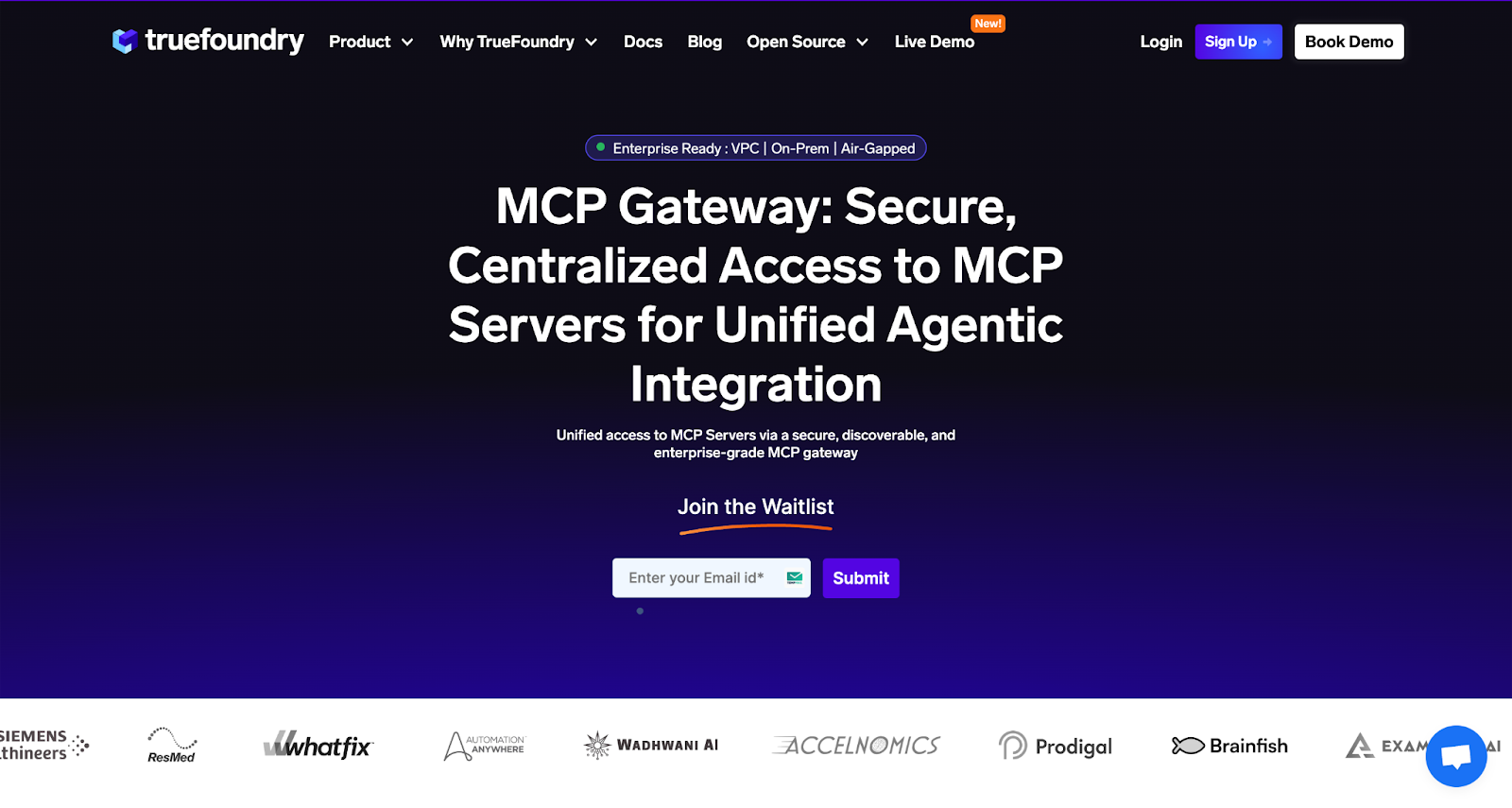

Getting Started & Beta Launch of MCP Gateway

The Beta launch of MCP Gateway is live on the TrueFoundry Control Plane. Sign up for a three‑month trial, or book a personalised advisory session to benchmark cost, latency and architecture with our team.

Ready to turn siloed APIs into super‑powered agents? Create your free account and plug your first MCP server in minutes.

Built for Speed: ~10ms Latency, Even Under Load

Blazingly fast way to build, track and deploy your models!

- Handles 350+ RPS on just 1 vCPU — no tuning needed

- Production-ready with full enterprise support

TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready. LiteLLM, by comparison, suffers from high latency, struggles beyond moderate RPS, lacks built-in scaling, and is best suited to light or prototype workloads.
