LLMOps for Model Serving & Inference

- Deploy any open-source LLM within your LLMOps pipeline using pre-configured, performance-tuned setups

- Seamlessly integrate with Hugging Face, private registries, or any model hub—fully managed within your LLMOps platform

- Leverage industry-leading model servers like vLLM and SGLang for low-latency, high-throughput inference

- Enable GPU autoscaling, auto shutdown, and intelligent resource provisioning across your LLMOps infrastructure
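
vLLM and SGLang can both expose an OpenAI-compatible HTTP API, so a model deployed behind either server is usually queried with a standard chat-completions request. The minimal sketch below only builds such a request with the Python standard library. The base URL (`http://localhost:8000`, vLLM's default port) and the model name `my-org/my-model` are placeholders, not TrueFoundry-specific values.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-compatible /v1/chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder endpoint and model name; substitute your own deployment's values.
req = build_chat_request("http://localhost:8000", "my-org/my-model", "Hello!")
```

Sending it with `urllib.request.urlopen(req)` would return a JSON body whose `choices[0].message.content` holds the completion, assuming a server is actually listening at that URL.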

Serve any LLM with high-performance model servers like vLLM and SGLang, powered by GPU auto-scaling and cost-efficient LLMOps infrastructure.
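
GPU autoscaling with auto shutdown can be pictured as a small control rule evaluated periodically. This is a minimal sketch assuming replicas are scaled on in-flight requests per replica; the `ScalingPolicy` fields and thresholds are hypothetical and do not describe TrueFoundry's actual configuration.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical policy: scale GPU replicas on in-flight requests per replica."""
    target_per_replica: int = 8       # assumed concurrency one replica should handle
    min_replicas: int = 0             # 0 permits scale-to-zero (auto shutdown)
    max_replicas: int = 4             # upper bound on GPU replicas (cost cap)
    idle_shutdown_s: float = 900.0    # assumed idle window before shutting down


def desired_replicas(policy: ScalingPolicy, in_flight: int, idle_s: float) -> int:
    """Return how many replicas the policy asks for, given current load and idle time."""
    if in_flight == 0 and idle_s >= policy.idle_shutdown_s:
        return policy.min_replicas  # sustained idleness: shut down to the floor
    wanted = math.ceil(in_flight / policy.target_per_replica)
    # While traffic is recent, keep at least one warm replica; never exceed the cap.
    return max(max(policy.min_replicas, 1), min(policy.max_replicas, wanted))
```

With the defaults, 20 in-flight requests map to ceil(20 / 8) = 3 replicas, 100 are capped at 4, and one replica stays warm until traffic has been idle for the full 15-minute window.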

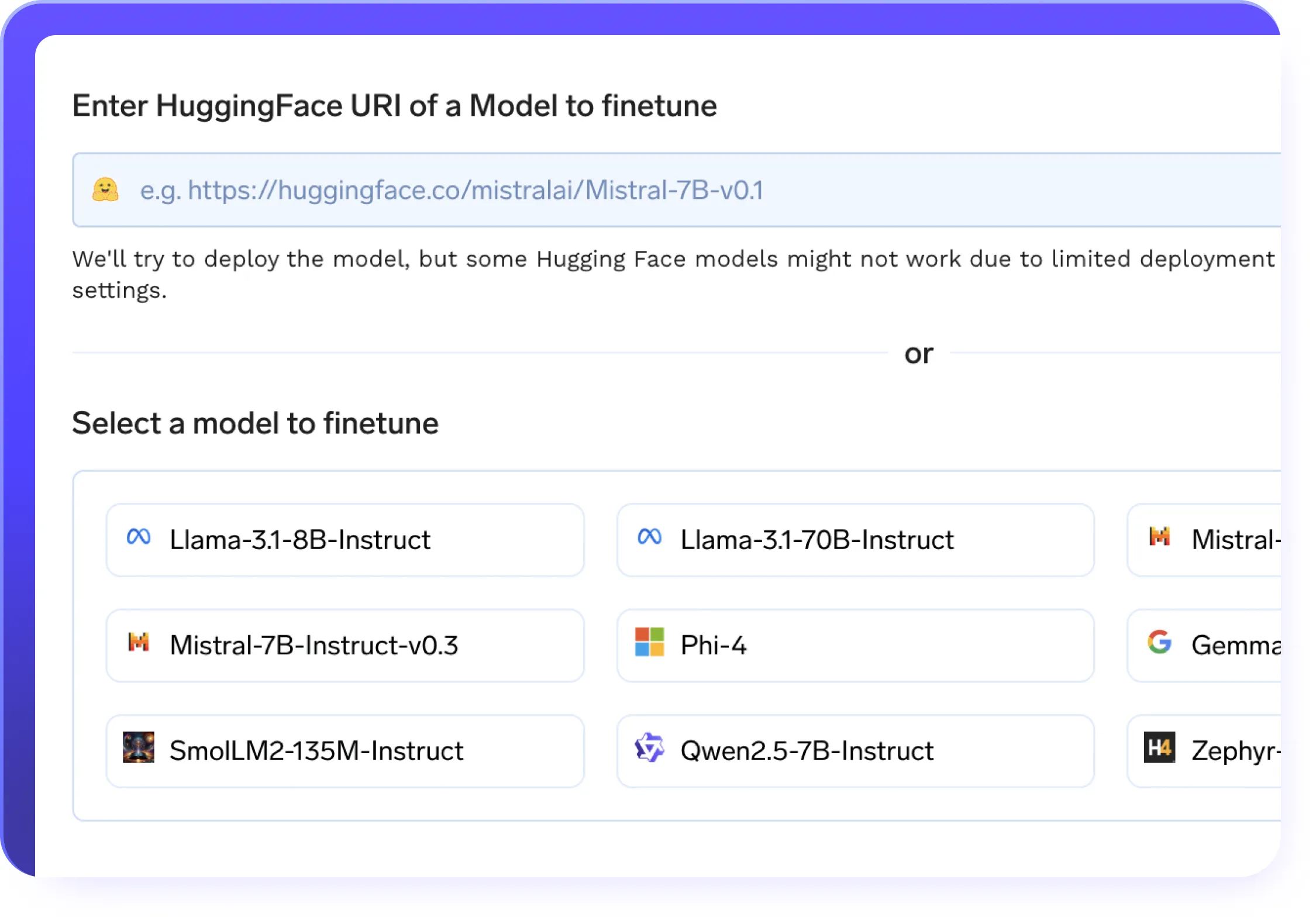

Efficient Fine-Tuning

- No-code & full-code fine-tuning support on custom datasets

- LoRA & QLoRA for efficient low-rank adaptation

- Resume training seamlessly with checkpointing support across your LLMOps pipelines

- One-click deployment of fine-tuned models with best-in-class model servers

- Automated training pipelines with built-in experiment tracking for your LLMOps workflows

- Distributed training support for faster, large-scale model optimization
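
For reference, LoRA in its standard formulation freezes a pretrained weight matrix W0 of size d × k and trains only a low-rank update BA of rank r, much smaller than d and k, scaled by a factor α; QLoRA trains the same adapters on top of a 4-bit quantized base model. The worked numbers below use an illustrative 4096 × 4096 projection and r = 8.

```latex
\begin{aligned}
h &= W_0 x + \Delta W x = W_0 x + \tfrac{\alpha}{r} B A x,
  \quad B \in \mathbb{R}^{d \times r},\ A \in \mathbb{R}^{r \times k},\ r \ll \min(d, k) \\
\text{trainable parameters} &= r(d + k) \quad \text{instead of} \quad dk \\
d = k = 4096,\ r = 8:\quad r(d + k) &= 65{,}536 \ \text{vs.}\ dk = 16{,}777{,}216 \quad (= 1/256 \approx 0.39\%)
\end{aligned}
```

B is typically initialized to zero, so training starts exactly from the pretrained model, and after training BA can be merged into W0 so the adapted model adds no inference latency.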

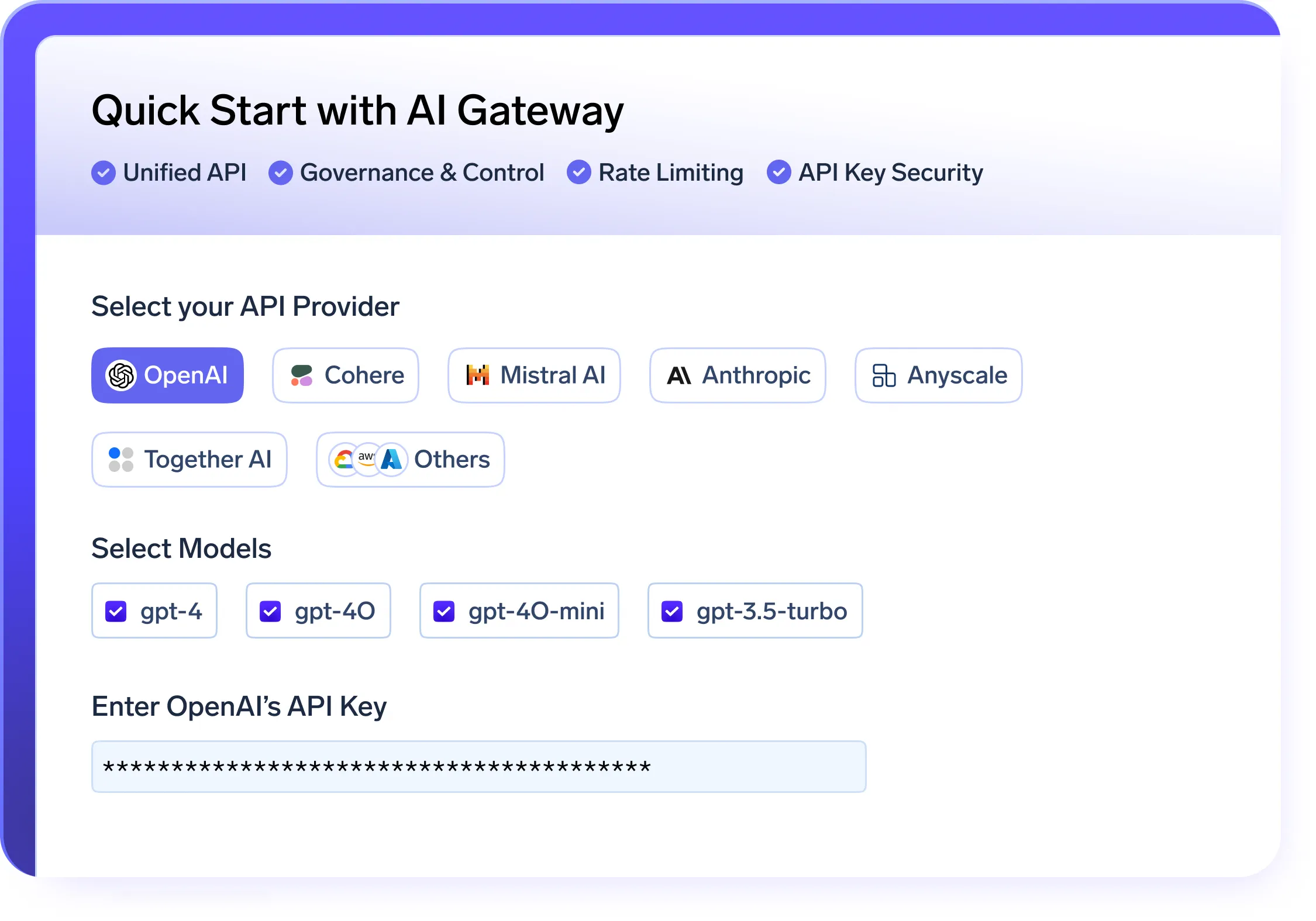

Govern AI usage with an AI Gateway that unifies model access, enforces quotas, and ensures observability and safety.

Secure and Scalable AI Gateway

- A unified API layer to serve and manage models from OpenAI, Meta (LLaMA), Google (Gemini), and other providers

- Built-in quota management and access control to enforce secure, governed model usage within your LLMOps platform

- Real-time metrics for usage, cost, and performance to improve LLMOps observability

- Intelligent fallback and automatic retries to ensure reliability across your LLMOps pipelines
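
The fallback-and-retry behavior can be sketched as follows. This is a minimal illustration assuming each provider is a plain Python callable that raises a hypothetical `ProviderError` on a retryable failure; a real gateway would also distinguish retryable errors (timeouts, rate limits) from permanent ones and apply quotas before forwarding.

```python
from __future__ import annotations

import time
from typing import Callable, Sequence


class ProviderError(Exception):
    """Hypothetical error a provider callable raises on a retryable failure."""


def call_with_fallback(
    providers: Sequence[tuple[str, Callable[[str], str]]],
    prompt: str,
    max_retries: int = 2,
    base_delay_s: float = 0.5,
) -> tuple[str, str]:
    """Try providers in order, retrying each with exponential backoff before falling back.

    Returns (provider_name, response); raises RuntimeError if every provider fails.
    """
    last_error: Exception | None = None
    for name, call in providers:
        for attempt in range(max_retries + 1):
            try:
                return name, call(prompt)
            except ProviderError as err:
                last_error = err
                if attempt < max_retries:
                    time.sleep(base_delay_s * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    raise RuntimeError("all providers failed") from last_error


# Usage with stub providers (hypothetical): the primary always fails.
calls = {"primary": 0}


def flaky_primary(prompt: str) -> str:
    calls["primary"] += 1
    raise ProviderError("rate limited")


def backup(prompt: str) -> str:
    return f"echo: {prompt}"


result = call_with_fallback(
    [("primary", flaky_primary), ("backup", backup)], "hi", base_delay_s=0.0
)
```

With `max_retries=2`, the primary is attempted three times before the gateway falls back to the backup provider.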

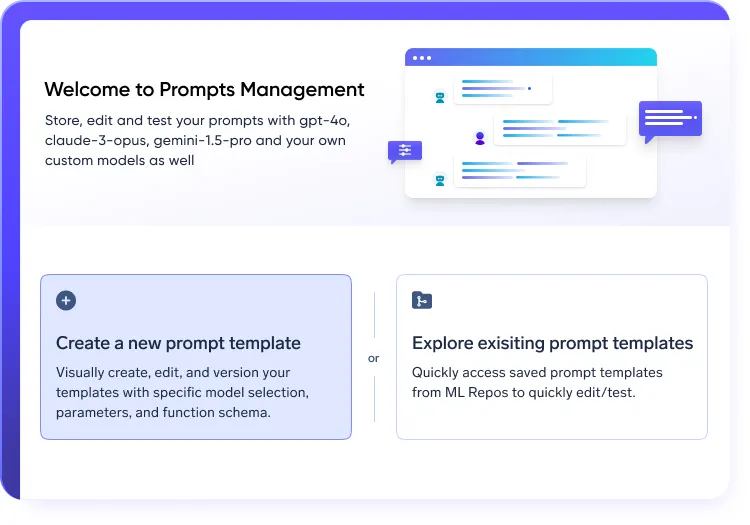

Structured Prompt Workflows in Your LLMOps Stack

- Experiment and iterate using version-controlled prompt engineering

- Run A/B tests across models to optimize performance

- Maintain full traceability of prompt changes within your LLMOps platform
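
Version-controlled prompts and A/B tests can be sketched with an append-only prompt store and deterministic traffic splitting. The `PromptRegistry` class and `assign_variant` function below are hypothetical illustrations, not a TrueFoundry API. Hashing the experiment name together with the user ID keeps each user in the same variant across requests, and the same function works when each variant is a model instead of a prompt version.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store: every save creates a new immutable version."""
    versions: dict[str, list[str]] = field(default_factory=dict)

    def save(self, name: str, template: str) -> int:
        self.versions.setdefault(name, []).append(template)
        return len(self.versions[name])  # 1-based version number

    def get(self, name: str, version: int) -> str:
        return self.versions[name][version - 1]


def assign_variant(user_id: str, variants: list[str], experiment: str) -> str:
    """Deterministically bucket a user into a variant by hashing (experiment, user)."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


registry = PromptRegistry()
v1 = registry.save("summarize", "Summarize the text:\n{text}")
v2 = registry.save("summarize", "Summarize in 3 bullet points:\n{text}")
variant = assign_variant("user-42", ["v1", "v2"], experiment="summarize-ab")
```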

Tracing & Guardrails for LLMOps Workflows

- Capture full traces of prompts, responses, token usage, and latency

- Monitor performance, completion rates, and anomalies

- Integrate with guardrails for PII detection and content moderation in LLMOps pipelines
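
A trace record and a guardrail can be combined in a few lines. This minimal sketch assumes the model is any Python callable, approximates token counts with a whitespace split (real systems use the model's tokenizer or the usage counts the server returns), and uses two simple regular expressions as a stand-in for PII detection; production PII guardrails need much broader coverage.

```python
from __future__ import annotations

import re
import time
from dataclasses import dataclass
from typing import Callable

# Illustrative patterns only: e-mail addresses and phone-like digit runs.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Mask e-mail addresses and phone-like numbers before text is logged or returned."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))


@dataclass
class TraceRecord:
    prompt: str
    response: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float


def traced_call(llm: Callable[[str], str], prompt: str) -> TraceRecord:
    """Call an LLM, time it, and store a redacted trace with approximate token counts."""
    start = time.perf_counter()
    response = llm(prompt)
    latency_ms = (time.perf_counter() - start) * 1000
    return TraceRecord(
        prompt=redact_pii(prompt),
        response=redact_pii(response),
        prompt_tokens=len(prompt.split()),        # whitespace approximation (assumption)
        completion_tokens=len(response.split()),
        latency_ms=latency_ms,
    )


# Usage with a stub model (hypothetical) that leaks an e-mail address.
record = traced_call(lambda p: "Reach me at jane@example.com", "Who owns billing?")
```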

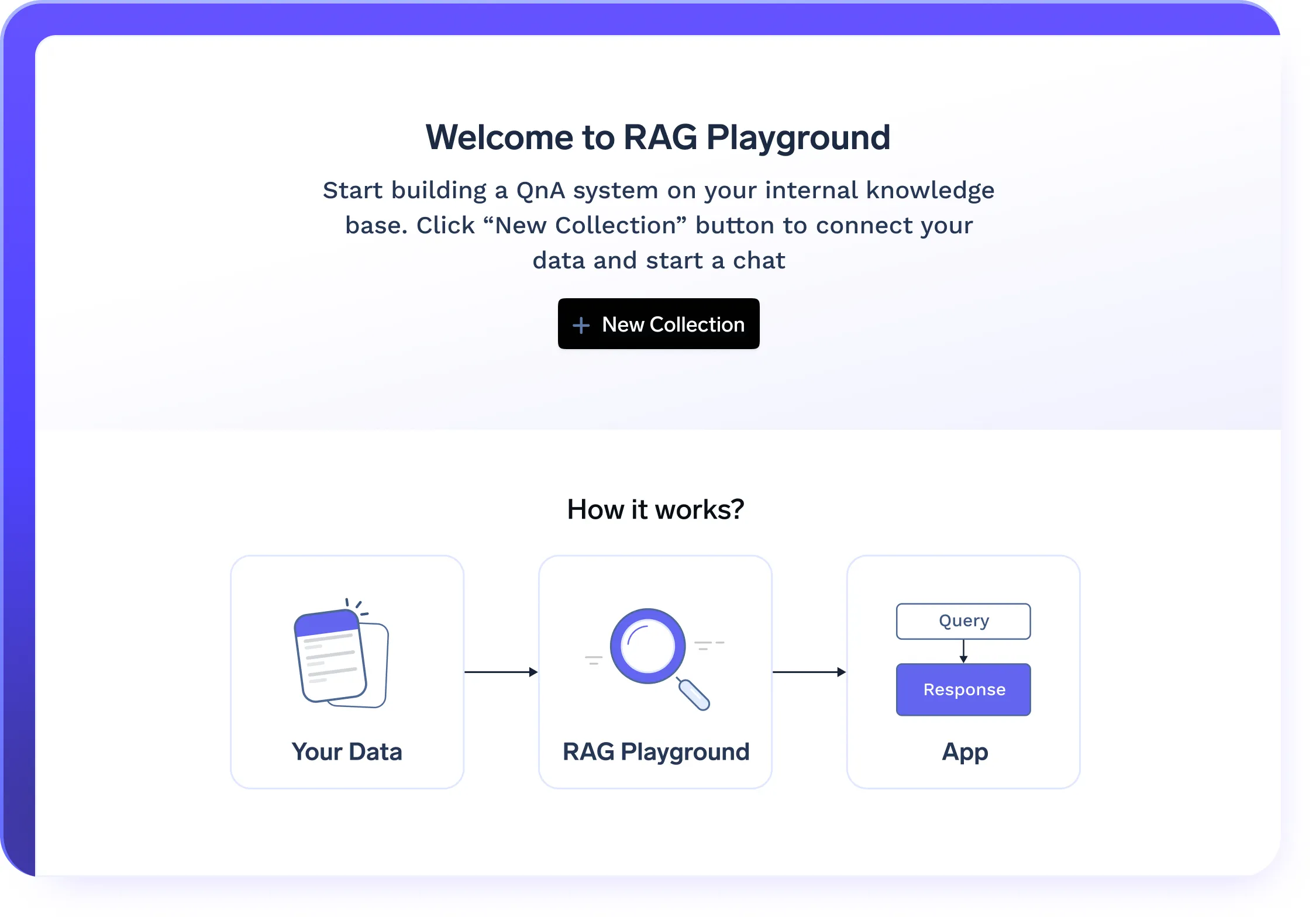

One-Click RAG Deployment

- Deploy all RAG components in a single click, including the vector database, embedding models, frontend, and backend

- Configurable infrastructure to optimize storage, retrieval, and query processing

- Handle growing document bases with cloud-native LLMOps scalability
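
At query time, the RAG components listed above work together roughly like this: the question is embedded, the vector database returns the most similar chunks, and those chunks are placed into the LLM prompt. The sketch below is a toy stand-in, assuming hand-written 3-dimensional vectors instead of a real embedding model and a plain dict instead of a VectorDB; the documents are made up.

```python
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents whose embeddings are most similar to the query."""
    ranked = sorted(index, key=lambda doc_id: cosine(query_vec, index[doc_id]), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: dict[str, str], ids: list[str]) -> str:
    """Place the retrieved passages into the prompt sent to the LLM."""
    context = "\n".join(f"- {docs[i]}" for i in ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy documents and hand-written "embeddings" (assumptions, not real model output).
docs = {
    "a": "Refunds are processed within 5 business days.",
    "b": "Support is available by email and chat.",
    "c": "Passwords must be at least 12 characters.",
}
index = {"a": [0.9, 0.1, 0.0], "b": [0.1, 0.9, 0.1], "c": [0.0, 0.2, 0.9]}
hits = top_k([0.8, 0.3, 0.0], index, k=1)  # pretend embedding of the question below
prompt = build_prompt("How long do refunds take?", docs, hits)
```

A production VectorDB replaces the linear scan in `top_k` with an approximate nearest-neighbor index, which is what lets retrieval keep up as the document base grows.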

Manage agent lifecycles, from deployment to observability, across any framework with your LLMOps platform.

LLMOps for AI Agent Lifecycle Management

- Run and scale agents across any framework using your LLMOps infrastructure

- Support for LangChain, AutoGen, CrewAI, and custom agents

- Framework-agnostic agent orchestration with built-in LLMOps monitoring

- Support for multi-agent orchestration, enabling agents to interact, share context, and execute tasks autonomously
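
Framework-agnostic orchestration is easiest to picture if every agent, whatever framework built it, sits behind one interface. This minimal sketch assumes that interface is a callable that reads and updates a shared context dict; the three agents are hypothetical stubs rather than LangChain, AutoGen, or CrewAI code, and they run in a fixed sequence instead of choosing their own next steps.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical common interface: an agent takes the shared context and returns it updated.
Agent = Callable[[dict], dict]


def researcher(ctx: dict) -> dict:
    ctx["notes"] = f"key facts about {ctx['task']}"
    return ctx


def writer(ctx: dict) -> dict:
    ctx["draft"] = f"Report based on: {ctx['notes']}"
    return ctx


def reviewer(ctx: dict) -> dict:
    ctx["approved"] = "notes" in ctx and ctx["draft"].startswith("Report")
    return ctx


def run_pipeline(agents: list[tuple[str, Agent]], task: str) -> tuple[dict, list[str]]:
    """Run agents in sequence over one shared context and keep a simple step log."""
    ctx: dict = {"task": task}
    log: list[str] = []
    for name, agent in agents:
        ctx = agent(ctx)
        log.append(name)  # a monitoring layer would emit a trace span here
    return ctx, log


final_ctx, steps = run_pipeline(
    [("researcher", researcher), ("writer", writer), ("reviewer", reviewer)],
    task="GPU costs",
)
```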

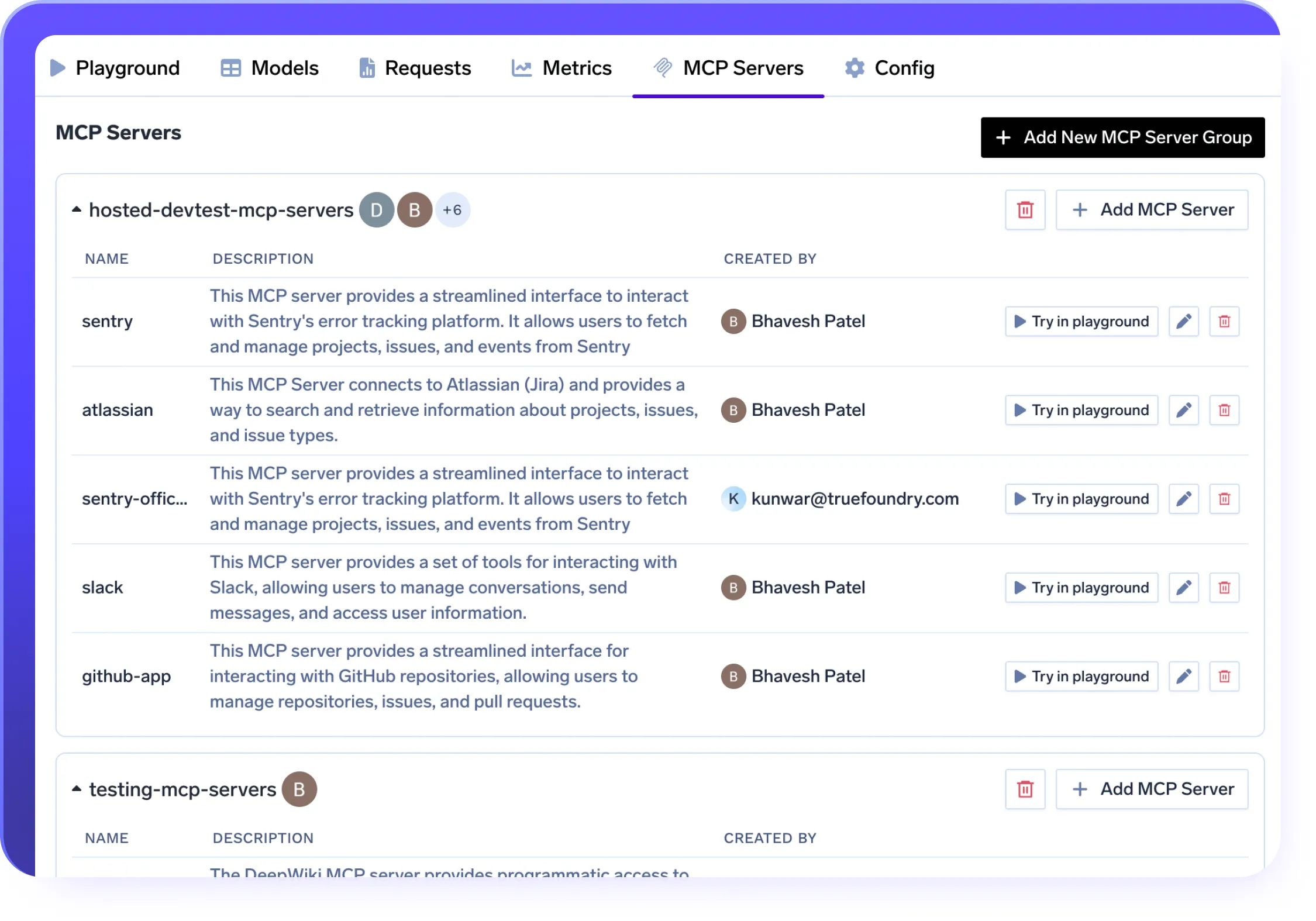

MCP Server Integration in Your LLMOps Stack

- Securely connect LLMs to tools like Slack, GitHub, and Confluence using the Model Context Protocol (MCP)

- Deploy MCP Servers in VPC, on-prem, or air-gapped setups with full data control

- Enable prompt-native tool use without wrappers—fully integrated into your LLMOps stack

- Govern access with RBAC, OAuth2, and trace every call with built-in observability
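
MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with the tool's `name` and `arguments`. The sketch below pairs that message shape with a hypothetical role-to-tool permission table to illustrate RBAC enforced before a request reaches an MCP server. The roles, tool names, and table are illustrative assumptions, and OAuth2 token handling and transport are omitted.

```python
import itertools
import json

# Hypothetical role-to-tool permissions checked before a call reaches an MCP server.
ROLE_TOOLS: dict = {
    "engineer": {"github_search", "slack_post"},
    "analyst": {"confluence_search"},
}
_request_ids = itertools.count(1)  # request ids should be unique within a session


def is_allowed(role: str, tool: str) -> bool:
    return tool in ROLE_TOOLS.get(role, set())


def build_tool_call(role: str, tool: str, arguments: dict) -> str:
    """Enforce RBAC, then serialize an MCP `tools/call` request as JSON-RPC 2.0."""
    if not is_allowed(role, tool):
        raise PermissionError(f"role {role!r} may not call tool {tool!r}")
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(request)


msg = build_tool_call("engineer", "github_search", {"query": "flaky test"})
```

Logging each serialized request alongside the caller's role is one simple way to get the per-call trace described above.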

Enterprise-Ready

Your data and models stay securely within your own cloud or on-premises infrastructure.

Compliance & Security

Compliance with SOC 2, HIPAA, and GDPR standards to ensure robust data protection

Governance & Access Control

SSO, Role-Based Access Control (RBAC), and audit logging

Enterprise Support & Reliability

24/7 support with SLA-backed response times

Deploy TrueFoundry in any environment

VPC, on-prem, air-gapped, or across multiple clouds.

No data leaves your domain. Enjoy complete data sovereignty, isolation, and enterprise-grade compliance wherever TrueFoundry runs.

Frequently asked questions

What is LLMOps and why does it matter?

LLMOps (Large Language Model Operations) refers to the practice of managing the full lifecycle of large language models, from training and fine-tuning to deployment, inference, monitoring, and governance. LLMOps helps organizations bring GenAI applications into production reliably and at scale. TrueFoundry provides a production-grade LLMOps platform that simplifies and accelerates this entire process.

How is LLMOps different from traditional MLOps?

While MLOps supports a wide range of ML models, LLMOps is purpose-built for GenAI and large language models. It includes capabilities like model server orchestration, prompt management, token-level observability, agent frameworks, and secure API access. Unlike generic MLOps tools, TrueFoundry’s LLMOps platform handles these GenAI-specific workflows natively.

Why should I invest in a dedicated LLMOps platform like TrueFoundry?

Managing LLMs at scale is complex. TrueFoundry’s LLMOps platform offers integrated tools for model serving, fine-tuning, RAG, agent orchestration, observability, and governance, so your team can focus on building instead of stitching infrastructure together. It also supports enterprise needs like compliance, quota management, and VPC deployments.

What are the core features of TrueFoundry’s LLMOps platform?

TrueFoundry’s platform includes:

- Model Serving & Inference with vLLM, SGLang, autoscaling, and right-sized infrastructure

- Fine-Tuning Workflows using LoRA/QLoRA with automated pipelines

- AI Gateway for unified access, RBAC, quotas, and fallback

- Prompt Management with version control and A/B testing

- Tracing & Guardrails for full visibility and safety

- One-Click RAG Deployment with integrated VectorDBs

- Agent Support for LangChain, CrewAI, AutoGen, and more

- Enterprise Features like audit logs, VPC hosting, and SOC 2 compliance

Can I deploy TrueFoundry’s LLMOps platform on my infrastructure?

Yes. TrueFoundry is designed for flexibility. You can deploy the LLMOps platform on your own cloud (AWS, GCP, Azure), in a private VPC, on-premises, or even in air-gapped environments, ensuring data control and compliance from day one.

How does LLMOps improve observability and debugging?

TrueFoundry’s LLMOps stack offers token-level tracing, latency tracking, cost attribution, and request-level logs. You can track every prompt, response, and error in real time, making it easy to debug and optimize your LLM applications.

Is TrueFoundry’s LLMOps platform secure and compliant?

Yes. TrueFoundry is built with enterprise-grade security in mind. It supports role-based access control (RBAC), SOC 2 Type II certification, HIPAA compliance, multi-tenant isolation, and full audit logging. Guardrails for content safety and data privacy are built in.

Which models and frameworks are supported in TrueFoundry’s LLMOps platform?

TrueFoundry supports open-source LLMs like Mistral, LLaMA, Falcon, and GPT-J, and integrates with model servers like vLLM and SGLang. It also supports agent frameworks such as LangChain, CrewAI, AutoGen, and custom-built agents, making it truly framework-agnostic.

Can I use TrueFoundry’s LLMOps platform to manage multiple teams and projects?

Yes. TrueFoundry is multi-tenant by design. You can set up multiple teams and environments (dev, staging, prod), apply usage quotas, and track cost per team or project. This makes it ideal for companies with large or distributed AI teams.
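
Per-team cost tracking and quotas come down to attributing each request's token usage to a team. A minimal sketch, assuming token-based pricing; the model name, prices, and budget are hypothetical, and this ledger charges after each call, whereas a gateway would typically also check the budget before forwarding a request.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical prices in USD per 1M tokens; real prices differ by provider and model.
PRICES = {"model-a": {"input": 0.50, "output": 1.50}}


def request_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Token-based cost of a single request in USD."""
    price = PRICES[model]
    return (prompt_tokens * price["input"] + completion_tokens * price["output"]) / 1_000_000


class TeamLedger:
    """Attribute spend to teams and reject requests that would exceed a team's budget."""

    def __init__(self, budgets_usd: dict[str, float]) -> None:
        self.budgets = budgets_usd
        self.spent: defaultdict[str, float] = defaultdict(float)

    def remaining(self, team: str) -> float:
        return self.budgets.get(team, 0.0) - self.spent[team]

    def record(self, team: str, model: str, prompt_tokens: int, completion_tokens: int) -> float:
        cost = request_cost(model, prompt_tokens, completion_tokens)
        if cost > self.remaining(team):
            raise RuntimeError(f"quota exceeded for team {team!r}")
        self.spent[team] += cost
        return cost


ledger = TeamLedger({"search-team": 1.00})
first = ledger.record("search-team", "model-a", 1_000_000, 200_000)  # 0.50 + 0.30 = 0.80 USD
blocked = False
try:
    ledger.record("search-team", "model-a", 1_000_000, 200_000)  # would bring spend to 1.60 USD
except RuntimeError:
    blocked = True
```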

How fast can I start using TrueFoundry for LLMOps?

Most teams get started in minutes with TrueFoundry’s ready-to-use templates, prebuilt integrations, and intuitive UI. One-click deployment and onboarding support are available to accelerate setup, even for complex workloads.

GenAI infra: simpler, faster, cheaper

Trusted by 30+ enterprises and Fortune 500 companies