Understanding Cloudflare AI Gateway Pricing [A Complete Breakdown]

Introduction

Cloudflare AI Gateway is often the first tool engineering teams reach for when they need to wrap observability around their LLM traffic. It makes sense: you put a proxy between your app and OpenAI, and suddenly you have logs and caching without writing a custom middleware service.

"Free to start" gets you moving quickly, but the engineering economics often shift as you scale toward production. The gateway is marketed with a generous free tier, yet the Total Cost of Ownership (TCO) for enterprise workloads extends beyond the gateway fee. The full cost profile includes the adjacent services you consume: Cloudflare Workers, persistent log storage, and request volume scaling.

This article breaks down the engineering economics of Cloudflare AI Gateway. We’ll look at what you explicitly pay for, the operational variables that impact scale, and why enterprises often migrate to private, in-VPC alternatives like Truefoundry when they move from "prototype" to "production."

What Is Cloudflare AI Gateway?

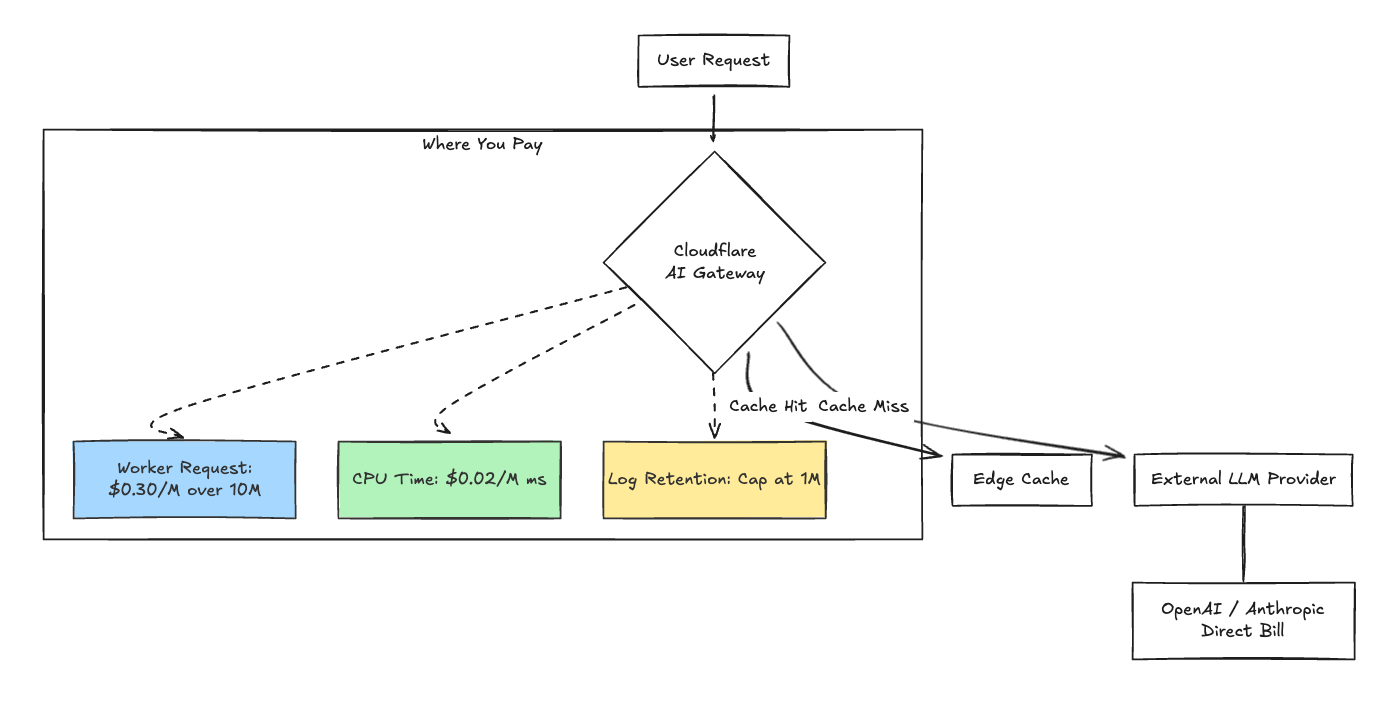

To understand the bill, you have to understand the architecture. Cloudflare AI Gateway operates as a centralized reverse proxy that sits on the edge, intercepting RESTful API requests between your backend and LLM providers.

It doesn't host models itself. It provides the "plumbing" for observability and control. Its core jobs are:

- Traffic Management: Handling retry logic and rate limiting so your app doesn't have to.

- Observability: Inspecting payloads to log prompts, responses, and token usage headers.

- Ecosystem Integration: Crucially, it runs on the Cloudflare Workers runtime. This is the most important detail for pricing because it shares the infrastructure—and billing limits—of standard serverless functions.
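To make the proxy placement concrete, here is a minimal Python sketch of what typically changes in application code: the base URL your client targets. The URL pattern reflects our understanding of Cloudflare's documented `gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/{provider}` scheme and should be checked against the current docs. `gateway_base_url` is a hypothetical helper, and the IDs are placeholders.

```python
def gateway_base_url(account_id: str, gateway_id: str, provider: str = "openai") -> str:
    """Build the base URL a client would target instead of the provider's own host.

    Assumption: the path layout follows Cloudflare's documented pattern at the
    time of writing; verify it before relying on it.
    """
    if not account_id or not gateway_id:
        raise ValueError("account_id and gateway_id are required")
    return f"https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/{provider}"


# Placeholder identifiers, not real values.
print(gateway_base_url("ACCOUNT_ID", "my-gateway"))
```

Every call sent to that base URL is a request the gateway sees, which is why request volume drives the pricing discussion that follows.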

Cloudflare AI Gateway Features That Impact Pricing

Cloudflare doesn't currently charge a "per-token" markup. You pay the same to OpenAI whether you go direct or through Cloudflare. The costs appear in the infrastructure usage and the feature limits that guide you into higher tiers.

Request Routing and Rate Limiting

Every single API call that hits the gateway counts as a "Request" on the underlying Cloudflare Workers platform.

If you are building a low-volume internal tool, this is negligible. But if you are building an automated agent loop or a high-traffic customer-facing chatbot, the math changes. An agent that creates a recursive loop of 10-20 calls to answer a single user prompt can consume standard request quotas rapidly. You aren't paying for the AI; you are paying for the HTTP requests to the proxy.
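The agent-loop math is easy to check with a few lines of Python. This is a rough sketch: the function names are ours, the traffic numbers are made up for illustration, and the 100,000 requests/day quota is the free-tier figure quoted later in this article.

```python
FREE_TIER_DAILY_REQUESTS = 100_000  # free-tier figure quoted in this article


def gateway_requests_per_day(user_prompts: int, calls_per_prompt: int) -> int:
    """Each LLM call an agent makes is one HTTP request to the gateway."""
    return user_prompts * calls_per_prompt


def prompts_until_quota(daily_quota: int, calls_per_prompt: int) -> int:
    """How many user prompts fit inside a daily request quota."""
    return daily_quota // calls_per_prompt


# Hypothetical workload: 8,000 user prompts/day, 15 LLM calls each.
print(gateway_requests_per_day(8_000, 15))                # 120000
# With 20 calls per prompt, the free quota covers 5,000 prompts/day.
print(prompts_until_quota(FREE_TIER_DAILY_REQUESTS, 20))  # 5000
```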

Prompt and Response Caching

Caching is the main ROI argument for using a gateway. If you can serve a response from the Cloudflare edge, you save money on the upstream LLM provider.

However, the financial upside here is highly variable. It depends entirely on your Cache Hit Rate.

- High ROI: Customer support bots answering "How do I reset my password?" (High repetition).

- Low ROI: RAG applications querying unique documents or analyzing specific user data.

If your app relies on unique context windows, caching won't lower your upstream bill, but you'll still incur costs for request routing.
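A quick way to sanity-check the caching argument is to model net savings as a function of hit rate. The sketch below is illustrative only: the upstream cost per request is a made-up average, and the per-request gateway cost simply reuses the $0.30-per-million overage rate quoted later in this article (in practice, requests inside the included quota carry no extra charge).

```python
def net_caching_savings(
    requests: int,
    hit_rate: float,
    upstream_cost_per_request: float,
    gateway_cost_per_request: float,
) -> float:
    """Upstream spend avoided by cache hits, minus what routing every request costs."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    avoided_upstream = requests * hit_rate * upstream_cost_per_request
    routing_cost = requests * gateway_cost_per_request
    return avoided_upstream - routing_cost


UPSTREAM = 0.002         # hypothetical average LLM cost per request, in USD
GATEWAY = 0.30 / 1e6     # overage rate from this article, per request

# Support bot with 40% repeated questions vs. a RAG app with no repeats.
print(round(net_caching_savings(1_000_000, 0.40, UPSTREAM, GATEWAY), 2))
print(round(net_caching_savings(1_000_000, 0.00, UPSTREAM, GATEWAY), 2))
```

With a zero hit rate the result is negative: you pay for routing and recover nothing upstream.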

Usage Analytics and Logging

This is usually the forcing function for upgrades. Persistent logging consumes storage. Cloudflare enforces caps on how many logs you can keep in their dashboard. When you hit that cap, logging pauses, leaving you without observability for the remainder of the billing period unless you upgrade. Eventually, enterprise volumes may require configuring Logpush to ship data out to your own storage, which triggers a different set of fees based on data volume.

Multi-Provider Integration

Routing to multiple providers (e.g., failing over from OpenAI to Anthropic) doesn't incur a surcharge, but it complicates cost attribution. You are aggregating costs from Azure, Bedrock, and OpenAI into a single stream. The gateway normalizes reporting, but billing typically remains direct with those providers.

Cloudflare AI Gateway Pricing Breakdown

AI Gateway isn't billed as a standalone product; its usage shows up on your Cloudflare Workers subscription.

The Free Tier (Standard)

This is optimized for hackathons and individual devs. It’s $0, but the operational limits are generally suited for testing rather than production SLAs.

- Cost: $0/month.

- Log Limit: 100,000 logs/month. Note: Once this limit is reached, logging stops.

- Request Limit: 100,000 requests/day.

- Verdict: Excellent for prototyping; requires upgrades for production reliability.
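To see how quickly the log cap bites, you can estimate the day of the month on which logging would stop. This sketch assumes one log entry per request, a 30-day month, and steady traffic; all three are simplifications, and `day_logging_stops` is a hypothetical helper.

```python
import math
from typing import Optional


def day_logging_stops(logs_per_day: int, monthly_cap: int = 100_000) -> Optional[int]:
    """Return the day (1-based) on which the cap is reached, or None if a
    30-day month stays under the cap."""
    if logs_per_day <= 0:
        return None
    day = math.ceil(monthly_cap / logs_per_day)
    return day if day <= 30 else None


print(day_logging_stops(5_000))   # 20: observability goes dark for the last third of the month
print(day_logging_stops(3_000))   # None: 90,000 logs fit under the cap
```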

The Paid Tier (Workers Paid)

If you are a business, this is your starting line.

- Cost: Starts at $5/month.

- Log Limit: 1,000,000 logs/month.

- Included Compute: 10 million requests and 30 million CPU-milliseconds.

(Source: https://www.cloudflare.com/plans/developer-platform/)

The Overage:

- $0.30 per additional million requests.

- $0.02 per additional million CPU-milliseconds.
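Putting the paid-tier numbers together, a monthly estimate looks roughly like the sketch below. It uses only the figures listed above and assumes overage is billed proportionally per fraction of a million, which you should confirm against your actual invoice. The workload in the example is hypothetical.

```python
BASE_FEE = 5.00                    # USD per month
INCLUDED_REQUESTS = 10_000_000
INCLUDED_CPU_MS = 30_000_000
REQUEST_OVERAGE_PER_M = 0.30       # USD per additional million requests
CPU_OVERAGE_PER_M = 0.02           # USD per additional million CPU-milliseconds


def workers_paid_monthly_cost(requests: int, cpu_ms: int) -> float:
    """Estimate the Workers Paid bill from request and CPU-millisecond usage."""
    extra_requests = max(0, requests - INCLUDED_REQUESTS)
    extra_cpu_ms = max(0, cpu_ms - INCLUDED_CPU_MS)
    return (
        BASE_FEE
        + extra_requests / 1_000_000 * REQUEST_OVERAGE_PER_M
        + extra_cpu_ms / 1_000_000 * CPU_OVERAGE_PER_M
    )


# Hypothetical month: 50M requests and 100M CPU-ms.
# 5.00 + 40 * 0.30 + 70 * 0.02 = 18.40
print(round(workers_paid_monthly_cost(50_000_000, 100_000_000), 2))
```

This estimate excludes upstream LLM spend and any log export fees.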

Fig 1: Logic Flow and Billing Architecture

Operational Variables That Impact Scale

The $5/month base fee is an attractive entry point, but it covers only infrastructure access. Enterprise TCO requires accounting for the full stack, particularly when integrating into a compliant, secure environment.

1. Compliance & Security Overhead

Cloudflare is a SaaS-based solution. The data plane—where actual prompts and PII live—resides on their servers, not yours. Your sensitive customer data must exit your VPC, transit the public internet (via encrypted tunnels), be processed by Cloudflare, and then move to the LLM.

For a fintech or healthcare company, this architecture often triggers a Third-Party Risk Assessment. The cost here isn't just the subscription; it is the operational overhead of InfoSec reviews, negotiating Data Processing Addendums (DPAs), and potentially purchasing enterprise security add-ons to meet data residency requirements.

2. Log Retention Strategy

The 1 million log limit on the paid plan covers immediate debugging, but long-term compliance requires more. Cloudflare does not store these logs indefinitely.

For use cases requiring long-term audit trails (e.g., 7 years), native gateway storage must often be supplemented with external exports. You may need to pay to export logs via Logpush to an S3 bucket or Splunk.

- The Logpush Fee: The first 10M requests are free. After that, fees apply (e.g., $0.05 per million requests) to push the data out, plus your own cloud storage costs.
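The export fee can be estimated the same way. This sketch uses the example rate quoted above ($0.05 per million requests after the first 10M) and deliberately leaves out your own storage costs, which depend on your cloud provider. The function name and the sample volume are ours.

```python
def logpush_export_fee(
    requests: int,
    free_requests: int = 10_000_000,
    fee_per_million: float = 0.05,
) -> float:
    """Fee for pushing logs out, using the example rate quoted in this article."""
    billable = max(0, requests - free_requests)
    return billable / 1_000_000 * fee_per_million


# Hypothetical month with 110M requests: 100M billable at $0.05 per million.
print(round(logpush_export_fee(110_000_000), 2))
```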

3. Managed SaaS Routing Constraints

When you use a managed SaaS router, you accept the provider's infrastructure logic. This can limit complex cost-arbitrage strategies, such as routing traffic to a private model hosted on Spot Instances inside your own AWS VPC to save on compute. You are working within the routing logic the SaaS platform supports, which may limit your ability to engineer lower unit costs.

When Cloudflare AI Gateway Pricing Makes Sense

It is the right tool for specific jobs:

- Edge-Native Apps: If your whole stack is already on Cloudflare Workers/Pages, this is a natural fit. The integration is instant.

- Pre-Revenue Startups: If your total AI spend is under $500/month, the $5 fee is highly competitive. You don't need complex governance yet; you need speed.

- POCs: If you need to validate a prompt chain today, you can do so without configuring Kubernetes ingress.

Why Some Teams Look Beyond Cloudflare AI Gateway

As you scale, the priority often shifts from "ease of setup" to "control of data."

Scaling Costs vs. Predictability

Consumption-based pricing scales linearly with usage. Paying per request (plus CPU, plus log exports) creates a variable bill that grows with your traffic. At a certain scale, paying for a fixed-cost gateway running on your own reserved instances often yields better unit economics.
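You can estimate where that crossover sits for your own numbers. The sketch below considers request overage only (no CPU or log export fees), reuses the paid-tier figures from this article, and takes the fixed monthly cost of a self-run gateway as an input you supply. The $305 figure is purely hypothetical.

```python
def breakeven_requests(
    fixed_monthly_cost: float,
    base_fee: float = 5.00,
    included_requests: int = 10_000_000,
    overage_per_million: float = 0.30,
) -> int:
    """Monthly request volume at which the consumption bill equals a fixed cost."""
    if fixed_monthly_cost <= base_fee:
        # The consumption plan never costs less than its base fee.
        return 0
    extra_millions = (fixed_monthly_cost - base_fee) / overage_per_million
    return included_requests + round(extra_millions * 1_000_000)


# Hypothetical self-run gateway costing $305/month all-in.
print(breakeven_requests(305.00))  # 1010000000
```

Under these assumptions the request fee alone rarely forces the switch; the crossover arrives sooner once CPU time, log exports, and compliance overhead are added to the consumption side.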

Compliance and Data Residency

This is often the deciding factor. Many enterprise contracts stipulate that user data must never leave a specific AWS region or VPC. A SaaS gateway is structurally incompatible with this topology. If you need data sovereignty, you need a self-hosted or in-VPC data plane.

Advanced Orchestration Requirements

Enterprise RAG pipelines are complex. You might need custom PII masking regex that runs before the prompt leaves your network, or complex failover logic that routes to a local Llama 3 model if OpenAI times out. Executing this inside a shared SaaS environment can be restrictive compared to running your own gateway container.
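As a rough illustration of that kind of logic, here is a minimal Python sketch that masks PII before a prompt leaves the process and falls back to a second model on timeout. The regexes are simplistic examples rather than production-grade detectors, and `primary` and `fallback` are stand-in callables, not any vendor's SDK.

```python
import re
from typing import Callable

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(prompt: str) -> str:
    """Replace email addresses and US-style SSNs with placeholders."""
    prompt = EMAIL_RE.sub("[EMAIL]", prompt)
    return SSN_RE.sub("[SSN]", prompt)


def complete_with_failover(
    prompt: str,
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
) -> str:
    """Mask first, then try the primary model; use the fallback on timeout."""
    safe_prompt = mask_pii(prompt)
    try:
        return primary(safe_prompt)
    except TimeoutError:
        return fallback(safe_prompt)


# Stubs standing in for a hosted provider and a local model.
def primary_stub(prompt: str) -> str:
    raise TimeoutError("simulated upstream timeout")


def local_stub(prompt: str) -> str:
    return f"local model saw: {prompt}"


print(complete_with_failover("Email jane@example.com, SSN 123-45-6789", primary_stub, local_stub))
# local model saw: Email [EMAIL], SSN [SSN]
```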

How Truefoundry Approaches AI Gateway Pricing Differently

Truefoundry takes a different architectural stance: Control Plane / Data Plane Separation.

We built this for engineers who need gateway features but require full data sovereignty.

- The Control Plane: Managed by us (or self-hosted). This handles configuration, users, and dashboards.

- The Data Plane: This runs as a container in your cloud (AWS, GCP, Azure). Your API keys, your prompts, and your customer data never leave your environment.

Granular Budget Control

We allow you to set budgets that enforce hard dollar limits on specific teams or projects. If an experimental agent script loops unexpectedly, the gateway halts traffic at the infrastructure level before costs spiral.
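Conceptually, a hard budget behaves like the sketch below. This is an illustrative model of the idea, not Truefoundry's actual API: `BudgetGuard`, `BudgetExceeded`, and the team name are hypothetical, and amounts are tracked in integer cents to avoid floating-point drift.

```python
from typing import Dict


class BudgetExceeded(Exception):
    """Raised when a charge would push a team past its hard limit."""


class BudgetGuard:
    def __init__(self, limits_cents: Dict[str, int]) -> None:
        self.limits = dict(limits_cents)
        self.spent = {team: 0 for team in limits_cents}

    def charge(self, team: str, cost_cents: int) -> None:
        """Record spend, or refuse the call if it would exceed the limit."""
        if team not in self.limits:
            raise KeyError(f"no budget configured for {team!r}")
        if self.spent[team] + cost_cents > self.limits[team]:
            raise BudgetExceeded(f"{team} would exceed {self.limits[team]} cents")
        self.spent[team] += cost_cents


# Simulated runaway agent loop: 30 cents per call against a $1.00 limit.
guard = BudgetGuard({"research-agents": 100})
calls_allowed = 0
try:
    while True:
        guard.charge("research-agents", 30)
        calls_allowed += 1
except BudgetExceeded:
    pass
print(calls_allowed)  # 3
```

The key property is that the check happens before the request is forwarded, so the loop stops at the limit rather than being discovered on the invoice.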

Universal Connectivity

Since the gateway sits in your VPC, it treats private models exactly like public ones. You can route traffic to a Llama 3 model running on a local GPU node just as easily as you route to GPT-4. This allows you to optimize for cost and latency without worrying about egress fees or public internet transit.

Cloudflare AI Gateway vs Truefoundry: Detailed Pricing Comparison

Ready to Build AI Without Pricing Surprises?

Choosing a gateway is an infrastructure decision, not just a tool selection. Cloudflare AI Gateway is excellent for the "edge" use case—lightweight, fast, and easy to start. But if you are building an enterprise platform, the requirements for governance, data locality, and flat-rate economics often point toward a private architecture.

Truefoundry is built for the long haul. We decouple the control plane from your data so that as your volume grows, your costs stay tied to your own efficient infrastructure.

Next Step: If you are evaluating gateways for a production workload and require data to stay within your VPC, [Book a Demo with Truefoundry]. Let's look at how a private architecture handles your traffic.

FAQs

Is Cloudflare AI Gateway free?

It has a free tier, yes. You get 100,000 logs/month. However, once you hit that limit, logging typically pauses. For production applications, you will generally need the "Workers Paid" plan (starting at $5/mo) to unlock higher limits.

How much will Cloudflare AI Gateway cost?

The gateway billing is wrapped into Cloudflare Workers. You pay $5/month for the first 10 million requests. After that, it is **$0.30 per million requests** (pricing based on publicly available data as of early 2026). Remember: that fee covers the gateway infrastructure. You still pay OpenAI/Anthropic separately for the tokens.

How is Truefoundry more cost-effective than Cloudflare AI Gateway?

At scale, Truefoundry optimizes TCO by leveraging your existing cloud infrastructure. You avoid the variable per-request fees of a SaaS proxy, and you store your logs in your own S3 buckets (reducing export fees). Additionally, our architecture simplifies routing traffic to cost-effective, self-hosted open-source models within your own VPC.
