EU AI Act Compliance: Building AI Governance with Gateways & Platforms

Introduction

The EU AI Act has transformed AI compliance from a legal concern into a core platform engineering challenge. For enterprise leaders responsible for AI systems, it now directly affects:

- How training data is governed

- How models are built, versioned, and deployed

- How inference is monitored

- How audit trails are produced

- How human oversight is operationalized

Modern AI compliance cannot be achieved with process documents alone; it requires infrastructure that enforces governance by design.

The central question enterprises now face is: How do we build AI systems that ship safely and remain compliant at scale without slowing innovation?

The answer is increasingly clear: compliance must be built into AI infrastructure, across the full lifecycle, not bolted onto applications one by one.

What the EU AI Act Requires

The EU AI Act introduces a risk-based regulatory framework for AI systems, with stricter obligations applied to high-risk and general-purpose AI deployments. For enterprise AI leaders, the law translates into very specific technical expectations, not high-level ethical guidance.

At its core, the regulation requires that organizations operating regulated AI systems must be able to demonstrate:

In summary, the EU AI Act reframes compliance as an engineering discipline: transparency, governance, and operational safety controls must be designed directly into AI systems. Meeting these obligations takes infrastructure that continuously enforces standards across the entire AI lifecycle, rather than piecemeal controls layered onto individual applications.

Why Application-Based Compliance Breaks at Enterprise Scale

A common first reaction to regulatory pressure is attempting to “solve compliance at the application layer.” Teams adapt existing AI-powered services with custom controls:

- Each product team implements its own logging logic

- Individual services build local prompt or response filters

- Applications define separate transparency and disclosure messaging

- PII redaction varies by microservice or SDK

- Some experimental or internal AI usage remains completely outside governance workflows

This approach may appear workable during early adoption, but it fails rapidly at enterprise scale. As the number of AI services, models, LLM providers, and internal agent workflows grows, governance becomes fragmented and inconsistent.

Compliance cannot be reliably maintained when controls are distributed across hundreds of application codebases owned by different teams with varying maturity, priorities, and interpretations of policy.

Fragmentation Effects

Application-driven compliance results in systemic weaknesses:

- Inconsistent governance - Policies drift between teams as filters, logging standards, and disclosure rules are implemented differently across services.

- Incomplete visibility - AI usage lacks a single audit source of truth, making it impossible to answer fundamental questions like “Which models processed customer data this month?”

- Shadow AI adoption - Teams deploy unregistered models or external LLM integrations outside formal compliance workflows to move faster.

- Undocumented lifecycle lineage - Training datasets, evaluation pipelines, and deployment artifacts become disconnected, making it difficult to trace outcomes back to the data and models that produced them.

- Unverifiable compliance - Audit preparation degenerates into documentation exercises rather than producing operational evidence drawn directly from system telemetry.

At scale, application-layer compliance is not just error-prone; it becomes unmanageable. Governance requirements demand centralization, standardization, and automation at the infrastructure level rather than piecemeal enforcement scattered throughout application code.
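To make the visibility point concrete: once every AI call lands in one log, a question like "Which models processed customer data this month?" reduces to a short query. The sketch below assumes a hypothetical record shape (`model`, `timestamp`, `data_classes`); the field names and sample values are illustrative, not taken from any specific product.

```python
from datetime import datetime, timezone

# Hypothetical centralized audit records; field names are assumptions for illustration.
AUDIT_LOG = [
    {"model": "support-bot-v3",
     "timestamp": datetime(2025, 3, 4, tzinfo=timezone.utc),
     "data_classes": {"customer_pii"}},
    {"model": "code-helper-v1",
     "timestamp": datetime(2025, 3, 9, tzinfo=timezone.utc),
     "data_classes": {"source_code"}},
    {"model": "churn-scorer-v7",
     "timestamp": datetime(2025, 2, 20, tzinfo=timezone.utc),
     "data_classes": {"customer_pii"}},
]


def models_touching(records, data_class, year, month):
    """Return the sorted model names that processed `data_class` in the given month."""
    return sorted({
        r["model"]
        for r in records
        if data_class in r["data_classes"]
        and r["timestamp"].year == year
        and r["timestamp"].month == month
    })


print(models_touching(AUDIT_LOG, "customer_pii", 2025, 3))  # ['support-bot-v3']
```

When the same records are scattered across per-team logs with different schemas, this query has no single place to run, which is the practical meaning of "incomplete visibility".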

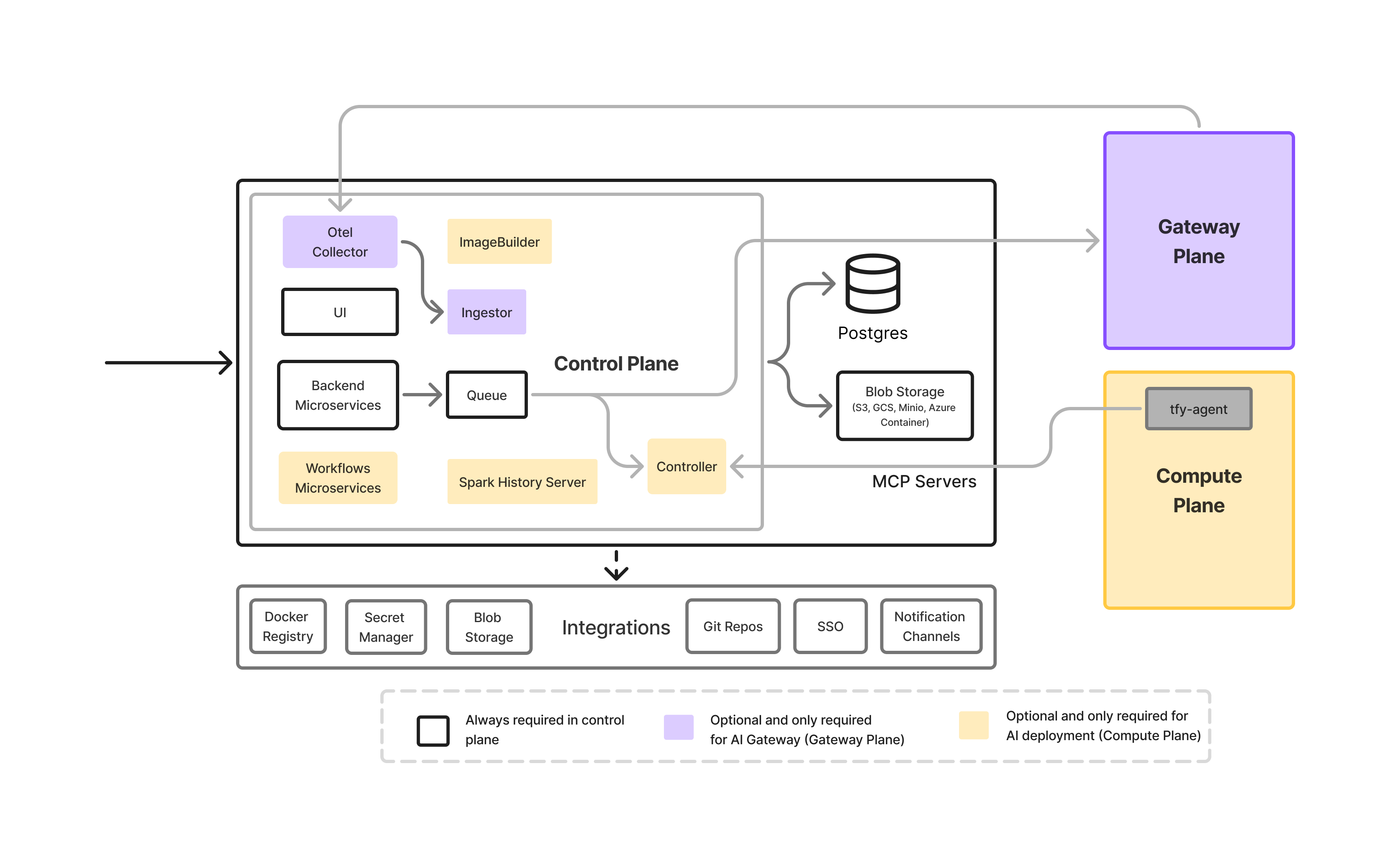

Centralizing Runtime Governance with an AI Control Plane

To address fragmentation at the application layer, enterprises are increasingly moving toward a runtime control-plane architecture for AI - a centralized gateway layer through which all model traffic flows.

Instead of embedding safety, privacy, and compliance logic inside every service, this approach places governance at the infrastructure edge of AI usage.

What an AI Control Plane Does

A control plane operates as the single enforcement point for inference-time policies across all applications, models, and providers. It enables organizations to apply compliance once and enforce it everywhere.

Key capabilities include:

- Centralized prompt and response filtering

  - Removal or masking of sensitive data before requests reach external models

  - Blocking unsafe instructions or prohibited content patterns

- Standardized request logging

  - Unified schema capturing prompt content, model metadata, response payloads, latency, and user or application identifiers

  - Creation of a single auditable record for all AI interactions

- Policy enforcement across providers

  - Routing controls that allow or deny specific models based on geography, data sensitivity, or use-case classification

  - Fallback safety rules when providers fail or produce disallowed outputs

- Automated transparency requirements

  - Injection of required “AI-generated” disclosures into responses where applicable

  - Consistent labeling for AI-assisted interactions across products
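The capabilities above can be pictured as one request handler that every application calls instead of calling a model directly. The following is a minimal sketch under stated assumptions: the email regex stands in for a real PII detector, `BLOCKED_PATTERNS` and the `[AI-generated]` label are placeholder policies, and `call_model` is a hypothetical callable supplied by the caller.

```python
import re
import time
import uuid

# Simplified stand-ins for real policies; a production gateway would use
# dedicated PII detectors and a managed policy store.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
BLOCKED_PATTERNS = [re.compile(r"ignore (all )?previous instructions", re.I)]
DISCLOSURE = "[AI-generated]"


def redact(text):
    """Mask email addresses so they never leave the gateway."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def handle_request(prompt, model, app_id, call_model, audit_log):
    """Filter the prompt, call the model, label the output, and log one record."""
    safe_prompt = redact(prompt)
    started = time.monotonic()
    if any(p.search(safe_prompt) for p in BLOCKED_PATTERNS):
        status, response = "blocked", "Request blocked by policy."
    else:
        status = "ok"
        response = f"{DISCLOSURE} {redact(call_model(model, safe_prompt))}"
    # One unified record per interaction, regardless of application or provider.
    audit_log.append({
        "request_id": str(uuid.uuid4()),
        "app_id": app_id,
        "model": model,
        "prompt": safe_prompt,
        "response": response,
        "status": status,
        "latency_ms": round((time.monotonic() - started) * 1000, 2),
    })
    return response


def echo_model(model, prompt):
    """Toy model used only to exercise the handler."""
    return f"echo: {prompt}"


log = []
print(handle_request("Email jane@example.com the report", "demo-model", "crm", echo_model, log))
# [AI-generated] echo: Email [REDACTED_EMAIL] the report
```

Because redaction, blocking, labeling, and logging live in one function, a policy change is a single edit rather than a change to every service.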

By consolidating all inference traffic into one system layer, enterprises regain visibility and uniform control:

- There is one place to update policies instead of dozens.

- Audit logs become consistent and complete.

- Sensitive data handling becomes predictable and enforceable.

- Shadow AI activity is dramatically reduced.

For inference governance, this architectural shift is essential. It transforms compliance from distributed application hacks into continuous infrastructure enforcement.
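Routing controls and fallback rules can also be expressed as data rather than application logic. The sketch below assumes a hypothetical route catalog in which each model declares a processing region and the highest data-sensitivity level it may receive; the model names and the numeric sensitivity scale are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRoute:
    name: str
    region: str            # where the provider processes data
    max_sensitivity: int   # highest data-sensitivity level this route may receive


# Hypothetical catalog, ordered by preference.
ROUTES = [
    ModelRoute("external-llm-us", region="us", max_sensitivity=1),
    ModelRoute("external-llm-eu", region="eu", max_sensitivity=2),
    ModelRoute("self-hosted-eu", region="eu", max_sensitivity=3),
]


def select_route(allowed_regions, sensitivity, unavailable=frozenset()):
    """Return the first permitted, available route, or None to deny the request."""
    for route in ROUTES:
        if (route.region in allowed_regions
                and sensitivity <= route.max_sensitivity
                and route.name not in unavailable):
            return route.name
    return None


print(select_route({"eu"}, 2))                                   # external-llm-eu
print(select_route({"eu"}, 2, unavailable={"external-llm-eu"}))  # self-hosted-eu
print(select_route({"us"}, 3))                                   # None
```

Returning `None` instead of silently picking a non-compliant model keeps the fallback path inside policy: when no permitted route is available, the request is denied.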

However, while control planes solve safety and transparency challenges at runtime, they do not address the most complex regulatory obligations introduced by the EU AI Act - those related to the training lifecycle, risk classification, documentation, evaluation, and approvals of high-risk AI systems.

Runtime governance answers how AI is used.

It does not ensure governance for:

- How training data was sourced and validated

- Which datasets trained each model

- How models were evaluated or stress-tested

- Who approved deployment of high-risk models

- What evidence exists of bias testing and post-launch monitoring

Meeting these obligations requires governance across the full AI lifecycle, not just at inference time.

That is why enterprises need more than a control plane - they need a governance platform that integrates directly into data pipelines, training workflows, and deployment systems.

Compliance Lives Across the AI Stack and Not in a Single Tool

The EU AI Act makes one thing clear: compliance is not a runtime-only concern. It applies to every phase in the AI lifecycle - from the moment data is collected to how predictions are monitored long after deployment.

While an AI control plane governs how models are used, true regulatory compliance depends equally on how models are built, validated, deployed, and continuously monitored. These lifecycle obligations cannot be satisfied at the gateway alone.

Enter the concept of full-stack AI governance - an architecture where compliance flows across integrated layers rather than existing as isolated point solutions.

In practice, this means enterprises need governance mechanisms at four key levels:

1. Data & Feature Governance

Data is the foundation of regulated AI.

Compliance begins where data enters the system:

- Dataset registration and versioning

- Source documentation and schema validation

- Data representativeness checks

- Bias and leakage detection during preprocessing

Without this layer, organizations cannot demonstrate that the training data behind regulated models meets quality and fairness standards.
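As an illustration of dataset registration and schema validation, the sketch below derives a version identifier from the dataset contents, so any change to the data yields a new version. The in-memory `REGISTRY`, the `register_dataset` helper, and the metadata fields are hypothetical; a real registry would persist this information.

```python
import hashlib
import json

REGISTRY = {}  # version id -> metadata (in-memory stand-in for a real registry)


def register_dataset(name, rows, schema, source):
    """Validate rows against `schema` and register a content-addressed version."""
    for i, row in enumerate(rows):
        if set(row) != set(schema):
            raise ValueError(f"row {i}: columns {sorted(row)} do not match schema")
        for column, expected_type in schema.items():
            if not isinstance(row[column], expected_type):
                raise ValueError(f"row {i}: {column!r} is not {expected_type.__name__}")
    # Hash a canonical serialization so identical data always maps to the same version.
    canonical = json.dumps(rows, sort_keys=True).encode()
    version_id = f"{name}@{hashlib.sha256(canonical).hexdigest()[:12]}"
    REGISTRY[version_id] = {
        "source": source,
        "columns": sorted(schema),
        "row_count": len(rows),
    }
    return version_id


schema = {"age": int, "income": float}
v1 = register_dataset("loans", [{"age": 41, "income": 52000.0}], schema, "crm-export")
v2 = register_dataset("loans", [{"age": 42, "income": 52000.0}], schema, "crm-export")
print(v1 != v2)  # True: changed data produces a new version id
```

Representativeness and bias checks would plug in as further validation steps before the version is recorded; they are omitted here to keep the sketch short.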

2. Model Lifecycle Governance

Once data is prepared, governance must extend to model training and evaluation:

- Model registries linking each model to specific training datasets

- Evaluation workflows capturing accuracy, stability, robustness, and bias metrics

- Repeatable training pipelines enabling reproducibility

- Model approval records documenting deployment readiness

This creates a transparent technical record demonstrating that models were tested, validated, and reviewed before reaching production — which is essential for high-risk classifications under the EU AI Act.
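A model registry that links models to datasets can be sketched in a few lines. The `ModelRegistry` class below is a hypothetical, in-memory illustration: it refuses to register a model without dataset references and can answer the lineage question "which model versions were trained on this dataset?".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    dataset_versions: tuple
    metrics: dict
    approved_by: Optional[str] = None  # filled in by a later approval step


class ModelRegistry:
    def __init__(self):
        self._versions = []

    def register(self, name, dataset_versions, metrics):
        """Record a new model version together with its training data lineage."""
        if not dataset_versions:
            raise ValueError("a model version must reference at least one dataset version")
        version = 1 + sum(1 for m in self._versions if m.name == name)
        entry = ModelVersion(name, version, tuple(dataset_versions), dict(metrics))
        self._versions.append(entry)
        return entry

    def trained_on(self, dataset_version):
        """Return (name, version) for every model trained on the given dataset version."""
        return [(m.name, m.version) for m in self._versions
                if dataset_version in m.dataset_versions]


registry = ModelRegistry()
registry.register("credit-risk", ["loans@a1b2c3"], {"accuracy": 0.93})
registry.register("credit-risk", ["loans@d4e5f6"], {"accuracy": 0.94})
print(registry.trained_on("loans@a1b2c3"))  # [('credit-risk', 1)]
```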

3. Deployment & Oversight Governance

Deployment is where technical control becomes regulatory accountability.

For high-risk AI systems, simply allowing teams to push models to production is unacceptable. Instead, governance requires:

- Role-based deployment permissions

- Environment isolation for staging vs. production

- Manual approval gates for regulated models

- Transparent deployment logs with reviewer attribution

This layer operationalizes the human-in-the-loop requirement — ensuring that regulated models cannot go live without explicit oversight and signoff.
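The controls listed above can be combined into a single authorization check that runs before any release. This is a minimal sketch: the role names, the `"high"` risk label, and the `authorize_deployment` function are assumptions for illustration, not a prescribed policy.

```python
from datetime import datetime, timezone


class DeploymentDenied(Exception):
    """Raised when a release does not satisfy the governance policy."""


# Hypothetical policy: who may deploy, and whose sign-off high-risk models need.
DEPLOYER_ROLES = {"ml-platform", "release-manager"}
REQUIRED_APPROVER_ROLES = {"compliance", "platform-lead"}


def authorize_deployment(model, risk_class, requester_role, approvals):
    """Check permissions and approvals; return a deployment log record or raise.

    `approvals` is a list of (reviewer, role) pairs collected before release.
    """
    if requester_role not in DEPLOYER_ROLES:
        raise DeploymentDenied(f"role {requester_role!r} may not deploy")
    if risk_class == "high":
        missing = REQUIRED_APPROVER_ROLES - {role for _, role in approvals}
        if missing:
            raise DeploymentDenied(f"missing approval from: {', '.join(sorted(missing))}")
    return {
        "model": model,
        "risk_class": risk_class,
        "reviewers": [reviewer for reviewer, _ in approvals],
        "decided_at": datetime.now(timezone.utc).isoformat(),
    }


record = authorize_deployment(
    "credit-risk:2", "high", "ml-platform",
    [("ana", "compliance"), ("raj", "platform-lead")],
)
print(record["reviewers"])  # ['ana', 'raj']
```

The returned record carries reviewer attribution and a timestamp, so the approval itself becomes part of the deployment log rather than a separate document.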

4. Continuous Monitoring & Audit

Compliance does not stop when a model ships.

Production governance requires:

- Ongoing drift detection

- Bias amplification monitoring

- Output safety and effectiveness checks

- Alerting for policy or performance violations

- Immutable log retention

Monitoring dashboards must be capable of serving both engineering teams and compliance auditors with the same underlying telemetry, turning governance into a measurable operational activity rather than periodic documentation.
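One common way to approximate immutable log retention in software is a hash chain, where each entry commits to the one before it. The sketch below is an assumption about how such a log could be built, not a description of any particular product; it makes tampering detectable, while true immutability still depends on where the log is stored.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(event, prev_hash):
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append_entry(chain, event):
    """Append an event whose hash covers both the event and the previous hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev_hash": prev_hash,
                  "hash": _digest(event, prev_hash)})


def verify_chain(chain):
    """Recompute every hash; any edited, removed, or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


chain = []
append_entry(chain, {"model": "credit-risk:2", "alert": "drift"})
append_entry(chain, {"model": "credit-risk:2", "alert": "resolved"})
print(verify_chain(chain))   # True
chain[0]["event"]["alert"] = "none"
print(verify_chain(chain))   # False: the first entry no longer matches its hash
```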

When combined with a runtime AI control plane, these lifecycle layers form a true enterprise compliance fabric - governance that is systemic, continuous, and automated rather than reactive or manual. This integrated architecture eliminates the need for fragmented controls and enables enterprises to confidently scale AI adoption into regulated domains.

But infrastructure alone is not enough - tooling must make this governance usable for real engineering organizations.

How TrueFoundry Enables End-to-End EU AI Act Compliance

TrueFoundry is designed to operationalize AI governance across every compliance layer, not as a bolt-on policy checklist but as built-in infrastructure.

Rather than treating safety, documentation, approvals, and monitoring as parallel manual processes, TrueFoundry embeds them directly into the ML development lifecycle, enabling teams to move fast while remaining aligned with regulatory obligations. Below is how key EU AI Act requirements become platform-native workflows inside TrueFoundry.

1. Governed Data & Dataset Traceability

Compliance begins before training ever starts. TrueFoundry treats datasets as versioned, auditable assets rather than ad hoc files or notebook artefacts:

- Dataset registry with metadata describing source, labels, schema, transformations, ownership, and intended use

- Immutable dataset versioning aligned with pipeline outputs

- Automated validation hooks for schema consistency, distribution drift, and data quality checks

- Documented bias testing workflows integrated into data preprocessing

This ensures teams can verify and prove that training data is representative and systematically reviewed, rather than assembled informally.

2. Full Model Lineage & Evaluation Governance

Each model deployed with TrueFoundry maintains complete lineage back to the originating data and pipelines:

- Model registry linking models to:

  - Training datasets and versions

  - Feature pipelines

  - Hyperparameters

  - Evaluation metrics and experiment results

- Reproducible training pipelines ensure that any model can be retrained identically if required by audit or investigation.

- Pre-deployment evaluation gates enforce:

  - Accuracy benchmarks

  - Bias threshold acceptance

  - Stress testing against edge-case inputs

Evaluation results are stored as artifacts attached to the model version, creating a defensible compliance record far stronger than detached documents or spreadsheets.
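To illustrate the idea of a pre-deployment evaluation gate in general terms, the sketch below compares recorded metrics against thresholds and returns a result that could be stored alongside the model version. It is not TrueFoundry's API; the metric names, threshold values, and `evaluate_gates` function are hypothetical.

```python
# Hypothetical gate definitions: metric name -> (direction, threshold).
GATES = {
    "accuracy": ("min", 0.90),
    "demographic_parity_gap": ("max", 0.05),
    "edge_case_pass_rate": ("min", 0.95),
}


def evaluate_gates(metrics, gates=GATES):
    """Return a pass/fail result listing every gate the metrics do not satisfy."""
    failures = []
    for name, (kind, threshold) in gates.items():
        value = metrics.get(name)
        if value is None:
            # A missing measurement fails the gate instead of passing silently.
            failures.append(f"{name}: missing")
        elif kind == "min" and value < threshold:
            failures.append(f"{name}: {value} < {threshold}")
        elif kind == "max" and value > threshold:
            failures.append(f"{name}: {value} > {threshold}")
    return {"passed": not failures, "failures": failures}


result = evaluate_gates({
    "accuracy": 0.93,
    "demographic_parity_gap": 0.08,
    "edge_case_pass_rate": 0.97,
})
print(result)
# {'passed': False, 'failures': ['demographic_parity_gap: 0.08 > 0.05']}
```

Treating a missing metric as a failure is a deliberate choice here: a model that was never bias-tested should not clear a bias gate.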

3. Deployment Governance & Human Oversight

Regulated AI demands more than CI-automated deployments. TrueFoundry implements governance directly at release time:

- Role-based deployment permissions (RBAC) – ensuring only approved roles can push regulated models into production

- Multi-stage approval workflows for high-risk releases, integrating business reviewers, legal stakeholders, and platform leads

- Deployment tags & purpose classification to associate models explicitly with compliance risk categories

- Full reviewer attribution & timestamped deployment decisions

This converts the EU AI Act’s human oversight requirement into a tangible operational control rather than an aspirational policy.

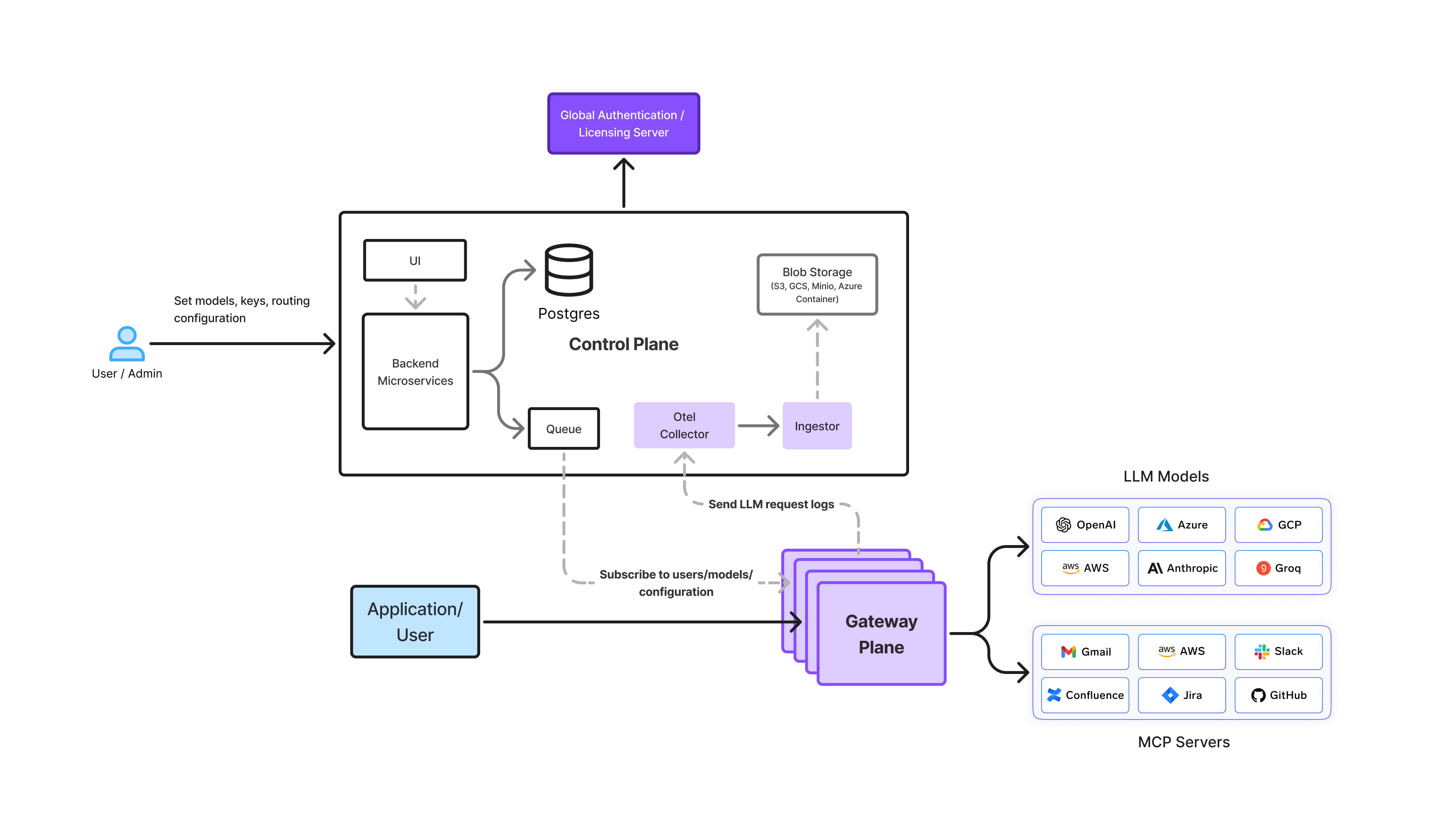

4. Integrated AI Gateway for Runtime Compliance

While lifecycle governance ensures safe development and release, effective compliance requires active control during live AI usage. TrueFoundry’s integrated AI Gateway and Agent Gateway provide centralized runtime enforcement:

- Prompt and output filtering policies

- PII detection and redaction

- Tool-access enforcement for agents

- Multi-model routing with safety fallback rules

- Unified request and response logging

Every runtime request is correlated back to:

User → Application → Model → Dataset → Training Pipeline

This chain of custody provides continuous end-to-end traceability - a critical compliance capability many organizations lack once models leave experimentation stages and enter distributed production systems.
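The chain of custody can be pictured as a pair of lookups: the request log identifies the user, application, and model, and the model registry supplies the dataset and training pipeline. The sketch below uses hypothetical in-memory tables and identifiers purely to show the shape of that join; it does not reflect TrueFoundry's actual data model.

```python
# Hypothetical records; in practice these would come from the gateway's
# request log and the model registry respectively.
REQUESTS = {
    "req-42": {"user": "u-17", "application": "claims-portal", "model": "claims-triage:3"},
}
MODELS = {
    "claims-triage:3": {"dataset": "claims-2024@a1b2c3", "pipeline": "train-claims#118"},
}


def trace(request_id):
    """Resolve a runtime request to its full lineage, from user to training pipeline."""
    request = REQUESTS[request_id]
    model = MODELS[request["model"]]
    return [
        request["user"],
        request["application"],
        request["model"],
        model["dataset"],
        model["pipeline"],
    ]


print(" → ".join(trace("req-42")))
# u-17 → claims-portal → claims-triage:3 → claims-2024@a1b2c3 → train-claims#118
```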

5. Continuous Monitoring & Risk Detection

Deployment is not the endpoint. TrueFoundry embeds compliance verification into production monitoring:

- Model drift detection on core performance and distribution metrics

- Bias amplification monitoring

- Hallucination and unsafe output tracking

- Alerting workflows when models breach policy thresholds

- Comparative scoring dashboards across model versions

These dashboards enable both:

- Engineering teams to maintain technical health

- Compliance and governance teams to verify ongoing regulatory adherence

Continuous observation replaces static certifications, aligning with the EU AI Act’s emphasis on operational accountability.
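As a generic illustration of drift detection on a distribution metric, the sketch below computes the Population Stability Index (PSI) between a baseline sample and a production sample. PSI is one common choice, used here as an assumption rather than as the platform's documented method; the alert threshold of 0.2 is a widely used rule of thumb, not a regulatory value.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]          # scores spread over [0, 1)
shifted = [0.5 + i / 200 for i in range(100)]     # production scores pushed upward

DRIFT_THRESHOLD = 0.2  # rule-of-thumb alert level (assumption)
print(psi(baseline, baseline) > DRIFT_THRESHOLD)  # False
print(psi(baseline, shifted) > DRIFT_THRESHOLD)   # True
```

The same value can feed an engineering alert and a compliance dashboard, which is the point of serving both audiences from one telemetry source.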

6. Secure, Region-Aware Infrastructure

Enterprise AI deployments must align not only with governance principles but also with data sovereignty and infrastructure controls. TrueFoundry supports compliant execution environments via:

- VPC or on-prem AI platform deployments

- Tenant isolation for sensitive workloads

- Region-specific routing policies

- Encryption in transit and at rest

- Strict secrets & access management

- Configurable storage location for AI Gateway request logs and metrics, which helps with local data residency laws and privacy policies

These capabilities help enterprises meet EU data residency requirements and internal security standards without fragmenting platforms by geography.

By combining the following, TrueFoundry delivers a unified AI governance fabric that removes the need for disconnected tooling and compliance workarounds across regulated AI environments:

- Governed data pipelines

- Model lineage and evaluation systems

- Deployment approval workflows

- Integrated AI runtime gateway controls

- Continuous compliance observability

Conclusion

The EU AI Act does not slow down AI innovation - it raises the bar for how AI must be built and operated at scale.

For enterprise leaders, the path forward is clear: compliance cannot be treated as a legal afterthought or an application-level patch. It must be engineered directly into the AI platform itself, from governed data pipelines and model lineage to centralized runtime controls and continuous monitoring. Organizations that embrace this infrastructure-first approach will not only meet regulatory requirements more efficiently; they will also gain stronger operational discipline, higher customer trust, and faster enterprise adoption. Responsible AI is no longer a differentiator; it is becoming the foundation for sustainable scale.

By embedding governance and oversight across the full AI lifecycle, platforms like TrueFoundry enable teams to innovate confidently within regulated environments, building AI systems that are not only powerful, but also transparent, accountable, and compliant by design.
