What Is AI Governance?

Introduction: Why AI Governance Matters Now

As organizations accelerate AI adoption, a critical question emerges: how do we ensure our AI systems remain trustworthy, compliant, and aligned with our values? The answer lies in AI governance, a structured approach to managing AI systems throughout their lifecycle. Unlike traditional software, AI systems learn from data, adapt over time, and make decisions with significant societal impact. Many organizations recognize that AI exposes the limitations of legacy governance processes and are prioritizing stronger responsible AI capabilities.

Organizations implementing robust AI governance frameworks don't just reduce risk; they build competitive advantage. They innovate faster with confidence, earn stakeholder trust, and stay ahead of rapidly evolving regulations and standards such as the EU AI Act and the voluntary NIST AI Risk Management Framework (RMF). Modern AI governance requires infrastructure that can operationalize these principles at scale. Platforms like TrueFoundry bridge governance theory and practice by providing centralized controls, real-time observability, automated policy enforcement, and configurable guardrails, transforming governance from aspiration into enforceable reality across all AI workloads.

What Is AI Governance?

AI governance refers to the framework of policies, procedures, and controls that guide responsible AI development, deployment, and monitoring within an organization. It translates abstract ethical principles into concrete, enforceable practices.

Think of AI governance as the rulebook and the referee combined. The rulebook defines principles such as fairness, transparency, accountability, and security. The referee ensures these principles are followed at every stage, from data collection and model training to deployment and continuous monitoring. Effective governance combines four elements: operational policies that define what teams can do with AI; technical controls (rate limiting, access controls, audit logging) that enforce those policies automatically; oversight structures that establish accountability; and continuous monitoring that catches issues early.
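The rate-limiting and audit-logging controls mentioned above can be made concrete with a small sketch. This Python example assumes a hypothetical `RateLimitedEndpoint` wrapper that applies a per-user token bucket and records every attempt, allowed or not. It illustrates the technique only; it is not TrueFoundry's implementation or API.

```python
import time
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Token-bucket limiter: bursts of up to `capacity` requests, refilled at `rate` tokens/second."""
    capacity: float
    rate: float
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimitedEndpoint:
    """Hypothetical wrapper: per-user rate limit plus an audit entry for every attempt."""

    def __init__(self, capacity: float = 3, rate: float = 0.5) -> None:
        self.capacity, self.rate = capacity, rate
        self.buckets = {}
        self.audit_log = []

    def request(self, user: str, model: str) -> bool:
        if user not in self.buckets:
            self.buckets[user] = TokenBucket(self.capacity, self.rate)
        allowed = self.buckets[user].allow()
        # Denied requests are logged too, so the trail shows attempted as well as served traffic.
        self.audit_log.append({"ts": time.time(), "user": user, "model": model,
                               "decision": "allowed" if allowed else "rate_limited"})
        return allowed
```

With the default burst of three, a fourth back-to-back request from the same user is refused, while other users keep their own budgets.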

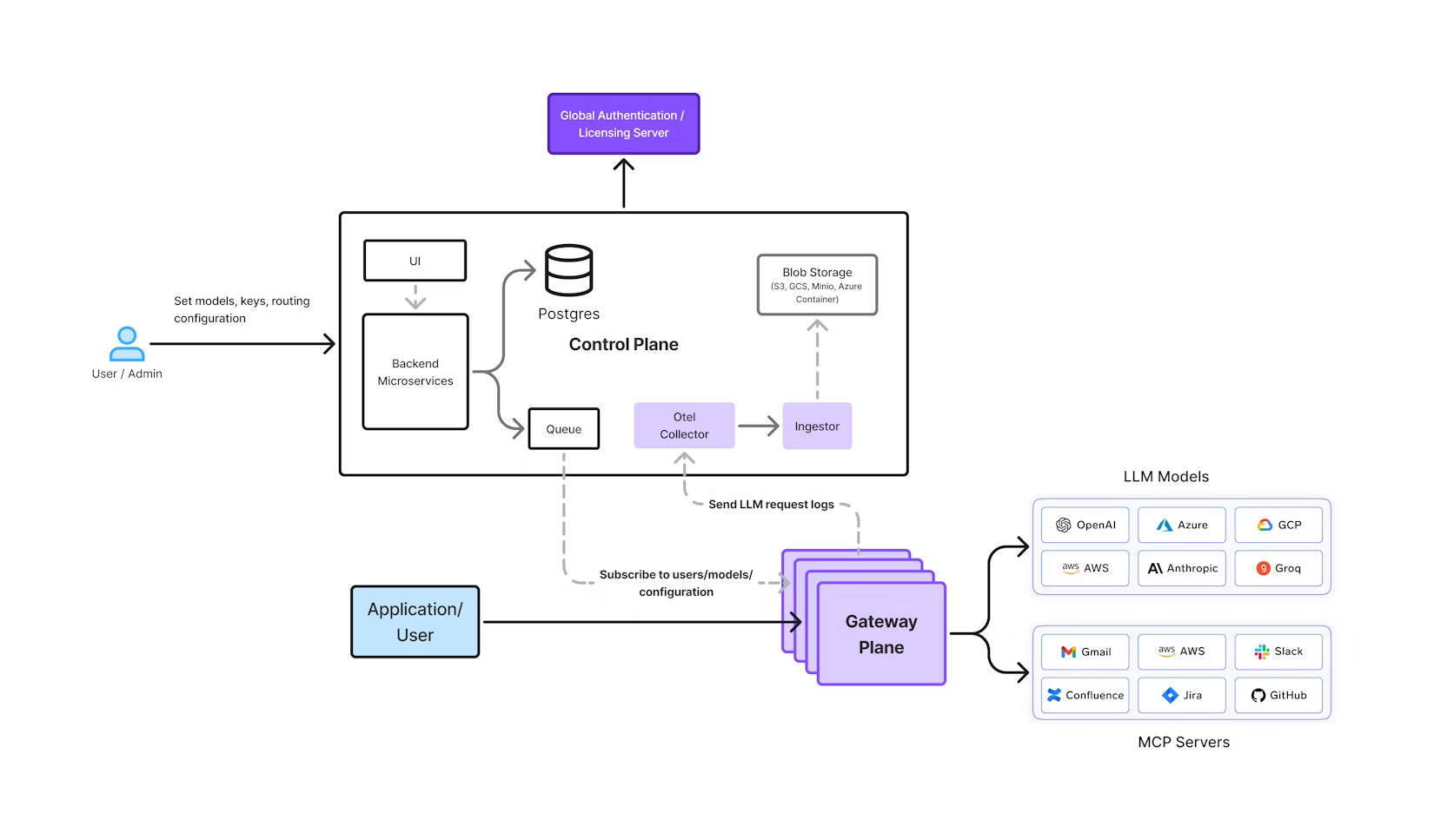

TrueFoundry's AI Gateway exemplifies how modern infrastructure operationalizes these governance principles. Acting as a centralized control plane for all AI interactions, the Gateway provides unified authentication and access control, including for tools connected through the Model Context Protocol (MCP), enabling organizations to manage hundreds of AI tools and models through a single, governed platform. Instead of scattered credentials and unmanaged tool sprawl, teams gain complete visibility, audit trails, and policy enforcement across every AI workflow, making governance seamless, scalable, and secure.
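A minimal sketch of the centralized-credential idea, assuming a hypothetical `Gateway` class, team key, and model names (none of these come from TrueFoundry's product): applications hold only a gateway-issued key, the gateway stores a hash of that key, checks which models the team may use, and resolves the upstream provider itself.

```python
import hashlib
import time
from typing import Optional

# Hypothetical configuration. Provider credentials live only inside the gateway.
PROVIDER_CREDENTIALS = {"provider-a": "placeholder-secret-a", "provider-b": "placeholder-secret-b"}
MODEL_ROUTES = {"chat-default": "provider-a", "embed-default": "provider-b"}


def key_hash(api_key: str) -> str:
    """Store only hashes of gateway-issued keys, never the raw keys."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


# Each team receives one gateway key scoped to the models it may use.
TEAM_KEYS = {key_hash("team-search-key"): {"team": "search", "models": {"embed-default"}}}


class Gateway:
    def __init__(self) -> None:
        self.audit_log = []

    def handle(self, api_key: str, model: str) -> dict:
        team: Optional[dict] = TEAM_KEYS.get(key_hash(api_key))
        if team is None:
            result = {"status": 401, "error": "unknown key"}
        elif model not in team["models"]:
            result = {"status": 403, "error": "model not permitted for this team"}
        else:
            provider = MODEL_ROUTES[model]
            # A real gateway would now call the provider using PROVIDER_CREDENTIALS[provider];
            # this sketch only returns the routing decision.
            result = {"status": 200, "provider": provider}
        self.audit_log.append({"ts": time.time(), "team": team["team"] if team else None,
                               "model": model, "status": result["status"]})
        return result
```

Because provider secrets never leave the gateway, rotating a provider key or revoking a team means changing one table rather than every application.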

Why AI Governance Matters

Mitigating legal and regulatory risk. The EU AI Act (2024) classifies AI systems by risk level and imposes strict obligations on high-risk systems. Fines for the most serious violations can reach €35 million or 7% of worldwide annual turnover, whichever is higher. Similar regulations are emerging globally, and organizations without governance frameworks face escalating legal exposure.
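Because the 7% figure is a ceiling rather than a fixed penalty, the arithmetic is worth spelling out. This sketch encodes only the top penalty tier of the EU AI Act (prohibited practices), as set out in Article 99: €35 million or 7% of worldwide annual turnover, whichever is higher, with the lower of the two applying to SMEs and start-ups. The function name is illustrative, and actual fines are set case by case up to these limits.

```python
def max_fine_eur(worldwide_annual_turnover_eur, is_sme=False):
    """Ceiling for the EU AI Act's most serious tier (prohibited practices).

    EUR 35 million or 7% of worldwide annual turnover, whichever is higher;
    for SMEs and start-ups, whichever is lower. Other violations have lower tiers.
    """
    fixed_cap = 35_000_000
    turnover_cap = worldwide_annual_turnover_eur * 7 / 100
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)
```

For a company with €1 billion in turnover the turnover-based cap (€70 million) dominates; for a €100 million SME the cap falls to €7 million.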

Building and maintaining trust. High-profile cases, including Amazon's biased hiring tool, allegations of gender bias in Apple Card credit limits, and racial disparities in COMPAS recidivism risk scores, demonstrate how AI failures erode public trust. Organizations demonstrating responsible AI practices earn stakeholder trust, attract talent, and strengthen customer relationships.

Enabling faster, safer innovation. Well-designed governance accelerates innovation. Organizations with clear policies and automated controls can deploy models faster with confidence. Governance becomes an enabler rather than a constraint, allowing rapid iteration within safe boundaries.

Ensuring data quality and model reliability. Governance frameworks mandate practices like data audits, bias testing, and continuous monitoring. These catch data quality issues, model drift, and performance degradation before they impact users or business outcomes.
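Drift monitoring is one of the more mechanical parts of this. A common choice is the Population Stability Index (PSI), which compares the distribution of a feature (or model score) in production against a reference sample. This sketch uses equal-width bins and the frequently quoted rule of thumb (below 0.1 stable, 0.1 to 0.25 moderate, above 0.25 significant). Both the binning and the thresholds are assumptions to tune per feature, not a standard.

```python
import math


def psi(reference, production, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a production sample.

    Bin edges are equal-width over the reference range; production values outside
    that range are clamped into the edge bins. `eps` avoids log(0) for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    ref, prod = proportions(reference), proportions(production)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref, prod))


reference = [i / 100 for i in range(100)]         # e.g. a feature at training time
production = [0.5 + i / 200 for i in range(100)]  # the same feature, shifted upward in production
score = psi(reference, production)
status = "significant drift" if score > 0.25 else "moderate drift" if score > 0.1 else "stable"
```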

Optimizing costs and resource allocation. Governance frameworks provide visibility into model usage, performance, and cost, enabling organizations to retire underperforming models and make data-driven investment decisions.
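As an illustration of usage and cost visibility, this sketch aggregates hypothetical per-request usage records (model name, tokens, success flag) into per-model cost and success rate. The record format and per-1K-token prices are assumptions; in practice these figures would come from gateway logs or provider billing data.

```python
from collections import defaultdict


def cost_report(records, price_per_1k_tokens):
    """Aggregate token cost and success rate per model from usage records."""
    totals = defaultdict(lambda: {"requests": 0, "tokens": 0, "successes": 0})
    for r in records:
        t = totals[r["model"]]
        t["requests"] += 1
        t["tokens"] += r["tokens"]
        t["successes"] += 1 if r["ok"] else 0

    report = {}
    for model, t in totals.items():
        cost = t["tokens"] / 1000 * price_per_1k_tokens[model]
        report[model] = {
            "cost": round(cost, 4),
            "success_rate": t["successes"] / t["requests"],
            # Cost per useful answer; infinite if the model never succeeded.
            "cost_per_success": round(cost / t["successes"], 4) if t["successes"] else float("inf"),
        }
    return report
```

Cost per successful request is often more telling than raw spend, since a cheap model that fails frequently can cost more per useful answer.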

Core Principles of AI Governance

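Transparency and Explainability. Users and regulators should be able to understand how AI systems generate outputs and make decisions. This addresses the “black box” problem through explanation techniques like SHAP values, which attribute each prediction to individual input features, and through audit logs and data lineage records that trace which inputs and data produced a given prediction.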
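SHAP values are easiest to see on a linear model: assuming independent features, the exact value for feature i is `w_i * (x_i - mean_i)`, and the values sum to the difference between the prediction and the average prediction. This dependency-free sketch illustrates that additivity property. For non-linear models one would normally use a library such as `shap` rather than this hand-rolled function.

```python
def linear_shap(weights, bias, background, x):
    """Exact SHAP values for a linear model f(x) = bias + sum_i w_i * x_i,
    assuming independent features: phi_i = w_i * (x_i - mean_i)."""
    n_features = len(weights)
    means = [sum(row[i] for row in background) / len(background) for i in range(n_features)]

    def predict(v):
        return bias + sum(w * vi for w, vi in zip(weights, v))

    phis = [w * (xi - m) for w, xi, m in zip(weights, x, means)]
    base_value = predict(means)  # for a linear model this equals the mean prediction
    return base_value, phis, predict(x)


# Additivity: base + sum(phis) reconstructs the prediction for this input.
base, phis, prediction = linear_shap([2.0, -1.0], 0.5, [[0.0, 0.0], [2.0, 2.0]], [3.0, 1.0])
```

Here the first feature sits two units above its mean and carries the whole explanation; the second sits exactly at its mean and contributes nothing.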

Fairness and Non-Discrimination. AI systems must not perpetuate or amplify existing biases. Safeguards include diverse training datasets, regular bias audits, and fairness metrics such as demographic parity or equalized odds.
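The two metrics named above can be computed directly from predictions. In this sketch, demographic parity difference is the gap in positive-prediction rates between groups, and equalized odds difference is the larger of the gaps in true-positive and false-positive rates (the convention Fairlearn uses by default). The function names and input layout are illustrative.

```python
def _rate(values):
    # Empty subsets (e.g. a group with no positives) count as 0.0 here, a simplification.
    return sum(values) / len(values) if values else 0.0


def _spread(by_group):
    return max(by_group.values()) - min(by_group.values())


def fairness_gaps(y_true, y_pred, group):
    """Demographic parity difference and equalized odds difference for binary labels."""
    selection, tpr, fpr = {}, {}, {}
    for g in sorted(set(group)):
        idx = [i for i, gi in enumerate(group) if gi == g]
        selection[g] = _rate([y_pred[i] for i in idx])
        tpr[g] = _rate([y_pred[i] for i in idx if y_true[i] == 1])
        fpr[g] = _rate([y_pred[i] for i in idx if y_true[i] == 0])
    return {
        "demographic_parity_diff": _spread(selection),
        "equalized_odds_diff": max(_spread(tpr), _spread(fpr)),
    }
```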

Accountability and Oversight. Every AI decision must trace to responsible parties. Humans should retain meaningful control over high-impact decisions, with clear governance structures defining who owns data quality, approvals, and investigations.

Privacy and Data Security. AI systems must handle personal data responsibly through secure ingestion, encryption of training data, anonymization where applicable, and strict access controls, in line with regulations such as GDPR and CCPA.
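One common technique is keyed pseudonymization: replacing direct identifiers with an HMAC so records can still be joined without exposing the raw value. This sketch also masks email addresses in free text with a simple regular expression. The field names and the regex are assumptions. Under GDPR, pseudonymized data is still personal data, so this complements access controls rather than replacing them.

```python
import hashlib
import hmac
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic keyed token: same value and key give the same token (joins still work),
    but the value cannot be recomputed or verified without the key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def scrub_record(record: dict, key: bytes, id_fields=("user_id",), text_fields=("notes",)) -> dict:
    """Return a copy with identifier fields pseudonymized and emails masked in text fields."""
    out = dict(record)
    for f in id_fields:
        if f in out:
            out[f] = pseudonymize(str(out[f]), key)
    for f in text_fields:
        if f in out:
            out[f] = EMAIL_RE.sub("[EMAIL]", out[f])
    return out
```

The key itself then becomes a secret to protect and rotate like any other credential.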

Human-Centric Design and Safety. AI should support human well-being and respect fundamental rights. High-risk decisions—employment, credit, criminal justice—require human review and override capabilities.
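Routing high-risk decisions to a person can be expressed as a small rule. In this sketch the domain list, the `Decision` structure, and the 0.9 confidence threshold are all hypothetical. The point is that high-risk domains always go to review regardless of model confidence, and the reviewer can override the model's output.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical domains that always require human review.
HIGH_RISK_DOMAINS = {"employment", "credit", "criminal_justice"}


@dataclass
class Decision:
    domain: str
    model_output: str
    confidence: float
    final: Optional[str] = None
    status: str = "pending"


def route(decision, min_confidence=0.9):
    """Send high-risk or low-confidence decisions to a human; auto-approve the rest."""
    if decision.domain in HIGH_RISK_DOMAINS or decision.confidence < min_confidence:
        decision.status = "needs_human_review"
    else:
        decision.final = decision.model_output
        decision.status = "auto_approved"
    return decision


def human_review(decision, reviewer, override=None):
    """Record the reviewer's outcome; `override` replaces the model's output if given."""
    decision.final = override if override is not None else decision.model_output
    decision.status = f"reviewed_by:{reviewer}"
    return decision
```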

Robustness and Resilience. AI systems must remain secure, reliable, and resilient to adversarial attacks through stress testing, adversarial testing, and disaster recovery planning.
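A basic stress test checks whether small input perturbations flip a model's prediction. This sketch measures the fraction of inputs whose label survives random noise. It is a weaker check than true adversarial testing, which searches for worst-case perturbations (for example with gradient-based attacks), and the noise level, trial count, and toy model are assumptions.

```python
import random


def perturbation_stability(predict, inputs, noise=0.05, trials=20, seed=0):
    """Fraction of inputs whose predicted label is unchanged under small uniform noise
    added to every feature. A random stress test, not a worst-case adversarial search."""
    rng = random.Random(seed)
    stable = 0
    for x in inputs:
        base = predict(x)
        if all(predict([v + rng.uniform(-noise, noise) for v in x]) == base for _ in range(trials)):
            stable += 1
    return stable / len(inputs)


def threshold_model(x):
    """Toy classifier used only for illustration."""
    return int(sum(x) > 1.0)


far_from_boundary = perturbation_stability(threshold_model, [[0.0, 0.0], [2.0, 2.0]])
on_boundary = perturbation_stability(threshold_model, [[0.5, 0.5]])
```

Inputs far from the decision boundary stay stable, while an input sitting on it flips under noise, which is the kind of fragility this test is meant to surface.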

Key Components of an AI Governance Framework

Governance Structure and Roles. Organizations need formal structures including an AI Ethics Committee overseeing strategic decisions, Data Stewards managing data quality and compliance, Model Owners accountable for specific models, Compliance Officers managing regulatory requirements, and increasingly, a Chief AI Risk Officer. Role clarity prevents gaps and ensures accountability.

Policy and Standards Development. Policies translate principles into operational directives covering data governance (collection, storage, usage, retention), model development (training, validation, testing), deployment and release (approval gates, rollback procedures), and third-party AI vendor management.

Risk Assessment and Management. AI systems present distinct risks: bias and fairness issues in training data, model drift and performance degradation, data privacy and security vulnerabilities, explainability gaps in complex models, and model misuse. Governance frameworks use AI Impact Assessments, Model Risk Scorecards, and Bias Audits to systematically address these risks.
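A Model Risk Scorecard can be as simple as a weighted sum of reviewer-assigned factor scores mapped to a tier. The factors, weights, and tier cut-offs below are hypothetical placeholders that an organization would calibrate for itself.

```python
# Hypothetical factors and weights (weights sum to 1); reviewers score each factor 0-3.
WEIGHTS = {"decision_impact": 0.35, "data_sensitivity": 0.25, "autonomy": 0.20, "explainability_gap": 0.20}


def risk_score(scores):
    """Weighted scorecard normalized to 0..1 and mapped to a risk tier."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing factors: {sorted(missing)}")
    total = sum(WEIGHTS[f] * scores[f] for f in WEIGHTS) / 3  # 3 is the maximum factor score
    tier = "high" if total >= 0.66 else "medium" if total >= 0.33 else "low"
    return round(total, 3), tier
```

Rejecting incomplete scorecards, rather than treating missing factors as zero, keeps a model from looking low-risk simply because nobody assessed it.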

Data Governance and Quality. High-quality data is foundational. Organizations maintain data inventory and lineage, define data quality standards, establish labeling standards for supervised learning, and create synthetic data policies where applicable.
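Data quality standards become enforceable once they are written as checks. This sketch validates hypothetical rows against a per-column schema with a maximum null rate and an allowed range. The schema format is an assumption, and dedicated data-validation tools offer far richer rule sets.

```python
def quality_report(rows, schema):
    """Check each column against its standard: maximum null rate and allowed (lo, hi) range."""
    issues = []
    for column, rule in schema.items():
        values = [row.get(column) for row in rows]
        max_null = rule.get("max_null_rate", 0.0)
        null_rate = sum(v is None for v in values) / len(values)
        if null_rate > max_null:
            issues.append(f"{column}: null rate {null_rate:.0%} exceeds {max_null:.0%}")
        lo, hi = rule.get("range", (None, None))
        bad = [v for v in values
               if v is not None and ((lo is not None and v < lo) or (hi is not None and v > hi))]
        if bad:
            issues.append(f"{column}: {len(bad)} value(s) outside [{lo}, {hi}]")
    return issues


rows = [{"age": 30, "income": 50000}, {"age": None, "income": -5}, {"age": 140, "income": 60000}]
schema = {"age": {"max_null_rate": 0.1, "range": (0, 120)}, "income": {"range": (0, None)}}
issues = quality_report(rows, schema)
```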

Model Lifecycle Management. Governance spans the entire lifecycle. Development relies on coding standards, version control, and documentation. Validation and testing check bias and performance across different subgroups. Deployment uses approval gates and canary rollouts, and monitoring relies on real-time dashboards that track performance and bias in production. Retirement involves secure archiving, data deletion, and stakeholder notification.
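The canary rollout step can be reduced to a promote, hold, or roll-back decision. This sketch compares error rates between the current version and a canary. The 10% relative-increase tolerance and the 500-request minimum are assumptions, and a production gate would usually add a statistical significance test rather than comparing raw rates.

```python
def canary_decision(baseline_errors, baseline_total, canary_errors, canary_total,
                    max_relative_increase=0.10, min_requests=500):
    """Decide whether to promote, hold, or roll back a canary model version."""
    if canary_total < min_requests:
        return "hold"  # not enough canary traffic yet to judge
    baseline_rate = baseline_errors / baseline_total
    canary_rate = canary_errors / canary_total
    if canary_rate > baseline_rate * (1 + max_relative_increase):
        return "rollback"
    return "promote"
```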

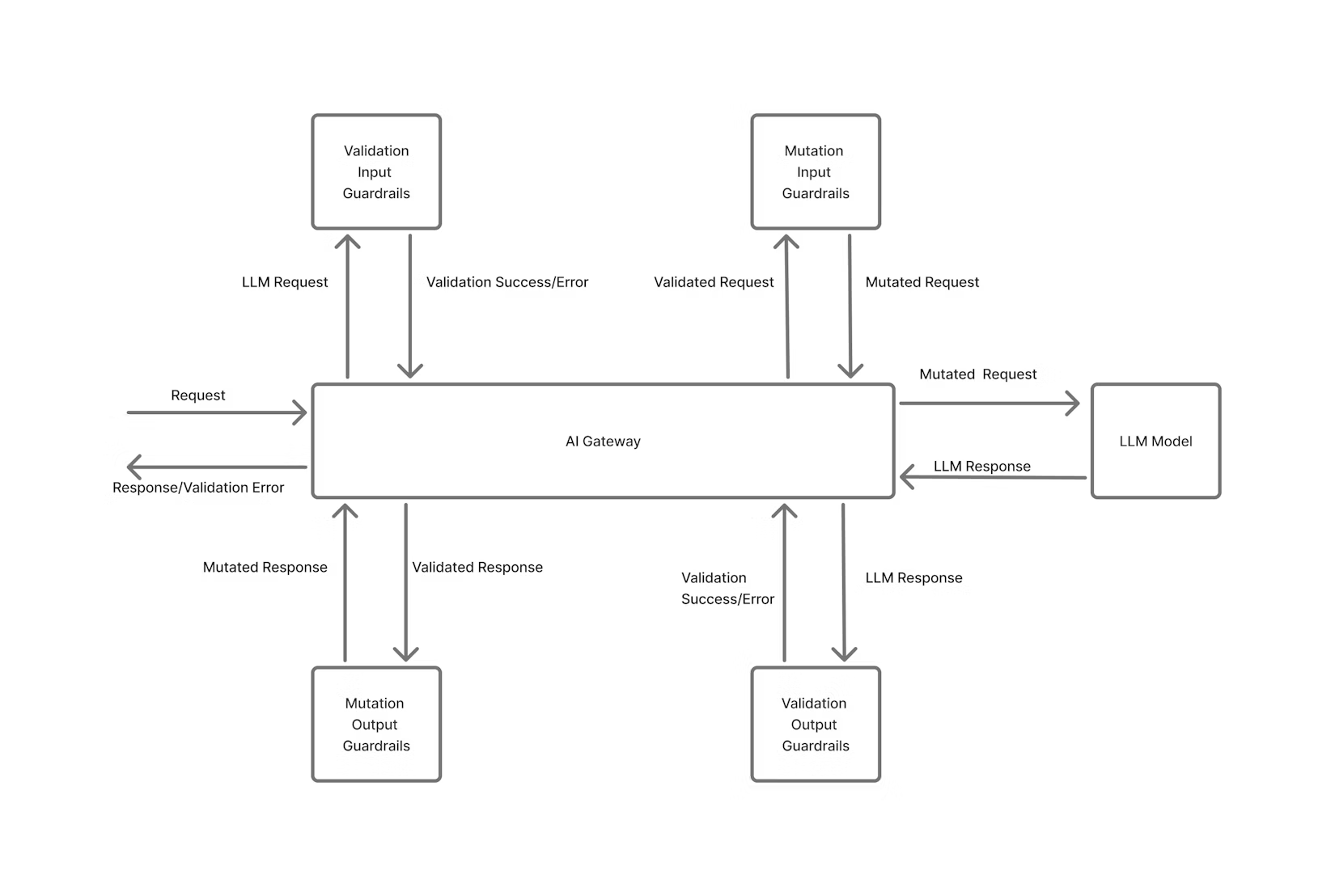

Compliance, Auditing, and Audit Trails. Organizations maintain model development records, decision logs and audit trails, compliance reports, and third-party audit records. TrueFoundry's AI Gateway automates audit trail capture for every model interaction, decision, and policy enforcement action in real time. The Gateway's configurable guardrails validate, redact, or block unsafe or non-compliant content at every stage, covering both the inputs sent to models and the outputs they return. These guardrails integrate with leading providers (OpenAI Moderations, Azure Content Safety, Fiddler) or custom logic to enforce policy, safety, and regulatory requirements automatically. Every action remains properly governed and auditable, with complete traceability from request to response. This real-time enforcement transforms compliance from periodic checks into continuous, automated assurance.
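To make the validate, redact, or block pattern concrete, here is a minimal rule-based guardrail that can run on both model inputs and outputs. The blocked phrase and the regexes are hypothetical stand-ins. This is not TrueFoundry's guardrail API; real deployments would typically delegate detection to a moderation provider or custom classifier as described above.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rules. Real deployments would call a moderation provider or custom classifier.
BLOCKED_PHRASES = ("build a weapon",)
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits, optional separators
}


@dataclass
class GuardrailResult:
    action: str  # "allow", "redact", or "block"
    text: str
    findings: list = field(default_factory=list)


def apply_guardrail(text, stage):
    """Block disallowed content outright; otherwise redact sensitive spans."""
    lowered = text.lower()
    for phrase in BLOCKED_PHRASES:
        if phrase in lowered:
            return GuardrailResult("block", "", [f"{stage}: blocked phrase '{phrase}'"])
    redacted, findings = text, []
    for label, pattern in REDACTION_PATTERNS.items():
        redacted, count = pattern.subn(f"[{label}]", redacted)
        if count:
            findings.append(f"{stage}: redacted {count} {label}")
    return GuardrailResult("redact" if findings else "allow", redacted, findings)
```

In use, the same function would be called with `stage="input"` before the model call and `stage="output"` after it, and the `findings` list written to the audit trail.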

How Organizations Implement AI Governance

Implementation follows a practical progression.

Phase 1: Assessment and Planning involves cataloging AI systems, assessing regulatory requirements, evaluating current state governance, and defining objectives aligned with business strategy.

Phase 2: Framework Development selects or adapts frameworks such as the NIST AI RMF or ISO/IEC 42001, defines organizational principles and policies, designs governance structures, and develops detailed standards.

Phase 3: Infrastructure and Tooling deploys governance platforms that operationalize policies into automated controls. TrueFoundry's AI Gateway serves as the centralized infrastructure layer, providing model registry, automated audit logging, real-time policy enforcement, and compliance reporting across all AI workloads. The Gateway enables organizations to enforce access controls through unified authentication, apply configurable guardrails for safety and compliance validation, and maintain complete visibility through observability dashboards. By centralizing governance infrastructure, organizations eliminate scattered credentials, reduce shadow AI, and gain consistent enforcement across teams, models, and providers, transforming governance from manual oversight into an automated, scalable capability.

Phase 4: Piloting and Operationalization starts with low-risk pilot projects to test processes, refines procedures based on feedback, establishes governance cadences for regular reviews and audits, and builds organizational culture where governance enables innovation.

Phase 5: Continuous Improvement monitors governance effectiveness, adapts to regulatory changes, invests in capability building, and shares learnings across projects.

Canada’s Department of Fisheries and Oceans illustrates this progression—initial pilots without governance couldn’t scale to production until they built governance infrastructure including role definitions and responsibility matrices.

Global Regulations and Standards

EU Artificial Intelligence Act (2024). The most comprehensive AI regulation to date uses a risk-based approach. Prohibited practices include social scoring and certain forms of biometric surveillance. High-Risk Systems, such as those used in hiring, credit, and law enforcement decisions, require risk assessments, documentation, monitoring, and human oversight. Limited-Risk Systems carry transparency obligations. Minimal-Risk Systems face no specific requirements. General-purpose AI (foundation) models require technical documentation, and those posing systemic risk must also undergo systemic risk assessment.
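The tiered structure lends itself to a first-pass triage table in an AI inventory. This sketch is deliberately simplified: the use-case labels and the mapping are hypothetical, and actual classification under the Act depends on its annexes, exemptions, and legal review, not on a keyword lookup.

```python
# Simplified, hypothetical triage table loosely following the Act's risk tiers.
PROHIBITED = {"social_scoring"}
HIGH_RISK = {"hiring", "credit_scoring", "law_enforcement"}
LIMITED_RISK = {"chatbot", "synthetic_media"}

OBLIGATIONS = {
    "prohibited": ["do not deploy"],
    "high": ["risk management", "data governance", "technical documentation",
             "logging", "human oversight"],
    "limited": ["disclose AI interaction or AI-generated content"],
    "minimal": [],
}


def triage(use_case):
    """Map a use-case label to a provisional tier and its headline obligations."""
    if use_case in PROHIBITED:
        tier = "prohibited"
    elif use_case in HIGH_RISK:
        tier = "high"
    elif use_case in LIMITED_RISK:
        tier = "limited"
    else:
        tier = "minimal"
    return tier, OBLIGATIONS[tier]
```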

NIST AI Risk Management Framework (2023). This voluntary U.S. framework is organized around four core functions: Govern (organizational roles, policies, and culture), Map (establishing context and identifying risks), Measure (assessing and tracking risks with metrics), and Manage (prioritizing and responding to risks, including ongoing monitoring).

ISO/IEC 42001 (2023). The first international AI management systems standard provides a structured approach compatible with other ISO standards (ISO 9001 quality, ISO 27001 information security), enabling third-party certification.

India AI Governance Guidelines (November 2025). India’s framework emphasizes a “light-touch, innovation-friendly” approach with principles of human-centric, inclusive, privacy-by-design, fair, explainable, safe, and nationally-aligned AI.

These frameworks converge on shared principles—transparency, accountability, fairness, safety, and human oversight—providing clear organizational direction.

Challenges in AI Governance

Technical Complexity. Deep learning models operate through complex mathematical functions resistant to simple explanation. AI systems are probabilistic and adaptive, making them fundamentally different from traditional software. Governance must account for inherent uncertainty and adaptability.

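Bias and Fairness. Bias is pervasive: historical biases in training data perpetuate discrimination, algorithmic choices can amplify bias, and issues can emerge invisibly without careful audits. Defining fairness is difficult. When base rates differ across groups, a classifier that performs better than chance cannot satisfy demographic parity (equal positive-prediction rates) and equalized odds (equal true- and false-positive rates) at the same time, so choosing between them requires domain expertise and stakeholder input.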

Regulatory Fragmentation. While frameworks converge on principles, regulations diverge in requirements. Organizations operating globally must navigate this fragmentation, often adopting the strictest requirements as the de facto standard.

Rapid Model Evolution and Shadow AI. Organizations struggle to keep governance in step with the pace of adoption. New models emerge constantly, and teams experiment with open-source and third-party models. This creates “shadow AI” outside governance frameworks, and documentation often lags behind actual deployments.
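One practical way to surface shadow AI is to compare outbound traffic against the approved route. This sketch assumes hypothetical egress-log entries with `team` and `host` fields, a set of known model-API hosts, and a single approved gateway host, and counts calls that bypass it.

```python
from collections import Counter

# Hypothetical hosts. Only traffic through the governed gateway is approved.
APPROVED_ENDPOINTS = {"gateway.internal.example"}
MODEL_API_HOSTS = {"api.provider-a.example", "api.provider-b.example", "gateway.internal.example"}


def find_shadow_ai(egress_log):
    """Count calls per (team, host) to model APIs that bypass the approved gateway."""
    hits = Counter()
    for entry in egress_log:
        host = entry["host"]
        if host in MODEL_API_HOSTS and host not in APPROVED_ENDPOINTS:
            hits[(entry["team"], host)] += 1
    return hits
```

The result is a starting point for a conversation with the teams involved, ideally one that brings their use cases into the governed path rather than simply blocking them.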

Resource and Capability Constraints. AI governance requires specialized expertise—data scientists, compliance officers, security engineers, and ethicists must collaborate. Many organizations lack in-house expertise, particularly in emerging areas like adversarial testing or fairness assessment.

Balancing Innovation and Governance. Organizations worry that strict governance slows innovation or drives projects underground. Striking the right balance requires thoughtful design and cultural buy-in.

Best Practices for Effective AI Governance

Start with Clear Principles. Define what responsible AI means for your organization, prioritizing fairness, explainability, privacy, or safety based on context and values. Document these principles and align them with regulatory requirements and business strategy.

Establish Cross-Functional Structures. Effective governance requires collaboration across technology (architects, engineers, data scientists), legal and compliance, privacy and security, business and product teams, and ethics experts. Regular meetings and clear communication keep teams aligned.

Embed Governance Early in the Lifecycle. Governance shouldn’t be bolted on at deployment. Embed it during planning (assess regulatory requirements), data preparation (ensure quality and privacy compliance), development (build in validation and testing), deployment (enforce approval gates), and operations (continuous monitoring).

Implement Automated Policy Enforcement. Manual processes don’t scale. Express policies as executable code, run tests in CI/CD pipelines, automatically capture decisions and metrics, and use platforms like TrueFoundry’s AI Gateway to enforce policies consistently across models and teams. Automation increases consistency, reduces overhead, and enables faster innovation.
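Policies expressed as code can run as an ordinary CI step. This sketch checks a hypothetical model card (a dictionary) against a hypothetical release policy and returns a process exit code, so a failing check blocks the pipeline. The field names and thresholds are assumptions.

```python
# Hypothetical release policy; real thresholds come from the organization's own standards.
POLICY = {
    "required_fields": ["owner", "intended_use", "training_data"],
    "min_accuracy": 0.85,
    "max_demographic_parity_diff": 0.10,
}


def check_release(card, policy=POLICY):
    """Return policy violations for a model card; an empty list means the gate passes."""
    violations = [f"missing field: {f}" for f in policy["required_fields"] if not card.get(f)]
    accuracy = card.get("accuracy")
    if accuracy is None or accuracy < policy["min_accuracy"]:
        violations.append(f"accuracy {accuracy} below {policy['min_accuracy']}")
    gap = card.get("demographic_parity_diff")
    if gap is None or gap > policy["max_demographic_parity_diff"]:
        violations.append(f"demographic parity gap {gap} above {policy['max_demographic_parity_diff']}")
    return violations


def gate_exit_code(card):
    """Print violations and return a process exit code; non-zero fails the CI job."""
    problems = check_release(card)
    for p in problems:
        print("POLICY VIOLATION:", p)
    return 1 if problems else 0
```

In a pipeline, a step such as `sys.exit(gate_exit_code(card))` would fail the job whenever the list of violations is non-empty. Missing metrics are treated as failures, so an undocumented model cannot pass by omission.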

Invest in Data Governance. High-quality data is foundational. Maintain data inventory and lineage, define quality standards, limit access to authorized users, and document datasets including known limitations and biases.

Develop a Model Registry. Maintain a centralized registry of production models including metadata (owner, version, deployment date), training data documentation, performance metrics, model documentation (architecture, hyperparameters, intended use), and compliance status. This enables visibility, supports audits, and guides retirement decisions. TrueFoundry streamlines end-to-end model lifecycle management with its Model Registry, allowing teams to track, version, deploy, and monitor models within a single platform, supporting compliance and auditability in every phase.
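A registry entry is essentially structured metadata plus queries over it. This sketch is a generic, in-memory illustration, not TrueFoundry's Model Registry API, with hypothetical fields. Its `needs_attention` query flags versions that are unapproved, never audited, or past an assumed 180-day audit interval, which ties the registry to regular audit cycles.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional


@dataclass
class ModelRecord:
    name: str
    version: str
    owner: str
    deployed: date
    intended_use: str
    training_data: str
    metrics: dict = field(default_factory=dict)
    compliance_status: str = "pending"  # e.g. "approved", "pending", "rejected"
    last_audit: Optional[date] = None


class ModelRegistry:
    def __init__(self):
        self._records = {}

    def register(self, record):
        key = (record.name, record.version)
        if key in self._records:
            raise ValueError(f"{record.name}:{record.version} is already registered")
        self._records[key] = record

    def needs_attention(self, today, audit_interval_days=180):
        """Versions that are not approved, never audited, or overdue for audit."""
        cutoff = today - timedelta(days=audit_interval_days)
        return sorted(
            f"{r.name}:{r.version}"
            for r in self._records.values()
            if r.compliance_status != "approved" or r.last_audit is None or r.last_audit < cutoff
        )
```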

Establish Regular Audit Cycles. Governance is continuous, not one-time. Conduct quarterly or semi-annual model audits, annual compliance reviews against regulatory frameworks, regular data audits, and annual policy reviews.

Build a Responsible AI Culture. Governance frameworks succeed only if organizations embrace them. Foster a culture where responsible AI enables innovation, teams feel empowered to raise concerns, leaders prioritize governance in resource allocation, and teams receive training and support.

Use Technology Enablers. Modern platforms like TrueFoundry simplify governance through centralized deployment with consistent governance, built-in observability dashboards, automated policy enforcement, and integrated compliance tools.

Measure Governance Effectiveness. Define metrics: regulatory compliance (percentage of models meeting standards), risk reduction (bias and incident reductions), operational efficiency (time-to-deployment), stakeholder trust (satisfaction levels), and innovation velocity (speed and volume of new projects). Track regularly and adjust based on learnings.
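Two of these metrics reduce to simple arithmetic over per-model records. This sketch assumes hypothetical records with a `meets_standards` flag and submission and deployment dates. Which metrics to track, and how to define them, remains an organizational choice.

```python
from datetime import date
from statistics import median


def governance_kpis(models):
    """Compliance percentage and median days from submission to deployment."""
    compliant = sum(1 for m in models if m["meets_standards"])
    lead_times = [(m["deployed"] - m["submitted"]).days for m in models if m.get("deployed")]
    return {
        "compliance_pct": round(100 * compliant / len(models), 1),
        "median_days_to_deploy": median(lead_times) if lead_times else None,
    }


# Hypothetical sample records; the third model has not been deployed yet.
models = [
    {"meets_standards": True, "submitted": date(2025, 1, 1), "deployed": date(2025, 1, 11)},
    {"meets_standards": False, "submitted": date(2025, 1, 1), "deployed": date(2025, 1, 21)},
    {"meets_standards": True, "submitted": date(2025, 2, 1), "deployed": None},
]
kpis = governance_kpis(models)
```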

The Future of AI Governance

Adaptive, Modular Governance. Future frameworks will enable modular governance where individual controls (security, privacy, fairness, cost) evolve independently. Organizations will update policies through configuration rather than code changes, enabling rapid adaptation to regulatory changes.

Real-Time, Continuous Compliance. Tomorrow’s governance will be continuous, not periodic. AI systems will autonomously enforce compliance, flag violations immediately, and enable rapid remediation.

Integrated Governance Domains. Privacy, security, and legal compliance will integrate rather than operate in silos, with unified threat models and synchronized oversight.

Explainability as Standard. Explainability will shift from research interest to engineering standard, with models designed for interpretability and techniques embedded in deployment pipelines.

Multi-Model, Multi-Provider Governance. Future governance must operate seamlessly across multiple models, providers, and clouds while maintaining consistent policies.

Collaborative Standards. Organizations will increasingly share governance standards and best practices through industry consortia, accelerating maturity and reducing duplication.

AI Safety as Core. As systems become more capable, governance will emphasize safety—ensuring models behave as intended, fail safely, and remain under meaningful human control.

Sustainability Focus. Governance will incorporate environmental considerations, optimizing for efficiency and tracking carbon footprints of training and inference.

Conclusion

AI governance is no longer optional; it's a business imperative driven by regulation, risk, and stakeholder expectations. Organizations implementing robust governance don't just reduce risk; they build competitive advantage through faster, safer innovation. Well-designed governance, implemented through policies, automated controls, and modern infrastructure, actually accelerates innovation by providing confidence and removing manual compliance burden.

TrueFoundry enables "governance by design", embedding compliance, safety, and oversight directly into AI infrastructure rather than retrofitting it later. Through centralized control via the AI Gateway, automated policy enforcement, real-time guardrails, and comprehensive audit trails, organizations can deploy AI with confidence that governance is built in from the start. This approach transforms governance from a constraint into a competitive advantage, allowing teams to innovate rapidly within safe, compliant boundaries.

The path forward requires leadership commitment, cross-functional collaboration, investment in capabilities and infrastructure, and cultural change. Organizations taking this path today are defining responsible AI for their industries. The era of "move fast and break things" in AI is ending. The era of "move fast and don't break trust" is beginning. AI governance, and platforms like TrueFoundry that make it practical, make that era possible.
