Amazon SageMaker Review: Features, Pricing, Pros and Cons (+ Better Alternative)

Amazon SageMaker has effectively become the default operating system for machine learning within the AWS perimeter. Launched in 2017, it promised to industrialize what was then a fragmented ecosystem of custom scripts and manual server provisioning. By abstracting the underlying EC2 configuration and container orchestration, it allowed organizations to standardize their ML pipelines.

But here we are in 2026, and the value proposition of a closed-source, single-cloud managed service is under scrutiny. The complaints we hear from engineering teams are consistent: opaque pricing models that lead to month-end shock, steep learning curves for non-AWS natives, and a "walled garden" architecture that penalizes multi-cloud strategies.

This technical review looks at SageMaker not as a marketing brochure, but as a piece of infrastructure. We examine the unit economics, the operational friction, and the architectural trade-offs based on data from G2, Gartner Peer Insights, and direct operational experience. We will also evaluate whether decoupled control planes like TrueFoundry offer a viable path away from vendor lock-in.

What is Amazon SageMaker?

At its core, Amazon SageMaker is a managed service wrapper around AWS compute (EC2), storage (S3/EBS), and managed container orchestration. It provides an end-to-end integrated development environment (IDE) and a control plane for the ML lifecycle.

Recent updates, such as the "Unified Studio" and integration with Data Lakehouses, attempt to bridge the gap between data engineering and ML operations. However, for the platform engineer, SageMaker is essentially a set of proprietary APIs used to provision ephemeral compute for training and persistent compute for inference.

Target Audience:

- Enterprise Data Science Teams: Organizations requiring strict IAM compliance and VPC isolation.

- ML Engineers: Teams needing managed infrastructure without managing Kubernetes manifests directly.

Operational Scope:

- Custom Model Development: Notebook-based experimentation (JupyterLab).

- Training Orchestration: Distributed training on high-performance clusters (P4/P5 instances).

- Inference Serving: Deploying endpoints for real-time (REST) or batch processing.

- MLOps Governance: Model registry, lineage tracking, and drift detection.

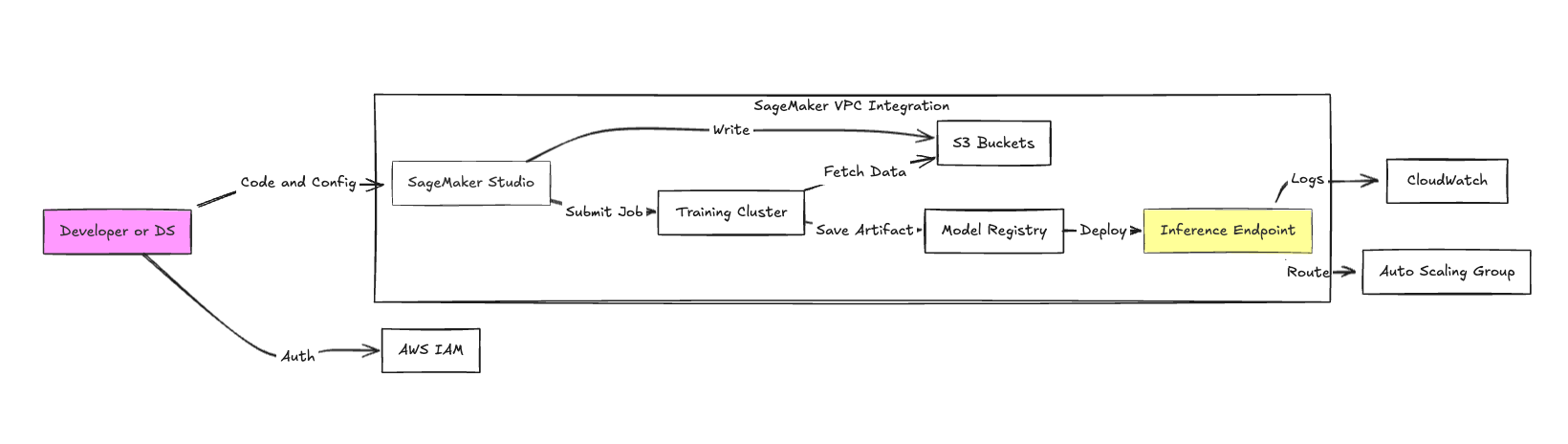

Figure 1 illustrates the architectural flow of SageMaker, highlighting the dependencies on other AWS services.

Fig 1. High-level SageMaker architectural flow and AWS dependencies.

Amazon SageMaker Key Features

SageMaker is effectively a monolith: it offers dozens of sub-services, but they are tightly coupled to one another and to AWS. The following components constitute the core operational stack.

SageMaker Studio & Development Environments

Studio is a web-based IDE built on JupyterLab. It centralizes access, but it introduces latency: spinning up a "KernelGateway" app can take several minutes. Studio also adds an abstraction layer over the underlying EC2 instance, which simplifies access but makes it harder to use local tools and system resources for debugging.

Model Training & HyperPod

SageMaker enables distributed training across clusters. SageMaker HyperPod is the notable feature here, designed to be resilient to hardware failures during long-running LLM training jobs. It automatically detects and replaces faulty instances—critical when renting expensive GPU clusters where a single node failure can waste days of compute time.

Model Deployment & Inference

SageMaker offers Real-time, Serverless, and Asynchronous Inference, along with Shadow Testing for validating new model versions.

- Real-time: Persistent endpoints (always running). Good for low latency (<100ms), bad for cost if utilization drops.

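- Shadow Testing: Sends a copy of a percentage of production traffic to a new model version so you can validate its performance without affecting the responses users receive.

- Serverless: Useful for intermittent traffic, but suffers from "cold starts" (often 5-10 seconds), which makes it unsuitable for latency-sensitive applications.

- Asynchronous: Queues requests and processes them in the background, which suits large payloads and long-running inference; endpoints can scale down to zero instances when the queue is empty.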
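The cost problem with always-on endpoints comes down to utilization: you pay for the instance hour whether it serves one request or thousands. The minimal sketch below computes the effective cost per million requests for a persistent endpoint. The $1.40/hour rate matches the ml.g5.xlarge figure used in the pricing section, while the 10 requests/second capacity is a hypothetical placeholder you would replace with your own load-test numbers.

```python
def cost_per_million_requests(hourly_rate: float, capacity_rps: float, utilization: float) -> float:
    """Effective cost of serving 1M requests on an always-on endpoint.

    hourly_rate:  price of the instance(s) behind the endpoint, per hour
    capacity_rps: requests/second the endpoint can sustain (hypothetical; measure your own)
    utilization:  fraction of that capacity actually used, in (0, 1]
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    # Requests actually served in one billed hour.
    served_per_hour = capacity_rps * utilization * 3600
    return hourly_rate / served_per_hour * 1_000_000


if __name__ == "__main__":
    for u in (1.0, 0.5, 0.1):
        cost = cost_per_million_requests(1.40, 10, u)
        print(f"utilization {u:>4.0%}: ${cost:,.2f} per 1M requests")
```

Because the hourly charge is fixed, cost per request scales inversely with utilization: at 10% utilization each request costs ten times as much as at full load. That is the gap Serverless and Asynchronous Inference try to close, at the price of cold starts or queueing delay.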

SageMaker Autopilot

An AutoML solution that iterates through algorithms to find the best model. While useful for rapid prototyping on tabular data, experienced engineers often find the generated code difficult to refactor or optimize for production inference constraints.

MLOps Tools (Pipelines, Registry, Monitor)

This is the "glue" layer. SageMaker Pipelines is a CI/CD service specifically for ML. It integrates tightly with the Model Registry (versioning) and Model Monitor (drift detection). The trade-off is heavy vendor coupling; migrating a SageMaker Pipeline to Airflow or Argo Workflows usually requires a complete rewrite.

Data Preparation (Data Wrangler, Feature Store)

Data Wrangler provides a UI for data cleaning that generates Python code. The Feature Store acts as a centralized repository for features: the offline store is written to S3 and cataloged in AWS Glue, while the online store is billed per read and write request, so high-throughput feature lookups can add significant cost on top of compute.

Amazon SageMaker Pricing

Pricing is the most common friction point. SageMaker operates on a consumption-based model with a markup over raw EC2 pricing. There are no upfront fees, but cost predictability is low because of the sheer number of billable dimensions.

Pricing Model

You are billed for:

- Compute: Per-second charges for Training and Inference instances.

- Storage: GB-month charges for EBS volumes attached to instances (often overlooked).

- Data Processing: GB charges for data in/out of the service.

- Monitoring: CloudWatch charges for storing the metrics and logs that training jobs and endpoints emit.

Cost Components & Real Examples

1. Notebook Instances:

A standard ml.t3.medium notebook instance costs approximately **$0.05/hour**. However, developers frequently leave these running overnight. A team of 10 developers leaving instances on for a month results in ~$360 of "waste," excluding storage costs.
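The ~$360 figure is simple arithmetic that assumes instances run around the clock for a 30-day month. The sketch below makes those assumptions explicit so you can plug in your own team size and idle hours; the 14 idle hours per day in the second example is a hypothetical "left on overnight" pattern, not a measured figure.

```python
def idle_notebook_cost(developers: int, hourly_rate: float,
                       idle_hours_per_day: float, days: int = 30) -> float:
    """Monthly compute spend on notebook instances that are running but unused.

    Storage (EBS) is excluded, matching the estimate in the text.
    """
    return round(developers * hourly_rate * idle_hours_per_day * days, 2)


if __name__ == "__main__":
    # Article's scenario: 10 developers, ml.t3.medium at ~$0.05/hour, never stopped.
    print(idle_notebook_cost(10, 0.05, 24))  # 360.0
    # Hypothetical: instances left on only overnight (~14 idle hours per day).
    print(idle_notebook_cost(10, 0.05, 14))  # 210.0
```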

2. Inference Endpoints (The Silent Budget Killer):

Inference is where costs spiral. Unlike training (which ends), endpoints run 24/7.

- Instance: ml.g5.xlarge (NVIDIA A10G).

- Cost: ~$1.40/hour (us-east-1).

- Monthly Cost: ~$1,008 per instance.

- Redundancy: Production requires at least 2 instances for high availability. Total: ~$2,016/month per model.
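The endpoint math above fits in a small helper, using the review's us-east-1 figure of ~$1.40/hour for ml.g5.xlarge and a 720-hour month. Treat the rate as an input, since prices vary by region and change over time. The same function shows that hosting five models this way would cost roughly $10,000 a month on inference alone, before any training.

```python
HOURS_PER_MONTH = 720  # 30 days x 24 hours; endpoints bill whether or not they get traffic


def endpoint_monthly_cost(hourly_rate: float, instances: int = 2,
                          hours: float = HOURS_PER_MONTH) -> float:
    """Monthly cost of a real-time endpoint kept running with a fixed instance count."""
    return round(hourly_rate * instances * hours, 2)


if __name__ == "__main__":
    single = endpoint_monthly_cost(1.40, instances=1)  # one ml.g5.xlarge
    ha_pair = endpoint_monthly_cost(1.40)              # two instances for high availability
    fleet = 5 * ha_pair                                # five models, each on an HA pair
    print(single, ha_pair, fleet)
```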

3. Training & Spot Instances:

Managed Spot Training can offer up to a 90% discount compared to On-Demand rates. However, Spot instances can be preempted (interrupted) by AWS at any time. If your training checkpointing logic is not robust, you lose progress.
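Robust checkpointing mostly means two things: save state often enough that an interruption costs little, and resume from the latest checkpoint on restart. With Managed Spot Training, SageMaker syncs a local checkpoint directory (by default /opt/ml/checkpoints) with the S3 location set by checkpoint_s3_uri and restores it when the job restarts. The minimal sketch below covers only the training-script side. It uses a JSON file as a stand-in for real model and optimizer state and simulates the interruption with an exception; the file name state.json and the save interval are illustrative choices, not SageMaker conventions.

```python
import json
import os
import tempfile
from typing import Optional

STATE_FILE = "state.json"  # illustrative name; real jobs would save model/optimizer weights


def load_checkpoint(ckpt_dir: str) -> dict:
    """Return the last saved state, or a fresh state if no checkpoint exists."""
    path = os.path.join(ckpt_dir, STATE_FILE)
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0}


def save_checkpoint(ckpt_dir: str, state: dict) -> None:
    """Write atomically: temp file first, then rename over the old checkpoint,
    so an interruption mid-write never leaves a truncated file behind."""
    os.makedirs(ckpt_dir, exist_ok=True)
    fd, tmp_path = tempfile.mkstemp(dir=ckpt_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, os.path.join(ckpt_dir, STATE_FILE))


def train(ckpt_dir: str, total_steps: int, save_every: int = 10,
          interrupt_at: Optional[int] = None) -> dict:
    """Toy training loop that resumes from the latest checkpoint."""
    state = load_checkpoint(ckpt_dir)
    for step in range(state["step"], total_steps):
        if step == interrupt_at:
            raise InterruptedError(f"simulated spot interruption at step {step}")
        state = {"step": step + 1}  # stand-in for one optimizer step
        if state["step"] % save_every == 0:
            save_checkpoint(ckpt_dir, state)
    save_checkpoint(ckpt_dir, state)
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as ckpt_dir:
        try:
            train(ckpt_dir, total_steps=50, interrupt_at=25)
        except InterruptedError as err:
            print(err, "| resuming from step", load_checkpoint(ckpt_dir)["step"])
        print("finished at step", train(ckpt_dir, total_steps=50)["step"])
```

The steps between the last checkpoint (20) and the interruption (25) are recomputed on restart. The save interval controls that trade-off: more frequent saves mean less lost work but more I/O.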

Real-World Scenario:

A mid-sized startup training a custom LLM and hosting 5 models in production can easily see bills exceeding $25,000/month. According to AWS Pricing, data processing charges for features like Data Wrangler start at $0.14/node-hour; because billing is per node-hour, cost grows with job runtime and cluster size, and therefore roughly with data volume.

Amazon SageMaker Reviews: What Users Are Saying

We analyzed feedback from G2, Capterra, Gartner Peer Insights, PeerSpot, and developer forums to identify the consensus.

Overall Ratings

- G2: 4.2/5 (Based on enterprise adoption).

- Capterra: 4.5/5 (Skewed towards AWS-heavy shops).

Pros (What Users Love)

Users appreciate the "compliance-in-a-box" nature of the platform.

- Managed Infrastructure: "The ability to spin up a distributed training cluster without touching Kubernetes manifests is the primary reason we stay," notes a Senior ML Engineer on G2.

- Security: Seamless integration with IAM roles and VPC endpoints satisfies strict InfoSec requirements.

- Feature Store: Centralized feature management keeps training and inference on the same feature definitions, reducing training-serving skew.

Cons (Common Complaints)

Negative sentiment focuses on developer experience (DX) and billing opacity.

- Billing Shocks: A common review theme is "zombie resources." Users delete an endpoint but forget the attached EBS volumes or elastic inference accelerators, which continue to bill indefinitely.

- Debugging Complexity: "When a training job fails with an opaque 'AlgorithmError', debugging the underlying container logs in CloudWatch is painful compared to debugging a local container," mentions a user on PeerSpot.

- AWS Lock-in: Migrating a model trained and registered in SageMaker to a different cloud (e.g., GCP or on-prem) is technically difficult because its packaging, serving containers, and registry metadata follow SageMaker-specific conventions.
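A related and common source of zombie spend is an endpoint that is still InService but no longer receives traffic. The minimal sketch below flags such endpoints from an inventory you supply. In practice you might build that inventory with boto3 (the SageMaker client's list_endpoints call and the Invocations metric in the AWS/SageMaker CloudWatch namespace), but the Endpoint record, its field names, and the seven-day window here are hypothetical choices for illustration.

```python
from typing import Iterable, List, NamedTuple, Tuple

HOURS_PER_MONTH = 720


class Endpoint(NamedTuple):
    """Hypothetical inventory record; populate it from your own tooling."""
    name: str
    status: str          # SageMaker endpoint status, e.g. "InService"
    hourly_cost: float   # combined hourly price of the instances behind it
    invocations_7d: int  # requests served over the last seven days


def flag_zombie_endpoints(endpoints: Iterable[Endpoint]) -> Tuple[List[str], float]:
    """Return names of running endpoints with no recent traffic and their monthly cost."""
    zombies = [e for e in endpoints if e.status == "InService" and e.invocations_7d == 0]
    monthly_cost = sum(e.hourly_cost for e in zombies) * HOURS_PER_MONTH
    return sorted(e.name for e in zombies), round(monthly_cost, 2)


if __name__ == "__main__":
    inventory = [
        Endpoint("fraud-v1", "InService", 2.80, 0),  # superseded, never deleted
        Endpoint("fraud-v2", "InService", 2.80, 120_000),
        Endpoint("churn-poc", "InService", 1.40, 0),
        Endpoint("broken", "Failed", 0.0, 0),
    ]
    names, cost = flag_zombie_endpoints(inventory)
    print(names, cost)
```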

Note on "SageMaker Gateway": There is often confusion regarding this term. It refers to integrating Amazon API Gateway with SageMaker endpoints to expose models as public REST APIs. While powerful, it introduces another layer of latency and cost (API Gateway charges per million requests) that developers must manage.
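Because API Gateway bills per request, its cost scales with sustained traffic rather than instance count. The sketch below converts an average request rate into a monthly charge. The price per million requests is a parameter because it differs between REST and HTTP APIs, by region, and by volume tier; the $3.50 in the example is a placeholder to check against current AWS pricing, not a quoted rate. The estimate ignores tiered discounts and data transfer.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # 2,592,000 seconds in a 30-day month


def gateway_monthly_cost(avg_rps: float, price_per_million: float) -> float:
    """Flat-rate estimate of API Gateway request charges (no tiers, no data transfer)."""
    monthly_requests = avg_rps * SECONDS_PER_MONTH
    return round(monthly_requests / 1_000_000 * price_per_million, 2)


if __name__ == "__main__":
    # 50 requests/second on average is about 129.6 million requests a month.
    print(gateway_monthly_cost(avg_rps=50, price_per_million=3.50))  # placeholder price
```

That charge sits on top of the endpoint instances themselves, and every request also pays for the extra network hop.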

Is Amazon SageMaker Worth It?

The decision comes down to your organization's architectural philosophy and budget elasticity.

When SageMaker makes sense:

- AWS-Centricity: Your data resides in S3, your auth is IAM, and you have significant committed spend with AWS (for example, an Enterprise Discount Program, or EDP).

- Compliance: You need FedRAMP, HIPAA, or SOC 2 compliance immediately and lack the resources to build a compliant platform on raw Kubernetes.

- Traditional ML: Your primary focus is regression, classification, or XGBoost/scikit-learn workflows where SageMaker's pre-built containers excel.

When to consider alternatives:

- GenAI / LLM Focus: SageMaker was built for traditional ML. While it supports LLMs, the developer workflow feels retrofitted.

- Multi-Cloud Requirements: If you need to run inference on GCP (for TPU availability) or on-premise (for data sovereignty), SageMaker is a non-starter.

- Cost Control: You need to maximize GPU utilization and cannot afford the "managed service premium" (typically 20-30% over raw EC2).

- Kubernetes Control: You want standard Kubernetes deployments that can be debugged with kubectl, rather than proprietary APIs.
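A markup over raw EC2 does not translate one-to-one into savings. If a SageMaker instance costs (1 + p) times the equivalent EC2 instance, moving the same workload to raw EC2 saves p / (1 + p) of the bill: about 17% for a 20% premium and about 23% for a 30% premium. The sketch below applies the review's 20-30% range to the $2,016/month high-availability endpoint from the pricing section. It deliberately ignores the engineering effort of running the infrastructure yourself.

```python
def premium_share(sagemaker_monthly: float, premium: float) -> float:
    """Part of a SageMaker bill attributable to a markup `premium` over raw EC2.

    If sagemaker_cost = ec2_cost * (1 + premium), the markup is
    sagemaker_cost - sagemaker_cost / (1 + premium).
    """
    return round(sagemaker_monthly - sagemaker_monthly / (1 + premium), 2)


if __name__ == "__main__":
    bill = 2016.0  # two ml.g5.xlarge instances for a month, from the pricing section
    for p in (0.20, 0.30):
        saved = premium_share(bill, p)
        print(f"{p:.0%} premium: ${saved:,.2f}/month ({saved / bill:.1%} of the bill)")
```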

TrueFoundry: A Better Alternative to Amazon SageMaker

For teams finding SageMaker too rigid or expensive, TrueFoundry operates on a fundamentally different architecture. It is a Control Plane that sits on top of your own cloud account (AWS, GCP, Azure), rather than a black-box managed service.

This "Bring Your Own Cloud" (BYOC) approach allows TrueFoundry to orchestrate compute inside your VPC. You get the developer experience of a managed platform like Heroku, but the underlying unit economics of raw EC2/GKE/AKS instances.

Comparison: TrueFoundry vs. Amazon SageMaker

Architecture Comparison

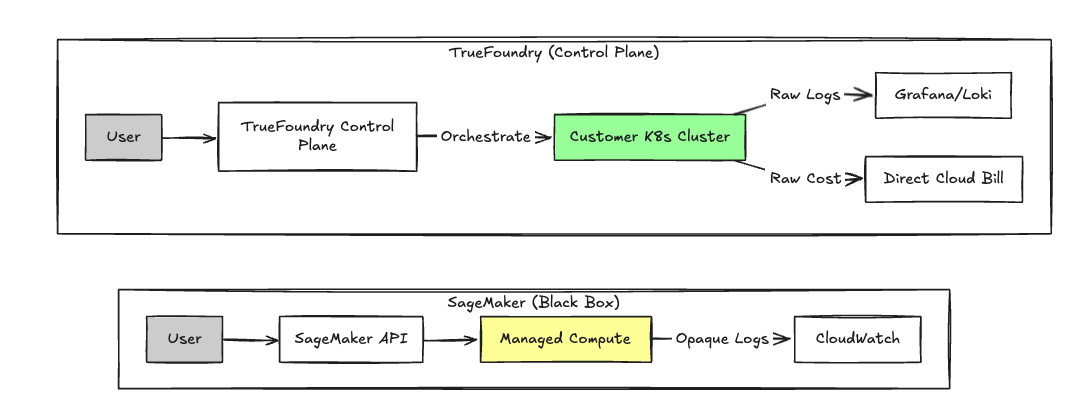

The critical difference is where the compute happens. In SageMaker, you rent the platform's compute. In TrueFoundry, the platform orchestrates your compute.

Fig 2. Architectural difference: Managed Service vs. Control Plane.

Case Study Relevance:

Engineering teams at companies like Whatfix and Udaan used TrueFoundry to reduce ML costs by 30-40%. By relying on TrueFoundry's spot-interruption handling and mixed-instance orchestration on their own Kubernetes clusters, they avoided the premium markup associated with managed SageMaker instances.

Final Verdict

Amazon SageMaker is a robust, enterprise-grade toolkit. If your organization is legally or technically bound to AWS and you have a dedicated DevOps team to manage the billing and configuration complexities, it is a safe, standard choice.

However, for teams building modern GenAI applications where GPU scarcity and unit economics are existential risks, the "AWS tax" is hard to justify.

TrueFoundry offers the logical evolution: the usability of a managed service with the economic and architectural freedom of owning your infrastructure. If you need to deploy LLMs across AWS and GCP to find the cheapest GPUs, or if you simply want a dashboard that speaks the language of developers rather than accountants, TrueFoundry is the superior architectural choice.

Book a Demo with TrueFoundry to see how you can cut your inference costs by 40% while regaining control of your infrastructure.

FAQs

How good is Amazon SageMaker?

SageMaker is technically mature and reliable for traditional ML. It excels in security and compliance but rates poorly on usability, debugging experience, and cost transparency compared to modern MLOps platforms.

Is SageMaker better than Databricks?

It depends on the data. Databricks (Unified Data Analytics Platform) is superior for Spark-heavy workloads and data engineering-led ML. SageMaker is generally preferred for pure deep learning and inference tasks where the data is already prepared in S3.

Is SageMaker widely used?

Yes, it has the largest market share among public cloud ML services simply due to AWS's dominance. However, market share is shifting as "cloud-agnostic" becomes a priority for GenAI stacks.

Is SageMaker a competitor of OpenAI?

No. OpenAI provides models as a service (API). SageMaker provides the infrastructure to train and host your own models (including open-source alternatives to OpenAI, like Llama 3 or Mistral).

Is SageMaker better than Azure ML?

They are functionally similar. Azure ML is generally considered to have a more intuitive UI and better integration with VS Code, while SageMaker offers more granular control over low-level infrastructure for advanced users.
