Vercel AI Review 2026: We Tested It So You Don’t Have To

If you browse Twitter or developer forums, Vercel AI appears to be the default mechanism for building Generative AI applications. Utilizing the vercel/ai SDK alongside Next.js allows engineering teams to transition from an empty directory to a streaming chatbot in minutes.

The developer experience (DX) is undeniably optimized for immediate gratification, abstracting away the complexities of stream parsing and UI state management. But does “easy to start” equate to “easy to scale”?

We rigorously tested Vercel AI beyond the prototype phase, subjecting it to agentic workflows, high-throughput RAG pipelines, and enterprise security compliance standards. This architectural review outlines where the platform excels, identifies specific operational boundaries, and explains why scaling teams often transition to dedicated orchestration platforms like TrueFoundry.

What Actually Is “Vercel AI”?

Vercel AI is frequently misunderstood because the marketing conflates client-side tooling with underlying infrastructure. From an architectural standpoint, Vercel AI is a composite of the open-source AI SDK and Vercel’s proprietary Edge and Serverless execution environments.

The SDK handles the abstraction layer, managing streaming protocols, backpressure, and provider switching across APIs like OpenAI or Anthropic. However, the runtime behavior is inextricably coupled to Vercel’s hosting model. When deployed, these API routes execute either as Edge Functions (lightweight V8 isolates) or Serverless Functions (ephemeral Node.js containers).

This distinction is critical because it dictates operational constraints. You are not running a persistent server; you are running event-driven, short-lived compute instances that have hard ceilings on execution duration and memory availability, regardless of the complexity of your LLM reasoning chain.

The “Happy Path”: Where Vercel AI Shines

Before analyzing limitations, it is necessary to validate where Vercel AI adds tangible engineering value. During hands-on testing, the platform demonstrated clear utility for specific architectural patterns.

- Frontend Velocity: Implementing streaming chat interfaces with the useChat hook significantly reduces boilerplate. In our internal tests, establishing a connection between a Next.js frontend and an OpenAI backend required fewer than 20 lines of code, with chunked response reconstruction handled automatically.

- Time-To-First-Byte (TTFB): Edge execution delivers low TTFB. Because Edge Functions run on V8 isolates that boot in milliseconds, they avoid the container cold start penalty associated with traditional serverless functions. This makes them ideal for lightweight, stateless inference tasks where low latency is the primary KPI.

- Next.js Integration: For teams already entrenched in the Next.js ecosystem, onboarding friction is effectively zero. The ai package integrates natively with the App Router, removing the need for separate API gateway configuration.
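The chunked response reconstruction mentioned in the first bullet can be sketched without the SDK. This is a minimal illustration, not the AI SDK's actual wire protocol: it assumes a hypothetical newline-delimited JSON format in which each line carries a `delta` string, and `createChunkAssembler` is a name invented for this example.

```typescript
// Hypothetical wire format (an assumption for illustration):
// one JSON object per line, each shaped like {"delta": "..."}.
interface DeltaEvent {
  delta: string;
}

function createChunkAssembler() {
  let buffer = ""; // holds an incomplete trailing line between chunks
  let text = ""; // the message reconstructed so far

  return {
    // Accepts a raw network chunk, which may end mid-line,
    // and returns the text reconstructed so far.
    push(chunk: string): string {
      buffer += chunk;
      const lines = buffer.split("\n");
      // The last element is either "" or an unfinished line; keep it for later.
      buffer = lines.pop() ?? "";
      for (const line of lines) {
        if (line.trim() === "") continue;
        const event = JSON.parse(line) as DeltaEvent;
        text += event.delta;
      }
      return text;
    },
  };
}

// Chunks deliberately split in the middle of a JSON line.
const assembler = createChunkAssembler();
assembler.push('{"delta":"Hel');
assembler.push('lo"}\n{"delta":" wor');
const finalText = assembler.push('ld"}\n');
console.log(finalText);
```

The point of the sketch is the buffering step: a chunk boundary can fall anywhere, so partial lines have to be held back until the newline arrives. This is the kind of bookkeeping the hook saves you from writing.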

The Stress Test: Where the Experience Hits Limits

When pushed beyond simple request-response cycles into complex reasoning tasks, Vercel AI exposes significant infrastructure constraints. The following limitations were documented during our benchmarking of agentic and RAG-heavy workloads.

The Timeout Ceiling for Agentic Workflows

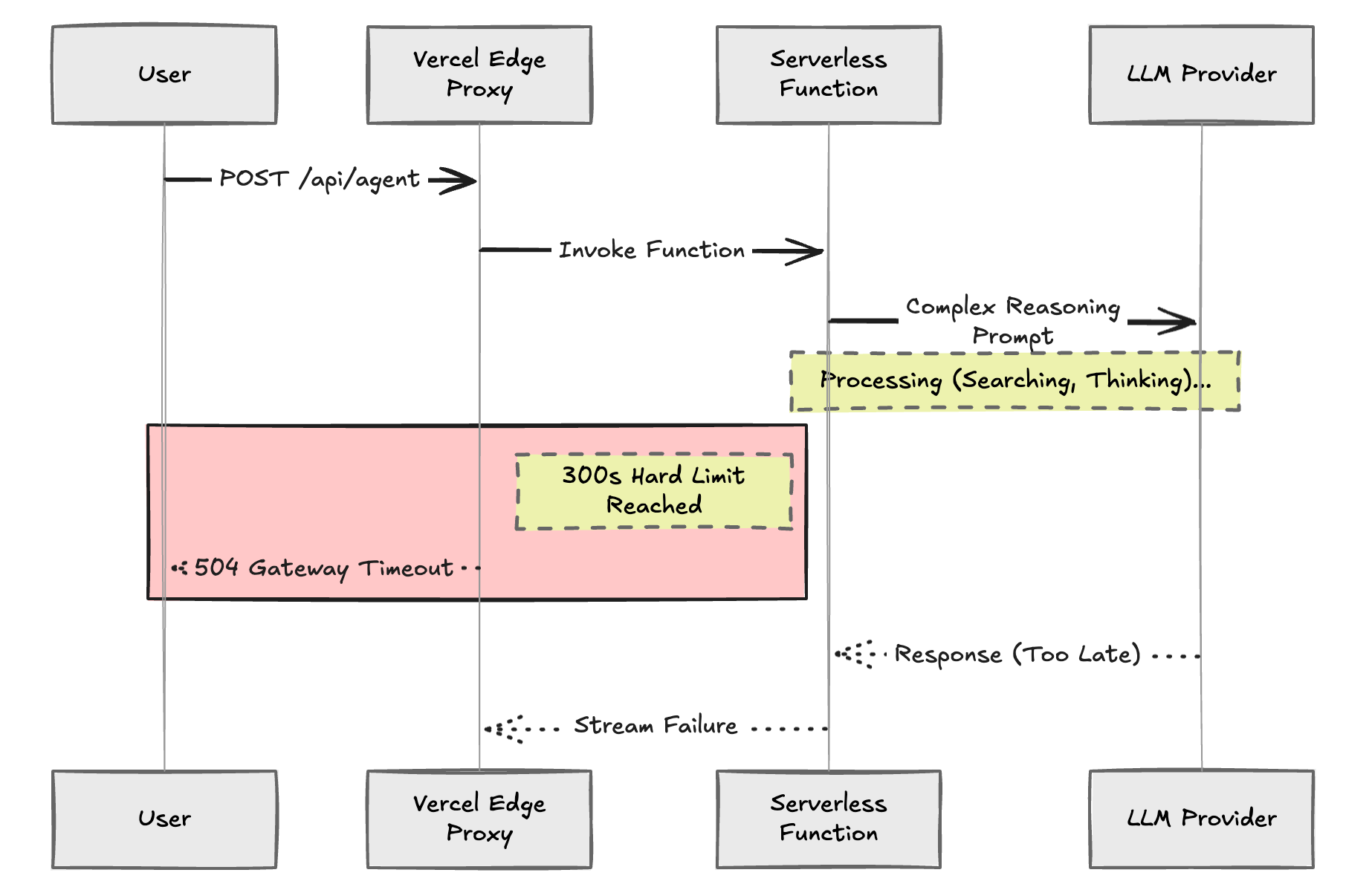

Running deep research agents or multi-step reasoning loops quickly exceeds the hard execution limits imposed by the platform.

- Hobby Plan: Serverless functions are strictly capped at 10 seconds.

- Pro Plan: The default timeout is 15 seconds, configurable up to a maximum of 300 seconds (5 minutes).

For an autonomous agent that needs to scrape a website, parse the DOM, query a vector database, and then generate a Chain-of-Thought response, this 5-minute window is often insufficient. In our testing, long-running agents consistently terminated with 504 Gateway Timeout errors once the hard limit was reached.
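To make the arithmetic concrete, here is a rough sketch of a pre-flight budget check for the kind of agent described above. The step durations are hypothetical estimates, not measurements from our tests, and `planWithinBudget` is an illustrative helper rather than part of any SDK.

```typescript
interface AgentStep {
  name: string;
  estimatedMs: number; // hypothetical estimate supplied by the caller
}

interface BudgetPlan {
  runInline: AgentStep[]; // steps that fit inside the function's time limit
  deferred: AgentStep[]; // steps that would need a background worker
  totalInlineMs: number;
}

// Splits an ordered list of steps at the first step that would exceed the budget.
// Order is preserved: once one step is deferred, every later step is deferred too.
function planWithinBudget(
  steps: AgentStep[],
  maxDurationMs: number,
  safetyMarginMs: number = 5000,
): BudgetPlan {
  const budget = maxDurationMs - safetyMarginMs;
  const runInline: AgentStep[] = [];
  const deferred: AgentStep[] = [];
  let total = 0;
  let overflowed = false;

  for (const step of steps) {
    if (!overflowed && total + step.estimatedMs <= budget) {
      runInline.push(step);
      total += step.estimatedMs;
    } else {
      overflowed = true;
      deferred.push(step);
    }
  }
  return { runInline, deferred, totalInlineMs: total };
}

// Hypothetical durations for the four steps named in the text.
const researchAgent: AgentStep[] = [
  { name: "scrape website", estimatedMs: 90000 },
  { name: "parse DOM", estimatedMs: 20000 },
  { name: "query vector database", estimatedMs: 5000 },
  { name: "generate chain-of-thought response", estimatedMs: 240000 },
];

// 300000 ms is the 5-minute Pro ceiling cited above.
const plan = planWithinBudget(researchAgent, 300000);
console.log(plan.deferred.map((s) => s.name));
```

With these assumed numbers the first three steps fit and the generation step does not. The workload then has to be split across invocations or moved off the request path.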

Edge Functions are even more restrictive, enforcing a strict limit on the time between the request and the first byte of the response. If your agent requires extensive "thinking time" before streaming the first token, the connection is severed by the platform's proxy layer.

Source: Vercel Functions Limitations Documentation

Fig 1: The Serverless Timeout Wall

Cold Starts on Heavy Workloads

While Edge Functions are fast, they lack full Node.js compatibility, forcing teams to use standard Serverless Functions for operations involving heavy dependencies or database connections. Loading large prompt templates, building validation schemas (for example with Zod), or establishing TLS connections to an external Vector Database (e.g., Pinecone or Weaviate) introduces significant latency during initialization.

Our benchmarks indicated that Serverless Functions connecting to an AWS RDS instance experienced cold starts ranging from 800ms to 2.5 seconds. Unlike persistent servers that maintain connection pools, serverless functions must frequently re-establish TCP/TLS handshakes on new invocations. This adds perceptible latency to the user experience.
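A common mitigation is to cache the client at module scope so that warm invocations reuse it. The sketch below simulates the pattern with a stand-in `connect` function; `DbClient`, `connect`, and `handler` are hypothetical names, and no real database driver is involved.

```typescript
interface DbClient {
  id: number;
  query(sql: string): string;
}

// Counts how many times the (simulated) expensive TCP/TLS handshake happens.
let connectCount = 0;

// Stand-in for a real driver's connect call (an assumption for illustration).
function connect(): DbClient {
  connectCount += 1;
  const id = connectCount;
  return {
    id,
    query: (sql: string): string => `client ${id} ran: ${sql}`,
  };
}

// Module-scope cache: survives across invocations on the same warm instance,
// but is lost whenever the platform starts a fresh instance (a cold start).
let cachedClient: DbClient | undefined;

function getClient(): DbClient {
  if (!cachedClient) {
    cachedClient = connect();
  }
  return cachedClient;
}

// Simulated function handler: every invocation goes through getClient().
function handler(sql: string): string {
  return getClient().query(sql);
}

handler("SELECT 1");
handler("SELECT 2");
console.log(`handshakes performed: ${connectCount}`);
```

This only helps warm invocations. Each new instance still pays the full handshake once, which is why the latency shows up most under bursty traffic.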

Architectural Dependency on Edge Middleware

Vercel Edge Middleware utilizes a proprietary runtime environment (EdgeRuntime) rather than the standard Node.js runtime. While it adheres to web standards like fetch, it lacks support for native Node APIs such as fs, net, or C++ addons.

Consequently, routing logic or custom middleware developed specifically for Vercel’s Edge is not easily portable. Migrating this logic to a standard containerized environment (Docker) or a different cloud provider (AWS Lambda) often requires a rewrite of the gateway layer. This creates an architectural dependency where the cost of exiting the platform grows with the amount and complexity of the middleware logic implemented.

Vercel AI Gateway Features Review

Vercel AI Gateway is frequently positioned as a comprehensive traffic management solution. We evaluated its capabilities against the requirements of a production-grade API Gateway.

Caching Capabilities

Vercel’s caching strategy relies primarily on HTTP Cache-Control headers and URL-based keys. This approach is insufficient for LLM workloads where distinct prompts may be semantically equivalent (e.g., "What is the price?" vs "Price check"). True semantic caching requires embedding the prompt and performing a vector similarity search to find cached responses.

Implementing this on Vercel requires manual engineering: you must deploy a separate Vercel KV (Redis) instance and write custom logic to handle vector comparisons. Advanced caching strategies are not out-of-the-box features but rather custom implementations built on top of paid primitives like Vercel KV.
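The custom logic involved looks roughly like the sketch below. It assumes prompts have already been converted to embedding vectors by some embedding model (not shown), uses a linear scan instead of a real vector index, and picks 0.95 as an arbitrary similarity threshold. `SemanticCache` is an illustrative class, not a Vercel or TrueFoundry API.

```typescript
type Vector = number[];

// Cosine similarity between two embeddings of equal dimension.
function cosineSimilarity(a: Vector, b: Vector): number {
  if (a.length !== b.length) {
    throw new Error("Embedding dimensions must match");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // a zero vector matches nothing
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

class SemanticCache {
  private entries: Array<{ embedding: Vector; response: string }> = [];
  private threshold: number;

  constructor(threshold: number) {
    this.threshold = threshold;
  }

  set(embedding: Vector, response: string): void {
    this.entries.push({ embedding, response });
  }

  // Returns the most similar cached response at or above the threshold, if any.
  get(embedding: Vector): string | undefined {
    let best: { score: number; response: string } | undefined;
    for (const entry of this.entries) {
      const score = cosineSimilarity(embedding, entry.embedding);
      if (score >= this.threshold && (!best || score > best.score)) {
        best = { score, response: entry.response };
      }
    }
    return best ? best.response : undefined;
  }
}

// Hand-made 2-D vectors stand in for real embeddings of
// "What is the price?" and "Price check".
const semanticCache = new SemanticCache(0.95);
semanticCache.set([1, 0], "The price is listed on the pricing page.");
console.log(semanticCache.get([0.9, 0.1]));
```

A URL-keyed HTTP cache would treat the two prompts as unrelated requests. The similarity lookup is what lets the second phrasing reuse the first answer, at the cost of an embedding call and a threshold you have to tune.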

Observability and Metrics

The Vercel dashboard is optimized for web vitals (LCP, INP) rather than AI-specific metrics. By default, there is no visibility into token throughput, cost per user, or LLM latency breakdown.

To obtain these insights, engineering teams must instrument third-party observability platforms such as Helicone or Langfuse. While the SDK supports these integrations, they represent additional distinct vendors to manage and pay for, rather than a native capability of the gateway itself.
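For a sense of what those missing metrics involve, here is a minimal aggregation sketch. The record shape, the `summarizeByUser` helper, and the per-million-token prices are all assumptions for illustration; real prices vary by provider and model.

```typescript
interface LlmCallRecord {
  userId: string;
  promptTokens: number;
  completionTokens: number;
  latencyMs: number;
}

// Hypothetical pricing, in USD per one million tokens.
interface Pricing {
  inputPerMillionUsd: number;
  outputPerMillionUsd: number;
}

interface UserSummary {
  calls: number;
  totalTokens: number;
  costUsd: number;
  avgLatencyMs: number;
}

interface Totals {
  calls: number;
  promptTokens: number;
  completionTokens: number;
  latencyMs: number;
}

// Rolls per-call records up into per-user token, cost, and latency figures.
function summarizeByUser(
  records: LlmCallRecord[],
  pricing: Pricing,
): Map<string, UserSummary> {
  const totals = new Map<string, Totals>();
  for (const r of records) {
    const t = totals.get(r.userId) ?? {
      calls: 0,
      promptTokens: 0,
      completionTokens: 0,
      latencyMs: 0,
    };
    t.calls += 1;
    t.promptTokens += r.promptTokens;
    t.completionTokens += r.completionTokens;
    t.latencyMs += r.latencyMs;
    totals.set(r.userId, t);
  }

  const summary = new Map<string, UserSummary>();
  totals.forEach((t, userId) => {
    const costUsd =
      (t.promptTokens * pricing.inputPerMillionUsd +
        t.completionTokens * pricing.outputPerMillionUsd) /
      1_000_000;
    summary.set(userId, {
      calls: t.calls,
      totalTokens: t.promptTokens + t.completionTokens,
      costUsd,
      avgLatencyMs: t.latencyMs / t.calls,
    });
  });
  return summary;
}

const usageSummary = summarizeByUser(
  [
    { userId: "alice", promptTokens: 1000, completionTokens: 500, latencyMs: 200 },
    { userId: "alice", promptTokens: 2000, completionTokens: 1000, latencyMs: 400 },
    { userId: "bob", promptTokens: 100, completionTokens: 50, latencyMs: 150 },
  ],
  { inputPerMillionUsd: 1, outputPerMillionUsd: 2 },
);
console.log(usageSummary.get("alice"));
```

The aggregation itself is simple. The operational cost lies in capturing a record for every call and storing it somewhere queryable, which is the part the third-party platforms provide.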

Why is TrueFoundry a Better Production-Grade Alternative?

TrueFoundry is designed to address the infrastructure limitations inherent in serverless architectures. This section details how it facilitates production-grade AI deployment.

Async Workers for Agents

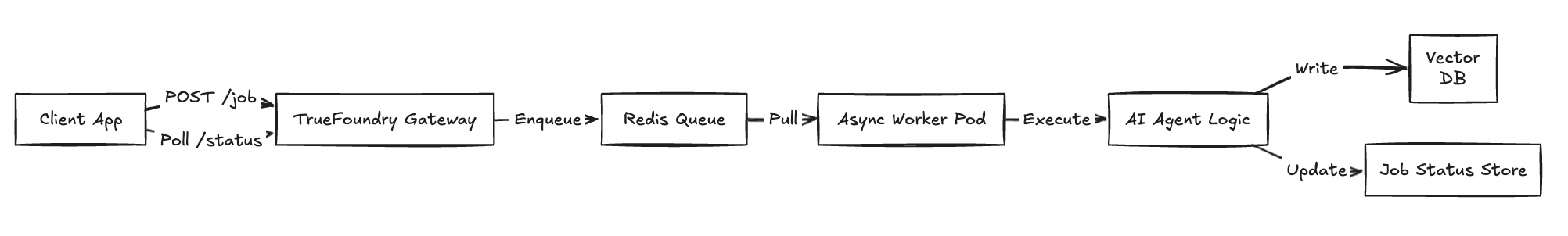

TrueFoundry decouples the execution of long-running tasks from the HTTP request/response cycle. It supports asynchronous job runners that operate without the hard execution time limits found in serverless environments.

This architecture allows agents to perform extensive tasks, such as scraping hundreds of pages or processing large datasets, over periods spanning minutes or hours. By running them as Kubernetes Jobs or background workers, the system avoids the 504 timeouts that serverless execution limits cause. The client receives a job ID immediately, and the work is processed reliably in the background through a queue-based architecture.

Fig 2: TrueFoundry Async Architecture
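The job-ID pattern can be illustrated with a small in-memory sketch. This is not TrueFoundry's API: `InMemoryJobQueue` is a hypothetical class, tasks are synchronous for simplicity, and a real deployment would use a durable queue with workers in separate processes.

```typescript
type JobStatus = "queued" | "done" | "failed";

interface Job<T> {
  id: string;
  status: JobStatus;
  result?: T;
  error?: string;
}

class InMemoryJobQueue<T> {
  private jobs = new Map<string, Job<T>>();
  private pending: Array<{ id: string; task: () => T }> = [];
  private nextId = 1;

  // Called from the HTTP handler: records the job and returns immediately.
  submit(task: () => T): string {
    const id = `job-${this.nextId}`;
    this.nextId += 1;
    this.jobs.set(id, { id, status: "queued" });
    this.pending.push({ id, task });
    return id;
  }

  // Called from a worker loop: runs the oldest queued job, if there is one.
  runNext(): boolean {
    const next = this.pending.shift();
    if (!next) return false;
    try {
      const result = next.task();
      this.jobs.set(next.id, { id: next.id, status: "done", result });
    } catch (err) {
      const message = err instanceof Error ? err.message : String(err);
      this.jobs.set(next.id, { id: next.id, status: "failed", error: message });
    }
    return true;
  }

  // Called when the client polls for progress using its job ID.
  status(id: string): Job<T> | undefined {
    return this.jobs.get(id);
  }
}

const queue = new InMemoryJobQueue<number>();
const jobId = queue.submit(() => 200 * 3); // stand-in for a long scrape
const statusBefore = queue.status(jobId)?.status;
queue.runNext();
const statusAfter = queue.status(jobId);
console.log(jobId, statusBefore, statusAfter);
```

Because `submit` returns before any work runs, the HTTP response time is independent of how long the task takes. That separation is what removes the function timeout from the picture.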

Private Networking and VPC Deployment

Security requirements in enterprise environments often mandate that data does not traverse public networks. TrueFoundry deploys AI gateways directly inside your own cloud VPC (AWS, GCP, or Azure).

This configuration ensures that connections between your inference services and your data stores (like RDS or private vector indexes) are routed over internal, low-latency private networks (e.g., AWS PrivateLink). Sensitive payloads are processed within your security perimeter, mitigating data exfiltration risks associated with multi-tenant edge networks.

Cost Control with Spot Instances

Vercel charges a premium for the convenience of serverless execution (billing based on GB-hours). In contrast, TrueFoundry orchestrates workloads on raw cloud compute, enabling the utilization of Spot Instances (AWS) or Preemptible VMs (GCP).

By leveraging Spot fleets for interruptible inference workloads, teams can reduce compute costs by approximately 60% compared to on-demand pricing. Furthermore, TrueFoundry manages the lifecycle of these instances, handling interruptions gracefully to maintain service availability.
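As a worked example of that figure, the sketch below applies a 60% discount to a fleet. The hourly rate, instance count, and 730-hour month are hypothetical inputs chosen for round numbers, not quoted prices, and `monthlyComputeCostUsd` is an illustrative helper.

```typescript
// Monthly compute cost in USD, rounded to cents.
// discount is a fraction between 0 and 1 (0.6 means 60% off on-demand).
function monthlyComputeCostUsd(
  hourlyOnDemandUsd: number,
  instances: number,
  hoursPerMonth: number,
  discount: number = 0,
): number {
  if (discount < 0 || discount > 1) {
    throw new Error("discount must be between 0 and 1");
  }
  const raw = hourlyOnDemandUsd * instances * hoursPerMonth * (1 - discount);
  return Math.round(raw * 100) / 100;
}

// Hypothetical fleet: 4 instances at 1.00 USD/hour, running a 730-hour month.
const onDemandCost = monthlyComputeCostUsd(1.0, 4, 730);
const spotCost = monthlyComputeCostUsd(1.0, 4, 730, 0.6);
console.log({ onDemandCost, spotCost, saved: onDemandCost - spotCost });
```

Actual Spot discounts fluctuate by instance type, region, and time, so treat the 0.6 as a planning input rather than a guarantee.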

Source: AWS Spot Instance Pricing

Comparing Vercel AI vs TrueFoundry

The following table contrasts the operational characteristics of both platforms for production workloads.

When to Eject from Vercel and Move to TrueFoundry?

Vercel is an optimal choice for frontend development and rapid AI prototyping. However, production-grade AI workloads often necessitate greater control over cost and infrastructure than the serverless model permits.

TrueFoundry provides a purpose-built platform for executing AI backends at scale, eliminating timeouts, opaque billing structures, and platform-specific runtime dependencies.

If your team is seeking to simplify AI infrastructure while reducing operational overhead, connect with the TrueFoundry team to evaluate how the platform can support your specific production requirements.

FAQs

Is Vercel AI safe?

Vercel AI uses standard encryption for data in transit and at rest. However, as a multi-tenant SaaS platform, it may not meet the strict data residency or isolation requirements (single-tenant VPC) mandated by highly regulated industries, which a self-hosted deployment on TrueFoundry is designed to satisfy.

Is Vercel trustworthy?

Yes, Vercel is a reputable Series D technology company that hosts major web properties. Concerns regarding "trust" typically refer to "platform risk"—the strategic risk of building on a proprietary ecosystem—rather than security or business integrity issues.

What are the disadvantages of Vercel?

The primary technical disadvantages are the strict execution timeouts (maximum 5 minutes), the 4.5MB request body limit, the inability to attach GPUs for custom model hosting, and the potential for complex scaling costs.

How much does Vercel AI cost?

The Vercel AI SDK is open source. Infrastructure costs are tied to the Vercel hosting plan: Pro starts at $20/user/month, but usage-based charges for Function Duration and Data Transfer apply. High-volume AI apps can see rapid cost escalation due to these usage meters.

When not to use Vercel?

Avoid Vercel if your application requires long-running autonomous agents (>5 mins), processing of large binary files (>4.5MB), hosting of custom open-source models on GPUs, or strict private networking (VPC) isolation.
