What Is an AI Gateway? Core Concepts and Guide

Introduction

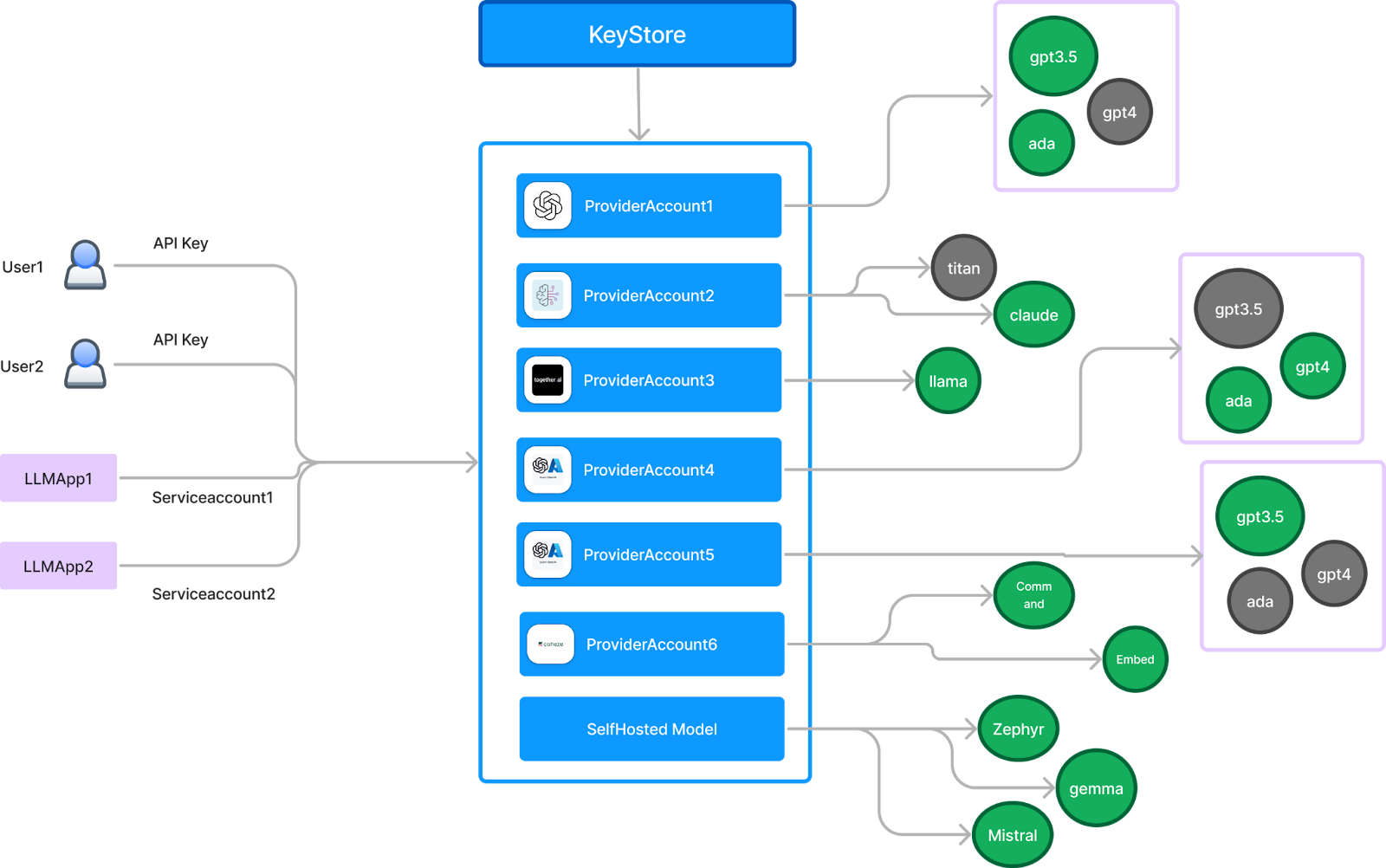

As organizations adopt LLMs across workflows, managing access, performance, cost, and compliance becomes a critical challenge. AI Gateways address this by acting as a centralized control plane for all LLM usage, standardizing how teams query, monitor, and scale models in production. They unify multiple providers (such as OpenAI, Anthropic, Mistral, and open-source LLMs) under a single API, enforce authentication policies, track usage, and enable cost attribution. TrueFoundry’s AI Gateway is one such enterprise-grade solution for modern GenAI applications. It offers observability, rate limiting, prompt versioning, and more, helping businesses deploy AI reliably, securely, and at scale.

What is an AI Gateway?

An AI Gateway is an abstraction layer that unifies access to multiple Large Language Models (LLMs) through a single API interface. It provides a consistent, secure, and optimized way to interact with models across providers such as OpenAI, Anthropic, Cohere, Together.ai, or open-source models like Mistral and LLaMA 2 deployed on your own infrastructure.

At its core, an AI Gateway handles the heavy lifting of integrating, routing, authenticating, and monitoring LLM usage across different endpoints. Instead of dealing with multiple SDKs, authentication tokens, rate limits, and pricing models, teams can route all model requests through the Gateway. This streamlines development and enables governance at scale.

TrueFoundry’s AI Gateway is built for enterprise-grade performance and observability. It allows teams to:

- Route requests to the best model based on latency, cost, or use case

- Automatically retry failed calls and cache responses to save costs

- Define per-user or per-team rate limits and quotas

- Track usage metrics, latencies, and cost at granular levels

- Enforce fine-grained access control through API keys or tokens

- Version prompts for consistent and reproducible outputs

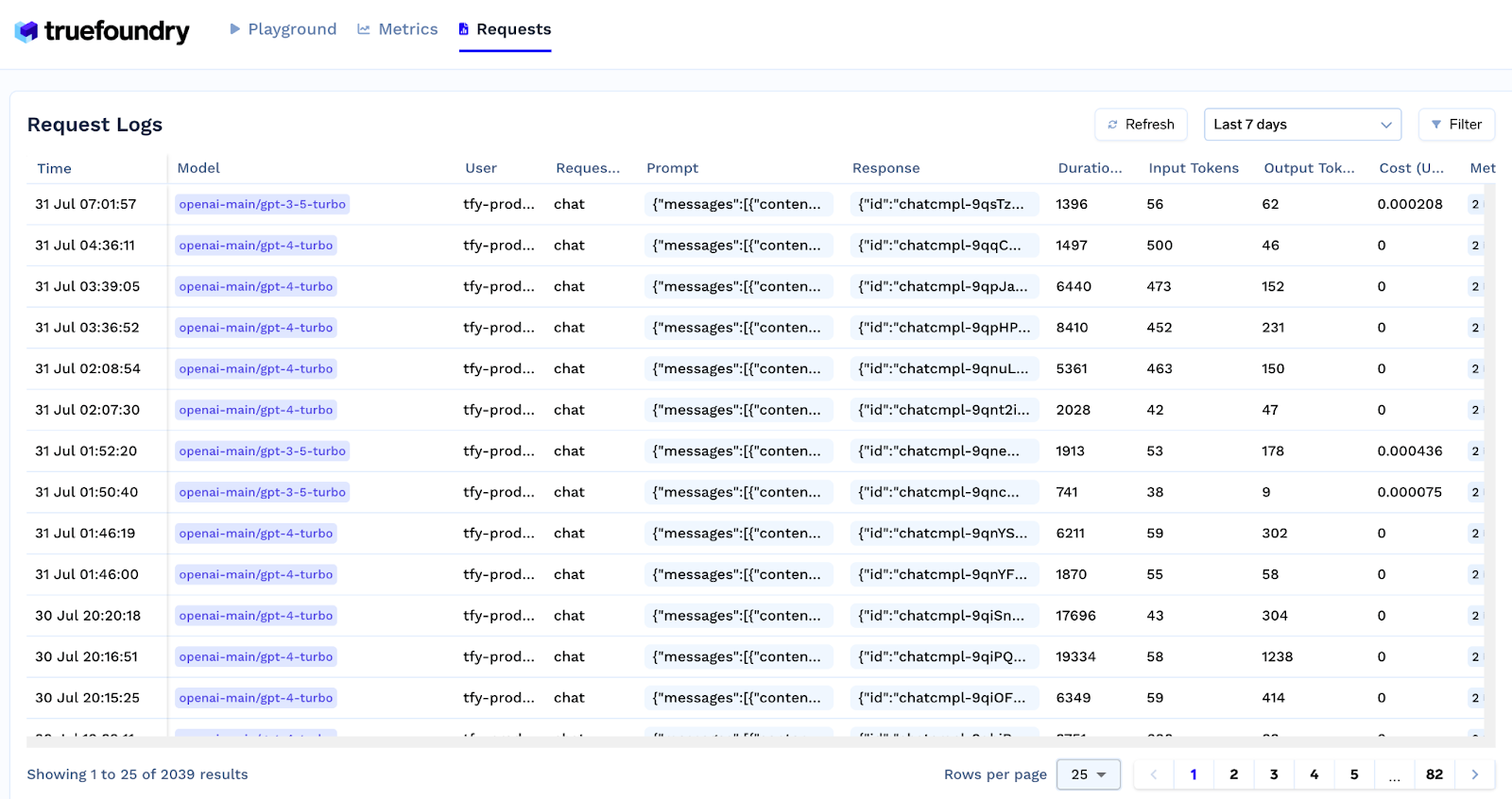

- Capture and monitor input/output data for debugging and improvement

In addition, the Gateway supports streaming and non-streaming modes, tool calling (function calling), prompt templating, and tagging for team-level cost breakdowns. With built-in observability, TrueFoundry enables tracking of not just latency and token usage but also user-specific access, traffic trends, and per-endpoint performance.

As LLM usage grows across teams, use cases, and environments, an AI Gateway becomes the foundation for operationalizing generative AI in production. It provides control, visibility, and optimization across the entire lifecycle of LLM interactions.

Key Features of AI Gateway

An AI Gateway brings a structured and scalable approach to managing LLM usage across teams and environments. Below are the key features that make it essential for modern GenAI workflows:

Unified Access: AI Gateways offer a single API interface to access multiple LLMs across vendors like OpenAI, Anthropic, or in-house models. This eliminates the need to manage individual APIs, SDKs, or keys for each provider.

Authentication and Authorization: AI Gateways enforce secure access through centralized key management. Developers receive scoped API keys, while provider root keys stay protected in secret managers such as AWS SSM, Google Secret Manager, or Azure Key Vault.

Role-Based Access Control (RBAC): Ensures that only authorized users can access specific models or actions, aligning with enterprise security standards.

Performance Monitoring: Track latency, error rates, and token throughput for each model endpoint. This helps detect issues early, optimize routing, and maintain SLAs.

Usage Analytics: Detailed logs and dashboards show who used which model, when, and how, offering transparency across projects and enabling cost attribution per user, team, or feature.

Cost Management: Gateways track token-level usage and associate costs with users, teams, or endpoints. This provides clear visibility into spend patterns and helps prevent cost overruns.

API Integrations: Support for external APIs and tools such as evaluation pipelines, prompt guardrails, or vector databases enables seamless integration with broader AI/ML ecosystems.

Custom Model Support: Users can bring their own fine-tuned or proprietary models into the Gateway, routing traffic alongside commercial models.

Caching: Store and reuse responses to identical or semantically similar prompts to save tokens and reduce latency.

Routing and Fallbacks: Intelligent request routing based on latency, cost, or reliability. Includes fallback mechanisms and auto-retries to improve resiliency.

Rate Limiting and Load Balancing: Supports user-level quotas, rate limiting, and load balancing across model providers for optimal throughput and stability.
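Per-user quotas are commonly enforced with a token bucket. Each user gets a bucket that refills at a fixed rate, and a request is admitted only if a token is available. The following is a minimal in-memory sketch with assumed parameters (`capacity`, `refill_per_sec`). It is not TrueFoundry's implementation. A gateway running several replicas would keep this state in a shared store so every replica enforces the same limit.

```python
from __future__ import annotations

import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `refill_per_sec`."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.tokens = capacity          # a new bucket starts full
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0          # spend one token for this request
            return True
        return False                    # a gateway would typically reject with HTTP 429


class PerUserRateLimiter:
    """One independent bucket per user or team ID (in-memory, single process)."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self._buckets: dict[str, TokenBucket] = {}

    def allow(self, user_id: str) -> bool:
        if user_id not in self._buckets:
            self._buckets[user_id] = TokenBucket(self.capacity, self.refill_per_sec, self.clock)
        return self._buckets[user_id].allow()
```

Suppose the limiter is built with `capacity=2`, `refill_per_sec=1.0`, and a fake clock such as `clock=lambda: t[0]`. It admits two immediate requests from one user, rejects the third, and admits another after one simulated second. Injecting the clock keeps the logic deterministic and easy to test.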

How to Evaluate an AI Gateway

Evaluating an AI Gateway requires a comprehensive assessment of its capabilities across access control, model integration, observability, and cost governance. A robust AI Gateway should simplify model usage while ensuring scalability, performance, and security for production-grade applications.

Authentication and Authorization

A strong AI Gateway centralizes API key management by issuing individual keys to each user or service while safeguarding root keys in secret managers such as AWS SSM, Google Secret Manager, or Azure Key Vault.

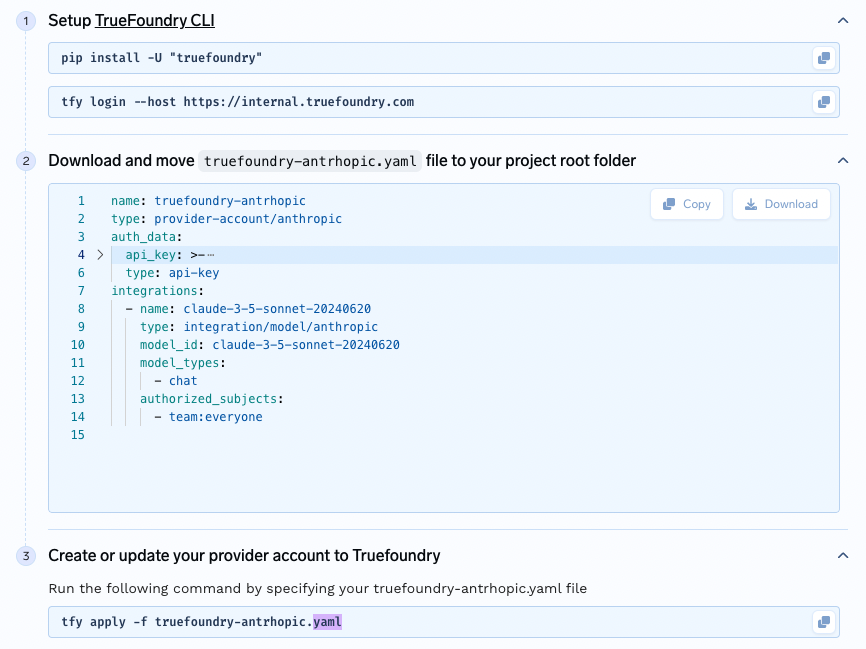

TrueFoundry’s Gateway allows administrators to manage fine-grained access to all integrated models, whether self-hosted or third-party, via a unified admin interface. Access control configurations are tracked in versioned YAML files, ensuring auditability and compliance.

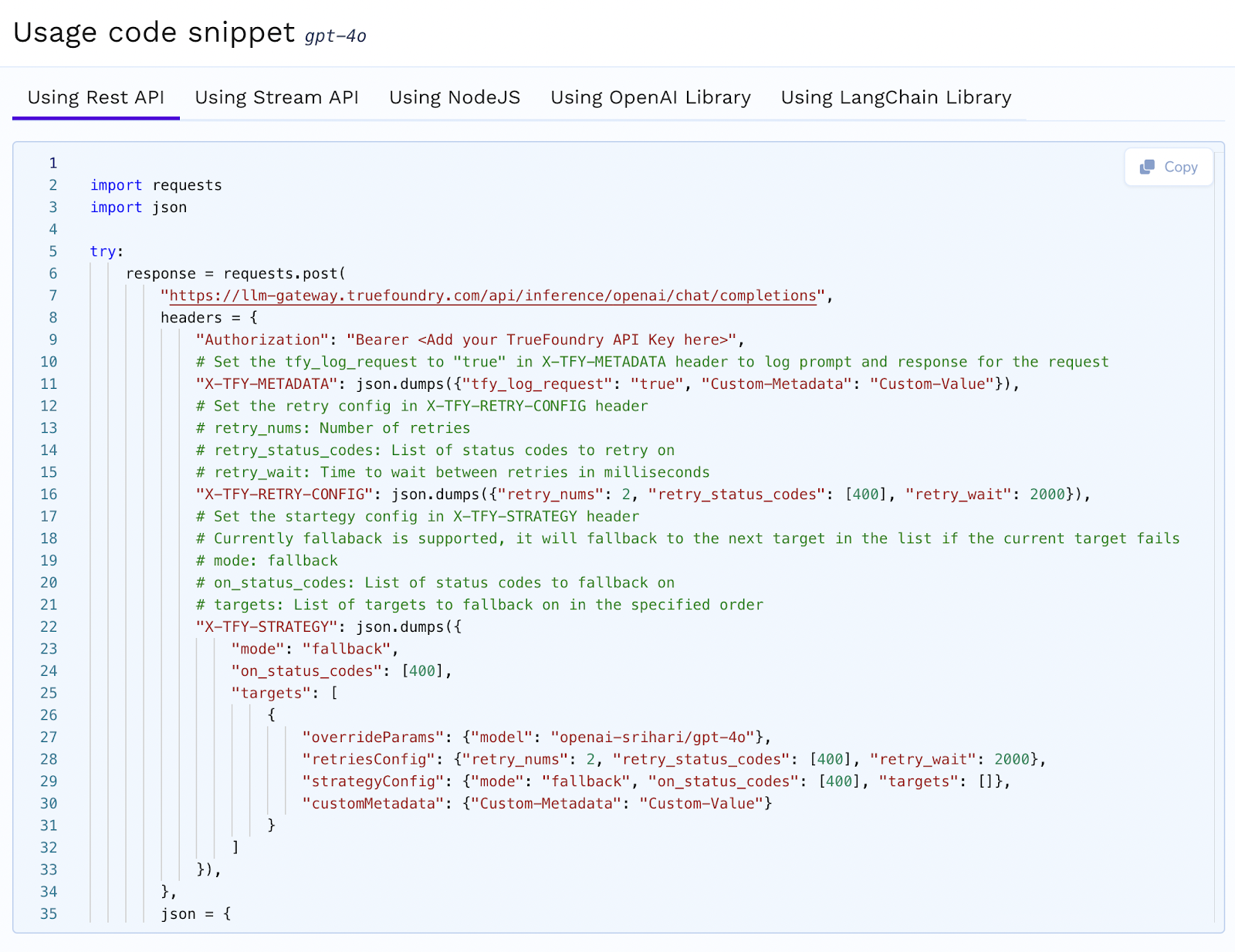
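Scoped keys can be pictured as a lookup from each issued key to the models its owner may call. Only the gateway knows the provider's root credential. This is a minimal sketch, and several of its parts are hypothetical:

- the key strings, team names, and `provider/model` identifiers;
- the `ISSUED_KEYS` and `ROOT_KEYS` tables.

A real deployment would not hard-code these values. It would load root credentials from a secret manager and scopes from the gateway's access-control configuration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ScopedKey:
    owner: str                         # user or team the key was issued to
    allowed_models: frozenset[str]     # models this key may call


# Hypothetical keys handed to developers; the root keys below never leave the gateway.
ISSUED_KEYS = {
    "sk-team-marketing-123": ScopedKey("marketing", frozenset({"openai/gpt-4o-mini"})),
    "sk-team-support-456": ScopedKey(
        "support", frozenset({"openai/gpt-4o-mini", "anthropic/claude-3-haiku"})
    ),
}

# Hypothetical provider root keys; in practice fetched from a secret manager.
ROOT_KEYS = {"openai": "<root-openai-key>", "anthropic": "<root-anthropic-key>"}


class AccessDenied(Exception):
    """Raised when a key is unknown or not scoped to the requested model."""


def authorize(api_key: str, model: str) -> tuple[str, str]:
    """Return (owner, provider root key) if `api_key` may call `model`."""
    scoped = ISSUED_KEYS.get(api_key)
    if scoped is None:
        raise AccessDenied("unknown API key")
    if model not in scoped.allowed_models:
        raise AccessDenied(f"{scoped.owner} may not call {model}")
    provider = model.split("/", 1)[0]
    return scoped.owner, ROOT_KEYS[provider]
```

Developers only ever hold their scoped key. The gateway resolves the root key internally, so revoking a team's access means removing or editing one entry rather than rotating a provider credential.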

Unified API and Code Generation

The AI Gateway should offer a standardized interface for interacting with multiple models. TrueFoundry follows the OpenAI request-response format, making it compatible with the OpenAI SDKs and LangChain. Developers can switch between models without rewriting their integration code, typically by changing only the model name in the request. TrueFoundry also provides auto-generated code snippets for different providers and programming languages, simplifying integration.

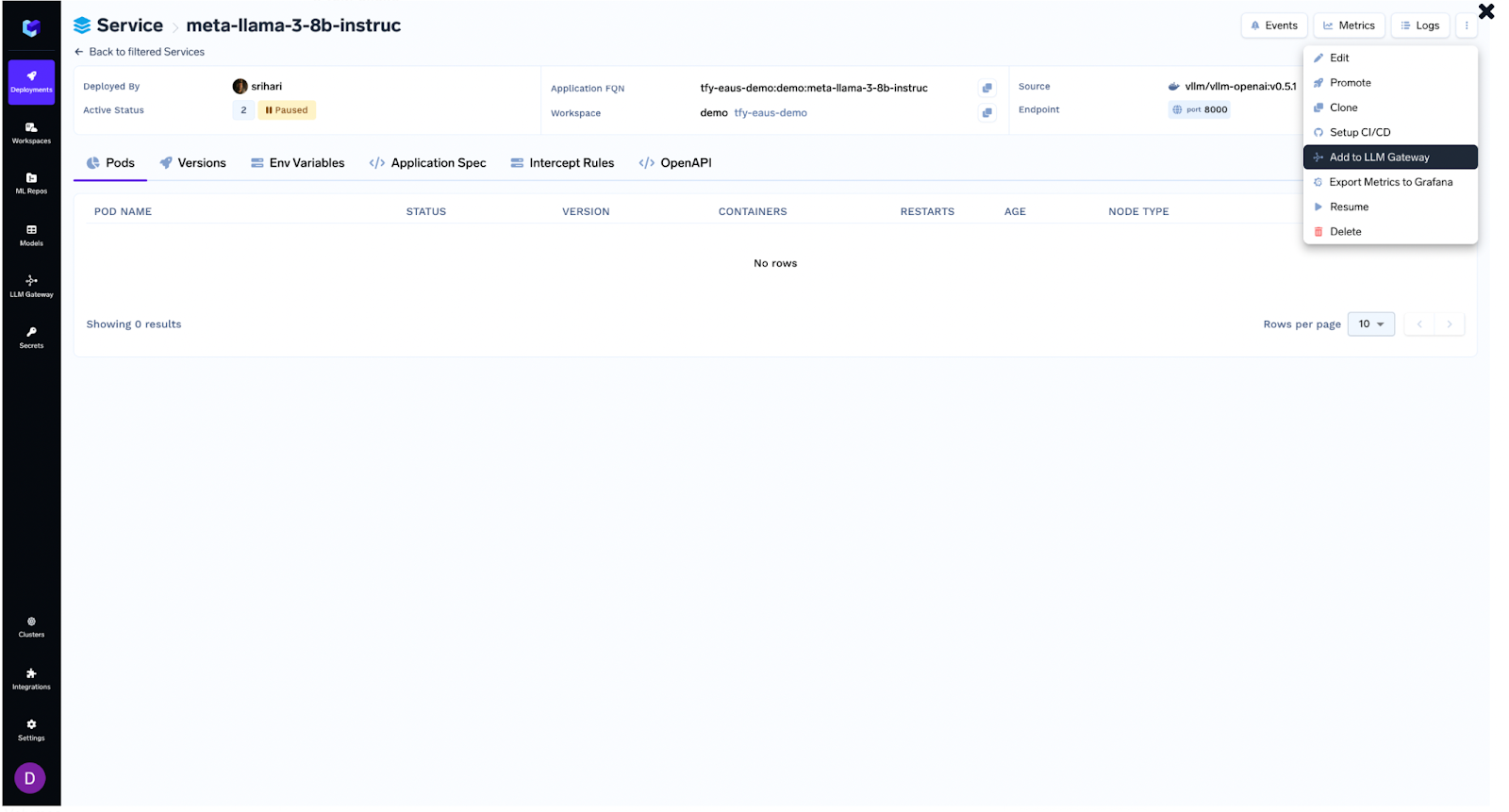
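Because requests follow the OpenAI format, switching models can come down to changing the `model` field. The sketch below builds an OpenAI-style chat-completions body and routes it with a toy dispatcher keyed on a `provider/model` prefix. The prefix convention is an assumption for illustration only, and so are the `call_openai` and `call_anthropic` adapters. The adapters return canned strings instead of calling real provider APIs.

```python
from __future__ import annotations

from typing import Callable


def chat_request(model: str, prompt: str) -> dict:
    """Build an OpenAI-style chat-completions request body."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


# Hypothetical adapters; real ones would translate to each provider's native API.
def call_openai(body: dict) -> str:
    return f"[openai:{body['model']}] reply"


def call_anthropic(body: dict) -> str:
    return f"[anthropic:{body['model']}] reply"


ADAPTERS: dict[str, Callable[[dict], str]] = {
    "openai": call_openai,
    "anthropic": call_anthropic,
}


def dispatch(body: dict) -> str:
    """Route a request to the adapter named by the model's provider prefix."""
    provider, _, model_name = body["model"].partition("/")
    adapter = ADAPTERS.get(provider)
    if adapter is None:
        raise ValueError(f"no adapter for provider {provider!r}")
    # Strip the prefix so the adapter sees the provider's own model name.
    return adapter({**body, "model": model_name})
```

`dispatch(chat_request("openai/gpt-4o-mini", "Hi"))` returns `"[openai:gpt-4o-mini] reply"`. Changing only the model string to `"anthropic/claude-3-haiku"` sends the same body to the other adapter.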

Model Selection

TrueFoundry supports three key routes for model access: third-party providers (such as OpenAI, Cohere, AWS Bedrock, and Anthropic), self-hosted open-source models (deployed via Hugging Face or custom infrastructure), and TrueFoundry-hosted models shared across clients. This flexibility enables teams to mix and match models based on use case, budget, or latency requirements.

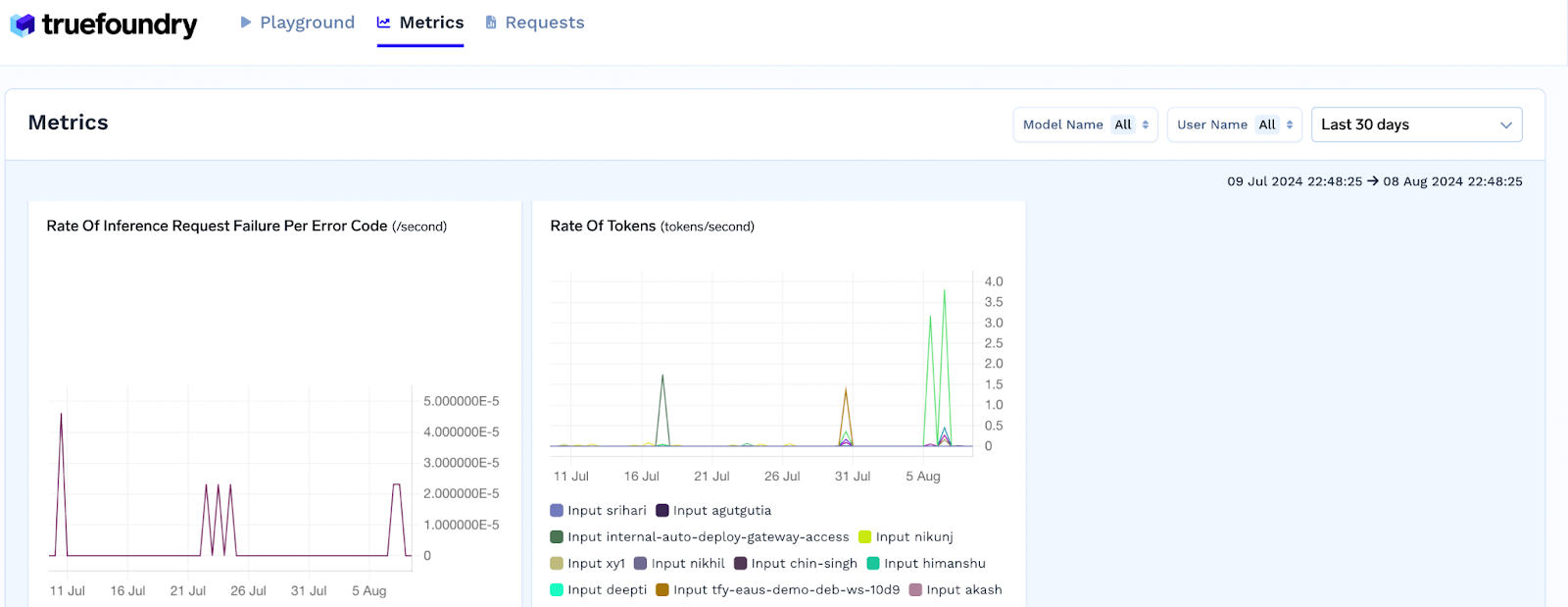
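Mixing third-party, self-hosted, and shared models raises the question of which one to call. One simple policy is to pick the cheapest model whose observed p95 latency fits the request's latency budget. This is an assumption, not TrueFoundry's routing logic. The catalog entries, prices, and latencies are made-up illustrative values.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    source: str                 # "third-party", "self-hosted", or "shared"
    usd_per_1k_tokens: float    # illustrative blended price, not a real quote
    p95_latency_ms: float       # illustrative observed p95 latency


def pick_model(options: list[ModelOption], max_latency_ms: float) -> ModelOption:
    """Cheapest option whose p95 latency fits the budget; ties go to the faster one."""
    eligible = [m for m in options if m.p95_latency_ms <= max_latency_ms]
    if not eligible:
        raise LookupError("no model meets the latency budget")
    return min(eligible, key=lambda m: (m.usd_per_1k_tokens, m.p95_latency_ms))


# Hypothetical catalog mixing the three access routes.
CATALOG = [
    ModelOption("third-party-large", "third-party", 0.010, 900.0),
    ModelOption("self-hosted-7b", "self-hosted", 0.002, 1500.0),
    ModelOption("shared-small", "shared", 0.004, 400.0),
]
```

With the sample catalog, a 1000 ms budget selects `shared-small`. A 2000 ms budget selects the cheaper `self-hosted-7b`, and a 300 ms budget raises `LookupError`.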

Performance Monitoring

To ensure reliability, the Gateway should monitor latency, error rates, throughput, and inference failures. TrueFoundry captures key metrics such as request latency, token throughput, and inference failure rate. It surfaces them in real-time dashboards that make performance bottlenecks easy to identify.

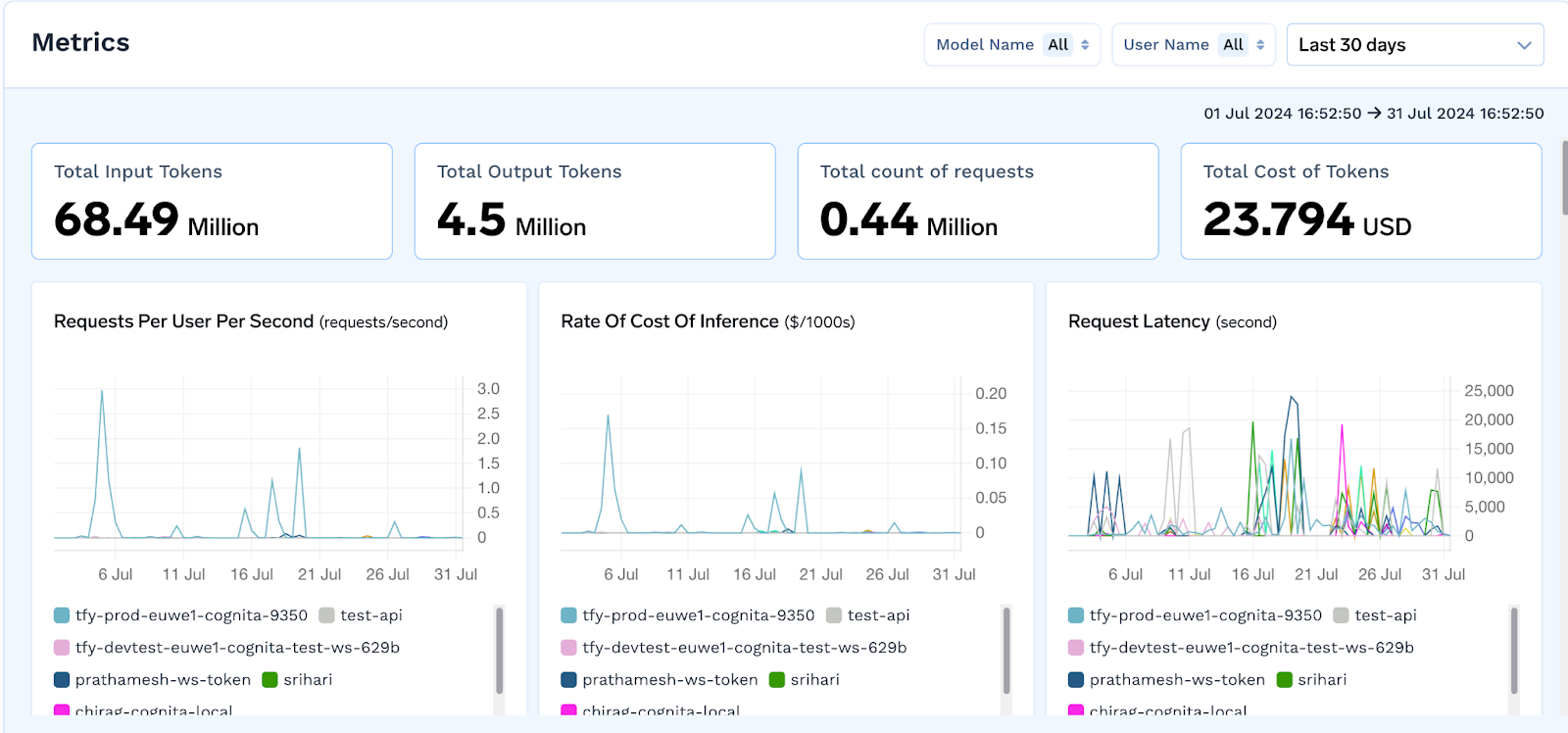
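Metrics like these can be derived from per-request records. This sketch computes nearest-rank p50 and p95 latency, error rate, and output-token throughput over a time window. The `RequestRecord` fields are assumptions about what a gateway might log, not TrueFoundry's schema.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_ms: float
    ok: bool             # False for errors such as timeouts or 5xx responses
    output_tokens: int


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: list[RequestRecord], window_s: float) -> dict[str, float]:
    """Aggregate one endpoint's records collected over `window_s` seconds."""
    if not records:
        raise ValueError("no requests in window")
    latencies = [r.latency_ms for r in records]
    failures = sum(1 for r in records if not r.ok)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "error_rate": failures / len(records),
        "tokens_per_s": sum(r.output_tokens for r in records) / window_s,
    }
```

Consider five requests with latencies of 100, 200, 300, 400, and 1000 ms, one of which failed. Together they produced 100 output tokens in a 10-second window. For these, `summarize` reports a p50 of 300 ms, a p95 of 1000 ms, an error rate of 0.2, and 10 tokens per second.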

Usage Analytics

Understanding how, when, and by whom models are used is critical for governance. TrueFoundry logs detailed request and response activity, token consumption, and cost per model. These insights help teams manage workloads and optimize usage patterns.

Cost Management

The Gateway should log costs from all model interactions, whether hosted internally or through commercial APIs. TrueFoundry provides full visibility into model usage costs across users, teams, and projects. Integrated dashboards allow organizations to track spend, configure alerts, and apply rate limits or budget caps to control overages.
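Cost attribution reduces to multiplying token counts by per-token prices and summing by team, user, or project. The sketch below does this with made-up prices (`PRICES` is illustrative, not any provider's price list). It flags a team once its spend reaches an assumed budget. The `Usage`, `CostTracker`, and `over_budget` names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass

# Illustrative prices in USD per 1M tokens as (input, output); not real list prices.
PRICES = {"model-a": (0.50, 1.50), "model-b": (3.00, 15.00)}


@dataclass
class Usage:
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(u: Usage) -> float:
    in_price, out_price = PRICES[u.model]
    return (u.input_tokens * in_price + u.output_tokens * out_price) / 1_000_000


class CostTracker:
    """Accumulates spend per team and compares it with an optional budget cap."""

    def __init__(self, budgets_usd: dict[str, float]) -> None:
        self.budgets = budgets_usd
        self.spend: defaultdict[str, float] = defaultdict(float)

    def record(self, u: Usage) -> float:
        cost = request_cost(u)
        self.spend[u.team] += cost
        return cost

    def over_budget(self, team: str) -> bool:
        # Teams without a configured budget are never capped in this sketch.
        return self.spend[team] >= self.budgets.get(team, float("inf"))
```

For example, 200,000 input and 100,000 output tokens on `model-b` cost (200,000 × 3.00 + 100,000 × 15.00) / 1,000,000 = 2.10 USD. That puts a team with a 2.00 USD budget over its cap, at which point a gateway could alert or start rejecting requests.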

Advanced Features of an AI Gateway

Advanced features in an AI Gateway determine how effectively it can operate in real-world, production-scale environments. TrueFoundry’s AI Gateway brings a rich set of capabilities that optimize performance, improve reliability, and seamlessly integrate with broader systems, making it enterprise-ready from day one.

Model Caching

Caching helps reduce latency and save costs by avoiding redundant model calls. TrueFoundry supports both exact match caching (for identical prompts) and semantic caching (for similar meaning queries), which enhances speed without compromising on relevance. You can configure cache expiration policies and manually invalidate outdated entries when needed. This ensures that the gateway serves fast, accurate, and up-to-date responses.

- Caching Modes Supported: Exact Match and Semantic Caching, with configurable expiry and invalidation.
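The two caching modes can be sketched together. The cache looks up an exact key first. If that misses, it falls back to the most similar cached prompt for the same model, provided the similarity clears a threshold. This is a minimal illustration, not TrueFoundry's cache. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and the 0.85 cosine threshold and TTL are assumed values.

```python
from __future__ import annotations

import hashlib
import math
import time
from collections import Counter
from dataclasses import dataclass
from typing import Callable


def embed(text: str) -> Counter:
    """Toy stand-in for a real embedding model: a bag of lowercase words."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values()) * sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class _Entry:
    model: str
    response: str
    vector: Counter
    stored_at: float


class GatewayCache:
    """Exact-match lookup first, then semantic lookup, with a time-to-live."""

    def __init__(self, ttl_s: float, threshold: float = 0.85,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.threshold = threshold      # minimum cosine similarity for a semantic hit
        self.clock = clock
        self.entries: dict[str, _Entry] = {}

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        return hashlib.sha256(f"{model}\x00{prompt}".encode()).hexdigest()

    def put(self, model: str, prompt: str, response: str) -> None:
        self.entries[self._key(model, prompt)] = _Entry(model, response, embed(prompt), self.clock())

    def invalidate(self, model: str, prompt: str) -> None:
        """Manually drop an outdated entry."""
        self.entries.pop(self._key(model, prompt), None)

    def get(self, model: str, prompt: str) -> str | None:
        now = self.clock()
        # Evict expired entries so stale answers are never served.
        self.entries = {k: e for k, e in self.entries.items() if now - e.stored_at < self.ttl_s}
        exact = self.entries.get(self._key(model, prompt))
        if exact is not None:
            return exact.response
        # Semantic match: most similar cached prompt for the same model.
        query = embed(prompt)
        scored = [(cosine(query, e.vector), e) for e in self.entries.values() if e.model == model]
        if scored:
            score, entry = max(scored, key=lambda pair: pair[0])
            if score >= self.threshold:
                return entry.response
        return None
```

With a 60-second TTL, a cached answer to "What is an AI gateway" is also returned for "what is an AI gateway exactly", whose cosine similarity is about 0.91. The cache does not return it for an unrelated question, for a different model, or after the entry expires. In practice, the threshold trades hit rate against the risk of serving an answer to a subtly different question.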

Intelligent Routing and Reliability

For production-critical applications, the gateway automatically routes traffic to alternative models if the primary one fails, ensuring uninterrupted service. Automatic retries help recover from transient errors without user intervention. Built-in rate limiting helps enforce quotas and prevent overuse, while load balancing distributes traffic across multiple models or providers to maintain optimal throughput and minimize latency.

- Routing Enhancements: Fallbacks, auto-retries, rate limiting, and load balancing.

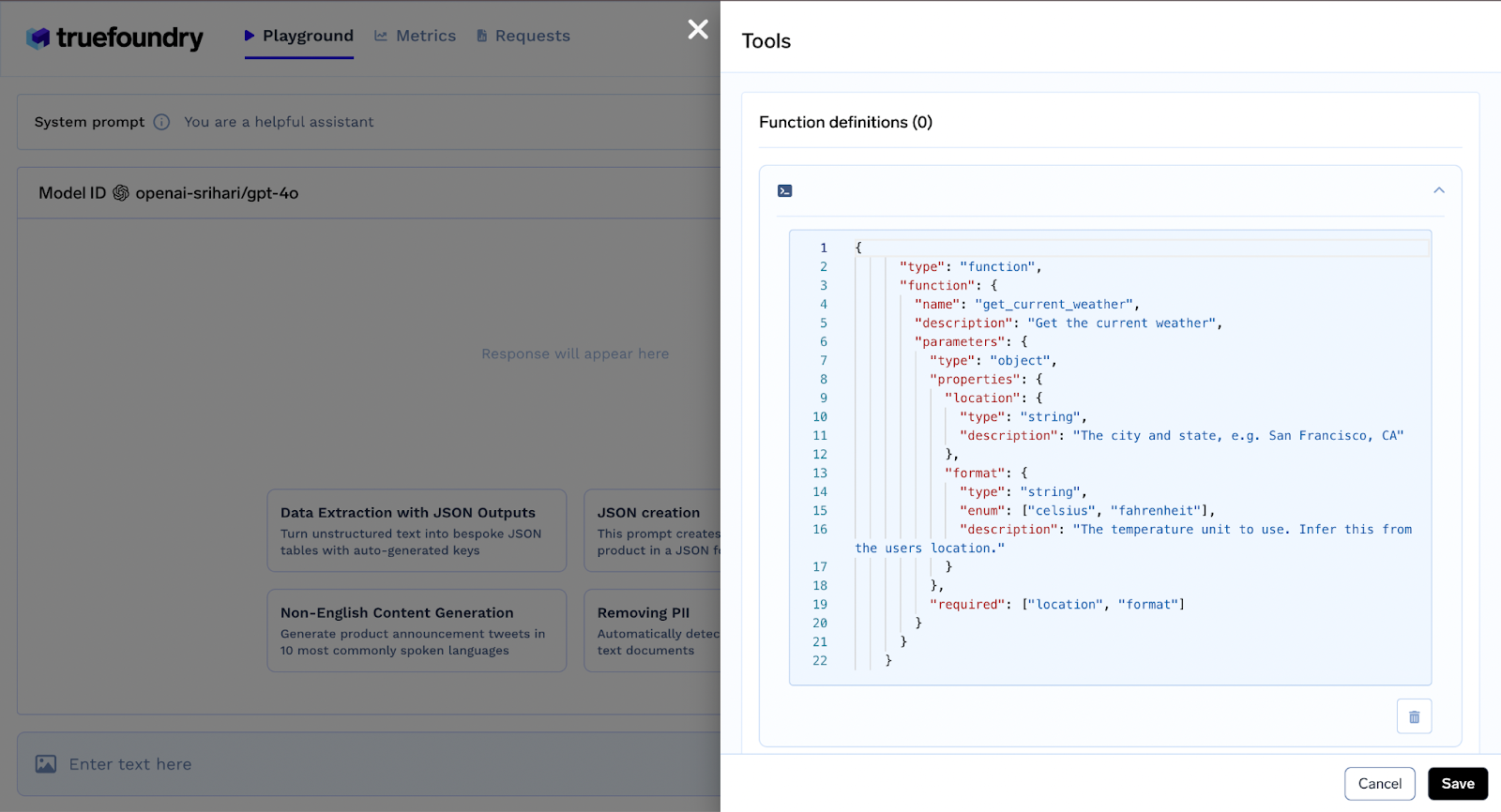
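Fallbacks and retries compose naturally. The gateway retries a transient failure on the same model with exponential backoff, then moves to the next model in a configured list. This sketch assumes a `send` callable that raises a hypothetical `TransientError` for retryable failures. It omits jitter and per-error-type policies, which a production gateway would likely add.

```python
from __future__ import annotations

import time
from typing import Callable


class TransientError(Exception):
    """Hypothetical stand-in for a retryable provider failure (timeout, 429, 5xx)."""


def call_with_fallback(
    models: list[str],
    send: Callable[[str], str],
    retries_per_model: int = 2,
    base_delay_s: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[str, str]:
    """Try each model in order; retry transient errors with exponential backoff."""
    last_error: Exception | None = None
    for model in models:
        for attempt in range(retries_per_model + 1):
            try:
                return model, send(model)
            except TransientError as err:
                last_error = err
                if attempt < retries_per_model:
                    sleep(base_delay_s * 2 ** attempt)   # 0.1 s, 0.2 s, ...
        # Retries exhausted for this model: fall through to the next one.
    raise RuntimeError("all models failed") from last_error
```

Suppose `send` always fails for `"primary"` and succeeds for `"backup"`. The call then returns `("backup", ...)` after three attempts on the primary, sleeping 0.1 s and then 0.2 s between them.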

Tool Calling (Simulated Function Invocation)

TrueFoundry’s Gateway supports tool calling by simulating interactions with external APIs. While the actual function is not executed by the gateway, the model can return structured outputs representing the intended tool call. This is ideal for building workflows where LLMs need to decide when and how to invoke tools, enabling developers to design and test these behaviors safely.

- Tool Simulation: Structured output for modeled API/function calls, without actual execution.
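In the OpenAI request format, the application advertises tools in a `tools` list. The model may then answer with `tool_calls`, whose `arguments` field is a JSON string. The gateway passes that structured call back, and running it is up to the application. In this sketch, `get_order_status`, the order ID, and the sample assistant message are hypothetical. The message is hand-written, not real model output.

```python
from __future__ import annotations

import json
from typing import Any, Callable

# Tool definition sent with the request, in OpenAI's `tools` format.
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]


def get_order_status(order_id: str) -> dict[str, str]:
    """Hypothetical local tool; a real one would query an order system."""
    return {"order_id": order_id, "status": "shipped"}


TOOLS: dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each requested tool locally and build the `tool` messages to send back."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        fn = TOOLS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])   # arguments arrive as a JSON string
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results


# Hand-written example of an assistant message in OpenAI chat-completions format.
sample = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_order_status", "arguments": "{\"order_id\": \"A-42\"}"},
    }],
}
```

`run_tool_calls(sample)` returns a single `tool` message with `tool_call_id` `"call_1"`. The application would append it to the conversation before calling the model again.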

Multimodal Support

Modern applications often involve more than just text. The Gateway supports multimodal inputs such as text and images within the same request, which unlocks use cases like document Q&A, visual search, or customer support enriched with screenshots or product photos. This makes the AI Gateway suitable for both traditional NLP and next-gen AI applications that require context from multiple data formats.

- Multimodal Inputs: Combine text, images, and structured data in a single request.
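A request mixing text and an image can use the OpenAI chat format, in which a user message's `content` is a list of typed parts. The sketch below assumes the gateway accepts this format for multimodal models. It encodes image bytes as a base64 data URL. The model name and the shortened image bytes in the usage note are placeholders.

```python
from __future__ import annotations

import base64


def image_part_from_bytes(data: bytes, mime: str = "image/png") -> dict:
    """Encode raw image bytes as an OpenAI-style `image_url` content part."""
    encoded = base64.b64encode(data).decode("ascii")
    return {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}}


def multimodal_request(model: str, question: str, image: dict) -> dict:
    """OpenAI-style chat request whose user message mixes text and an image."""
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [{"type": "text", "text": question}, image],
        }],
    }
```

For instance, `image_part_from_bytes(b"\x89PNG")` yields the URL `data:image/png;base64,iVBORw==`. Then `multimodal_request("vision-model", "What is in this screenshot?", part)` wraps it with the question in one user message. A publicly reachable `https://` URL can be used in place of a data URL when the image is already hosted.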

API Integrations and Ecosystem Connectivity

TrueFoundry enables deep integration with your existing stack. You can plug in observability tools like Prometheus and Grafana for real-time monitoring, implement safety layers using Guardrails AI or NeMo Guardrails, and evaluate model quality continuously using Arize or MLflow. This connected ecosystem ensures that your AI system is not just performant, but also safe, transparent, and continuously improving.

- Ecosystem Integration: Connects to monitoring, guardrail, and evaluation frameworks.

Benefits of an AI Gateway

An AI Gateway delivers significant operational, financial, and engineering advantages for organizations integrating large language models (LLMs) into their products and workflows. It acts as a control plane for AI consumption, providing a consistent interface, enforcing security, and optimizing performance at scale.

Centralized Access and Governance

When multiple teams or applications need to interact with different LLM providers, managing individual keys, tokens, and access rights becomes complex. An AI Gateway centralizes access control, enabling role-based permissions, audit logging, and secure key management.

Example: A global enterprise deploying AI features across marketing, product, and support teams uses an AI Gateway to assign scoped API keys and restrict each team’s access to specific models, reducing the risk of accidental misuse or data leakage.

Cost Transparency and Budget Control

LLMs can become a significant operational cost, especially with growing usage across teams. AI Gateways provide fine-grained cost tracking by user, team, or project. This visibility helps organizations manage budgets, identify inefficiencies, and introduce chargeback models where appropriate.

Example: A SaaS company offering AI-powered features to its customers monitors usage via the gateway and uses the data to implement tiered pricing based on actual token consumption.

Seamless Model Switching and Abstraction

The unified API layer allows organizations to swap LLMs or providers without modifying application code. This makes it easier to test new models, negotiate better pricing, or shift from commercial to open-source deployments.

Example: A startup initially using a commercial LLM transitions to a fine-tuned open-source model for data privacy and cost savings, without changing their codebase, thanks to the gateway abstraction.

Improved Reliability and Resilience

Gateways offer built-in fallbacks, automatic retries, caching, and load balancing to ensure uninterrupted service and consistent performance, even under load or during provider outages.

Example: A high-traffic chatbot system handles sudden traffic spikes by dynamically routing requests across multiple providers while falling back to cached responses when needed.

Compliance and Observability

For regulated industries, the ability to track and audit model usage is critical. AI Gateways integrate with monitoring, logging, and security tooling to meet compliance standards and internal governance policies.

Example: A healthcare company logs every request and response through the gateway, enabling complete traceability for audit purposes while maintaining data access boundaries.

Conclusion

As organizations scale their use of large language models, the need for a secure, reliable, and efficient interface becomes critical. An AI Gateway serves as that foundational layer, abstracting away the complexity of managing multiple providers, enforcing access controls, tracking costs, and ensuring performance at scale. It empowers teams to experiment, deploy, and monitor LLM-powered applications with confidence and control.

Whether you're building internal copilots, customer-facing chat interfaces, or multimodal AI workflows, an AI Gateway helps standardize infrastructure while remaining flexible enough to support evolving model ecosystems. Features like caching, routing, cost attribution, and tool calling further extend its value for enterprise-grade deployments.

In a rapidly changing AI landscape, adopting an AI Gateway is not just a convenience; it’s a strategic investment in operational maturity, observability, and long-term scalability.
