Enterprise-Ready Prompt Evaluation: How TrueFoundry and Promptfoo Enable Confident AI at Scale

As enterprises accelerate the adoption of large language models (LLMs), the conversation is rapidly shifting from experimentation to production readiness. Teams are no longer asking whether AI can be used, but how it can be deployed reliably, safely, and at scale. This transition introduces a new set of challenges: ensuring prompt quality, preventing regressions, and maintaining governance as models, prompts, and use cases evolve.

To address these challenges, TrueFoundry and Promptfoo have partnered to deliver a tightly integrated solution that brings systematic prompt evaluation into enterprise AI infrastructure. By combining Promptfoo’s robust prompt testing capabilities with the TrueFoundry AI Gateway, organizations can confidently move AI workloads into production while maintaining high standards for quality, reliability, and governance.

Why Prompt Evaluation Is a Critical Enterprise Problem

In modern AI applications, prompts are effectively part of the application logic. Small changes to a prompt — or even a change in the underlying model — can significantly impact output quality, tone, correctness, or safety. Despite this, many organizations still rely on manual testing or informal reviews to validate prompt changes before release.

As AI systems scale across teams and products, this lack of structure becomes a business risk. Inconsistent outputs can degrade customer experience, regressions can slip into production unnoticed, and platform teams struggle to enforce quality standards across a growing AI footprint. What enterprises need is a way to treat prompts with the same rigor as code — evaluated, tested, and governed as part of the deployment pipeline.

Promptfoo: Bringing Discipline to Prompt Testing

Promptfoo was built to solve this exact problem. It provides a framework for evaluating LLM prompts across datasets, models, and test cases, enabling teams to quantify quality rather than rely on intuition. With Promptfoo, teams can compare outputs across models, define custom evaluation criteria, and catch regressions early in the development lifecycle.

Most importantly, Promptfoo enables prompt evaluation to become repeatable and automated. Instead of relying on ad-hoc reviews, teams can integrate prompt tests into CI/CD workflows, ensuring that every prompt change is validated against clearly defined expectations before it reaches production.
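To make the idea of a repeatable prompt test concrete, here is a minimal Python sketch of a prompt regression suite. It does not use Promptfoo's own configuration format or API. The names `PromptTest`, `stub_model`, `run_suite`, `pass_rate`, and `ci_exit_code` are hypothetical, and the model is an offline stand-in so the example runs without credentials.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class PromptTest:
    """One test case: template variables plus a check on the model output."""
    name: str
    variables: Dict[str, str]
    check: Callable[[str], bool]


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call (an assumption, so the sketch runs offline)."""
    if "refund" in prompt.lower():
        return "You can request a refund within 30 days of purchase."
    return "I'm sorry, I can't help with that."


def run_suite(template: str, tests: List[PromptTest],
              model: Callable[[str], str]) -> Dict[str, bool]:
    """Render the template for each test, call the model, record pass/fail."""
    results = {}
    for test in tests:
        output = model(template.format(**test.variables))
        results[test.name] = test.check(output)
    return results


def pass_rate(results: Dict[str, bool]) -> float:
    """Fraction of passing tests; an empty suite counts as 0.0."""
    return sum(results.values()) / len(results) if results else 0.0


def ci_exit_code(results: Dict[str, bool], threshold: float = 1.0) -> int:
    """Return 0 when the suite meets the threshold, 1 otherwise."""
    return 0 if pass_rate(results) >= threshold else 1


TEMPLATE = "You are a support assistant. Answer briefly: {question}"
TESTS = [
    PromptTest("mentions_refund_window",
               {"question": "How do I get a refund?"},
               lambda out: "30 days" in out),
    PromptTest("stays_concise",
               {"question": "How do I get a refund?"},
               lambda out: len(out) < 200),
    PromptTest("declines_off_topic",
               {"question": "Write me a poem"},
               lambda out: "can't help" in out),
]
```

A CI job could call `sys.exit(ci_exit_code(run_suite(TEMPLATE, TESTS, model)))` with a real model client in place of `stub_model`, so that a prompt change that breaks any expectation fails the build.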

TrueFoundry AI Gateway: The Control Plane for Enterprise AI

While prompt evaluation is essential, enterprises also need a secure and standardized way to operationalize AI at scale. This is where the TrueFoundry AI Gateway plays a critical role. The AI Gateway provides a unified API layer to access and manage hundreds of LLMs and Model Context Protocol (MCP) servers, while handling enterprise requirements such as authentication, access control, observability, and policy enforcement.

By centralizing AI traffic through the Gateway, organizations gain visibility and control over how models are used across teams and environments. This architectural approach ensures that AI innovation does not come at the cost of security, compliance, or operational complexity.

A Powerful Integration: Prompt Evaluation at the Gateway Layer

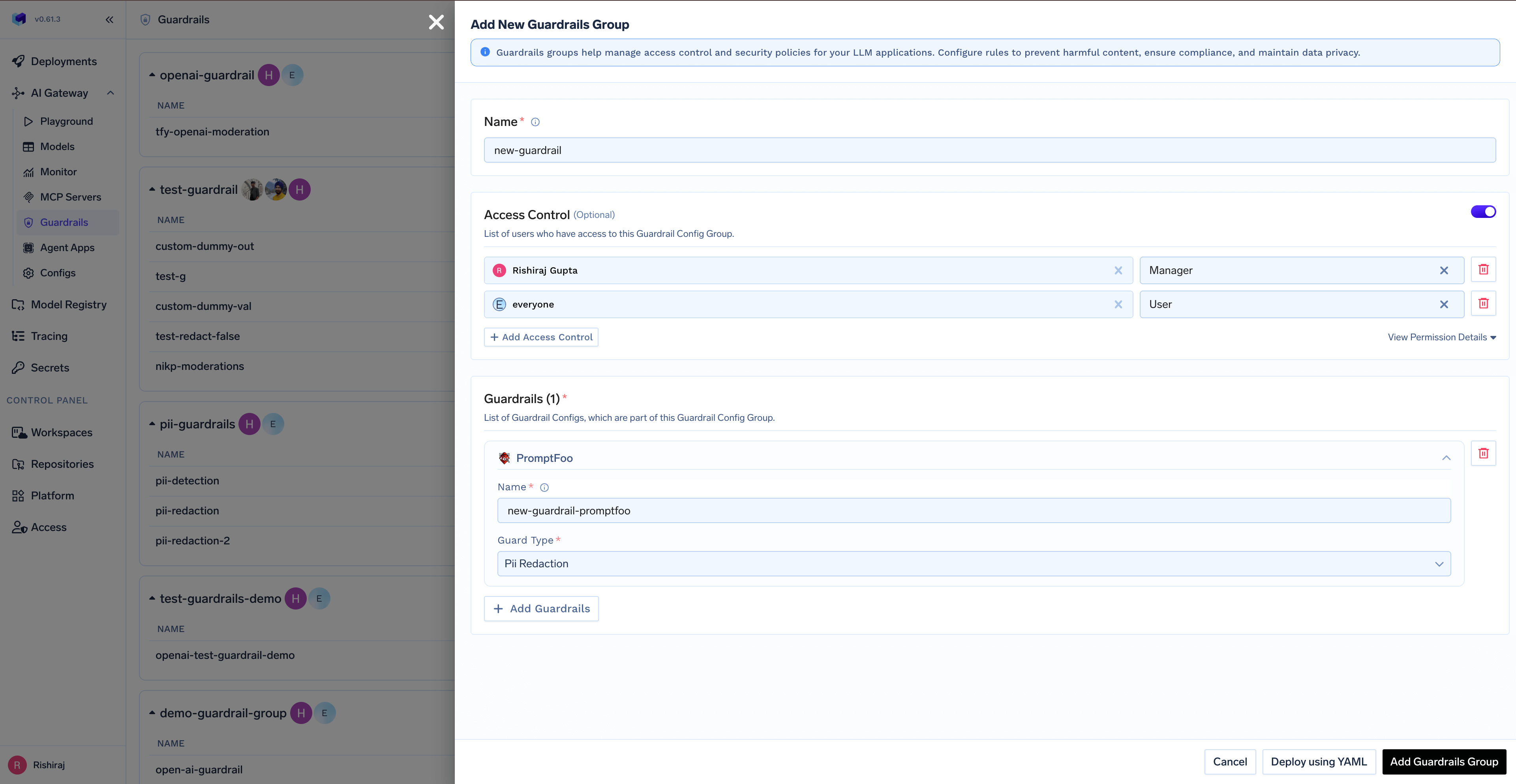

The integration between Promptfoo and the TrueFoundry AI Gateway brings these two capabilities together in a seamless workflow. Promptfoo evaluations can now be configured as guardrails within the Gateway, allowing every request to be assessed against defined quality and behavior criteria.

This means that prompt evaluation is no longer limited to development or testing environments. Instead, it becomes an enforceable policy at the infrastructure level. Requests that fail evaluation criteria can be flagged, logged, or blocked, ensuring that only validated AI behavior reaches downstream users and systems.
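The flag, log, or block behavior described above can be pictured as a small policy function. The Python sketch below is illustrative only and is not the Gateway's actual guardrail configuration or API. It assumes an evaluation produces a score between 0 and 1, and the names `Action`, `Policy`, `decide`, and `enforce`, as well as the threshold values, are hypothetical.

```python
import logging
from dataclasses import dataclass
from enum import Enum
from typing import Optional

logger = logging.getLogger("guardrail_sketch")


class Action(Enum):
    ALLOW = "allow"
    FLAG = "flag"
    BLOCK = "block"


@dataclass(frozen=True)
class Policy:
    """Hypothetical thresholds on an evaluation score in [0, 1]."""
    block_below: float = 0.5
    flag_below: float = 0.8


def decide(score: float, policy: Policy) -> Action:
    """Map an evaluation score to an enforcement action."""
    if score < policy.block_below:
        return Action.BLOCK
    if score < policy.flag_below:
        return Action.FLAG
    return Action.ALLOW


def enforce(request_id: str, response_text: str, score: float,
            policy: Policy) -> Optional[str]:
    """Log every decision; return None for blocked responses, else the text."""
    action = decide(score, policy)
    logger.info("request=%s score=%.2f action=%s", request_id, score, action.value)
    if action is Action.BLOCK:
        return None
    return response_text
```

In this sketch a flagged response is still delivered but leaves a log entry for later review, while a blocked response never reaches the caller. Real deployments would take the thresholds and actions from the guardrail configuration rather than from hard-coded defaults.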

By embedding prompt evaluation directly into the AI Gateway, organizations gain a single, consistent mechanism to enforce quality across models, teams, and applications.

Business Impact: Turning AI Risk into Competitive Advantage

From a business perspective, this partnership helps organizations move faster without increasing risk. Automated prompt evaluation reduces the time spent on manual reviews and debugging, enabling teams to ship AI features more quickly and with greater confidence. At the same time, centralized enforcement through the Gateway ensures consistency, even as AI usage scales across the organization.

For platform and engineering leaders, this integration simplifies governance. Instead of relying on fragmented tooling and informal processes, teams can define organization-wide standards for prompt quality and enforce them uniformly. This leads to fewer production incidents, improved customer trust, and better alignment between engineering velocity and business expectations.

Enabling the Next Phase of Enterprise AI

The partnership between TrueFoundry and Promptfoo reflects a broader shift in how enterprises approach AI. As LLMs become foundational to products and workflows, organizations need infrastructure that supports not just experimentation, but long-term reliability and governance.

By combining enterprise-grade AI infrastructure with systematic prompt evaluation, TrueFoundry and Promptfoo enable teams to treat prompts as first-class citizens in the software lifecycle — tested, governed, and deployed with confidence.

Getting Started

Organizations can begin using the integration by configuring Promptfoo as a guardrail within the TrueFoundry AI Gateway and defining evaluation criteria aligned with their business and product requirements. From there, prompt quality becomes an enforceable standard rather than a best-effort practice.

To learn more about how to set up and use the integration, explore the TrueFoundry documentation:

https://truefoundry.com/docs/ai-gateway/promptfoo
