Vercel AI Gateway vs OpenRouter: Which one is best for you?

Introduction

As teams adopt large language models across products and internal systems, AI gateways have become a common architectural layer. Instead of integrating separately with each model provider, teams increasingly look for a single API that abstracts provider differences, simplifies routing, and reduces integration overhead.

This has led to the rise of gateway-style offerings that promise faster development and easier experimentation. Among these, Vercel AI Gateway and OpenRouter are frequently compared, largely because both sit between applications and multiple LLM providers.

However, while they appear similar on the surface, the two are built for very different needs and stages of AI adoption. One is optimized for frontend developer experience, while the other prioritizes broad access to models and rapid experimentation.

The goal of this comparison is to clarify those differences across scope, architecture, and production readiness, so teams can choose the right gateway for their use case.

What Is Vercel AI Gateway?

Vercel AI Gateway is part of Vercel’s broader application platform and is designed to make it easy for developers to consume LLMs inside web and frontend-driven applications.

At a high level, Vercel AI Gateway:

- Provides a unified interface to selected LLM providers

- Is tightly integrated with the Vercel AI SDK

- Works seamlessly with Next.js, serverless functions, and edge runtimes

Its primary focus is developer experience. Developers building applications on Vercel can add LLM capabilities with minimal setup, without worrying about provider-specific SDKs or credentials.

Importantly, Vercel AI Gateway is best understood as an application-layer gateway. It is optimized for simplifying LLM usage inside Vercel-hosted apps, rather than acting as an infrastructure-level control plane for AI across teams, environments, or deployments.

What Is OpenRouter?

OpenRouter is a cloud-based model routing and aggregation platform that provides a single API for accessing a wide range of LLMs across providers.

OpenRouter’s core strengths include:

- Access to hundreds of models from multiple providers

- Easy switching and comparison between models

- Aggregated billing and rate limits through one API

This makes OpenRouter particularly attractive for:

- Developers experimenting with different models

- Teams benchmarking or comparing LLM behavior

- Rapid prototyping without committing to a single provider

OpenRouter is intentionally lightweight. It focuses on routing and aggregation, not on deployment, governance, or infrastructure management. As a result, it works well as a model router, but is not designed to serve as a centralized AI control layer for production systems.

Vercel AI Gateway vs OpenRouter: Core Differences at a Glance

Architecture and Deployment Scope

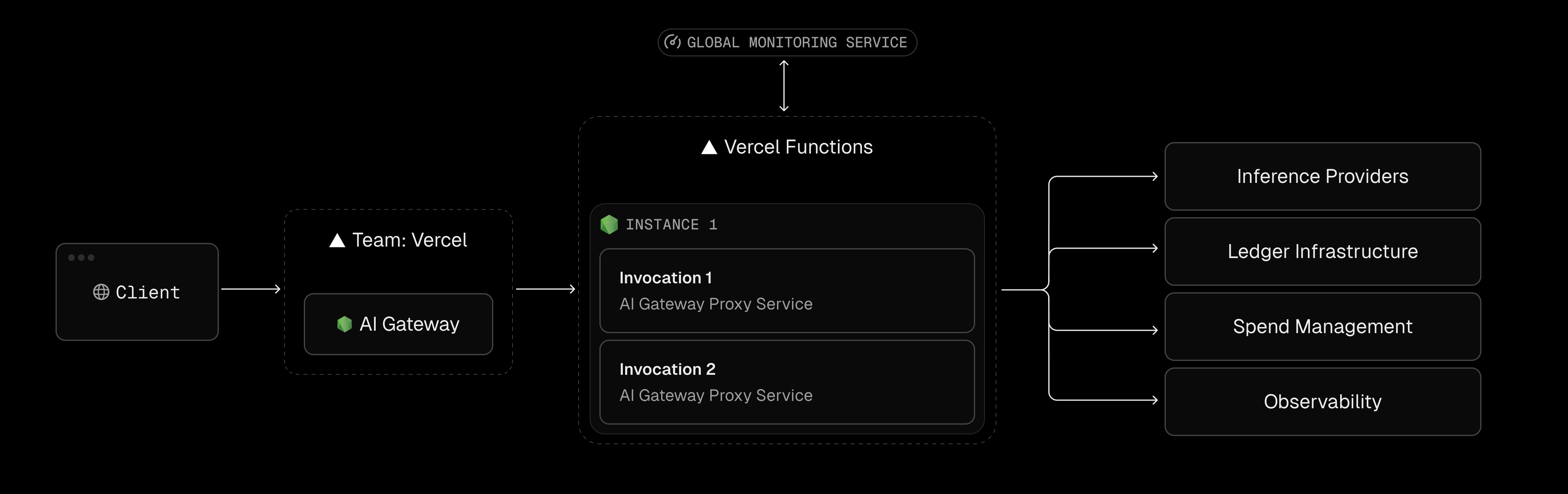

Vercel AI Gateway architecture

Vercel AI Gateway is built as a managed gateway inside the Vercel ecosystem. Your app (often using the Vercel AI SDK) sends requests to the gateway, and Vercel handles provider connectivity, routing behavior, and usage controls. The gateway is positioned to help teams ship faster without managing provider accounts and keys, while still exposing operational controls such as budgets, usage monitoring, load balancing, and fallbacks.

Scope implication: it’s optimized for Vercel-hosted apps and developer workflows, not for running the gateway inside your own private infra.
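To make the fallback behavior mentioned above concrete, here is a minimal, provider-agnostic sketch of what a gateway does when the first provider fails. It is not Vercel's implementation or API: `call_with_fallback`, `ProviderError`, and the two stand-in provider functions are hypothetical names used only for illustration.

```python
from __future__ import annotations

from typing import Callable, Sequence


class ProviderError(Exception):
    """Raised when a provider call cannot be completed (hypothetical)."""


def call_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each provider in order and return (provider_name, completion)."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and move on to the next provider in the list.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stand-in providers so the sketch runs without network access.
def flaky_primary(prompt: str) -> str:
    raise ProviderError("rate limited")


def stable_backup(prompt: str) -> str:
    return f"echo: {prompt}"


if __name__ == "__main__":
    used, text = call_with_fallback(
        "hello",
        [("primary", flaky_primary), ("backup", stable_backup)],
    )
    print(used, text)
```

A managed gateway moves this loop out of application code, which is the main convenience: the app makes one call and the ordering, retries, and provider credentials live behind the gateway.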

OpenRouter architecture

OpenRouter is a cloud routing layer: your application calls OpenRouter’s API, and OpenRouter routes traffic to the chosen model and provider. It supports provider-level routing controls, offers an Auto Router that selects between models based on the prompt, and can fall back to or load-balance across models depending on availability.

From a data/ops standpoint, OpenRouter documents that it logs basic request metadata and that prompts/completions are not logged by default (unless you opt in).

It also supports team usage patterns via Organizations (shared credits, centralized key management, and usage tracking).

Scope implication: it’s great for multi-model access and routing, but still fundamentally a managed cloud service rather than something you deploy inside your network boundary.
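As a rough illustration of the "one API, many models" pattern, the sketch below builds (but does not send) a chat request in the OpenAI-compatible shape that OpenRouter exposes. Treat the details as assumptions to verify against OpenRouter's current API reference: the endpoint URL, the `models` list used for ordered fallbacks, and the helper name `build_chat_request` are not taken from this article, and the model slugs are placeholders rather than real model IDs.

```python
from __future__ import annotations

import json

# Assumed endpoint for OpenRouter's OpenAI-compatible chat API; verify before use.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(prompt: str, models: list[str], api_key: str) -> dict:
    """Build a request description (URL, headers, JSON body) without sending it."""
    if not models:
        raise ValueError("at least one model is required")

    body: dict = {"messages": [{"role": "user", "content": prompt}]}
    if len(models) == 1:
        body["model"] = models[0]
    else:
        # Assumption: a "models" list is tried in order as fallbacks.
        body["models"] = list(models)

    return {
        "url": OPENROUTER_URL,
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        "body": json.dumps(body),
    }


if __name__ == "__main__":
    request = build_chat_request(
        "Summarize this ticket in one sentence.",
        ["vendor-a/model-x", "vendor-b/model-y"],  # placeholder slugs
        api_key="sk-placeholder",
    )
    print(request["body"])
```

Switching or comparing models then comes down to changing the strings in the list, which is why this style of API suits benchmarking and prototyping.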

Where TrueFoundry Fits: Enterprise AI Gateway Perspective

Vercel AI Gateway and OpenRouter both help unify model access, but many teams encounter a new set of requirements as they move from single applications or experimentation to enterprise-scale AI deployments. At this stage, convenience alone is no longer sufficient.

Common requirements that emerge include:

- Private deployments (VPC, on-prem, or air-gapped environments)

- Centralized governance across multiple teams and applications

- Auditability and policy enforcement as default behavior

- Consistent observability across models, providers, and environments

This is the gap that TrueFoundry is designed to fill.

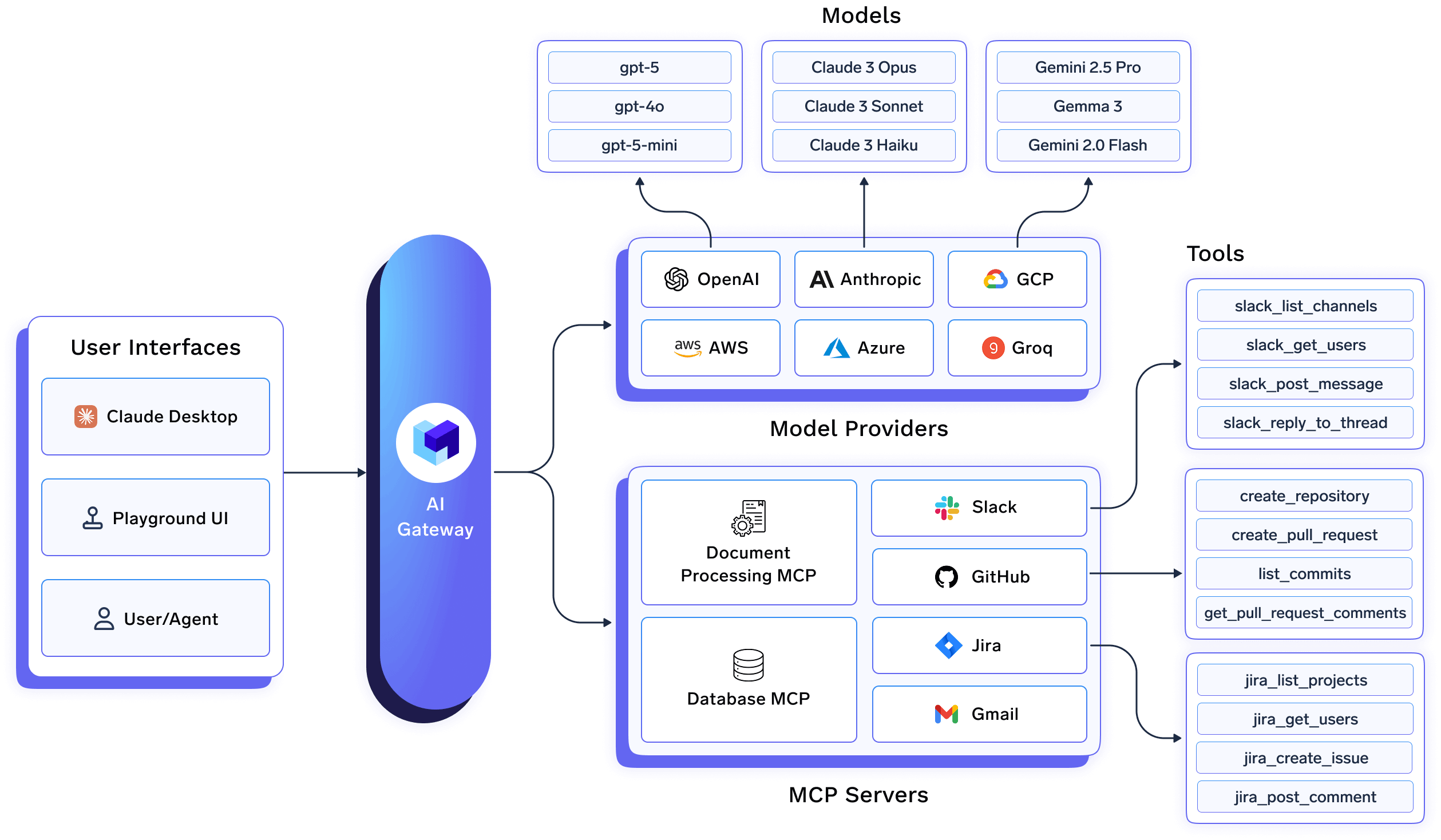

TrueFoundry’s AI Gateway is built as an infrastructure-level control plane, not just a routing or application convenience layer. It can be deployed as SaaS or self-hosted inside your own cloud or on-premise infrastructure, allowing organizations to keep LLM traffic within their security and compliance boundaries while still standardizing access behind a single gateway.

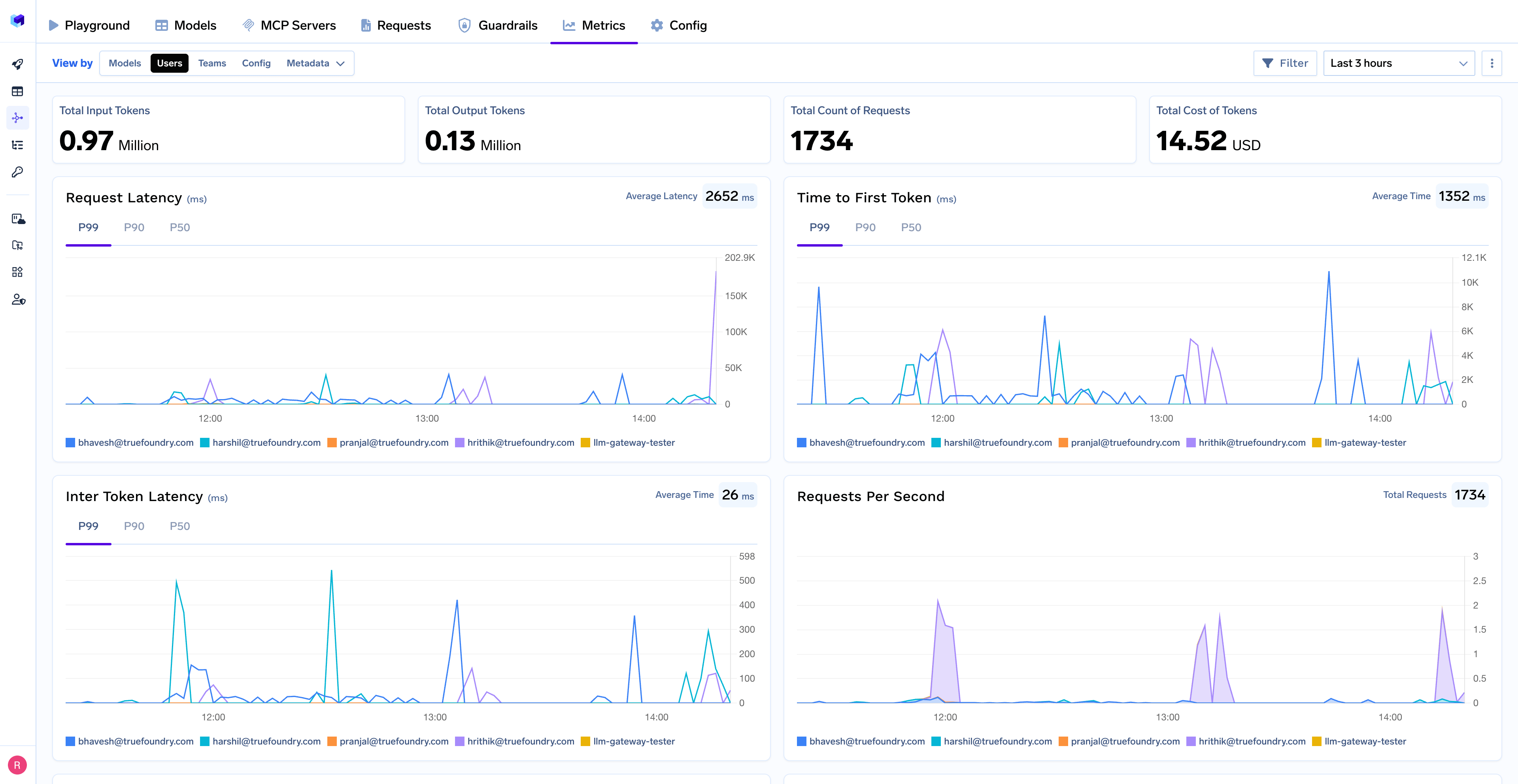

Beyond basic routing, the TrueFoundry gateway provides:

- Centralized access control and usage policies enforced consistently across teams

- Unified observability, including usage, latency, and cost attribution by app or team

- Model-agnostic routing, working across self-hosted models, fine-tuned models, and external providers

- Production-ready deployment primitives, designed to integrate cleanly with Kubernetes-native environments
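To show what "cost attribution by app or team" can mean in practice, here is a small sketch that rolls per-request records up into per-team totals. It is not TrueFoundry's data model or API: `RequestRecord`, its fields, and `summarize_by_team` are hypothetical, and the numbers are made up for the example.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestRecord:
    """One gateway request as it might appear in a usage log (hypothetical)."""
    team: str
    model: str
    latency_ms: float
    cost_usd: float


def summarize_by_team(records: list[RequestRecord]) -> dict[str, dict[str, float]]:
    """Aggregate request count, total cost, and average latency per team."""
    counts: dict[str, int] = defaultdict(int)
    cost: dict[str, float] = defaultdict(float)
    latency: dict[str, float] = defaultdict(float)

    for record in records:
        counts[record.team] += 1
        cost[record.team] += record.cost_usd
        latency[record.team] += record.latency_ms

    return {
        team: {
            "requests": counts[team],
            "cost_usd": round(cost[team], 6),
            "avg_latency_ms": latency[team] / counts[team],
        }
        for team in counts
    }


if __name__ == "__main__":
    log = [
        RequestRecord("search", "model-a", 100.0, 0.002),
        RequestRecord("search", "model-b", 300.0, 0.004),
        RequestRecord("support", "model-a", 50.0, 0.001),
    ]
    for team, stats in summarize_by_team(log).items():
        print(team, stats)
```

The point of doing this at the gateway is that every team's traffic passes through the same place, so the records share one schema regardless of which model or provider served the request.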

Rather than replacing developer-focused tools, TrueFoundry complements them as organizations mature. It is built for teams that need enterprise-grade security, compliance, and operational visibility as LLMs move from isolated experiments into core business workflows.

In practice, this means treating LLM access as shared infrastructure rather than application-specific logic: policies, observability, cost controls, and deployment boundaries are enforced centrally, independent of how individual teams build or deploy their applications.
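A minimal sketch of central enforcement, under stated assumptions, might look like the following. Every request is checked against a per-team policy before it reaches a provider. `TeamPolicy`, `Gateway`, and `authorize` are hypothetical names for illustration and do not represent TrueFoundry's actual API or policy format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class TeamPolicy:
    """What a team may call and how much it may spend (hypothetical)."""
    allowed_models: frozenset[str]
    monthly_budget_usd: float


@dataclass
class Gateway:
    """Holds policies and running spend for all teams in one place."""
    policies: dict[str, TeamPolicy]
    spend_usd: dict[str, float] = field(default_factory=dict)

    def authorize(self, team: str, model: str, est_cost_usd: float) -> bool:
        """Return True and record the spend only if the request passes policy."""
        policy = self.policies.get(team)
        if policy is None or model not in policy.allowed_models:
            return False  # unknown team or model not on the allow list
        spent = self.spend_usd.get(team, 0.0)
        if spent + est_cost_usd > policy.monthly_budget_usd:
            return False  # request would exceed the team's budget
        self.spend_usd[team] = spent + est_cost_usd
        return True


if __name__ == "__main__":
    gateway = Gateway({"search": TeamPolicy(frozenset({"model-a"}), 1.0)})
    print(gateway.authorize("search", "model-a", 0.6))  # within budget
    print(gateway.authorize("search", "model-a", 0.6))  # would exceed budget
    print(gateway.authorize("search", "model-b", 0.1))  # model not allowed
```

Because the check lives in the gateway rather than in each application, changing a budget or an allow list takes effect for every caller at once.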

Conclusion

Vercel AI Gateway and OpenRouter both play an important role in simplifying access to large language models, but they are built for different stages of AI adoption. Vercel AI Gateway prioritizes developer experience within the Vercel ecosystem, while OpenRouter excels at model aggregation and rapid experimentation.

As organizations scale beyond individual applications, new requirements emerge around governance, observability, deployment control, and compliance. At this stage, application-level or routing-only gateways often become limiting.

This is where infrastructure-level gateways like TrueFoundry come into play. By treating LLM access as shared enterprise infrastructure rather than application logic, teams gain the control and visibility needed to operate AI systems reliably in production.

Choosing the right gateway ultimately depends on where your organization is in its AI journey. Understanding these distinctions early helps avoid architectural rework as AI becomes core to the business.
