From LLM Experiments to Production Systems

Why the TrueFoundry AI Gateway and LangSmith Belong Together

Most organizations discover the hard problems of AI only after their first few successful demos. Early prototypes feel deceptively simple. A prompt, a model call, a response. But as these systems move into production, complexity compounds quickly. Requests fan out across multiple models. Agents call tools, retry, branch, and adapt. Prompts change weekly. Models are swapped. Quality drifts. And suddenly, teams are operating distributed AI systems without the operational foundations they would never skip for traditional services.

This is where most AI platforms begin to fracture. Execution is scattered. Observability is shallow. Evaluation is manual and retrospective. The result is not just technical debt but organizational risk. When something goes wrong, teams cannot explain why. When quality regresses, no one notices until users complain. When leadership asks how models are behaving in production, the answers are anecdotal.

The integration between the TrueFoundry AI Gateway and LangChain’s LangSmith exists to solve this class of problems, not at the level of individual model calls, but at the level of system design.

The Missing Control Plane in AI Architectures

Modern AI systems are inherently distributed. They span multiple model providers, multiple execution environments, and increasingly, multiple autonomous agents. Yet most organizations still treat LLM calls as application-level concerns rather than platform-level infrastructure.

Without a centralized execution layer, there is no natural place to enforce governance, apply policy, or capture consistent telemetry. Without LLM-aware observability, traces devolve into opaque logs that miss the very information engineers need to debug model behavior. Without continuous evaluation, quality becomes a subjective conversation rather than a measurable signal.

TrueFoundry’s AI Gateway addresses the first half of this problem by acting as a unified execution layer for all LLM traffic. LangSmith addresses the second half by providing observability and evaluation designed specifically for LLM systems. The integration between them is what turns these capabilities into a coherent control plane.

TrueFoundry AI Gateway as the Execution Layer

At a strategic level, the TrueFoundry AI Gateway establishes a single, governed entry point for all model and agent requests. Applications and agents no longer talk directly to model providers. They talk to the gateway.

This architectural decision matters because it creates a consistent surface for policy enforcement, routing decisions, and telemetry generation. The gateway determines which model is used, under what constraints, in which environment, and with what safeguards. It also becomes the one place where production behavior can be observed comprehensively.

For platform leaders, this is the inflection point where AI systems stop being a collection of scripts and start behaving like infrastructure.
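To make the "single governed entry point" idea concrete, here is a deliberately tiny, hypothetical sketch. `ToyGateway`, its routing table, and its per-team policy are illustrative inventions, not TrueFoundry APIs, and the providers are stubbed. What it shows is the architectural point: because every call passes through one function, policy checks, routing, and telemetry each live in exactly one place.

```python
# Hypothetical sketch: ToyGateway, its routing table, and its policy are
# illustrative only and are not TrueFoundry APIs. Providers are stubbed.
import time
import uuid
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class ToyGateway:
    # Logical model name -> provider callable (stubbed for illustration).
    routes: Dict[str, Callable[[str], str]]
    # Policy: which logical models each team is allowed to call.
    allowed: Dict[str, Set[str]]
    # One telemetry record per request, whatever the outcome.
    telemetry: List[dict] = field(default_factory=list)

    def complete(self, team: str, model: str, prompt: str) -> str:
        record = {"id": uuid.uuid4().hex, "team": team, "model": model}
        start = time.perf_counter()
        try:
            # 1. Policy is enforced before any provider is contacted.
            if model not in self.allowed.get(team, set()):
                record["status"] = "denied"
                raise PermissionError(f"team {team!r} may not call {model!r}")
            # 2. Routing: the gateway, not the caller, picks the backend.
            response = self.routes[model](prompt)
            record["status"] = "ok"
            return response
        except Exception:
            # Any other failure (unknown route, provider error) is recorded too.
            record.setdefault("status", "error")
            raise
        finally:
            # 3. Telemetry is captured in exactly one place for every call.
            record["latency_ms"] = (time.perf_counter() - start) * 1000
            self.telemetry.append(record)


if __name__ == "__main__":
    gw = ToyGateway(
        routes={"chat-default": lambda p: f"echo: {p}"},
        allowed={"search-team": {"chat-default"}},
    )
    print(gw.complete("search-team", "chat-default", "hi"))  # echo: hi
    print(gw.telemetry[-1]["status"])  # ok
```

A production gateway adds far more (provider adapters, rate limits, guardrails, streaming), but the shape is the same: callers name a logical model, and the gateway decides everything else.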

LangSmith as the System of Record for AI Behavior

While the gateway controls execution, LangSmith becomes the system of record for understanding what actually happened.

LangSmith is not a generic monitoring tool. It is designed around the primitives of LLM systems. Prompts, responses, chains, agents, tool calls, token usage, latency, and intermediate steps are all first-class concepts. This allows engineers to reason about AI behavior with the same clarity they expect from traditional distributed tracing, but in a language that matches how LLM systems actually work.

In this integration, LangSmith is not embedded inside TrueFoundry. It remains a distinct, specialized system. That separation is intentional and strategically important.

OpenTelemetry as the Architectural Contract

One of the most important aspects of the TrueFoundry and LangSmith integration is that it is built on OpenTelemetry. The AI Gateway exports traces using the standard OpenTelemetry Protocol (OTLP), and LangSmith ingests those traces as an OpenTelemetry-compliant backend.

This design choice avoids tight coupling and proprietary glue. It allows organizations to adopt LangSmith without changing how they deploy or route models. It also enables enterprise requirements that are often ignored in early-stage AI tooling, such as region-specific endpoints, self-hosted LangSmith deployments, and VPC-isolated environments.

From a platform perspective, OpenTelemetry is not just an implementation detail. It is the contract that allows observability to scale with organizational complexity rather than fighting it.
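To show what this contract actually carries, the sketch below builds a single span in the OTLP/JSON shape. Two assumptions apply: the gateway exports OTLP with Protobuf encoding, and JSON is used here only because it is the specification's readable equivalent encoding; the `gen_ai.*` attribute keys come from the (still experimental) OpenTelemetry GenAI semantic conventions, and this article does not confirm which keys the gateway emits. The function name and the `example-gateway` scope name are hypothetical.

```python
# Sketch of one OTLP span encoded as OTLP/JSON. The gateway itself sends the
# Protobuf encoding; the gen_ai.* keys are assumptions based on the
# experimental OpenTelemetry GenAI semantic conventions.
import json
import secrets
import time


def otlp_span_payload(service: str, model: str, input_tokens: int,
                      output_tokens: int, duration_ms: int) -> dict:
    end_ns = time.time_ns()
    start_ns = end_ns - duration_ms * 1_000_000

    def attr(key, value):
        # OTLP/JSON wraps each value in a typed field; int64 values are
        # encoded as strings under the proto3 JSON mapping.
        if isinstance(value, int):
            return {"key": key, "value": {"intValue": str(value)}}
        return {"key": key, "value": {"stringValue": value}}

    span = {
        "traceId": secrets.token_hex(16),  # 16 random bytes, hex in OTLP/JSON
        "spanId": secrets.token_hex(8),    # 8 random bytes
        "name": f"chat {model}",
        "kind": 3,                         # SPAN_KIND_CLIENT (enums are integers)
        "startTimeUnixNano": str(start_ns),
        "endTimeUnixNano": str(end_ns),
        "attributes": [
            attr("gen_ai.operation.name", "chat"),
            attr("gen_ai.request.model", model),
            attr("gen_ai.usage.input_tokens", input_tokens),
            attr("gen_ai.usage.output_tokens", output_tokens),
        ],
    }
    return {
        "resourceSpans": [{
            "resource": {"attributes": [attr("service.name", service)]},
            "scopeSpans": [{"scope": {"name": "example-gateway"}, "spans": [span]}],
        }]
    }


if __name__ == "__main__":
    print(json.dumps(otlp_span_payload("ai-gateway", "gpt-4o", 120, 48, 850), indent=2))
```

Because any OTLP-compliant backend understands this structure, the producer (the gateway) and the consumer (LangSmith) can evolve independently.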

What Tracing Enables in Real Systems

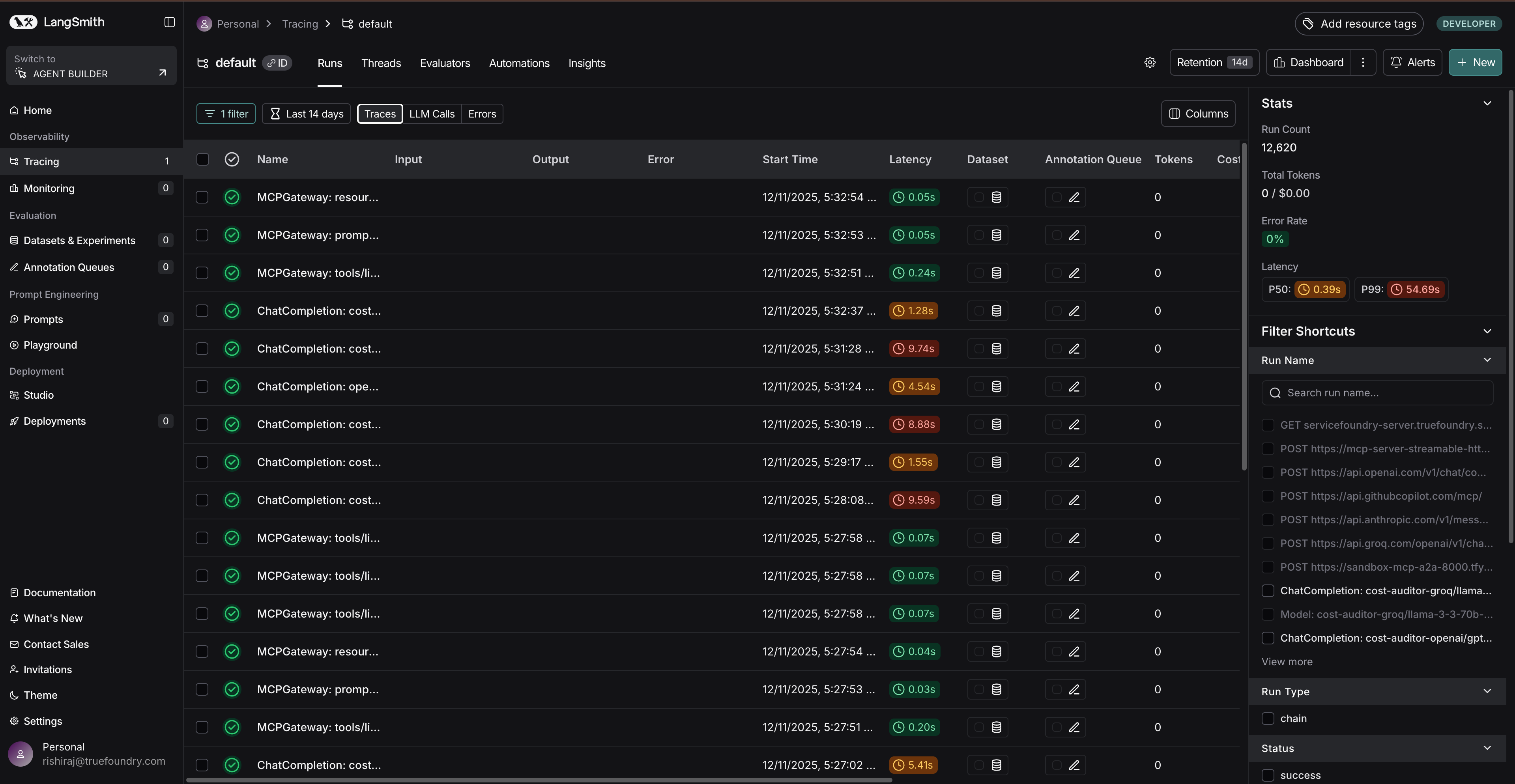

When requests flow through the TrueFoundry AI Gateway, tracing happens automatically. Each model invocation, agent step, and response is captured and exported to LangSmith. What engineers see in LangSmith is not a flat log but a structured execution trace that reflects the actual shape of the AI system.

This makes a qualitative difference in day-to-day operations. Teams can inspect which prompt version was used in production. They can see how long each step took and where retries occurred. They can trace failures back to specific agent decisions rather than vague error messages.

Observability stops being reactive and becomes diagnostic.
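The difference between a flat log and a structured trace comes from parent links: every span records the ID of the span that caused it. The hypothetical sketch below rebuilds an agent run from flat span records and surfaces per-step durations, the failed tool call, and its retry. The span names, IDs, and relative millisecond timings are invented; only the parent/child linkage mirrors the OpenTelemetry trace model, and the "same name under the same parent means retry" rule is a simplifying assumption.

```python
# Hypothetical span records (timings are relative milliseconds). Real OTel
# spans carry trace/span IDs and nanosecond timestamps; the parent linkage
# is the part that matters here.
from collections import defaultdict

spans = [
    {"id": "a", "parent": None, "name": "agent.run", "start": 0, "end": 900},
    {"id": "b", "parent": "a", "name": "llm.plan", "start": 10, "end": 210},
    {"id": "c", "parent": "a", "name": "tool.search", "start": 220, "end": 400, "status": "error"},
    {"id": "d", "parent": "a", "name": "tool.search", "start": 410, "end": 600},
    {"id": "e", "parent": "a", "name": "llm.answer", "start": 610, "end": 890},
]


def render(spans: list) -> list:
    """Indent each span under its parent, ordered by start time."""
    children = defaultdict(list)
    for s in spans:
        children[s["parent"]].append(s)
    lines = []

    def walk(parent, depth):
        for s in sorted(children[parent], key=lambda s: s["start"]):
            status = s.get("status", "ok")
            lines.append(f"{'  ' * depth}{s['name']} {s['end'] - s['start']}ms [{status}]")
            walk(s["id"], depth + 1)

    walk(None, 0)
    return lines


def retries(spans: list) -> dict:
    """Assumed heuristic: repeated step names under one parent count as retries."""
    counts = defaultdict(int)
    for s in spans:
        counts[(s["parent"], s["name"])] += 1
    result = defaultdict(int)
    for (_parent, name), n in counts.items():
        if n > 1:
            result[name] += n - 1
    return dict(result)


if __name__ == "__main__":
    print("\n".join(render(spans)))
    print(retries(spans))  # {'tool.search': 1}
```

Run as a script, the tree shows `tool.search` failing after 180 ms and succeeding on the second attempt, which is exactly the kind of detail a flat log tends to bury.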

Closing the Loop with Continuous Evaluation

Observability alone is not enough. Knowing what happened does not tell you whether it was acceptable.

This is where LangSmith’s evaluation capabilities complete the picture. Model outputs captured through the gateway can be evaluated against curated datasets that represent expected behavior. Actual outputs are compared against reference (ground-truth) outputs using task-specific metrics or model-based judges.
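As a minimal sketch of "comparing actual outputs to references with task-specific metrics", the code below scores outputs with exact match and token-level F1. This is not LangSmith's evaluation API; the dataset rows and the choice of metrics are illustrative assumptions. A model-based judge would slot in as one more function with the same `(output, reference) -> float` signature.

```python
# Illustrative evaluators, not LangSmith's API. Each metric maps
# (output, reference) to a score in [0, 1].
from collections import Counter


def normalize(text: str) -> list:
    return text.lower().split()


def exact_match(output: str, reference: str) -> float:
    return float(normalize(output) == normalize(reference))


def token_f1(output: str, reference: str) -> float:
    out, ref = normalize(output), normalize(reference)
    overlap = sum((Counter(out) & Counter(ref)).values())
    if overlap == 0:
        return 0.0  # also covers empty outputs, avoiding division by zero
    precision, recall = overlap / len(out), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def evaluate(rows: list, outputs: list, metrics: dict) -> dict:
    """Average each metric over paired (dataset row, model output) items."""
    return {
        name: sum(fn(out, row["reference"]) for row, out in zip(rows, outputs)) / len(rows)
        for name, fn in metrics.items()
    }


if __name__ == "__main__":
    rows = [
        {"input": "Capital of France?", "reference": "Paris"},
        {"input": "2 + 2?", "reference": "4"},
    ]
    outputs = ["paris", "The answer is 4"]
    print(evaluate(rows, outputs, {"exact_match": exact_match, "token_f1": token_f1}))
```

Exact match rewards the first answer and rejects the verbose second one, while token F1 gives the second partial credit. Picking the metric that matches the task is the "task-specific" part.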

Over time, this turns evaluation into a continuous process rather than an offline exercise. Quality regressions surface early. Prompt changes can be assessed objectively. Model upgrades become measurable rather than risky.
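One way to make "quality regressions surface early" operational is a gate that compares a candidate run (new prompt or model) against a baseline and flags any metric that dropped by more than a tolerance. The function name, metric names, scores, and the 0.02 tolerance below are hypothetical choices, not defaults of LangSmith or TrueFoundry.

```python
# Hypothetical regression gate over aggregate evaluation scores.
def regressions(baseline: dict, candidate: dict, tolerance: float = 0.02) -> dict:
    """Return {metric: drop} for metrics whose score fell by more than `tolerance`."""
    return {
        metric: round(baseline[metric] - candidate[metric], 4)
        for metric in baseline
        if metric in candidate and baseline[metric] - candidate[metric] > tolerance
    }


if __name__ == "__main__":
    baseline = {"exact_match": 0.82, "token_f1": 0.91}
    candidate = {"exact_match": 0.74, "token_f1": 0.90}
    print(regressions(baseline, candidate))  # {'exact_match': 0.08}
```

In a CI pipeline, a non-empty result would block the prompt or model change until someone reviews the affected examples.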

For organizations operating AI at scale, this is the difference between shipping with confidence and shipping on hope.

Running LangSmith Inside a VPC

For many organizations, observability data cannot leave controlled network boundaries. The integration explicitly supports this reality.

LangSmith can be self-hosted inside a VPC and exposed through an internal endpoint such as https://self-hosted-langsmith:3000. In this setup, LangSmith runs behind private networking and is reachable only from approved services such as the TrueFoundry AI Gateway.

Once LangSmith is deployed, the key requirement is identifying its OpenTelemetry ingestion endpoint. In most self-hosted deployments, this endpoint sits under the LangSmith API base path at /api/v1/otel, with traces typically posted to /v1/traces beneath it. This yields a full trace ingestion URL such as https://self-hosted-langsmith:3000/api/v1/otel/v1/traces.
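The path composition above is easy to get wrong (a trailing slash, a missing segment), so a small helper can derive both URLs from the base address. This sketch assumes the `/api/v1/otel` and `/v1/traces` layout described in the preceding paragraph; confirm it against your own deployment. The function name is hypothetical, and the host is the article's example.

```python
# Assumes the self-hosted layout described above: <base>/api/v1/otel for the
# OTLP base endpoint and <base>/api/v1/otel/v1/traces for trace ingestion.
from urllib.parse import urlsplit, urlunsplit


def langsmith_otlp_urls(base_url: str) -> tuple:
    """Return (OTLP base endpoint, full traces endpoint) for a LangSmith base URL."""
    parts = urlsplit(base_url)
    if parts.scheme not in ("http", "https"):
        raise ValueError(f"expected an http(s) URL, got {base_url!r}")
    # Strip any trailing slash so the joined path never contains "//".
    otel_path = parts.path.rstrip("/") + "/api/v1/otel"
    otel_base = urlunsplit((parts.scheme, parts.netloc, otel_path, "", ""))
    return otel_base, otel_base + "/v1/traces"


if __name__ == "__main__":
    base, traces = langsmith_otlp_urls("https://self-hosted-langsmith:3000")
    print(base)    # https://self-hosted-langsmith:3000/api/v1/otel
    print(traces)  # https://self-hosted-langsmith:3000/api/v1/otel/v1/traces
```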

The AI Gateway is then configured to export OpenTelemetry traces to this endpoint using OTLP over HTTP with Protobuf encoding. Authentication is handled by passing the LangSmith API key as a request header. From the gateway’s perspective, this is no different from sending traces to the managed LangSmith service; the only difference is that traffic remains entirely inside the VPC.
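The gateway takes these settings through its own configuration, but the same three choices (endpoint, protocol, authentication header) map onto the standard OpenTelemetry SDK environment variables. That mapping is handy for pointing any other OTel-instrumented service in the VPC at the same LangSmith instance. In the sketch below, the `x-api-key` and `Langsmith-Project` header names are assumptions based on LangSmith's OpenTelemetry documentation and should be verified for your version; the function name, key, and project values are placeholders.

```python
# Generic OTel SDK equivalent of the gateway's export settings. Header names
# are assumptions based on LangSmith's OTel docs; values are placeholders.
from typing import Dict, Optional
from urllib.parse import quote


def otlp_env(traces_endpoint: str, api_key: str,
             project: Optional[str] = None) -> Dict[str, str]:
    headers = {"x-api-key": api_key}
    if project:
        headers["Langsmith-Project"] = project
    return {
        # A signal-specific endpoint is used verbatim; the SDK appends /v1/traces
        # only when the generic OTEL_EXPORTER_OTLP_ENDPOINT is set instead.
        "OTEL_EXPORTER_OTLP_TRACES_ENDPOINT": traces_endpoint,
        # OTLP over HTTP with Protobuf encoding, matching the gateway setup.
        "OTEL_EXPORTER_OTLP_TRACES_PROTOCOL": "http/protobuf",
        # Comma-separated key=value pairs with percent-encoded values.
        "OTEL_EXPORTER_OTLP_TRACES_HEADERS": ",".join(
            f"{k}={quote(v, safe='')}" for k, v in headers.items()
        ),
    }


if __name__ == "__main__":
    env = otlp_env(
        "https://self-hosted-langsmith:3000/api/v1/otel/v1/traces",
        api_key="lsv2_placeholder",
        project="gateway-prod",
    )
    for key, value in env.items():
        print(f"{key}={value}")
```

Note the design choice in the endpoint comment: if you set only the generic `OTEL_EXPORTER_OTLP_ENDPOINT`, give it the base `/api/v1/otel` URL and let the SDK append `/v1/traces`; if you set the traces-specific variable, give it the full URL.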

This design allows organizations to meet strict security and compliance requirements without sacrificing observability depth.

Why This Matters for AI Leaders

For senior engineers and platform leaders, the value of the TrueFoundry and LangSmith integration lies in what it enables organizationally.

It creates a shared foundation where execution, observability, and evaluation are aligned. It allows teams to reason about AI systems with the same rigor they apply to traditional distributed systems. It supports enterprise requirements without sacrificing developer velocity.

Most importantly, it acknowledges a simple truth. AI systems are production systems. They deserve production-grade infrastructure.

A Composable Path Forward

The partnership between TrueFoundry and LangSmith is not about bundling tools. It is about composing the right abstractions. TrueFoundry provides the execution and governance layer. LangSmith provides deep visibility and evaluation. OpenTelemetry connects them.

Together, they form a control plane that allows organizations to move from experimental AI to dependable AI without losing insight, accountability, or trust. That is not just an integration. It is an architectural stance.
