Semantic Caching for Large Language Models

Introduction

As large language models (LLMs) move into production, teams quickly discover that inference cost and latency scale faster than usage. Even well-designed applications end up sending similar questions repeatedly, phrased differently, but asking for the same underlying information.

Traditional caching techniques fall short in this environment. Exact-match caches only work when prompts are identical, which is rare in natural language systems. The result is unnecessary model calls, wasted tokens, and higher infrastructure load.

Semantic caching addresses this gap by caching responses based on meaning rather than exact text. By reusing answers for semantically similar prompts, organizations can significantly reduce inference costs and improve response times, typically without changes to application code.

For production LLM systems, semantic caching is emerging as a foundational optimization layer, especially in high-traffic, enterprise workloads.

What Is Semantic Caching in LLM Systems?

Semantic caching is a caching technique that retrieves stored LLM responses based on semantic similarity between prompts, instead of exact string matches.

In a semantic cache:

- Prompts are converted into vector embeddings

- These embeddings are compared against previously cached prompts

- If a new prompt is semantically close enough to a cached one, the stored response is reused

For example, the following prompts may all map to the same cached response:

- “Summarize this report”

- “Give me a short summary of this document”

- “What’s the key takeaway from this file?”

Although the wording differs, the intent is the same. Semantic caching recognizes this similarity and avoids repeated inference.
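To make "semantically close enough" concrete, the sketch below compares prompt embeddings with cosine similarity, which is a common choice for this step. The three-dimensional vectors are made-up stand-ins for real embedding-model output, and `find_match` and the 0.9 threshold are hypothetical names and values used only for illustration.

```python
import math
from typing import Dict, List, Optional


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_match(query: List[float], cached: Dict[str, List[float]],
               threshold: float) -> Optional[str]:
    """Return the cached prompt most similar to `query` if it clears the threshold."""
    best_prompt, best_score = None, -1.0
    for prompt, vector in cached.items():
        score = cosine_similarity(query, vector)
        if score > best_score:
            best_prompt, best_score = prompt, score
    return best_prompt if best_score >= threshold else None


# Hypothetical embeddings; a real system would get these from an embedding model.
cached = {"Summarize this report": [0.9, 0.1, 0.0]}
paraphrase = [0.85, 0.2, 0.05]   # stands in for "Give me a short summary of this document"
unrelated = [0.05, 0.1, 0.95]    # stands in for "Reset my password"

print(find_match(paraphrase, cached, threshold=0.9))  # Summarize this report
print(find_match(unrelated, cached, threshold=0.9))   # None
```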

Unlike traditional key-value caching, which operates at the text level, semantic caching operates at the intent level. This makes it especially effective for LLM-powered applications where user input is variable but meaning is stable.

In production systems, semantic caching typically runs before the model invocation, allowing fast cache lookups and ensuring that only genuinely new queries reach the LLM.

Why Traditional Caching Fails for LLMs

Traditional caching relies on exact matches: a cached response is reused only if the next request is textually identical. This approach works well for APIs and structured queries, but it breaks down for natural language.

In LLM systems, users rarely repeat prompts word-for-word:

- “Explain this error”

- “Why am I seeing this error?”

- “What caused this issue?”

All three express the same intent, yet an exact-match cache treats them as entirely different requests. As a result:

- Cache hit rates remain low

- Identical reasoning is recomputed repeatedly

- Inference costs and latency increase unnecessarily
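A minimal exact-match cache makes the problem visible. This sketch assumes the common practice of normalizing case and whitespace before using the prompt as a dictionary key; the class and helper names are hypothetical.

```python
from typing import Dict, Optional


def normalize(prompt: str) -> str:
    """Typical exact-match key: lowercase, trimmed, whitespace collapsed."""
    return " ".join(prompt.lower().split())


class ExactMatchCache:
    def __init__(self) -> None:
        self.store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, prompt: str) -> Optional[str]:
        key = normalize(prompt)
        if key in self.store:
            self.hits += 1
            return self.store[key]
        self.misses += 1
        return None

    def put(self, prompt: str, response: str) -> None:
        self.store[normalize(prompt)] = response


cache = ExactMatchCache()
prompts = [
    "Explain this error",
    "Why am I seeing this error?",
    "What caused this issue?",
    "explain  this ERROR",  # same text, different case and spacing
]
for p in prompts:
    if cache.get(p) is None:
        cache.put(p, f"LLM answer for: {p}")  # placeholder for a real model call

print(cache.hits, cache.misses)  # 1 3
```

Only the trivially reformatted fourth prompt hits; the two genuine paraphrases each trigger a new model call.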

This limitation becomes more severe in production environments where:

- Queries are user-generated

- Agents reformulate prompts dynamically

- Workloads scale across teams and applications

Exact-match caching operates at the string level, while LLM workloads operate at the meaning level. The mismatch between the two is why traditional caching provides limited value for large language models.

Semantic caching resolves this gap by caching at the intent level, making it a far better fit for LLM-driven systems.

Semantic Caching vs Prompt Caching

Prompt caching (exact-match caching of complete prompts) optimizes for identical requests, which are rare in LLM systems.

Semantic caching optimizes for repeated intent, which is how users actually interact with language models.

For production LLM workloads, especially chat, support, search, and agentic systems, semantic caching provides far greater efficiency gains than exact-match caching, particularly when implemented centrally through an LLM Gateway.

How Semantic Caching Works

Semantic caching adds a lightweight decision layer before LLM inference, ensuring that only genuinely new requests reach the model.

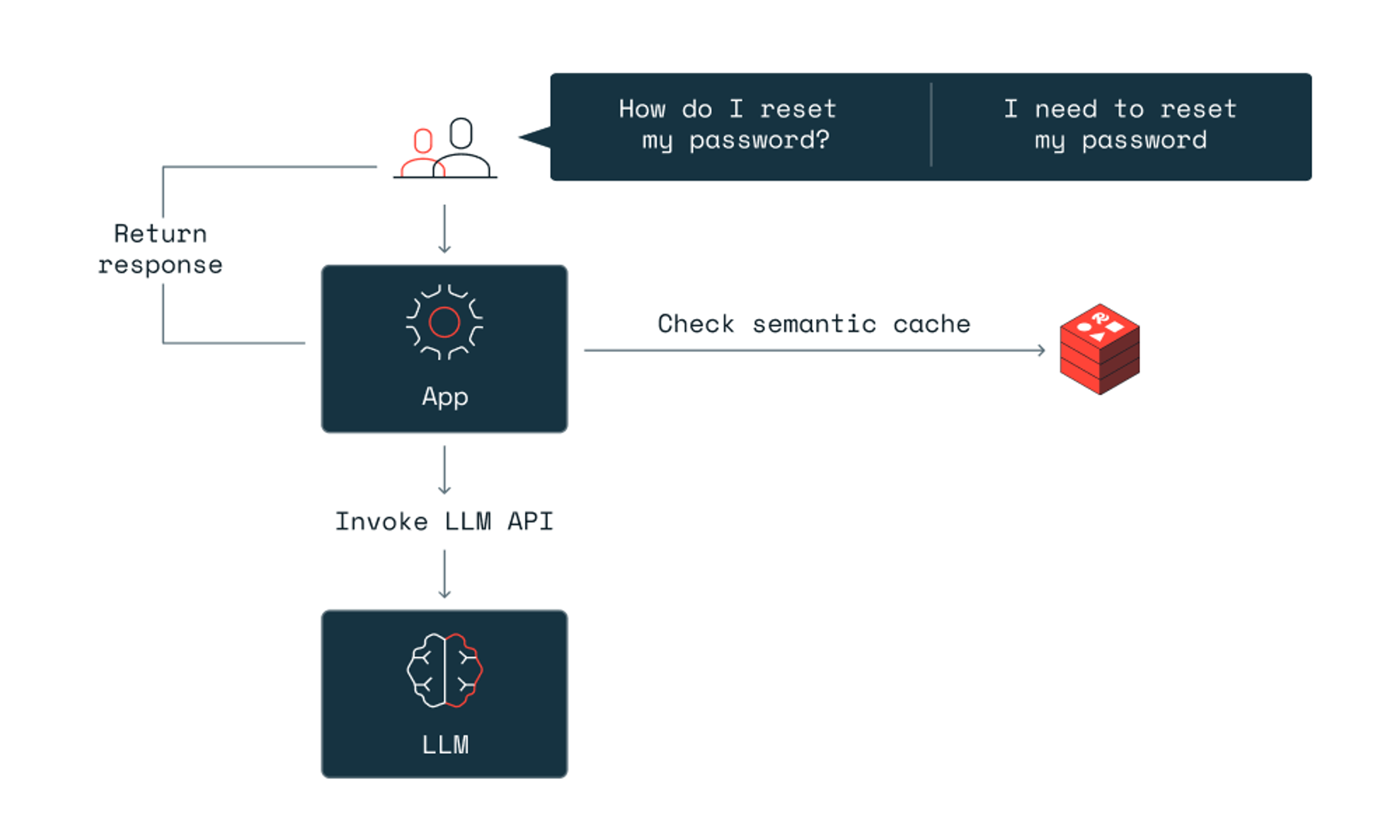

High-Level Flow

- Receive the prompt: an application sends a request to the LLM system.

- Generate an embedding: the prompt is converted into a vector representation that captures its meaning.

- Search the semantic cache: the embedding is compared against stored embeddings from previous prompts.

- Apply a similarity threshold: if the closest match is similar enough (its score meets the configured threshold), the cached response is returned.

- Fall back to the LLM: if no suitable match exists, the request is sent to the model and the new response is cached for future use.
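The five steps can be sketched as a small in-memory cache. This is a sketch under stated assumptions: `embed` and `llm` are injected callables standing in for a real embedding model and LLM client, the linear scan stands in for a vector index, and the word-count `toy_embed` is a deliberately crude placeholder that only matches prompts sharing keywords, not true paraphrases. All names here are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class SemanticCache:
    embed: Callable[[str], Vector]   # step 2: prompt -> embedding
    threshold: float = 0.9           # step 4: minimum similarity for a hit
    entries: List[Tuple[Vector, str]] = field(default_factory=list)

    def lookup(self, vector: Vector) -> Optional[str]:
        # Step 3: find the most similar stored prompt (linear scan for clarity).
        best_score, best_response = -1.0, None
        for stored_vector, response in self.entries:
            score = cosine_similarity(vector, stored_vector)
            if score > best_score:
                best_score, best_response = score, response
        # Step 4: reuse the response only if it clears the threshold.
        return best_response if best_score >= self.threshold else None

    def get_or_generate(self, prompt: str, llm: Callable[[str], str]) -> Tuple[str, bool]:
        """Step 1 entry point. Returns (response, was_cache_hit)."""
        vector = self.embed(prompt)
        cached = self.lookup(vector)
        if cached is not None:
            return cached, True
        # Step 5: fall back to the model and cache the new response.
        response = llm(prompt)
        self.entries.append((vector, response))
        return response, False


# Crude placeholder embedding: word counts over a tiny fixed vocabulary.
VOCAB = ["summarize", "report", "explain", "error"]


def toy_embed(text: str) -> Vector:
    words = text.lower().replace("?", "").split()
    return [float(words.count(term)) for term in VOCAB]


llm_calls: List[str] = []


def fake_llm(prompt: str) -> str:
    llm_calls.append(prompt)
    return f"model answer for: {prompt}"


cache = SemanticCache(embed=toy_embed, threshold=0.9)
first, hit1 = cache.get_or_generate("Summarize this report", fake_llm)
second, hit2 = cache.get_or_generate("Please summarize the report", fake_llm)
third, hit3 = cache.get_or_generate("Explain this error", fake_llm)
print(hit1, hit2, hit3, len(llm_calls))  # False True False 2
```

Injecting `embed` and `llm` keeps the decision logic independent of any particular model provider, which mirrors the idea of a caching layer sitting in front of inference.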

Each request adds only an embedding computation and a similarity search, which are typically fast and inexpensive compared to full inference.

Why This Works Well in Production

- Cache lookups are significantly cheaper than model inference

- Similar user intent naturally creates high cache reuse

- The cache fills automatically, since every miss stores a new response for future reuse

By operating at the semantic level, this approach captures real-world repetition that exact-match caching misses - making it a practical optimization for large-scale LLM systems.

Where Semantic Caching Delivers the Most Value

Semantic caching is most effective in LLM systems where intent repeats frequently, even if phrasing varies.

Internal Knowledge Assistants

Employees often ask the same questions about policies, processes, or documentation in different ways. Semantic caching avoids recomputing identical answers across teams.

Customer Support and Help Desks

Support queries tend to cluster around common issues. Semantic caching reduces latency and inference cost while keeping responses consistent.

Documentation and Q&A Systems

Search-style questions over product or technical docs benefit from high cache reuse, especially as usage scales.

Agentic and Workflow-Based Systems

LLM agents frequently rephrase similar sub-questions during multi-step reasoning. Semantic caching prevents redundant inference across agent runs.

On-Prem and GPU-Constrained Environments

When inference capacity is limited, semantic caching becomes a critical efficiency lever, helping stretch expensive GPU resources further.

In these scenarios, semantic caching significantly improves cost efficiency and response time without requiring changes to application logic.

Key Benefits of Semantic Caching for LLMs

Semantic caching delivers clear, measurable gains in production LLM systems - especially at scale.

Lower Inference Costs

By reusing responses for semantically similar prompts, semantic caching reduces repeated model calls and token consumption, directly lowering compute and API costs.
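A rough way to reason about the savings: every request pays for an embedding and a lookup, but only cache hits avoid the model call. The function below encodes that arithmetic as a sketch; its name is hypothetical, and the per-request costs in the example are placeholders, not measured prices.

```python
def estimated_savings(requests: int, hit_rate: float,
                      llm_cost_per_request: float,
                      lookup_cost_per_request: float) -> float:
    """Net savings from a semantic cache over `requests` requests.

    Hits avoid the LLM call; every request (hit or miss) pays the
    embedding + similarity-search overhead.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    avoided = requests * hit_rate * llm_cost_per_request
    overhead = requests * lookup_cost_per_request
    return avoided - overhead


# Placeholder numbers: 1M requests, 30% hit rate,
# $0.002 per LLM call, $0.00002 per embedding + lookup.
print(round(estimated_savings(1_000_000, 0.30, 0.002, 0.00002), 2))  # 580.0
```

The same arithmetic shows when caching stops paying off: below a hit rate of `lookup_cost_per_request / llm_cost_per_request` (1% with these placeholder numbers), the lookup overhead exceeds what hits save.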

Faster Response Times

Cache hits return responses almost instantly, improving user experience for interactive applications like chatbots and internal tools.

Better Resource Utilization

Fewer redundant inference runs mean GPUs and inference capacity are used more efficiently, which is critical in on-prem or capacity-constrained environments.

More Predictable Performance

Caching smooths traffic spikes and reduces latency variance, making system behavior more stable under load.

No Application Changes Required

Because caching operates below the application layer, teams can realize these benefits without rewriting prompt logic or changing user workflows.

Design Considerations and Trade-offs

While semantic caching is powerful, it must be designed carefully to avoid incorrect or stale responses.

Similarity Threshold Tuning

If the similarity threshold is too low, the cache may return responses that are not fully relevant. If it is too high, cache hit rates drop. Most systems require workload-specific tuning to strike the right balance.
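One way to do this tuning is offline: collect prompt pairs, record their similarity scores, label whether the cached answer would actually have been acceptable, and pick the lowest threshold whose wrong-hit rate is tolerable (a lower threshold means more hits). This is a sketch of that approach, not a prescribed method; the scores below are made-up illustrative values and the function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

# (similarity score, was the cached answer actually acceptable?)
Pair = Tuple[float, bool]


def evaluate(pairs: Sequence[Pair], threshold: float) -> Tuple[float, float]:
    """Return (hit_rate, wrong_hit_rate) if `threshold` were used."""
    hits = [acceptable for score, acceptable in pairs if score >= threshold]
    hit_rate = len(hits) / len(pairs) if pairs else 0.0
    wrong_hit_rate = hits.count(False) / len(hits) if hits else 0.0
    return hit_rate, wrong_hit_rate


def pick_threshold(pairs: Sequence[Pair], candidates: Sequence[float],
                   max_wrong_hit_rate: float) -> Optional[float]:
    """Lowest candidate (hence highest hit rate) with an acceptable wrong-hit rate."""
    for threshold in sorted(candidates):
        _, wrong = evaluate(pairs, threshold)
        if wrong <= max_wrong_hit_rate:
            return threshold
    return None


# Made-up labeled pairs, for illustration only.
pairs: List[Pair] = [(0.97, True), (0.93, True), (0.91, False),
                     (0.88, True), (0.80, False), (0.62, False)]
candidates = [0.85, 0.90, 0.92, 0.95]

print(evaluate(pairs, 0.85))                     # hit rate 4/6, wrong-hit rate 0.25
print(pick_threshold(pairs, candidates, 0.0))    # 0.92
print(pick_threshold(pairs, candidates, 0.25))   # 0.85
```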

Cache Freshness and Invalidation

Some prompts depend on data that changes over time. For these cases, semantic caches need:

- Time-to-live (TTL) policies

- Context-aware invalidation

- Environment-specific rules

Without this, cached responses may become outdated.
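A minimal sketch of the first two mechanisms, assuming each cached response carries its own TTL and a set of tags naming the data it depends on (so a change to that data can invalidate every related entry). Environment-specific rules could be layered on by choosing the `ttl_seconds` passed to `put` per environment. All names are hypothetical, and the entries are keyed by whatever id the similarity search returns.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, Optional, Set


@dataclass
class CacheEntry:
    response: str
    created_at: float
    ttl_seconds: float
    tags: Set[str] = field(default_factory=set)


class FreshnessStore:
    """Cached responses keyed by the entry id a similarity search returns."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self.entries: Dict[str, CacheEntry] = {}

    def put(self, entry_id: str, response: str, ttl_seconds: float,
            tags: Iterable[str] = ()) -> None:
        self.entries[entry_id] = CacheEntry(response, self.clock(), ttl_seconds, set(tags))

    def get(self, entry_id: str) -> Optional[str]:
        entry = self.entries.get(entry_id)
        if entry is None:
            return None
        if self.clock() - entry.created_at >= entry.ttl_seconds:
            del self.entries[entry_id]  # TTL expired: evict and treat as a miss
            return None
        return entry.response

    def invalidate_tag(self, tag: str) -> int:
        """Context-aware invalidation: drop every entry tied to changed data."""
        stale = [eid for eid, entry in self.entries.items() if tag in entry.tags]
        for eid in stale:
            del self.entries[eid]
        return len(stale)


# Usage with a fake clock (seconds).
now = [0.0]
store = FreshnessStore(clock=lambda: now[0])
store.put("entry-1", "answer about the leave policy", ttl_seconds=3600, tags={"hr-policy"})
store.put("entry-2", "answer about current service status", ttl_seconds=60, tags={"status"})
now[0] = 120.0
print(store.get("entry-2"))               # None (older than its 60 s TTL)
print(store.get("entry-1"))               # answer about the leave policy
print(store.invalidate_tag("hr-policy"))  # 1 (e.g. after the policy document changes)
print(store.get("entry-1"))               # None
```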

Observability and Control

Teams need visibility into:

- Cache hit and miss rates

- Impact on latency and cost

- Which workloads benefit most

Semantic caching should be measurable and configurable, not a hidden optimization.
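As a sketch of what "measurable" could mean in practice, the hypothetical recorder below tracks hits, misses, latency, and tokens saved per workload label (an app or team name). It assumes each cached entry stores the token count of the model call that produced it, so a hit can be credited with those avoided tokens; the latency and token figures in the usage are placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class WorkloadStats:
    hits: int = 0
    misses: int = 0
    tokens_saved: int = 0
    hit_latency_ms: float = 0.0
    miss_latency_ms: float = 0.0

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

    def avg_latency_ms(self, hit: bool) -> float:
        count = self.hits if hit else self.misses
        total = self.hit_latency_ms if hit else self.miss_latency_ms
        return total / count if count else 0.0


class CacheMetrics:
    def __init__(self) -> None:
        self.by_workload: Dict[str, WorkloadStats] = defaultdict(WorkloadStats)

    def record(self, workload: str, hit: bool, latency_ms: float, tokens: int = 0) -> None:
        stats = self.by_workload[workload]
        if hit:
            stats.hits += 1
            stats.hit_latency_ms += latency_ms
            stats.tokens_saved += tokens  # tokens the original model call consumed
        else:
            stats.misses += 1
            stats.miss_latency_ms += latency_ms

    def top_workloads(self) -> List[Tuple[str, float, int]]:
        """(workload, hit_rate, tokens_saved), most tokens saved first."""
        rows = [(w, s.hit_rate, s.tokens_saved) for w, s in self.by_workload.items()]
        return sorted(rows, key=lambda row: row[2], reverse=True)


metrics = CacheMetrics()
metrics.record("support-bot", hit=True, latency_ms=6.0, tokens=850)
metrics.record("support-bot", hit=False, latency_ms=1400.0)
metrics.record("docs-qa", hit=False, latency_ms=1100.0)
print(metrics.top_workloads())  # [('support-bot', 0.5, 850), ('docs-qa', 0.0, 0)]
```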

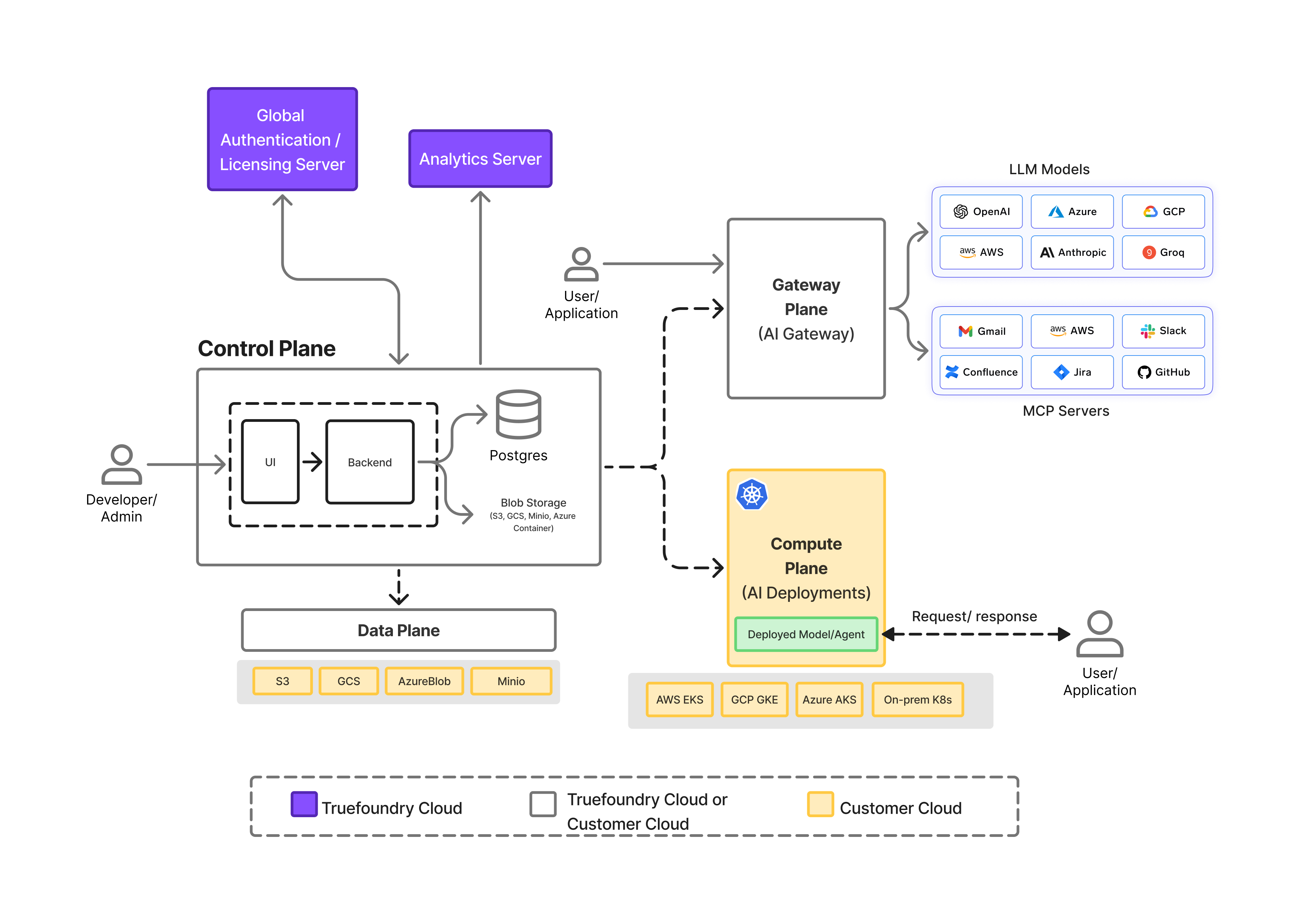

Semantic Caching in the TrueFoundry LLM Gateway

In production environments, semantic caching delivers the most value when it is implemented at the gateway layer, not embedded within individual applications.

The TrueFoundry LLM Gateway integrates semantic caching as a first-class, centralized capability, ensuring that all LLM traffic benefits from caching without requiring changes to application logic.

With semantic caching built into the gateway, TrueFoundry enables:

- Shared semantic cache across teams and services, improving cache hit rates as usage scales

- Centralized control over similarity thresholds and TTLs, applied consistently across environments

- Unified observability, linking cache hits directly to cost savings and latency improvements

- Model-agnostic optimization, working seamlessly across self-hosted, fine-tuned, or external models

Because the cache operates at the gateway level, applications remain fully decoupled from caching logic. Teams can adjust cache behavior, invalidate entries, or refine policies centrally without touching application code.

As part of the broader TrueFoundry platform, semantic caching in the LLM Gateway fits naturally alongside routing, governance, and observability, turning caching from an ad-hoc optimization into a managed infrastructure capability.

How TrueFoundry Implements Semantic Caching

Semantic caching works best when it’s centralized and policy-driven, so every application benefits without duplicating logic. In TrueFoundry, semantic caching is implemented as part of the LLM Gateway layer, sitting directly in the request path before model inference.

Where it sits in the request flow

When an application sends a request to an LLM through the TrueFoundry LLM Gateway:

- The gateway generates (or receives) an embedding for the incoming prompt.

- It performs a similarity lookup against the semantic cache (backed by a vector index).

- If the best match crosses the configured similarity threshold, the gateway returns the cached response immediately.

- If not, the request is routed to the selected model, and the new response is cached for future reuse.

This means semantic caching becomes a default optimization layer for every LLM consumer behind the gateway.

Centralized controls

Because caching is gateway-managed, TrueFoundry lets teams define consistent behavior across services:

- Similarity thresholds (tuned per workload)

- TTL / freshness policies (to avoid stale answers)

- Scope controls (cache per app/team/env vs shared across apps)

- Opt-in / opt-out for specific routes or use-cases
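To illustrate how these controls might combine, here is a hypothetical policy-resolution sketch. It is not TrueFoundry's configuration format or API; the field names, route names, and default values are assumptions. Each route resolves to a policy, and the policy's scope determines which cache namespace similarity lookups are restricted to.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class CachePolicy:
    enabled: bool = True      # opt-in / opt-out
    threshold: float = 0.92   # similarity threshold, tuned per workload
    ttl_seconds: int = 3600   # freshness policy
    scope: str = "team"       # "app", "team", "env", or "shared"


DEFAULT_POLICY = CachePolicy()

# Hypothetical per-route overrides, managed centrally rather than in app code.
ROUTE_POLICIES: Dict[str, CachePolicy] = {
    "support-bot": CachePolicy(threshold=0.90, ttl_seconds=86_400, scope="shared"),
    "live-status": CachePolicy(enabled=False),
}


def resolve_policy(route: str) -> CachePolicy:
    return ROUTE_POLICIES.get(route, DEFAULT_POLICY)


def cache_namespace(policy: CachePolicy, app: str, team: str, env: str) -> Optional[str]:
    """Namespace that similarity lookups are restricted to; None disables caching."""
    if not policy.enabled:
        return None
    scopes = {"app": f"app:{app}", "team": f"team:{team}",
              "env": f"env:{env}", "shared": "shared"}
    if policy.scope not in scopes:
        raise ValueError(f"unknown cache scope: {policy.scope}")
    return scopes[policy.scope]


print(cache_namespace(resolve_policy("support-bot"), "helpdesk", "cx", "prod"))    # shared
print(cache_namespace(resolve_policy("live-status"), "statuspage", "sre", "prod"))  # None
print(cache_namespace(resolve_policy("docs-qa"), "docs", "devrel", "prod"))         # team:devrel
```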

This prevents the common problem where each application implements its own caching logic and gets inconsistent results.

Built for production: observability and governance

TrueFoundry’s LLM Gateway ties semantic caching into platform-level visibility so teams can measure impact and stay compliant:

- Cache hit/miss rates and latency impact

- Token and inference savings attribution by app/team

- Audit-friendly request traces (with safe logging controls)

This makes semantic caching an operational capability you can manage, not a black box.

Why gateway-level semantic caching matters

Implementing semantic caching at the gateway means:

- Higher cache reuse across multiple apps

- Faster rollout and policy updates

- No application code changes

- Consistent governance and observability

TrueFoundry’s approach turns semantic caching from an ad-hoc optimization into a managed part of your LLM infrastructure, alongside routing, access control, and monitoring.

Conclusion

As LLM usage scales in production, repeated inference quickly becomes one of the largest cost and latency drivers. Traditional caching is not sufficient for natural language workloads, where intent repeats far more often than exact phrasing.

Semantic caching addresses this gap by reusing responses based on meaning, making it a practical optimization for real-world LLM systems. When implemented centrally through the TrueFoundry LLM Gateway, semantic caching becomes more than a performance tweak: it becomes a governed, observable, and reusable infrastructure capability.

By combining semantic caching with routing, access control, and observability at the gateway layer, teams can reduce inference costs, improve response times, and scale LLM applications without adding complexity to application code.

For enterprises building production-grade AI systems, semantic caching is no longer optional; it is a key part of running LLMs efficiently and predictably at scale.
