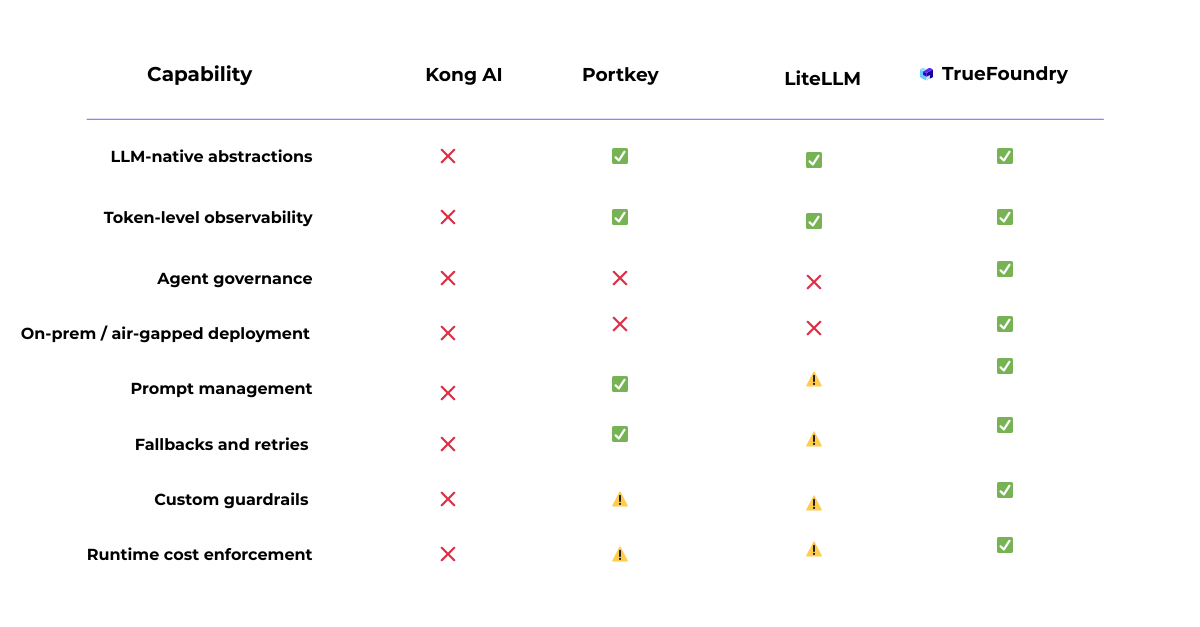

A Definitive Guide to AI Gateways in 2026: Competitive Landscape Comparison

In 2026, enterprises can no longer afford to stretch an LLM Gateway into a makeshift AI Gateway. AI is becoming more deeply embedded in customer-facing workflows, which makes a dedicated gateway layer non-negotiable for reliable AI-powered applications. Enterprise AI infrastructure is typically multi-model, multi-team, and multi-cloud, and that combination complicates compliance and cost accountability.

Over the last year, we’ve seen three broad categories emerge to tackle the problem of governance and resilience of GenAI:

- AI & LLM Gateways (Portkey, LiteLLM, Kong AI)

- Cloud-Native AI Platforms (AWS Bedrock, SageMaker, Azure AI Foundry)

- Data & ML Platforms (Databricks)

Each category optimizes for a different phase of AI adoption. Problems arise when tools optimized for one phase are stretched to handle another.

In this post, we bring our competitive research together into one landscape view, explaining where each platform fits, where it breaks down, and what enterprises should consider when choosing the vendor that best fits their requirements.

1. Kong AI: Traditional API Gateway Adapted for AI

Kong is an API gateway, often used in Kubernetes‑based microservice architectures. Kong AI builds on this foundation by introducing plugins and integrations designed to route traffic to large language models.

What Kong AI Does Well

- Enterprise-grade API security and rate limiting

- Mature Kubernetes ingress and plugin ecosystem

- Familiar to platform teams already using Kong

Where Kong AI Breaks Down

- Treats LLM calls as opaque HTTP requests

- No token-level cost or usage visibility

- No understanding of prompts, agents, or tools

- No model-aware routing or fallback logic

- No AI governance primitives (prompt lifecycle, agent tracing)

As AI usage grows, these gaps become more visible. Cost attribution, model selection strategies, and AI‑specific governance must be handled outside the gateway, often inside application code.
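To illustrate what "handled inside application code" tends to look like, here is a minimal sketch of per-team cost attribution done by the application itself. The model names, prices, and the `Usage` record are hypothetical placeholders, not values from any provider or from Kong.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K = {
    "model-a": {"input": 0.003, "output": 0.015},
    "model-b": {"input": 0.0005, "output": 0.0015},
}


@dataclass
class Usage:
    """One LLM call as the application would have to record it."""
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(usage: Usage) -> float:
    """Cost of a single call, priced separately for input and output tokens."""
    price = PRICE_PER_1K[usage.model]
    return (usage.input_tokens / 1000) * price["input"] + (
        usage.output_tokens / 1000
    ) * price["output"]


def cost_by_team(records) -> dict:
    """Sum call costs per team."""
    totals = defaultdict(float)
    for usage in records:
        totals[usage.team] += request_cost(usage)
    return dict(totals)


records = [
    Usage("search", "model-a", 2000, 1000),
    Usage("search", "model-b", 4000, 2000),
    Usage("support", "model-a", 1000, 0),
]
totals = cost_by_team(records)
print(totals)
```

Every service that calls a model has to emit records like these consistently, which is the bookkeeping a token-aware gateway would otherwise centralize.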

Bottom line: Kong AI is effective as an API gateway, but AI remains a secondary concern rather than a native abstraction.

2. Portkey: Application-Level LLM Gateway

Portkey is an AI gateway designed specifically for LLM applications. Instead of treating AI requests as generic HTTP calls, Portkey introduces prompt‑ and model‑aware routing and observability.

What Portkey Does Well

- Prompt- and model-aware routing

- Token-level observability and cost tracking

- Built-in retries, fallbacks, and caching

- Excellent developer experience for LLM apps
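The retry and fallback behavior listed above can be sketched generically as follows. This is an illustration of the pattern only, not Portkey's API: `call_with_fallback`, `ProviderError`, and the two stand-in providers are hypothetical names.

```python
import time


class ProviderError(Exception):
    """Raised by a provider callable when a request fails."""


def call_with_fallback(prompt, providers, max_retries=2, backoff_s=0.0):
    """Try each (name, callable) in order, retrying before falling back."""
    errors = []
    for name, call in providers:
        for attempt in range(max_retries + 1):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                # Exponential backoff between attempts (zero by default here).
                time.sleep(backoff_s * (2 ** attempt))
    raise ProviderError("all providers failed: " + "; ".join(errors))


def flaky_primary(prompt):
    # Stand-in for a provider that is currently rate limited.
    raise ProviderError("rate limited")


def stable_secondary(prompt):
    # Stand-in for a healthy provider.
    return f"echo: {prompt}"


used, reply = call_with_fallback(
    "hello",
    [("primary", flaky_primary), ("secondary", stable_secondary)],
)
print(used, reply)
```

A gateway that implements this once keeps the ordering and retry budget out of each application's code.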

Where Portkey Falls Short

Portkey’s design is intentionally application‑focused, which introduces constraints at enterprise scale:

- Application-scoped, not organization-wide

- Limited environment isolation (dev vs prod)

- No control over runtime execution or infrastructure

- Weak cost attribution across teams and environments

- Not designed for on-prem or air-gapped deployments

As AI becomes a shared internal capability rather than a single application feature, these limitations often require additional infrastructure layers.

Best for: Single-team LLM applications moving into early production.

3. LiteLLM: Developer-First Open-Source Gateway

LiteLLM is an open‑source LLM gateway that provides a unified, OpenAI‑compatible API for accessing dozens of model providers.

What LiteLLM Does Well

- OpenAI-compatible API for 100+ models

- Open source and easy to self-host

- Strong spend tracking and rate limiting

- Popular for internal developer enablement

Where LiteLLM Falls Short

- YAML-based configuration doesn’t scale to enterprises

- No native UI for governance or experimentation

- Limited observability without third-party tools

- No SLAs, audit trails, or enterprise support in the self-hosted open-source setup

Bottom line: LiteLLM is an effective entry point but requires significant augmentation for regulated or multi‑team environments.

4. AWS Bedrock: Serverless Model APIs

AWS Bedrock offers managed, serverless access to foundation models from providers such as Anthropic and Amazon. It abstracts infrastructure entirely and, in its default on-demand mode, bills on token usage.

What AWS Bedrock Does Well

- Instant access to proprietary models (Claude, Titan)

- Zero infrastructure management

- Scales to zero for spiky workloads

Hidden Trade-Offs of AWS Bedrock

- Linear token-based pricing → very expensive at scale

- Strict rate limits unless you buy Provisioned Throughput

- Provisioned Throughput often costs $20k–$40k+/month

- No ownership of models or inference stack

These trade‑offs often catch teams by surprise as workloads move from experimentation to sustained production use.
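A rough break-even calculation shows why the surprise happens. The sketch below compares linear on-demand pricing with a flat monthly commitment. The per-token price is a hypothetical placeholder, and the $20,000 figure is simply the lower end of the range quoted above, not an official AWS price.

```python
def on_demand_monthly_cost(tokens_per_month: float, price_per_million: float) -> float:
    """Linear pricing: cost grows in direct proportion to token volume."""
    return tokens_per_month / 1_000_000 * price_per_million


def break_even_tokens(flat_monthly_cost: float, price_per_million: float) -> float:
    """Monthly token volume at which on-demand spend equals the flat commitment."""
    return flat_monthly_cost / price_per_million * 1_000_000


PRICE_PER_MILLION = 10.0      # hypothetical blended USD price per 1M tokens
PROVISIONED_MONTHLY = 20_000  # lower end of the range quoted in the text

threshold = break_even_tokens(PROVISIONED_MONTHLY, PRICE_PER_MILLION)
pilot_cost = on_demand_monthly_cost(500_000_000, PRICE_PER_MILLION)

print(f"break-even volume: {threshold:,.0f} tokens/month")
print(f"on-demand cost at 500M tokens/month: ${pilot_cost:,.0f}")
```

Under these assumed numbers, a pilot at 500M tokens per month sits well below the commitment, but the bill scales linearly from there and crosses it at 2B tokens per month, before accounting for rate limits.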

Bottom line: Bedrock optimizes for speed and simplicity, not long‑term cost efficiency or control.

5. AWS SageMaker: Managed ML Infrastructure

SageMaker provides a comprehensive suite for training, tuning, and deploying machine learning models. Unlike Bedrock, it exposes infrastructure choices directly to users.

What AWS SageMaker Does Well

- Full control over training and fine-tuning

- Runs inside private VPCs

- Supports any custom model

Drawbacks of AWS SageMaker

- High DevOps and MLOps overhead

- Pay for instances 24/7 (idle cost is real)

- Complex debugging and scaling

- Requires dedicated MLOps teams

Bottom line: SageMaker offers control but at the cost of operational simplicity.

6. Databricks: The Lakehouse ML Platform

Databricks approaches AI from a data‑first perspective, integrating ML and GenAI capabilities into its Lakehouse architecture.

What Databricks Does Well

- Best-in-class data engineering and Spark workflows

- Collaborative notebooks

- Strong Mosaic AI training story

Where Databricks Falls Short

- DBU + cloud compute = double tax

- Inference feels bolted-on

- Strong lock-in via Delta Lake + Photon

- Not optimized for real-time GenAI serving

Bottom line: Databricks excels at data engineering, not AI serving.

The Common Thread: Gateways Without Governance

Across Kong, Portkey, LiteLLM, and even Bedrock, the same issue emerges: most tools manage requests, not AI systems.

They answer questions like:

- How do I route this call?

- Which provider is faster?

They struggle with:

- Who owns this model in production?

- How do we enforce org‑wide policies?

- How do we prevent cost incidents across teams?

- How do we isolate regulated workloads?

These are infrastructure‑level concerns.

Where TrueFoundry Fits: An AI Control Plane

TrueFoundry occupies a different layer in the stack. Instead of focusing solely on API routing or managed services, it treats AI workloads—models, agents, services, and jobs—as first‑class infrastructure objects. This shifts the responsibility from application code to the platform itself.

The TrueFoundry AI Gateway is built with the following core principles:

- Lifecycle over requests: Deployment, execution, scaling, and monitoring are governed centrally

- Environment‑based controls: Policies attach to dev, staging, and production

- Infrastructure awareness: GPUs, concurrency, and runtime behavior are visible and controlled

- Deployment flexibility: Cloud, VPC, on‑prem, and air‑gapped

The AI Gateway is therefore one component of a larger system rather than a standalone proxy, so the same controls keep applying as enterprises add AI use cases.

When Does TrueFoundry’s AI Gateway Make Sense?

The TrueFoundry AI Gateway becomes critical when AI usage moves beyond isolated applications and becomes a shared, production-critical capability. At that stage, challenges are often less about individual model calls and more about operational consistency across teams and environments.

Here’s how TrueFoundry's AI Gateway differs from other solutions:

1. Managing AI Systems Rather Than Individual Requests

Many AI tools focus on request-level concerns such as routing, retries, and basic observability. This is usually sufficient in early stages.

As usage expands, however, models and agents begin to behave more like long-lived services. Teams need clearer ownership, lifecycle management, and operational boundaries. TrueFoundry is designed to manage AI workloads—models, services, and jobs—as infrastructure components with defined deployment and runtime characteristics.

2. Environment-Level Governance

In many stacks, access controls and usage policies are configured at the application or SDK level. Over time, this can lead to inconsistency as the number of services grows.

TrueFoundry applies controls at the environment level, separating development, staging, and production by default. Policies defined at this layer apply uniformly to all workloads deployed within an environment, reducing reliance on per-application configuration.
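As a conceptual sketch of environment-level governance, the example below attaches a policy to each environment and resolves it from the workload's environment instead of from per-application settings. The `Policy` fields, model names, and limits are hypothetical and do not represent TrueFoundry's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy attached to an environment, not to an application."""
    allowed_models: frozenset
    max_requests_per_minute: int
    log_prompts: bool


ENV_POLICIES = {
    "dev": Policy(frozenset({"small-model", "large-model"}), 60, True),
    "staging": Policy(frozenset({"small-model", "large-model"}), 300, True),
    "prod": Policy(frozenset({"large-model"}), 1000, False),
}


@dataclass(frozen=True)
class Workload:
    name: str
    environment: str


def policy_for(workload: Workload) -> Policy:
    """Every workload inherits the policy of the environment it runs in."""
    return ENV_POLICIES[workload.environment]


def is_allowed(workload: Workload, model: str) -> bool:
    return model in policy_for(workload).allowed_models


chatbot_prod = Workload("chatbot", "prod")
chatbot_dev = Workload("chatbot", "dev")
print(is_allowed(chatbot_prod, "small-model"), is_allowed(chatbot_dev, "small-model"))
```

The same workload gets different answers in `dev` and `prod` without any change to its own configuration, which is the property the section describes.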

3. Cost and Resource Controls at Runtime

AI costs often increase due to concurrency, retries, or background workloads rather than individual requests. TrueFoundry addresses this by enforcing limits on concurrency, throughput, and resource usage during execution.

This allows organizations to manage shared infrastructure more predictably as usage scales.
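One common way to enforce a concurrency limit at execution time is a semaphore around each model call. The sketch below uses Python's `asyncio.Semaphore` to show the idea. It is not TrueFoundry's implementation, and `fake_model_call` is a stand-in for a real inference request.

```python
import asyncio


async def run_limited(tasks, max_concurrency):
    """Run zero-argument coroutine factories with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)
    in_flight = 0
    peak = 0

    async def guarded(task):
        nonlocal in_flight, peak
        async with semaphore:
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await task()
            finally:
                in_flight -= 1

    results = await asyncio.gather(*(guarded(t) for t in tasks))
    return results, peak


async def fake_model_call(i):
    # Stand-in for an inference request that takes some time.
    await asyncio.sleep(0.01)
    return i * 2


def make_task(i):
    # Bind i now so each factory calls the model with its own argument.
    return lambda: fake_model_call(i)


results, peak = asyncio.run(
    run_limited([make_task(i) for i in range(10)], max_concurrency=3)
)
print(results, peak)
```

Ten calls are submitted, but the observed peak never exceeds the limit of three, so a burst of retries or background jobs cannot multiply load on shared capacity.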

4. Infrastructure-Aware Observability

While token-level metrics are useful, they do not fully explain system behavior in production. TrueFoundry correlates request-level signals with infrastructure metrics such as CPU/GPU utilization and autoscaling behavior, helping teams understand performance and cost drivers in context.
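The correlation described here can be sketched as a time-based join between request logs and infrastructure samples. All data below is made up for illustration, and the field names and thresholds are assumptions, not TrueFoundry's schema.

```python
from bisect import bisect_right

# Made-up samples: (timestamp_seconds, gpu_utilization_percent), sorted by time.
gpu_samples = [(0, 35.0), (10, 60.0), (20, 95.0), (30, 97.0)]

# Made-up request log: (timestamp_seconds, latency_ms, total_tokens).
requests = [(3, 120, 500), (12, 140, 520), (24, 900, 510), (31, 950, 495)]


def gpu_at(ts, samples):
    """Most recent GPU utilization sample at or before ts, or None if there is none."""
    times = [t for t, _ in samples]
    idx = bisect_right(times, ts) - 1
    return samples[idx][1] if idx >= 0 else None


def annotate(request_log, samples):
    """Attach the GPU utilization in effect when each request arrived."""
    return [
        {"ts": ts, "latency_ms": lat, "tokens": tok, "gpu_util": gpu_at(ts, samples)}
        for ts, lat, tok in request_log
    ]


def slow_under_load(rows, latency_ms=500, gpu_util=90.0):
    """Requests that were slow while the GPU was near saturation."""
    return [
        r
        for r in rows
        if r["latency_ms"] >= latency_ms
        and r["gpu_util"] is not None
        and r["gpu_util"] >= gpu_util
    ]


rows = annotate(requests, gpu_samples)
slow = slow_under_load(rows)
print([r["ts"] for r in slow])
```

In this toy data all four requests use roughly the same number of tokens, so token metrics alone would not explain why the last two are slow. The GPU utilization column does.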

5. Deployment Flexibility

Some organizations operate under constraints that require private networking, on-prem deployments, or strict data residency. TrueFoundry is designed to run in these environments, allowing AI workloads to be governed using the same infrastructure standards applied elsewhere in the organization.

Conclusion

The current AI platform landscape reflects the speed at which generative AI has evolved. Many tools address real problems—routing, model access, observability, or training—but they do so from different starting points. As a result, no single category naturally covers the full set of operational requirements that emerge once AI becomes production-critical.

TrueFoundry offers the most value when AI workloads need to be operated with the same discipline as other production systems—across environments, under shared policies, and with predictable resource behavior.

Understanding where each platform fits, and where its design assumptions begin to break down, is essential. The right choice depends less on individual features and more on how an organization expects its AI usage to evolve over time.
