6 Best LLM Gateways in 2025

AI in 2025 is moving fast. Too fast. Models get faster every quarter. New providers pop up weekly. Prices swing like crypto. And if you’re not careful, your AI stack turns into a fragile, expensive mess.

That’s why the smartest teams aren’t connecting to models directly anymore; they’re running everything through an LLM gateway. Think of it as your AI command center: one layer that unifies providers, cuts latency through caching and smart routing, enforces compliance, and gives you the observability you need to sleep at night.

Here’s the truth: the gateway you choose will decide how fast you can ship, how reliable your systems are, and how much you end up paying. Pick right, and you move with the speed of the frontier. Pick wrong, and you’ll be stuck firefighting.

So the real question isn’t “Do I need an LLM gateway?” It’s “Which one will carry me through 2025?”

Why Do You Need an LLM Gateway?

Building with AI in 2025 is no longer about picking the single best model. The reality is messy: different providers excel in different areas, pricing models shift constantly, and no one LLM dominates every use case. What works for chat today might fall short for code generation tomorrow. This is where an LLM gateway makes all the difference.

An LLM gateway acts as a smart middle layer between your applications and the rapidly changing world of model providers. Instead of wiring your system directly to each API and dealing with custom integrations, performance quirks, or vendor lock-in, you connect to one gateway. From there, you gain flexibility, reliability, and control.

Performance improves because the gateway can automatically route requests to the fastest or most cost-effective option. Observability comes built in with real-time insights into costs, latency, and quality, often powered by integrated LLM observability tools. Compliance becomes easier since data governance and security standards are enforced consistently. And most importantly, gateways offer future-proofing. When a new model or provider arrives, you can adopt it with a configuration change instead of rebuilding your stack.
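To make the routing idea concrete, here is a minimal Python sketch of cost- or latency-based provider selection. The provider names, prices, and latencies are made-up placeholders, and `ProviderStats` and `pick_provider` are hypothetical helpers, not any gateway's actual API.

```python
from dataclasses import dataclass


@dataclass
class ProviderStats:
    """Hypothetical metrics a gateway might keep for each provider."""
    name: str
    usd_per_1k_tokens: float  # illustrative blended price
    p50_latency_ms: float     # recent median latency
    healthy: bool = True      # e.g. flipped to False after repeated errors


def pick_provider(providers: list[ProviderStats], strategy: str = "cost") -> ProviderStats:
    """Return the best healthy provider for the strategy ("cost" or "latency")."""
    candidates = [p for p in providers if p.healthy]
    if not candidates:
        raise RuntimeError("no healthy provider available")
    if strategy == "cost":
        # Cheapest first; break ties on latency.
        return min(candidates, key=lambda p: (p.usd_per_1k_tokens, p.p50_latency_ms))
    if strategy == "latency":
        # Fastest first; break ties on price.
        return min(candidates, key=lambda p: (p.p50_latency_ms, p.usd_per_1k_tokens))
    raise ValueError(f"unknown strategy: {strategy!r}")


providers = [
    ProviderStats("provider-a", usd_per_1k_tokens=0.50, p50_latency_ms=900),
    ProviderStats("provider-b", usd_per_1k_tokens=0.15, p50_latency_ms=1400),
    ProviderStats("provider-c", usd_per_1k_tokens=0.30, p50_latency_ms=450, healthy=False),
]
print(pick_provider(providers, "cost").name)     # provider-b
print(pick_provider(providers, "latency").name)  # provider-a (provider-c is unhealthy)
```

Real gateways feed these numbers from live telemetry rather than a static list, but the selection step is essentially this kind of filtered minimum.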

In short, an LLM gateway keeps your AI strategy agile. It lets you experiment without friction, scale without bottlenecks, and optimize costs without compromising performance.

As AI adoption accelerates, the real winners will not only be those who use LLMs but also those who manage them wisely. The gateway is where that wisdom lives.

How to Choose the Best LLM Gateway

Not all gateways are created equal. Choosing the right one is less about fancy features and more about how well it fits your team’s goals, scale, and workflow. Think of it as picking the foundation for your AI stack: the wrong choice will slow you down, while the right one will quietly power everything you build.

The first thing to consider is performance. A good gateway should be able to route requests intelligently, balancing speed, reliability, and cost without forcing you to micromanage. Latency and uptime matter, especially when your users are waiting on responses in real time.
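Reliability usually comes from fallbacks. The sketch below assumes hypothetical provider callables that raise a `ProviderError` on failure; it tries each provider in order, with a fixed number of retries, and returns the first successful response.

```python
from typing import Callable


class ProviderError(Exception):
    """Raised by a (hypothetical) provider client when a call fails."""


def call_with_fallback(
    prompt: str,
    chain: list[tuple[str, Callable[[str], str]]],
    retries_per_provider: int = 1,
) -> tuple[str, str]:
    """Try providers in order; return (provider_name, response) from the first success."""
    errors = []
    for name, call in chain:
        for attempt in range(1 + retries_per_provider):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def flaky_primary(prompt: str) -> str:
    raise ProviderError("503 Service Unavailable")


def backup(prompt: str) -> str:
    return f"echo: {prompt}"


print(call_with_fallback("hello", [("primary", flaky_primary), ("backup", backup)]))
# ('backup', 'echo: hello')
```

Production gateways typically add exponential backoff between retries and fall back only on retryable errors (timeouts, rate limits, 5xx responses); this sketch omits both for brevity.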

Next comes integration and flexibility. Your gateway should support multiple providers, open APIs, and easy switching. If it locks you into one ecosystem, you are back where you started: vendor dependency.
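One way to keep provider switching cheap is an adapter layer: application code calls a single function with a `provider/model` route string, and each provider sits behind the same small interface. The sketch below assumes that route format and uses stub adapters in place of real SDK calls; every name in it is hypothetical.

```python
from typing import Protocol


class ChatProvider(Protocol):
    """The one interface every provider adapter implements (hypothetical)."""

    def complete(self, model: str, prompt: str) -> str: ...


class StubAdapter:
    """Stand-in adapter; a real one would translate to the provider's wire format."""

    def __init__(self, provider: str) -> None:
        self.provider = provider

    def complete(self, model: str, prompt: str) -> str:
        return f"[{self.provider}:{model}] {prompt}"


# Adding a provider means registering one more adapter here.
REGISTRY: dict[str, ChatProvider] = {
    "provider-a": StubAdapter("provider-a"),
    "provider-b": StubAdapter("provider-b"),
}


def complete(route: str, prompt: str) -> str:
    """Dispatch a 'provider/model' route; calling code never changes."""
    provider, model = route.split("/", 1)
    return REGISTRY[provider].complete(model, prompt)


print(complete("provider-a/model-x", "Hello"))  # [provider-a:model-x] Hello
print(complete("provider-b/model-y", "Hello"))  # [provider-b:model-y] Hello
```

Moving a feature from one provider to another then means changing the route string in configuration, not the calling code.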

Observability and monitoring are just as critical. Look for dashboards, cost tracking, and usage insights. Without them, you are flying blind and will struggle to optimize or justify spend.
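Cost tracking mostly comes down to multiplying token counts by per-model prices and aggregating by owner. A minimal sketch, assuming the gateway already records input and output tokens per request; the model names and prices are illustrative placeholders, not real rates.

```python
from collections import defaultdict
from dataclasses import dataclass

# Illustrative prices in USD per 1M tokens; not any provider's real rates.
PRICES = {
    "model-x": {"input": 2.50, "output": 10.00},
    "model-y": {"input": 0.15, "output": 0.60},
}


@dataclass
class UsageRecord:
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(r: UsageRecord) -> float:
    """Cost of one request: tokens times per-token price, input and output priced separately."""
    p = PRICES[r.model]
    return (r.input_tokens * p["input"] + r.output_tokens * p["output"]) / 1_000_000


def spend_by_team(records: list[UsageRecord]) -> dict[str, float]:
    """Sum request costs per team."""
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        totals[r.team] += request_cost(r)
    return dict(totals)


records = [
    UsageRecord("search", "model-x", input_tokens=1_200, output_tokens=300),
    UsageRecord("search", "model-y", input_tokens=10_000, output_tokens=2_000),
    UsageRecord("support", "model-y", input_tokens=4_000, output_tokens=1_000),
]
print({team: round(usd, 6) for team, usd in spend_by_team(records).items()})
# {'search': 0.0087, 'support': 0.0012}
```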

Security and compliance cannot be an afterthought. Whether it is SOC 2, GDPR, or enterprise-grade encryption, the gateway should enforce consistent policies across every provider.
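Because every request passes through the gateway, a policy can be applied once instead of per provider. The sketch below assumes a hypothetical rule set: an allow-list mapping data classifications to providers, plus simple regex redaction of email addresses and card-like numbers. The regexes are deliberately naive; real deployments typically rely on dedicated PII-detection services.

```python
import re

# Deliberately naive patterns for illustration; real systems use dedicated PII detection.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional separators

# Hypothetical policy: which providers may receive each data classification.
ALLOWED_PROVIDERS = {
    "internal": {"self-hosted"},
    "public": {"self-hosted", "provider-a", "provider-b"},
}


def enforce_policy(prompt: str, provider: str, classification: str) -> str:
    """Apply the same checks and redactions no matter which provider is targeted."""
    if provider not in ALLOWED_PROVIDERS.get(classification, set()):
        raise PermissionError(f"{provider} may not receive {classification} data")
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return CARD.sub("[CARD]", prompt)


print(enforce_policy("Email jane.doe@example.com or card 4111 1111 1111 1111",
                     "provider-a", "public"))
# Email [EMAIL] or card [CARD]
```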

To sum it up, here are the key factors:

- Performance: latency, uptime, and routing efficiency

- Flexibility: multi-provider support and easy integration

- Observability: clear cost and usage insights

- Security: compliance and data protection

The best LLM gateway is the one that disappears into the background and lets you focus on building.

6 Best LLM Gateways in 2025

The market for LLM gateways is heating up fast. New players are entering, established ones are evolving, and each promises to be the smartest layer between you and the world of models. But not all of them deliver the same value. Some focus on speed, others on cost control, and a few lean heavily into enterprise compliance.

The right gateway for you depends on your use case, whether you are scaling a startup product, running enterprise workloads, or experimenting with cutting-edge models. Below are six of the most notable gateways in 2025, each bringing a different flavor of performance, flexibility, and control.

1. TrueFoundry

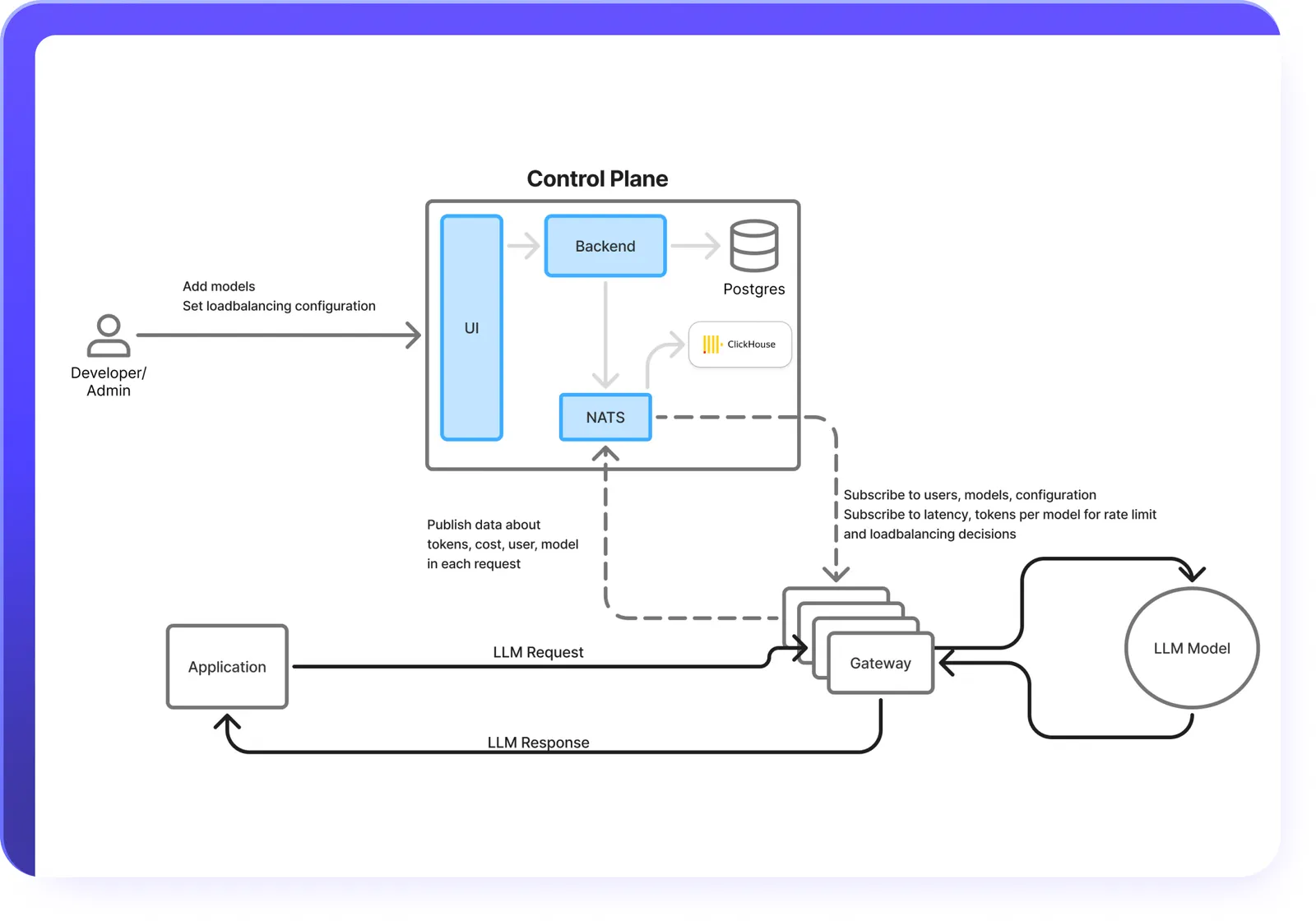

TrueFoundry stands out as one of the leading LLM gateways in 2025, designed for enterprises that need production-ready AI without the usual complexity. It combines orchestration, governance, and scalability into a single platform, making it easier to deploy, manage, and optimize LLM workflows at scale.

Key Features

Intelligent Orchestration: The LLM Gateway at TrueFoundry coordinates multi-step agent workflows, handling memory, tool integration, and reasoning across tasks. This ensures agents can plan, act, and adapt seamlessly while giving teams full visibility and control.

Tools and Prompt Lifecycle Management: With its MCP and Agents Registry, TrueFoundry offers a centralized library of APIs and tools with schema validation and access controls. Prompt Lifecycle Management adds versioning, testing, and monitoring, enabling enterprises to maintain consistent, auditable agent behavior.

Flexible Model Deployment: TrueFoundry supports any LLM or embedding model, with optimized backends like vLLM, TGI, and Triton. It also integrates with frameworks such as LangGraph, CrewAI, and AutoGen, allowing fine-tuning on proprietary data and production-ready deployment of custom agents.

Enterprise-Grade Compliance and Scalability: The platform operates in secure VPC, on-prem, hybrid, or air-gapped environments, meeting SOC 2, HIPAA, and GDPR standards. GPU orchestration, fractional GPU support, and autoscaling ensure cost efficiency, with some enterprises reporting up to 80% higher GPU utilization.

TrueFoundry is a top-tier choice for organizations that want a gateway that balances flexibility, security, and operational efficiency, making it ideal for serious AI deployments.

2. Helicone

Helicone is an open-source AI gateway designed for developers who want a lightweight, high-performance solution to manage multiple LLM providers. Built in Rust and optimized for edge deployments, Helicone offers a unified API that simplifies integration and improves observability.

Key Features

Unified API for Multiple Models: Helicone provides a single API that works across dozens of LLMs, including GPT, Claude, and Gemini, eliminating the need for multiple SDKs or keys.

Intelligent Routing and Failover: The gateway can automatically switch models, optimize for cost, and balance load, ensuring reliable performance across different providers.

Built-in Observability: Developers get real-time monitoring of requests and responses, token usage, latency, and costs through a centralized dashboard.
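A gateway can collect these metrics because it sits on every request path. Here is a minimal sketch of per-request logging and a nearest-rank latency percentile, assuming an in-memory `LOG` list stands in for a real metrics backend. All names are hypothetical; this is not Helicone's API.

```python
import math
import time
from typing import Callable

LOG: list[dict] = []  # stand-in for a real metrics/log backend


def observed(model: str, call: Callable[[str], str], prompt: str) -> str:
    """Run a (hypothetical) model call and record status and latency, as a gateway would per request."""
    start = time.perf_counter()
    status = "ok"
    try:
        return call(prompt)
    except Exception:
        status = "error"
        raise
    finally:
        # The finally block runs on success and failure, so every request is logged.
        LOG.append({
            "model": model,
            "status": status,
            "latency_ms": (time.perf_counter() - start) * 1000,
        })


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


observed("model-x", str.upper, "hello")
latencies = [120, 95, 300, 110, 105, 2000, 130, 98, 101, 115]
print(percentile(latencies, 50), percentile(latencies, 95))  # 110 2000
```

Tail percentiles such as p95 are usually more informative than averages here, since a single slow response can dominate what users experience.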

Custom Rate Limiting: Application-specific rate limits allow precise control over usage and spending.

Edge-Optimized Performance: Helicone is optimized for edge deployments, minimizing latency and delivering very low overhead even under heavy load.

Limitations

Limited Enterprise Features: Helicone lacks advanced role-based access controls, audit logging, and strict policy enforcement, which may be needed for regulated environments.

Basic Integration Support: While it supports multiple providers, its ecosystem of integrations is narrower, with fewer advanced options for complex enterprise setups.

For teams that need additional enterprise features like advanced access control or broader integrations, considering a Helicone alternative can help fill those gaps without compromising developer-friendly simplicity.

3. OpenRouter

OpenRouter is a developer-focused AI gateway that provides access to multiple large language models through a single API. It lets teams switch between models without rewriting integration code, which makes it well suited to teams that want to experiment across many models.

Key Features

Unified API Access: Connects to multiple LLMs from providers like OpenAI, Anthropic, and Google, reducing the complexity of managing multiple SDKs.

Automatic Routing and Fallback: Requests are routed to the best available provider based on price, performance, and availability, with automatic fallback when a provider or model fails.

Transparent Pricing and Billing: Clear per-token pricing and consolidated billing make cost management simple.

Bring Your Own Key (BYOK): Lets you use your own provider API keys for more control over authentication and costs.

Limitations

Rate Limits on Free Models: Free-tier models have strict limits, which can restrict testing or development.

Latency Under Heavy Load: Response times may increase depending on the model and traffic.

4. Portkey

Portkey is an open-source AI gateway designed to streamline access to over 1,600 AI models, including large language models (LLMs), vision, audio, and image models. It offers a unified API that simplifies integration and management, making it an ideal choice for developers seeking flexibility and efficiency.

Key Features

Unified API Access: Portkey provides a single API endpoint that connects to numerous AI models from various providers, reducing the complexity of managing multiple SDKs and credentials.

Smart Routing and Failover: The platform intelligently routes requests to the most suitable model based on factors such as cost, performance, and availability. In case of failures, it automatically falls back to alternative models, ensuring high reliability.

Advanced Observability: Portkey offers real-time monitoring of request/response payloads, token usage, latency metrics, and costs, all accessible through a centralized dashboard.

Guardrails Integration: The gateway integrates with Prisma AIRS, providing real-time AI security to safeguard applications, models, and data from a wide range of threats.

Caching and Cost Optimization: Portkey implements simple and semantic caching to reduce latency and save costs, enhancing the efficiency of AI operations.
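Simple caching keys on the exact request, while semantic caching matches on embedding similarity so that paraphrased prompts can also hit. Below is a minimal sketch of the exact-match variant with a time-to-live, assuming a JSON-serializable request shape. `ResponseCache` is a hypothetical class, not Portkey's implementation.

```python
import hashlib
import json
import time
from typing import Callable

Messages = list[dict[str, str]]


class ResponseCache:
    """Exact-match ("simple") cache: identical requests within the TTL reuse the stored response."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    @staticmethod
    def key(model: str, messages: Messages) -> str:
        # sort_keys makes the key independent of dict key order.
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def get_or_call(self, model: str, messages: Messages,
                    call: Callable[[str, Messages], str]) -> str:
        k = self.key(model, messages)
        now = self.clock()
        hit = self._store.get(k)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # fresh cache hit: skip the provider call
        response = call(model, messages)
        self._store[k] = (now, response)
        return response


calls = []


def fake_llm(model: str, messages: Messages) -> str:
    calls.append(model)  # count upstream calls
    return "Paris"


cache = ResponseCache(ttl_seconds=300)
msgs = [{"role": "user", "content": "What is the capital of France?"}]
cache.get_or_call("model-x", msgs, fake_llm)
cache.get_or_call("model-x", msgs, fake_llm)
print(len(calls))  # 1: the second request was served from the cache
```

Because the key includes the model name and the full message list, any change to either produces a cache miss. The injectable `clock` exists only to make expiry easy to test.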

Limitations

Rate Limits on Free Models: Free-tier models are subject to strict rate limits, which can restrict development and testing for users relying on free models.

Complexity for Small-Scale Applications: While feature-rich, Portkey's extensive capabilities may be more suited for large-scale applications, potentially introducing unnecessary complexity for smaller projects and prompting teams to explore alternatives to Portkey.

5. LiteLLM

LiteLLM is an open-source AI gateway designed to simplify access to over 100 large language models (LLMs) and other AI services. It exposes a unified, OpenAI-compatible API, so developers can swap models without rewriting application code.

Key Features

Unified API Access: LiteLLM provides a single API endpoint to connect with multiple LLMs from providers like OpenAI, Azure, AWS Bedrock, Hugging Face, and Google Vertex AI. This standardization reduces the complexity of managing multiple SDKs and credentials.

Budget and Rate Limit Management: The platform allows setting budgets and rate limits per user, team, or API key. This feature helps in controlling costs and ensuring fair usage across different users and teams.
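Per-key limits are commonly built from a token bucket for request rate plus a running budget for spend. A minimal sketch under those assumptions; `KeyLimiter` and its parameters are hypothetical and are not LiteLLM's configuration.

```python
import time
from typing import Callable


class KeyLimiter:
    """Token bucket for requests per second plus a hard USD budget, for one API key."""

    def __init__(self, rate_per_sec: float, burst: int, budget_usd: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = float(burst)
        self.tokens = float(burst)  # bucket starts full
        self.budget_left = budget_usd
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        # Add tokens for the elapsed time, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def admit(self, estimated_cost_usd: float) -> bool:
        """Return True (and consume capacity) if the request may proceed."""
        self._refill()
        if self.tokens < 1 or estimated_cost_usd > self.budget_left:
            return False
        self.tokens -= 1
        self.budget_left -= estimated_cost_usd
        return True


t = [0.0]  # fake clock so the example is deterministic
limiter = KeyLimiter(rate_per_sec=1, burst=2, budget_usd=0.05, clock=lambda: t[0])
print([limiter.admit(0.01) for _ in range(3)])  # [True, True, False]: burst exhausted
t[0] = 1.0
print(limiter.admit(0.01))  # True: one token refilled after 1 second
t[0] = 2.0
print(limiter.admit(0.05))  # False: only about $0.02 of budget remains
```

A gateway would keep one limiter per API key, team, or user (for example in a dict keyed by key ID), and would reconcile the estimated cost with actual token usage once the response arrives.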

Streaming Support: LiteLLM supports streaming responses from models, enabling real-time interaction and enhancing user experience.
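Streaming through a gateway means forwarding chunks as soon as they arrive while still capturing the full response for logging. A minimal generator-based sketch, assuming the upstream stream is an iterable of text chunks (`relay_stream` is a hypothetical helper):

```python
from typing import Callable, Iterable, Iterator


def relay_stream(chunks: Iterable[str], on_complete: Callable[[str], None]) -> Iterator[str]:
    """Forward chunks to the client as they arrive; log the full text once the stream ends."""
    parts = []
    for chunk in chunks:
        parts.append(chunk)  # keep a copy for logging / token counting
        yield chunk          # forward immediately; no buffering of the whole response
    on_complete("".join(parts))


logged = []
upstream = iter(["The ", "answer ", "is 42."])  # stands in for a provider's streamed chunks
for piece in relay_stream(upstream, logged.append):
    print(piece, end="")
print()
print(logged)  # ['The answer is 42.']
```

The `on_complete` callback fires only after the client has consumed the whole stream, so logging adds no delay to the first token; a production version would also handle clients that disconnect mid-stream.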

Logging and Observability: It integrates with tools like Prometheus, Datadog, and S3/GCS for logging and monitoring, providing insights into usage patterns and performance metrics.

Guardrails Integration: LiteLLM supports guardrails for safe and compliant AI usage, which can run before the model call (pre-call), after it (post-call), or in parallel with it (during-call).
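The pre-call and post-call pattern can be sketched as checks that run on the prompt before the model call and on the output after it. The two checks below are toy examples and every name is hypothetical; during-call checks, which run concurrently with the model call, are not shown.

```python
from typing import Callable, Optional

# A check returns an error message, or None if the text passes.
Check = Callable[[str], Optional[str]]


class GuardrailViolation(Exception):
    pass


def guarded_call(prompt: str, model_call: Callable[[str], str],
                 pre: list[Check], post: list[Check]) -> str:
    for check in pre:  # pre-call: inspect the input before spending tokens
        if (problem := check(prompt)) is not None:
            raise GuardrailViolation(f"pre-call: {problem}")
    output = model_call(prompt)
    for check in post:  # post-call: inspect the output before returning it
        if (problem := check(output)) is not None:
            raise GuardrailViolation(f"post-call: {problem}")
    return output


def no_injection_phrase(text: str) -> Optional[str]:
    # Toy heuristic for illustration only.
    if "ignore previous instructions" in text.lower():
        return "possible prompt injection"
    return None


def max_length(limit: int) -> Check:
    return lambda text: f"output exceeds {limit} chars" if len(text) > limit else None


print(guarded_call("say hi", str.upper, [no_injection_phrase], [max_length(20)]))  # SAY HI
```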

Limitations

Basic Access Control in Open-Source Version: The open-source version offers basic access control features. Advanced features like JWT authentication and audit logs are available in the enterprise version.

Potential Performance Bottlenecks at High Load: Some users have reported performance degradation under high request rates, indicating potential scalability challenges in certain scenarios.


6. Unify AI

Unify AI is an open-source AI gateway that gives developers a single API across a wide range of large language models (LLMs) and other AI services, so teams can mix models from different providers without maintaining separate integrations.

Key Features

Unified API Access: Unify AI provides a single API endpoint to connect with multiple LLMs from providers like OpenAI, Anthropic, and Google Vertex AI. This standardization reduces the complexity of managing multiple SDKs and credentials.

Dynamic Model Routing: The platform intelligently routes requests to the most suitable model based on factors such as cost, performance, and availability, ensuring optimal resource utilization.

Real-Time Observability: Unify AI offers real-time monitoring of request/response payloads, token usage, latency metrics, and costs, all accessible through a centralized dashboard.

Guardrails Integration: The gateway integrates with Prisma AIRS, providing real-time AI security to safeguard applications, models, and data from a wide range of threats.

Caching and Cost Optimization: Unify AI implements simple and semantic caching to reduce latency and save costs, enhancing the efficiency of AI operations.

Limitations

Rate Limits on Free Models: Free-tier models are subject to strict rate limits, which can restrict development and testing for users relying on free models.

Complexity for Small-Scale Applications: While feature-rich, Unify AI's extensive capabilities may be more suited for large-scale applications, potentially introducing unnecessary complexity for smaller projects.

Finding the Best Fit for Your Needs

Choosing the right LLM gateway is not just about picking the most popular option; it’s about matching the platform to your team’s goals, scale, and workflow. Each gateway we’ve covered has its strengths, and the “best fit” depends on your priorities.

If you are a startup or small team, lightweight, open-source options like Helicone or LiteLLM can be appealing. They offer low overhead, fast integration, and strong observability without requiring extensive infrastructure or compliance management.

For enterprises with complex workflows, TrueFoundry or Portkey provides robust orchestration, fine-grained access control, and compliance features. They allow you to manage agents, version prompts, and enforce guardrails while optimizing costs at scale.

If your priority is developer flexibility and multi-model access, gateways like OpenRouter and Unify AI simplify integrations with a single API and intelligent routing. They make it easier to experiment across multiple LLMs while keeping an eye on latency and usage.

Ultimately, the right gateway balances performance, cost, compliance, and scalability for your specific use case. Start by mapping your technical requirements, user base, and expected traffic, then evaluate how each gateway aligns with those needs. The ideal choice is the one that supports growth, keeps your infrastructure manageable, and lets your team focus on building, not firefighting.

Conclusion

Selecting the right LLM gateway can make or break your AI strategy in 2025. Whether you prioritize speed, cost-efficiency, compliance, or multi-model access, the gateways we’ve covered offer solutions for every need. TrueFoundry and Portkey excel in enterprise-grade orchestration and security, while Helicone, LiteLLM, OpenRouter, and Unify AI provide developer-friendly flexibility and lightweight integration. The key is to align your choice with your workflow, scale, and goals. A carefully chosen gateway not only simplifies model management but also empowers your team to innovate faster, optimize resources, and deliver AI applications with confidence.

