What is AI Agent Registry

AI systems are becoming more modular and collaborative, with specialized agents designed to handle specific tasks—whether that’s retrieving data, executing workflows, or making autonomous decisions. As these agents multiply within an enterprise or across platforms, managing them efficiently becomes critical. This is where an AI Agent Registry comes in.

What is AI Agent Registry

AI agents are autonomous programs that can reason, act, and collaborate on tasks. As organizations deploy more specialized agents (e.g. resume-parsing bots, scheduling assistants, analytics agents), teams need a way for these agents to discover each other, share capabilities, and integrate into workflows.

An AI Agent Registry serves as a centralized (or federated) catalog of running agents and their metadata, much like a model registry for ML models. This registry enables capability discovery and orchestration: agents (or humans) query the registry to find the right agent for a task, inspect its abilities, and obtain connection details. In essence, it acts as a “phone book” or AI agent discovery platform for autonomous agents.

For enterprise AI and MLOps teams, an agent registry provides standardization and governance over agent deployments. Similar to how TrueFoundry offers a model registry UI, an agent registry gives a single window for browsing, versioning, and controlling agents.

By indexing each agent’s identity, version, and capabilities in a common format (e.g. “agent cards” or JSON schemas), the registry makes it far easier for teams to reuse agents, track what’s deployed, and ensure secure interactions. As a result, it has become a critical enterprise AI registry architecture component for enabling agent interoperability and autonomous agent governance across departments.

Key Functions of an AI Agent Registry

An AI Agent Registry provides several essential functions for an agentic ecosystem:

1. Agent Registration: Agents register by submitting a metadata payload to the registry (often via a REST endpoint). This agent card includes fields like agent name, description, version, endpoint URL, and the agent’s declared capabilities or skills.

For example, a FastAPI endpoint might accept a JSON payload and store an AgentCard object in the registry’s database. A simple FastAPI snippet could look like:

@app.post("/agents/register", status_code=201)

def register_agent(registration: AgentRegistration):

agent_card = AgentCard(**registration.dict())

registry.register_agent(agent_card) # store in database or in-memory list

return {"status": "registered", "name": agent_card.name}

This matches the pattern from the A2A protocol examples, where teams “publish their agents’ Agent Cards to this registry, making their capabilities discoverable by other agents”.

2. Discovery and Search: Clients (other agents, orchestration services, or user interfaces) query the registry to find agents by capability, tag, or keyword. For instance, a search API could filter agents whose metadata matches a query. Standardized discovery may use well-known URLs (e.g. fetching an agent’s .well-known/agent.json file) or a central search endpoint (e.g. GET /agents?skill=document-extraction). This enables the platform to function as an “AI agent discovery platform” where tasks can be automatically routed to the right agent.

3. Metadata Management: The registry maintains rich metadata for each agent. Besides name and version, metadata may include authentication credentials, supported interaction protocols (A2A, REST, etc.), data types (text, images, files), and trust credentials. For example, research proposals suggest using “cryptographically verifiable” AgentFacts or PKI certificates to ensure trust. The registry might also track lifecycle information like last heartbeat or health.

4. Health Monitoring & Heartbeats: To keep the registry accurate, agents often send periodic heartbeats. If an agent fails to check in (e.g. within 30 seconds), the registry can mark it stale or remove it. This ensures that only active agents are discoverable and helps with autonomous agent governance by detecting unhealthy or offline agents.

5. Access Control and Governance: A registry enforces who can register or call which agent. Just as not every user should see every AI tool, not every agent should be accessible to all callers. The registry can implement RBAC policies, returning specific Agent Cards based on client permissions. For instance, internal agents may access private endpoints, while external agents see only public capabilities. By centralizing agent endpoints and their ACLs, the platform ensures that agent interactions comply with enterprise security policies.

6. Audit Logging and Observability: Recording each registration, discovery query, or invocation provides an audit trail. Enterprise teams can log when agents are used, by whom, and for what purpose. Registry-driven orchestration (like TrueFoundry’s AI Gateway) can stream LLM tool calls and responses back to a UI. Similarly, agent registries may feed logs and metrics into monitoring tools for observability, helping teams understand usage patterns and debug integration issues. For example, frameworks like Kagent emphasize “metrics, logging, and tracing for all tool calls, giving deeper insights into how your AI agent is interacting with external APIs”. An agent registry can aggregate this data at the platform level.

Together, these functions make an agent registry more than just a database – it is an integration backbone, enabling agents to find and use each other reliably under enterprise governance.

Architecture of an AI Agent Registry

Below is a conceptual architecture of an enterprise AI registry. Autonomous agents register with the central service, which stores their metadata and provides search/discovery APIs. The platform UI or orchestration layer interacts with this registry to find agents.

In this enterprise AI registry architecture, agents (A, B) register by calling the Registry API, which stores their AgentCard in the Metadata Database. The Policy/Governance module enforces access controls on registrations and lookups. The Discovery/Search service allows clients and the UI to find agents by querying the DB (for example, full-text search or filtering by capability tags). An administrative UI (like TrueFoundry’s model registry interface) can visualize all registered agents, their versions, and access rules.

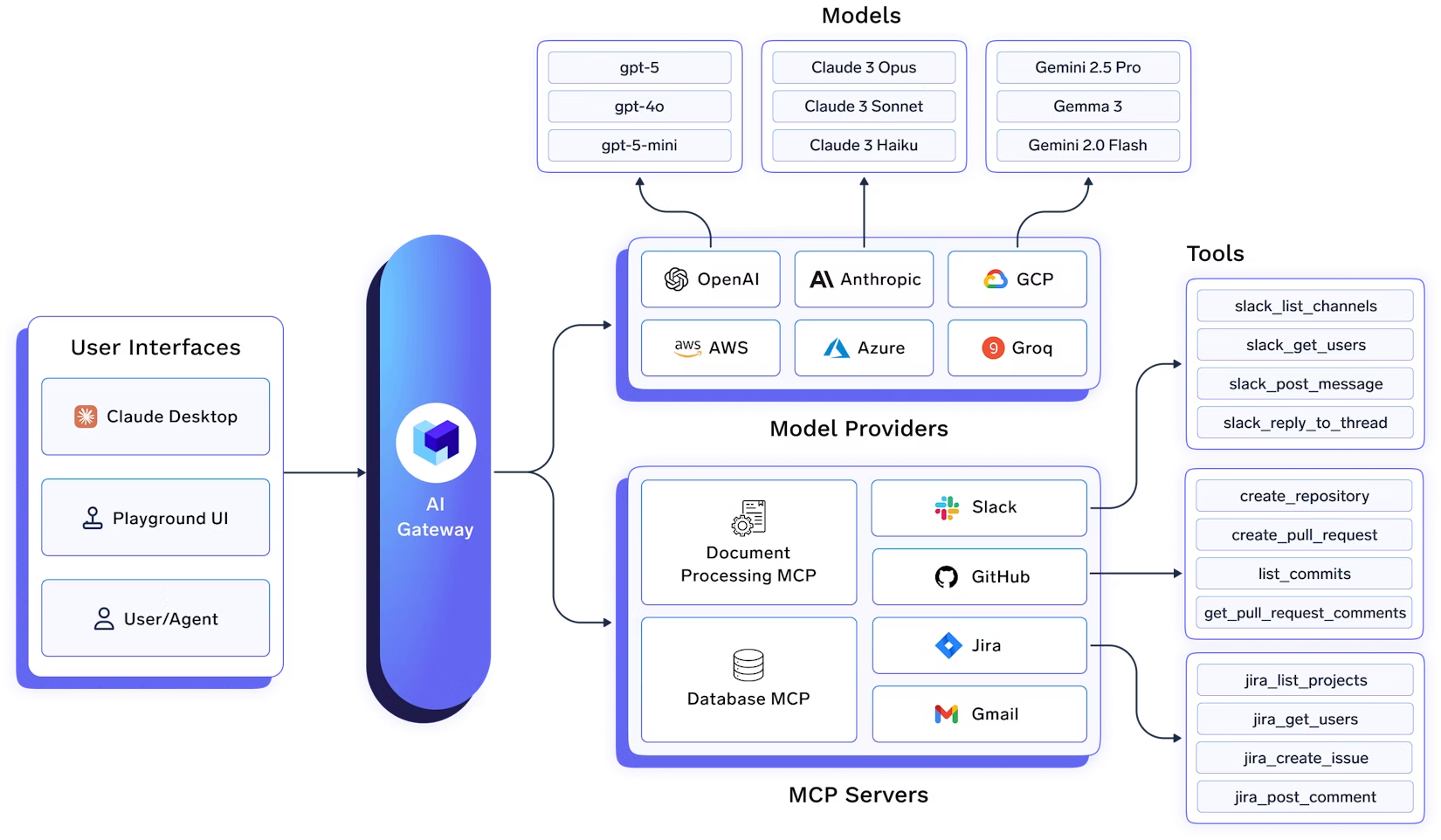

Under the hood, one might use a high-performance key-value store or graph database for metadata, and standard web frameworks (e.g. FastAPI) to implement the API. The Agent Name Service (ANS) proposal, for example, suggests a DNS-inspired directory using PKI for identity. TrueFoundry’s AI Gateway similarly centralizes “access to AI development tools” with a registry and OAuth flows for secure token management. Our architecture mirrors these enterprise-grade patterns: a central registry service for discovery, integrated with authentication (OAuth/PKI), an orchestration layer for agent workflows, and a UX layer for visibility.

Frameworks Supporting Agent Registries

Several open frameworks and protocols are emerging to make AI agent registries interoperable, standardized, and secure:

- MCP (Model Context Protocol): Originally designed to standardize how LLMs communicate with external tools, MCP has rapidly evolved into a tool and agent interoperability layer. MCP servers expose capabilities through a consistent schema, and centralized registries (e.g. GitHub’s MCP registry) already act as catalogs for discoverable MCP services.

- LangGraph: An orchestration framework for AI workflows. When paired with protocols like A2A, LangGraph enables multi-agent pipelines where tasks (retrieval, computation, reasoning) are dynamically delegated to the right sub-agents. While LangGraph’s primary focus is workflow orchestration, it often integrates with registries or catalogs to select appropriate agents at runtime.

- Agent2Agent (A2A) Protocol: An open standard (led by Google and the A2A community) for agent-to-agent communication. A2A defines a JSON-RPC format and an Agent Card schema for registration and discovery. With the python-a2a library, for example:

from python_a2a.discovery import AgentRegistryregistry = AgentRegistry(name="Enterprise Registry")agents = list(registry.get_all_agents()) # returns registered AgentCardsThis implements the A2A “phone book” design. Agents can also self-register and send heartbeats via the enable_discovery helper.

- Agent Protocol (AP by AGI, Inc.): A REST-based specification with an OpenAPI schema. Any compliant agent must expose endpoints like POST /ap/v1/agent/tasks. This gives registries a unified way to interact with heterogeneous agents, since clients can use the same API surface across different implementations.

- NANDA (Networked Agents and Decentralized AI): A decentralized registry model that maps agent identifiers to cryptographically verifiable AgentFacts (capabilities, endpoints, trust metadata). It supports privacy-preserving discovery, dynamic updates, and revocation. Architecturally, NANDA works like a DNS for agents, making global discovery scalable and secure.

- LOKA Protocol: A layered framework focusing on decentralized identity and ethical governance. Its Universal Agent Identity Layer (UAIL) provides globally unique, verifiable agent IDs (via DIDs/VCs), making it directly useful for secure registry enrollment and discovery. LOKA also emphasizes accountability and ethics, adding governance layers to registry operations.

- Other Ecosystems: Tools like Kagent (for Anthropic’s MCP) provide registries/gateways to centralize tool endpoints. AWS Strands Agents and GPT Agents are SDKs that can plug into registry infrastructures. Many teams also adopt LangChain or custom microservices with a lightweight database to maintain a registry pattern.

Together, these frameworks address key aspects of the registry challenge: discovery (A2A, NANDA), interoperability (Agent Protocol, LangGraph), identity (LOKA), and governance (Kagent, TrueFoundry-like gateways). By aligning with one or more of these standards, enterprises can ensure smooth agent interoperability while keeping security and scalability in mind.

Benefits of an AI Agent Registry

Implementing an AI agent registry provides many advantages for enterprise AI teams:

- Automated Discovery: Agents no longer need to be hard-coded or manually configured. A registry lets systems dynamically find the right agent for a job. For example, an orchestration service can query “show me all agents with the invoice-processing capability” and get a list instantly. This accelerates development and reduces duplication.

- Interoperability and Standardization: With a central catalog, different teams’ agents speak a common language. The registry enforces consistent metadata schemas (like Agent Cards), so any agent can integrate into the ecosystem. This unlocks agent interoperability – agents built on different frameworks can discover and coordinate as long as they register properly. The A2A and Agent Protocol standards exemplify this approach, making agents “tech stack agnostic” by exposing fixed API endpoints.

- Governance and Security: An agent registry centralizes access control. By specifying which roles or teams can invoke each agent, the platform enforces governance policies. TrueFoundry’s model registry, for example, stores authentication details and OAuth tokens in the control plane so that only authorized users access a model or tool. Similarly, an agent registry can integrate with enterprise IAM: using a single sign-on token or personal access token to grant an agent caller access to multiple agents seamlessly. Tailored agent cards allow the registry to hide sensitive endpoint details from unauthorized clients, improving security.

- Versioning & Lifecycle: Just like model registries track versions, an agent registry can record different releases of an agent’s logic. Teams can tag agents with version numbers and see exactly which version was deployed where. This enables rollbacks and reproducibility – you always know which agent code was used in production workflows.

- Observability and Metrics: Centralized registries can collect telemetry on agent usage. By funneling all agent calls through a gateway or tracked endpoints, the platform can log response times, success/failure rates, and usage counts. As noted, tools like Kagent bring full observability to agent interactions. This feedback helps MLOps teams monitor system health, spot bottlenecks, and optimize resource usage. For instance, if one agent is overloaded, the platform might scale it or route requests to a backup agent.

- Efficiency and Reuse: By cataloging capabilities, the registry encourages reuse of existing agents instead of building new ones for each project. Teams can quickly discover if an agent already performs the needed function. Over time, this creates a marketplace of vetted agents (analogous to a “Model Zoo” for models), where the most reliable agents evolve and become standards.

Each of these benefits helps enterprises move towards robust AI ops. By treating agents as first-class assets in a registry, organizations gain the governance, discoverability, and auditing that have long been standard for data and models, extending them to autonomous agents.

Challenges in Implementing an AI Agent Registry

Building an enterprise-grade agent registry is not trivial. Teams must navigate several challenges:

- Metadata Standardization: There are competing standards for agent descriptions. For example, the MCP approach uses a centralized GitHub-based mcp.json registry, whereas A2A uses decentralized “Agent Card” JSON files. NANDA proposes an “AgentFacts” schema with cryptographic signing. Deciding on one metadata model (or supporting multiple) is complex. Inconsistent schemas can hinder cross-team integration, so the registry must define a clear schema or translation layer.

- Centralization vs. Decentralization: Central registries offer governance but can be a single point of failure. MCP’s centralized metaregistry simplifies discovery but requires trusted infrastructure (as TrueFoundry’s gateway demonstrates). In contrast, A2A’s decentralized model lets each agent self-publish via .well-known/agent.json. This makes discovery more scalable but harder to govern centrally. An enterprise registry may need to bridge both worlds (e.g. periodically crawling agent-well-known endpoints into the central index).

- Security and Trust: Ensuring that registered agents are trustworthy is critical. Malicious agents or fake registrations could poison the system. Solutions like ANS introduce Public Key Infrastructure so that every agent has verifiable identity, akin to how DNSSEC secures domain names. The registry must authenticate agents on registration and use encryption (mTLS) on all endpoint. Protecting agent metadata (which can include sensitive API endpoints or credentials) is also a challenge; it often requires encrypting or not storing secrets in plain text.

- Scalability: A large organization might have hundreds or thousands of agents. The registry must scale to handle high registration and query loads. Efficient indexing (e.g. full-text or vector indices for capability search) is needed. It also must handle churn: agents spinning up and down. Proper caching, pagination of search results, and horizontal scalability are engineering hurdles.

- Governance Complexity: Administrators must decide who can register agents, which environments are visible (dev/test vs prod), and how policies are enforced. Implementing fine-grained access control (e.g. one agent sees another only if they share a project) complicates the system. The registry itself becomes a policy enforcement point, and misconfigurations can break workflows.

- Interoperability Across Domains: In federated or multi-cloud setups, agents may reside in different security domains. The registry must handle cross-domain discovery (as NANDA aims for). Issues like network isolation, DNS resolution, and credential federation come into play.

- Maintainability: Keeping the registry up-to-date (e.g. removing stale agents via heartbeat timeouts) and backward compatibility of metadata schemas is an ongoing task. The 2025 survey notes that maintainability and authentication are key comparison factors. Teams must version APIs and plan for upgrades (e.g. adding new agent metadata fields without breaking clients).

Despite these challenges, modern proposals and case studies address many of them. For instance, ANS uses a DNS-like naming scheme with secure resolution algorithms, and the A2A community has published best practices for agent card security. An enterprise registry implementation will likely combine multiple strategies: federated discovery, strong identity, and a robust governance layer.

Best Practices for AI Agent Registries

To successfully deploy and manage an agent registry, teams should follow these recommendations:

- Start Small and Iteratively: Begin with a minimal set of agents and a simple registry prototype. As David Alami advises for tool registries, “Don’t wait until you have 50 tools. Even if you have just two or three, put them into a simple registry structure with a standard interface”. Early use uncovers requirements for security and workflow. Iterate by adding more agents, refining metadata, and integrating protocols (like A2A) over time.

- Adopt Standards and Interfaces: Use a common agent API specification. For example, implementing the Agent Protocol ensures each agent exposes the same endpoints (e.g. /ap/v1/agent/tasks). Define a clear Agent Card schema or JSON model (name, version, endpoint, capabilities, inputs/outputs). This makes the registry code simpler and encourages third-party compliance.

- Semantic Search and Discovery: Enable content-based discovery. Besides keyword tags, consider indexing the text descriptions or even example usage of agents in a vector store. Then client agents can do a quick semantic search over the registry. (Tool-registries often use this pattern to match tools to tasks.) E.g., use a simple embeddings database of agent descriptions so an agent asking “handle invoice numbers” finds the “invoice-extractor” agent.

- Enforce Governance from Day One: Integrate IAM/SSO so that registry actions are authenticated. Use policies early: ensure only authorized roles can register or query certain agents. It’s much harder to bolt on governance later. For example, TrueFoundry’s registry ties agent access to OAuth tokens and user permissions. Similarly, the registry could refuse public queries for private-agent cards or automatically mask details unless a valid token is provided.

- Monitoring and Logging: Build visibility into the registry itself. Log every registration, query, and invocation. Use correlation IDs so you can trace a task as it hits multiple agents. This is in line with ML platform best practices where observability is critical. Periodically review logs to remove stale agents (using heartbeat logs) and spot unusual patterns (e.g. an agent that registers many times in a short span).

- Continuous Integration: Treat agent code and their registry entries like software. When an agent is updated, use CI/CD pipelines to update its registry metadata (e.g. bump version). Provide automation (CLI/SDK) for registration. For example, a deployment script could do

requests.post("http://registry/v1/agents/register", json=agent_card)

- after each release. This ensures the registry is never out of sync with live agents.

- User-Friendly Interfaces: Offer a self-service portal or CLI for users to explore agents. The TrueFoundry model registry UI (figured above) is a good pattern: display searchable lists of agents, filters by capability, and buttons for “test” or “deploy” agent. Similarly, include API explorer tabs showing how to call each agent (code snippets). This lowers the barrier for data scientists and developers to adopt agents.

- Leverage Existing Frameworks: Use or extend open-source libraries where possible. The python-a2a library, Agent Protocol SDKs, and LangGraph all provide building blocks that reduce boilerplate. As Alami notes, frameworks like these give teams a jumpstart. For example, using python-a2a’s enable_discovery automates heartbeat logic, and Agent Protocol’s Node/TS SDK simplifies writing compliant agents.

By following these best practices, teams can make the AI agent registry a robust, scalable component of their MLOps or ModelOps platform. Over time, it will evolve into a core part of the enterprise AI discovery platform – analogous to how model registries became indispensable for managing ML lifecycle.

Conclusion

AI Agent Registries are poised to become a foundational element of enterprise AI infrastructure. As autonomous agents proliferate, having a standardized discovery mechanism ensures that agents can coordinate rather than collide. The research consensus is clear: “the need for standardized registry systems to support discovery, identity, and capability sharing has become essential”. By centralizing agent metadata, registration, and governance, enterprises enable seamless agent interoperability and strong autonomous agent governance.

Moving forward, we expect more convergence around protocols (e.g. A2A, Agent Protocol, AgentFacts) and more tooling (like TrueFoundry’s gateway for tools). Eventually, an agent registry will be as routine as a model registry today – providing audit trails, version control, and a searchable catalog of AI capabilities. For enterprise AI teams, investing in an agent registry now means gaining a scalable platform for orchestrating complex AI workflows, reducing integration friction, and unlocking the full potential of agentic AI in production.

Built for Speed: ~10ms Latency, Even Under Load

Blazingly fast way to build, track and deploy your models!

- Handles 350+ RPS on just 1 vCPU — no tuning needed

- Production-ready with full enterprise support

TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready, while LiteLLM suffers from high latency, struggles beyond moderate RPS, lacks built-in scaling, and is best for light or prototype workloads.