AWS Bedrock vs Azure AI: Which AI Platform To Choose?

For engineering teams building generative AI in the cloud, the architecture decision often narrows to two primary services: AWS Bedrock and Azure AI. This is rarely just a choice between models; it is a fundamental decision regarding ecosystem integration, identity management (IAM vs. Entra ID), and long-term infrastructure commitments. Azure is the hyperscaler most closely tied to OpenAI, offering GPT-4o as a managed service that is heavily integrated into the Microsoft 365 stack. Conversely, AWS Bedrock prioritizes a "model agnostic" approach, offering a unified serverless API for Anthropic’s Claude, Meta’s Llama, and Amazon’s own Titan models.

As organizations move from proof-of-concept to production, the initial convenience of these walled gardens often hits operational ceilings. We frequently observe friction points regarding Provisioned Throughput (PT) commitments, opaque rate limiting, and identity fragmentation. This report analyzes AWS Bedrock and Azure AI from a structural and economic perspective, and introduces TrueFoundry as an architectural alternative for teams requiring a control plane independent of the underlying cloud provider.

Quick Comparison: AWS Bedrock vs Azure AI vs TrueFoundry

This section breaks down the primary architectural focus of each platform.

AWS Bedrock functions as a serverless abstraction layer. It excels at aggregating disparate foundation models (FMs) behind a standardized InvokeModel API. It allows AWS-native teams to switch between Anthropic and Llama models without altering infrastructure code, provided they stay within the AWS security boundary.

Azure AI is the enterprise wrapper for OpenAI. While it offers other models, its primary utility is providing GPT-4o and DALL-E 3 with the compliance, security, and private networking features (VNets, Private Link) that the direct OpenAI API lacks. It is optimized for organizations already deeply invested in the Microsoft enterprise stack.

TrueFoundry operates as a cloud-agnostic AI Gateway and training platform. It decouples the model serving layer from the infrastructure provider. This allows engineers to route traffic to whichever provider offers the best price/performance ratio for a specific query, or to host open-source models (like Llama or Mixtral) within their own Kubernetes clusters on spot instances.

The Core Philosophy of AWS Bedrock Vs Azure AI

Strategic alignment dictates platform behavior. Understanding the engineering philosophy behind these hyperscalers helps predict future feature velocity and constraints.

AWS Bedrock: The Model Supermarket

AWS Bedrock operates on a philosophy of aggregation. By not having a singular dominant internal model (Titan has seen lower adoption compared to GPT-4), AWS is incentivized to partner. It gives AWS customers managed access to Anthropic’s Claude 3.5 Sonnet and Claude 3 Opus inside the AWS security boundary, although it is not the only hyperscaler to do so: Google Cloud’s Vertex AI also offers Claude models.

For DevOps teams, Bedrock behaves like a standard AWS service. It integrates natively with CloudWatch for logs and IAM for fine-grained permissioning. If your application logic requires switching between model providers—for example, using Llama for summarization and Claude for reasoning—Bedrock minimizes the code changes required to do so.
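As a minimal sketch of what that model switch can look like, the helper below sends one prompt through the Bedrock Converse API, where only the `modelId` changes between providers. Note the substitution: the article refers to InvokeModel, whose request body differs per model family, so this sketch uses Converse because it normalizes the message format. The `ask` helper and the two model IDs are illustrative assumptions; confirm the IDs enabled in your own account and region.

```python
from typing import Any

# Example model IDs (assumptions): check which IDs are enabled in your account.
SUMMARIZER_MODEL = "meta.llama3-8b-instruct-v1:0"
REASONER_MODEL = "anthropic.claude-3-haiku-20240307-v1:0"


def ask(client: Any, model_id: str, prompt: str, max_tokens: int = 256) -> str:
    """Send one user turn through the Converse API and return the reply text.

    `client` is expected to be a boto3 "bedrock-runtime" client, or any
    object exposing a compatible `converse` method.
    """
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    return response["output"]["message"]["content"][0]["text"]


# With real AWS credentials the call sites differ only in the model ID:
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="us-east-1")
#   summary = ask(client, SUMMARIZER_MODEL, "Summarize: ...")
#   analysis = ask(client, REASONER_MODEL, "Explain the trade-offs in: ...")
```

Passing the client in as an argument keeps the helper testable without network access and leaves credential handling to the caller.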

Azure AI: The OpenAI Engine

Azure AI’s strategy centers on depth rather than breadth. The platform is designed to make OpenAI models viable for regulated industries. While OpenAI’s direct API is sufficient for startups, Azure AI adds the layers needed for SOC 2 and HIPAA compliance, including private networking and regional data residency guarantees.

The trade-off is dependency. Azure’s roadmap is tightly coupled with OpenAI’s release cycle. When OpenAI experiences instability, Azure AI workloads can be affected, though Azure offers distinct SLAs. The value proposition here is less about model choice and more about the integration of GPT-4o into data residing in Azure Blob Storage and Microsoft Fabric.

Pricing Structures and Hidden Costs

Unit economics in Generative AI are volatile. While on-demand pricing is transparent, scaling production workloads introduces complex cost structures regarding throughput guarantees and networking.

AWS Bedrock Pricing

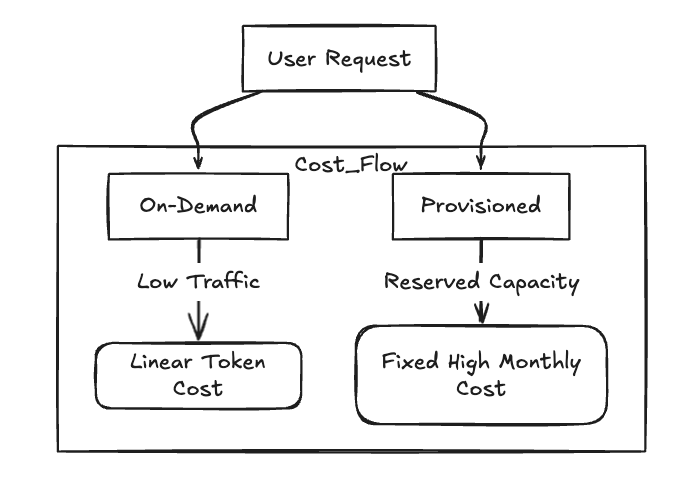

Bedrock offers two primary consumption models: On-Demand and Provisioned Throughput. On-demand pricing is standard (per 1k input/output tokens) and competitive for bursty workloads. However, AWS enforces per-account, per-region quotas on requests and tokens per minute on this tier, and traffic beyond those quotas is throttled.

For guaranteed availability, AWS requires Provisioned Throughput. This is where costs escalate. You purchase "Model Units" for a specific time commitment (often 1 or 6 months).

- The Cost Reality: A single model unit for a high-end model like Claude 3 Opus can cost thousands of dollars per month, regardless of usage.

- The Trap: If your traffic spikes, you cannot simply burst; you must purchase additional units, often with minimum time commitments.

- Source: AWS Bedrock Pricing Page

Azure AI Pricing

Azure utilizes a Pay-As-You-Go model mirroring OpenAI’s direct rates, and a Provisioned Throughput Unit (PTU) model.

- The Availability Constraint: Securing PTUs for GPT-4 has historically been difficult due to GPU scarcity. We have seen enterprise customers wait weeks for capacity approval in specific regions.

- The Network Tax: If you require VNet integration (keeping traffic off the public internet), you often need to use Azure API Management (APIM) as a gateway, which incurs its own hourly premium and data processing charges.

- Source: Azure AI Services Pricing

Fig 1: The divergence between linear on-demand costs and step-function provisioned costs.
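The shape of that divergence can be reproduced with a few lines of arithmetic. The sketch below is illustrative only: the per-token price, the unit capacity, and the unit price are hypothetical placeholders, not figures from either vendor's pricing page.

```python
import math


def on_demand_cost(tokens_millions: float, price_per_million: float) -> float:
    """Linear: cost grows in proportion to the tokens processed."""
    return tokens_millions * price_per_million


def provisioned_cost(
    tokens_millions: float,
    unit_capacity_millions: float,
    unit_monthly_price: float,
) -> float:
    """Step function: whole units are billed whether or not they are used."""
    units = max(1, math.ceil(tokens_millions / unit_capacity_millions))
    return units * unit_monthly_price


# Hypothetical numbers, chosen only to make the crossover visible.
PRICE_PER_MILLION = 10.0          # USD per million tokens, on-demand
UNIT_CAPACITY_MILLIONS = 2_000.0  # tokens one provisioned unit can serve per month
UNIT_MONTHLY_PRICE = 15_000.0     # USD per unit per month

for volume in (100, 1_500, 2_500):
    linear = on_demand_cost(volume, PRICE_PER_MILLION)
    stepped = provisioned_cost(volume, UNIT_CAPACITY_MILLIONS, UNIT_MONTHLY_PRICE)
    print(f"{volume:>5}M tokens: on-demand ${linear:>9,.0f}  provisioned ${stepped:>9,.0f}")
```

With these placeholder values, provisioned capacity is far more expensive at low volume, breaks even near the capacity of one unit, and jumps again as soon as a second unit is needed.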

Ecosystem Lock-In: IAM vs Entra ID

Identity management acts as the strongest form of vendor lock-in. Moving compute is easy; moving identity and data is hard.

Data Gravity on AWS

Bedrock is the logical choice if your vector embeddings (stored in OpenSearch or RDS PostgreSQL) and unstructured data (S3) already reside in AWS.

- Latency: Keeping the RAG (Retrieval-Augmented Generation) loop within the same AWS region minimizes network latency (typically <10ms internal).

- Egress Fees: Moving terabytes of context data from S3 to Azure for inference triggers AWS Data Transfer Out fees, which currently hover around $0.09 per GB depending on the region.

- Source: AWS Data Transfer Pricing
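To put the egress figure in concrete terms, here is a small worked calculation. It assumes a flat rate of $0.09 per GB as quoted above and ignores tiered discounts and free allowances, so treat the result as a rough upper bound rather than a quote. The 5 TB volume is a hypothetical example.

```python
def egress_cost_usd(gigabytes: float, rate_per_gb: float = 0.09) -> float:
    """Flat-rate estimate of data transfer out charges."""
    return round(gigabytes * rate_per_gb, 2)


# Hypothetical workload: 5 TB of context data per month (1 TB = 1,000 GB here).
monthly_gb = 5 * 1_000
print(f"Estimated egress: ${egress_cost_usd(monthly_gb):,.2f} per month")
```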

The Microsoft Copilot Stack

Azure AI’s strength lies in Entra ID (formerly Azure Active Directory). For internal enterprise apps, Azure AI Studio can respect document-level permissions.

- Scenario: If a user queries a knowledge base indexed from SharePoint, Azure AI can automatically filter results based on that user's Entra ID permissions.

- The Lock-in: Replicating this granular permission-aware RAG pipeline on AWS requires significant custom engineering to map Active Directory roles to IAM policies or application-level logic.
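The "application-level logic" option can be sketched as a post-retrieval filter. This is a simplified illustration, not how Azure AI or any AWS service implements it: the `Chunk` type, the `allowed_groups` field, and the group names are hypothetical, and a real pipeline would also need to keep those group lists in sync with the identity provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """A retrieved passage plus the groups allowed to read its source document."""
    text: str
    allowed_groups: frozenset[str]


def filter_by_permission(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Keep only chunks whose source the user may read (any shared group)."""
    return [c for c in chunks if c.allowed_groups & user_groups]


retrieved = [
    Chunk("Salary bands for 2024", frozenset({"hr"})),
    Chunk("Engineering onboarding guide", frozenset({"hr", "engineering"})),
]

# Only the onboarding guide reaches the prompt for an engineering user.
visible = filter_by_permission(retrieved, {"engineering"})
print([c.text for c in visible])
```

Filtering before the chunks are placed in the prompt matters: once restricted text is in the model's context, no downstream check can reliably keep it out of the answer.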

Developer Experience: Agents and Guardrails

Day-two operations—debugging, tracing, and safety—differ significantly between the platforms.

AWS Bedrock Agents

Bedrock Agents are essentially orchestrators for AWS Lambda functions. You define an OpenAPI schema, and the Agent uses the LLM to determine which Lambda to trigger.

- Pros: Extremely powerful for infrastructure automation (e.g., "Restart the staging server if CPU > 80%").

- Cons: The reasoning capabilities depend heavily on the underlying model. Debugging why an Agent selected the wrong Lambda function can be opaque compared to custom code orchestration.
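For comparison, the "custom code orchestration" alternative can be as small as an explicit dispatch table. The sketch below is conceptual and does not use the Bedrock Agents API: the action names, handler functions, and the shape of the `decision` dictionary are all hypothetical, and the model call that would produce the decision is left out.

```python
from typing import Callable


def restart_server(environment: str) -> str:
    """Stand-in for a real infrastructure call."""
    return f"restart requested for {environment}"


def get_cpu_utilization(environment: str) -> str:
    """Stand-in returning a canned metric."""
    return f"cpu for {environment}: 91%"


# Explicit registry: the model can only trigger what is listed here.
ACTIONS: dict[str, Callable[..., str]] = {
    "restartServer": restart_server,
    "getCpuUtilization": get_cpu_utilization,
}

trace: list[dict] = []  # every decision is recorded for later debugging


def dispatch(decision: dict) -> str:
    """Run the action the model selected, rejecting anything unregistered."""
    trace.append(decision)
    name = decision.get("action")
    handler = ACTIONS.get(name)
    if handler is None:
        raise ValueError(f"model selected unknown action: {name!r}")
    return handler(**decision.get("parameters", {}))


print(dispatch({"action": "restartServer", "parameters": {"environment": "staging"}}))
```

Because each decision is appended to `trace` before it runs, a wrong tool choice leaves a record that can be inspected directly, which is the visibility a managed agent may not expose as readily.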

Azure AI Studio

Azure prioritizes "Responsible AI" tooling. Azure AI Studio includes distinct content safety models that sit in front of the LLM.

- Jailbreak Detection: Azure provides out-of-the-box classifiers to detect prompt injection attacks and protected material usage.

- Evaluation: The studio offers built-in evaluation flows to test model performance against a "Golden Dataset," a feature that is often more mature than Bedrock’s current evaluation capabilities.

- Source: Azure AI Content Safety Documentation
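The idea behind a golden-dataset evaluation is simple enough to sketch without any platform tooling. The example below is a generic illustration, not Azure AI Studio's evaluation flow: the dataset, the exact-match metric, and the `stub_model` function are hypothetical stand-ins for a real test set, a richer metric, and a real model call.

```python
from typing import Callable

# Hypothetical golden dataset: (prompt, expected answer) pairs.
GOLDEN_DATASET = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
    ("What is the chemical symbol for water?", "H2O"),
]


def exact_match_rate(model: Callable[[str], str], dataset: list[tuple[str, str]]) -> float:
    """Fraction of prompts where the model's answer equals the expected answer."""
    hits = sum(
        1
        for prompt, expected in dataset
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return hits / len(dataset)


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call; gets one answer wrong on purpose."""
    answers = {"What is 2 + 2?": "4", "What is the capital of France?": "paris"}
    return answers.get(prompt, "unknown")


print(f"exact match: {exact_match_rate(stub_model, GOLDEN_DATASET):.2f}")
```

Running the same dataset against each candidate model or prompt revision gives a regression signal before a change reaches production.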

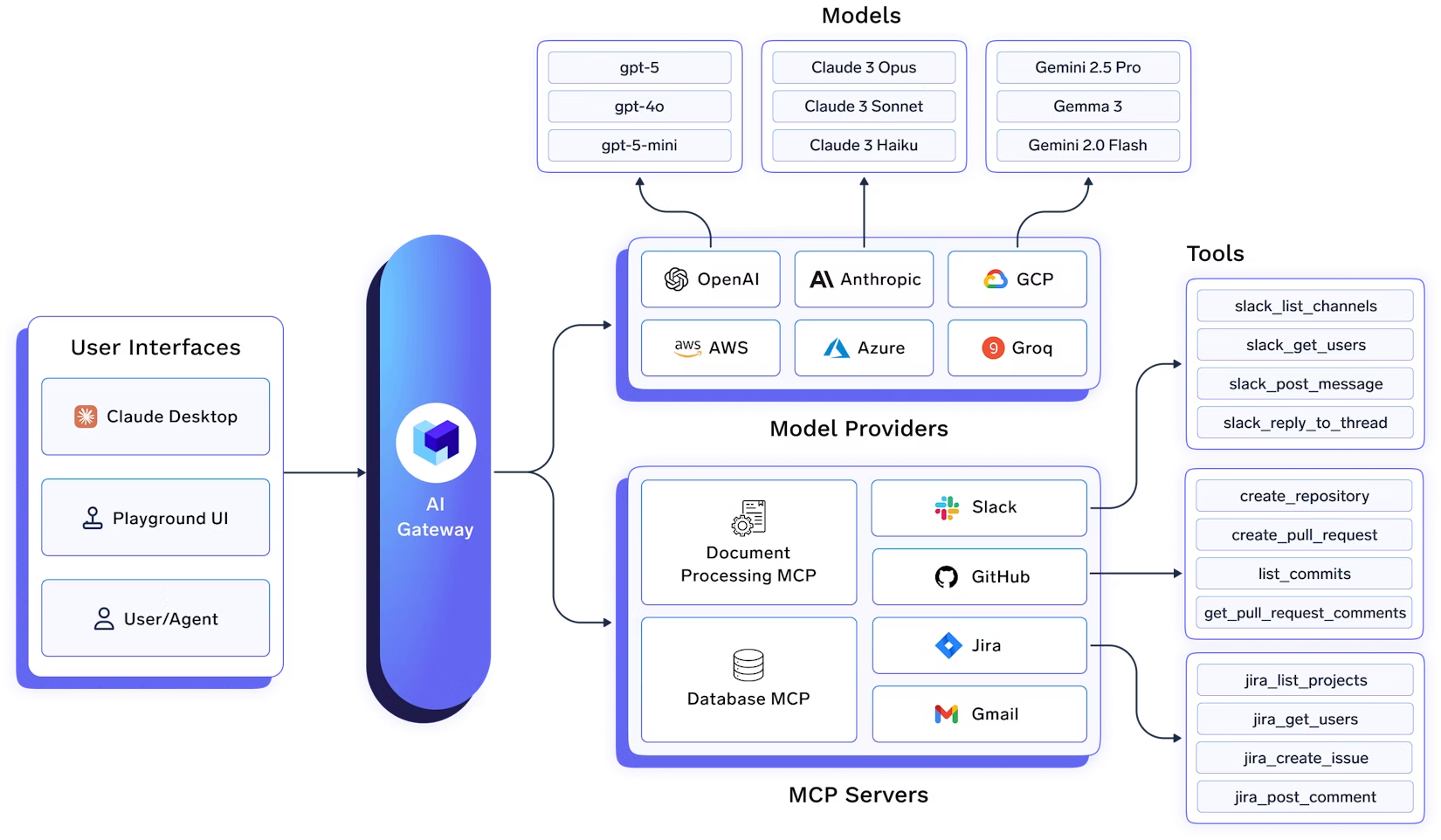

TrueFoundry: The Multi-Cloud Neutral Zone

TrueFoundry offers an architecture that treats cloud providers as interchangeable commodities rather than dependencies.

Cloud Arbitrage Advantage

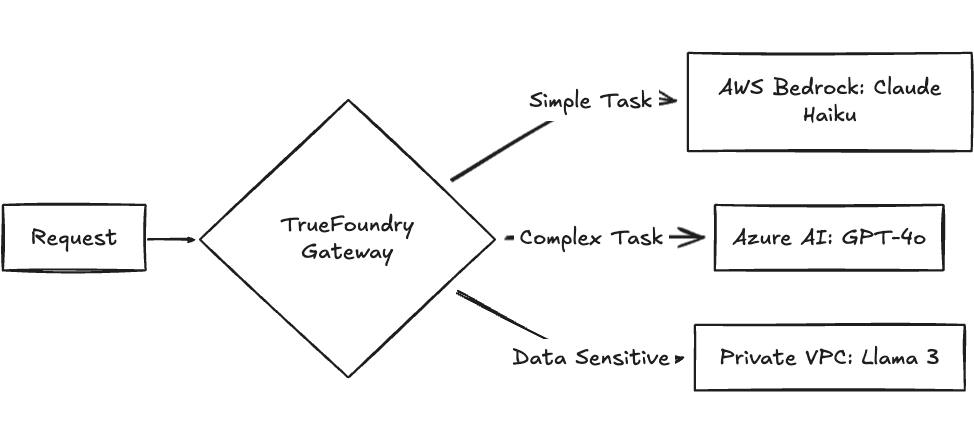

TrueFoundry acts as a unified AI Gateway. This middleware layer allows you to configure routing rules based on cost, latency, or model availability.

- Routing Logic: A request can be analyzed for complexity. If the prompt is simple, route to Claude 3 Haiku on AWS (cheaper). If complex, route to GPT-4o on Azure.

- Redundancy: If Azure East US reports an outage or rate limit error, the gateway automatically fails over to a fallback model on AWS or a self-hosted model, ensuring uptime.

Fig 2: Gateway routing logic optimizing for cost and compliance.
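The routing and failover behavior described above can be sketched in a few lines. This is a conceptual illustration and not TrueFoundry's implementation or configuration syntax: the word-count heuristic, the `ProviderError` exception, and the three backend callables are hypothetical placeholders for a real complexity classifier and real provider clients.

```python
from typing import Callable

Backend = Callable[[str], str]


class ProviderError(Exception):
    """Raised by a backend on an outage or a rate-limit response."""


def is_complex(prompt: str, word_limit: int = 50) -> bool:
    """Crude stand-in for a complexity classifier: long prompts count as complex."""
    return len(prompt.split()) > word_limit


def route(prompt: str, cheap: Backend, strong: Backend, fallback: Backend) -> str:
    """Pick a primary backend by complexity, then fail over on provider errors."""
    primary = strong if is_complex(prompt) else cheap
    try:
        return primary(prompt)
    except ProviderError:
        return fallback(prompt)


# Stub backends standing in for real provider clients.
def cheap_backend(prompt: str) -> str:
    return "cheap model answer"


def strong_backend(prompt: str) -> str:
    raise ProviderError("simulated regional outage")


def self_hosted_backend(prompt: str) -> str:
    return "self-hosted model answer"


print(route("Summarize this sentence.", cheap_backend, strong_backend, self_hosted_backend))
print(route("word " * 60, cheap_backend, strong_backend, self_hosted_backend))
```

The second call is classified as complex, hits the simulated outage, and is served by the fallback instead of failing.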

Unified Authentication and Governance

Managing API keys across ten different developer teams and two clouds is a security risk. TrueFoundry centralizes this into a single control plane.

- Centralized Budgeting: Set a budget of $500/month for the "Marketing App." Once that budget is hit, the gateway stops serving requests for the app, regardless of whether the backend is AWS or Azure.

- Traceability: A single log stream captures inputs/outputs across all providers, simplifying audit and debugging workflows.
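A budget check of this kind boils down to a running total per application that is consulted before each request is forwarded. The sketch below is a simplified, in-memory illustration rather than TrueFoundry's actual mechanism: the `BudgetGate` class and its method names are hypothetical, and a production gateway would need persistent, concurrency-safe accounting with a monthly reset.

```python
class BudgetExceeded(Exception):
    """Raised when a request would push an app past its monthly budget."""


class BudgetGate:
    """Tracks spend per application and blocks requests past the limit."""

    def __init__(self, monthly_limit_usd: float) -> None:
        self.monthly_limit_usd = monthly_limit_usd
        self.spent_usd = 0.0

    def charge(self, cost_usd: float) -> None:
        """Record a request's cost, or refuse it if the budget would be exceeded."""
        if self.spent_usd + cost_usd > self.monthly_limit_usd:
            raise BudgetExceeded(
                f"limit ${self.monthly_limit_usd:.2f} reached "
                f"(spent ${self.spent_usd:.2f}, requested ${cost_usd:.2f})"
            )
        self.spent_usd += cost_usd


# One gate per application, independent of which cloud serves the request.
gates = {"marketing-app": BudgetGate(monthly_limit_usd=500.0)}

gates["marketing-app"].charge(499.0)  # accepted
gates["marketing-app"].charge(1.0)    # accepted, budget now fully used
try:
    gates["marketing-app"].charge(0.01)
except BudgetExceeded as err:
    print(f"blocked: {err}")
```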

Private Model Hosting

For predictable workloads, token-based pricing often exceeds the cost of rented compute. TrueFoundry facilitates deploying open-source models (like Llama 3 or Mixtral) directly into your own Kubernetes cluster (EKS/AKS).

- Spot Instances: By utilizing AWS/Azure Spot instances, teams can lower inference costs by roughly 50-70% compared to on-demand instances.

- Privacy: The data never leaves your VPC. The model weights run on your controlled infrastructure.
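The 50-70% range translates into monthly figures as follows. The hourly rate below is a hypothetical placeholder for a GPU instance, not a quoted AWS or Azure price, and the calculation assumes the instance runs all month without interruption, which spot capacity does not guarantee.

```python
HOURS_PER_MONTH = 730  # average hours in a month


def monthly_cost(hourly_rate_usd: float, instances: int = 1) -> float:
    """Cost of running instances continuously for one month."""
    return hourly_rate_usd * HOURS_PER_MONTH * instances


def spot_hourly_rate(on_demand_hourly_usd: float, discount: float) -> float:
    """Apply a fractional spot discount (0.5 means 50% off) to an hourly rate."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return on_demand_hourly_usd * (1.0 - discount)


ON_DEMAND_HOURLY_USD = 4.00  # hypothetical GPU instance price

print(f"on-demand: ${monthly_cost(ON_DEMAND_HOURLY_USD):,.2f}/month")
for discount in (0.5, 0.7):
    rate = spot_hourly_rate(ON_DEMAND_HOURLY_USD, discount)
    print(f"spot at {discount:.0%} off: ${monthly_cost(rate):,.2f}/month")
```

Comparing these figures against the projected token bill for the same traffic shows whether self-hosting pays off for a given workload.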

How Do AWS Bedrock vs Azure AI vs TrueFoundry Compare?

The following table contrasts the technical capabilities of the platforms.

Which Platform Should You Choose?

The decision should be based on your existing technical debt and future scaling requirements.

Choose Azure AI If: You are structurally a "Microsoft Shop." Your enterprise data resides in OneLake, your identity provider is Entra ID, and your legal team has already vetted the Microsoft BAA (Business Associate Agreement). The integration friction is lowest here for pure Microsoft stacks.

Choose AWS Bedrock If: You are an "AWS Native" builder. Your applications run on ECS or Lambda, and you require the reasoning capabilities of Anthropic’s Claude without managing the infrastructure overhead. It is the path of least resistance for data already sitting in S3.

Choose TrueFoundry If: You prioritize leverage and unit economics. You anticipate high-volume workloads where token costs will balloon, necessitating a move to open-source models on Spot instances. You require a "kill switch" to move traffic between clouds to avoid vendor lock-in or outages.

Why the Future Is Multi-Model

History in cloud computing suggests that abstraction layers eventually win. Just as Kubernetes abstracted the VM, AI Gateways are abstracting the model provider.

AWS Bedrock and Azure AI are powerful, but they are designed to retain your compute spend within their respective perimeters. A multi-model strategy reduces the risk of price hikes and model deprecation.

Book a demo to see how TrueFoundry can unify your AWS and Azure models into a single, governed AI pipeline.

FAQs

Is Azure AI better than Amazon Bedrock?

"Better" is subjective to the workload. Azure AI is generally superior for applications requiring GPT-4o and deep Microsoft 365 integration. Amazon Bedrock is superior for teams that prefer Anthropic's Claude models and want to maintain a serverless architecture within AWS.

Is AWS or Azure better for AI?

AWS offers a broader set of "primitive" tools (SageMaker, Bedrock, chips like Inferentia) suitable for teams building custom AI stacks. Azure offers a more productized, application-layer experience dominated by its OpenAI partnership.

What is the Azure equivalent of AWS Bedrock?

The closest equivalent is Azure AI Studio (specifically the Model Catalog), which allows users to deploy various models (OpenAI, Llama, Phi) via an API endpoint, similar to Bedrock’s InvokeModel.

What makes TrueFoundry a better alternative to AWS Bedrock and Azure AI?

TrueFoundry acts as a neutral control plane. Unlike Bedrock or Azure AI, it does not lock you into a single cloud’s infrastructure. It allows for cost-arbitrage routing, unified governance across multiple clouds, and the ability to host models on significantly cheaper Spot instances in your own VPC.
