Understanding Azure AI Gateway Pricing for 2026 – A Complete Breakdown

Azure has positioned itself as the go-to enterprise-ready platform for building and deploying AI applications, especially through Azure OpenAI and its deep integration with the Microsoft ecosystem. For organizations already invested in Azure, enabling Azure AI Gateway capabilities feels like a natural extension of their existing cloud footprint.

However, Azure AI pricing is not centralized or simple. Unlike a SaaS subscription with a single sticker price, Azure costs are spread across multiple services—API management, model usage, networking, logging, and security—each billed independently.

This blog explains how Azure AI Gateway pricing actually works, why granular per-service billing often produces complex, fragmented invoices, and why many enterprises are evaluating alternatives like TrueFoundry to simplify pricing and regain control over their infrastructure.

The Three Layers of Azure AI Pricing

Azure AI cost is layered, meaning teams pay separately for the model, the gateway that manages the traffic, and the underlying infrastructure. Understanding these three layers is critical for accurate cost forecasting.

1. The Model Layer (Azure OpenAI Service)

The most visible component of your bill is the model itself, yet looking at the sticker price often leads to underestimating the total spend. Azure OpenAI operates on a consumption-based model where costs are strictly dictated by the volume of input (prompt) and output (completion) tokens processed.

Pricing varies significantly depending on the intelligence required; a request to GPT-4o costs several times more per token than one to GPT-3.5 Turbo, and image models such as DALL-E are billed per image rather than per token. These token costs are only the visible portion of your total AI spend; they exclude the infrastructure required to serve the models securely.
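As a rough illustration of consumption-based billing, the sketch below prices a request from its input and output token counts. The per-million-token prices and the model labels are placeholder assumptions chosen for the example, not Azure list prices; substitute the current rates for your region and model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """USD per one million tokens (placeholder values, not Azure list prices)."""
    input_per_million: float
    output_per_million: float


def request_cost(price: TokenPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost of a single request; prompt and completion tokens are priced separately."""
    total = input_tokens * price.input_per_million + output_tokens * price.output_per_million
    return total / 1_000_000


def monthly_cost(price: TokenPrice, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Monthly token spend for a steady workload of identical requests."""
    return request_cost(price, input_tokens, output_tokens) * requests_per_day * days


# Hypothetical price points for a larger and a smaller model.
large_model = TokenPrice(input_per_million=5.00, output_per_million=15.00)
small_model = TokenPrice(input_per_million=0.50, output_per_million=1.50)

if __name__ == "__main__":
    for label, price in (("large", large_model), ("small", small_model)):
        spend = monthly_cost(price, requests_per_day=10_000, input_tokens=1_000, output_tokens=500)
        print(f"{label}: ${spend:,.2f} per month")
```

Because output tokens are usually priced higher than input tokens, workloads with long completions tend to cost more than the input-side price alone suggests.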

2. The Gateway Layer (Azure API Management)

To implement a true Azure gateway for AI, Microsoft recommends using Azure API Management (APIM). This is where unforeseen or ancillary costs often begin to mount.

Azure API Management is essential for handling rate limiting, authentication, caching, and policy enforcement. However, these gateway costs are billed independently and are not included in your Azure OpenAI token pricing. Furthermore, accessing enterprise-grade features—such as high throughput and private networking—requires moving up to higher APIM tiers, which triggers substantial fixed monthly fees rather than flexible pay-as-you-go billing.

3. The Compute Layer (Azure Machine Learning)

When your strategy involves deploying open-source models (like Llama 3 or Mistral) or custom fine-tuned models, Azure introduces a distinct infrastructure cost layer.

Azure AI Studio deploys these models using Managed Online Endpoints, which are backed by dedicated virtual machines. Unlike the serverless nature of Azure OpenAI, these endpoints run continuously. You pay for the compute instances 24/7—even during nights and weekends when inference traffic drops to zero—turning what should be a variable cost into a permanent fixed expense.
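To see why an always-on endpoint behaves like a fixed expense, the sketch below compares the monthly bill with the hours that actually serve traffic. The hourly VM rate is a hypothetical input, and 730 is used as the average number of hours in a month.

```python
HOURS_PER_MONTH = 730  # average hours in a calendar month


def always_on_monthly_cost(hourly_rate: float, instances: int = 1) -> float:
    """Monthly bill for endpoints that run around the clock."""
    return hourly_rate * instances * HOURS_PER_MONTH


def cost_per_busy_hour(hourly_rate: float, busy_hours_per_month: float) -> float:
    """Effective price of each hour that actually serves inference traffic."""
    if busy_hours_per_month <= 0:
        raise ValueError("busy_hours_per_month must be positive")
    return hourly_rate * HOURS_PER_MONTH / busy_hours_per_month


if __name__ == "__main__":
    rate = 3.00  # hypothetical USD per hour for one GPU instance
    print(f"Monthly bill: ${always_on_monthly_cost(rate, instances=2):,.2f}")
    # An endpoint that is busy a quarter of the time costs 4x its list rate per useful hour.
    print(f"Effective rate: ${cost_per_busy_hour(rate, busy_hours_per_month=182.5):.2f}/hour")
```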

The Gateway Premium: The Cost of Enterprise Security

For many teams, Azure API Management turns out to be the surprisingly expensive component of the Azure AI gateway stack.

Standard vs Premium APIM

While the "Basic" and "Standard" tiers of APIM appear affordable, they lack a critical feature for production environments: VNET Integration. The "Developer" tier supports it but carries no SLA, which rules it out for production traffic.

Regulated industries, such as Finance and Healthcare, typically mandate private networking for all AI traffic and data flows to ensure compliance. This security requirement forces most enterprises to bypass the cheaper tiers and upgrade directly to the Premium APIM tier, regardless of their actual traffic volume.

The Enterprise Price Tag

The upgrade to Premium APIM introduces a massive jump in baseline costs. It carries a high fixed monthly fee, approximately $2,795 per unit per month, that applies regardless of usage.

For startups and mid-sized teams, this creates a significant barrier to entry. You could end up paying tens of thousands of dollars annually just for the privilege of having a secure gateway, before a single model inference is ever performed.
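The arithmetic behind that claim can be sketched in a few lines. The monthly figure is the approximate Premium price quoted in this article's FAQ; the unit counts and request volumes are hypothetical inputs.

```python
PREMIUM_UNIT_MONTHLY_USD = 2795.0  # approximate figure quoted in this article


def annual_gateway_cost(units: int, monthly_per_unit: float = PREMIUM_UNIT_MONTHLY_USD) -> float:
    """Fixed yearly gateway spend, independent of traffic."""
    return units * monthly_per_unit * 12


def fixed_cost_per_request(monthly_requests: int, units: int = 1,
                           monthly_per_unit: float = PREMIUM_UNIT_MONTHLY_USD) -> float:
    """Gateway overhead attributed to each request at a given monthly volume."""
    if monthly_requests <= 0:
        raise ValueError("monthly_requests must be positive")
    return units * monthly_per_unit / monthly_requests


if __name__ == "__main__":
    print(f"1 unit:  ${annual_gateway_cost(1):,.0f} per year")
    print(f"2 units: ${annual_gateway_cost(2):,.0f} per year")
    print(f"Overhead at 1M requests/month: ${fixed_cost_per_request(1_000_000):.6f} per request")
```

The per-request overhead shrinks as volume grows, which is why the fixed fee weighs most heavily on low-traffic teams.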

The Commitment Trade-off: Paying for Predictable Performance

Azure’s solution to latency and reliability issues introduces another major pricing commitment known as Provisioned Throughput Units (PTUs).

The Noisy Neighbor Problem

On standard "Pay-as-You-Go" tiers, your requests share compute capacity with other Azure customers. This often leads to the "noisy neighbor" effect, where AI request latency can fluctuate due to multi-tenant resource contention. As your application traffic scales, this unpredictability can degrade the user experience, forcing teams to seek more stable alternatives.

Provisioned Throughput Units (PTUs)

To guarantee consistent throughput and latency, Azure offers PTUs. However, this stability comes at the cost of flexibility. PTUs require long-term commitments (usually monthly or yearly), effectively converting your variable Azure AI costs into large, fixed infrastructure expenses. You are forced to pay for the maximum capacity you might need, rather than the capacity you actually use.
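One way to reason about this trade-off is a break-even calculation: the monthly token volume at which pay-as-you-go spend would equal the fixed commitment. The sketch below assumes a single blended price per million tokens and a flat monthly commitment, both hypothetical inputs, and it deliberately ignores the latency benefit that motivates PTUs in the first place.

```python
def break_even_tokens(committed_monthly_usd: float, payg_price_per_million: float) -> float:
    """Monthly tokens at which pay-as-you-go spend equals the fixed commitment."""
    if payg_price_per_million <= 0:
        raise ValueError("payg_price_per_million must be positive")
    return committed_monthly_usd / payg_price_per_million * 1_000_000


def cheaper_option(monthly_tokens: float, committed_monthly_usd: float,
                   payg_price_per_million: float) -> str:
    """Compare on cost alone; below break-even the commitment pays for idle capacity."""
    payg_spend = monthly_tokens / 1_000_000 * payg_price_per_million
    return "pay-as-you-go" if payg_spend < committed_monthly_usd else "provisioned"


if __name__ == "__main__":
    commitment = 10_000.0  # hypothetical monthly commitment in USD
    blended = 10.0         # hypothetical blended USD per million tokens
    print(f"Break-even: {break_even_tokens(commitment, blended):,.0f} tokens per month")
    print(cheaper_option(500_000_000, commitment, blended))
```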

Unforeseen Operational Costs in Azure AI Studio

Beyond core services, several smaller operational fees accumulate across the Azure AI ecosystem.

Content Safety and Responsible AI Filters

Azure enforces default safety and moderation checks on AI inputs and outputs. While valuable, these come with processing fees. High-volume filtering or enabling advanced features like jailbreak detection increases the per-request processing cost. These costs scale linearly with your traffic, meaning that as you grow, your "safety bill" grows with you.

Monitoring and Observability Costs

Observability is crucial, but storing prompt and response logs in Azure Monitor or Application Insights can be surprisingly expensive. Ingestion and retention fees increase rapidly with high-volume AI workloads, especially if you are logging full prompts for debugging. Per GB, Azure Monitor costs considerably more than standard Blob storage, and that gap multiplies observability costs as log volume grows.
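A back-of-the-envelope estimate makes the effect of log volume concrete. The sketch below converts request volume and average logged payload size into GB per month, then prices that volume at two per-GB rates. Both rates are placeholder assumptions for illustration, not published Azure prices.

```python
def monthly_log_gb(requests_per_day: int, avg_bytes_per_request: int, days: int = 30) -> float:
    """Approximate log volume per month in GB (using 1 GB = 1e9 bytes)."""
    return requests_per_day * avg_bytes_per_request * days / 1e9


def monthly_log_cost(gb: float, price_per_gb: float) -> float:
    """Monthly cost of storing or ingesting the given volume at a flat per-GB price."""
    return gb * price_per_gb


if __name__ == "__main__":
    volume = monthly_log_gb(requests_per_day=1_000_000, avg_bytes_per_request=4_000)
    analytics_rate = 2.50  # hypothetical USD per GB for a log analytics service
    blob_rate = 0.02       # hypothetical USD per GB for object storage
    print(f"Volume: {volume:.0f} GB per month")
    print(f"Log analytics: ${monthly_log_cost(volume, analytics_rate):,.2f}")
    print(f"Object storage: ${monthly_log_cost(volume, blob_rate):,.2f}")
```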

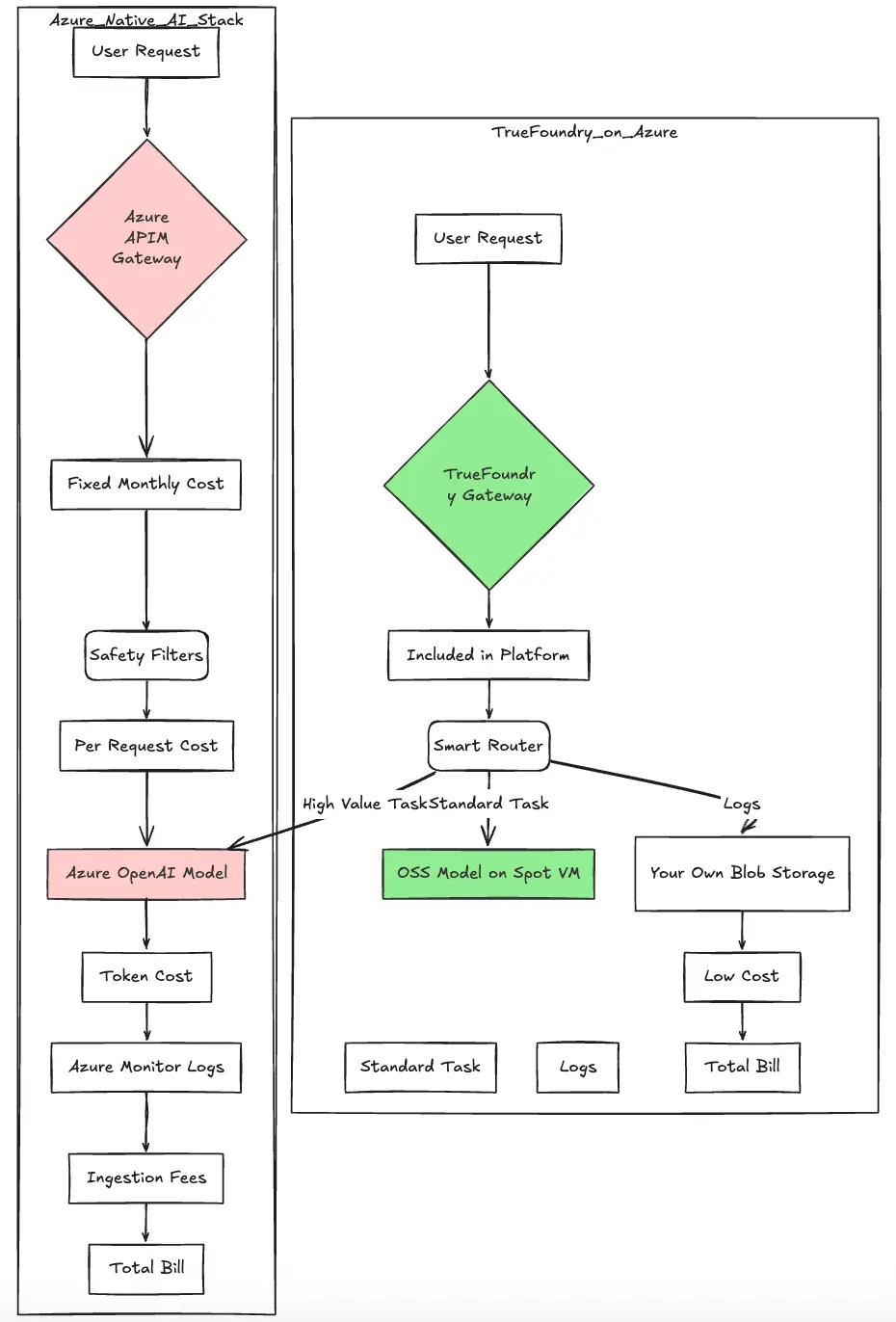

TrueFoundry vs Azure Native AI Stack: Workflow Comparison

When Do Azure AI Native Features Make Sense?

Despite its layered cost structure, Azure’s native AI stack remains the right choice in specific enterprise scenarios:

- Deep Ecosystem Integration: If you are building Copilot-style applications that need deep access to SharePoint, Teams, and Microsoft Graph API data.

- Leveraging Enterprise Agreements (EA): Large organizations often have massive Azure commit buckets that can be used to temporarily offset Azure AI usage costs.

- Centralized Compliance: When IT governance demands a single vendor for all billing, security, and compliance controls, the premium is often viewed as a necessary cost of doing business.

Why Teams Add TrueFoundry to Their Azure Subscription

Many organizations adopt a hybrid approach rather than relying exclusively on Azure’s native AI stack. They use TrueFoundry to orchestrate workloads on top of their Azure infrastructure.

- Hybrid Compute: TrueFoundry allows you to host open-source models on your own Azure Kubernetes Service (AKS) clusters using low-cost Spot Instances.

- Selective Routing: Azure OpenAI is reserved only for complex or high-value inference tasks, while cheaper open-source models handle the bulk of routine traffic.

- Result: This approach significantly reduces the overall Azure AI pricing impact while keeping data inside your Azure account.
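The selective routing idea above can be sketched as a simple rule: send complex or very long requests to the premium model and everything else to a cheaper one. This is a minimal illustration, not TrueFoundry's routing implementation; the route names, task labels, threshold, and prices are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    cost_per_million_tokens: float  # hypothetical blended price in USD


PREMIUM = Route("azure-openai-large", 10.0)     # hypothetical route
BUDGET = Route("self-hosted-open-model", 1.0)   # hypothetical route

COMPLEX_TASKS = {"reasoning", "code-generation"}  # illustrative task labels


def choose_route(task_type: str, prompt_tokens: int, long_prompt_threshold: int = 4_000) -> Route:
    """Reserve the premium model for complex tasks or unusually long prompts."""
    if task_type in COMPLEX_TASKS or prompt_tokens > long_prompt_threshold:
        return PREMIUM
    return BUDGET


def blended_cost_per_million(premium_share: float) -> float:
    """Average price per million tokens when a given share of traffic goes premium."""
    if not 0.0 <= premium_share <= 1.0:
        raise ValueError("premium_share must be between 0 and 1")
    return (premium_share * PREMIUM.cost_per_million_tokens
            + (1.0 - premium_share) * BUDGET.cost_per_million_tokens)


if __name__ == "__main__":
    print(choose_route("classification", prompt_tokens=200).name)
    print(choose_route("reasoning", prompt_tokens=200).name)
    print(f"Blended: ${blended_cost_per_million(0.2):.2f} per million tokens")
```

In practice the routing signal could come from a classifier, a request header, or per-application configuration; the rule here is kept deliberately simple.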

How TrueFoundry Removes the Azure “Infrastructure Tax”

TrueFoundry simplifies Azure AI pricing by flattening layered costs into a unified control plane.

- Built-in AI Gateway: TrueFoundry includes a robust AI gateway, eliminating the need to pay for Azure API Management entirely. This unified AI deployment strategy allows organizations to manage multiple providers and prompts from a single, secure interface.

- No Premium for Security: Secure VNET deployment is a standard feature, not an upsell requiring premium tiers. Enterprises can quickly establish this secure perimeter by following our step-by-step guides for Azure integration and Azure Repos set-up.

- Smart Routing vs PTUs: Instead of buying expensive PTUs for reliability, TrueFoundry uses smart routing to automatically fail over traffic across different regions or even different providers (like AWS or GCP) if Azure experiences latency.

- Unified Control: It enables unified routing across Azure OpenAI, AWS Bedrock, and private models from a single interface. This includes native support for training and fine-tuning, ensuring your custom models are as cost-effective as your inference tasks.
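The failover behaviour described in this list follows a general pattern: try backends in priority order and move to the next one when a call fails. The sketch below shows that pattern with stand-in functions; it is not TrueFoundry's API, and the backend names and stub callables are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# A backend is a (name, callable) pair; the callable takes a prompt and returns text.
Backend = Tuple[str, Callable[[str], str]]


class AllBackendsFailed(RuntimeError):
    """Raised when every backend in the chain has failed."""


def call_with_failover(backends: Sequence[Backend], prompt: str) -> Tuple[str, str]:
    """Try each backend in order; return (backend_name, response) from the first success."""
    errors: List[str] = []
    for name, call in backends:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real gateway would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))


# Stand-in backends for demonstration only.
def primary_timing_out(prompt: str) -> str:
    raise TimeoutError("simulated latency spike")


def secondary_healthy(prompt: str) -> str:
    return f"echo: {prompt}"


chain: List[Backend] = [
    ("azure-primary", primary_timing_out),
    ("other-region", secondary_healthy),
]

if __name__ == "__main__":
    served_by, response = call_with_failover(chain, "hello")
    print(served_by, response)
```

A production router would typically add timeouts, health checks, and retry budgets on top of this ordering logic.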

Azure AI Ecosystem vs TrueFoundry on Azure

A side-by-side comparison highlights architectural and cost differences at scale.

Don’t Let Infrastructure Costs Eat Your AI Budget

Azure provides powerful AI capabilities, but the "infrastructure tax" is real and persistent. You shouldn't have to pay a premium on gateways and networking just to access your models. TrueFoundry allows you to stay on Azure while regaining control of your costs.

While Azure provides the tools, TrueFoundry provides the economic discipline to scale. If you're ready to stop paying for expensive gateway tiers and want to see how much your organization can save, book a demo with TrueFoundry to calculate your potential savings today.

Frequently Asked Questions

What is an Azure gateway?

An Azure gateway generally refers to Azure API Management (APIM) when used to manage traffic for AI services. It acts as a middleware layer handling authentication, rate limiting, and routing between your applications and backend services like Azure OpenAI.

What makes TrueFoundry more cost-effective than the Azure AI gateway?

TrueFoundry eliminates the need for the expensive Azure API Management Premium tier by providing a built-in AI gateway. Additionally, it lowers compute costs by enabling the use of Spot Instances for hosting open-source models and reduces storage costs by logging data to standard Blob storage rather than Azure Monitor.

How do you purchase Azure AI?

You can purchase Azure AI services through the Azure Portal using a Pay-As-You-Go subscription or an Enterprise Agreement. Costs are billed monthly based on consumption (tokens, compute hours, and gateway uptime).

How much does Azure AI gateway cost?

The cost depends heavily on your security requirements. For simple setups, the Basic tier starts at approximately $147 per month. However, for enterprise environments that require the gateway to be deployed entirely inside a private virtual network (VNET Injection), you must upgrade to the Premium tier. This tier costs approximately $2,795 per month per unit, a fixed infrastructure cost that applies regardless of your actual AI traffic volume.

Source: Azure API Management Pricing

