Vercel AI Alternatives: 8 Top Picks You Can Try in 2026

Vercel revolutionized the initial setup for AI integration. Their AI SDK reduces the boilerplate required to connect a Next.js frontend to OpenAI’s API, and the Edge Runtime handles streaming infrastructure beautifully. For prototyping, B2C wrappers, or low-traffic internal tools, the Vercel ecosystem remains a top-tier choice.

However, Vercel’s architecture is optimized for frontend delivery, not machine learning operations. As applications graduate from prototype to high-scale production, engineering teams often encounter architectural ceilings: cost scaling driven by function duration, the complexity of deploying custom fine-tuned models (e.g., Llama 3 or Mistral) inside a private VPC, and a need for granular control over the inference stack.

TrueFoundry has emerged as a primary choice for engineering teams requiring Vercel-like developer experience (DX) applied to their own cloud infrastructure. This report evaluates eight alternatives based on infrastructure ownership, model flexibility, and unit economics.

Why Do Teams Migrate from Vercel AI?

The migration away from Vercel AI usually stems from three specific architectural or operational requirements that emerge at scale.

1. The API-Integration Constraint

Vercel’s AI SDK is primarily designed to chain frontend requests to third-party APIs like OpenAI or Anthropic. This architecture works well for general-purpose hosted models but creates friction when teams need to deploy self-hosted, fine-tuned models. Swapping an external GPT-4 call for a 7B-parameter model running on a T4 GPU typically requires re-architecting the backend logic, because the team now has to serve the model itself rather than wrap an existing API.
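Part of that rework is pointing application code at a different endpoint. A minimal sketch, assuming the self-hosted model sits behind a server that accepts the OpenAI chat-completions payload shape (check this for your own inference server): the request stays the same and only the configuration changes. The `build_chat_request` helper, the internal URL, and the model names are hypothetical, and the code builds the request without sending it.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request.

    Assumes the target server accepts the OpenAI chat-completions payload
    at <base_url>/chat/completions; this is an assumption, not a guarantee.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hosted provider vs. a hypothetical in-VPC endpoint: only the config differs.
hosted = build_chat_request("https://api.openai.com/v1", "sk-placeholder", "gpt-4", "Hello")
private = build_chat_request("http://llm.internal.example:8000/v1", "unused", "my-finetuned-7b", "Hello")

print(hosted.full_url)
print(private.full_url)
```

The harder parts of the migration (GPU provisioning, autoscaling, model packaging) are not covered by this sketch.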

2. Data Privacy & VPC Compliance

Regulated industries (FinTech, Healthcare) often operate under strict mandates regarding data residency. Enterprise security policies frequently require that inference occur within a private VPC (Virtual Private Cloud) where data ingress/egress is strictly controlled by the customer. While Vercel offers strong security measures, it operates as a multi-tenant PaaS. Many enterprises prefer—or are required—to own the entire compute stack inside their own AWS or GCP accounts.

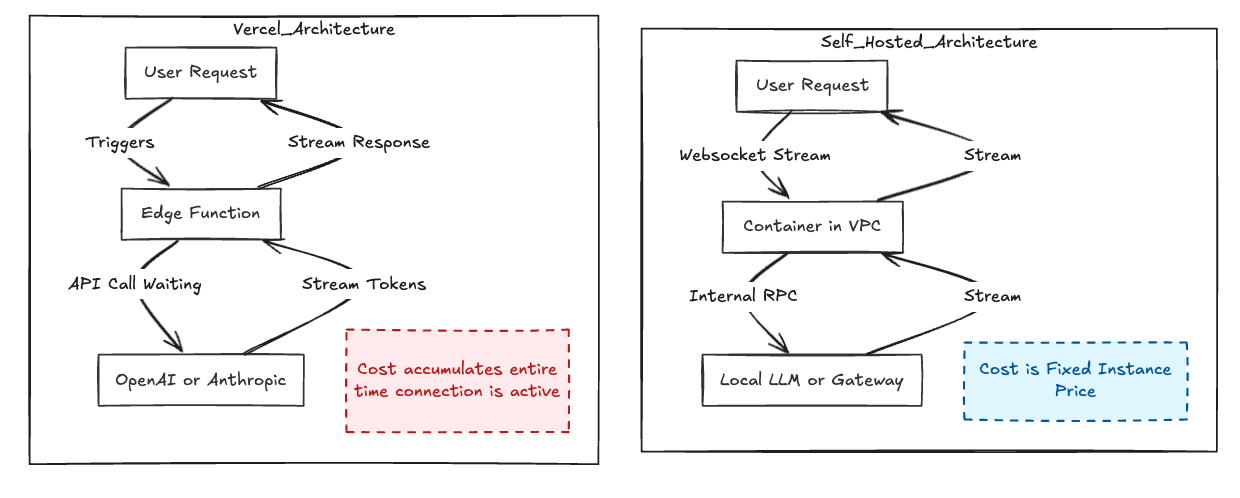

3. The "Function Duration" Cost Model

Vercel’s Serverless Function pricing is largely based on GB-seconds (memory allocation × duration).

- Standard Web App: A request takes 200ms.

- LLM App: A stream can take 20–40 seconds.

The Cost Impact:

Running a high-traffic AI app on a serverless model means paying for the compute time while the server simply "waits" for the LLM to generate tokens. At scale, this billing model can result in significantly higher costs compared to a containerized service running on fixed-cost instances.
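To make the duration effect concrete, the sketch below compares the GB-seconds consumed by a 200 ms web request and a 30-second LLM stream. The memory size, monthly request volume, and unit price are placeholder assumptions for illustration, not Vercel's published rates.

```python
# Illustrative arithmetic only: memory size, traffic, and price are assumed
# placeholders, not figures taken from any pricing page.

def gb_seconds(memory_gb: float, duration_s: float, requests: int) -> float:
    """Billable GB-seconds = memory allocation x duration x request count."""
    return memory_gb * duration_s * requests


MEMORY_GB = 1.0                  # assumed function memory
REQUESTS_PER_MONTH = 1_000_000   # assumed traffic
PRICE_PER_GB_SECOND = 0.00002    # hypothetical unit price in USD

web = gb_seconds(MEMORY_GB, 0.2, REQUESTS_PER_MONTH)    # 200 ms request
llm = gb_seconds(MEMORY_GB, 30.0, REQUESTS_PER_MONTH)   # 30 s stream

print(f"web app: {web:,.0f} GB-s, about ${web * PRICE_PER_GB_SECOND:,.2f}")
print(f"LLM app: {llm:,.0f} GB-s, about ${llm * PRICE_PER_GB_SECOND:,.2f}")
print(f"duration multiplier: {llm / web:.0f}x")
```

Whatever the actual unit price, the ratio between the two workloads depends only on duration: under these assumptions the streaming workload consumes 150 times the GB-seconds for the same request count.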

How Did We Evaluate These Alternatives?

We prioritized engineering constraints over marketing claims. The alternatives below were scored against four technical criteria:

- Model Agnosticism & Self-Hosting: The platform must support arbitrary model deployment (Hugging Face weights, Docker containers) and deployment into a customer-owned VPC.

- Enterprise Security & Compliance: Support for SOC 2 compliance controls, RBAC (Role-Based Access Control), and air-gapped deployments.

- Observability: The ability to trace distinct steps in a RAG pipeline and log latencies such as TTFT (Time To First Token).

- Framework Flexibility: The tool must operate independently of the Next.js ecosystem, supporting Python (FastAPI/Flask) or Go backends.
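The TTFT metric in the observability criterion can be captured with very little code. The following is a minimal sketch that times any iterable of tokens; `fake_llm_stream` is a hypothetical stand-in for a real streaming response, and its sleep values are arbitrary.

```python
import time
from typing import Iterable, Iterator, Tuple


def measure_stream(tokens: Iterable[str]) -> Tuple[str, float, float]:
    """Consume a token stream and return (text, ttft_seconds, total_seconds)."""
    start = time.perf_counter()
    ttft = None
    parts = []
    for token in tokens:
        if ttft is None:
            ttft = time.perf_counter() - start  # first token arrived
        parts.append(token)
    total = time.perf_counter() - start
    # If the stream was empty, report the total time as TTFT.
    return "".join(parts), (ttft if ttft is not None else total), total


def fake_llm_stream() -> Iterator[str]:
    """Stand-in for a real streaming response (hypothetical)."""
    time.sleep(0.05)          # simulated queueing and prefill before the first token
    for word in ["Hello", ", ", "world"]:
        yield word
        time.sleep(0.01)      # simulated per-token decode time


text, ttft, total = measure_stream(fake_llm_stream())
print(f"text={text!r} ttft={ttft * 1000:.0f} ms total={total * 1000:.0f} ms")
```

Logging TTFT separately from total duration matters because users perceive the first as responsiveness, while the second drives duration-based billing.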

The Top 8 Vercel AI Alternatives

1. TrueFoundry

Best for: Teams seeking full infrastructure ownership without Kubernetes complexity.

TrueFoundry functions as a Machine Learning Platform as a Service (MLPaaS) that installs directly onto your existing cloud (AWS, GCP, Azure). Unlike Vercel, which abstracts the infrastructure to prioritize frontend speed, TrueFoundry abstracts the complexity of Kubernetes while retaining full control over the underlying compute. It decouples the "Brain" (Model Inference/Training) from the "UI," allowing teams to deploy fine-tuned open-source models alongside their application logic within their own security perimeter.

Key Features

- Model Registry & Deployment: One-click deployment of Hugging Face models, standardizing the packaging of weights and Docker containers. It automatically configures CUDA drivers and resource requests.

- LLM Gateway: A centralized routing layer that normalizes APIs across providers (OpenAI, Azure, Local LLMs). Handles failover, retries, and caching.

- FinOps Dashboard: Provides granular visibility into GPU utilization and inference costs. Users can set budget limits per project.

- Fine-Tuning Jobs: Native support for orchestration of LoRA/QLoRA fine-tuning jobs on your own data.
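The failover and retry behavior described for the LLM Gateway can be illustrated with a generic sketch. This is not TrueFoundry's implementation or API; `route_with_fallback` and the two provider functions are hypothetical names used only to show the pattern.

```python
from typing import Callable, Sequence, Tuple

Provider = Tuple[str, Callable[[str], str]]  # (name, function taking a prompt)


def route_with_fallback(
    prompt: str,
    providers: Sequence[Provider],
    retries_per_provider: int = 2,
) -> Tuple[str, str]:
    """Try providers in order; retry each a few times before moving to the next."""
    errors = []
    for name, call in providers:
        for attempt in range(1, retries_per_provider + 1):
            try:
                return name, call(prompt)
            except Exception as exc:  # a real gateway would catch narrower error types
                errors.append(f"{name} attempt {attempt}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def flaky_primary(prompt: str) -> str:
    raise TimeoutError("upstream timed out")   # simulated outage


def local_model(prompt: str) -> str:
    return f"[local] echo: {prompt}"           # simulated self-hosted model


used, answer = route_with_fallback(
    "ping", [("primary", flaky_primary), ("local", local_model)]
)
print(used, answer)
```

A production gateway would typically add backoff between retries and response caching; both are omitted here to keep the control flow visible.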

Why TrueFoundry?

TrueFoundry addresses the unit economics of scaling AI. By deploying inference servers on reserved or spot instances within your VPC, teams can eliminate the markup on data transfer and serverless execution time. Because you own the model weights and the data, compliance with data sovereignty requirements (GDPR, HIPAA) is streamlined.

Pricing

- Developer Plan: Free for individual developers/small teams.

- Pro Plan: Usage-based management fee (starts ~$0.03/hr/vCPU).

- Enterprise: Custom volume discounts and SLA guarantees.

- Note: Users pay their cloud provider directly for raw compute costs.

2. Portkey

Best for: Teams who want a "Serverless" feel with better reliability and observability.

Portkey acts as middleware between the application and model providers. While it does not manage underlying GPU infrastructure like TrueFoundry, it hardens API-based AI apps for production through advanced routing.

Key Features

- AI Gateway: Unified API signature for 200+ LLMs with load balancing and fallbacks.

- Observability: Captures latency metrics (P99, TTFT) and cost per request.

- Prompt Management: A version-controlled CMS for prompts.

Pros & Cons

- Pros: Fast integration; open-source core available.

- Cons: Strictly an intermediary layer—does not host custom models or manage GPU infrastructure.

3. LangChain & LangSmith

Best for: Complex agentic workflows and application logic.

LangChain is an orchestration framework, and LangSmith is its companion platform for tracing. This is less of a hosting competitor and more of a logic-layer alternative.

Key Features

- Deep Tracing: Visualizes the entire chain (Retrieval, LLM, Parser).

- Evaluation: Run regression tests on prompts against "Golden Datasets."
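The idea behind regression testing against a golden dataset can be sketched in plain Python. This does not use the LangSmith API; `run_regression`, the tiny dataset, and the canned answers are hypothetical, and the substring check is a deliberately simple stand-in for a real evaluator.

```python
from typing import Callable, Dict, List


def run_regression(generate: Callable[[str], str], golden: List[Dict[str, str]]) -> Dict[str, object]:
    """Run every golden example and report which ones no longer contain the expected answer."""
    failures = []
    for example in golden:
        output = generate(example["input"])
        if example["expected"].lower() not in output.lower():
            failures.append(example["input"])
    passed = len(golden) - len(failures)
    return {"pass_rate": passed / len(golden), "failures": failures}


GOLDEN = [
    {"input": "capital of France?", "expected": "Paris"},
    {"input": "2 + 2?", "expected": "4"},
]


def candidate_prompt_v2(question: str) -> str:
    """Stand-in for a prompt plus model call (hypothetical canned answers)."""
    canned = {
        "capital of France?": "The capital is Paris.",
        "2 + 2?": "It equals five.",
    }
    return canned[question]


report = run_regression(candidate_prompt_v2, GOLDEN)
print(report)
```

Running the same golden set before and after a prompt change turns "the new prompt feels better" into a pass rate that can gate a release.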

Pros & Cons

- Pros: Essential for debugging complex agents.

- Cons: Steep learning curve; the library adds abstraction overhead compared to raw API calls.

4. LlamaIndex

Best for: Advanced RAG (Retrieval-Augmented Generation) pipelines.

LlamaIndex focuses specifically on data ingestion and retrieval. It is the go-to component for the "Context" part of the stack, offering deeper data handling than Vercel’s basic vector integrations.

Key Features

- Data Connectors: Ingest from 100+ sources (Notion, SQL, Discord).

- Advanced Indexing: Supports hierarchical indices and hybrid search.
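Hybrid search combines a keyword ranking with a vector-similarity ranking. One widely used way to merge the two lists is reciprocal rank fusion (RRF), sketched below. This illustrates the general technique and is not a description of how LlamaIndex implements it; the document ids and rankings are made up.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge ranked lists: each document scores sum(1 / (k + rank)) over the lists it appears in."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by score, highest first; break ties by id for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))


keyword_hits = ["doc_a", "doc_b", "doc_c"]   # e.g. from a keyword index (made-up results)
vector_hits = ["doc_c", "doc_a", "doc_d"]    # e.g. from embedding similarity (made-up results)

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)  # ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

Documents that appear in both lists (`doc_a`, `doc_c`) rise above documents found by only one retriever, which is the behavior hybrid search is meant to provide.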

Pros & Cons

- Pros: Optimized for structured/unstructured data handling.

- Cons: Not a serving platform for models; requires integration with a compute provider.

5. Haystack by Deepset

Best for: Python-centric Search and QA pipelines.

Unlike Vercel’s JavaScript-centric ecosystem, Haystack is Python-based, making it a standard choice for data science teams building NLP pipelines.

Key Features

- Modular Pipelines: Workflows defined as Directed Acyclic Graphs (DAGs).

- Deepset Cloud: Managed platform for deploying these pipelines.
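To show what "workflows defined as DAGs" means in practice, here is a minimal sketch built on Python's standard-library `graphlib`. It is not Haystack's API; the step names, the lambda bodies, and `run_pipeline` are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable, Dict

# Each step reads the shared state dict and returns the value stored under its own name.
steps: Dict[str, Callable[[dict], object]] = {
    "query":     lambda s: "what is a DAG?",
    "retriever": lambda s: [f"passage about: {s['query']}"],
    "prompt":    lambda s: f"Answer '{s['query']}' using {len(s['retriever'])} passage(s).",
    "generator": lambda s: f"(model output for) {s['prompt']}",
}

# node -> set of nodes it depends on
edges = {
    "query": set(),
    "retriever": {"query"},
    "prompt": {"query", "retriever"},
    "generator": {"prompt"},
}


def run_pipeline() -> dict:
    """Execute every step after all of its dependencies have produced output."""
    state: dict = {}
    for node in TopologicalSorter(edges).static_order():  # dependencies first
        state[node] = steps[node](state)
    return state


result = run_pipeline()
print(result["generator"])
```

Because the graph is acyclic, a topological order always exists, and swapping one component (for example, a different retriever) does not require touching the other steps.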

Pros & Cons

- Pros: Python-native; modular; strong enterprise support.

- Cons: Higher barrier to entry for frontend developers used to TypeScript.

6. LiteLLM

Best for: DIY Proxy Server management.

LiteLLM is a lightweight, open-source proxy server that standardizes I/O formats to the OpenAI schema. It is a self-hosted alternative to Vercel AI SDK’s routing.

Key Features

- Unified Interface: Call Bedrock, Azure, and HuggingFace using standard chat payloads.

- Budgeting: Set budgets per API key.
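Per-key budgeting boils down to tracking spend per API key and rejecting requests that would cross the limit. The sketch below shows that logic in isolation; it is not LiteLLM's API or configuration format, and `KeyBudget`, `BudgetExceeded`, and the key name are hypothetical.

```python
class BudgetExceeded(Exception):
    """Raised when a charge would push a key past its budget."""


class KeyBudget:
    """Tracks spend per API key and rejects charges that would exceed the key's budget."""

    def __init__(self, limits_usd: dict):
        self.limits = dict(limits_usd)
        self.spent = {key: 0.0 for key in limits_usd}

    def charge(self, api_key: str, cost_usd: float) -> float:
        """Record a charge and return the remaining budget for the key."""
        if api_key not in self.limits:
            raise KeyError(f"unknown API key: {api_key}")
        if self.spent[api_key] + cost_usd > self.limits[api_key]:
            raise BudgetExceeded(f"{api_key} would exceed ${self.limits[api_key]:.2f}")
        self.spent[api_key] += cost_usd
        return self.limits[api_key] - self.spent[api_key]


budgets = KeyBudget({"team-search": 1.00})
remaining = budgets.charge("team-search", 0.40)
print(f"remaining: ${remaining:.2f}")
try:
    budgets.charge("team-search", 0.75)   # 0.40 + 0.75 exceeds the 1.00 limit
except BudgetExceeded as exc:
    print("blocked:", exc)
```

A proxy would run this check before forwarding a request, using an estimated or actual token cost for `cost_usd`.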

Pros & Cons

- Pros: Low latency; no vendor lock-in.

- Cons: Self-managed; requires you to handle hosting and scaling of the proxy.

7. Weights & Biases (W&B)

Best for: Model training metrics and prompt engineering evaluation.

Weights & Biases is used during the development of the model or prompt strategy (LLMOps), rather than the deployment of the final app.

Key Features

- Experiment Tracking: Logs hyperparameters and loss curves for fine-tuning.

- W&B Prompts: Analyzes prompt performance.

Pros & Cons

- Pros: The industry standard for ML metrics.

- Cons: Not a hosting platform—integrates with platforms like TrueFoundry for deployment.

8. Cloud-Native Options (AWS Bedrock / Azure OpenAI)

Best for: Teams with a single-cloud procurement mandate.

AWS Bedrock and Azure OpenAI are managed services from the major cloud providers. They let teams consume models via API without managing servers, while keeping data within the compliance boundary of an existing cloud contract.

Key Features

- Private Connectivity: Access via PrivateLink/Private Endpoints.

- Provisioned Throughput: Reserve capacity (TPM) to guarantee latency.
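When sizing a reservation expressed in tokens per minute (TPM), a rough first estimate is request rate multiplied by tokens per request, plus headroom. The sketch below shows that arithmetic; the traffic figures and the 20% headroom are assumptions, and each provider defines its own capacity units, so treat the result as a starting point only.

```python
import math


def required_tpm(
    requests_per_minute: float,
    prompt_tokens: int,
    completion_tokens: int,
    headroom: float = 0.2,
) -> int:
    """Estimate tokens per minute to reserve: rate x tokens per request, plus headroom."""
    base = requests_per_minute * (prompt_tokens + completion_tokens)
    return math.ceil(base * (1 + headroom))


# Assumed workload: 120 requests/min, 1,500 prompt tokens, 500 completion tokens.
estimate = required_tpm(120, 1500, 500)
print(f"reserve roughly {estimate:,} TPM")
```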

Pros & Cons

- Pros: Consolidated billing and high compliance standards.

- Cons: Vendor lock-in; UI/UX is generally less intuitive than specialized startups.

Summary: Selecting the Right Architecture

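To select the right architecture for 2026, map your team's primary constraint to the matching option:

- Full infrastructure ownership without Kubernetes complexity: TrueFoundry

- A serverless feel with better reliability and observability: Portkey

- Complex agentic workflows and application logic: LangChain & LangSmith

- Advanced RAG pipelines: LlamaIndex

- Python-centric search and QA pipelines: Haystack by Deepset

- DIY proxy server management: LiteLLM

- Model training metrics and prompt evaluation: Weights & Biases

- A single-cloud procurement mandate: AWS Bedrock / Azure OpenAI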

Conclusion

Vercel is an effective launchpad for AI functionality, but its abstraction layer can become a constraint as technical requirements increase. 2026 will be defined by infrastructure ownership. As AI features transition from "nice-to-have" to core product value, the ability to control the inference runtime, manage GPU costs directly, and secure data within a private boundary is essential.

TrueFoundry bridges the gap between Vercel's developer experience and the operational rigor required for production AI. It provides platform engineering teams with necessary controls without sacrificing the agility that frontend developers expect.

Frequently Asked Questions

Who are the competitors of Vercel?

In general web hosting, competitors include Netlify, AWS Amplify, and Cloudflare Pages. In the AI infrastructure space, the primary competitors are TrueFoundry (for full stack/backend control), AWS Bedrock (for managed models), and Portkey (for API gateway capabilities).

Is there anything better than Vercel?

For simple websites and B2C apps, Vercel is a strong choice. However, for teams building AI-first products that require custom model deployment, fine-tuning, or strict VPC compliance, TrueFoundry is often the preferred alternative as it removes the cost overhead of Vercel's serverless model.

Is Cloudflare better than Vercel?

Cloudflare (specifically Workers AI) can offer low-latency edge inference at competitive compute costs. However, its developer experience is less tightly integrated with frameworks like Next.js than Vercel's. For enterprise-grade AI orchestration that extends beyond simple edge functions, TrueFoundry offers a more comprehensive management suite than either.
