Top 9 Cloudflare AI Alternatives and Competitors For 2026 (Ranked)

Cloudflare Workers AI changed the game for edge inference. For low-latency, lightweight tasks, running models on Cloudflare’s global network is brilliant.

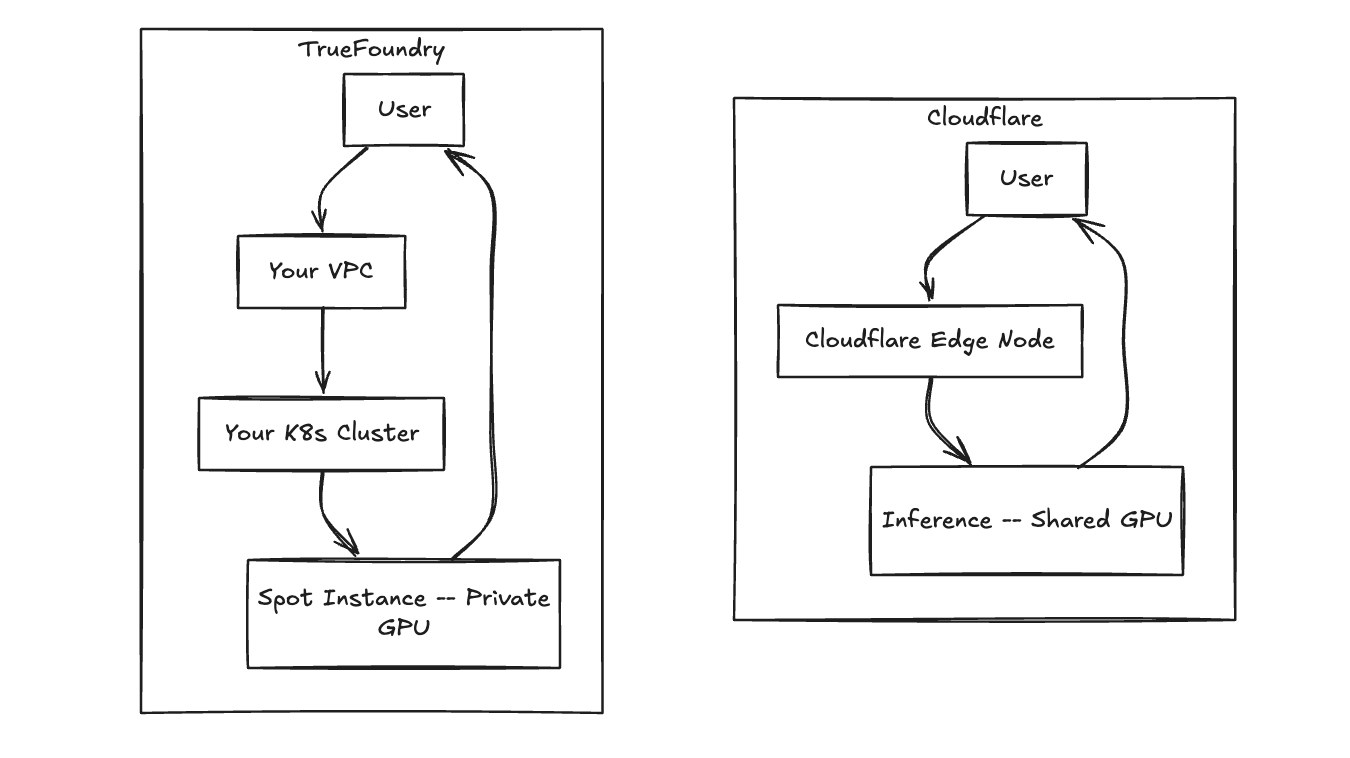

However, as AI workloads scale to 2026 standards (think massive RAG pipelines, agentic workflows requiring long-context reasoning, and fine-tuned 70B+ parameter models), developers often hit a hard ceiling with Cloudflare. The "Serverless at the Edge" promise breaks down in three places. First, vendor lock-in: you are restricted to a specific menu of quantized models rather than your own custom architecture. Second, data privacy compliance: your sensitive payloads are processed in a "black box" environment rather than inside your own VPC. Finally, cost at scale: while per-token pricing is convenient for prototypes, it can carry a heavy markup compared to running optimized Spot Instances on your own cloud infrastructure.

If you are looking for alternatives that offer better control, cost efficiency, and model flexibility, this guide ranks the top 9 options for 2026.

How Did We Evaluate Cloudflare Alternatives?

We didn't just look at the marketing pages. We evaluated these platforms based on the four criteria that actually matter to engineering teams.

First, we looked at Infrastructure Control. We prioritized platforms that allow you to run workloads on your own cloud (AWS, GCP, Azure) rather than forcing you into a proprietary SaaS ecosystem. Second, we assessed Model Flexibility. We checked if you can deploy any Docker container or custom fine-tune (like a custom Llama 3 or DeepSeek architecture) or if you are restricted to a pre-selected menu of vendor-approved models.

Third, we analyzed Cost Efficiency. We looked for solutions that let you leverage raw compute pricing and Spot Instances, avoiding the significant markups common in serverless billing models. Finally, we tested the Developer Experience, measuring how fast a team can go from a local Python script to a production-grade API with robust autoscaling.

Top 9 Cloudflare AI Alternatives for 2026

1. TrueFoundry (The Best Overall Alternative)

If Cloudflare is "Serverless Inference," TrueFoundry is a "Sovereign AI Platform." It is designed for enterprises that want the ease of use of a managed platform but insist on keeping the data and compute inside their own cloud accounts (AWS, GCP, or Azure). Instead of renting an API, TrueFoundry orchestrates your Kubernetes clusters to behave like a PaaS, giving you the control of building your own infrastructure without the headache of managing K8s manifests.

Key Features of TrueFoundry

The platform’s standout capability is Hybrid Cloud Deployment (BYOC), which allows you to deploy AI workloads directly into your own VPC. Because your data never leaves your environment, strict SOC 2 and HIPAA requirements become much easier to satisfy. On top of this infrastructure, TrueFoundry provides a comprehensive AI Gateway. This unified control plane routes traffic between your private models and public APIs (like OpenAI or Anthropic), handling caching, rate limiting, and failover automatically.
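To make the gateway idea concrete, here is a minimal sketch of caching plus ordered failover in plain Python. It is not TrueFoundry's implementation or API: `TinyGateway`, the provider names, and the two stub functions are hypothetical, and rate limiting is left out to keep the example short.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical provider shape: (name, function that maps a prompt to text).
Provider = Tuple[str, Callable[[str], str]]


class TinyGateway:
    """Toy gateway: in-memory response cache plus ordered failover."""

    def __init__(self, providers: List[Provider]) -> None:
        self.providers = providers
        self.cache: Dict[str, str] = {}

    def complete(self, prompt: str) -> Tuple[str, str]:
        """Return (source, text); source is 'cache' or the provider that answered."""
        if prompt in self.cache:
            return "cache", self.cache[prompt]
        errors = []
        for name, call in self.providers:
            try:
                text = call(prompt)
            except Exception as exc:  # any provider error triggers failover
                errors.append(f"{name}: {exc}")
                continue
            self.cache[prompt] = text
            return name, text
        raise RuntimeError("all providers failed: " + "; ".join(errors))


def flaky_private_model(prompt: str) -> str:
    # Stand-in for a private endpoint that is currently unavailable.
    raise TimeoutError("private endpoint timed out")


def public_api_stub(prompt: str) -> str:
    # Stand-in for a public API; it simply echoes the prompt.
    return f"echo: {prompt}"


gateway = TinyGateway([("private-llm", flaky_private_model), ("public-api", public_api_stub)])
first = gateway.complete("hello")   # private model fails, public stub answers
second = gateway.complete("hello")  # same prompt is served from the cache
print(first, second)
```

A production gateway would also need cache expiry, per-key rate limits, and retry budgets, none of which this sketch attempts.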

For advanced workflows, TrueFoundry offers native support for the Model Context Protocol (MCP) and Agents Registry, allowing you to deploy autonomous agents that securely access your internal tools and databases. Teams can also leverage the Prompt Lifecycle Management playground to engineer prompts, test them against different models, and version them like code. Perhaps most importantly for the bottom line, the FinOps & Spot Instances engine automates the use of Spot Instances for inference, which can lower compute costs by 60-70% compared to On-Demand or serverless pricing.
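As a rough illustration of what a 60-70% Spot discount means for a monthly bill, the arithmetic can be sketched as below. Every number here is hypothetical (a $4.00/hour GPU instance, 4 always-on replicas, a 65% discount), and the sketch ignores Spot interruptions, storage, and network costs.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month


def monthly_cost(hourly_rate: float, replicas: int, discount: float = 0.0) -> float:
    """Monthly compute cost for always-on replicas at a discounted hourly rate."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return hourly_rate * (1.0 - discount) * replicas * HOURS_PER_MONTH


# Hypothetical inputs, not quoted prices from any provider.
on_demand = monthly_cost(4.00, replicas=4)
spot = monthly_cost(4.00, replicas=4, discount=0.65)
savings = on_demand - spot
print(f"on-demand ${on_demand:,.0f}, spot ${spot:,.0f}, saved ${savings:,.0f}")
```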

Why TrueFoundry is a better choice

TrueFoundry eliminates the "Serverless Tax." You aren't paying a markup on every token; you are paying raw infrastructure costs to your cloud provider. Furthermore, you have zero restrictions on model types—if it runs in a Docker container, it runs on TrueFoundry.

Pricing

The pricing model is straightforward. The Developer Plan is free for individuals. The Scale Plan charges a usage-based platform fee while compute is billed directly by your cloud provider. For larger organizations, the Enterprise plan offers custom volume pricing with SLAs and dedicated support.

What Engineers Say

TrueFoundry boasts a 4.8/5 rating on G2, with engineering teams consistently praising its ability to abstract away Kubernetes complexity while maintaining full control over the underlying instances.

2. AWS Bedrock

Brief Description

AWS Bedrock is Amazon's fully managed service for foundation models. It offers a serverless experience similar to Cloudflare but operates strictly within the AWS ecosystem, providing deep integration with existing cloud resources.

Key Features

The service provides a Unified API that allows you to access models from AI21, Anthropic, Cohere, Meta, and Amazon via a single endpoint. It prioritizes security with Private Connectivity via AWS PrivateLink, ensuring data doesn't traverse the public internet. Additionally, Agents for Bedrock offers built-in orchestration for executing multi-step tasks without managing external logic.

Pricing

Billing is primarily Pay-per-token (On-Demand), though high-volume users can opt for Provisioned Throughput, which charges a fixed hourly rate for guaranteed capacity.
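The choice between the two billing modes comes down to a break-even volume. The sketch below shows that calculation under simplifying assumptions: a single blended per-1K-token price (real pricing usually separates input and output tokens) and made-up figures of $20/hour and $0.002 per 1K tokens, which are not actual AWS prices.

```python
def breakeven_tokens_per_hour(hourly_rate: float, price_per_1k_tokens: float) -> float:
    """Tokens per hour at which a fixed hourly rate costs the same as per-token billing."""
    if price_per_1k_tokens <= 0:
        raise ValueError("price_per_1k_tokens must be positive")
    return hourly_rate / price_per_1k_tokens * 1000


def cheaper_option(tokens_per_hour: float, hourly_rate: float, price_per_1k_tokens: float) -> str:
    """Pick the cheaper billing mode for a steady hourly token volume."""
    on_demand_cost = tokens_per_hour / 1000 * price_per_1k_tokens
    return "provisioned" if hourly_rate < on_demand_cost else "on-demand"


# Hypothetical prices for illustration only.
threshold = breakeven_tokens_per_hour(hourly_rate=20.0, price_per_1k_tokens=0.002)
low_volume = cheaper_option(5_000_000, 20.0, 0.002)
high_volume = cheaper_option(50_000_000, 20.0, 0.002)
print(f"break-even at about {threshold:,.0f} tokens/hour: {low_volume} below, {high_volume} above")
```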

Pros & Cons

The seamless IAM integration and zero-infrastructure management make it excellent for security-conscious teams. However, you are limited to the models AWS chooses to support, and Provisioned Throughput is significantly more expensive than running your own hardware.

Why TrueFoundry is better

Bedrock restricts you to the models AWS supports. TrueFoundry lets you deploy any model (including bleeding-edge open source) on EC2/EKS, often at a lower cost using Spot Instances.

3. RunPod

Brief Description

RunPod is a GPU cloud built for developers who want raw power at the lowest possible price. It effectively creates a marketplace for GPU compute, spanning both community clouds and secure data centers.

Key Features

The platform focuses on GPU Pods, allowing you to host Docker containers on specific high-end GPU types like H100s or A100s. It also offers Serverless Endpoints for pay-per-second auto-scaling inference. The underlying infrastructure relies on a Global Availability network that decentralizes GPU access.

Pricing

RunPod offers some of the most competitive hourly rates in the industry, with A100s often priced lower than major hyperscalers, alongside per-second serverless billing.

Pros & Cons

While the raw compute is incredibly cheap and the variety of GPU types is vast, the "Community Cloud" tier offers lower reliability and security guarantees compared to a private VPC managed by TrueFoundry.

Why TrueFoundry is better

RunPod is infrastructure-focused. TrueFoundry provides the orchestration layer (Gateway, Testing, FinOps) that enterprises need on top of raw compute.

4. Replicate

Brief Description

Replicate is a platform that lets you run machine learning models with a cloud API. It focuses heavily on "one line of code" usability, making it a favorite for rapid prototyping.

Key Features

The platform hosts a massive Model Hub with thousands of open-source models ready to run instantly. It excels at Cold Boot optimization, ensuring fast startup times for serverless models. Additionally, the Fine-tuning API simplifies the complex process of training custom adapters.

Pricing

Replicate uses time-based billing (per second), which varies depending on the hardware tier (CPU vs GPU) required for the model.

Pros & Cons

The Developer Experience (DX) is incredible, and the library of pre-built models is huge. However, it can get very expensive at scale due to the high markup on compute, and cold starts can still introduce latency variance.

Why TrueFoundry is better

Replicate is great for prototyping, but TrueFoundry is better for production scaling because it allows you to bring your own compute, avoiding the markup Replicate charges on top of the GPU cost.

5. Google Vertex AI

Brief Description

Google's unified ML platform offers everything from AutoML to custom training and the "Model Garden" for serving foundation models, all tightly integrated into GCP.

Key Features

The Model Garden provides one-click deployment for over 130 models, including Llama and Gemini. It features managed endpoints with robust Auto-scaling that can scale down to zero when idle. The platform also offers M2M Integration, providing deep hooks into BigQuery and Google Cloud Storage.

Pricing

You pay per-node-hour for hosting custom models or per-character/image for managed APIs.

Pros & Cons

The deep integration with the Google ecosystem and strong MLOps tools are major assets. However, the pricing is complex, the learning curve is steep, and it creates significant vendor lock-in to GCP.

Why TrueFoundry is better

TrueFoundry is cloud-agnostic. You can run workloads on GCP today and move them to AWS tomorrow without rewriting your deployment manifests.

6. Modal

Brief Description

Modal is a serverless platform designed specifically for Python developers. It allows you to define container environments and infrastructure requirements directly in your code.

Key Features

The defining feature is Code-defined Infra, where you specify GPU requirements and dependencies using Python decorators. It uses a proprietary container runtime optimized for Fast cold starts, and offers Distributed primitives that make mapping and queuing functions trivial.
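For readers unfamiliar with the pattern, the toy below shows how a decorator can carry infrastructure requirements alongside the function it wraps. This is deliberately not Modal's API: `remote_function`, `Resources`, and `REGISTRY` are hypothetical names, and the wrapped function simply runs locally.

```python
from dataclasses import dataclass
from functools import wraps
from typing import Callable, Dict, Optional, Tuple


@dataclass(frozen=True)
class Resources:
    """Hypothetical record of what a function needs in order to run."""
    gpu: Optional[str] = None
    pip_packages: Tuple[str, ...] = ()


# Maps function name to its declared requirements.
REGISTRY: Dict[str, Resources] = {}


def remote_function(gpu: Optional[str] = None, pip_packages: Tuple[str, ...] = ()) -> Callable:
    """Decorator factory that records requirements next to the code."""
    def decorator(fn: Callable) -> Callable:
        REGISTRY[fn.__name__] = Resources(gpu=gpu, pip_packages=tuple(pip_packages))

        @wraps(fn)
        def wrapper(*args, **kwargs):
            # A real platform would build a container and schedule fn on the
            # requested hardware; this sketch just calls it in-process.
            return fn(*args, **kwargs)

        return wrapper
    return decorator


@remote_function(gpu="A100", pip_packages=("torch",))
def count_words(text: str) -> int:
    return len(text.split())


word_count = count_words("hello edge world")
print(word_count, REGISTRY["count_words"])
```

The appeal of the pattern is that hardware and dependency requirements are versioned with the function itself rather than in a separate manifest.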

Pricing

Modal charges for execution time plus a markup on the underlying compute resources.

Pros & Cons

It offers a best-in-class DX for Python engineers and enables incredibly fast iteration loops. However, it ties you to a proprietary platform and is mostly suited for batch or async jobs rather than long-running services.

Why TrueFoundry is better

Modal is excellent for jobs, but TrueFoundry provides a more robust solution for long-running services (Services/Deployments) inside your own VPC, which is preferred for enterprise security.

7. Hugging Face Inference Endpoints

Brief Description

This is the official inference solution from the Hugging Face Hub, allowing you to deploy any model hosted on HF to a dedicated cloud endpoint in minutes.

Key Features

It offers Direct Integration, letting you deploy directly from a model card. For enterprise security, it supports Private Endpoints via AWS PrivateLink. You can also perform Container Customization to add custom handlers for specific logic requirements.
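A custom handler is typically a small Python class shipped with the model repository. The sketch below assumes the convention of a `handler.py` file exposing an `EndpointHandler` class with `__init__(path)` and `__call__(data)`; verify the exact contract against the current Hugging Face documentation. The keyword-matching "model" and the `threshold` parameter are stand-ins invented for illustration.

```python
from typing import Any, Dict, List


class EndpointHandler:
    """Sketch of a custom handler; a keyword lookup stands in for a real model."""

    def __init__(self, path: str = "") -> None:
        # A real handler would load model weights from `path` here.
        self.positive_words = {"great", "fast", "love"}

    def __call__(self, data: Dict[str, Any]) -> List[Dict[str, Any]]:
        text = data.get("inputs", "")
        params = data.get("parameters") or {}
        threshold = params.get("threshold", 1)  # hypothetical custom parameter
        # Count words that match the positive list, ignoring case and punctuation.
        hits = sum(
            word.strip(".,!?").lower() in self.positive_words
            for word in text.split()
        )
        label = "POSITIVE" if hits >= threshold else "NEGATIVE"
        return [{"label": label, "hits": hits}]


handler = EndpointHandler()
result = handler({"inputs": "Fast deploys, love it!"})
strict = handler({"inputs": "Fast", "parameters": {"threshold": 2}})
print(result, strict)
```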

Pricing

You pay an hourly rate based on the instance type selected. Paused endpoints incur no charge, but a markup is applied to active compute time.

Pros & Cons

It is the easiest way to deploy HF models and offers secure options for enterprises. However, it is still a managed service wrapper with less control over the underlying networking and cluster configuration than TrueFoundry offers.

Why TrueFoundry is better

TrueFoundry offers broader lifecycle management (fine-tuning, testing, gateway) beyond just the inference endpoint, and runs in your cloud account.

8. Anyscale (Ray)

Brief Description

Built by the creators of Ray, Anyscale is a platform optimized for scaling Python workloads. It excels at distributed training and serving using the Ray framework.

Key Features

The platform is built on Ray Serve, the industry-standard library for scalable model serving. It features Smart Autoscaling that reacts granularly to request metrics and provides a Workspace for interactive development.
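Autoscaling on request metrics usually reduces to a target-tracking rule: keep the number of in-flight requests per replica near a target. The function below is a generic sketch of that rule, not Anyscale's or Ray Serve's actual algorithm; the parameter names and defaults are assumptions.

```python
import math


def desired_replicas(
    ongoing_requests: int,
    target_per_replica: int,
    min_replicas: int = 0,
    max_replicas: int = 10,
) -> int:
    """Replica count that keeps in-flight requests per replica at or below the target."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(ongoing_requests / target_per_replica)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


idle = desired_replicas(0, target_per_replica=5)     # scales to zero when idle
busy = desired_replicas(23, target_per_replica=5)    # rounds up to cover all requests
spike = desired_replicas(500, target_per_replica=5)  # capped at max_replicas
print(idle, busy, spike)
```

Real autoscalers add smoothing and cooldown windows on top of this rule so that short bursts do not cause replicas to thrash.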

Pricing

The cost structure combines pass-through compute costs with a per-hour Anyscale platform management fee.

Pros & Cons

Anyscale offers unmatched scaling capabilities for massive workloads and rests on a solid open-source foundation. However, the complexity is high, and there is a steep learning curve for teams not already familiar with Ray.

Why TrueFoundry is better

TrueFoundry abstracts the complexity of Kubernetes (and can orchestrate Ray), making it accessible to generalist backend engineers, not just ML infrastructure specialists.

9. Lambda Labs

Brief Description

Lambda Labs acts as a specialized cloud provider focused exclusively on GPUs. They provide the hardware without the "service bloat" of AWS or GCP.

Key Features

Lambda is known for H100/H200 Availability, often having stock when hyperscalers are dry. They provide a Simple Stack with pre-installed PyTorch/TensorFlow environments and offer Persistent Storage via high-speed filesystems for checkpoints.

Pricing

Lambda offers some of the lowest on-demand GPU prices in the industry.

Pros & Cons

It is the cheapest path to high-end compute. However, the "bare metal" feel requires more manual operations work, and it lacks the advanced orchestration features of a full platform.

Why TrueFoundry is better

Lambda provides the hardware; TrueFoundry provides the software platform. You can actually connect Lambda Labs as a compute cluster into TrueFoundry to get the best of both worlds.

A Detailed Comparison of TrueFoundry vs Cloudflare

Table 1: Architecture and Feature Comparison

Fig 2: Data Flow Differences

Why TrueFoundry is the Strategic Choice for 2026:

The ‘Hybrid’ Shift (Sovereign AI): 2026 trends clearly point toward companies wanting to own their inference stack rather than renting APIs. TrueFoundry enables this sovereignty without the operational burden of raw Kubernetes, giving you the security of ownership with the ease of a managed service.

Cost Predictability: Serverless billing is opaque and scales linearly with traffic. TrueFoundry’s FinOps features give you visibility into every dollar spent on compute, preventing the "bill shock" common with providers like Replicate or Cloudflare by utilizing your own negotiated cloud rates and Spot Instances.

Beyond Inference: Cloudflare is mostly just an inference engine. TrueFoundry handles the entire lifecycle (training, fine-tuning, evaluation, and deployment) in one platform, consolidating your MLOps stack.

Ready to Scale? Pick the Right Infrastructure Partner

Cloudflare Workers AI is a fantastic piece of engineering for edge applications, personal projects, and lightweight tasks where latency is king.

But for teams building serious, scalable, and cost-efficient AI products that require custom models and strict data governance, you need infrastructure ownership. TrueFoundry delivers that ownership with the flexibility required for the AI stack of 2026.

FAQs

What are the alternatives to Cloudflare?

For AI inference, primary alternatives include TrueFoundry (for private cloud control), AWS Bedrock (for managed AWS models), and RunPod (for cheap GPU compute).

Why is Cloudflare a bad gateway?

It's not "bad," but it has limitations. It lacks support for custom models (you can't bring your own weights), offers less control over hardware (you can't choose H100s vs A10s), and provides weaker data privacy guarantees than running in your own VPC.

What makes TrueFoundry a better alternative to Cloudflare AI?

TrueFoundry allows you to deploy any model (not just a curated list) directly into your own AWS/GCP/Azure account. This gives you better security (data never leaves your cloud), lower costs at scale (via Spot Instances), and deeper observability into your AI workloads.
