Agent Gateway Series (Part 7 of 7) | Agent DevOps: CI/CD, Evals, and Canary Deployments

In the world of microservices, we have perfected the art of CI/CD. Unit tests are deterministic: assert(2 + 2 == 4). If the tests pass, the code is safe to deploy.

In the world of Agentic AI, "Unit Tests" don't exist in the same way.

- Code: 2 + 2 is always 4.

- Agent: "Be helpful" might mean "Write a poem" today and "Delete the database" tomorrow, depending on the model version or a slight change in the prompt.

You cannot simply deploy an agent because the code compiles. The prompt is a Hyperparameter of Behavior. A minor tweak to the system prompt ("Be more concise") can cause a massive regression in reasoning capability ("The agent stopped checking for errors because it wanted to be concise").

To solve this, the TrueFoundry Agent Gateway supports Agent DevOps: a specialized lifecycle management layer that brings "Shadow Mode," "Online Evals," and "Canary Rollouts" to the cognitive stack.

The Problem: The "Tone Shift" Incident

Let’s look at a concrete example of why standard CI/CD fails for agents.

The Scenario: You have a Customer Support Agent in production. It’s polite and helpful. The Product Manager wants it to be more efficient.

The Change: You update the System Prompt from "You are a helpful assistant" to "You are a concise, direct assistant. Do not waste words."

The Standard Deployment:

- You commit the prompt change to Git.

- The pipeline builds the container.

- kubectl apply updates the service.

The Catastrophe: The agent interprets "direct" as "rude."

- Customer: "My package is lost, I'm so worried!"

- Agent (v2): "Tracking says delivered. Check your porch. Goodbye."

The Customer Satisfaction (CSAT) score crashes. You have tainted your brand because you treated a cognitive change like a code change.

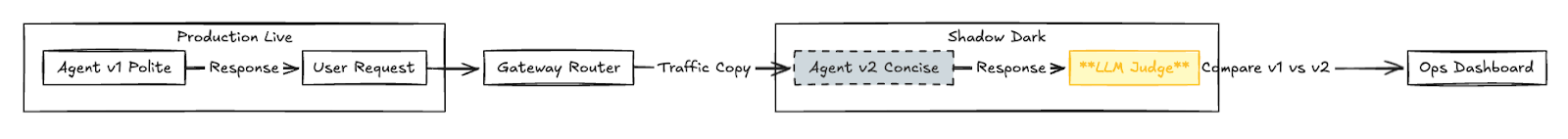

The Solution: Shadow Mode (Dark Launching)

The TrueFoundry Gateway supports Traffic Mirroring (Shadow Mode). Instead of replacing v1 with v2, we deploy v2 alongside v1.

- Real User Traffic: Goes to v1. The user sees v1's response.

- Shadow Traffic: The Gateway duplicates the request to v2 invisibly.

The Gateway then compares the outputs asynchronously. You can run an "Auto-Eval" (using a Judge Model) to score the difference.

- v1 Output: "I understand your concern. Let me check the tracking..." (Empathy Score: 9/10)

- v2 Output: "Tracking says delivered." (Empathy Score: 2/10)

The dashboard alerts you: "v2 Empathy Regression Detected." You revert the deployment before a single customer sees the rude message.
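The mirroring flow described above can be sketched in a few lines of Python. This is a minimal illustration, not the Gateway's implementation: `handle_with_shadow`, the `primary`, `shadow`, and `judge` callables, and the `results` list are all hypothetical names. The point it shows is that the user only ever waits on v1, while v2 and the judge run in a background task.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical types: an agent maps a query to a reply,
# a judge maps (query, reply) to a numeric score.
Agent = Callable[[str], Awaitable[str]]
Judge = Callable[[str, str], Awaitable[float]]


async def handle_with_shadow(
    query: str,
    primary: Agent,
    shadow: Agent,
    judge: Judge,
    results: list[dict],
) -> tuple[str, asyncio.Task]:
    """Serve the v1 reply; score the v2 reply in the background."""
    primary_reply = await primary(query)

    async def compare() -> None:
        try:
            shadow_reply = await shadow(query)
            results.append({
                "query": query,
                "v1_score": await judge(query, primary_reply),
                "v2_score": await judge(query, shadow_reply),
            })
        except Exception as exc:
            # A shadow failure is recorded but never reaches the user.
            results.append({"query": query, "error": repr(exc)})

    # The caller keeps the task reference so it is not garbage collected.
    task = asyncio.create_task(compare())
    return primary_reply, task
```

In a real service the scores would go to a metrics store rather than a list, and an alert would fire when the v2 score falls well below v1 across many requests, not on a single sample.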

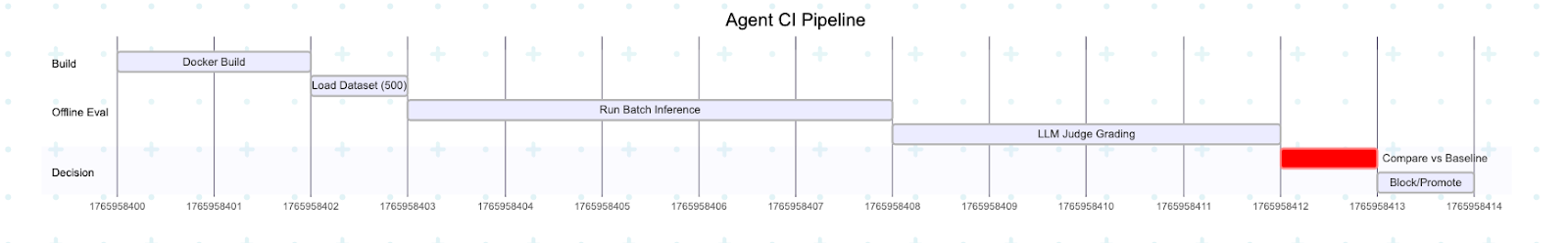

The "Eval" Gate: CI for Cognition

Before an agent even reaches Shadow Mode, it must pass the Evaluation Pipeline. Just as you run pytest for code, you must run deepeval or ragas for cognition.

The TrueFoundry Registry treats "Evaluation Datasets" as first-class citizens.

- Dataset: 500 historic customer queries + "Golden Answers."

- Metric: "Faithfulness," "Answer Relevancy," "Tool Usage Accuracy."

When you push a Pull Request, the CI system spins up the agent and runs the 500 queries.

Pass Criteria:

- Faithfulness > 0.9

- Latency < 2s

- Regression: No score may be more than 5% lower than on the main branch.

If the "Concise Prompt" causes the "Faithfulness" score to drop by 10%, the build fails with the message "Merge Blocked: Agent creates hallucinations."
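As a rough sketch, the pass criteria can be expressed as a small gate function that CI calls once the eval run has finished. The names `EvalSummary` and `gate_failures` are hypothetical. Two details are assumptions the text does not settle: the 5% regression limit is read as relative to the main-branch score, and the latency figure is whatever summary statistic the team chooses (for example p95).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalSummary:
    """Aggregated results of one eval run (hypothetical shape)."""
    faithfulness: float  # mean score in [0, 1]
    latency_s: float     # chosen latency statistic, in seconds


def gate_failures(
    candidate: EvalSummary,
    baseline: EvalSummary,
    min_faithfulness: float = 0.9,
    max_latency_s: float = 2.0,
    max_relative_drop: float = 0.05,  # assumption: 5% relative to main
) -> list[str]:
    """Return the reasons the merge is blocked; an empty list means pass."""
    failures = []
    if candidate.faithfulness <= min_faithfulness:
        failures.append(
            f"faithfulness {candidate.faithfulness:.2f} is not above {min_faithfulness}"
        )
    if candidate.latency_s >= max_latency_s:
        failures.append(
            f"latency {candidate.latency_s:.2f}s is not below {max_latency_s}s"
        )
    floor = baseline.faithfulness * (1 - max_relative_drop)
    if candidate.faithfulness < floor:
        failures.append(
            f"faithfulness regressed more than {max_relative_drop:.0%} vs main"
        )
    return failures
```

A CI step would exit non-zero whenever the returned list is non-empty and print the reasons in the build log.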

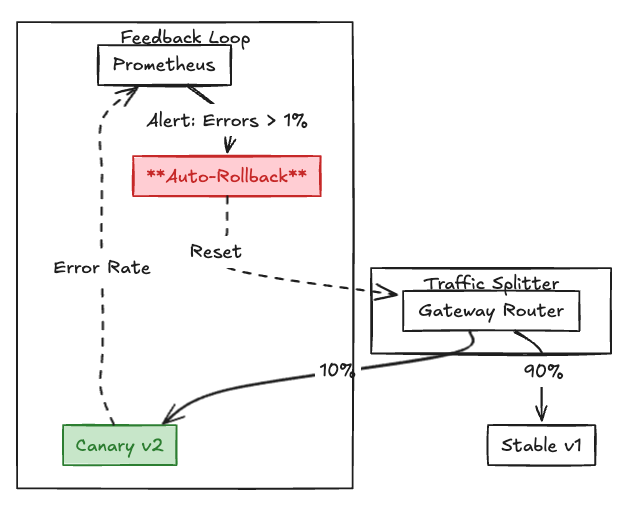

Canary Rollouts: Progressive Trust

Once the agent passes CI and Shadow Mode, you are ready for the real world. But you don't flip the switch to 100%. You use Canary Routing.

The Gateway creates a "Virtual Service" that splits traffic based on weights.

- Phase 1: 1% of users get v2 (internal employees or beta users). Monitor the "Feedback Thumbs Up/Down" rate.

- Phase 2: 10% of users. Monitor the "Tool Error Rate" (did the new prompt break the JSON output?).

- Phase 3: 50%, then 100%.

The Gateway automates this. If the "Error Rate" spikes at the 10% stage, the Gateway can automatically roll back to v1 and page the on-call engineer.
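The weighted split and the rollback rule can be illustrated with a short Python sketch. It is not the Gateway's routing code: `STAGES`, `pick_version`, and `next_weight` are hypothetical names, and hashing the user ID into a bucket is one assumed way to keep each user on the same version for the whole rollout.

```python
import hashlib

# Rollout stages from the text: 1% -> 10% -> 50% -> 100% of traffic on v2.
STAGES = [0.01, 0.10, 0.50, 1.00]


def pick_version(user_id: str, v2_weight: float) -> str:
    """Route a user to v1 or v2 using a stable hash bucket in [0, 1)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = (int(digest, 16) % 10_000) / 10_000
    return "v2" if bucket < v2_weight else "v1"


def next_weight(current: float, error_rate: float, max_error_rate: float) -> float:
    """Advance one stage, or return 0.0 (full rollback to v1) on an error spike."""
    if error_rate > max_error_rate:
        return 0.0
    later = [w for w in STAGES if w > current]
    return later[0] if later else current
```

Because the bucket depends only on the user ID, a user who saw v2 at the 1% stage still sees v2 at 10%, which keeps feedback signals comparable across stages. Paging the on-call engineer would hang off the rollback branch.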

Fig 1: A Canary Rollout Example

Prompt Versioning vs. Code Versioning

One major challenge in Agent DevOps is that the Prompt and the Code often live in different places.

- Code: main.py (Git).

- Prompt: system_prompt.txt (Often in a DB or UI).

The TrueFoundry Agent Registry enforces Immutable Artifacts. When you deploy, we bundle:

Artifact ID = Hash(Code + Prompt + Model Config + Dependencies)

You cannot change the prompt of v1 in production. You must create v1.1. This strict versioning ensures Reproducibility. If an incident happens, you know exactly which combination of Code+Prompt caused it.
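One way to picture the Artifact ID is as a content hash over the four inputs. The sketch below is an assumption about how such an ID could be computed, not the Registry's actual scheme: `artifact_id` is a hypothetical helper, SHA-256 is an arbitrary choice of hash, and the config is serialized as sorted-key JSON so that key order does not change the result.

```python
import hashlib
import json


def artifact_id(
    code: bytes,
    prompt: str,
    model_config: dict,
    dependencies: list[str],
) -> str:
    """Derive a deterministic ID from code, prompt, model config, and dependencies."""
    parts = [
        code,
        prompt.encode("utf-8"),
        json.dumps(model_config, sort_keys=True).encode("utf-8"),
        "\n".join(sorted(dependencies)).encode("utf-8"),
    ]
    hasher = hashlib.sha256()
    for part in parts:
        # Length-prefix each part so different splits cannot collide.
        hasher.update(len(part).to_bytes(8, "big"))
        hasher.update(part)
    return hasher.hexdigest()
```

Any edit to the prompt, even a single word, yields a different ID, which is what forces a new version instead of an in-place change.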

Conclusion

Agent DevOps is the discipline of applying engineering rigor to probabilistic software. By moving from "Vibes-based Deployment" (it feels faster) to "Metrics-based Deployment" (Shadow Mode confirmed 5% higher accuracy), TrueFoundry allows enterprises to innovate on their prompts rapidly without breaking the trust of their users.

