OpenCode Token Usage: How It Works and How to Optimize It

Introduction

AI-assisted coding tools like OpenCode fundamentally change how developers interact with code. Instead of operating on isolated snippets, these systems reason across files, dependencies, and historical context. The result is a significant productivity boost but also a new cost and scalability challenge that many teams underestimate: token usage.

Unlike traditional developer tools with predictable licensing costs, OpenCode costs are governed by the token-based pricing of the underlying model providers. Every interaction, whether code generation, refactoring, debugging, or review, consumes tokens. As teams scale usage across developers, repositories, and automated agents, token consumption becomes the primary cost driver.

What makes this particularly tricky is that token usage is often non-intuitive. Small changes in context size, prompt structure, or agent behavior can result in large swings in token consumption. Without a clear mental model of how tokens are used, teams struggle to predict costs, optimize workflows, or enforce guardrails.

This post breaks down how token usage works in OpenCode at a technical level, why code-related workloads are especially token-heavy, and what platform teams should understand before scaling usage in production.

How Token Usage Works in OpenCode

At its core, OpenCode token usage follows the same mechanics as most LLM-powered systems: tokens are consumed for both inputs and outputs. However, the nature of coding workloads introduces additional complexity.

Prompt Tokens vs Completion Tokens

OpenCode token usage can be broadly divided into two categories:

- Prompt tokens: Everything sent into the model

- Completion tokens: Everything generated by the model

In OpenCode, prompt tokens typically include:

- The user’s instruction (e.g., “refactor this function”)

- Code context (files, snippets, diffs)

- System-level instructions or agent policies

- Tool or agent state (in multi-step workflows)

Completion tokens include:

- Generated code

- Explanations or comments

- Structured outputs used by agents or tools

From a cost perspective, prompt tokens are often the dominant factor in OpenCode usage, especially as repositories and context sizes grow.
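
To make the split concrete, here is a minimal sketch of how per-request cost follows from the two token counts. The prices are placeholder assumptions chosen for illustration, not real rates for any model; substitute your provider's published per-million-token prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical prices in USD per one million tokens."""
    prompt_per_million: float
    completion_per_million: float


def request_cost(prompt_tokens: int, completion_tokens: int, pricing: Pricing) -> float:
    """Return the cost in USD of a single request."""
    prompt_cost = prompt_tokens / 1_000_000 * pricing.prompt_per_million
    completion_cost = completion_tokens / 1_000_000 * pricing.completion_per_million
    return prompt_cost + completion_cost


# Placeholder rates chosen only for illustration.
example_pricing = Pricing(prompt_per_million=3.0, completion_per_million=15.0)

# A refactoring request: large code context in, a small change out.
cost = request_cost(prompt_tokens=40_000, completion_tokens=1_500, pricing=example_pricing)
print(f"${cost:.4f}")  # $0.1425
```

In this example the completion price is five times the prompt price, yet the prompt side still accounts for about 0.12 of the 0.1425 total, simply because far more tokens go in than come out.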

Why Code Workloads Consume Disproportionately More Tokens

Code-related tasks behave very differently from natural language queries. Several factors contribute to higher token consumption:

1. Large Context Windows Are Common

Unlike chat-based use cases, OpenCode often sends:

- Entire files

- Multiple related files

- Dependency graphs

- Test cases or configuration files

Even a “small” codebase can quickly translate into tens or hundreds of thousands of tokens when multiple files are included.

2. Structural Tokens Add Up

Source code is dense. Syntax, indentation, symbols, and formatting all count toward tokens, so a few thousand lines of code can consume noticeably more tokens than prose of similar length.
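
For a quick sense of scale before sending anything, a rough estimator can help. The sketch below assumes about 4 characters per token, a common rule of thumb for English text; real counts depend on the model's tokenizer, and code may tokenize differently. The file names and contents are made up for illustration.

```python
import math

# Assumption: roughly 4 characters per token. This is a rule of thumb, not a
# tokenizer. Use the model's real tokenizer for billing-grade numbers.
CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Return a rough token estimate for a string."""
    return math.ceil(len(text) / chars_per_token)


def estimate_context_tokens(files: dict[str, str]) -> dict[str, int]:
    """Estimate tokens per file for a mapping of path -> file contents."""
    return {path: estimate_tokens(body) for path, body in files.items()}


# Hypothetical in-memory files standing in for a small service directory.
files = {
    "service/handlers.py": "def handle(request):\n    return process(request)\n" * 200,
    "service/config.yaml": "retries: 3\ntimeout_seconds: 30\n" * 50,
}
per_file = estimate_context_tokens(files)
total = sum(per_file.values())
print(per_file, total)
```

Running an estimate like this over the files a workflow plans to include is a cheap way to spot oversized context before it reaches the model.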

3. Multi-Step Reasoning and Iteration

OpenCode workflows frequently involve:

- Planning steps

- Code generation

- Validation

- Corrections or retries

Each step may re-send context or intermediate outputs, multiplying token usage across a single task.

4. Agent-Based Execution Amplifies Usage

When OpenCode is used via agents or automation (for example, refactoring across multiple files or running in CI pipelines), token usage compounds quickly:

- Context is re-sent at every step

- Intermediate state is passed repeatedly

- Retries occur automatically

This makes agent-driven usage powerful but also expensive if not bounded.

Why Token Usage Is Hard to Predict Without Instrumentation

One of the biggest challenges with OpenCode token usage is that developers rarely see the full context being sent to the model. Editors and tools abstract away:

- Which files were included

- How much of each file was sent

- Whether previous outputs were reused as context

As a result, two seemingly similar tasks can have wildly different token footprints. Without explicit tracking at the request level, teams often discover cost issues only after usage spikes.

This is why understanding token mechanics is not enough on its own. Teams need visibility into actual token consumption per task, per developer, and per workflow to make informed optimization decisions.

Common Scenarios That Drive High OpenCode Token Usage

Most spikes in OpenCode token usage are not caused by a single obvious mistake. They emerge from how OpenCode is used in real-world engineering workflows, especially when tools and agents are integrated deeply into development and automation pipelines.

Below are the most common scenarios that disproportionately increase token consumption.

1. Large Repository or Multi-File Context Injection

One of the biggest contributors to high token usage is overly broad context inclusion. Many OpenCode workflows include entire directories or large subsets of a repository to “be safe,” even when only a small portion of the code is relevant.

Examples include:

- Sending entire service directories for a single function change

- Including test suites and configuration files unnecessarily

- Re-sending the same files across multiple steps in an agent workflow

Because prompt tokens scale linearly with context size, this pattern alone can multiply costs quickly.

2. Repeated Context Rehydration Across Iterations

OpenCode often operates iteratively: generate code, review, adjust, regenerate. In many setups, each iteration resends the full context, including files and previous outputs.

This leads to:

- Duplicate token consumption across retries

- Faster-than-linear growth in cumulative usage for long-running tasks, because each iteration resends an ever-longer history

- High costs even for “simple” changes that require several iterations

Without caching or intelligent context reuse, iteration becomes one of the most expensive patterns.
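
A small worked calculation shows how resending context adds up. It assumes a simplified model in which every iteration resends the same base context plus all output from earlier iterations; the token figures are illustrative.

```python
def cumulative_prompt_tokens(base_context: int, added_per_iteration: int, iterations: int) -> int:
    """Total prompt tokens when every iteration resends the base context
    plus all output accumulated from earlier iterations."""
    total = 0
    for i in range(iterations):
        # Iteration i resends the base context and the output of i earlier rounds.
        total += base_context + i * added_per_iteration
    return total


# Illustrative numbers: 20k tokens of files, 2k tokens of new output per round.
for n in (1, 5, 10):
    print(n, cumulative_prompt_tokens(20_000, 2_000, n))
# 1 20000
# 5 120000
# 10 290000
```

Ten iterations cost 14.5 times as much as one, not 10 times, because the resent history keeps growing. Under this model the total grows roughly with the square of the iteration count.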

3. Unbounded Agent Execution

When OpenCode is used via agents or automated workflows, token usage can escalate rapidly if execution is not explicitly bounded.

Common causes include:

- Agents with no maximum step or retry limit

- Recursive reasoning chains

- Agents re-evaluating large contexts at each step

Because these processes often run in the background, teams may not notice runaway usage until costs spike.

4. Refactoring and Code Review Tasks at Scale

Refactoring and review tasks tend to be more token-intensive than code generation because they require:

- Reading and reasoning over existing code

- Comparing old and new implementations

- Explaining or validating changes

When these tasks are applied across large codebases or multiple pull requests, token usage increases significantly.

5. CI, Automation, and Background Jobs

OpenCode usage embedded into CI pipelines or automation workflows introduces a different risk profile. These systems:

- Run frequently and automatically

- Often process large diffs or repositories

- May retry silently on failure

Even modest per-run token usage can become expensive when multiplied across many builds or deployments.

6. Lack of Visibility at the User or Task Level

Finally, one of the most overlooked drivers of high token usage is the absence of visibility. Teams often cannot see:

- Who is consuming tokens

- Which tasks are most expensive

- How usage changes over time

Without that visibility, optimization becomes guesswork. Teams often respond by restricting usage globally rather than addressing the specific workflows that drive costs.

Best Practices to Optimize OpenCode Token Usage

Once teams understand where token usage comes from, the next step is optimization. Importantly, optimization is not about limiting usage arbitrarily; it is about using tokens intentionally so that productivity gains don’t turn into uncontrolled costs.

Below are practical best practices that consistently reduce OpenCode token usage without degrading output quality.

1. Reduce Context Size Deliberately

The most effective optimization lever is controlling what context is sent to the model. More context is not always better, especially when it’s irrelevant.

Practical techniques include:

- Passing file-level context instead of entire directories

- Including only the functions or classes under modification

- Excluding generated files, vendored code, and large configs by default

A good rule of thumb: if a file is not required to reason about the change, it should not be part of the prompt.
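
One way to apply default exclusions is a simple pattern filter that runs before any file is added to the prompt. This is a sketch using Python's standard `fnmatch` module; the patterns and paths are hypothetical and should be adapted to your repository layout.

```python
from fnmatch import fnmatch

# Hypothetical exclusion patterns; adjust to your repository layout.
DEFAULT_EXCLUDES = (
    "vendor/*",
    "node_modules/*",
    "dist/*",
    "*.min.js",
    "*.lock",
    "*_pb2.py",  # generated protobuf bindings
)


def select_context(paths: list[str], excludes: tuple[str, ...] = DEFAULT_EXCLUDES) -> list[str]:
    """Return only the paths that match no exclusion pattern."""
    return [p for p in paths if not any(fnmatch(p, pat) for pat in excludes)]


candidates = [
    "src/billing/invoice.py",
    "src/billing/invoice_pb2.py",
    "vendor/requests/api.py",
    "yarn.lock",
    "dist/bundle.min.js",
]
print(select_context(candidates))  # ['src/billing/invoice.py']
```

Note that `fnmatch` treats `*` as matching any characters, including path separators, so `vendor/*` also excludes files in nested directories.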

2. Prefer Retrieval Over Context Stuffing

Instead of sending large amounts of code upfront, teams should move toward on-demand retrieval.

Examples:

- Retrieve symbols or definitions only when referenced

- Fetch test cases or configs conditionally

- Use indexed lookups rather than static context injection

This approach reduces prompt size while often improving reasoning quality, since the model receives more targeted information.
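
As a minimal illustration of on-demand retrieval, the sketch below pulls a single function definition out of a Python module with the standard `ast` module instead of sending the whole file. It stands in for a real symbol index, which would typically cover many files and languages; the sample module is invented.

```python
import ast
from typing import Optional


def extract_function(source: str, name: str) -> Optional[str]:
    """Return the source text of the first function called `name`, or None."""
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and node.name == name:
            return ast.get_source_segment(source, node)
    return None


module_source = '''
import math

def area(radius):
    return math.pi * radius ** 2

def perimeter(radius):
    return 2 * math.pi * radius
'''

# Retrieve only the definition the task refers to.
snippet = extract_function(module_source, "perimeter")
print(snippet)
```

Only the retrieved definition goes into the prompt, so prompt size tracks the size of the symbol rather than the size of the file that contains it.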

3. Scope Prompts to the Task, Not the Repository

Generic prompts tend to encourage broader reasoning and larger outputs, which increases both prompt and completion tokens.

Better patterns:

- Explicitly constrain the task (“modify only this function”)

- Specify output format and limits

- Avoid open-ended instructions like “analyze the codebase”

Task-scoped prompts not only reduce token usage but also improve determinism.

4. Bound Agent Execution Explicitly

Agent-based workflows amplify token usage if left unchecked. Every agent should operate within clearly defined limits.

Key guardrails include:

- Maximum number of reasoning steps

- Hard limits on retries

- Time or token budgets per task

Without these bounds, agents can unintentionally reprocess large contexts multiple times, driving up usage.
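
The three guardrails can be combined in one small wrapper around the agent loop. The sketch below is an assumption about how such a loop might be structured, not OpenCode's own mechanism: `step` is a hypothetical callable that performs one agent step and reports whether it is done and how many tokens it used.

```python
from dataclasses import dataclass
from typing import Callable


class BudgetExceeded(RuntimeError):
    """Raised when an agent run hits one of its configured limits."""


@dataclass
class RunLimits:
    max_steps: int = 10
    max_retries: int = 2
    token_budget: int = 200_000


def run_bounded(step: Callable[[int], tuple[bool, int]], limits: RunLimits) -> int:
    """Run `step` until it reports completion or a limit is reached.

    `step(index)` returns (done, tokens_used). Exceptions raised by `step`
    count as retryable failures. Returns the total tokens spent.
    """
    tokens_spent = 0
    retries = 0
    for index in range(limits.max_steps):
        try:
            done, used = step(index)
        except Exception:
            retries += 1
            if retries > limits.max_retries:
                raise BudgetExceeded("retry limit reached")
            continue
        tokens_spent += used
        if tokens_spent > limits.token_budget:
            raise BudgetExceeded(f"token budget exceeded: {tokens_spent}")
        if done:
            return tokens_spent
    raise BudgetExceeded("step limit reached")


def fake_step(index: int) -> tuple[bool, int]:
    # Stand-in for a model call: finishes on the third step, 30k tokens each.
    return index == 2, 30_000


print(run_bounded(fake_step, RunLimits()))  # 90000
```

A failed step still consumes one of the allowed steps in this sketch, so the step limit bounds the total number of attempts. Tokens spent by a failed attempt are not counted here; a production version would need the provider's usage figures for failed calls as well.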

5. Cache and Reuse Where Possible

Many OpenCode workflows repeat similar tasks across iterations or users. Caching can significantly reduce redundant token consumption.

Applicable scenarios:

- Reusing analysis results across retries

- Caching intermediate representations in multi-step flows

- Avoiding repeated explanation generation when not required

Even partial caching at the workflow level can yield meaningful savings.
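
A workflow-level cache can be as simple as keying results on a hash of the task and the exact context that would be sent. The sketch below keeps results in memory and uses a stand-in function in place of a real model call; the class and function names are illustrative.

```python
import hashlib
from typing import Callable


class AnalysisCache:
    """In-memory cache keyed by a hash of the task and the exact context sent."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(task: str, context: str) -> str:
        digest = hashlib.sha256()
        digest.update(task.encode("utf-8"))
        digest.update(b"\x00")  # separator so ("ab", "c") differs from ("a", "bc")
        digest.update(context.encode("utf-8"))
        return digest.hexdigest()

    def get_or_compute(self, task: str, context: str, compute: Callable[[str, str], str]) -> str:
        """Return a cached result, or call `compute` once and store its result."""
        key = self._key(task, context)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = compute(task, context)
        self._store[key] = result
        return result


def fake_model_call(task: str, context: str) -> str:
    # Stand-in for an expensive model request.
    return f"analysis of {len(context)} chars for task: {task}"


cache = AnalysisCache()
first = cache.get_or_compute("summarize", "def f(): pass", fake_model_call)
second = cache.get_or_compute("summarize", "def f(): pass", fake_model_call)
print(cache.hits, cache.misses)  # 1 1
```

Because the key covers the full context, any change to the included code produces a new key, so stale results are never returned for modified files.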

6. Optimize Completion Size, Not Just Prompts

While prompt tokens often dominate, completion tokens matter too, especially in refactoring or explanation-heavy workflows.

Techniques include:

- Requesting diffs instead of full files

- Limiting explanations unless explicitly needed

- Enforcing output length or structure

Clear output constraints reduce unnecessary verbosity.
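
To see why diffs help, compare the size of a unified diff with the size of the full file for a one-line change. The sketch uses Python's standard `difflib` on a synthetic 200-line file; character counts are used here as a rough proxy for tokens.

```python
import difflib

# A synthetic 200-line file with a single changed line.
original = [f"line {i}\n" for i in range(1, 201)]
modified = list(original)
modified[99] = "line 100 (changed)\n"

diff = list(
    difflib.unified_diff(original, modified, fromfile="a/module.py", tofile="b/module.py")
)

full_chars = sum(len(line) for line in modified)
diff_chars = sum(len(line) for line in diff)
print(f"full file: {full_chars} chars, diff: {diff_chars} chars")
```

The diff carries only the changed line plus a few lines of surrounding context, so its size depends on the size of the change rather than the size of the file.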

7. Instrument Token Usage Early

Finally, optimization should not be reactive. Teams should instrument token usage from day one.

At a minimum, this means tracking:

- Tokens per request

- Tokens per user or workflow

- Cost per task over time

Without this data, teams cannot distinguish between productive usage and waste.
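
A starting point for instrumentation is one record per request plus a helper that groups totals by any attribute. The field names below are illustrative rather than a fixed schema, and the records are sample data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    """One model request. All field names here are illustrative."""
    user: str
    workflow: str
    prompt_tokens: int
    completion_tokens: int

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens


def tokens_by(records: list[UsageRecord], field: str) -> dict[str, int]:
    """Sum total tokens grouped by a record attribute such as 'user' or 'workflow'."""
    totals: dict[str, int] = defaultdict(int)
    for record in records:
        totals[getattr(record, field)] += record.total_tokens
    return dict(totals)


records = [
    UsageRecord("alice", "refactor", 40_000, 1_500),
    UsageRecord("alice", "review", 12_000, 800),
    UsageRecord("ci-bot", "review", 55_000, 2_000),
]
print(tokens_by(records, "user"))      # {'alice': 54300, 'ci-bot': 57000}
print(tokens_by(records, "workflow"))  # {'refactor': 41500, 'review': 69800}
```

Grouping the same records two ways already shows something an aggregate total hides: in this sample, the review workflow costs more than refactoring, and most of that comes from the CI bot.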

Why OpenCode Token Usage Becomes Hard to Control at Scale

Most teams don’t struggle with OpenCode token usage on day one. The problems emerge gradually as usage spreads across developers, repositories, and automated workflows. What starts as an individual productivity tool quickly becomes shared infrastructure, and token usage scales in ways that are difficult to predict or manage.

1. Token Usage Becomes Distributed Across Many Actors

At scale, OpenCode is no longer used by a single developer in an editor. It is used by:

- Multiple engineers across teams

- Background agents running long-lived workflows

- CI and automation jobs triggered frequently

- Internal tools built on top of OpenCode

Each of these consumers generates token usage independently. Without a centralized view, it becomes difficult to answer basic questions like who is using tokens, for what purpose, and at what cost.

2. Application-Level Controls Don’t Generalize

Early optimization efforts are often implemented at the application or tool level: custom prompt limits, context trimming, or retry logic. While these help locally, they don’t scale across:

- Different editors or IDE integrations

- Multiple OpenCode-powered services

- Agent frameworks with their own execution loops

As a result, policies become fragmented and inconsistent. One team optimizes aggressively while another unknowingly drives up costs.

3. Automation Amplifies Small Inefficiencies

Automation changes the math. A workflow that consumes a modest number of tokens per run can become expensive when:

- Triggered on every pull request

- Executed across multiple branches

- Retried silently on transient failures

Because these jobs run without direct human visibility, inefficiencies compound quickly. Token usage spikes often originate from automation rather than interactive usage.
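
The multiplication is easy to underestimate, so it helps to write it down. The estimate below uses illustrative inputs and a deliberately simple model in which every trigger runs once per branch and a fraction of runs is retried once.

```python
def monthly_tokens(
    tokens_per_run: int,
    runs_per_day: int,
    branches: int,
    retry_rate: float,
    days: int = 30,
) -> int:
    """Estimate monthly tokens for an automated job.

    retry_rate is the average number of extra attempts per run
    (0.2 means one retry for every five runs).
    """
    attempts_per_day = runs_per_day * branches * (1 + retry_rate)
    return round(tokens_per_run * attempts_per_day * days)


# Illustrative inputs: a 25k-token review job, 40 pull request updates a day,
# 3 active branches, 20% of runs retried once.
print(monthly_tokens(25_000, 40, 3, 0.2))  # 108000000
```

A job that looks modest at 25,000 tokens per run reaches 108 million tokens a month under these assumptions, and the silent retries alone account for 18 million of them.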

4. Lack of Attribution Masks the Real Drivers

Without fine-grained attribution, teams see only aggregate usage numbers. This makes optimization reactive and blunt.

Common failure modes include:

- Blanket usage limits that reduce productivity

- Disabling useful workflows due to cost surprises

- Optimizing the wrong tasks while high-cost flows persist

Effective control requires knowing which workflows generate value and which generate waste, something aggregate metrics cannot reveal.

5. Governance and Cost Controls Lag Behind Adoption

In many organizations, AI tooling adoption outpaces governance. OpenCode usage spreads faster than:

- Budget ownership is defined

- Policies are formalized

- Guardrails are implemented

By the time token usage becomes a concern, the tooling is already deeply embedded in workflows, making retroactive controls difficult and disruptive.

What This Means for Platform Teams

The core issue is not misuse; it is decentralized usage without centralized control. As OpenCode becomes shared infrastructure, token usage must be managed the same way teams manage compute, storage, or CI resources.

This requires:

- Centralized visibility across users and workflows

- Consistent enforcement of limits and policies

- Attribution that aligns cost with ownership

Without this shift, token usage remains unpredictable, and optimization efforts stay reactive.

Monitoring and Governing OpenCode Token Usage in Production

Once OpenCode usage reaches production scale, ad-hoc tracking and manual optimizations stop working. At this stage, token usage must be treated like any other shared infrastructure resource: measured continuously, governed centrally, and tied to ownership.

Why Application-Level Monitoring Is Not Enough

Many teams start by tracking token usage inside individual tools or workflows. While this provides local insight, it breaks down quickly when:

- Multiple editors or IDEs are used

- OpenCode is embedded in internal tools

- Agents and automation run outside developer workflows

Each integration reports usage differently, and none provide a holistic view. As a result, platform teams lack a single source of truth for token consumption.

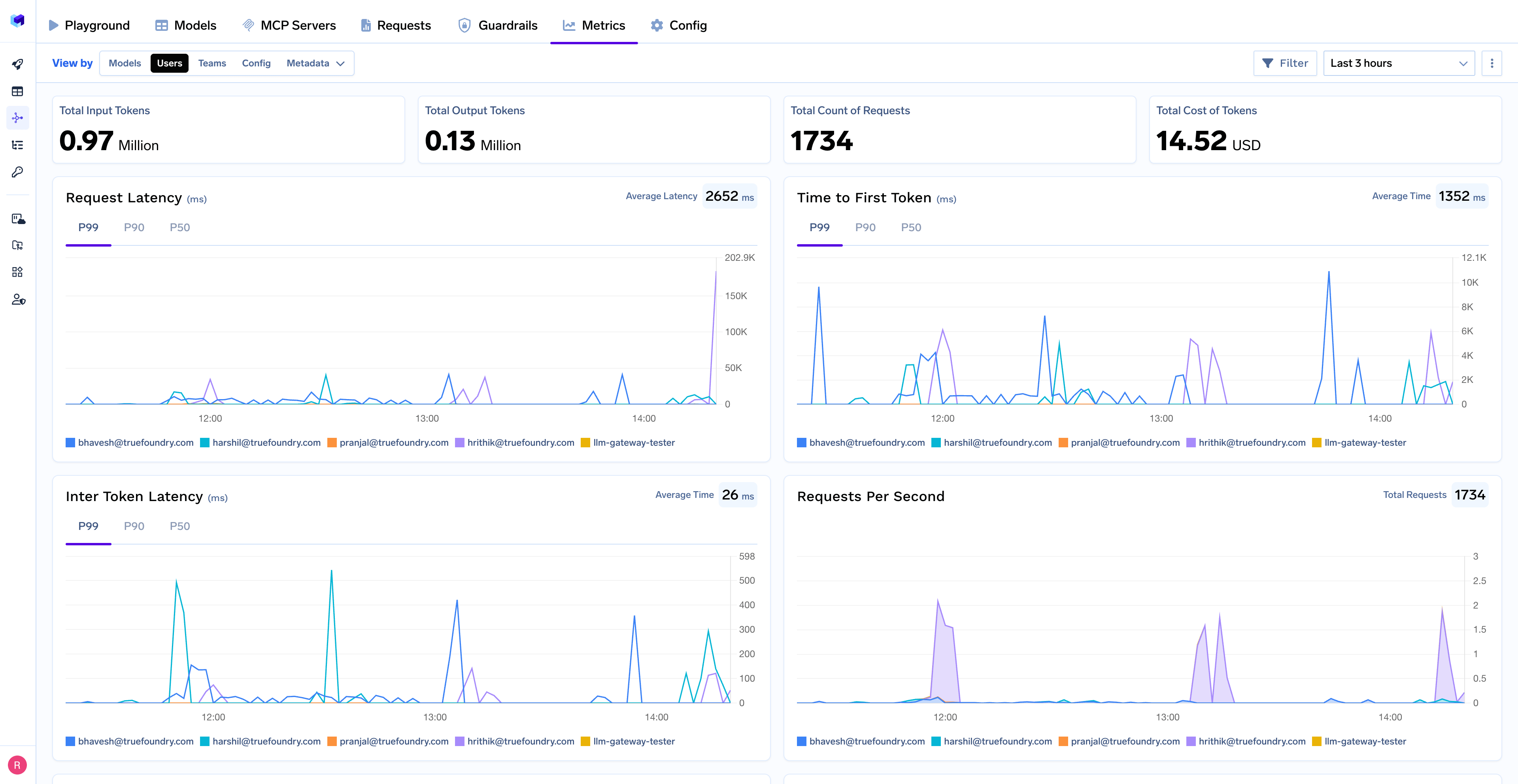

What Effective Token Monitoring Looks Like

At scale, monitoring needs to happen at the request level, not just the tool level. Effective setups capture:

- Tokens consumed per request (prompt + completion)

- Cost per request based on model pricing

- Identity context (user, service, agent, repo, environment)

- Latency, retries, and failure modes

This allows teams to answer questions like:

- Which workflows are the most expensive per run?

- Which repos or agents drive sustained usage?

- Where do retries or failures inflate token counts?

Without this granularity, optimization efforts remain coarse and often misdirected.

Cost Attribution and Ownership

Governance starts with attribution. Token usage must be mapped to owners who can act on it.

Common attribution models include:

- Per developer or team

- Per repository or project

- Per workflow or automation pipeline

Once ownership is clear, cost conversations shift from abstract budgeting to concrete decisions about which workflows deliver sufficient value.

Policy Enforcement and Guardrails

Monitoring alone does not prevent cost overruns. Production systems require enforcement mechanisms that operate in real time.

Typical guardrails include:

- Per-user or per-team token budgets

- Rate limits for high-frequency workflows

- Hard caps for background agents

- Environment-based limits (e.g., stricter limits in CI)

These controls should be enforced centrally so that all OpenCode-powered workflows inherit them automatically.
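
As a sketch of what a central check might look like, the class below tracks spend per team and environment and denies requests that would exceed the configured budget. It is a simplified in-memory illustration with made-up team names and limits; a real control point would also need persistence, budget resets, and concurrency handling.

```python
from dataclasses import dataclass, field


@dataclass
class BudgetPolicy:
    """Token budgets per (team, environment). Values are illustrative."""
    limits: dict[tuple[str, str], int]
    spent: dict[tuple[str, str], int] = field(default_factory=dict)

    def authorize(self, team: str, environment: str, requested_tokens: int) -> bool:
        """Record and allow the request if it fits the remaining budget."""
        key = (team, environment)
        limit = self.limits.get(key)
        if limit is None:
            return False  # deny by default when no budget is configured
        used = self.spent.get(key, 0)
        if used + requested_tokens > limit:
            return False
        self.spent[key] = used + requested_tokens
        return True


policy = BudgetPolicy(limits={
    ("payments", "interactive"): 2_000_000,
    ("payments", "ci"): 500_000,  # stricter limit for automation
})

print(policy.authorize("payments", "ci", 400_000))           # True
print(policy.authorize("payments", "ci", 200_000))           # False, would exceed 500k
print(policy.authorize("payments", "interactive", 200_000))  # True
```

Because the check lives in one place, every workflow that goes through it inherits the same limits, and tightening the CI budget is a one-line change rather than a change in every tool.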

Why Centralization Matters

The common thread across effective governance setups is centralization. Token usage policies, limits, and visibility must live at a shared control point rather than being reimplemented across tools.

This is where infrastructure-oriented platforms such as TrueFoundry fit naturally. By centralizing AI traffic, observability, and policy enforcement, platform teams can manage OpenCode token usage consistently across developers, agents, and automated systems without slowing down individual teams.

Managing OpenCode Token Usage with TrueFoundry

From a platform standpoint, the core challenge with OpenCode token usage is not understanding how tokens are consumed, but where control and visibility should live.

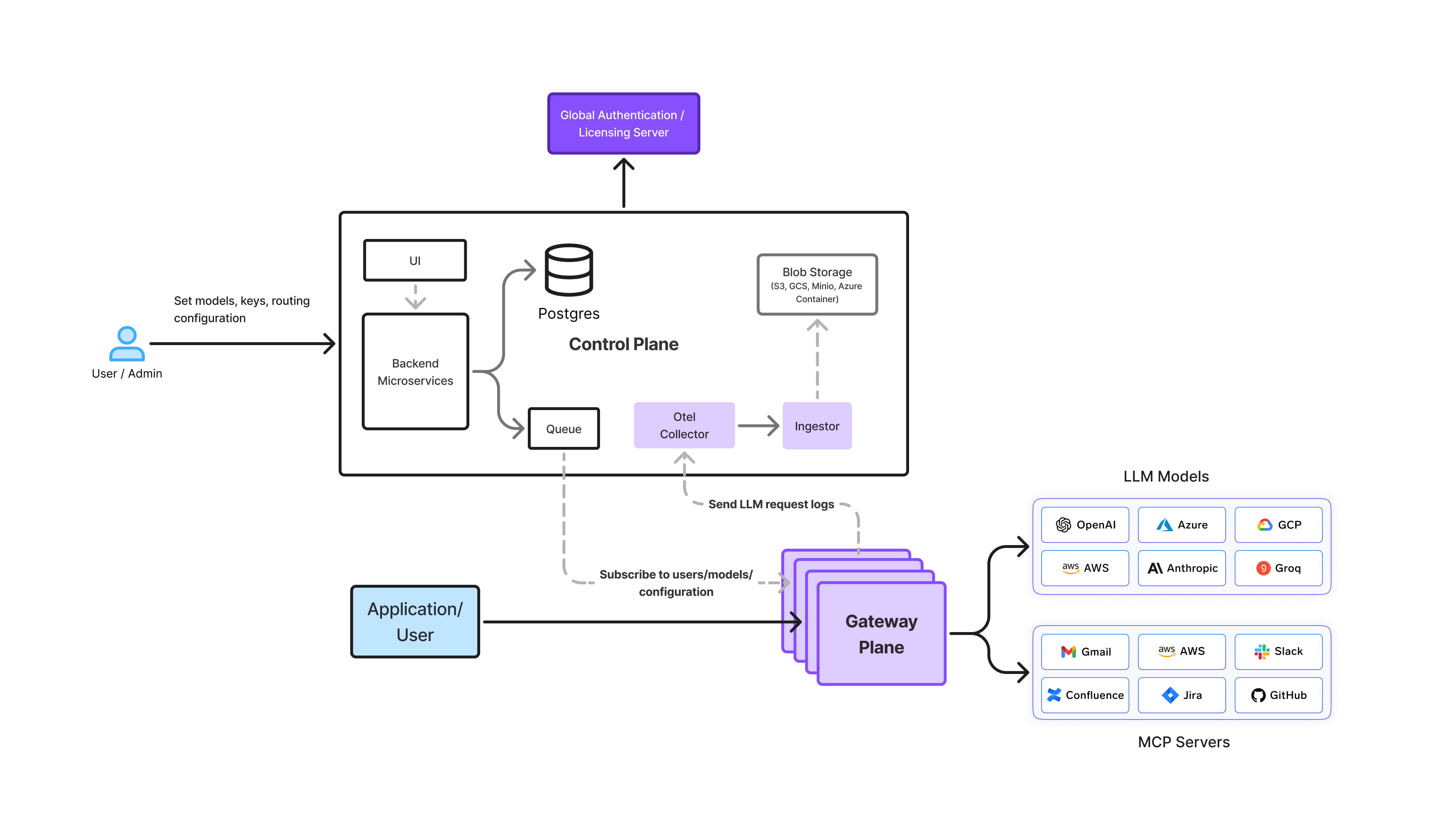

TrueFoundry approaches this problem by treating AI and LLM usage, including developer-facing tools like OpenCode, as shared infrastructure that must be observable, governable, and cost-aware by default. At the center of this approach is the AI Gateway, which acts as the control plane for all LLM traffic across the organization.

Centralizing OpenCode Traffic Through the AI Gateway

In a TrueFoundry setup, OpenCode does not interact directly with underlying LLM providers. Instead, all requests flow through the AI Gateway, which provides a single, consistent interface for inference.

Architecturally, this enables:

- A single entry point for all OpenCode-generated requests

- Uniform handling of prompt and completion traffic

- Centralized enforcement of limits, policies, and routing

By removing direct model access from individual tools, platform teams gain full visibility into how OpenCode is actually being used across developers, agents, and automation.

Token-Level Observability as a First-Class Primitive

TrueFoundry’s AI Gateway captures token usage at the request level, including:

- Prompt tokens vs completion tokens

- Model and provider used

- Latency, retries, and failure signals

- Identity context (user, team, service, environment)

Critically, this telemetry is not locked into a vendor-controlled system. Logs and metrics are persisted in the customer’s own cloud and storage, allowing teams to:

- Run custom analysis on token usage patterns

- Correlate OpenCode usage with repos, CI jobs, or incidents

- Retain full ownership of sensitive prompt and code data

This avoids the “black box” problem common with AI tooling and makes long-term optimization possible.

Cost Attribution and Policy Enforcement at the Platform Layer

Because all OpenCode traffic passes through the gateway, cost controls can be applied consistently and in real time.

Platform teams can:

- Attribute token usage to developers, teams, or projects

- Enforce per-team or per-environment budgets

- Apply rate limits or hard caps to agent-driven workflows

- Differentiate controls between interactive usage and automation

These policies are enforced once at the gateway and automatically apply to every OpenCode-powered workflow, without requiring changes to editors, plugins, or internal tools.

Supporting Scale, Automation, and Agent-Based Workflows

TrueFoundry’s architecture is designed for environments where OpenCode usage extends beyond the IDE. CI pipelines, background jobs, and agents often generate the largest and least visible token consumption.

By routing these workloads through the same AI Gateway, teams can:

- Detect runaway agent execution early

- Compare interactive vs automated usage patterns

- Apply stricter controls to non-interactive workloads

This makes it possible to scale OpenCode usage across the organization without losing predictability or control.

Conclusion

OpenCode token usage is the real scaling constraint for AI-assisted coding. As usage spreads across developers, repositories, automation, and agents, token consumption becomes difficult to predict and control without centralized visibility and governance.

Managing this at the tool or application level doesn’t scale. Token usage needs request-level observability, clear attribution, and real-time enforcement, treating AI-assisted coding as shared infrastructure, not an isolated feature.

Platforms like TrueFoundry reflect this approach by centralizing OpenCode traffic through an AI Gateway, enabling teams to monitor, govern, and optimize token usage consistently. For platform and engineering leaders, the takeaway is simple: if OpenCode is core to how software is built, token usage must be managed with the same rigor as any other critical infrastructure resource.
