What Is AI Model Deployment?

AI model deployment is the process of making trained machine learning models available for real-world use through production systems. While frameworks like PyTorch and TensorFlow have made model training accessible to many developers, deploying these models reliably at scale presents distinct technical and operational challenges.

Current industry data shows that 78% of organizations reported using AI in 2024, yet only 1% of business leaders report that their companies have reached AI maturity. This gap between model development and production deployment has become a primary bottleneck for AI adoption across industries.

The deployment challenge stems from fundamental differences between development and production environments. Model training typically occurs in controlled settings with cleaned datasets, predictable computational resources, and offline evaluation metrics. Production deployment requires handling real-time data streams, variable load patterns, integration with existing business systems, security requirements, and operational monitoring, none of which are addressed during the training phase.

Technical requirements for production AI systems include sub-second response times for user-facing applications, horizontal scalability to handle traffic variations, fault tolerance for system failures, data validation for incoming requests, and comprehensive observability for performance monitoring. These requirements often necessitate different skill sets, infrastructure patterns, and operational practices compared to model development.

What is AI Model Deployment?

AI model deployment is the process of making a trained machine learning model available in a production environment where it can receive input data and return predictions or insights to end users or applications. But deployment isn't just about copying model files to a server; it encompasses the entire infrastructure needed to serve your model reliably.

Consider a recommendation system for an e-commerce platform. During development, data scientists train the model using historical user behavior data. But deployment means creating a system that can:

- Receive real-time user requests (potentially thousands per second)

- Process each user's browsing history and current context

- Generate personalized recommendations in under 100 milliseconds

- Handle traffic spikes during sales events

- Learn from new user interactions to improve over time

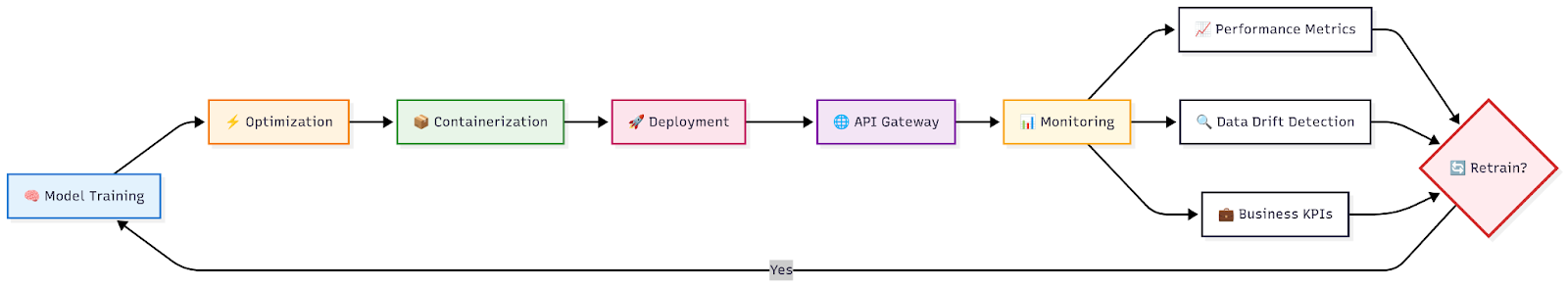

The deployment process involves several key phases: Model preparation includes optimizing the trained model for production and ensuring it can handle production data patterns. Infrastructure setup involves provisioning compute resources and configuring serving frameworks. Integration connects your model to existing business systems through APIs and monitoring tools. Validation ensures the deployed model behaves correctly under production conditions.

What makes AI model deployment particularly challenging compared to traditional software deployment is the inherent uncertainty in ML systems. AI models can produce different outputs for similar inputs, their performance can drift over time, and their resource requirements can vary unpredictably based on input complexity.

How Does AI Model Deployment Work?

The deployment process typically follows a well-established pipeline, though the specific implementation varies based on your model type, infrastructure, and business requirements.

Model Packaging and Optimization

Before your model can serve predictions, it needs to be packaged in a format suitable for production. This often involves converting from training frameworks like PyTorch or TensorFlow to optimized serving formats. Model optimization techniques can dramatically improve serving performance. Quantization, for example, reduces the numerical precision of model weights, often achieving 2-4x speedups with minimal accuracy loss. For large language models, techniques like KV-cache optimization can reduce memory usage by 50-80%.
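To make the quantization idea concrete, here is a minimal sketch of symmetric per-tensor int8 quantization using only the Python standard library. The function names and the sample weights are illustrative assumptions; production toolchains use more refined schemes (per-channel scales, calibration data), so treat this as a picture of the principle rather than a real pipeline.

```python
from array import array

def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = array("b", (round(w / scale) for w in weights))
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [v * scale for v in quantized]

# Hypothetical weights, chosen only for illustration.
weights = [0.5, -1.27, 0.003, 0.9, -0.25]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# float32 storage needs 4 bytes per weight, int8 needs 1 byte.
fp32_bytes = array("f", weights).itemsize * len(weights)
int8_bytes = q.itemsize * len(q)

# Rounding error is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"{fp32_bytes} bytes -> {int8_bytes} bytes, max error {max_error:.5f}")
```

The storage drops by a factor of four relative to float32, and the per-weight error never exceeds half of `scale`. Whether that error is acceptable depends on the model and has to be checked against your own evaluation set.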

Serving Infrastructure Setup

Once your model is optimized, it needs a serving infrastructure. This typically involves containerizing your model with a tool like Docker, which ensures consistent behavior across different environments. Modern serving frameworks, such as vLLM and SGLang for language models or Triton Inference Server for multi-framework serving, handle many complex aspects automatically, including batching requests for GPU efficiency.

API Layer and Request Handling

Your deployed model needs an API layer to receive requests and return predictions. This involves creating RESTful endpoints that accept input data, perform preprocessing, call your model for inference, and format responses. Request handling requires input validation, error handling, rate limiting, and authentication.
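The request path described above (validate, run inference, format a response) can be sketched without committing to any web framework. Everything here is an assumption made for illustration: the `features` field name, the `MAX_FEATURES` limit, the status codes chosen, and the `predict` stub that stands in for a real model call.

```python
import json

MAX_FEATURES = 1024  # hypothetical upper bound on input size

def predict(features):
    """Stand-in for real model inference: returns the mean of the inputs."""
    return sum(features) / len(features)

def handle_request(body: str):
    """Validate a JSON request body and return (status_code, response_dict)."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, {"error": "body is not valid JSON"}

    features = payload.get("features") if isinstance(payload, dict) else None
    if not isinstance(features, list) or not features:
        return 422, {"error": "'features' must be a non-empty list"}
    if len(features) > MAX_FEATURES:
        return 413, {"error": f"at most {MAX_FEATURES} features are accepted"}
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not all(isinstance(x, (int, float)) and not isinstance(x, bool) for x in features):
        return 422, {"error": "'features' must contain only numbers"}

    try:
        score = predict(features)
    except Exception:
        # Never leak internal details to the caller; log them server-side instead.
        return 500, {"error": "inference failed"}
    return 200, {"prediction": score}

print(handle_request('{"features": [1, 2, 3]}'))
```

In a real service this function would sit behind an HTTP framework, with rate limiting and authentication applied before it is ever called.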

Monitoring and Observability

Once deployed, monitoring becomes crucial. Unlike traditional software, where you primarily monitor system metrics, ML models require tracking prediction quality, data drift (when input patterns change), model performance metrics, and business KPIs that your model affects.
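One common way to quantify data drift on a single numeric feature is the Population Stability Index (PSI), which compares the binned distribution of production inputs against a reference sample. The sketch below is a simplified version under stated assumptions: equal-width bins derived from the reference data, a small `eps` floor to avoid taking the log of zero, and synthetic sample data.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic data for illustration only.
training = [i / 100 for i in range(100)]        # spread across [0, 1)
shifted = [0.5 + i / 200 for i in range(100)]   # concentrated in [0.5, 1)

print(f"no drift:  {psi(training, training):.3f}")
print(f"drifted:   {psi(training, shifted):.3f}")
```

An often-quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the threshold that matters for your model should be set from its own history.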

Deployment Architectures & Strategies

The architecture you choose for deploying AI models significantly impacts performance, cost, scalability, and operational complexity.

Real-Time Inference Architecture

Real-time inference serves predictions immediately as requests arrive, typically through RESTful APIs. This architecture excels for user-facing applications where low latency is critical, such as fraud detection systems, recommendation engines, or personalization features. The infrastructure involves load balancers distributing requests across multiple model server instances, with auto-scaling based on traffic patterns.

Performance optimization becomes critical. Model caching eliminates redundant computations, while request batching groups multiple incoming requests together, dramatically improving GPU utilization. Some teams achieve 5-10x throughput improvements through intelligent batching strategies.
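The batching idea can be illustrated with a small, deterministic sketch: requests are grouped until a batch is full or until a new request arrives too long after the batch's first one. The parameter names `max_batch_size` and `max_wait_ms` are assumptions for this example, and real serving frameworks implement considerably more sophisticated schedulers.

```python
def form_batches(arrivals_ms, max_batch_size=8, max_wait_ms=10):
    """Group request arrival times (in ms, ascending) into batches.

    A batch is closed when it is full, or when the next request arrives more
    than max_wait_ms after the first request in the batch.
    """
    batches, current = [], []
    for t in arrivals_ms:
        if current and (len(current) == max_batch_size or t - current[0] > max_wait_ms):
            batches.append(current)
            current = []
        current.append(t)
    if current:
        batches.append(current)
    return batches

# Seven requests arriving in bursts.
print(form_batches([0, 1, 2, 3, 20, 21, 50], max_batch_size=3, max_wait_ms=10))
# -> [[0, 1, 2], [3], [20, 21], [50]]
```

The two knobs trade throughput against latency: a larger batch or a longer wait keeps the GPU busier, but the first request in each batch waits longer for its answer.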

Batch Processing Architecture

Batch processing handles large volumes of data periodically rather than responding to individual requests immediately. This approach works well for generating daily reports, processing overnight data pipelines, or computing monthly recommendations. Batch architectures often use distributed computing frameworks like Apache Spark to parallelize inference across multiple nodes.
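As a single-machine stand-in for what a distributed framework does across nodes, the sketch below splits a dataset into chunks and scores them in parallel with a thread pool. The `score_chunk` function is a placeholder for a real model call, and the chunk size and worker count are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor

def score_chunk(chunk):
    """Placeholder model: a real job would run batched inference here."""
    return [2 * x + 1 for x in chunk]

def chunked(records, size):
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]

def run_batch_job(records, chunk_size=1000, workers=4):
    """Score all records chunk by chunk, preserving the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in the order the chunks were submitted.
        results = pool.map(score_chunk, chunked(records, chunk_size))
        return [score for chunk_scores in results for score in chunk_scores]

print(run_batch_job(list(range(10)), chunk_size=3, workers=2))
```

Threads suit this pattern when the heavy lifting happens outside the Python interpreter (a GPU call or a remote endpoint); for CPU-bound pure-Python scoring, a process pool or a cluster framework would be the more likely choice.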

Edge Deployment Architecture

Edge deployment brings models closer to where data is generated, running inference on devices like smartphones or local servers. This reduces latency, improves privacy, and enables offline operation. Edge deployments require significant model optimization since devices have limited compute and memory resources.

Serverless and Hybrid Strategies

Serverless deployment uses cloud functions that automatically scale based on demand, charging only for actual compute time used. Many enterprises adopt hybrid approaches that combine multiple deployment strategies: real-time inference for user-facing features, batch processing for analytics, and edge deployment for mobile applications.

Tools & Frameworks for Deployment

The AI deployment ecosystem has specialized tools designed to handle different aspects of the deployment pipeline.

High-Performance Serving Frameworks

1. vLLM has become one of the most widely adopted engines for large language model serving, implementing advanced optimization techniques like PagedAttention and continuous batching.

# vLLM:

python -m vllm.entrypoints.openai.api_server --model meta-llama/Llama-2-7b-hf --port 8000

2. SGLang (Structured Generation Language) provides another high-performance option, specializing in complex reasoning workloads and structured output generation with advanced caching mechanisms that can achieve 2-5x speedups for multi-turn conversations and agent workflows.

# SGLang:

python -m sglang.launch_server --model-path meta-llama/Llama-2-7b-hf --port 30000

3. Hugging Face Text Generation Inference (TGI) offers optimized serving for Hugging Face models with features like tensor parallelism, token streaming, and continuous batching, providing enterprise-grade performance with seamless Transformers integration.

# HF TGI:

docker run --gpus all -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:latest --model-id meta-llama/Llama-2-7b-hf

4. TensorRT-LLM delivers maximum performance optimization for NVIDIA GPUs through advanced model compilation, achieving up to 10x speedups through precision optimization and kernel fusion.

# TensorRT-LLM:

trtllm-build --checkpoint_dir ./llama-7b-checkpoint --output_dir ./llama-7b-trt --gemm_plugin float16

5. NVIDIA Triton Inference Server provides a unified platform for serving models from multiple frameworks through a single API, enabling dynamic batching and model ensembles.

# Triton:

tritonserver --model-repository=/models --backend-config=python,shm-default-byte-size=1048576
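The vLLM command in the list above starts an OpenAI-compatible HTTP server on port 8000. As a rough sketch of the client side, the snippet below builds a request for its `/v1/completions` endpoint using only the standard library. The helper names, the `localhost` address, and the `max_tokens` default are assumptions for illustration; `complete` only works while that server is actually running.

```python
import json
import urllib.request

def build_completion_request(prompt,
                             base_url="http://localhost:8000",
                             model="meta-llama/Llama-2-7b-hf",
                             max_tokens=64):
    """Build (but do not send) a POST request for the completions endpoint."""
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=f"{base_url}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def complete(prompt):
    """Send the request and return the generated text (needs a live server)."""
    request = build_completion_request(prompt)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read())["choices"][0]["text"]

request = build_completion_request("Deployment is")
print(request.get_method(), request.full_url)
```

Because the endpoint follows the OpenAI API shape, existing OpenAI client libraries can usually be pointed at it by changing only the base URL.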

For teams looking for unified infrastructure, TrueFoundry's model serving capabilities abstract away the complexity of choosing and configuring individual serving frameworks, automatically selecting the optimal serving backend (vLLM, SGLang, TGI, TensorRT-LLM, or others) based on your model type and performance requirements.

Container Orchestration and MLOps Platforms

Kubernetes has become the standard for orchestrating containerized ML workloads, offering auto-scaling, rolling updates, and service discovery. MLflow provides model registry and experiment tracking, while platforms like TrueFoundry's AI Gateway provide unified access to multiple model providers with sub-3ms latency and enterprise-grade security.
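For intuition on the auto-scaling mentioned above, the Kubernetes Horizontal Pod Autoscaler computes its target as ceil(currentReplicas × currentMetric / targetMetric). The sketch below reproduces only that core ratio with replica bounds; the real controller also applies a tolerance band and stabilization windows, which are omitted here, and the metric values are made up.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Core HPA scaling ratio, clamped to the configured replica bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))

# 4 replicas averaging 90% GPU utilization against a 60% target -> scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
# The same 4 replicas at 30% utilization -> scale in.
print(desired_replicas(4, current_metric=30, target_metric=60))   # 2
```

For model servers, the metric is often something other than CPU, such as queue depth or GPU utilization, which has to be exposed to the autoscaler as a custom metric.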

Cloud Platform Services

Major cloud providers offer managed services: AWS SageMaker provides end-to-end ML lifecycle management, Google Vertex AI offers strong integration with Google's data services, and Azure Machine Learning provides comprehensive MLOps capabilities with OpenAI and Microsoft ecosystem integration.

Key Considerations for Deployment

Security and Privacy

AI models often process sensitive data, making security paramount. Input validation prevents models from behaving unpredictably with adversarial inputs. Data privacy considerations multiply in AI systems, and models can inadvertently leak training data through outputs. Enterprise security requirements often include role-based access control, audit logging, and compliance certifications.

Performance and Latency Optimization

User expectations for AI applications mirror those for traditional web applications: responses should be fast and reliable. Model optimization techniques like quantization can provide 2-4x speedups, while infrastructure choices significantly impact performance. GPU acceleration provides speedups for appropriate workloads but comes with higher costs.

Scalability and Resource Management

AI workloads have highly variable resource requirements. A language model might use a few hundred megabytes of memory for token activations on a short query but multiple gigabytes for complex reasoning. Traditional auto-scaling approaches often struggle with these patterns, requiring intelligent routing based on request complexity.
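A very rough sketch of complexity-based routing: estimate how much work a request implies and send it to a matching pool of servers. The word-count heuristic, the pool names, and the `light_limit` threshold are all assumptions for illustration; a real router would use the model's tokenizer and measured capacity.

```python
def estimate_tokens(prompt: str, max_new_tokens: int) -> int:
    """Crude estimate: one token per whitespace-separated word, plus the output budget."""
    return len(prompt.split()) + max_new_tokens

def route(prompt: str, max_new_tokens: int, light_limit: int = 512) -> str:
    """Pick a (hypothetical) server pool based on the estimated request size."""
    if estimate_tokens(prompt, max_new_tokens) <= light_limit:
        return "light-pool"
    return "heavy-pool"

print(route("What's the weather?", max_new_tokens=32))
print(route("Write a comprehensive business plan for a sustainable energy startup",
            max_new_tokens=4096))
```

Separating the pools lets short, latency-sensitive queries avoid queueing behind long generations, and lets each pool scale on its own signal.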

Cost Management

AI deployment costs can spiral quickly without proper controls. GPU-accelerated instances can cost $3-10 per hour, meaning misconfigured auto-scaling can generate thousands in unexpected charges. Model optimization directly impacts costs: a 4x speedup from quantization can translate to a 75% cost reduction, since the same workload needs only a quarter of the compute time.
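The arithmetic behind that figure is easy to check. The sketch below assumes that cost scales linearly with GPU time and that the GPUs are fully utilized; the request volume, per-request latency, and the $4 hourly rate (picked from within the $3-10 range) are hypothetical numbers.

```python
def monthly_gpu_cost(requests_per_month, seconds_per_request, dollars_per_gpu_hour):
    """GPU spend if cost is proportional to total inference time."""
    gpu_hours = requests_per_month * seconds_per_request / 3600
    return gpu_hours * dollars_per_gpu_hour

# Hypothetical workload: 3.6M requests per month at $4 per GPU-hour.
baseline = monthly_gpu_cost(3_600_000, seconds_per_request=2.0, dollars_per_gpu_hour=4.0)
optimized = monthly_gpu_cost(3_600_000, seconds_per_request=0.5, dollars_per_gpu_hour=4.0)
savings = 1 - optimized / baseline

print(f"baseline ${baseline:,.0f}, optimized ${optimized:,.0f}, savings {savings:.0%}")
# -> baseline $8,000, optimized $2,000, savings 75%
```

In practice the saving is usually smaller than this ceiling, because idle capacity, minimum replica counts, and non-GPU costs do not shrink with inference time.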

Deployment in Different Environments

Cloud Deployment

Cloud deployment offers the fastest path from development to production, with managed services handling infrastructure automatically. Advantages include unlimited scalability and access to specialized hardware, though ongoing costs can become substantial at scale.

On-Premises Deployment

On-premises deployment provides maximum control over infrastructure and data. This appeals to regulated industries and organizations with sensitive data requirements. Challenges include higher upfront costs and complexity of dynamic scaling. TrueFoundry's on-premises capabilities provide cloud-native architecture that can run in air-gapped environments.

Edge Deployment

Edge deployment brings inference to end-user devices, reducing latency and enabling offline operation. Model optimization becomes critical since devices have limited resources. Management complexity increases as you need mechanisms to update models across distributed devices.

Caption: TrueFoundry's unified platform enables seamless deployment across cloud and on-premises environments through a single management interface.

What Are the Challenges?

Despite significant advances in AI deployment tooling, organizations continue to face substantial challenges when moving models from development to production.

The Skills Gap Crisis

The AI deployment skills gap represents more than just a hiring challenge; it's a fundamental mismatch between educational curricula and industry needs. According to IBM research, 33% of enterprises cite "limited AI skills and expertise" as their top deployment barrier.

Traditional software engineers often struggle with ML concepts like model drift, statistical significance, and inference optimization. Conversely, data scientists who excel at model development frequently lack experience with production concerns like containerization, API design, and security hardening.

This skills mismatch manifests in several ways: over-engineered solutions where teams build complex infrastructure for simple models, under-engineered systems that can't handle production requirements, and operational blind spots where teams deploy models without proper monitoring or fallback mechanisms.

Data Complexity and Quality Issues

Production data differs dramatically from controlled development datasets. Real-world data has missing fields, unexpected encodings, schema variations, and evolving distribution patterns.

A fraud detection model must handle schema variations from different payment processors, missing features from system failures, encoding inconsistencies, and distribution shifts from new payment methods. Data preprocessing often requires as much engineering effort as the model itself.
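As an illustration of the preprocessing this implies, the sketch below maps differently named fields from several payment processors onto one canonical schema, repairs byte-encoded values, and reports what is missing instead of failing. The field names and aliases are hypothetical, and how missing features should be handled (reject, impute, or fall back to rules) is a decision for your own system.

```python
# Hypothetical aliases used by different payment processors.
FIELD_ALIASES = {
    "amount": ("amount", "amt", "transaction_amount"),
    "currency": ("currency", "ccy"),
    "merchant_id": ("merchant_id", "merchantId"),
}

def normalize(record):
    """Return (clean_record, missing_fields) for one raw transaction dict."""
    clean, missing = {}, []
    for canonical, aliases in FIELD_ALIASES.items():
        # Take the first alias that is present and not null.
        value = next((record[a] for a in aliases if record.get(a) is not None), None)
        if value is None:
            missing.append(canonical)
            continue
        if isinstance(value, bytes):
            # Tolerate encoding problems rather than crashing the pipeline.
            value = value.decode("utf-8", errors="replace")
        clean[canonical] = value
    if "amount" in clean:
        clean["amount"] = float(clean["amount"])
    return clean, missing

print(normalize({"amt": "12.50", "ccy": b"EUR", "merchantId": "m-1"}))
print(normalize({"amount": 3}))
```

Returning the list of missing fields alongside the cleaned record lets the caller decide per request whether the model can still be trusted with the input.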

Infrastructure Complexity and Integration Challenges

Modern AI deployment requires integrating dozens of specialized tools: Kubernetes, serving frameworks (vLLM, SGLang, Triton), monitoring systems (Prometheus, Grafana), data pipelines, and cloud services. Each component has distinct configuration requirements and APIs.

Integration complexity grows rapidly with the number of components, since each new tool must work with every tool it touches. Enterprise deployments involve custom GPU scheduling, service mesh configuration, monitoring stack integration, and specialized CI/CD pipelines. Vendor lock-in compounds these challenges, making migration difficult when requirements change.

Performance Optimization and Resource Management

AI workloads exhibit highly variable performance characteristics that challenge traditional infrastructure management approaches. A language model might process a simple query ("What's the weather?") in 100ms using minimal GPU memory, but require 60 seconds and multiple gigabytes for complex reasoning tasks ("Write a comprehensive business plan for a sustainable energy startup").

This variability makes capacity planning extremely difficult. Traditional auto-scaling relies on predictable resource usage patterns, but AI workloads can have:

- Unpredictable memory requirements, where similar requests use vastly different resources

- Variable latency, where response times vary by orders of magnitude

- Batch size sensitivity, where throughput depends heavily on request grouping strategies

- Model-specific scaling patterns, where different model types require completely different infrastructure configurations

Security and Compliance Challenges

AI systems introduce novel attack vectors that traditional security tools don't address. Adversarial inputs can cause misclassification, while model inversion attacks can extract training data, exposing sensitive information.

Enterprise requirements add complexity: network isolation conflicting with cloud-native architectures, custom authentication systems, data residency constraints, and compliance frameworks (GDPR, HIPAA, SOC 2) requiring specific technical controls.

Monitoring and Observability Complexity

Traditional application monitoring focuses on infrastructure metrics (CPU, memory, disk) and basic application metrics (request rate, error rate, latency). AI systems require additional layers of monitoring that many teams struggle to implement effectively.

Model-specific monitoring includes prediction confidence distributions, output quality metrics, and business KPI correlation. Data drift detection identifies when input patterns change in ways that might affect model performance. Model performance tracking monitors accuracy, precision, recall, and other relevant metrics over time.

Silent failures are particularly problematic: models continue processing requests while predictions become increasingly incorrect. The lag between model performance degradation and business impact can be substantial, making it difficult to establish clear cause-and-effect relationships. Teams need monitoring strategies that can identify potential issues before they significantly impact business outcomes, but building these capabilities requires significant expertise and ongoing maintenance.
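One simple guard against silent degradation is to track accuracy over a rolling window of predictions whose true outcomes have since become known, and to alert when it falls below a threshold. The class name, window size, and threshold below are illustrative assumptions, and the approach only works where ground-truth labels eventually arrive.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=100, alert_below=0.9):
        self.outcomes = deque(maxlen=window)  # old outcomes drop off automatically
        self.alert_below = alert_below

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def should_alert(self):
        # Wait for a full window so a few early mistakes do not trigger alerts.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy < self.alert_below

monitor = RollingAccuracyMonitor(window=10, alert_below=0.8)
for _ in range(10):
    monitor.record(prediction=1, actual=1)   # healthy period
healthy_alert = monitor.should_alert()
for _ in range(3):
    monitor.record(prediction=1, actual=0)   # the model starts getting things wrong
print(healthy_alert, monitor.accuracy, monitor.should_alert())
```

When labels arrive late or never, proxy signals such as input drift or shifts in prediction confidence have to fill the gap.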

Conclusion

The journey from AI prototype to production system represents one of the most critical transitions in modern technology deployment. While many businesses have adopted AI in some form, very few are truly mature in their deployment practices. This gap represents both a challenge and an enormous opportunity.

Key Takeaways for Success

Start with Infrastructure: Choose platforms that can grow with your needs rather than building point solutions. Modern platforms like TrueFoundry demonstrate how unified infrastructure eliminates complexity while providing enterprise-grade performance.

Prioritize Operability from Day One: Monitoring, logging, and error handling should be designed into your deployment architecture from the beginning, not added as afterthoughts.

Plan for Scale and Variability: AI workloads behave differently from traditional applications. Design your architecture to handle variable resource requirements and unpredictable performance characteristics.

The Future of AI Deployment

Looking ahead, agentic AI systems and multi-modal models will create new infrastructure requirements. The market is consolidating around agentic AI platforms that provide comprehensive, integrated solutions rather than point tools. Organizations are recognizing that operational complexity outweighs the theoretical benefits of best-of-breed approaches.

Taking the Next Step

If your organization is ready to move beyond AI prototypes and build production systems that deliver real business value, start by evaluating your current deployment practices. Consider platforms that provide immediate value while supporting long-term growth.

TrueFoundry's comprehensive AI infrastructure platform offers a practical starting point, with proven enterprise deployments, sub-3ms latency performance, and support for everything from simple model serving to complex agentic workflows. The transformation from AI experimentation to AI-powered business operations will define competitive advantage in the AI-driven economy.
