MCP Registry and AI Gateway

Between 2020 and 2023, foundation models like GPT-3 and GPT-4 proved that large language models can generate human-like text, write code, summarize documents, and answer complex questions. But these models were stateless and sandboxed: they couldn’t access internal systems, databases, or apps, and they had no way to take real-world actions.

You could ask a model:

“Write a MongoDB query to list all collections in the compliance database.”

It would generate output that looked like a valid MongoDB query — but:

- It had no idea whether the database existed

- It didn’t know what collections were present in the DB

- It couldn’t tell whether the query would even run

- There was no way to inspect schemas, verify results, or trigger follow-up actions like summarizing or notifying another system

It was all guesswork — with no feedback loop.

To fix this, developers began building layers around LLMs:

- Prompt chaining

- Custom wrappers in Python

- REST API call injection via string templates

- Manual context stuffing (“using the following schema, write the query”)

These were clever fixes, but they were hard to maintain.

Frameworks like LangChain, LlamaIndex, and Semantic Kernel emerged to organize these workflows. They brought structure, but the problems didn’t go away. Models still hallucinated fields, fell for prompt injection, skipped validation, and had no standard way to run the functions developers had actually defined. Every new use case still felt like reinventing the wheel.

The real turning point came when developers realized:

“We don’t need models to guess commands. We need them to call real functions — the same way frontend apps call backend APIs.”

Around mid-2023, OpenAI introduced function calling, enabling models to return structured JSON output that directly mapped to real function calls.

It redefined what was possible with model integration:

- LLMs could now interface with tools using clearly defined inputs and outputs

- Models behaved like API clients, rather than guess-based generators

- Tasks could now be automated with multiple steps and system interactions

With function calling came the rise of tools and agents: models that could chain actions, follow workflows, and interact with real systems.
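As a rough illustration of what function calling changes, the sketch below registers one real function under a tool name and dispatches a model's structured output to it. The tool_calls shape mirrors the examples used later in this article; list_collections, TOOLS, and the fake data are hypothetical placeholders, not any vendor's API.

```python
import json

# Hypothetical in-memory stand-in for a real database client.
FAKE_DATABASES = {"compliance": ["audit_logs", "policy_violations"]}

def list_collections(database: str) -> list[str]:
    """Return collection names for a known database."""
    return FAKE_DATABASES[database]

# Registry mapping tool names the model may emit to real callables.
TOOLS = {"listCollections": list_collections}

def dispatch(model_output: str) -> list:
    """Parse the model's JSON output and run each requested tool."""
    results = []
    for call in json.loads(model_output)["tool_calls"]:
        func = TOOLS.get(call["name"])
        if func is None:
            raise ValueError(f"unknown tool: {call['name']}")
        results.append(func(**call["arguments"]))
    return results

model_output = (
    '{"tool_calls": [{"name": "listCollections", '
    '"arguments": {"database": "compliance"}}]}'
)
print(dispatch(model_output))
```

The key design point is that the model never produces executable text: it only names a tool and supplies arguments, and anything outside the registry is rejected.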

But…

1. Every implementation was vendor-specific.

2. There wasn’t any shared standard format for how tools were described or invoked.

This is where MCP (Model Context Protocol) enters. Introduced by Anthropic as a general, open protocol for structured communication between models and external tools, it replaces hardcoded tool APIs in each LLM app with a universal charger for AI-tool connections: what OpenAPI is for REST services, but for LLMs calling tools. It’s based on JSON-RPC 2.0, a widely used specification for remote procedure calls (RPCs) using JSON.

What MCP Defines

- How a model can call a tool method (e.g., runAggregation)

- How to pass parameters and get structured responses

- How tools describe their available methods (via schemas or manifests)

- A lightweight standard for tool integration across any backend (DB, CLI, cloud API)

Think of MCP as the API contract between a model and a tool. Without a standard protocol, every integration required custom engineering — costly, error-prone, and repetitive. MCP solved this by creating one universal language, simplifying tool integrations once and for all. But what exactly is MCP, and how does it work practically? Let’s dive deeper.

Model Context Protocol

MCP (Model Context Protocol) is a lightweight protocol purpose-built for structured communication between AI models and external tools.

At its core, MCP uses JSON-RPC 2.0, a battle-tested protocol for calling remote procedures with structured inputs and outputs — perfect for turning LLM output into real-world tool invocations.
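For readers who have not worked with JSON-RPC 2.0 before, here is a minimal sketch of its envelope: a request carries jsonrpc, method, params, and id, and a response carries either result or an error object with a code and message. The helper names make_request and parse_response are made up for this illustration, and the method name is borrowed from the walkthrough later in this article.

```python
import json

def make_request(method: str, params: dict, request_id: str) -> str:
    """Serialize a JSON-RPC 2.0 request."""
    return json.dumps(
        {"jsonrpc": "2.0", "method": method, "params": params, "id": request_id}
    )

def parse_response(raw: str, expected_id: str):
    """Return the result of a JSON-RPC 2.0 response, or raise on an error object."""
    message = json.loads(raw)
    if message.get("jsonrpc") != "2.0" or message.get("id") != expected_id:
        raise ValueError("not a matching JSON-RPC 2.0 response")
    if "error" in message:
        err = message["error"]
        raise RuntimeError(f"RPC error {err['code']}: {err['message']}")
    return message["result"]

request = make_request("listCollections", {"database": "compliance"}, "req-001")
reply = '{"jsonrpc": "2.0", "result": ["audit_logs"], "id": "req-001"}'
print(request)
print(parse_response(reply, "req-001"))
```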

So why invent another protocol?

Existing options like REST or GraphQL are either too generic, too verbose, or just too brittle for AI-first workflows. MCP bridges this gap by providing clear structure, minimal overhead, and an explicit focus on AI-centric workflows. It’s not meant to replace your APIs — it’s meant to let models use them safely, repeatably, and predictably.

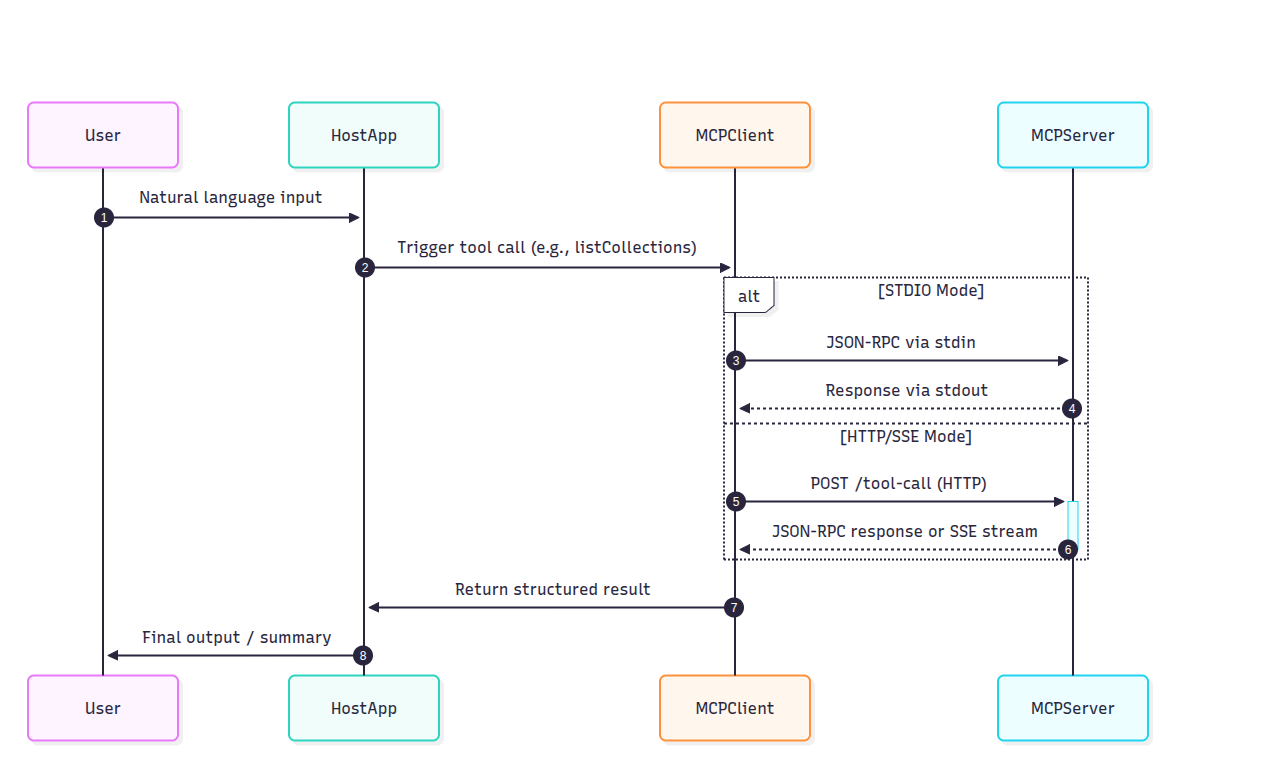

- Host App: Orchestrates tool calls, handles LLM output, chains responses — e.g., a GRC Agent that queries Mongo, summarizes results, and sends alerts.

- MCP Server: Implements domain-specific logic like listCollections, runAggregation, or sendMessageToSlack.

- MCP Client: A minimal library that handles request formatting, authentication, retries, and connects the Host to the appropriate MCP Server.

Servers typically expose:

- Tools: Executable commands like listCollections, runAggregation, or sendSlackMessage.

- Resources: Descriptive data like schema definitions, collection metadata, or database structure.

Optionally, servers can also expose:

- Prompts: Standardized agent prompts/templates

- Client-side primitives: Caching or batching hints

- Notifications: Real-time event streams (via SSE)

Transport Options: STDIO vs HTTP/SSE

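MCP is transport-agnostic and supports two modes: STDIO, where the server runs as a local subprocess and exchanges messages over stdin/stdout, and HTTP/SSE, where the server is reached over the network.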

Both transports follow the same JSON-RPC format, so you can switch transports without rewriting logic.
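One way to picture this transport independence is a single handler with two thin adapters around it. The sketch below is an assumption-laden toy: the echo method is hypothetical, the STDIO adapter reads one JSON message per line, and the HTTP adapter simply takes a request body as bytes rather than running a real web server.

```python
import io
import json

def handle(message: dict) -> dict:
    """Transport-independent handler: one JSON-RPC request in, one response out."""
    if message["method"] == "echo":  # hypothetical method for the demo
        return {"jsonrpc": "2.0", "result": message["params"], "id": message["id"]}
    return {"jsonrpc": "2.0", "id": message["id"],
            "error": {"code": -32601, "message": "Method not found"}}

def serve_stdio(stdin, stdout) -> None:
    """STDIO adapter: one JSON message per line in, one per line out."""
    for line in stdin:
        if line.strip():
            stdout.write(json.dumps(handle(json.loads(line))) + "\n")

def serve_http_body(body: bytes) -> bytes:
    """HTTP adapter: the request body carries the same JSON message."""
    return json.dumps(handle(json.loads(body))).encode("utf-8")

request = {"jsonrpc": "2.0", "method": "echo", "params": {"text": "hi"}, "id": 1}
out = io.StringIO()
serve_stdio(io.StringIO(json.dumps(request) + "\n"), out)
print(out.getvalue().strip())
print(serve_http_body(json.dumps(request).encode("utf-8")).decode("utf-8"))
```

Both adapters print the same response, which is the property that lets a server switch transports without touching its tool logic.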

So that’s the big idea behind MCP — a minimal, clean way for models to call tools without fragile prompt glue. No hallucinated commands. No manual context stuffing. Just clear inputs and outputs. But how does that actually work in a real app? Let’s walk through an example with a MongoDB-powered compliance assistant.

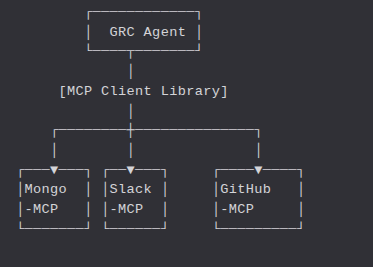

Automating a GRC Workflow Using Mongo-MCP

Imagine you’re building a GRC (Governance, Risk, and Compliance) assistant.

This assistant needs to:

- Fetch collections and audit logs from a MongoDB database

- Summarize findings for a compliance officer

- Notify relevant teams on Slack

- Optionally, file a follow-up issue on GitHub

In a traditional setup, you’d hardwire this logic using REST calls or Python scripts, stuffing schemas and credentials into prompt templates. Every integration would be custom — and fragile.

With MCP, each of these tools — MongoDB, Slack, GitHub — becomes a first-class function provider, exposing clearly defined methods like:

- listCollections

- runAggregation

- sendMessage

- createIssue

The GRC Agent (our Host App) simply calls these tools using MCP’s JSON-RPC schema.

Scenario: Detect and Report Policy Violations

User instruction: “Check the compliance database for any policy violations today and notify the team on Slack.”

1. User Input → Host App → LLM

The GRC Agent (Host App) sends the user message to the model. The model is tool-aware and responds with:

    {
      "tool_calls": [
        {
          "name": "listCollections",
          "arguments": {
            "database": "compliance"
          }
        }
      ]
    }

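2. Host App Invokes Mongo-MCP via MCP Client

This tool call is converted to a JSON-RPC request. The examples here use the tool name as the method for readability; the MCP specification itself wraps invocations in a tools/call method whose params carry the tool name and arguments.

    {
      "jsonrpc": "2.0",
      "method": "listCollections",
      "params": {
        "database": "compliance"
      },
      "id": "req-001"
    }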

3. Mongo-MCP Executes the Function

Mongo-MCP maps this call to:

    def listCollections(database: str) -> list[str]:
        # mongo_client is assumed to be a pymongo.MongoClient created at startup
        return mongo_client[database].list_collection_names()

It runs the function, gets the result, and responds:

    {
      "jsonrpc": "2.0",
      "result": [
        "audit_logs",
        "policy_violations",
        "user_sessions"
      ],
      "id": "req-001"
    }

4. Agent Chains the Next Call: runAggregation

The model now generates a follow-up call based on the available collections:

    {
      "tool_calls": [
        {
          "name": "runAggregation",
          "arguments": {
            "database": "compliance",
            "collection": "policy_violations",
            "pipeline": [
              { "$match": { "timestamp": { "$gte": "2025-08-05" } } },
              { "$group": { "_id": "$severity", "count": { "$sum": 1 } } }
            ]
          }
        }
      ]
    }

This results in another JSON-RPC call to Mongo-MCP, and the server returns:

    {
      "jsonrpc": "2.0",
      "result": [
        { "_id": "high", "count": 5 },
        { "_id": "medium", "count": 12 }
      ],
      "id": "req-002"
    }
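A note on the $match stage in the pipeline above: it compares timestamp against the string "2025-08-05", which only matches if timestamps are stored as ISO-8601 strings. If they are stored as BSON dates instead (an assumption about the schema, which this article does not specify), the server side would need a real datetime. A minimal sketch of building that pipeline, using a hypothetical helper name:

```python
from datetime import datetime, time, timezone

def violations_today_pipeline(now: datetime) -> list[dict]:
    """Build the $match/$group pipeline for violations since midnight UTC."""
    start_of_day = datetime.combine(now.date(), time.min, tzinfo=timezone.utc)
    return [
        {"$match": {"timestamp": {"$gte": start_of_day}}},
        {"$group": {"_id": "$severity", "count": {"$sum": 1}}},
    ]

# `now` is passed in (rather than read from the clock) so the result is reproducible.
pipeline = violations_today_pipeline(datetime(2025, 8, 5, 14, 30, tzinfo=timezone.utc))
print(pipeline[0])
```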

The agent passes this result back to the model with a prompt like:

“Summarize this policy violation data in plain English.”

The model replies:

“Today, there were 5 high-severity and 12 medium-severity policy violations in the compliance database.”

5. Model Calls Slack-MCP to Notify the Team

Now the agent issues a final structured tool call:

    {
      "tool_calls": [
        {
          "name": "sendMessage",
          "arguments": {
            "channel": "#compliance-alerts",
            "message": "5 high and 12 medium policy violations detected today. Please review."
          }
        }
      ]
    }

The Slack-MCP server sends the message, and the workflow is complete. All this happened through structured JSON calls, not string manipulation or prompt engineering.
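The five steps above can be condensed into a small host-side loop. This is only a sketch under stated assumptions: FAKE_SERVERS stands in for real MCP servers, the plan is hard-coded where a real host would ask the model for each next step, and the simplified method naming from this walkthrough is kept.

```python
import itertools
import json

# Hypothetical stand-ins for MCP servers: tool name -> callable.
FAKE_SERVERS = {
    "listCollections": lambda database: ["audit_logs", "policy_violations"],
    "runAggregation": lambda database, collection, pipeline: [{"_id": "high", "count": 5}],
    "sendMessage": lambda channel, message: {"ok": True},
}

_ids = itertools.count(1)

def call_tool(name: str, arguments: dict) -> dict:
    """Wrap a tool call in a JSON-RPC request and return the JSON-RPC response."""
    request = {"jsonrpc": "2.0", "method": name, "params": arguments,
               "id": f"req-{next(_ids):03d}"}
    result = FAKE_SERVERS[request["method"]](**request["params"])
    return {"jsonrpc": "2.0", "result": result, "id": request["id"]}

def run_agent(planned_calls: list[dict]) -> list[dict]:
    """Execute tool calls in order and collect the responses."""
    return [call_tool(c["name"], c["arguments"]) for c in planned_calls]

plan = [
    {"name": "listCollections", "arguments": {"database": "compliance"}},
    {"name": "runAggregation", "arguments": {
        "database": "compliance", "collection": "policy_violations", "pipeline": []}},
    {"name": "sendMessage", "arguments": {
        "channel": "#compliance-alerts", "message": "5 high policy violations detected."}},
]
for response in run_agent(plan):
    print(json.dumps(response))
```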

Why Do You Need an MCP Server Registry in an MCP Gateway?

The Mongo-MCP demo looks clean. The model made structured tool calls. Each function worked as expected. No hallucinations. No brittle string templates. But that’s a happy path — and real systems aren’t just about working… they’re about working safely, reliably, and observably at scale.

In production, raw MCP falls short in a few key areas:

1. No Access Control (RBAC)

Raw MCP has no built-in way to restrict who can call what.

- What if you want to allow runAggregation but block deleteCollection?

- What if a model should only query certain datasets (e.g. finance can’t access HR)?

In real orgs, RBAC (role-based access control) is non-negotiable — especially when models are wired into sensitive tools.

2. No Authentication or API Keys

Raw MCP doesn’t handle:

- Validating which agent or model is making the call

- Scoping credentials per team, environment, or project

- Token expiry or revocation

This means anyone with access to the MCP server can call any tool, and there’s no audit trail.

3. No Observability

You can’t fix what you can’t see.

- What’s the latency of each tool call?

- What’s the failure rate or retry count?

- Which tools are being overused or timing out?

With raw MCP, you don’t have dashboards, logs, or traces. You’re flying blind.
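To give a sense of what the missing instrumentation looks like, here is a minimal sketch that wraps a tool function and records latency and success for every call. The METRICS store and the observed decorator are hypothetical names for this illustration; a production setup would export to a metrics or tracing backend instead of a dictionary.

```python
import time
from collections import defaultdict
from functools import wraps

# tool name -> list of (latency_seconds, succeeded) samples
METRICS = defaultdict(list)

def observed(tool_name: str):
    """Decorator that records latency and success/failure for each call."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
            except Exception:
                METRICS[tool_name].append((time.perf_counter() - start, False))
                raise
            METRICS[tool_name].append((time.perf_counter() - start, True))
            return result
        return wrapper
    return decorator

def failure_rate(tool_name: str) -> float:
    """Fraction of recorded calls that raised an exception."""
    samples = METRICS[tool_name]
    return sum(1 for _, ok in samples if not ok) / len(samples) if samples else 0.0

@observed("listCollections")
def list_collections(database: str) -> list[str]:
    if database != "compliance":
        raise KeyError(database)
    return ["audit_logs"]

list_collections("compliance")      # one success
try:
    list_collections("missing")     # one failure
except KeyError:
    pass
print(failure_rate("listCollections"))
```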

4. No Guardrails

LLMs are creative, sometimes too creative.

Raw MCP has:

- No token limits (e.g. prevent massive aggregations)

- No result-size bounds (e.g. returning 5MB from Mongo)

- No circuit breakers or pause prompts (e.g. “Are you sure you want to send this Slack alert?”)

Without guardrails, one prompt bug can lead to thousands of Slack messages or accidental data wipes.
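Two of these guardrails are simple enough to sketch: a bound on result size and a cap on outgoing messages per run. The limits and class names below are assumptions chosen for illustration, not values from any particular gateway.

```python
import json

MAX_RESULT_BYTES = 1_000_000   # assumed limit; tune per tool
MAX_MESSAGES_PER_RUN = 3       # assumed cap on Slack-style notifications

class GuardrailError(Exception):
    """Raised when a tool call would exceed a configured limit."""

def check_result_size(result) -> None:
    """Reject results whose JSON encoding exceeds the byte limit."""
    size = len(json.dumps(result).encode("utf-8"))
    if size > MAX_RESULT_BYTES:
        raise GuardrailError(f"result is {size} bytes, limit is {MAX_RESULT_BYTES}")

class MessageBudget:
    """Counts outgoing messages and trips once the cap is reached."""
    def __init__(self, limit: int = MAX_MESSAGES_PER_RUN):
        self.limit = limit
        self.sent = 0

    def spend(self) -> None:
        if self.sent >= self.limit:
            raise GuardrailError("message cap reached; pausing for review")
        self.sent += 1

budget = MessageBudget()
for _ in range(3):
    budget.spend()                                 # first three messages are allowed
check_result_size([{"_id": "high", "count": 5}])   # a small result passes
print(budget.sent)
```

A fourth call to budget.spend() would raise GuardrailError, which is the point at which a host could stop the agent loop and ask a human to confirm.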

5. No Retry, Throttling, or Quotas

In production, tools don’t always behave perfectly — they can fail, time out, or respond slowly. Without safeguards, even well-behaved models can:

- Hit rate limits

- Hammer services with retries

- Expose sensitive tools to misuse

The raw MCP protocol assumes everything just works, a “happy path” world. But real-world infrastructure is messy. You need smart retry logic, caching, rate limiting, and access control to stay sane at scale. In practice, that means a layer in front of your MCP servers that provides:

- Authentication + token-based access

- RBAC per model, user, org, and tool

- Observability and tracing

- Quotas, limits, and retry strategies

- Approval workflows for sensitive actions

This is exactly what the TrueFoundry Gateway provides.
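To make the retry and quota items concrete, here is a generic sketch of bounded retries with exponential backoff plus a simple call budget. It illustrates the general technique only and is not the Gateway's implementation; TransientError, call_with_retry, and Quota are names invented for this example.

```python
import time

class TransientError(Exception):
    """A failure worth retrying, such as a timeout."""

def call_with_retry(func, max_attempts: int = 3, base_delay: float = 0.5,
                    sleep=time.sleep):
    """Call func, retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up instead of hammering the service
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, then 1s, then 2s, ...

class Quota:
    """Fixed budget of calls; a real gateway would reset it per time window."""
    def __init__(self, limit: int):
        self.remaining = limit

    def take(self) -> bool:
        if self.remaining <= 0:
            return False
        self.remaining -= 1
        return True

attempts = []

def flaky_tool() -> str:
    """Fails twice with a transient error, then succeeds."""
    attempts.append(1)
    if len(attempts) < 3:
        raise TransientError("timed out")
    return "ok"

delays = []  # record delays instead of actually sleeping
print(call_with_retry(flaky_tool, sleep=delays.append), delays)
```

Injecting the sleep function keeps the example fast to run and makes the backoff schedule easy to inspect.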

From Single-Server Demos to Enterprise-Grade Agent Workflows

In the first half of this article we learned what the Model Context Protocol is and used a Mongo MCP server to automate a legacy GRC platform. That toy example is great for a hack day, but it quickly runs into the real-world friction described above.

TrueFoundry’s AI Gateway packages the missing plumbing—an MCP registry, central auth, RBAC, guard-rails, and rich observability—so teams can move from “hello-world agent” to production safely and repeatedly.

What the Gateway Adds on Top of Raw MCP

TrueFoundry positions the Gateway as a control-plane that sits between your agents (or Chat UI) and every registered MCP server and LLM provider.

Key capabilities include:

- Central MCP Registry – add public or self-hosted servers once; discover them everywhere

- Unified credentials – generate a single Personal-Access-Token (PAT) or machine-scoped Virtual-Account-Token (VAT) that is automatically exchanged for per-server OAuth / header tokens behind the scenes

- Fine-grained RBAC – restrict which users, apps, or environments can see or execute which tools

- Agent Playground & built-in MCP client – rapid prototyping without writing a line of code

- Observability & guard-rails – latency, cost, traces, approval flows, rate-limits, and caching baked in

Think of it as the API gateway + service mesh + secret store for the emerging MCP ecosystem.

“As noted in the MCP Server Authentication guide, the Gateway handles credential storage and token lifecycle automatically.”

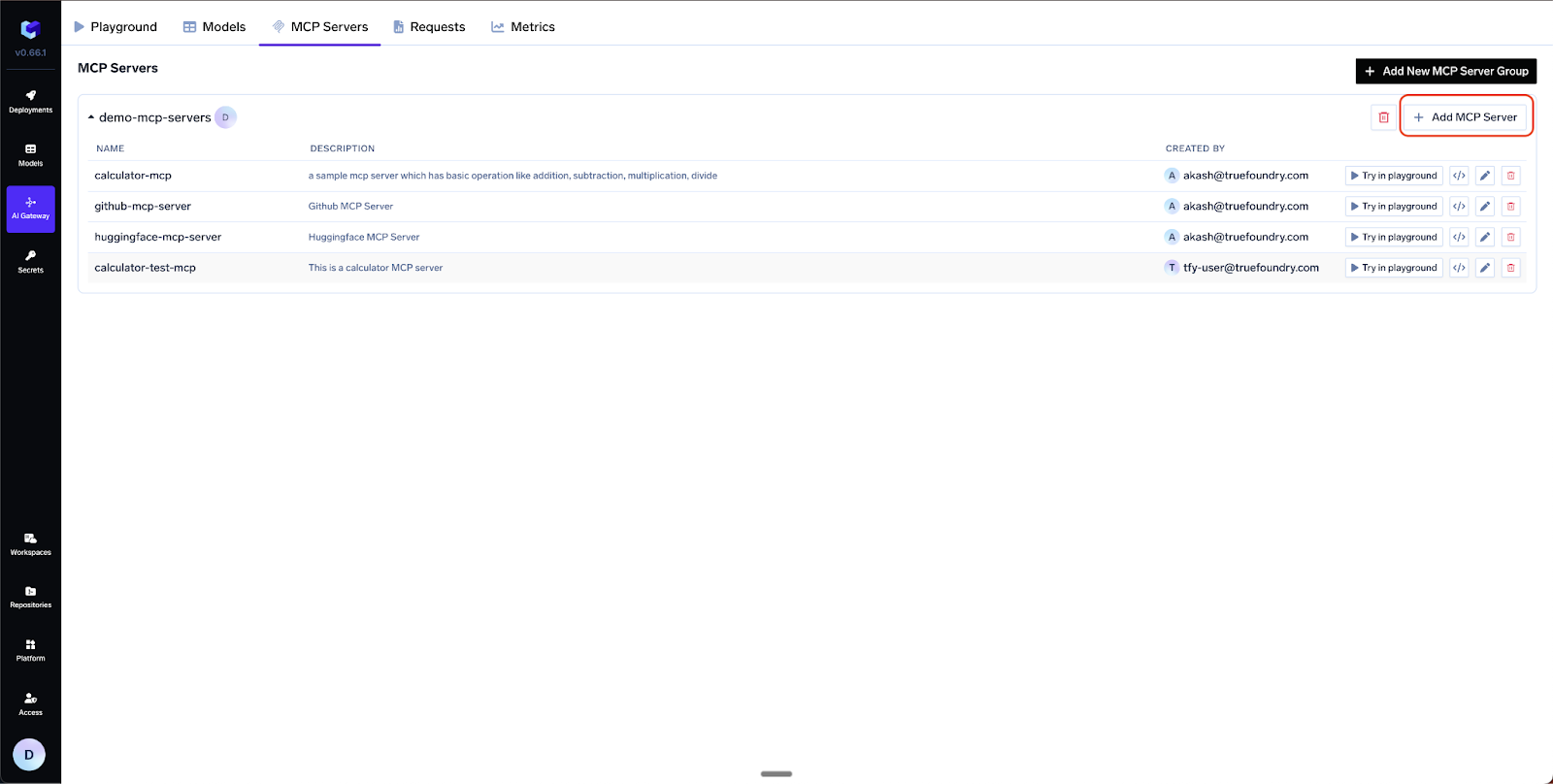

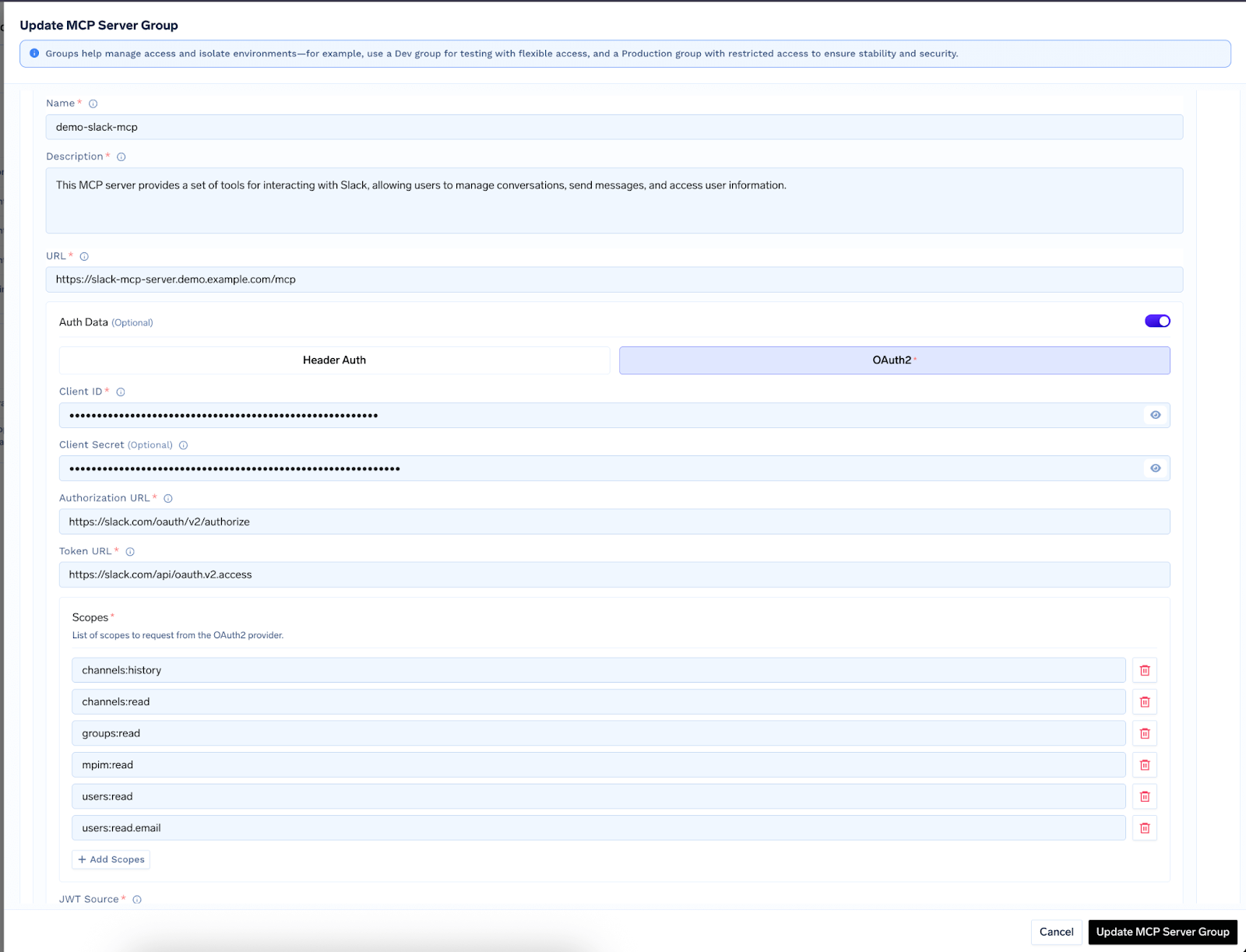

Hands-On: Registering Your First MCP Server

The very first step in the UI is to create a group—e.g. dev-mcps or prod-mcps. Groups let you attach different RBAC rules and approval flows to different environments.

“You can follow the TrueFoundry MCP Server Getting Started guide for detailed steps.”

AI Gateway ➜ MCP Servers ➜ “Add New MCP Server Group”

name: prod-mcps

access control:

- Manage: SRE-Admins

- User: Prod-Runtime-Service-Accounts

Inside the group, choose Add/Edit MCP Server and fill in three things:

You can just as easily add:

- No-Auth demo servers (e.g., Calculator)

- Header-Auth servers that accept a static API key (e.g., Hugging Face)

- Any number of future servers (Atlassian, Datadog, internal micro-services)

Behind the scenes the Gateway stores credentials in its secret store and handles token refresh.

Authentication & RBAC

TrueFoundry supports three auth schemes per MCP server: No-Auth, Header-Auth, and OAuth2.

“These modes are described in more detail in the MCP Server Authentication documentation.”

Once a server is registered you don’t hand raw tokens to every developer. Instead they authenticate once to the Gateway and receive:

- PAT – user-scoped, human-friendly, good for CLI / experiments

- VAT – service-account scoped, locked to selected servers, perfect for production apps

The Gateway checks the calling token against:

- Group-level permissions (can this user reach any server in prod-mcps?)

- Server-level permissions (is slack-mcp whitelisted?)

- Tool-level permissions (can they call sendMessageToChannel?)

If any check fails the request is rejected before it hits your Slack workspace.
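The three checks can be pictured as a short chain in which any failure rejects the call. The sketch below is an assumption about how such a check could be modeled, not the Gateway's data model; TokenGrants and the colon-separated keys are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class TokenGrants:
    """Hypothetical view of what a PAT or VAT is allowed to reach."""
    groups: set = field(default_factory=set)
    servers: set = field(default_factory=set)   # entries like "group:server"
    tools: set = field(default_factory=set)     # entries like "group:server:tool"

def is_allowed(grants: TokenGrants, group: str, server: str, tool: str) -> bool:
    """Apply group, server, and tool checks in order; any failure rejects."""
    if group not in grants.groups:
        return False
    if f"{group}:{server}" not in grants.servers:
        return False
    return f"{group}:{server}:{tool}" in grants.tools

vat = TokenGrants(
    groups={"prod-mcps"},
    servers={"prod-mcps:slack-mcp"},
    tools={"prod-mcps:slack-mcp:sendMessageToChannel"},
)
print(is_allowed(vat, "prod-mcps", "slack-mcp", "sendMessageToChannel"))  # True
print(is_allowed(vat, "prod-mcps", "slack-mcp", "deleteChannel"))         # False
```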

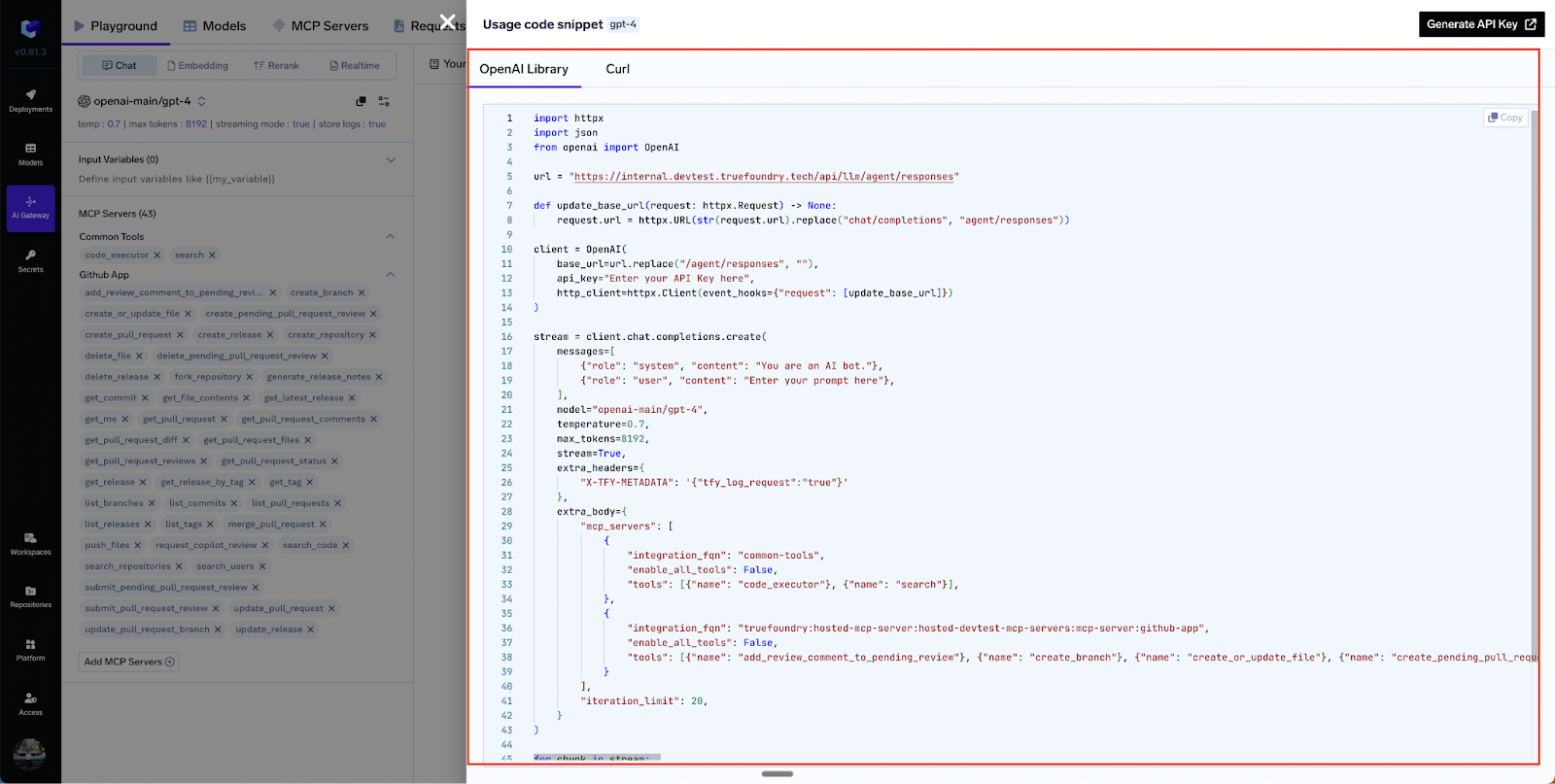

From Playground to Code: The Agent API

After experimenting in the UI you can click “API Code Snippet” to generate working Python, JS, or cURL examples. Below is a trimmed JSON body that wires three servers together (GitHub, Slack, Calculator):

POST /api/llm/agent/responses

    {
      "model": "gpt-4o",
      "stream": true,
      "iteration_limit": 5,
      "messages": [
        {
          "role": "user",
          "content": "Summarize open PRs on repo X and DM me the top blockers."
        }
      ],
      "mcp_servers": [
        {
          "integration_fqn": "truefoundry:prod-mcps:github-mcp",
          "tools": [ {"name": "listPullRequests"}, {"name": "createComment"} ]
        },
        {
          "integration_fqn": "truefoundry:prod-mcps:slack-mcp",
          "tools": [ {"name": "sendMessageToUser"} ]
        },
        {
          "integration_fqn": "truefoundry:common:calculator-mcp",
          "tools": [ {"name": "add"} ]
        }
      ]
    }

“You can find a similar example in the Use MCP Server in Code Agent guide, which also includes complete Python and JS snippets.”
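As a rough idea of what the same request looks like from Python, the sketch below builds (but does not send) the POST using only the standard library. The base URL, the environment variable names, and the Bearer authorization header are assumptions for illustration; use the snippet generated by the Gateway UI for the authoritative form.

```python
import json
import os
import urllib.request

# Assumptions: placeholder base URL and token; replace with your deployment's values.
GATEWAY_URL = os.environ.get("GATEWAY_URL", "https://gateway.example.com")
TOKEN = os.environ.get("GATEWAY_TOKEN", "<your-PAT-or-VAT>")

def build_agent_request(prompt: str) -> urllib.request.Request:
    """Build a POST to the Agent API path shown above, without sending it."""
    body = {
        "model": "gpt-4o",
        "stream": True,
        "iteration_limit": 5,
        "messages": [{"role": "user", "content": prompt}],
        "mcp_servers": [
            {"integration_fqn": "truefoundry:prod-mcps:slack-mcp",
             "tools": [{"name": "sendMessageToUser"}]},
        ],
    }
    return urllib.request.Request(
        GATEWAY_URL + "/api/llm/agent/responses",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {TOKEN}",
                 "Content-Type": "application/json"},
        method="POST",
    )

request = build_agent_request("DM me the top blockers.")
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```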

The streaming response interleaves:

- assistant tokens (LLM reasoning)

- tool-call chunks (function name + incremental arguments)

- tool-result events (JSON output)

This lets you build reactive UIs that show each step of the agentic loop in real time.
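A UI consuming such a stream mostly needs to dispatch on the event type and stitch incremental pieces together. In the sketch below, the event field names (type, text, name, arguments_delta, output) are invented for illustration and are not the Gateway's wire format.

```python
from typing import Iterable

def render_stream(events: Iterable[dict]) -> list[str]:
    """Turn an interleaved event stream into display lines for a UI."""
    lines: list[str] = []
    text, args, current_tool = "", "", ""
    for event in events:
        kind = event["type"]
        if kind == "assistant_token":
            text += event["text"]
        elif kind == "tool_call_chunk":
            current_tool = event["name"]
            args += event["arguments_delta"]   # arguments arrive incrementally
        elif kind == "tool_result":
            lines.append(f"call {current_tool}({args}) -> {event['output']}")
            args = ""
    if text:
        lines.append(f"assistant: {text}")
    return lines

events = [
    {"type": "tool_call_chunk", "name": "add", "arguments_delta": '{"a": 2,'},
    {"type": "tool_call_chunk", "name": "add", "arguments_delta": ' "b": 3}'},
    {"type": "tool_result", "output": 5},
    {"type": "assistant_token", "text": "The sum "},
    {"type": "assistant_token", "text": "is 5."},
]
for line in render_stream(events):
    print(line)
```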

Observability, Guardrails & Policy

Even a “hello world” agent can cost real money and do real damage. TrueFoundry ships first-class observability:

- Latency / error dashboards – TTFT, task latency, HTTP errors, tool retries

- Token & cost tracking – attribute spend per model, per team, per feature flag

- OpenTelemetry traces – hop-by-hop spans across agent, MCP proxy, and LLM

- Rate-limits & caching – prevent runaway loops and reuse identical web-search results

- Guard-rail hooks – enforce PII-scrubbing, NSFW filters, or “human-in-the-loop approval” on any destructive tool

All metrics come out of the box; no side-car agents or custom exporters required.

Supporting All MCP Transports (HTTP & STDIO)

Many open-source servers still speak stdio (stdin/stdout) instead of HTTP. TrueFoundry recommends wrapping them with mcp-proxy and deploying them as a regular service:

# wrap a Python stdio server

mcp-proxy --port 8000 --host 0.0.0.0 --server stream python my_server.py

Ready-made templates exist for Notion and Perplexity servers, plus K8s manifests for Node or Python images. Once proxied, registration is identical to any other HTTP MCP endpoint.

“TrueFoundry’s MCP Server STDIO guide covers this proxying approach and provides deployment templates.”

Example Walk-Through: Enterprise Compliance Bot

Let’s return to our legacy GRC scenario but crank the ambition up:

“Keep our compliance evidence up-to-date. If a policy file changes in GitHub, store the diff in MongoDB, create a Jira ticket, and post a summary in Slack.”

Servers Involved

Four MCP servers take part: GitHub, MongoDB, Jira, and Slack.

Flow

- GitHub webhook hits a cloud function.

- The function calls the Agent API (VAT token) with the four servers enabled.

- LLM reasons → calls github.getFileDiff → mongo.insertDocument → jira.createIssue → slack.sendMessage.

- Each tool call and result is streamed back; observability captures latency of every hop.

- If the diff exceeds a configured threshold (for example, a certain number of changed lines), a guard-rail inserts “requires manual approval” and pauses execution; a security lead can approve via the Gateway UI.
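The approval step in the flow above boils down to a size check followed by a pause. Here is a minimal sketch of that decision, assuming a unified diff as input; the threshold value and the review_diff helper are hypothetical, since the actual threshold is left to configuration.

```python
from dataclasses import dataclass
from typing import Optional

DIFF_LINE_THRESHOLD = 200  # assumed value; the real threshold is configurable

@dataclass
class Decision:
    proceed: bool
    reason: str

def review_diff(diff_text: str, approved_by: Optional[str] = None) -> Decision:
    """Pause large diffs until a named reviewer approves them."""
    # Count added/removed lines, skipping the "+++" / "---" file headers.
    changed = sum(
        1 for line in diff_text.splitlines()
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    )
    if changed <= DIFF_LINE_THRESHOLD:
        return Decision(True, f"{changed} changed lines, under threshold")
    if approved_by:
        return Decision(True, f"{changed} changed lines, approved by {approved_by}")
    return Decision(False, "requires manual approval")

small = "--- a/policy.md\n+++ b/policy.md\n-old line\n+new line\n"
large = "\n".join("+line" for _ in range(201))
print(review_diff(small))
print(review_diff(large))
print(review_diff(large, approved_by="security-lead"))
```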

Governance

- The VAT attached to the function only sees the four listed servers—least privilege.

- Separate dev and prod groups let you test against a sandbox Jira and staging Slack.

- Auditors can replay any incident: traces and full JSON payloads are retained for 30 days by default.

Extending the Ecosystem: Building Your Own MCP Server

Because MCP is just JSON-RPC over HTTP or stdio, any internal service can expose tools. Here is a small example:
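What follows is a deliberately small, standard-library-only sketch of such a server for a compliance-controls service. It answers the tools/list and tools/call methods from the MCP specification but skips the initialize handshake and everything else a complete server needs, so treat it as an illustration; in practice you would build on an official MCP SDK. The getControlStatus tool and the CONTROLS data are hypothetical.

```python
import json
import sys

# Hypothetical compliance data; a real server would query an internal system.
CONTROLS = {"AC-2": "passing", "AU-6": "failing"}

TOOLS = [{
    "name": "getControlStatus",
    "description": "Return the status of a compliance control.",
    "inputSchema": {
        "type": "object",
        "properties": {"control_id": {"type": "string"}},
        "required": ["control_id"],
    },
}]

def handle(message: dict) -> dict:
    """Answer tools/list and tools/call; reject anything else."""
    reply = {"jsonrpc": "2.0", "id": message.get("id")}
    method, params = message.get("method"), message.get("params", {})
    if method == "tools/list":
        reply["result"] = {"tools": TOOLS}
    elif method == "tools/call" and params.get("name") == "getControlStatus":
        status = CONTROLS.get(params["arguments"]["control_id"], "unknown")
        reply["result"] = {"content": [{"type": "text", "text": status}]}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return reply

def serve(stdin=sys.stdin, stdout=sys.stdout) -> None:
    """Serve newline-delimited JSON-RPC over stdio (not started automatically here)."""
    for line in stdin:
        if line.strip():
            stdout.write(json.dumps(handle(json.loads(line))) + "\n")
            stdout.flush()

call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "getControlStatus", "arguments": {"control_id": "AU-6"}}}
print(json.dumps(handle(call)))
```

Calling serve() at the end of the file would turn this into a stdio server that can then be wrapped for HTTP as described earlier.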

- Drop this container into your Kubernetes cluster.

- Register it in the dev group with No-Auth while developing.

- Change to Header-Auth or OAuth2 before moving it to prod-mcps.

From that moment onwards, every agent in your company can reason over compliance controls with exactly the same ergonomics as Slack or GitHub.

Best Practices Cheat-Sheet

“This sheet has been adapted from the TrueFoundry MCP Overview. These guidelines help ensure secure and reliable deployments.”

Conclusion

MCP lets large language models (LLMs) speak the same language as tools — but it doesn’t handle things like security, discovery, or governance at an enterprise level. That’s where TrueFoundry’s AI Gateway steps in: it adds everything teams need out of the box — like a full MCP registry, built-in authentication, role-based access control (RBAC), deep observability, and a powerful Agent API to tie it all together.


TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready, while LiteLLM suffers from high latency, struggles beyond moderate RPS, lacks built-in scaling, and is best for light or prototype workloads.