Databricks Mosaic AI Gateway Pricing Explained (2026)

Introduction

Databricks Mosaic AI Gateway is positioned as a unified interface for managing, securing, and monitoring AI model usage within the Databricks ecosystem. For organizations already leveraging Databricks for ETL and data engineering, the integration of Mosaic AI provides a consolidated governance layer.

However, Mosaic AI Gateway pricing is not a simple add-on. Costs are fundamentally tethered to the Databricks Unit (DBU) model, specific compute tier selections, and platform-level dependencies like Unity Catalog. This analysis breaks down the operational cost structure of Mosaic AI Gateway and explains why high-scale engineering teams often evaluate unbundled alternatives like TrueFoundry to achieve clearer unit economics and architectural independence.

What Is Databricks Mosaic AI Gateway?

Mosaic AI Gateway serves as the centralized control plane for routing, monitoring, and governing AI model requests. It acts as a proxy between application logic and model endpoints, whether those models are external (e.g., GPT-4o via OpenAI) or hosted internally via Mosaic AI Model Serving.

Architecturally, the gateway provides the observability hooks required for prompt and response logging, latency tracking, and usage attribution. It is not an isolated binary but a feature set integrated into the Databricks Model Serving infrastructure. Consequently, its operational availability is tied to the reliability and scaling characteristics of the underlying Databricks workspace and the Unity Catalog governance layer.

The 'DBU' Currency: How Databricks Actually Bills You

Databricks does not bill per API request in the traditional SaaS sense. Instead, consumption is normalized into Databricks Units (DBUs). As of early 2026, DBU rates for AI workloads generally start at $0.07 per DBU for foundation model serving and can exceed $0.70 per DBU for serverless SQL operations used to analyze logs [Source: Databricks Pricing].

What Is a DBU?

A DBU is a proprietary metric representing processing power per hour. The challenge for platform teams lies in forecasting: a single AI request might involve multiple DBU-consuming events, including gateway routing, guardrail execution, and log ingestion into Delta Tables. DBU costs vary by plan (Standard, Premium, or Enterprise) and cloud provider.
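The forecasting problem is easier to reason about as arithmetic. The sketch below sums the DBU-consuming events behind one request and converts them to dollars. The per-event DBU figures and the monthly request volume are hypothetical placeholders, not published Databricks numbers; only the $0.07 per DBU rate comes from the section above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DbuEvent:
    """One DBU-consuming step triggered by a single AI request."""
    name: str
    dbus: float  # hypothetical DBU consumption for this step


def request_cost_usd(events: list[DbuEvent], rate_per_dbu: float) -> float:
    """Sum the DBUs of every event and convert the total to dollars."""
    total_dbus = sum(event.dbus for event in events)
    return total_dbus * rate_per_dbu


# Hypothetical per-request breakdown; replace with values measured from billing data.
EVENTS = [
    DbuEvent("gateway_routing", 0.00002),
    DbuEvent("guardrail_execution", 0.00005),
    DbuEvent("log_ingestion", 0.00001),
]

RATE_PER_DBU = 0.07  # foundation model serving rate quoted in the text
REQUESTS_PER_MONTH = 10_000_000  # assumed traffic volume

cost_per_request = request_cost_usd(EVENTS, RATE_PER_DBU)
monthly_cost = cost_per_request * REQUESTS_PER_MONTH
print(f"per request: ${cost_per_request:.8f}, per month: ${monthly_cost:,.2f}")
```

Splitting the request into named events keeps each cost driver visible, so a team can see which step dominates before changing plan or cloud provider.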

Integrated Compute Economics

In standard deployments, organizations manage two cost streams: the payment to the cloud provider (AWS/Azure/GCP) for raw VM instances and the payment to Databricks for the DBU management fee. Databricks Serverless bundles these costs into a single rate. While this simplifies billing, the bundled rate typically includes a premium over raw infrastructure costs to cover platform management and orchestration [Source: Unravel Data Analysis].
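A rough way to see the bundling premium is to compare the two billing shapes side by side. This is a minimal sketch under stated assumptions: the VM price, the DBUs consumed per hour, and the classic DBU rate are illustrative values, and the $0.70 bundled rate is borrowed from the serverless SQL figure quoted earlier purely as an example.

```python
def classic_hourly_cost(vm_usd_per_hour: float, dbus_per_hour: float, dbu_rate: float) -> float:
    """Two cost streams: the cloud provider's VM bill plus the Databricks DBU fee."""
    return vm_usd_per_hour + dbus_per_hour * dbu_rate


def serverless_hourly_cost(dbus_per_hour: float, bundled_dbu_rate: float) -> float:
    """One cost stream: a single bundled rate that already includes infrastructure."""
    return dbus_per_hour * bundled_dbu_rate


def bundling_premium(classic_usd: float, serverless_usd: float) -> float:
    """Relative premium of the bundled rate over the classic two-stream cost."""
    return (serverless_usd - classic_usd) / classic_usd


# All inputs below are hypothetical and chosen only to make the arithmetic visible.
classic = classic_hourly_cost(vm_usd_per_hour=1.00, dbus_per_hour=4.0, dbu_rate=0.20)
serverless = serverless_hourly_cost(dbus_per_hour=4.0, bundled_dbu_rate=0.70)
premium = bundling_premium(classic, serverless)
print(f"classic ${classic:.2f}/h, serverless ${serverless:.2f}/h, premium {premium:.0%}")
```

In practice the DBUs consumed per hour can differ between classic and serverless compute, so the comparison should use measured consumption for each mode rather than one shared figure.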

Where Mosaic AI Gateway Fits Into Databricks Pricing

Mosaic AI Gateway costs are realized through the compute resources required to process requests. Every request passing through the gateway consumes compute time on a Model Serving endpoint.

The primary cost drivers include:

- Request Processing: The DBU burn associated with the gateway's logic for routing and load balancing.

- Observability Overhead: The compute and storage cost of writing request/response payloads to Inference Tables.

- Governance Checkpoint: The latency and compute cost added by Unity Catalog permission checks for every model invocation.

Mosaic AI Gateway Pricing Breakdown

The financial impact of using the gateway depends on whether the traffic is routed to external providers or internal hosted models.

External Model Routing

When the gateway routes traffic to external providers like OpenAI or Anthropic, organizations pay the provider's token fees directly. Additionally, Databricks charges for the gateway features (routing, tracking, and logging) through DBUs.

- Cost Vector: Traffic processed by the gateway incurs DBU consumption based on throughput.

- Infrastructure Requirement: Even for external routing, a serving endpoint must be "Active." In high-concurrency environments, this may require provisioned capacity that prevents full scale-to-zero.

Internal Model Serving (Mosaic AI Model Serving)

For models hosted within Databricks, costs are generally split into two modes:

- Pay-Per-Token: Frequently used for developmental testing or intermittent workloads. Proprietary models are billed at specific DBU rates per 1M tokens (e.g., ~$94 per 1M tokens for certain high-tier models) [Source: Databricks Proprietary Serving].

- Provisioned Throughput: The standard for production performance. This mode requires a minimum concurrency commitment, with rates often starting at $0.07 per DBU, and you pay for the reserved capacity around the clock. It ensures availability but can leave you paying for idle capacity when traffic fluctuates significantly.
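The choice between the two modes comes down to a break-even token volume. The sketch below estimates it, assuming a hypothetical pay-per-token price and a hypothetical provisioned footprint of 50 DBUs per hour; only the $0.07 per DBU rate is taken from the text, and 730 is used as an approximate number of hours in a month.

```python
HOURS_PER_MONTH = 730  # approximate average month


def pay_per_token_monthly(tokens: float, usd_per_million_tokens: float) -> float:
    """Monthly cost when every token is billed individually."""
    return tokens / 1_000_000 * usd_per_million_tokens


def provisioned_monthly(dbus_per_hour: float, rate_per_dbu: float) -> float:
    """Monthly cost of reserved capacity, paid whether or not traffic arrives."""
    return dbus_per_hour * rate_per_dbu * HOURS_PER_MONTH


def break_even_tokens(provisioned_usd: float, usd_per_million_tokens: float) -> float:
    """Token volume above which provisioned throughput becomes the cheaper mode."""
    return provisioned_usd / usd_per_million_tokens * 1_000_000


# Hypothetical inputs: 50 DBU/h reserved, $2.00 per 1M tokens on pay-per-token.
provisioned = provisioned_monthly(dbus_per_hour=50.0, rate_per_dbu=0.07)
threshold = break_even_tokens(provisioned, usd_per_million_tokens=2.00)
print(f"provisioned: ${provisioned:,.2f}/month, break-even: {threshold:,.0f} tokens/month")
```

Below the threshold the reserved capacity is partly idle spend; above it, pay-per-token is the more expensive option. The sketch ignores throughput limits, which in a real deployment may force provisioned capacity regardless of cost.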

Associated Ecosystem Costs

The gateway itself is one component of the total cost of ownership. The supporting infrastructure often drives a significant portion of the monthly bill.

Unity Catalog Dependency

Mosaic AI Gateway relies on Unity Catalog for governance. Inference logs are stored in Delta Tables, which incur:

- Storage Costs: Standard cloud object storage fees.

- Inference Table Processing: Compute costs for the background jobs that ingest logs from the gateway.

- Analysis Costs: Querying these logs for audit or billing requires Databricks SQL. At $0.70 per DBU for Serverless SQL, frequent observability queries add to overall platform spend.
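The three log-related cost items above can be combined into a single monthly estimate. In this sketch the request volume, payload size, storage price, and DBU quantities are all assumptions for illustration; the $0.70 Serverless SQL rate is the one quoted in the list, and the ingestion rate is a placeholder.

```python
def monthly_log_storage_gb(requests_per_month: int, avg_payload_bytes: int) -> float:
    """Approximate raw log volume written per month, in gigabytes."""
    return requests_per_month * avg_payload_bytes / 1e9


def observability_costs(
    requests_per_month: int,
    avg_payload_bytes: int,
    storage_usd_per_gb: float,
    ingestion_dbus: float,
    ingestion_rate: float,
    query_dbus: float,
    sql_rate: float = 0.70,  # Serverless SQL rate quoted in the text
) -> dict[str, float]:
    """Break the monthly logging bill into storage, ingestion, and analysis."""
    storage = monthly_log_storage_gb(requests_per_month, avg_payload_bytes) * storage_usd_per_gb
    ingestion = ingestion_dbus * ingestion_rate
    analysis = query_dbus * sql_rate
    return {
        "storage": storage,
        "ingestion": ingestion,
        "analysis": analysis,
        "total": storage + ingestion + analysis,
    }


# Hypothetical workload: 10M requests/month, 4 KB average request+response payload.
costs = observability_costs(
    requests_per_month=10_000_000,
    avg_payload_bytes=4_000,
    storage_usd_per_gb=0.023,  # assumed object storage price per GB-month
    ingestion_dbus=100.0,      # assumed DBUs used by background ingestion jobs
    ingestion_rate=0.07,       # assumed rate for ingestion compute
    query_dbus=200.0,          # assumed DBUs used by audit and billing queries
)
for item, usd in costs.items():
    print(f"{item:>9}: ${usd:,.2f}")
```

With these placeholder inputs, storage is the smallest line item and query compute the largest, which is why query frequency deserves attention when sizing an observability budget.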

Guardrails and Data Scanners

Enabling AI Guardrails—such as PII masking or toxicity filters—requires additional compute. Each guardrail runs a model or regex scanner on the request/response payload.

- Latency Impact: Internal benchmarks suggest P95 latency can increase by 50ms to 200ms depending on guardrail complexity.

- Compute Impact: Guardrail execution utilizes the Model Serving compute, which consumes DBUs at the standard rate.

Common Cost Challenges Teams Face with Databricks AI Pricing

- Variable DBU Consumption: Auto-scaling triggers are reactive. Sudden bursts in traffic can provision additional compute nodes that remain active for a minimum duration, impacting cost efficiency during short spikes.

- Attribution Complexity: DBUs are often aggregated at the workspace level. Isolating specific "AI Gateway" costs from broader data engineering workloads typically requires custom tagging and analysis of system tables.

- Ecosystem Dependencies: Utilizing the gateway ties logging and governance to the Databricks architecture (Unity Catalog, Delta Tables). Migrating to a different inference stack later requires re-implementing these governance layers.
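The attribution problem noted above usually reduces to grouping usage records by a custom tag. The sketch below shows the idea on in-memory records. The record layout, SKU names, and tag keys are illustrative assumptions rather than a documented schema; in a real workspace the rows would come from the billing system tables.

```python
from collections import defaultdict

# Hypothetical usage records; field names and values are assumptions for illustration.
USAGE_ROWS = [
    {"sku": "MODEL_SERVING", "dbus": 120.0, "tags": {"team": "search", "component": "ai-gateway"}},
    {"sku": "JOBS_COMPUTE", "dbus": 900.0, "tags": {"team": "data-eng"}},
    {"sku": "MODEL_SERVING", "dbus": 80.0, "tags": {"team": "support", "component": "ai-gateway"}},
    {"sku": "SERVERLESS_SQL", "dbus": 40.0, "tags": {"component": "ai-gateway"}},
]


def dbus_by_tag(rows: list[dict], tag_key: str, untagged: str = "untagged") -> dict[str, float]:
    """Total DBUs per value of one tag; rows missing the tag fall into `untagged`."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row["tags"].get(tag_key, untagged)] += row["dbus"]
    return dict(totals)


by_component = dbus_by_tag(USAGE_ROWS, "component")
gateway_share = by_component["ai-gateway"] / sum(by_component.values())
print(by_component)
print(f"AI gateway share of workspace DBUs: {gateway_share:.1%}")
```

The `untagged` bucket is the useful signal here: any large value in it marks spend that cannot be attributed until the underlying endpoints or jobs are tagged consistently.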

Why Some Teams Look Beyond Databricks Mosaic AI Gateway

As AI deployments move from proof-of-concept to high-scale production, DBU-based pricing applied to every token can erode unit economics. Engineering teams often find that the comprehensive nature of the Databricks platform, while effective for data warehousing, adds architectural weight for simple application-side AI routing.

Additionally, the requirement to operate within the Databricks control plane may limit the adoption of specialized hardware (e.g., AWS Trainium/Inferentia) or alternative deployment strategies (e.g., on-premise Kubernetes) that can lower TCO.

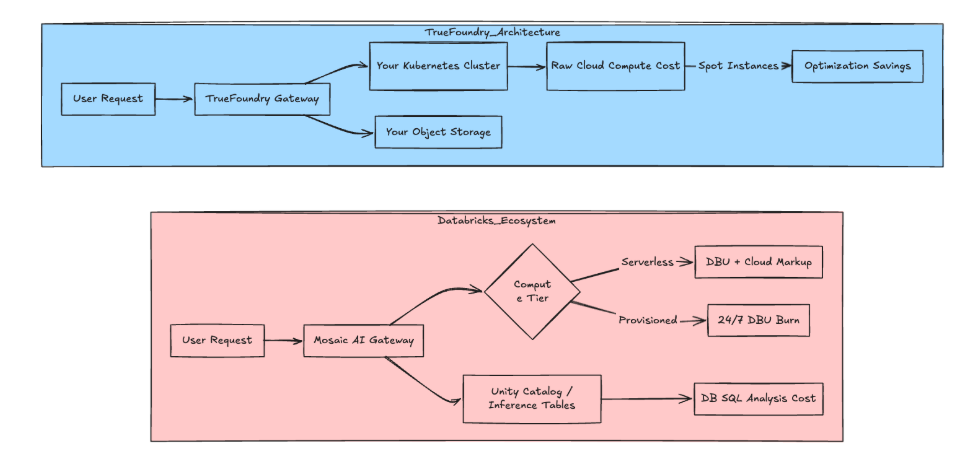

How TrueFoundry Approaches AI Infrastructure

TrueFoundry provides an alternative architecture designed for engineering teams who prioritize cost transparency and infrastructure control.

- Kubernetes-Native: TrueFoundry deploys directly into the customer's cloud account (AWS, Azure, GCP). There is no "management DBU" added on top of raw instance costs.

- Direct Routing: Unlike platform-bundled gateways, TrueFoundry does not charge a per-token markup for external routing.

- Infrastructure Optimization: The platform supports Spot instances for inference and granular scale-to-zero configurations. In many production scenarios, this approach reduces idle compute costs compared to provisioned throughput models [Source: TrueFoundry vs Databricks].

Table 1: Databricks Mosaic AI Gateway vs TrueFoundry: Cost Structure Comparison

Fig 1: Architecture and Cost Flow Comparison

Ready to Unbundle Your AI Stack?

While Databricks Mosaic AI Gateway offers integration benefits for teams already embedded in the Lakehouse, the DBU-based pricing model can lead to variable costs at scale. TrueFoundry offers a high-performance, cost-transparent alternative that allows engineers to own their infrastructure without the platform premium.


FAQs

How much does Databricks cost per month?

Monthly costs are highly variable and consumption-dependent. While entry-level usage is often nominal for small teams, enterprise-scale production workloads—driven by continuous availability requirements and extensive governance logging—can result in substantial monthly operational expenditures as DBU consumption scales linearly with throughput.

How does Databricks Mosaic AI pricing work?

It is consumption-based via the Databricks Unit (DBU) model. You are billed for the compute time of the Model Serving endpoint, the storage of inference logs in Delta Tables, and the compute resources required to analyze those logs via Databricks SQL.

How is TrueFoundry more cost-effective than Databricks Mosaic AI?

TrueFoundry operates on a bring-your-own-cloud model, eliminating the DBU management premium found in bundled platforms. By deploying directly to your Kubernetes clusters and enabling aggressive optimization strategies like Spot instances and granular scale-to-zero, it aligns serving costs directly with raw infrastructure prices.
