LangChain vs LangGraph: Which Is Best for You?

When it comes to building applications powered by large language models (LLMs), developers now have more choices than ever. Two of the most talked-about frameworks are LangChain and LangGraph. While both aim to simplify the process of connecting LLMs with tools, data, and workflows, they take very different approaches. LangChain has quickly become one of the most popular libraries for creating AI-driven applications, offering a wide ecosystem of integrations and abstractions. On the other hand, LangGraph—built on top of LangChain—focuses on stateful, agent-like systems, using a graph-based execution model to handle complex reasoning and multi-step interactions.

If you’re trying to decide between LangChain and LangGraph, it’s important to understand their strengths, limitations, and ideal use cases. This comparison will help you evaluate which framework best fits your project, whether you’re building simple LLM apps, robust AI agents, or scalable enterprise solutions.

What Is LangChain?

LangChain is an open-source framework for designing LLM-powered AI applications. It gives developers a library of modular components in Python and JavaScript that connect language models with external tools and data sources, and it provides a consistent interface for chains of tasks, prompt management, and memory handling.

LangChain acts as a bridge between raw LLM capabilities and real-world functionality. It helps developers create workflows called “chains”, where each step generates text, queries a database, retrieves documents, or invokes an external API, all in a logical sequence. This modular structure not only speeds up prototyping but also promotes clarity and reuse, which is helpful whether you’re creating chatbots, summarizing documents, generating content, or automating workflows.
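To make the idea of a chain concrete, here is a minimal sketch in plain Python. It deliberately does not use LangChain's API: `retrieve`, `build_prompt`, `fake_llm`, and `run_chain` are hypothetical stand-ins, and the only point is to show each step's output feeding the next step in a fixed order.

```python
from functools import reduce
from typing import Any, Callable, List

Step = Callable[[Any], Any]

def retrieve(question: str) -> dict:
    # Hypothetical retriever: a tiny in-memory lookup instead of a real store.
    docs = {"langchain": "LangChain launched in October 2022."}
    hits = [text for key, text in docs.items() if key in question.lower()]
    return {"question": question, "context": " ".join(hits)}

def build_prompt(data: dict) -> str:
    # Turn the retrieved context and the question into a single prompt string.
    return f"Context: {data['context']}\nQuestion: {data['question']}"

def fake_llm(prompt: str) -> str:
    # Hypothetical model call: returns a deterministic placeholder answer.
    return f"[answer based on {len(prompt)} prompt characters]"

def run_chain(steps: List[Step], value: Any) -> Any:
    # Feed each step's output into the next step, in order.
    return reduce(lambda acc, step: step(acc), steps, value)

chain = [retrieve, build_prompt, fake_llm]
answer = run_chain(chain, "When did LangChain launch?")
print(answer)
```

Because every step is an ordinary function, swapping the retriever or the model means replacing a single list entry, which is the kind of reuse the modular structure is meant to encourage.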

Originally launched in October 2022, LangChain quickly evolved into a vibrant, community-driven project. It has since grown to support hundreds of tool integrations and model providers, making it easy to switch between OpenAI, Hugging Face, Anthropic, IBM watsonx, and more. LangChain offers a structured way to bring language models into practical applications. It abstracts complexity and streamlines development, making it a go-to choice for teams building capable, LLM-based systems.

What Is LangGraph?

LangGraph is an open-source framework from the LangChain team that helps developers build smarter and more adaptable AI agent workflows. Instead of running tasks in a straight line like a traditional chain, LangGraph organizes them into a graph, where each “node” represents a task and the “edges” define how those tasks connect. This design makes it possible to create flows that can branch, loop, and maintain state, giving agents the flexibility to handle more complex scenarios.
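The node-and-edge model can be sketched in a few lines of plain Python. This is not LangGraph's API: `NODES`, `EDGES`, `run_graph`, and the toy approval rule are assumptions made for illustration. The sketch shows a shared state, a conditional edge, and a loop back to an earlier node.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]
END = "END"  # sentinel node name meaning "stop"

def draft(state: State) -> State:
    # Each pass produces a new draft and counts the attempt.
    attempts = state["attempts"] + 1
    return {"attempts": attempts, "draft": f"draft v{attempts}"}

def review(state: State) -> State:
    # Toy rule for the example: approve on the third attempt.
    return {"approved": state["attempts"] >= 3}

def after_review(state: State) -> str:
    # Conditional edge: loop back to "draft" until the review approves.
    return END if state["approved"] else "draft"

NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
EDGES: Dict[str, Callable[[State], str]] = {
    "draft": lambda state: "review",  # unconditional edge
    "review": after_review,           # branching edge
}

def run_graph(start: str, state: State, max_steps: int = 20) -> State:
    current = start
    for _ in range(max_steps):
        if current == END:
            return state
        # Merge the node's partial update into the shared state.
        state = {**state, **NODES[current](state)}
        current = EDGES[current](state)
    raise RuntimeError("step limit reached without hitting END")

final = run_graph("draft", {"attempts": 0, "approved": False})
print(final)
```

The `max_steps` guard is a design choice for the sketch: any workflow that can loop needs some bound so a condition that never becomes true cannot run forever.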

One of LangGraph’s key strengths is that it supports long-running, state-aware agents. If an agent encounters an error or needs to pause, it can pick up exactly where it left off. You can also build in human checkpoints, so a person can review or adjust an action before the agent moves forward. In addition, LangGraph can remember past interactions and context over time, which is essential for creating agents that learn and adapt.
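Pausing for a human and resuming later comes down to persisting the workflow state between runs. The sketch below illustrates that idea with a JSON file and the standard library only. It is not how LangGraph implements checkpoints, and the step names, `NEEDS_APPROVAL`, `save`, and `run` are hypothetical.

```python
import json
import os
import tempfile

STEPS = ["gather", "propose_action", "execute"]  # hypothetical step names
NEEDS_APPROVAL = {"execute"}                     # steps a person must approve

def save(path: str, state: dict) -> None:
    with open(path, "w") as f:
        json.dump(state, f)

def run(checkpoint_path: str, approved: bool = False) -> dict:
    # Load the saved state if one exists, otherwise start fresh.
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)
    else:
        state = {"next": 0, "log": [], "status": "running"}

    while state["next"] < len(STEPS):
        step = STEPS[state["next"]]
        if step in NEEDS_APPROVAL and not approved:
            # Pause here; a later call resumes at this same step.
            state["status"] = "waiting_for_human"
            save(checkpoint_path, state)
            return state
        state["log"].append(step)  # stand-in for doing the real work
        state["next"] += 1
        state["status"] = "running"
        save(checkpoint_path, state)  # checkpoint after each completed step

    state["status"] = "done"
    save(checkpoint_path, state)
    return state

path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
first = run(path)                  # stops before "execute"
second = run(path, approved=True)  # resumes where the first run paused
print(first["status"], second["status"], second["log"])
```

The second call does not repeat `gather` or `propose_action`, because the index of the next step was saved along with the log.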

It also comes with strong production features. Developers can monitor workflows using tools like LangSmith, which provide visual debugging, detailed logs, and full visibility into how an agent makes decisions. LangGraph can run locally or be deployed on managed platforms like LangGraph Platform and Studio. LangGraph is built for reliability, flexibility, and transparency, making it a solid choice for complex AI systems that go beyond simple step-by-step automation.

LangChain vs LangGraph

LangChain is built to make complex LLM-powered workflows feel simple and intuitive. It excels when your tasks follow a predictable, sequential pattern, such as fetching data, summarizing it, and answering questions. Its modular design offers ready-made building blocks like chains, memory, agents, and tools, which makes prototyping fast and coding straightforward. If you want to quickly assemble a workflow that sticks to a known path, LangChain is your go-to.

On the other hand, LangGraph gives you power and flexibility where things start to break or loop. Instead of linear sequences, you design graph-based workflows with nodes, edges, explicit state, retries, branching logic, and even human-in-the-loop checkpoints. It shines when your application needs to adapt, backtrack, loop, or remember long-running context: think multi-stage agents, complex decision trees, or virtual assistants that need to reason over time.

When to Use LangChain

LangChain is the right choice when you want to quickly create LLM-powered applications that follow a predictable, step-by-step process. It works best for scenarios where your workflow moves in a clear sequence from start to finish without needing frequent backtracking or branching. If you are building a question-answering bot, summarization tool, content generator, or any application that takes an input, processes it, and returns an output in one pass, LangChain offers all the components you need in a straightforward way.

One of LangChain’s biggest strengths is its extensive library of pre-built integrations. It supports a wide range of LLM providers, vector databases, APIs, and third-party tools, which means you can connect different services without building everything from scratch. This makes it a great option for rapid prototyping, where speed to market is important. Developers can assemble and test ideas quickly, then refine them without rewriting core logic.

LangChain also shines in educational, research, and proof-of-concept environments. Its modular structure makes it easy to experiment with different model prompts, memory configurations, and retrieval strategies. The built-in memory components are helpful for short-term context retention, such as maintaining conversation history within a chat session.

If your application does not require complex state management, multi-step reasoning loops, or adaptive branching logic, LangChain can keep your development process lean and maintainable. It’s a good fit for projects where stability, simplicity, and rapid iteration matter more than handling highly dynamic or long-running agent workflows. Choose LangChain when your focus is on building clear, structured, and well-integrated LLM workflows with minimal setup and maximum flexibility.

When to Use LangGraph

LangGraph is best suited for building AI agents and applications that need more than a straight, linear flow. If your system must adapt to changing inputs, revisit earlier steps, or handle multi-branch decisions, LangGraph gives you the structure to make that possible. It works especially well for agent-based architectures where the application may need to pause, wait for human input, and then resume from the exact point it left off.

You should consider LangGraph when state management is a core requirement. Unlike LangChain’s implicit state handling, LangGraph provides explicit control over the application’s state, making it easier to track and update data as the workflow evolves. This is critical for long-running processes, complex decision trees, or multi-stage tasks where preserving context over time directly impacts accuracy and reliability.

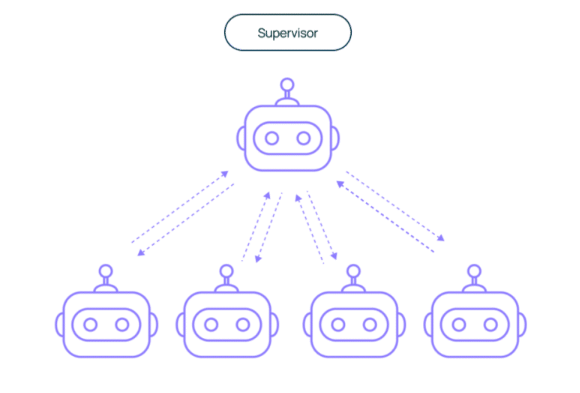

LangGraph also excels in collaborative and multi-agent environments. For example, if you are building a virtual assistant that coordinates between multiple AI agents, each with a specialized role, LangGraph allows you to orchestrate their interactions within a single, connected workflow. With graph-based execution, you can loop back to earlier stages, retry failed steps, and handle alternative paths without patching together workarounds.

For production-grade deployments, LangGraph’s integration with tools like LangSmith and its own LangGraph Studio provides visibility into every step an agent takes. Developers can debug, log, and monitor agents in real time, ensuring transparency and control.

Use LangGraph when you need workflows that are dynamic, adaptive, and resilient. It’s the better fit for complex AI agents, real-time decision-making, and applications that must maintain memory and context across sessions. If your project involves intricate branching logic or requires handling the unexpected with grace, LangGraph gives you the right architecture to make it happen.

LangChain vs LangGraph – Which Is Best?

Both LangChain and LangGraph are excellent tools, but they solve different problems. Deciding which is best for you comes down to how complex your workflows are and what kind of control you need over them.

When LangChain Might Be the Better Choice

LangChain is perfect if your application follows a clear, step-by-step process. It works well when the workflow is predictable, without frequent branching or looping back. For example, you might use LangChain to:

- Build a chatbot that answers questions using a single prompt-response cycle

- Create a summarization or content-generation tool

- Implement Retrieval-Augmented Generation (RAG) for quick information lookup

Its main strengths are speed, simplicity, and an extensive library of integrations. This makes LangChain especially appealing for prototyping, small-to-medium projects, and educational use, where getting something working quickly matters more than handling edge cases or complex branching.

When LangGraph Stands Out

LangGraph shines in situations where the application must adapt, backtrack, or run over a longer period while keeping track of state. It’s built for agent-style workflows that can:

- Loop through steps until a condition is met

- Pause and resume exactly where they left off

- Use human checkpoints for verification or adjustments

This makes LangGraph the stronger choice for multi-agent systems, complex decision-making, and production-grade deployments where flexibility and resilience are critical.

How to Decide

If you’re still unsure, consider these guiding points:

- Workflow Complexity: If it’s mostly linear, start with LangChain. If it has loops, branching, and adaptive logic, go with LangGraph.

- State Requirements: If you only need short-term memory for a single run, LangChain will do. If you need persistent, controllable state, LangGraph is better.

- Long-Term Plans: If your application may grow into a more complex system later, LangGraph can save you a migration step.
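The three guiding points above can be written down as a small decision helper. This is only a sketch of the article's rule of thumb; the function name and its boolean parameters are hypothetical, and a real decision will involve more factors than three flags.

```python
def recommend(has_loops_or_branching: bool,
              needs_persistent_state: bool,
              likely_to_grow_complex: bool) -> str:
    # Workflow complexity and state requirements decide first.
    if has_loops_or_branching or needs_persistent_state:
        return "LangGraph"
    # A linear workflow today that is expected to grow later.
    if likely_to_grow_complex:
        return "LangGraph (to avoid a later migration)"
    # Mostly linear, short-term memory only, no growth expected.
    return "LangChain"

print(recommend(False, False, False))
print(recommend(True, False, False))
print(recommend(False, False, True))
```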

Key takeaway:

LangChain is the fast, approachable option for simple to moderately complex workflows. LangGraph is the robust, flexible choice for high-complexity, dynamic AI systems. Both are part of the same ecosystem, so you can start with one and transition to the other if your needs change. Your choice should align with your current project scope and your future scalability goals.

Why AI Gateways Matter for LangChain/LangGraph Users

When you build with LangChain or LangGraph, you’re creating powerful LLM-powered workflows. But getting them to run reliably, cost-effectively, and securely in production requires more than just orchestration. This is where an AI Gateway comes in. It acts as the control layer between your application and the models it uses, ensuring smooth routing, cost tracking, prompt management, and security.

Building a workflow in LangChain or LangGraph is only the first step. Once you move to production, managing the operational side of LLM usage becomes just as important as designing the workflow itself: routing each request to the most suitable model, monitoring performance, and keeping your applications running smoothly.

Without this layer, it’s easy to run into issues like unpredictable latency, rising costs, or inconsistent prompt usage across different parts of your system. AI Gateways provide the visibility and control needed to maintain performance, optimize spending, and keep your LLM endpoints secure.
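To illustrate what such a control layer does, here is a minimal sketch of fallback routing with latency tracking in plain Python. It is not TrueFoundry's API or any real gateway's implementation; `flaky_model`, `backup_model`, and `route` are hypothetical, and the "models" are local functions standing in for provider calls.

```python
import time
from typing import Callable, List, Tuple

Provider = Tuple[str, Callable[[str], str]]

def flaky_model(prompt: str) -> str:
    # Hypothetical primary provider that always fails in this example.
    raise TimeoutError("primary model timed out")

def backup_model(prompt: str) -> str:
    # Hypothetical fallback provider.
    return f"backup reply to: {prompt}"

def route(prompt: str, providers: List[Provider]) -> dict:
    errors = []
    # Try providers in priority order and fall back on any failure.
    for name, call in providers:
        started = time.perf_counter()
        try:
            reply = call(prompt)
        except Exception as exc:
            errors.append(f"{name}: {exc}")
            continue
        latency_ms = (time.perf_counter() - started) * 1000
        return {"model": name, "reply": reply,
                "latency_ms": latency_ms, "errors": errors}
    raise RuntimeError("all providers failed: " + "; ".join(errors))

result = route("hello", [("primary", flaky_model), ("backup", backup_model)])
print(result["model"], result["errors"])
```

Keeping this logic in one place, rather than inside each chain or graph node, is what lets routing rules, cost tracking, and error handling stay consistent across an application.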

How TrueFoundry Complements LangChain and LangGraph

TrueFoundry AI Gateway extends the capabilities of your LLM workflows by offering:

Centralized LLM Management: Connect and manage multiple model providers such as OpenAI, Anthropic, and Hugging Face from one dashboard.

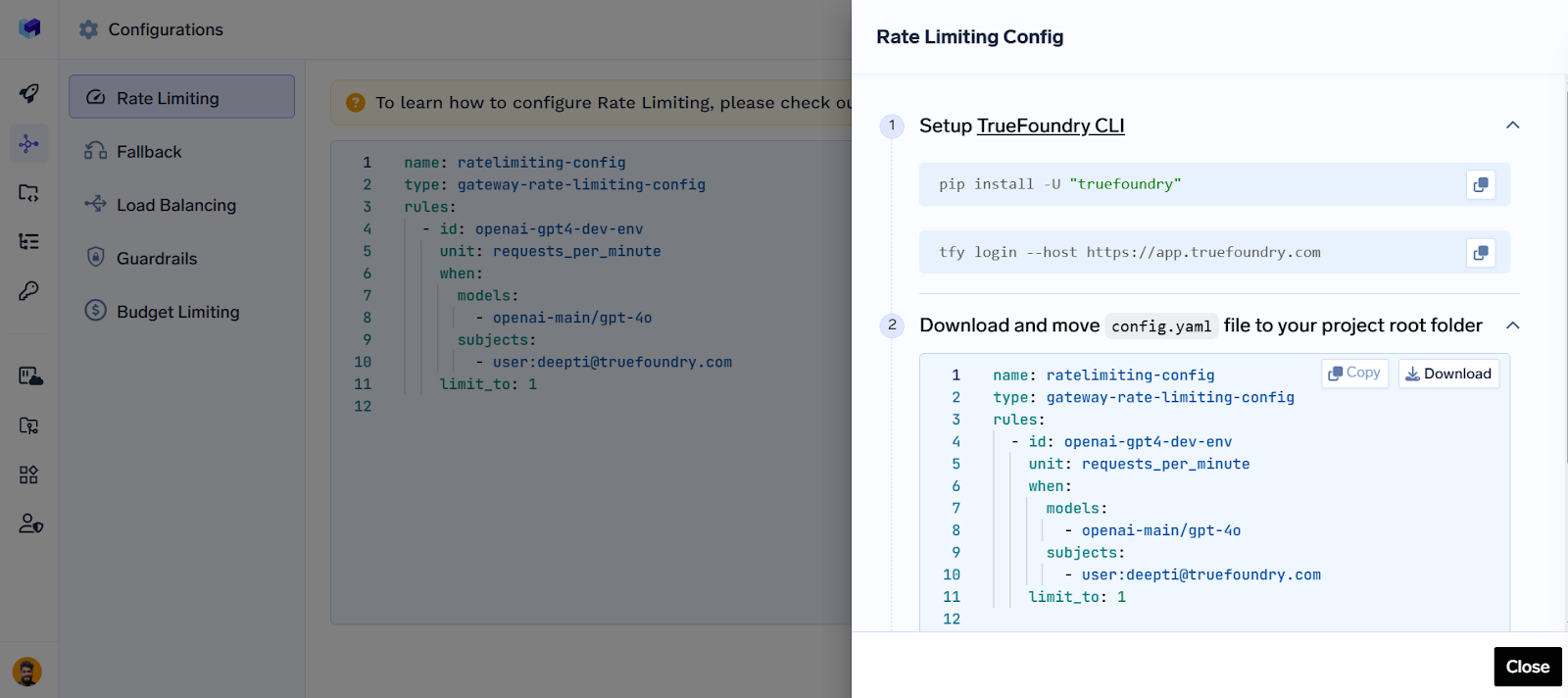

Routing, Rate Limiting, Fallback, Guardrails & Load Balancing: Optimize request flow, control usage, ensure safe outputs, switch to backups on failure, and balance traffic across models.

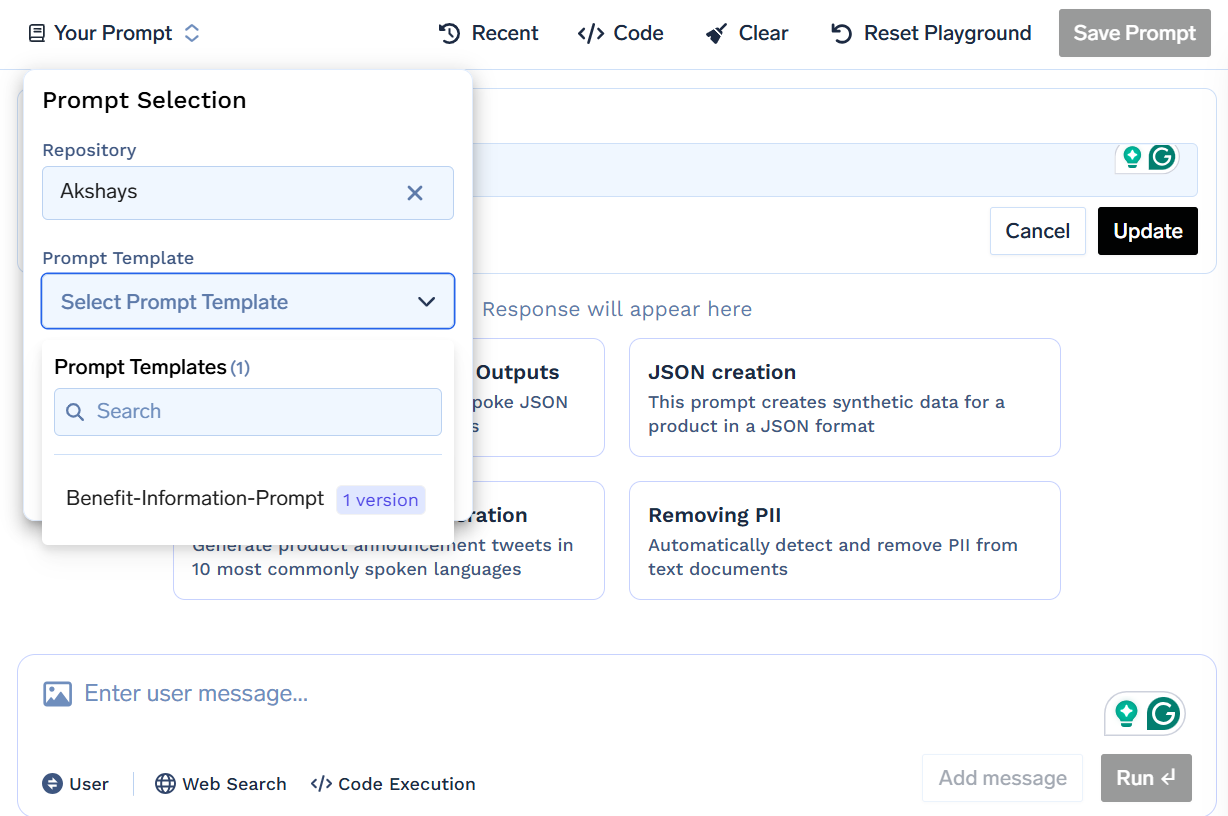

Prompt Management: Version, test, and roll back prompts with zero disruption to your live system.

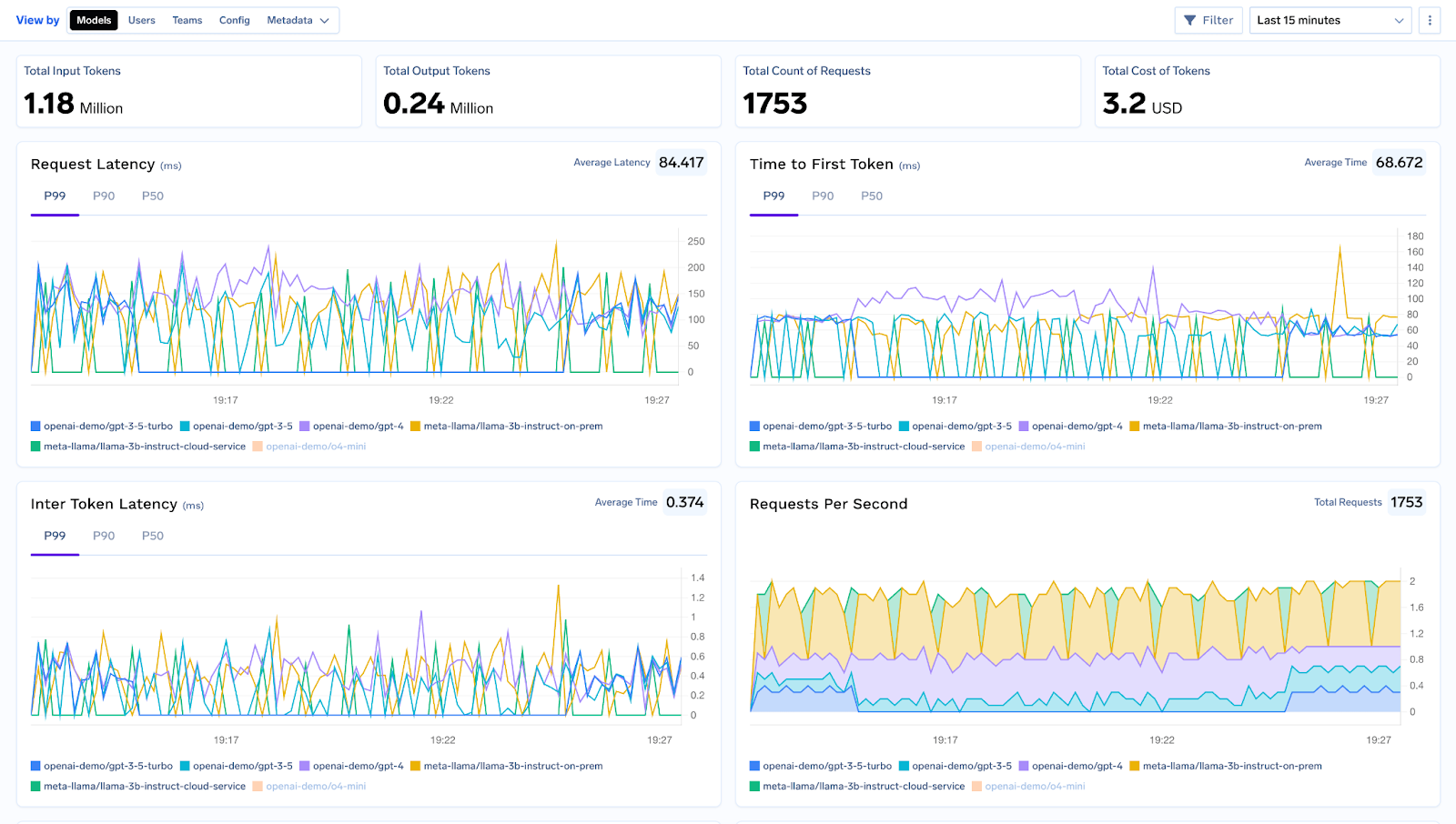

Observability, Tracing & Debugging: Monitor latency, token usage, and error rates in real time, and trace each request through your workflow for easier debugging and optimization.

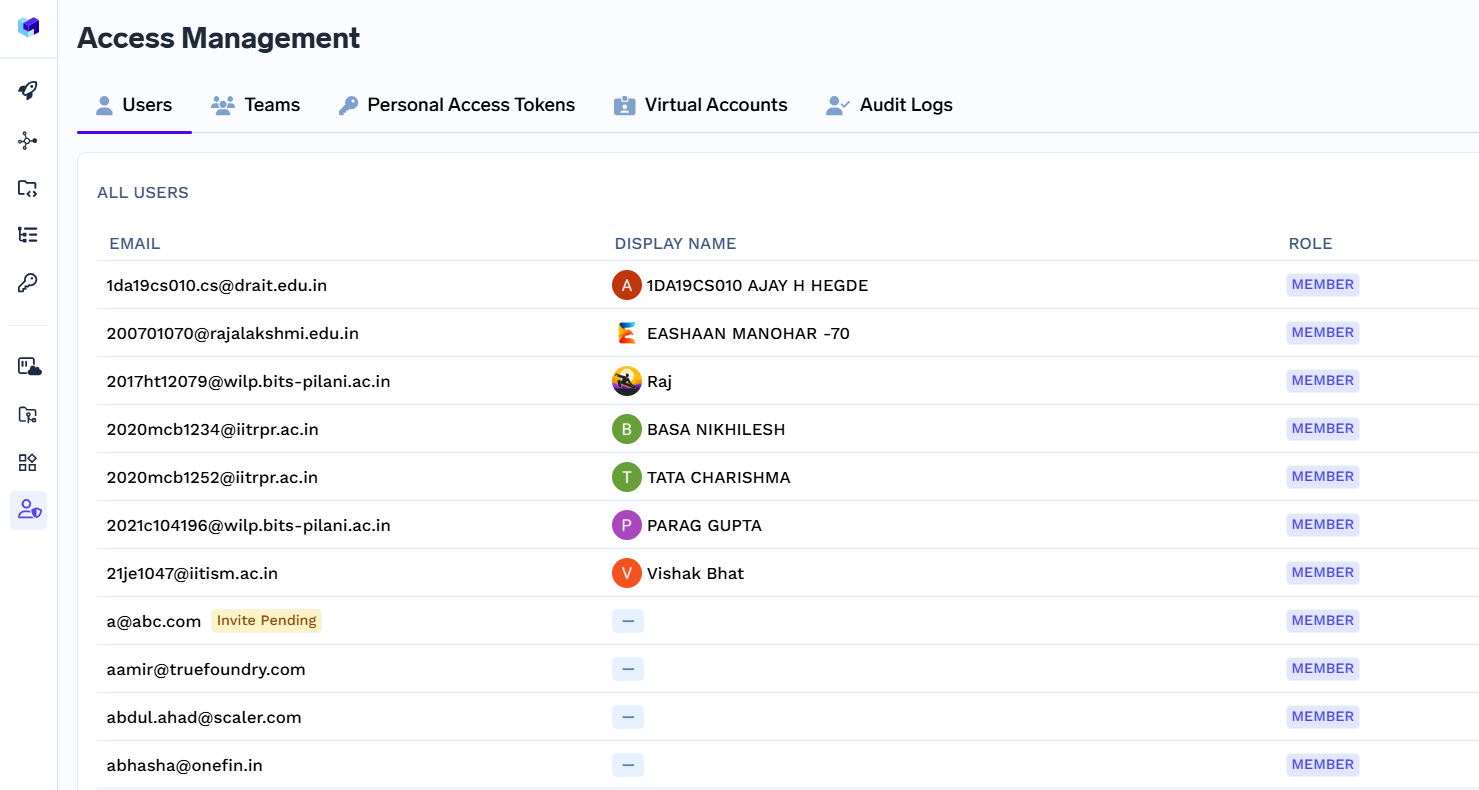

Access Control, RBAC & Compliance: Define who can access which resources using role-based access control, and maintain enterprise-level security and governance.

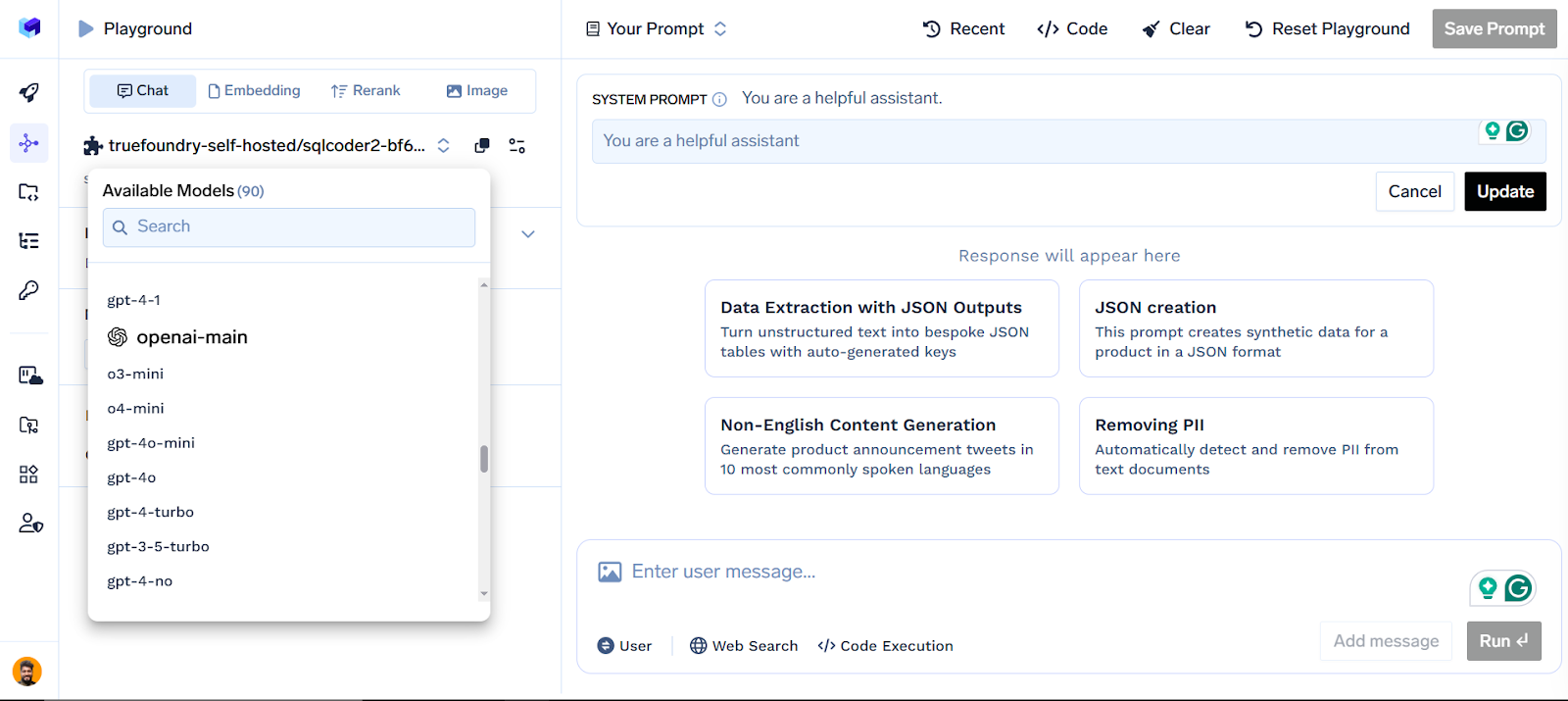

Why TrueFoundry Stands Out

TrueFoundry supports over 250 LLMs out of the box, giving you maximum flexibility. It’s designed for production-grade performance, offering caching, rate limiting, and advanced analytics. Whether you are running a simple LangChain sequence or a complex LangGraph agent network, it integrates seamlessly.

With enterprise-ready compliance, data governance, and security features, TrueFoundry ensures your LLM workflows are not only functional but also robust, scalable, and secure.

Conclusion

Both LangChain and LangGraph are powerful tools for building LLM-powered applications, each excelling in different scenarios. LangChain is ideal for simpler, linear workflows that benefit from rapid prototyping and extensive integrations, while LangGraph is designed for complex, adaptive, and stateful agent systems. Choosing the right one depends on your project’s complexity and long-term goals. Regardless of your choice, pairing these frameworks with TrueFoundry as your AI Gateway ensures your workflows are secure, efficient, and production-ready. With the right combination, you can move from concept to robust, scalable AI solutions with confidence.
