MCP vs API: Which Is Best?

AI systems are evolving fast, but getting them to work seamlessly with real-world tools and data is still a major hurdle. Model Context Protocol (MCP) is a new standard that promises to make AI integration smoother and more secure by giving models structured access to external data and services.

Sounds familiar?

That’s because APIs have been doing something similar for decades, acting as the backbone of how software systems talk to each other. At first glance, MCP and APIs might seem like two versions of the same idea. But in reality, they operate at different layers and solve different problems.

In this article, we’ll break down what MCP actually is, how it compares to APIs, where each shines, and what it all means for developers, enterprises, and the future of AI integration.

What is MCP?

The Model Context Protocol (MCP) is an open standard that allows AI models to connect with external tools, data sources, and services in a safe and structured way. Instead of hardcoding integrations or relying on custom connectors, MCP defines a consistent protocol for exchanging context between a model and its environment.

This makes it easier for developers to extend model capabilities, ensure secure access to sensitive data, and standardize how AI interacts with external systems.

Key Features of MCP

- Standardized Communication: Provides a common language for AI models and external services, reducing custom integration overhead.

- Security and Governance: Uses controlled permissions and protocols to ensure safe access to data and tools.

- Extensibility: Allows developers to plug in new tools or datasets without modifying the core model.

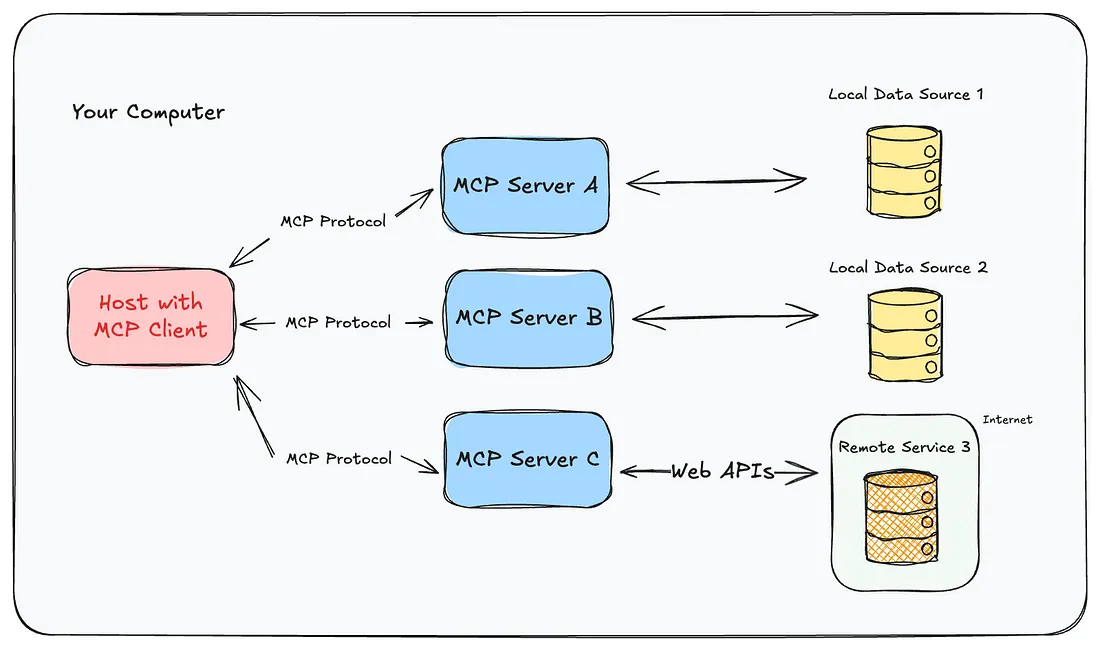

MCP Architecture

The architecture of MCP is built to balance flexibility with strict security controls. It follows a layered design that separates the model, external services, and communication channel. This separation ensures clear responsibilities, reduces complexity, and makes it easier to scale or extend the system without breaking existing workflows.

- Client Layer: The host application that runs the AI model or agent. It opens an MCP client connection to each server and initiates requests.

- Server Layer: Programs that expose tools, data, or other functionality, often by wrapping existing APIs or databases.

- Transport Layer: The communication channel. Messages are encoded as JSON-RPC 2.0 and carried over stdio for local servers or HTTP for remote ones, giving structured, reliable message exchange.

- Permission Controls: Rules that govern what the model can access, protecting sensitive or private resources.

This layered approach ensures that models can interact with external environments while remaining secure, scalable, and easy to extend.
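To make the message exchange concrete, here is a minimal sketch of what a client might put on the wire. The `tools/list` and `tools/call` method names come from the MCP specification; the `get_weather` tool, its `city` argument, and the `make_request` helper are hypothetical and exist only for illustration.

```python
import json
from itertools import count

_ids = count(1)  # each request in a session needs its own id


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# First ask the server which tools it exposes, then call one of them.
list_req = make_request("tools/list")
call_req = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Berlin"}},  # hypothetical tool
)

# On the stdio transport, each message travels as a single line of JSON.
wire = json.dumps(call_req)
print(wire)
```

The same dict could be sent in the body of an HTTP POST for a remote server; only the transport changes, not the message shape.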

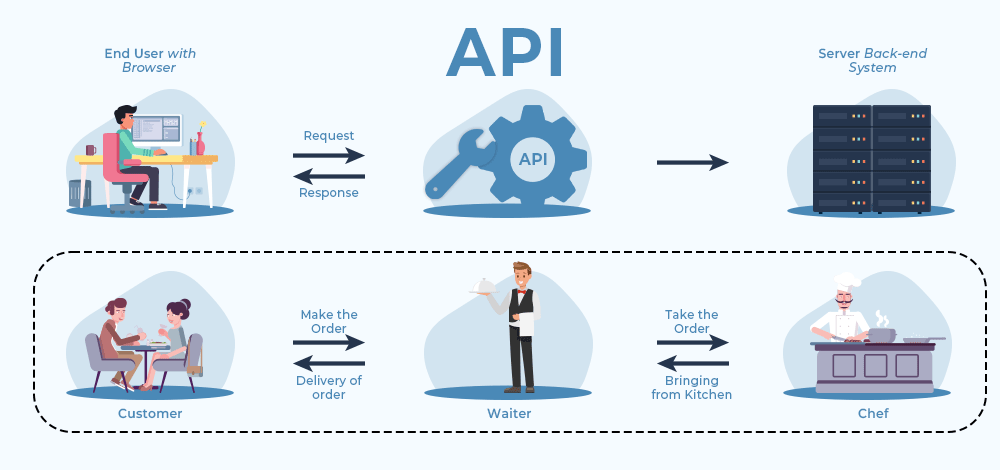

What is an API?

An Application Programming Interface (API) is a defined set of rules that allows two software applications to communicate with each other. Instead of directly accessing a system’s code or database, developers use APIs to request specific services or data in a structured and reliable way.

APIs are the backbone of modern software development, powering everything from mobile apps and web platforms to enterprise integrations.

Key Features of APIs

- Standardized Access: Provides a consistent way to interact with software components without exposing internal logic.

- Reusability: Enables developers to build once and reuse the same API across multiple applications or services.

- Interoperability: Makes it possible for diverse systems, often built on different technologies, to work together seamlessly.

API Architecture

The architecture of APIs is designed to simplify communication between systems while ensuring reliability and security. Most modern APIs follow REST or GraphQL standards, but the principles remain consistent across types:

- Client: The application requesting data or functionality.

- Server: The system providing the requested data or service.

- Endpoints: Defined URLs or routes that map to specific resources or actions.

- Protocols: Communication standards like HTTP or WebSockets that govern how data is transferred.

This structure makes APIs flexible, scalable, and essential for building connected ecosystems.
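As a rough illustration of the client, endpoint, and protocol pieces, the sketch below builds (but does not send) a REST-style request with Python's standard library. The base URL, the `/v1/orders` route, the token, and the payload fields are all made-up placeholders, not a real service.

```python
import json
from urllib.request import Request


def build_create_order_request(base_url, token, payload):
    """Build an HTTP POST for a hypothetical /v1/orders endpoint."""
    body = json.dumps(payload).encode("utf-8")
    return Request(
        url=f"{base_url}/v1/orders",  # endpoint: a fixed route for one resource
        data=body,
        method="POST",                # protocol: plain HTTP verb
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


req = build_create_order_request(
    "https://api.example.com", "TOKEN", {"sku": "A-1", "qty": 2}
)
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would actually send it; that step is left out here because the endpoint is fictional.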

MCP vs API: Comparison Table

Both MCP and APIs are designed to connect systems, but they approach integration from very different angles. MCP focuses on giving AI models a secure, standardized way to access external tools and data, while APIs are built to let applications talk to each other using defined requests and responses.

When deciding between the two, it’s important to see how they compare across aspects like architecture, scalability, security, and developer experience.

The table below highlights ten key differences:

MCP enables AI models to access tools and data securely, while APIs connect software applications reliably. Understanding their differences helps you choose the right integration for each scenario. Together, they can complement each other to build scalable, secure, and efficient systems.

Core Architecture Comparison

Understanding the core architectures of MCP and APIs is crucial for building scalable, secure, and efficient systems. While both aim to facilitate communication between components, their design philosophies and technical implementations differ substantially.

MCP (Model Context Protocol)

MCP (Model Context Protocol) is specifically designed for AI/LLM-driven workflows, enabling models to securely interact with external tools, data sources, and services in a structured, standardized manner. Its architecture is layered, modular, and context-aware. Key components include:

- Client Layer: The host application, acting on behalf of the AI model or agent, initiates requests, interprets tool capabilities, and determines execution flows. Clients manage local context and generate structured queries compatible with server schemas.

- Server Layer: Exposes tools, datasets, or services with machine-readable schemas. Servers can provide dynamic discovery of capabilities, enabling models to adapt without code changes. Data transformations, validation, and tool execution happen at this layer.

- Transport & Access Control Layer: Messages are encoded as JSON-RPC 2.0 and carried over stdio or HTTP transports. Hosts enforce authorization, allowing models to access only permitted tools or datasets. Session isolation ensures context from one model does not leak into another, and permission enforcement supports compliance and security.

- Schema & Capability Registry: MCP uses structured schemas to describe tool inputs, outputs, and constraints, allowing LLMs to reason about tool usage safely. This includes data type definitions, constraints, and operational metadata.

MCP architecture prioritizes dynamic context management, secure AI-driven interactions, and extensibility, making it highly suitable for agentic AI applications.
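The schema idea can be sketched in a few lines. MCP tool descriptors carry a `name`, a `description`, and an `inputSchema` written in JSON Schema; the `search_tickets` tool below is hypothetical, and the validator is a deliberately tiny stand-in that checks only required keys and primitive types. It also rejects unknown arguments, which is stricter than JSON Schema's default behaviour. A real system would more likely use a full JSON Schema library.

```python
TOOL = {
    "name": "search_tickets",  # hypothetical tool
    "description": "Search support tickets by keyword.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string"},
            "limit": {"type": "integer"},
        },
        "required": ["query"],
    },
}

_PY_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def validate_arguments(schema, arguments):
    """Return a list of problems; an empty list means the call looks valid."""
    errors = []
    properties = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in arguments:
            errors.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        spec = properties.get(key)
        if spec is None:
            errors.append(f"unknown argument: {key}")
            continue
        expected = _PY_TYPES.get(spec.get("type"))
        if expected is None:
            continue  # type not covered by this simplified check
        # bool is a subclass of int in Python, so exclude it explicitly.
        is_bool = isinstance(value, bool)
        if not isinstance(value, expected) or (is_bool and spec["type"] != "boolean"):
            errors.append(f"argument {key} should be of type {spec['type']}")
    return errors


print(validate_arguments(TOOL["inputSchema"], {"query": "refund", "limit": 5}))
print(validate_arguments(TOOL["inputSchema"], {"limit": "5"}))
```

Checking arguments before a tool runs catches malformed calls early, though it says nothing about whether a well-formed call is a safe one.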

API (Application Programming Interface)

API architecture is designed for general-purpose application integration, emphasizing predictable request-response patterns, scalability, and interoperability across heterogeneous systems. Its core technical components include:

- Client Layer: Applications or services that initiate requests to server endpoints. Clients manage authentication tokens, rate limits, retries, and local caching to optimize performance.

- Server Layer: Hosts endpoints and defines resource or action contracts. Servers handle request parsing, data validation, processing, and response formatting, typically returning JSON, XML, or protocol-specific payloads.

- Endpoints & Protocols: REST, GraphQL, SOAP, or WebSockets govern communication, supporting synchronous or asynchronous operations. Endpoints are structured around resources or actions, providing predictable access patterns.

- Security & Governance Layer: Implements API keys, OAuth, JWT authentication, throttling, and CORS policies. Rate-limiting and logging ensure both performance and compliance with enterprise security standards.

API architecture excels at broad interoperability, scalability, and structured software communication, but it lacks the dynamic tool discovery and AI-specific context-awareness of MCP.
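Dynamic tool discovery is easier to see in code. The following is a minimal in-memory sketch, not a real MCP server: it handles only `tools/list` and `tools/call`, skips initialization and transports entirely, and the `add` tool, `REGISTRY`, and `handle` names are invented for this example. The result shapes (`tools` for listing, `content` plus `isError` for calls) follow the MCP specification as we understand it.

```python
def add(a: float, b: float) -> float:
    return a + b


# Registering a tool here is all it takes for clients to discover it.
REGISTRY = {
    "add": {
        "handler": add,
        "descriptor": {
            "name": "add",
            "description": "Add two numbers.",
            "inputSchema": {
                "type": "object",
                "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
                "required": ["a", "b"],
            },
        },
    },
}


def _error(message, code, text):
    return {"jsonrpc": "2.0", "id": message.get("id"),
            "error": {"code": code, "message": text}}


def handle(message):
    """Dispatch one JSON-RPC request dict and return the response dict."""
    method = message.get("method")
    if method == "tools/list":
        result = {"tools": [entry["descriptor"] for entry in REGISTRY.values()]}
    elif method == "tools/call":
        params = message.get("params", {})
        entry = REGISTRY.get(params.get("name"))
        if entry is None:
            return _error(message, -32602, "Unknown tool")
        value = entry["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        return _error(message, -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": message.get("id"), "result": result}


# A client discovers what is available, then calls it by name.
listed = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
called = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                 "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
print([tool["name"] for tool in listed["result"]["tools"]])
print(called["result"]["content"][0]["text"])
```

With a conventional API, the client would need the endpoint list baked in ahead of time (for example from an OpenAPI document); here the list arrives at runtime.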

Use Cases Analysis

In real-world applications, MCP and APIs serve different but complementary roles. MCP empowers AI models to interact with multiple tools and datasets dynamically, while APIs provide standardized, reliable connectivity between software systems. Understanding their practical applications helps organizations choose the right integration strategy.

MCP is ideal for dynamic, context-aware AI workflows, while APIs excel in structured, predictable software integration. By leveraging both together, organizations can build systems where AI models intelligently utilize API-driven data and services to deliver smarter, real-time solutions.

Security and Governance

Security and governance are critical considerations for both MCP and APIs, but they address different challenges based on their design and use cases.

MCP (Model Context Protocol) focuses on secure AI model interactions. Its architecture includes host-managed access controls, session isolation, and transport-level security. MCP allows hosts to define which tools, datasets, or services a model can access, reducing the risk of unauthorized actions or data leaks. Schema-based tool descriptions help models understand input/output constraints and let hosts and servers validate arguments, which reduces accidental misuse; they do not by themselves stop prompt injection, so tool inputs and outputs still need to be treated as untrusted. Logging and auditing of model-tool interactions enable governance and compliance tracking in enterprise environments. MCP’s security model is particularly suited for agentic AI applications where LLMs execute multi-step workflows across sensitive data sources.
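One way to picture host-managed access control is a per-session allow-list that is checked, and logged, before any tool call is forwarded. The sketch below is an assumption about how a host might do this; `Session`, `authorize`, and the tool names are hypothetical and not part of any MCP SDK.

```python
from dataclasses import dataclass, field


class ToolNotPermitted(Exception):
    """Raised when a session tries to call a tool outside its allow-list."""


@dataclass
class Session:
    session_id: str
    allowed_tools: frozenset
    audit_log: list = field(default_factory=list)  # per session, never shared


def authorize(session, tool_name):
    """Record the attempt, then allow it or raise ToolNotPermitted."""
    allowed = tool_name in session.allowed_tools
    session.audit_log.append((tool_name, "allowed" if allowed else "denied"))
    if not allowed:
        raise ToolNotPermitted(f"{session.session_id} may not call {tool_name}")


support = Session("support-bot", frozenset({"search_tickets"}))
finance = Session("finance-bot", frozenset({"read_invoice"}))

authorize(support, "search_tickets")
try:
    authorize(support, "read_invoice")
except ToolNotPermitted as exc:
    print("blocked:", exc)

print(support.audit_log)
print(finance.audit_log)  # untouched by the other session
```

Keeping the log on the session object is a simple way to show isolation: nothing one session does is visible in another.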

API security and governance emphasize application-level protection and standardization. APIs implement authentication and authorization mechanisms such as API keys, OAuth, and JWTs, alongside rate limiting and throttling to prevent misuse or overloading. Logging, monitoring, and versioning policies ensure compliance, traceability, and backward compatibility across systems. Enterprises can enforce governance policies at the API gateway level, controlling who can access which endpoints and under what conditions.
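Rate limiting is often implemented with a token bucket, so here is a small sketch of that technique. It is a single-process illustration under simple assumptions (one bucket, no locking, no shared store); the `TokenBucket` class and its parameters are our own naming, and a gateway product would typically provide this for you.

```python
import time


class TokenBucket:
    """Allow up to `capacity` requests in a burst, refilled at `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock              # injectable so the bucket can be tested
        self.tokens = float(capacity)   # start full
        self.updated = clock()

    def allow(self):
        """Return True and spend a token if the request may proceed."""
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Simulated clock: two requests pass, the third is throttled until time moves on.
fake_now = [0.0]
bucket = TokenBucket(rate=1.0, capacity=2, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(3)])
fake_now[0] = 1.0
print(bucket.allow())
```

In practice the bucket would be keyed by API key or client id, so that one noisy caller cannot exhaust the budget of another.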

MCP prioritizes context-aware, AI-specific security, while APIs provide broad, standardized application security and governance. Using both together allows organizations to maintain robust security across AI-driven workflows and traditional software integrations.

Developer Experience

Developer experience plays a crucial role in adoption and productivity for both MCP and APIs. MCP (Model Context Protocol) is designed to streamline AI model integration with external tools, offering SDKs, reference servers, and client libraries in multiple programming languages.

Developers can quickly set up hosts and servers, define tool schemas, and connect LLMs to services without extensive boilerplate code. Its structured JSON-RPC transport, schema validation, and dynamic discovery capabilities reduce errors and simplify debugging in complex AI workflows.

MCP:

- SDKs and reference implementations in multiple languages

- Dynamic tool discovery and schema validation for LLMs

- Built-in error handling and debugging support for AI workflows

APIs, by contrast, offer a mature ecosystem for general software integration. Developers benefit from standardized specifications like OpenAPI/Swagger, client libraries, and API gateways that simplify authentication, versioning, and monitoring. Clear endpoint contracts and extensive documentation make onboarding and maintenance predictable. Tools for testing, mocking, and monitoring APIs enhance developer productivity while ensuring that integrations remain stable and secure.

APIs:

- Standardized specifications (OpenAPI/Swagger) and endpoint contracts

- Client libraries, gateways, and monitoring tools for smooth integration

- Extensive documentation and testing frameworks for reliability

Overall, MCP optimizes AI-focused development, making multi-tool orchestration easier, while APIs provide robust, standardized developer support across general software applications.

Performance and Scalability

Performance and scalability are essential for both MCP and APIs, but their design focuses differ due to their target use cases.

Low-Latency Communication

MCP targets AI-driven workflows in which an LLM client talks to several tool servers using JSON-RPC over HTTP or stdio. A local stdio server avoids a network hop, and structured schemas let the client validate arguments before sending a call, but end-to-end latency in multi-step AI tasks is often dominated by model inference and by the services behind each tool. APIs, on the other hand, rely on REST, GraphQL, or WebSockets, providing predictable latency for general application requests. While APIs are highly reliable, they do not by themselves let a model discover and chain tools at runtime.

Horizontal Scaling and Concurrency

MCP supports horizontal scaling through multiple server instances handling concurrent model requests. Session isolation prevents workflow conflicts and ensures consistent performance. APIs also scale horizontally across distributed servers and cloud infrastructure, handling large volumes of client requests with load balancing, caching, and throttling. While API scaling is mature and well-understood, MCP scaling focuses specifically on parallel AI operations with dynamic tool access.
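To illustrate the concurrency side, the sketch below fans out several independent tool calls at once with `asyncio.gather`. The server names, tool names, and delays are invented, and `asyncio.sleep` stands in for real network I/O; no MCP library is involved.

```python
import asyncio


async def call_tool(server, tool, delay):
    """Pretend to call a tool on a server; the sleep simulates I/O wait."""
    await asyncio.sleep(delay)
    return f"{server}:{tool}"


async def fan_out():
    # The three calls are independent, so they can run concurrently.
    calls = [
        call_tool("crm", "lookup_customer", 0.02),
        call_tool("billing", "latest_invoice", 0.01),
        call_tool("docs", "search", 0.03),
    ]
    # gather returns results in the order the calls were listed,
    # regardless of which one finishes first.
    return await asyncio.gather(*calls)


results = asyncio.run(fan_out())
print(results)
```

Total wall-clock time is roughly that of the slowest call rather than the sum of all three, which is the main benefit of issuing independent calls in parallel.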

Workflow Efficiency and Optimization

MCP’s schema-based design allows AI models to reason about tool capabilities and execute tasks efficiently, minimizing redundant computations and data fetching. APIs achieve efficiency through optimized endpoints, caching strategies, and monitoring tools that maintain throughput and reliability. Unlike MCP, API efficiency is centered on predictable, general-purpose request-response patterns rather than dynamic AI reasoning.
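Caching is one of the simplest ways to avoid redundant fetching on either side. As a small sketch, the example below memoizes a lookup with `functools.lru_cache`; `load_customer` and its return value are placeholders for whatever expensive call sits behind a tool or an endpoint, and a production cache would normally also need expiry.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the slow path actually runs


@lru_cache(maxsize=128)
def load_customer(customer_id):
    """Stand-in for a slow backend call; the result is cached per id."""
    CALLS["count"] += 1
    return f"customer-{customer_id}"


load_customer(7)
load_customer(7)   # served from the cache
load_customer(8)
print(CALLS["count"], load_customer.cache_info().hits)
```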

MCP targets responsive, AI-oriented tool access, while APIs provide scalable, robust performance for traditional software communication; each excels in its own domain.

Conclusion

MCP and APIs serve distinct but complementary roles in modern software and AI ecosystems. MCP excels in AI-driven, context-aware workflows, enabling LLMs to dynamically access multiple tools and datasets while maintaining secure, structured interactions.

APIs provide robust, standardized communication across applications, microservices, and external platforms, ensuring predictable performance and scalability. Understanding the differences in architecture, use cases, security, developer experience, and performance allows organizations to choose the right integration strategy.

By combining MCP’s AI-focused capabilities with APIs’ general-purpose connectivity, teams can build intelligent, efficient, and secure systems that meet both AI and traditional software needs.
