What is MCP Proxy?

The Model Context Protocol (MCP) has changed how AI applications connect to external data sources and tools. As organizations scale their AI implementations, they're discovering that connecting every client directly to every server isn't always the best approach. An MCP Proxy adds an intermediary layer that improves the security, scalability, and manageability of MCP deployments. Let's explore how MCP Proxy changes enterprise AI architectures and why it's becoming a common component of production deployments.

What is MCP Proxy?

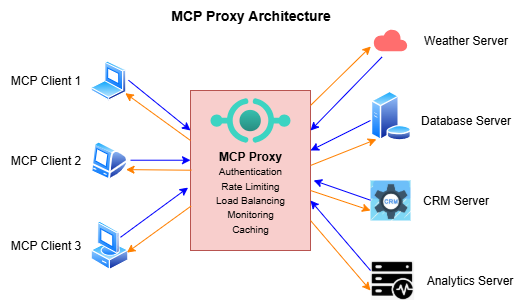

An MCP Proxy serves as an intelligent intermediary layer that sits between MCP clients (AI applications) and MCP servers (data sources and tools). Rather than establishing direct connections, the proxy acts as a centralized gateway that manages, routes, and enhances communication between these components.

Think of MCP Proxy as the "API Gateway for AI": just as traditional API gateways manage REST endpoints, MCP Proxy manages MCP server connections with enterprise-grade features like authentication, rate limiting, observability, and security controls. The proxy speaks standard MCP on both sides, appearing as a regular MCP server to clients while acting as an MCP client to backend servers. This transparency lets existing MCP-enabled applications connect to it without modification.

At its core, an MCP Proxy provides unified access control and authentication mechanisms, enables protocol translation between different transport types (stdio, SSE, HTTP), implements enterprise security policies and governance, and offers centralized monitoring and observability. Most importantly, it aggregates multiple MCP servers behind a single endpoint, dramatically simplifying enterprise deployments.

How Does MCP Proxy Work?

MCP Proxy operates on a straightforward yet powerful architecture that leverages the JSON-RPC foundation of the Model Context Protocol. The proxy functions as both an MCP server (facing clients) and an MCP client (facing backend servers), creating a transparent bridge that adds value without disrupting existing workflows.

Many proxies support several transports at the same time: stdio for local servers and development environments, Streamable HTTP for remote and horizontally scalable deployments, and the older HTTP+SSE transport, which the MCP specification has since superseded with Streamable HTTP but which many existing servers still use. Some implementations also accept WebSocket connections for persistent, low-latency sessions, although WebSocket is not one of the MCP specification's standard transports. This flexibility lets organizations deploy each MCP server on the transport that suits it while giving clients a single, consistent interface.

When a client sends a request, the proxy handles it in a fixed sequence: it receives the JSON-RPC request, authenticates the caller and evaluates authorization policies, resolves which backend server or servers should handle the request, translates the request into the format and transport each backend expects, forwards it, aggregates the responses when several servers were involved, and returns a single unified response to the client.
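The sequence above can be sketched as a single request handler. This is a minimal illustration under stated assumptions, not a production proxy: each backend is a hypothetical object with an async handle(method, params) function standing in for a real MCP client session, isAllowed is a placeholder policy hook, -32001 is an arbitrary code from JSON-RPC's server-defined error range, and the MCP initialize handshake and pagination are ignored. The method names tools/list and tools/call and the codes -32601 and -32602 come from MCP and JSON-RPC themselves.

```javascript
// Minimal proxy request pipeline (hypothetical interfaces). Each backend is an
// object { handle: async (method, params) => result } standing in for an MCP
// client session; isAllowed(user, method, params) is a placeholder policy hook.
function createPipeline({ backends, isAllowed }) {
  const table = new Map(Object.entries(backends)); // serverName -> backend

  return async function handleRequest(request, user) {
    const { id, method, params = {} } = request;
    const reply = (body) => ({ jsonrpc: '2.0', id, ...body });

    // Authentication/authorization: evaluate policy before touching any backend.
    if (!isAllowed(user, method, params)) {
      return reply({ error: { code: -32001, message: 'Forbidden' } });
    }

    // Route resolution + translation: "server_tool" goes to `server`,
    // and the prefix is stripped before forwarding.
    if (method === 'tools/call') {
      const name = String(params.name ?? '');
      const sep = name.indexOf('_');
      const backend = sep > 0 ? table.get(name.slice(0, sep)) : undefined;
      if (!backend) {
        return reply({ error: { code: -32602, message: `Unknown tool: ${name}` } });
      }
      const result = await backend.handle('tools/call', { ...params, name: name.slice(sep + 1) });
      return reply({ result });
    }

    // Fan-out + aggregation: merge every backend's tool list into one response.
    if (method === 'tools/list') {
      const lists = await Promise.all(
        [...table].map(async ([server, backend]) => {
          const { tools } = await backend.handle('tools/list', {});
          return tools.map((tool) => ({ ...tool, name: `${server}_${tool.name}` }));
        }),
      );
      return reply({ result: { tools: lists.flat() } });
    }

    return reply({ error: { code: -32601, message: `Method not found: ${method}` } });
  };
}
```

Keeping authorization as the first step means a denied request never reaches, or even reveals the existence of, any backend.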

Enterprise MCP Proxy implementations also maintain session state, which optimizes performance and enables several advanced features: connection pooling, where persistent connections to backend servers reduce latency; context preservation, which keeps user context and conversation state across requests; load balancing, which distributes requests across multiple server instances; and circuit breaking, which automatically bypasses failing servers.
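Circuit breaking, the last feature in that list, is straightforward to sketch. The class below is a minimal illustration with hypothetical defaults (three consecutive failures open the circuit for 30 seconds); the clock function is injected so the behavior can be tested without waiting.

```javascript
// Minimal per-backend circuit breaker (hypothetical defaults).
// closed: calls pass through; open: calls fail fast without reaching the
// backend; half-open: after resetMs, calls are allowed through on trial.
class CircuitBreaker {
  constructor({ failureThreshold = 3, resetMs = 30_000, now = Date.now } = {}) {
    this.failureThreshold = failureThreshold;
    this.resetMs = resetMs;
    this.now = now;
    this.failures = 0;
    this.openedAt = null;
  }

  get state() {
    if (this.openedAt === null) return 'closed';
    return this.now() - this.openedAt >= this.resetMs ? 'half-open' : 'open';
  }

  async call(fn) {
    if (this.state === 'open') {
      throw new Error('circuit open: backend temporarily bypassed');
    }
    try {
      const result = await fn();
      this.failures = 0; // any success closes the circuit
      this.openedAt = null;
      return result;
    } catch (err) {
      this.failures += 1;
      // A failed trial in half-open, or too many consecutive failures, (re)opens it.
      if (this.state === 'half-open' || this.failures >= this.failureThreshold) {
        this.openedAt = this.now();
      }
      throw err;
    }
  }
}
```

A proxy would keep one breaker per backend, so an outage in one server does not slow down requests that only need the others.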

Key Features of MCP Proxy

MCP Registry and Server Management

The foundation of any enterprise MCP deployment is a comprehensive registry that catalogs and manages available servers. A robust MCP registry provides a centralized catalog where administrators can register both public and privately hosted MCP servers, maintaining detailed metadata about each server's capabilities, authentication requirements, and access controls.

TrueFoundry's MCP Gateway exemplifies this approach with its centralized MCP registry that maintains a complete inventory of available servers and their authentication mechanisms. The registry handles user-specific OAuth2 flows, securely storing and refreshing access tokens while ensuring users can only access resources they're authorized for. This eliminates the chaos of distributed credential management and provides enterprise IT teams with the visibility and control they need.

The registry also enables dynamic tool discovery, allowing autonomous agents to discover and invoke tools at runtime rather than requiring hardcoded configurations. This flexibility is crucial for building adaptive AI systems that can respond to changing business requirements without manual intervention.
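A minimal in-memory registry shows how catalog metadata and access control combine at discovery time. The entry schema (name, url, authType, allowedTeams, tools), the McpRegistry class, and the example URLs are hypothetical; a real registry would persist entries and fetch tool lists from the servers themselves.

```javascript
// Minimal in-memory MCP registry (hypothetical schema). Each entry records
// where a server lives, how it authenticates, which teams may use it, and
// which tools it exposes.
class McpRegistry {
  constructor() {
    this.entries = new Map();
  }

  register({ name, url, authType, allowedTeams, tools }) {
    if (this.entries.has(name)) throw new Error(`server already registered: ${name}`);
    this.entries.set(name, { name, url, authType, allowedTeams: new Set(allowedTeams), tools });
  }

  // Runtime discovery: an agent asks what it may call instead of hardcoding it.
  // Servers the team cannot access are filtered out before anything is returned.
  discoverTools(team) {
    const found = [];
    for (const entry of this.entries.values()) {
      if (!entry.allowedTeams.has(team)) continue;
      for (const tool of entry.tools) found.push(`${entry.name}_${tool}`);
    }
    return found;
  }
}
```

Filtering during discovery, not only at call time, means agents never see tools they could not invoke anyway.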

MCP Authentication and Access Control

Security is paramount in enterprise MCP deployments, and authentication represents the first line of defense. Traditional direct server connections require managing separate credentials for each MCP server, creating a complex web of authentication flows that becomes unwieldy at scale.

MCP Proxy centralizes authentication through several mechanisms. OAuth 2.0 integration supports both 2-legged and 3-legged OAuth flows for enterprise applications, while Personal Access Tokens (PATs) allow users to generate a single token for accessing all authorized MCP servers. For applications, Virtual Account Tokens (VATs) provide scoped access to specific server sets, and role-based access control (RBAC) ensures fine-grained permissions management.
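The difference between the two token types can be sketched as follows. The token shapes and the role table are hypothetical and do not reflect any specific product's data model: a PAT inherits whatever its user's roles allow, while a VAT is pinned to an explicit list of servers.

```javascript
// Hypothetical RBAC table: role -> MCP servers that role may reach.
const ROLE_PERMISSIONS = new Map([
  ['engineer', ['github', 'jira']],
  ['analyst', ['warehouse']],
]);

// PAT: acts for a user, so it gets the union of that user's role permissions.
// VAT: belongs to an application and is scoped to an explicit server list.
function allowedServers(token) {
  switch (token.type) {
    case 'PAT':
      return new Set(token.roles.flatMap((role) => ROLE_PERMISSIONS.get(role) ?? []));
    case 'VAT':
      return new Set(token.servers);
    default:
      return new Set(); // unknown token types get no access
  }
}

function canAccess(token, server) {
  return allowedServers(token).has(server);
}
```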

The authentication layer also implements sophisticated token management, including automatic token refresh, secure credential storage, and session management across multiple server connections. This approach significantly reduces the security burden on individual developers while providing IT teams with comprehensive audit trails and access controls.
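Automatic token refresh is one piece of that token management. In this sketch, refresh is a stand-in for whatever call obtains a new access token (for example, an OAuth 2.0 refresh-token request), and the 60-second skew is an assumed default. Tokens are renewed slightly before they expire, and concurrent callers share one in-flight refresh instead of each triggering their own.

```javascript
// Caches one access token and refreshes it shortly before expiry.
// `refresh` is a stand-in: async () => ({ accessToken, expiresAt }).
class TokenCache {
  constructor(refresh, { skewMs = 60_000, now = Date.now } = {}) {
    this.refresh = refresh;
    this.skewMs = skewMs;
    this.now = now;
    this.current = null; // last token received
    this.pending = null; // in-flight refresh shared by concurrent callers
  }

  async getToken() {
    if (this.current && this.now() < this.current.expiresAt - this.skewMs) {
      return this.current.accessToken;
    }
    if (!this.pending) {
      this.pending = Promise.resolve()
        .then(() => this.refresh())
        .then((token) => {
          this.current = token;
          return token;
        })
        .finally(() => {
          this.pending = null;
        });
    }
    return (await this.pending).accessToken;
  }
}
```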

MCP Invocation and Tool Orchestration

The core value of MCP Proxy lies in its ability to orchestrate complex tool invocations across multiple servers. Unlike direct connections where each server operates in isolation, the proxy enables sophisticated workflows that span multiple data sources and capabilities.

Tool namespace management prevents naming conflicts by automatically prefixing tools with server identifiers. For example, a get_weather tool from a "weather-api" server becomes weather-api_get_weather, so tools never collide even when multiple servers expose similar functionality. This also enables unified tool discovery: clients can discover all available tools through a single tools/list request to the proxy instead of connecting to each server separately.
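A pair of helpers makes the naming scheme concrete. This sketch assumes the separator is the first underscore, as in the weather-api_get_weather example, so tool names may contain underscores but server names must not; that restriction is an assumption of this sketch, and real proxies may use a different separator or escaping rule. The function names are hypothetical.

```javascript
// Build a namespaced tool name from a server name and a tool name.
function namespaceTool(serverName, toolName) {
  if (serverName.length === 0 || serverName.includes('_')) {
    throw new Error(`invalid server name "${serverName}": must be non-empty and contain no "_"`);
  }
  return `${serverName}_${toolName}`;
}

// Split on the FIRST underscore: the prefix is the server, the rest is the tool.
function parseNamespacedTool(qualifiedName) {
  const sep = qualifiedName.indexOf('_');
  if (sep <= 0) throw new Error(`"${qualifiedName}" has no server prefix`);
  return { serverName: qualifiedName.slice(0, sep), toolName: qualifiedName.slice(sep + 1) };
}
```

Splitting on the first underscore rather than every underscore is what keeps get_weather intact on the way back to its server.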

The proxy also enables cross-server workflows where complex AI agent behaviors can leverage tools from multiple servers seamlessly. This orchestration capability is what transforms simple tool calling into sophisticated enterprise automation, allowing organizations to build AI systems that integrate naturally with their existing technology stack.

Observability and Monitoring for MCP Servers

Enterprise deployments demand comprehensive observability into MCP operations. Traditional direct connections scatter monitoring across multiple endpoints, making it difficult to gain holistic insights into system performance and usage patterns.

MCP Proxy consolidates observability through centralized monitoring that provides end-to-end visibility into request flows, comprehensive metrics collection including latency, throughput, and error rates, detailed audit logging for compliance and security analysis, and real-time health monitoring of backend server availability.

Advanced observability features include request tracing that follows individual requests through the entire system, performance analytics that identify bottlenecks and optimization opportunities, usage analytics that track tool utilization patterns, and security monitoring that detects anomalous access patterns or potential security threats.
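A minimal sketch of centralized metrics collection: every tool call passes through one wrapper that records call count, error count, and latency per namespaced tool. The ToolMetrics class and its summary fields are hypothetical; a production proxy would export histograms to a system such as Prometheus or OpenTelemetry rather than keep raw latencies in memory.

```javascript
// Per-tool call metrics recorded at the proxy (hypothetical shape).
class ToolMetrics {
  constructor(now = Date.now) {
    this.now = now;
    this.byTool = new Map(); // toolName -> { calls, errors, latenciesMs }
  }

  async record(toolName, fn) {
    if (!this.byTool.has(toolName)) {
      this.byTool.set(toolName, { calls: 0, errors: 0, latenciesMs: [] });
    }
    const stats = this.byTool.get(toolName);
    const start = this.now();
    try {
      return await fn();
    } catch (err) {
      stats.errors += 1;
      throw err; // metrics never swallow the error
    } finally {
      stats.calls += 1;
      stats.latenciesMs.push(this.now() - start);
    }
  }

  // Error rate and nearest-rank 95th-percentile latency for one tool.
  summary(toolName) {
    const stats = this.byTool.get(toolName);
    if (!stats) return null;
    const sorted = [...stats.latenciesMs].sort((a, b) => a - b);
    const p95Ms = sorted[Math.ceil(0.95 * sorted.length) - 1];
    return { calls: stats.calls, errorRate: stats.errors / stats.calls, p95Ms };
  }
}
```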

The observability layer also integrates with enterprise monitoring solutions like Grafana, Datadog, and custom dashboards, ensuring that MCP metrics flow seamlessly into existing operational workflows. This integration is crucial for maintaining enterprise-grade service level agreements and operational excellence.

Benefits of Using an MCP Proxy

Enhanced Security and Governance

The security benefits of MCP Proxy extend far beyond simple access control. By consolidating multiple server connections through a single, hardened gateway, organizations can implement consistent security policies across their entire MCP ecosystem. This approach dramatically reduces the attack surface compared to exposing multiple server endpoints directly to client applications.

Centralized authentication means clients authenticate once with the proxy rather than managing separate credentials for each server. The proxy handles backend authentication using service accounts or sophisticated token exchange mechanisms, ensuring that sensitive credentials never leave the secure gateway environment. Policy enforcement becomes consistent across all MCP interactions, with capabilities like sensitive data filtering, request sanitization, and response redaction implemented at the proxy layer rather than requiring individual server modifications.
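Response redaction at the proxy layer can be sketched as a recursive pass over each tool result before it is returned to the client. The two patterns below (email addresses and US Social Security numbers) are illustrative only; real deployments usually rely on a dedicated PII-detection service or a much more thorough rule set.

```javascript
// Illustrative redaction rules; not a complete PII detector.
const REDACTION_RULES = [
  { label: 'email', pattern: /[\w.+-]+@[\w-]+\.[\w.-]+/g },
  { label: 'us-ssn', pattern: /\b\d{3}-\d{2}-\d{4}\b/g },
];

// Recursively mask sensitive substrings in every string inside a tool result,
// returning a new value and leaving the original untouched.
function redact(value) {
  if (typeof value === 'string') {
    return REDACTION_RULES.reduce(
      (text, rule) => text.replace(rule.pattern, `[REDACTED:${rule.label}]`),
      value,
    );
  }
  if (Array.isArray(value)) return value.map(redact);
  if (value !== null && typeof value === 'object') {
    return Object.fromEntries(Object.entries(value).map(([key, v]) => [key, redact(v)]));
  }
  return value; // numbers, booleans, null pass through unchanged
}
```

Because the pass runs in the proxy, every backend gets the same policy without any change to the servers themselves.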

Attack surface reduction is achieved by deploying backend servers in private networks, accessible only through the proxy. This network isolation significantly reduces exposure to external threats while maintaining the flexibility needed for complex enterprise integrations.

Operational Simplicity and Scale

MCP Proxy transforms operational complexity from a distributed challenge into a centralized management opportunity. Instead of configuring monitoring, scaling, and network policies for each individual server, operations teams can focus on a single, well-understood gateway component.

The unified deployment model allows proxies to be deployed using standard cloud-native patterns including containers, load balancers, and auto-scaling while backend servers focus purely on business logic. This separation of concerns enables different teams to optimize their components independently while maintaining overall system coherence.

Simplified client integration means applications need only integrate with a single proxy endpoint rather than managing connections to multiple servers. This dramatically reduces the complexity of client applications and makes it easier to add new MCP servers without requiring client-side changes.

Scalability and Performance Optimization

The proxy architecture enables sophisticated scalability patterns that would be difficult to implement with direct connections. Horizontal scaling through multiple proxy instances deployed behind load balancers lets capacity grow roughly in step with demand, provided session state is shared or kept out of individual instances. Backend server pooling allows multiple instances of the same server to be pooled behind the proxy for load distribution, improving both performance and reliability.
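Backend server pooling can be as simple as rotating across healthy instances. This is a minimal round-robin sketch with placeholder instance IDs; the BackendPool class is hypothetical, and how instances get marked unhealthy (health checks, circuit breakers) is left to the caller.

```javascript
// Round-robin selection across instances of one logical MCP server,
// skipping instances currently marked unhealthy.
class BackendPool {
  constructor(instanceIds) {
    this.instances = instanceIds.map((id) => ({ id, healthy: true }));
    this.next = 0;
  }

  setHealthy(id, healthy) {
    const instance = this.instances.find((i) => i.id === id);
    if (instance) instance.healthy = healthy;
  }

  pick() {
    const n = this.instances.length;
    for (let offset = 0; offset < n; offset++) {
      const index = (this.next + offset) % n;
      if (this.instances[index].healthy) {
        this.next = (index + 1) % n; // resume after the instance just used
        return this.instances[index].id;
      }
    }
    throw new Error('no healthy instances available');
  }
}
```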

Geographic distribution becomes possible with proxies deployed in multiple regions and intelligent routing to the nearest backend servers. This approach minimizes latency for global deployments while maintaining consistent functionality across all regions.

Performance optimization features include intelligent caching where frequently requested data is cached with configurable time-to-live settings, request deduplication that collapses identical concurrent requests, connection pooling that maintains efficient connections to backend servers, and response compression that reduces network overhead.
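Request deduplication can be sketched as a "single flight" map: identical concurrent calls (same tool, same arguments) share one backend request, and the entry is removed as soon as that request settles. The RequestDeduplicator class is hypothetical, and the key uses JSON.stringify, so it assumes arguments are built with a consistent key order.

```javascript
// Collapse identical concurrent tool calls into one backend request.
class RequestDeduplicator {
  constructor() {
    this.inFlight = new Map(); // cacheKey -> shared promise
  }

  run(toolName, args, fn) {
    const key = `${toolName}:${JSON.stringify(args)}`;
    if (this.inFlight.has(key)) return this.inFlight.get(key);
    const promise = Promise.resolve()
      .then(fn) // also turns synchronous throws into rejections
      .finally(() => this.inFlight.delete(key));
    this.inFlight.set(key, promise);
    return promise;
  }
}
```

Unlike a TTL cache, this only merges requests that overlap in time, so it never serves stale data.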

Cost Optimization and Resource Efficiency

MCP Proxy deployments often result in significant cost savings through several mechanisms. Resource consolidation reduces per-server operational overhead by sharing infrastructure components like monitoring, logging, and security systems. Efficient resource utilization through connection pooling and request batching improves overall system efficiency.

Reduced development overhead allows teams to focus on building MCP servers that implement business logic rather than solving repeated infrastructure challenges like authentication, monitoring, and scaling. This acceleration in development velocity often represents the largest cost savings for organizations adopting MCP Proxy architectures.

MCP Proxy vs Direct MCP Server Connections

Understanding when to use MCP Proxy versus direct connections requires careful consideration of both technical and organizational factors. The choice between these approaches significantly impacts security, scalability, and operational complexity.

Performance and Technical Considerations

Direct connections avoid the extra network hop a proxy introduces, so their overhead stays minimal, typically only 1-2 ms of request processing time. However, this performance advantage comes at the cost of limited scalability, with throughput constrained by individual server capacity. Resource usage also follows a pattern of one connection per client-server pair: N clients using M servers may need up to N × M connections, which becomes inefficient as the number of clients and servers grows.

MCP Proxy introduces slightly higher latency, typically 3-5ms with proper optimization, but provides scalable throughput through connection pooling and load balancing. The resource efficiency of pooled connections often results in better overall system performance despite the small latency increase. Additionally, intelligent caching can significantly reduce backend load for frequently accessed data.

Security and Governance Comparison

Direct connections require per-server credential management, distributed security controls, and multiple exposed endpoints that each represent potential attack vectors. Audit trails are scattered across servers, making comprehensive security analysis difficult.

MCP Proxy provides centralized authentication, fine-grained role-based access control, centralized audit logging, and a single hardened endpoint that reduces overall attack surface. This consolidation dramatically improves security posture while simplifying compliance and governance requirements.

Operational Complexity Analysis

For simple deployments with few servers, direct connections offer straightforward setup and minimal operational overhead. However, as systems scale, the operational complexity grows significantly. Each server requires individual monitoring, manual scaling procedures, and distributed configuration management.

MCP Proxy requires more complex initial setup but dramatically simplifies operations at scale. Unified observability provides comprehensive system visibility, automated scaling capabilities handle demand fluctuations, and centralized configuration management reduces operational burden as the system grows.

Decision Framework

Choose direct connections for development and testing environments where simplicity is paramount, for the most latency-sensitive applications where even a few milliseconds of proxy overhead matter, for single-server scenarios, and for basic use cases involving simple tool calling without complex workflows.

Choose MCP Proxy for production deployments requiring enterprise-grade security and monitoring, multi-server architectures that aggregate multiple MCP servers, scalability requirements involving horizontal scaling and load distribution, governance and compliance needs requiring centralized control and audit capabilities, and complex workflows involving cross-server tool orchestration.

Challenges and Considerations

Technical Implementation Challenges

Implementing MCP Proxy introduces several technical challenges that require careful architectural consideration. State management represents a primary concern since, unlike stateless HTTP proxies, MCP Proxy often needs to maintain session state to provide optimal user experience. This includes conversation context, user preferences, and server connection state. Designing stateful proxies requires careful consideration of state persistence, replication, and recovery mechanisms.

Protocol compatibility presents another challenge as different MCP servers may implement slightly different protocol versions or extensions. The proxy must handle these variations gracefully while presenting a consistent interface to clients. Version negotiation and feature detection become critical components for ensuring seamless operation across diverse server implementations.

Error handling and circuit breaking require sophisticated logic when aggregating multiple servers. If one server in a multi-server request fails, the proxy needs intelligent decision-making to determine whether to return partial results, retry with alternative servers, or fail the entire request. This complexity multiplies when dealing with dependencies between different server responses.
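One common policy is to return partial results rather than fail the whole request. The sketch below queries every backend with a per-backend deadline and reports successes and failures separately; the function names and the 2-second default timeout are assumptions, and whether partial results are acceptable remains a per-use-case decision.

```javascript
// Reject if `promise` has not settled within `ms` milliseconds.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Query every backend; collect successes and failures instead of failing fast.
async function fanOut(backends, call, timeoutMs = 2000) {
  const names = Object.keys(backends);
  const settled = await Promise.allSettled(
    names.map((name) => withTimeout(Promise.resolve().then(() => call(backends[name])), timeoutMs)),
  );
  const results = {};
  const failures = {};
  settled.forEach((outcome, i) => {
    if (outcome.status === 'fulfilled') results[names[i]] = outcome.value;
    else failures[names[i]] = outcome.reason instanceof Error ? outcome.reason.message : String(outcome.reason);
  });
  // `partial` tells the caller that at least one backend is missing from `results`.
  return { results, failures, partial: Object.keys(failures).length > 0 };
}
```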

Performance and Resource Management

Connection pool management requires balancing resource usage with performance characteristics. Too few connections create bottlenecks that limit throughput, while too many connections consume excessive system resources. The optimal configuration depends on usage patterns, server characteristics, and infrastructure constraints.
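The sizing trade-off is easiest to see with an explicit cap. The sketch below is a concurrency limiter rather than a full connection pool: it allows at most maxConcurrent requests to a backend at once and queues the rest in FIFO order. The BoundedPool class and its default of 10 are assumptions; the right value would come from load testing.

```javascript
// At most `maxConcurrent` tasks run at once; extra callers wait in FIFO order.
class BoundedPool {
  constructor(maxConcurrent = 10) {
    this.maxConcurrent = maxConcurrent;
    this.active = 0;
    this.waiting = []; // resolve functions of queued callers
  }

  async run(task) {
    if (this.active >= this.maxConcurrent) {
      await new Promise((resolve) => this.waiting.push(resolve));
    } else {
      this.active += 1;
    }
    try {
      return await task();
    } finally {
      const next = this.waiting.shift();
      if (next) next(); // hand this slot directly to the next waiter
      else this.active -= 1;
    }
  }
}
```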

Caching strategy implementation requires deep understanding of MCP tool semantics. Some tools return dynamic data that shouldn't be cached, while others return relatively static information suitable for extended caching periods. Implementing effective caching policies requires careful analysis of each tool's behavior and data characteristics.
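A per-tool policy table is one way to encode that analysis. The tool names and TTL values below are hypothetical; tools missing from the table are treated as uncacheable, which errs on the side of fresh data. The cache key uses JSON.stringify, so it assumes arguments are built with a consistent key order.

```javascript
// Hypothetical per-tool TTLs in milliseconds; 0 or missing means "never cache".
const CACHE_TTL_MS = new Map([
  ['docs_search', 10 * 60_000],      // documentation changes rarely
  ['hr_get_org_chart', 60 * 60_000], // near-static reference data
  ['weather-api_get_weather', 0],    // live data
]);

class ToolResultCache {
  constructor({ ttls = CACHE_TTL_MS, now = Date.now } = {}) {
    this.ttls = ttls;
    this.now = now;
    this.entries = new Map(); // cacheKey -> { value, expiresAt }
  }

  async get(toolName, args, load) {
    const ttl = this.ttls.get(toolName) ?? 0;
    if (ttl <= 0) return load(); // uncacheable: always hit the backend
    const key = `${toolName}:${JSON.stringify(args)}`;
    const hit = this.entries.get(key);
    if (hit && this.now() < hit.expiresAt) return hit.value;
    const value = await load();
    this.entries.set(key, { value, expiresAt: this.now() + ttl });
    return value;
  }
}
```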

Request batching presents opportunities for efficiency improvements but must be implemented carefully to avoid introducing unacceptable latency for individual requests. The challenge lies in identifying optimal batching windows that balance efficiency gains with response time requirements.

Security and Compliance Considerations

Token management is complex because the proxy must securely manage authentication tokens for backend servers while still giving clients a seamless way to authenticate. This requires token exchange mechanisms, secure credential storage, and proper token lifecycle management.

Data privacy concerns arise because the proxy potentially has access to all data flowing between clients and servers. Implementing proper data handling, encryption at rest and in transit, and privacy controls becomes crucial for maintaining enterprise security standards.

Rate limiting must balance protecting backend servers from overload against providing a good user experience. This requires careful tuning based on server capabilities, user behavior patterns, and business requirements.
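A token bucket is a common way to strike that balance. In this sketch, the TokenBucketLimiter class and its capacity and refill defaults are hypothetical: each client can burst up to capacity requests and is then held to refillPerSec requests per second, and a rejected call would map to an error response at the proxy.

```javascript
// Per-client token bucket: bursts up to `capacity`, sustained `refillPerSec`.
class TokenBucketLimiter {
  constructor({ capacity = 20, refillPerSec = 5, now = Date.now } = {}) {
    this.capacity = capacity;
    this.refillPerSec = refillPerSec;
    this.now = now;
    this.buckets = new Map(); // clientId -> { tokens, last }
  }

  tryAcquire(clientId) {
    const t = this.now();
    const bucket = this.buckets.get(clientId) ?? { tokens: this.capacity, last: t };
    // Refill in proportion to elapsed time, never beyond capacity.
    const elapsedSec = (t - bucket.last) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSec * this.refillPerSec);
    bucket.last = t;
    this.buckets.set(clientId, bucket);
    if (bucket.tokens < 1) return false; // caller should reply with a rate-limit error
    bucket.tokens -= 1;
    return true;
  }
}
```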

Operational and Organizational Challenges

Monitoring and alerting complexity increases as the proxy becomes a critical component requiring comprehensive observability. Teams need to implement health checks, performance monitoring, and alerting for both the proxy itself and its connections to backend servers. This monitoring must integrate seamlessly with existing enterprise monitoring infrastructure.

Deployment coordination becomes necessary as updates to the proxy must be coordinated with backend server deployments to ensure compatibility and avoid service disruptions. This coordination requires sophisticated deployment pipelines and testing procedures.

Backup and recovery planning must account for the proxy's configuration and state. Critical system components need comprehensive backup strategies and tested recovery procedures to ensure business continuity during outages or disasters.

Implementing MCP Proxy

Architecture Planning and Design

Successful MCP Proxy implementation begins with comprehensive architecture planning that addresses both immediate needs and future growth requirements. Server discovery strategy determines how the proxy will identify and connect to backend MCP servers. Options include static configuration files suitable for stable environments, service discovery mechanisms that automatically detect new servers, and dynamic registration APIs that allow servers to self-register with the proxy.
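For the static-configuration option, a startup check catches mistakes before the proxy tries to connect. The schema below is hypothetical: the field names, the two transport labels, and the example URL and command are placeholders. It also enforces the no-underscore rule for server names that an underscore-based tool prefix relies on.

```javascript
// Hypothetical static proxy configuration, e.g. loaded from a JSON file.
const config = {
  servers: [
    { name: 'github', transport: 'streamable-http', url: 'https://mcp.example.com/github' },
    { name: 'files', transport: 'stdio', command: 'node', args: ['files-server.js'] },
  ],
};

const TRANSPORTS = new Set(['stdio', 'streamable-http']);

// Validate the server list at startup; throws on the first problem found.
function validateServerConfig({ servers }) {
  const seen = new Set();
  for (const server of servers) {
    // Lowercase letters, digits, and hyphens only: no "_", so that
    // "server_tool" prefixes stay unambiguous.
    if (!/^[a-z0-9-]+$/.test(server.name ?? '')) {
      throw new Error(`invalid server name: ${server.name}`);
    }
    if (seen.has(server.name)) throw new Error(`duplicate server name: ${server.name}`);
    seen.add(server.name);
    if (!TRANSPORTS.has(server.transport)) {
      throw new Error(`${server.name}: unknown transport "${server.transport}"`);
    }
    if (server.transport === 'stdio' && !server.command) {
      throw new Error(`${server.name}: stdio servers need a "command"`);
    }
    if (server.transport === 'streamable-http' && !server.url) {
      throw new Error(`${server.name}: HTTP servers need a "url"`);
    }
  }
  return servers;
}
```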

Deployment topology decisions involve choosing between single proxy instances for simple deployments or multiple instances for high availability and load distribution. Geographic distribution requirements must consider network latency, data residency requirements, and disaster recovery needs.

Integration planning identifies how the proxy will connect with existing enterprise infrastructure including load balancers for traffic distribution, API gateways for external access control, identity providers for authentication integration, and monitoring systems for comprehensive observability. These integration points are critical for ensuring the proxy fits seamlessly into existing operational workflows.

Basic Implementation Approach

A fundamental MCP Proxy implementation requires several core components working in harmony. The proxy server itself handles client connections and implements the MCP protocol, while backend connection managers maintain connections to MCP servers and handle protocol translation. Authentication and authorization modules integrate with enterprise identity systems and enforce access policies.

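Here's a simplified example of the basic structure of an MCP Proxy in Node.js. It uses the official MCP TypeScript SDK (@modelcontextprotocol/sdk) and Express; to keep the example short, it exposes the aggregated tools through a small REST API instead of a full MCP server transport:

```javascript
// Simplified MCP proxy: aggregates stdio-based MCP servers behind one process.
// For brevity it exposes a small REST API; a production proxy would expose a
// real MCP transport (e.g. Streamable HTTP) so clients see a normal MCP server.
const express = require('express');
const { Client } = require('@modelcontextprotocol/sdk/client/index.js');
const { StdioClientTransport } = require('@modelcontextprotocol/sdk/client/stdio.js');

class MCPProxy {
  constructor() {
    this.servers = new Map(); // serverName -> { client, config, lastHealthCheck }
    this.app = express();
    this.setupRoutes();
  }

  // Launch a backend server as a subprocess and connect to it over stdio.
  // Server names must not contain "_", which separates server and tool names.
  async addServer(name, config) {
    const transport = new StdioClientTransport({ command: config.command, args: config.args ?? [] });
    const client = new Client({ name: 'mcp-proxy', version: '0.1.0' });
    await client.connect(transport);
    this.servers.set(name, { client, config, lastHealthCheck: Date.now() });
    console.log(`Added MCP server: ${name}`);
  }

  // Collect tools from every backend, prefixing each name with its server.
  async listTools() {
    const allTools = [];
    for (const [serverName, server] of this.servers) {
      try {
        // The SDK returns { tools, nextCursor }; pagination is ignored here.
        const { tools } = await server.client.listTools();
        allTools.push(...tools.map((tool) => ({
          ...tool,
          name: `${serverName}_${tool.name}`,
          serverName,
        })));
      } catch (error) {
        // One failing backend should not hide tools from the healthy ones.
        console.error(`Failed to list tools from ${serverName}:`, error);
      }
    }
    return allTools;
  }

  // Route a prefixed name such as "weather-api_get_weather" to its backend.
  async callTool(toolName, args) {
    // Split on the first underscore only; toolName.split('_', 2) would
    // truncate "get_weather" to "get".
    const sep = toolName.indexOf('_');
    if (sep <= 0) {
      throw new Error(`Tool ${toolName} has no server prefix`);
    }
    const serverName = toolName.slice(0, sep);
    const actualToolName = toolName.slice(sep + 1);
    const server = this.servers.get(serverName);
    if (!server) {
      throw new Error(`Server ${serverName} not found`);
    }
    try {
      return await server.client.callTool({ name: actualToolName, arguments: args });
    } catch (error) {
      console.error(`Tool call failed for ${toolName}:`, error);
      throw error;
    }
  }

  setupRoutes() {
    this.app.use(express.json());

    this.app.get('/health', (req, res) => {
      res.json({ status: 'healthy', servers: Array.from(this.servers.keys()) });
    });

    this.app.get('/tools', async (req, res) => {
      try {
        res.json({ tools: await this.listTools() });
      } catch (error) {
        res.status(500).json({ error: error.message });
      }
    });

    this.app.post('/tools/:toolName', async (req, res) => {
      try {
        res.json(await this.callTool(req.params.toolName, req.body));
      } catch (error) {
        res.status(500).json({ error: error.message });
      }
    });
  }

  start(port = 3000) {
    this.app.listen(port, () => {
      console.log(`MCP Proxy listening on port ${port}`);
    });
  }
}

module.exports = { MCPProxy };

// Example usage (the server command and script name are placeholders):
// const proxy = new MCPProxy();
// await proxy.addServer('weather-api', { command: 'node', args: ['weather-server.js'] });
// proxy.start(3000);
```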

Enterprise Implementation with TrueFoundry

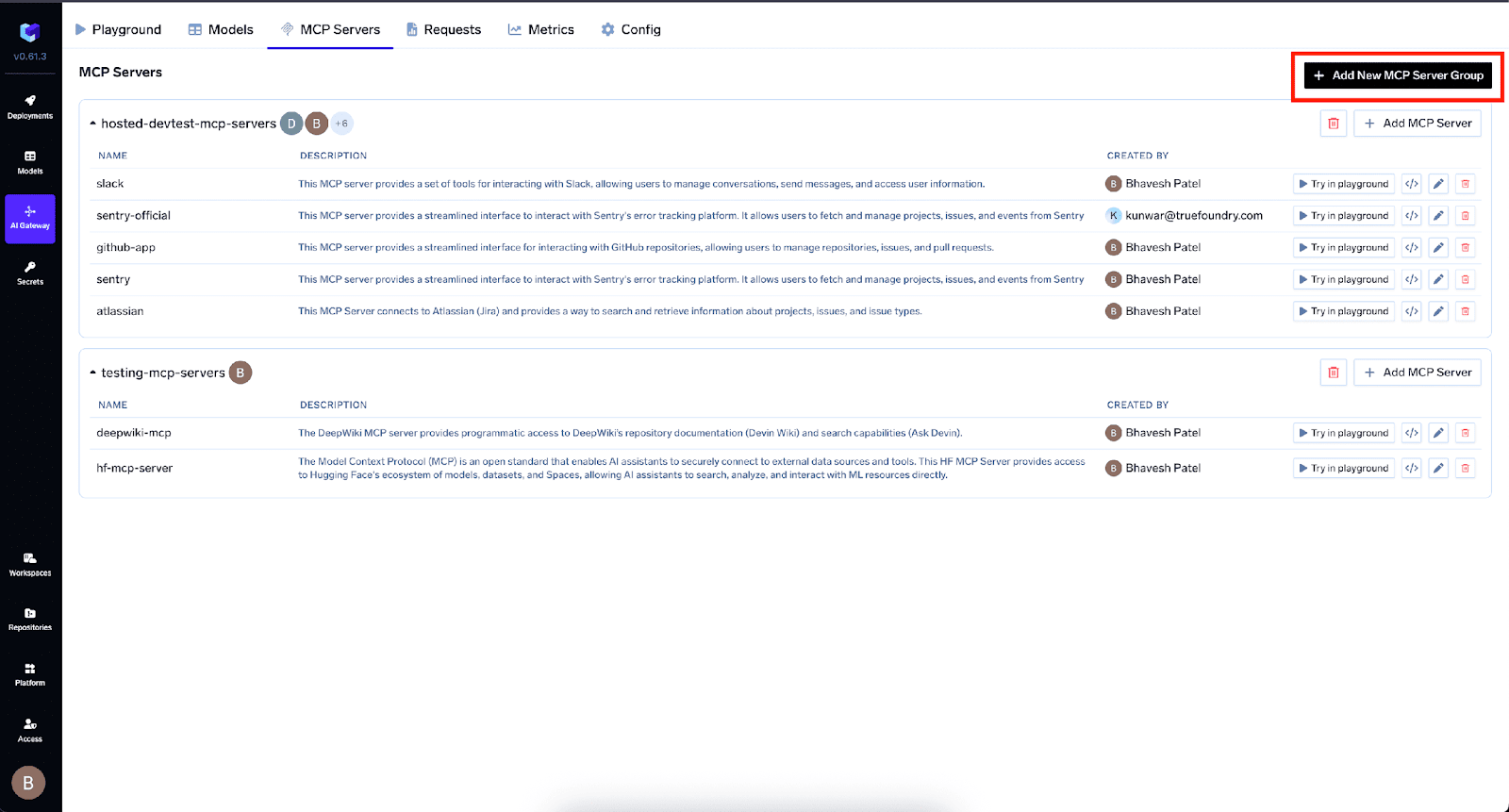

TrueFoundry's AI Gateway provides a production-ready MCP Proxy implementation that addresses the complex requirements of enterprise deployments. The platform offers a comprehensive MCP Registry and Gateway that simplifies server management while providing enterprise-grade features for security, monitoring, and scalability.

The TrueFoundry approach centers on the centralized MCP registry described earlier, which keeps an inventory of available servers and their authentication mechanisms and handles user-specific OAuth2 flows, including secure token storage and refresh, so that users can reach only the resources they are authorized for.

Key architectural components include the centralized control plane that maintains the registry of all MCP servers and handles authentication mechanisms, unified access control through Personal Access Tokens (PATs) and Virtual Account Tokens (VATs), an integrated agent playground for testing and development, and comprehensive observability with real-time monitoring and audit trails.

To get started with TrueFoundry's MCP implementation, follow the Getting Started guide which walks through creating MCP Server Groups and configuring your first servers. The platform supports both public MCP servers from the community and privately hosted servers, with sophisticated access controls determining which users and teams can access specific servers.

TrueFoundry also supports stdio-based MCP servers through its proxy conversion capabilities, allowing organizations to deploy legacy servers as modern HTTP endpoints. For enterprise integrations, pre-built servers like the Atlassian MCP Server provide seamless Jira and Confluence integration.

Production Deployment Considerations

Production MCP Proxy deployments require careful attention to high availability, security, monitoring, and operational procedures. High availability typically involves deploying multiple proxy instances behind load balancers with comprehensive health checks and automatic failover capabilities.

Scaling strategies should implement horizontal pod autoscaling based on CPU utilization, memory consumption, and request volume metrics. The scaling policies must account for both sudden traffic spikes and gradual growth patterns while maintaining performance service level agreements.

Security implementation requires network policies that restrict access between system components, comprehensive secret management for all credentials and tokens, encryption for data in transit and at rest, and regular security audits to identify potential vulnerabilities.

Monitoring integration should connect with enterprise monitoring solutions to provide comprehensive observability, including performance metrics, error tracking, security monitoring, and capacity planning data. This integration ensures that MCP Proxy operations align with existing enterprise operational procedures and standards.

Conclusion

MCP Proxy represents a fundamental evolution in enterprise AI architecture, transforming how organizations deploy, manage, and scale their Model Context Protocol implementations. As AI applications become increasingly sophisticated and integrate with complex enterprise systems, the proxy pattern provides essential governance, security, and operational capabilities that production deployments demand.

The benefits extend far beyond simple connection management. Through centralized authentication, intelligent routing, comprehensive monitoring, and enterprise-grade security controls, MCP Proxy enables organizations to build robust, scalable AI systems that meet rigorous standards for reliability and compliance. The architecture pattern addresses critical challenges around security, observability, and operational complexity that emerge as organizations move from proof-of-concept implementations to production-scale deployments.

For organizations beginning their MCP journey, direct connections provide adequate functionality for development and initial experimentation. However, as systems mature and business requirements grow, adopting MCP Proxy becomes essential for long-term success. The transition from direct connections to proxy-mediated architecture represents a natural evolution that mirrors similar patterns in web services, microservices, and cloud computing.

TrueFoundry's AI Gateway exemplifies the production-ready implementation of MCP Proxy patterns, offering enterprises a comprehensive platform for scaling AI implementations while maintaining security and governance standards. The platform's integrated approach to MCP server management, authentication, and observability makes it an optimal choice for organizations committed to enterprise-scale AI deployment.

As the Model Context Protocol ecosystem continues evolving, the proxy pattern will play an increasingly critical role in enabling sophisticated AI-powered applications. The future of enterprise AI depends not just on building intelligent models, but on creating intelligent architectures that can adapt, scale, and secure these capabilities for real-world deployment. MCP Proxy serves as a foundational component of this intelligent architecture, enabling organizations to unlock the full potential of the Model Context Protocol while meeting the demanding requirements of enterprise production environments.
