Amazon Bedrock Agents vs. The Control Plane: An Architectural Review

For DevOps engineers and architects operating within the AWS perimeter, Amazon Bedrock Agents (referred to in this report as the AgentCore runtime) is the standard path for building agentic workflows. It standardizes the recursive loops that agents require, handling the reasoning, memory, and API orchestration that developers previously hand-rolled using libraries like LangChain.

Adopting a managed agent framework, however, often necessitates a tradeoff between initial velocity and long-term architectural control. It couples application logic to a specific cloud provider's orchestration philosophy. This report analyzes the technical architecture of Amazon Bedrock Agents, evaluates the operational realities regarding observability, and contrasts it with an agnostic control plane approach using the TrueFoundry Platform.

The Anatomy of the Amazon Bedrock Agent Runtime

Bedrock Agents function as an orchestration engine designed to execute multi-step tasks. Unlike a stateless InvokeModel API call, an Agent operates as a stateful loop.

When defining an Agent in Bedrock, developers configure three distinct primitives:

- The Action Group: An OpenAPI schema defining the agent's capabilities. These typically map to AWS Lambda functions, providing the compute layer for tool execution.

- The Knowledge Base: A vector store integration (typically Amazon OpenSearch Serverless) that provides RAG capabilities for grounding the model.

- The Orchestration Template: The prompt engineering logic instructing the model how to interpret user input, select the correct Lambda, and parse the output.
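To make the relationship between the three primitives concrete, the following is a minimal sketch in plain Python. The class names (`ActionGroup`, `KnowledgeBase`, `AgentDefinition`), the ARN, and the schema contents are hypothetical illustrations and not the Bedrock API; they only model how an OpenAPI schema, a vector store, and an instruction prompt fit together.

```python
from dataclasses import dataclass, field


@dataclass
class ActionGroup:
    """Hypothetical model of an action group: a schema plus its compute target."""
    name: str
    openapi_schema: dict   # OpenAPI document describing the operations
    lambda_arn: str        # placeholder ARN of the function that executes them


@dataclass
class KnowledgeBase:
    """Hypothetical model of a vector store used for grounding (RAG)."""
    name: str
    vector_store: str


@dataclass
class AgentDefinition:
    """Hypothetical container tying the three primitives together."""
    instruction: str       # stands in for the orchestration template
    action_groups: list[ActionGroup] = field(default_factory=list)
    knowledge_bases: list[KnowledgeBase] = field(default_factory=list)

    def operation_ids(self) -> list[str]:
        """List every operation the agent could call, in schema order."""
        ids = []
        for group in self.action_groups:
            for path_item in group.openapi_schema.get("paths", {}).values():
                for operation in path_item.values():
                    ids.append(operation["operationId"])
        return ids


# Illustrative schema fragment; paths and operation names are assumptions.
inventory_schema = {
    "openapi": "3.0.0",
    "info": {"title": "Inventory API", "version": "1.0.0"},
    "paths": {
        "/inventory/{itemId}": {
            "get": {"operationId": "CheckInventory"},
        },
        "/inventory/{itemId}/update": {
            "post": {"operationId": "UpdateDatabase"},
        },
    },
}

agent = AgentDefinition(
    instruction="Answer inventory questions using the available tools.",
    action_groups=[
        ActionGroup(
            name="inventory",
            openapi_schema=inventory_schema,
            lambda_arn="arn:aws:lambda:region:account:function:placeholder",
        )
    ],
    knowledge_bases=[KnowledgeBase("product-docs", "opensearch-serverless")],
)

print(agent.operation_ids())
```

The point of the sketch is that the model only ever sees operation names and descriptions from the schema; the compute target behind each operation is a separate concern.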

The Reasoning Loop

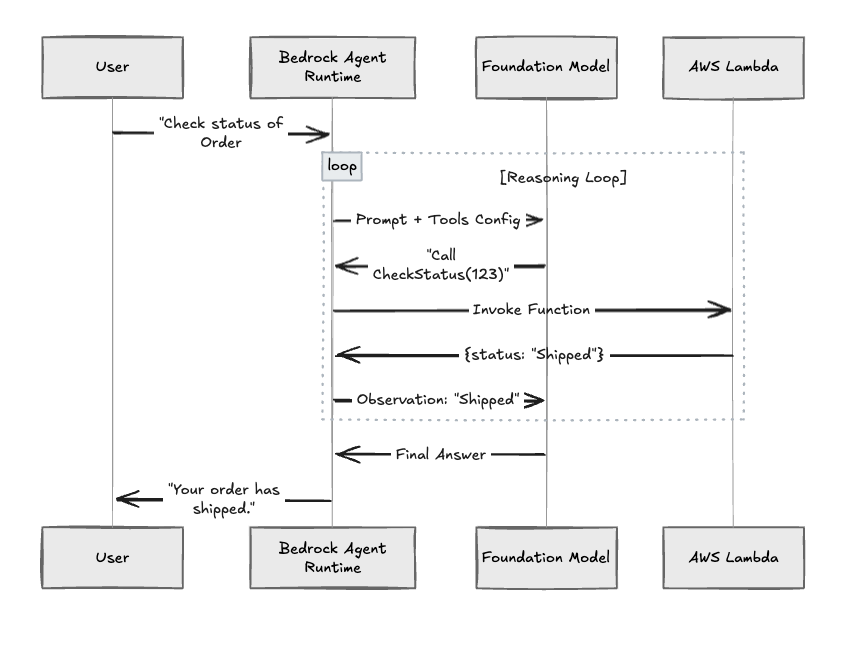

The runtime's primary utility is automating the reasoning steps. When a user asks to "Check the inventory for Item X and update the database," the runtime decomposes this request:

- Step 1: Determines the CheckInventory Lambda is required.

- Step 2: Constructs the payload.

- Step 3: Executes the Lambda and reads the response.

- Step 4: Determines UpdateDatabase is the next logical step based on the previous output.

Fig 1: The recursive orchestration loop managed by AWS Bedrock Agents.
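The four steps above can be sketched as a small loop. This is an illustration under stated assumptions, not how the managed runtime is implemented: `plan_next_step` is a hard-coded stand-in for the model's decision, and the two tool functions are stubs in place of real Lambda functions.

```python
from typing import Callable, Optional


# Stub tools standing in for the Lambda functions named in the text.
def check_inventory(item: str) -> dict:
    return {"item": item, "in_stock": 3}


def update_database(item: str, in_stock: int) -> dict:
    return {"item": item, "recorded": in_stock}


TOOLS: dict[str, Callable[..., dict]] = {
    "CheckInventory": check_inventory,
    "UpdateDatabase": update_database,
}


def plan_next_step(history: list[dict], item: str) -> Optional[tuple[str, dict]]:
    """Hard-coded stand-in for the model choosing the next tool and payload."""
    if not history:
        # Steps 1 and 2: pick the first tool and construct its payload.
        return "CheckInventory", {"item": item}
    last = history[-1]
    if last["tool"] == "CheckInventory":
        # Step 4: the previous output determines the next call.
        return "UpdateDatabase", {
            "item": item,
            "in_stock": last["result"]["in_stock"],
        }
    return None  # nothing left to do


def run_agent(item: str, max_steps: int = 5) -> list[dict]:
    """Loop until the planner stops or the step budget is exhausted."""
    history: list[dict] = []
    for _ in range(max_steps):
        step = plan_next_step(history, item)
        if step is None:
            break
        tool_name, payload = step
        result = TOOLS[tool_name](**payload)  # Step 3: execute and read the response
        history.append({"tool": tool_name, "payload": payload, "result": result})
    return history


history = run_agent("Item X")
for entry in history:
    print(entry["tool"], entry["result"])
```

The `max_steps` cap is a design choice worth keeping in any real loop: it bounds cost when a planner fails to converge.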

Operational Trade-Offs

Managed services accelerate initial deployment, but Day 2 operations—debugging, scaling, and migration—often reveal the cost of abstraction.

Observability Considerations

In a fully managed runtime, the prompt loop is abstracted. The system instructions and tool definitions are constructed by AWS and sent to the LLM behind the service boundary.

If an agent hallucinates or calls the wrong tool, debugging is harder because the service assembles the context window and the intermediate reasoning steps on the developer's behalf. Unless tracing is explicitly enabled and reviewed, teams tend to debug the final output rather than inspect the individual reasoning steps.

Architectures using an AI Gateway like TrueFoundry keep the orchestration logic transparent. The agent's "brain" runs on your infrastructure, ensuring every prompt, token, and reasoning step is visible in tracing tools like OpenTelemetry or Arize.
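As an illustration of what step-level visibility means when the loop runs in your own process, the sketch below records every model call and tool call with its inputs, output, and duration. It uses only the standard library as a stand-in for a tracing backend; the `Trace` and `StepRecord` classes, the step names, and `fake_model` are hypothetical and do not represent the TrueFoundry or OpenTelemetry APIs.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class StepRecord:
    name: str
    inputs: dict
    output: Any
    duration_ms: float


@dataclass
class Trace:
    """In-memory stand-in for a tracing backend."""
    steps: list[StepRecord] = field(default_factory=list)

    def record(self, name: str, fn: Callable[..., Any], **inputs: Any) -> Any:
        """Run fn(**inputs) and keep its inputs, output, and wall-clock time."""
        started = time.perf_counter()
        output = fn(**inputs)
        elapsed_ms = (time.perf_counter() - started) * 1000
        self.steps.append(StepRecord(name, inputs, output, elapsed_ms))
        return output


def fake_model(prompt: str) -> str:
    """Stub for an LLM call that selects a tool."""
    return "CheckInventory"


def check_inventory(item: str) -> dict:
    """Stub for a tool call."""
    return {"item": item, "in_stock": 3}


trace = Trace()
choice = trace.record(
    "llm.select_tool", fake_model, prompt="Check the inventory for Item X"
)
result = trace.record("tool.CheckInventory", check_inventory, item="Item X")

for step in trace.steps:
    print(step.name, step.inputs, step.output)
```

In a production setup the same wrapper would emit spans to a tracing system instead of appending to a list; the structure of what gets captured is the relevant part.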

The AWS-Centric Tool Chain

Bedrock Agents are optimized for the AWS ecosystem. Calling a tool natively typically implies that the tool exists as a Lambda function.

If an enterprise uses external tools—such as a Snowflake database, a Salesforce API, or a service hosted on Azure—developers often coordinate via wrapper Lambdas in AWS to bridge the gap. This can introduce additional latency and maintenance overhead.
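A wrapper Lambda of this kind might look like the sketch below. The event and response field names reflect the Lambda contract for OpenAPI-based action groups as understood at the time of writing and should be verified against current AWS documentation; `query_external_system` and the `accountId` parameter are hypothetical stand-ins for a real external API call.

```python
import json


def query_external_system(account_id: str) -> dict:
    """Hypothetical stand-in for a call to an external API such as a CRM."""
    return {"account_id": account_id, "status": "active"}


def lambda_handler(event: dict, context: object) -> dict:
    # The agent passes parameters as a list of name/value entries;
    # flatten them into a plain dict for convenience.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}

    record = query_external_system(params["accountId"])

    # Echo the routing fields back and return the result as a JSON string body.
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "apiPath": event["apiPath"],
            "httpMethod": event["httpMethod"],
            "httpStatusCode": 200,
            "responseBody": {
                "application/json": {"body": json.dumps(record)},
            },
        },
    }


# Local invocation with a hand-built event, for illustration only.
sample_event = {
    "actionGroup": "crm",
    "apiPath": "/accounts/{accountId}",
    "httpMethod": "GET",
    "parameters": [{"name": "accountId", "type": "string", "value": "A-42"}],
}
response = lambda_handler(sample_event, None)
print(response["response"]["responseBody"]["application/json"]["body"])
```

Every external system added this way brings one more function to deploy, secure, and version, which is the maintenance overhead described above.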

The industry is currently coalescing around the Model Context Protocol (MCP), an open standard allowing agents to connect to data sources universally. TrueFoundry is designed to be MCP-native, acting as a neutral hub where an agent can connect to a Google Drive MCP server, a local Postgres database, and an AWS Lambda function simultaneously, without custom infrastructure wrappers.

TrueFoundry: The Control Plane Architecture

TrueFoundry proposes a Control Plane architecture. Instead of bundling the model, runtime, and tools into a single vertical cloud service, this approach decouples them.

Here, the Cloud Provider (AWS, Azure, GCP) functions as the scalable backend for compute and models, while the TrueFoundry Gateway remains the governable interface for applications.

Routing and Economic Efficiency

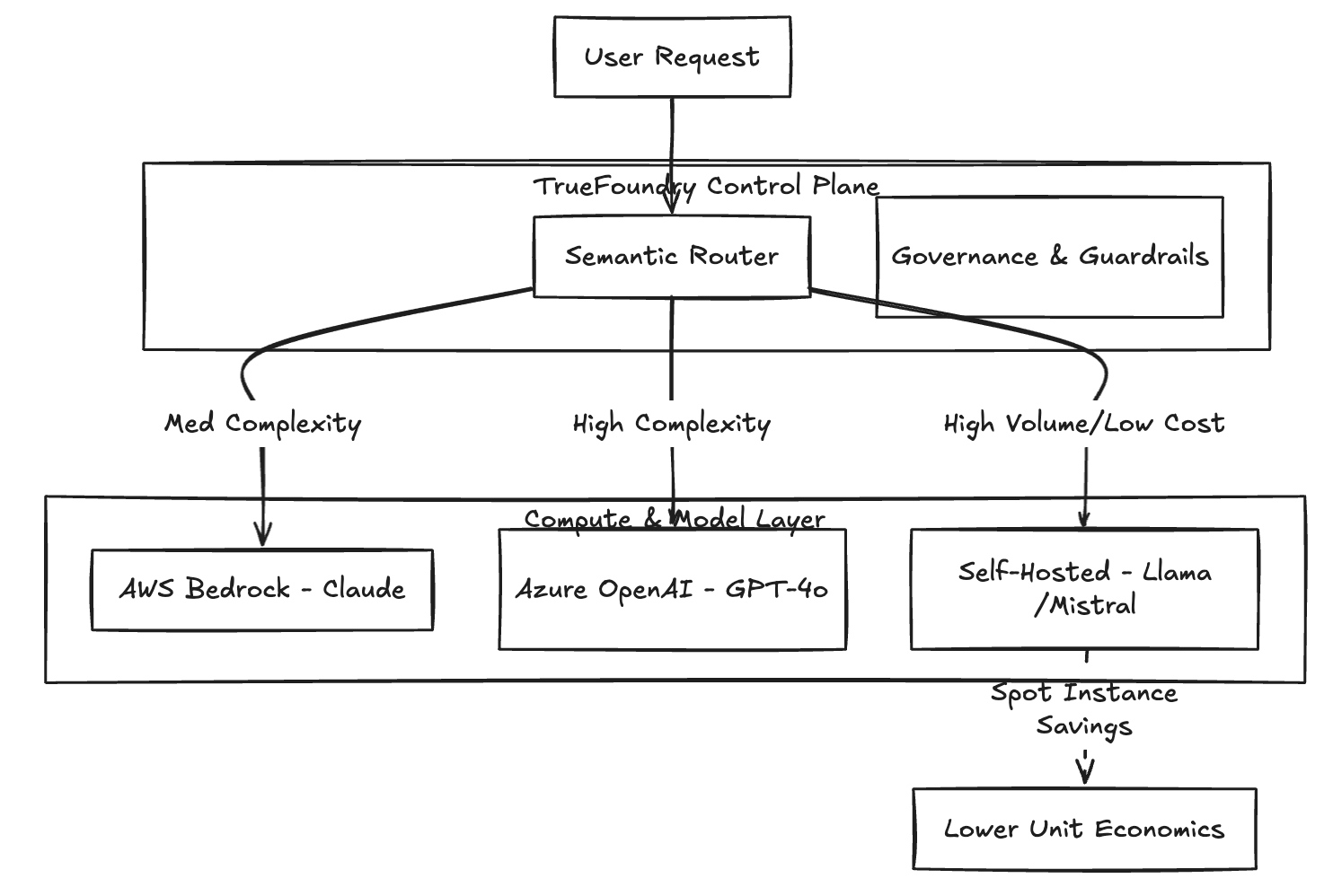

A defining characteristic of Bedrock Agents is the architectural binding to Bedrock models (Titan, Claude, Llama on Bedrock). Complex agents perform many steps, and using a high-end model like Claude 3.5 Sonnet for every step of a recursive loop can drive up costs.

TrueFoundry facilitates semantic routing. The Gateway analyzes the complexity of a step; if the agent merely needs to extract a date from a string, the request routes to a more cost-effective model (like Meta Llama) hosted on AWS Spot Instances. If the step requires complex reasoning, it routes to GPT-4o or Claude 3 Opus.

Fig 2: TrueFoundry’s routing logic optimizes unit economics by matching task complexity to the most cost-effective provider.
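The routing idea can be sketched as follows. This is not TrueFoundry's implementation: a simple keyword-and-length heuristic stands in for the semantic classifier, and the model names, hint words, and word threshold are placeholder assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str
    reason: str


# Placeholder values; a real gateway would load these from configuration.
SIMPLE_HINTS = ("extract", "format", "classify", "parse")
CHEAP_MODEL = "small-open-model"
STRONG_MODEL = "frontier-model"


def route_step(task: str, max_simple_words: int = 20) -> Route:
    """Send short, extraction-style steps to the cheaper model."""
    words = task.lower().split()
    looks_simple = len(words) <= max_simple_words and any(
        word.startswith(hint) for word in words for hint in SIMPLE_HINTS
    )
    if looks_simple:
        return Route(CHEAP_MODEL, "short extraction-style step")
    return Route(STRONG_MODEL, "multi-step or open-ended reasoning")


simple = route_step("Extract the date from this string")
hard = route_step("Plan a migration of the billing system and justify each trade-off")
print(simple.model, "|", hard.model)
```

Because an agent loop issues many calls per user request, even a coarse classifier like this changes the cost profile: only the steps that need a strong model pay for one.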

Feature Comparison: Managed Service vs. Control Plane

This table contrasts the capabilities of the AWS managed service against the TrueFoundry control plane.

The Hybrid Infrastructure Argument

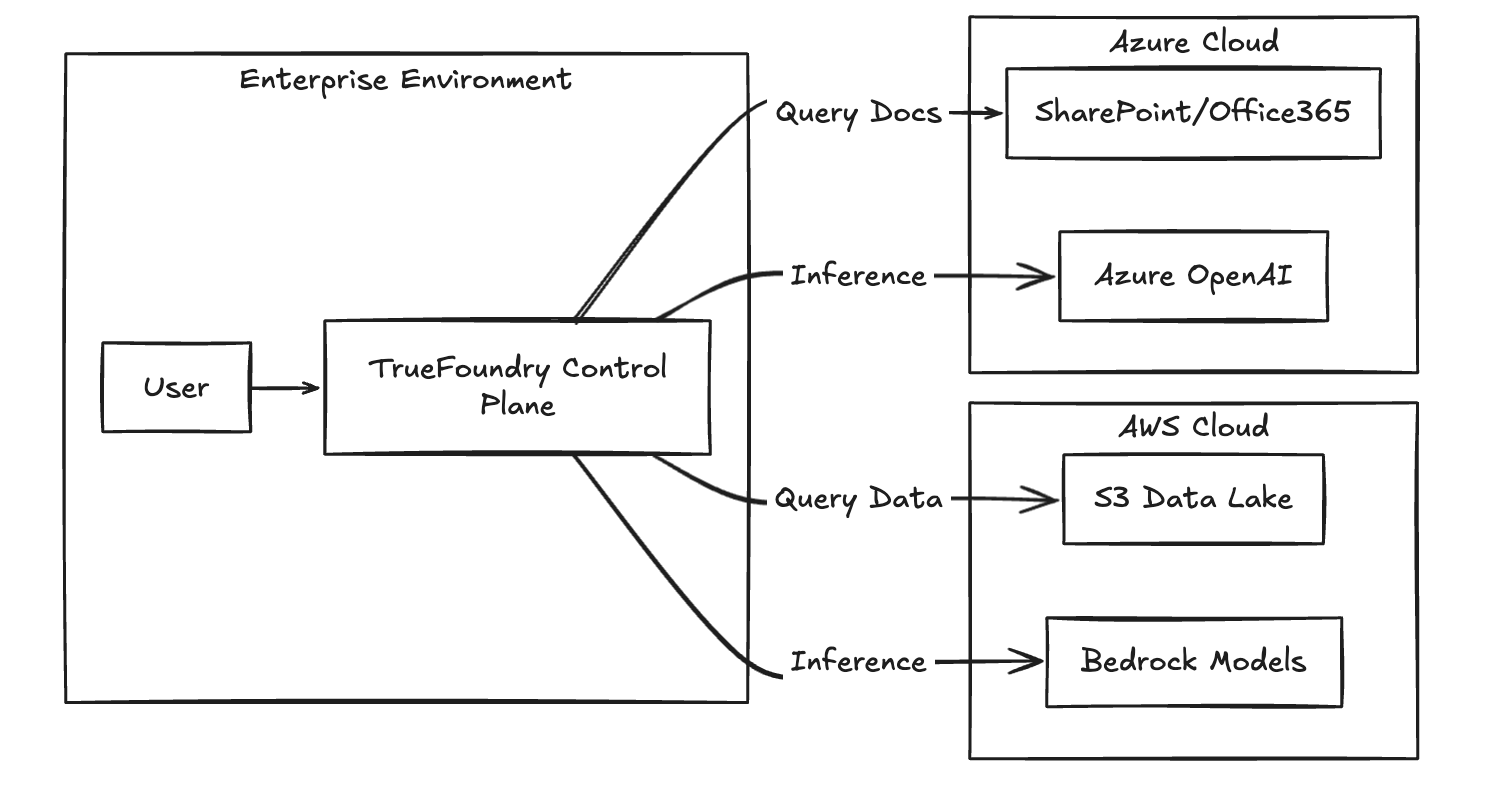

For many enterprises, the future is not "All-in on AWS" or "All-in on Azure," but a hybrid state dictated by data gravity and cost.

AgentCore excels when the entire lifecycle of the data—from ingestion to inference—lives inside AWS. However, as agentic workflows scale, they often require access to data in Microsoft SharePoint, Customer Data Platforms on Google Cloud, or on-premise warehouses.

TrueFoundry facilitates a cross-cloud routing pattern. The agent's logic resides in the control plane, allowing it to reach out to tools across different clouds without traversing complex VPNs or manually configuring API Gateways. This future-proofs the stack; if Azure OpenAI Service releases a new model that outperforms Claude, or if Llama 3 becomes viable for a specific use case, switching the underlying engine is a configuration change rather than a code rewrite.

Fig 3: The TrueFoundry routing architecture enabling cross-cloud data access.
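The claim that switching engines becomes a configuration change can be illustrated with a small sketch. The alias names, provider labels, and model names below are hypothetical and do not reflect a real TrueFoundry configuration format; the point is only that application code addresses a stable alias while the mapping behind it changes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTarget:
    provider: str
    model: str


# Hypothetical routing table: application code only ever sees the alias.
CONFIG = {
    "agent.reasoning": ModelTarget("aws-bedrock", "claude"),
    "agent.extraction": ModelTarget("self-hosted", "llama-3"),
}


def build_request(alias: str, prompt: str, config: dict[str, ModelTarget]) -> dict:
    """Resolve the alias at call time so callers never hard-code a provider."""
    target = config[alias]
    return {"provider": target.provider, "model": target.model, "prompt": prompt}


before = build_request("agent.reasoning", "Summarize the ticket", CONFIG)

# Swapping the engine is an edit to the table, not to the calling code.
updated = {**CONFIG, "agent.reasoning": ModelTarget("azure-openai", "new-model")}
after = build_request("agent.reasoning", "Summarize the ticket", updated)

print(before["provider"], "->", after["provider"])
```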

Summary Recommendation

The choice between the AWS managed service and the TrueFoundry control plane is effectively a choice between integration speed and architectural optionality.

- Standardize on AWS Bedrock Agents if: Your engineering team is small, application logic is heavily composed of AWS Lambda functions, and there is no requirement to use models outside the Bedrock portfolio.

- Choose TrueFoundry if: You are building a platform that must serve multiple internal teams with different needs. You require centralized governance to manage budgets and security policies across AWS and Azure, or you intend to leverage open-source models on Spot instances to control the unit economics of high-volume agent workloads.

