8 Best Databricks Mosaic AI Alternatives for AI Development in 2026

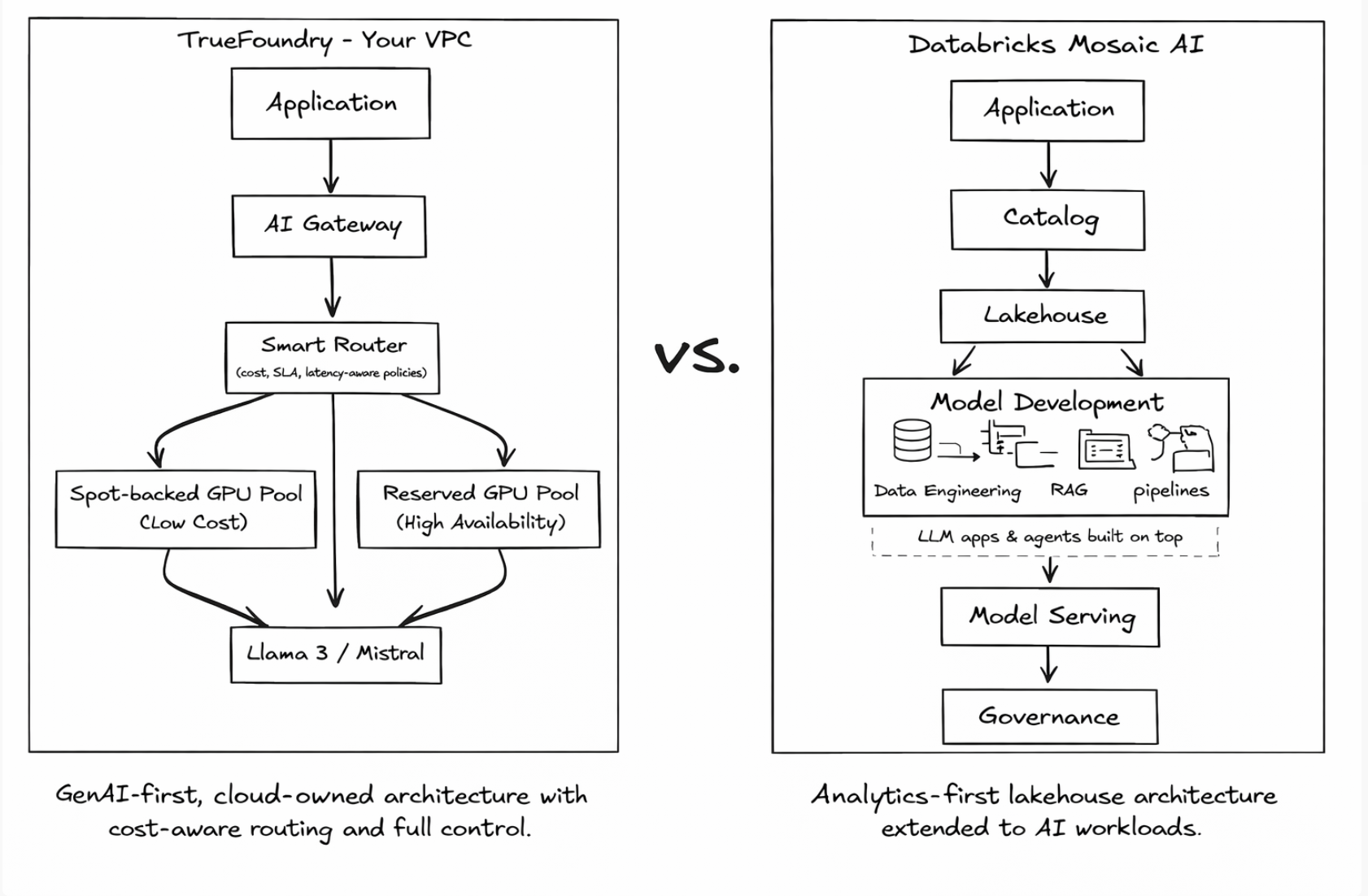

Databricks Mosaic AI is a comprehensive platform that brings together data engineering, model training, agent frameworks, and governance under the Databricks Lakehouse. For teams already deeply invested in Spark, Delta Lake, and Databricks workflows, Mosaic AI offers a unified way to move from data to production AI. However, Mosaic AI was fundamentally designed around a Spark-first analytics architecture, not the GenAI-first reality teams face in 2026.

As organizations build LLM-powered applications, large-scale RAG pipelines, and autonomous agents, many teams begin to encounter friction with Databricks Mosaic AI. Common challenges include heavy coupling to Spark-based workflows, a steep learning curve for non–data engineers, opaque consumption-based pricing, and limited flexibility when deploying GenAI systems across clouds or custom infrastructure.

As a result, developers and platform teams are increasingly exploring Databricks Mosaic AI alternatives that are:

- Cloud-agnostic rather than lakehouse-locked

- Purpose-built for LLMs, agents, and prompts

- Easier to operate and scale without Spark expertise

- More transparent and predictable in cost

In this guide, we rank the top Databricks Mosaic AI competitors for 2026, focusing on platforms that better align with modern GenAI development. We’ll also explain why TrueFoundry is emerging as the best overall alternative for teams moving beyond Databricks’ analytics-first constraints.

How Did We Evaluate Databricks Mosaic AI Alternatives?

Not every Databricks alternative is a true Mosaic AI replacement. Some platforms focus on training, others on inference, and only a few are designed for end-to-end GenAI development. To ensure a fair and practical comparison, we evaluated each platform using the following criteria.

1. Cloud Flexibility & Portability

We assessed whether platforms support:

- Multi-cloud deployment (AWS, GCP, Azure)

- Running in your own VPC or private infrastructure

- Portability of code and models without vendor lock-in

Platforms tightly coupled to a single cloud or proprietary runtime scored lower, especially for teams prioritizing long-term flexibility.

2. GenAI-Native Capabilities

We looked for platforms that are purpose-built for GenAI, not retrofitted analytics tools. This includes native support for:

- LLM inference and fine-tuning

- RAG pipelines and vector workflows

- Agents, tools, and MCP-style execution

- Prompt lifecycle management

We also evaluated ecosystem compatibility with frameworks like LangChain, LlamaIndex, and Hugging Face.

3. Cost Transparency & Predictability

Databricks’ consumption-based pricing can make it difficult to forecast costs at scale. For alternatives, we evaluated:

- Clarity of pricing models (per-instance vs opaque consumption)

- Ability to estimate costs upfront

- Support for infrastructure optimization (autoscaling, spot instances)

Platforms with predictable and optimizable cost structures ranked higher.
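To make the per-instance criterion concrete, here is a minimal sketch of how a team might estimate monthly spend upfront when pricing is per instance and the platform supports autoscaling and spot capacity. The hourly rate, replica counts, spot share, and discount are illustrative assumptions, not figures from any vendor.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average number of hours in a month


@dataclass
class InstancePlan:
    """Hypothetical pricing inputs; none of these values come from a real price list."""
    on_demand_hourly: float  # USD per instance-hour at on-demand rates
    avg_replicas: float      # average replica count after autoscaling
    spot_fraction: float     # share of instance-hours served by spot capacity (0 to 1)
    spot_discount: float     # fractional discount of spot vs on-demand (0 to 1)


def estimate_monthly_cost(plan: InstancePlan) -> float:
    """Estimate monthly spend in USD for a per-instance pricing model."""
    if not 0 <= plan.spot_fraction <= 1 or not 0 <= plan.spot_discount <= 1:
        raise ValueError("spot_fraction and spot_discount must be between 0 and 1")
    instance_hours = plan.avg_replicas * HOURS_PER_MONTH
    on_demand_hours = instance_hours * (1 - plan.spot_fraction)
    spot_hours = instance_hours * plan.spot_fraction
    spot_hourly = plan.on_demand_hourly * (1 - plan.spot_discount)
    total = on_demand_hours * plan.on_demand_hourly + spot_hours * spot_hourly
    return round(total, 2)


# Fixed fleet of 4 on-demand instances vs an autoscaled fleet with 60% spot usage.
baseline = InstancePlan(on_demand_hourly=1.00, avg_replicas=4, spot_fraction=0.0, spot_discount=0.0)
optimized = InstancePlan(on_demand_hourly=1.00, avg_replicas=2.5, spot_fraction=0.6, spot_discount=0.5)

print(estimate_monthly_cost(baseline))
print(estimate_monthly_cost(optimized))
```

Because every input is something a team can observe or set directly, a forecast like this can be written down before the workload ships, which is the property this criterion rewards.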

4. Developer Experience & Learning Curve

Finally, we examined how quickly teams can move from code to production. Key questions included:

- Can non–data engineers use the platform effectively?

- Is Spark or deep data engineering expertise required?

- How much operational overhead is involved?

Platforms that enable fast iteration using familiar GenAI frameworks scored highest.
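For teams that want to run a similar evaluation internally, the four criteria can be combined into a single weighted score. The sketch below is one possible approach, not the method used for this ranking: the weights, the 0 to 5 rating scale, and the two placeholder platforms are all assumptions chosen for illustration.

```python
# Hypothetical weights for the four criteria; adjust them to your own priorities.
WEIGHTS = {
    "cloud_flexibility": 0.25,
    "genai_native": 0.30,
    "cost_transparency": 0.25,
    "developer_experience": 0.20,
}


def weighted_score(ratings: dict[str, float]) -> float:
    """Combine 0-5 ratings per criterion into one weighted score on the same scale."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0 <= ratings[name] <= 5:
            raise ValueError(f"rating for {name} must be between 0 and 5")
    return round(sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS), 2)


# Placeholder ratings for two made-up platforms, for illustration only.
candidates = {
    "platform_a": {"cloud_flexibility": 5, "genai_native": 4,
                   "cost_transparency": 4, "developer_experience": 4},
    "platform_b": {"cloud_flexibility": 2, "genai_native": 3,
                   "cost_transparency": 2, "developer_experience": 3},
}

# Rank candidates from highest to lowest weighted score.
ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranked:
    print(name, weighted_score(candidates[name]))
```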

Top 8 Databricks Mosaic AI Alternatives for 2026

Before diving into each platform, here’s a quick comparison table to help you understand how the leading Databricks Mosaic AI alternatives differ in focus, pricing style, and core capabilities.

TrueFoundry (The Best Overall Alternative)

TrueFoundry is a cloud-agnostic, GenAI-native platform designed for teams building modern AI systems beyond Spark-centric analytics. Unlike Databricks Mosaic AI, which extends a lakehouse model into AI workflows, TrueFoundry is purpose-built for LLMs, agents, and production inference from day one.

TrueFoundry enables teams to deploy, operate, and scale AI workloads in their own cloud or VPC while retaining a PaaS-like developer experience. It supports the full AI lifecycle (training, fine-tuning, deployment, inference, observability, and governance) without forcing teams into Spark-based abstractions. This makes it especially attractive for engineering-led teams building GenAI products rather than analytics-first platforms.

Key Features

- Deploy AI Workloads in Your Own Cloud or VPC on Kubernetes: Run models on AWS, GCP, or Azure with full network isolation, compliance, and infrastructure ownership.

- AI Gateway: Centralize access to multiple LLM providers and self-hosted models with routing, rate limits, budgets, and observability built in.

- MCP & Agents Registry: Manage agent execution, tools, and MCP servers centrally, enabling safe and scalable agentic workflows.

- Prompt Lifecycle Management: Version, test, and roll out prompts systematically, treating prompts as first-class production assets.

- Built-in Observability and Cost Controls: Track tokens, latency, errors, and spend at request-level granularity, with FinOps-style guardrails.

- GenAI-First Developer Experience: Works seamlessly with frameworks like LangChain, LlamaIndex, and Hugging Face, with no Spark expertise required.
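To illustrate the gateway pattern in general terms, here is a minimal in-memory sketch of fallback routing, per-team budgets, and request-level logging. It is not TrueFoundry's API or implementation: the `Gateway` and `Provider` classes, the prices, and the whitespace-based token count are all hypothetical, and rate limiting is left out for brevity.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


class BudgetExceeded(Exception):
    """Raised when a team has no remaining budget."""


@dataclass
class Provider:
    name: str
    cost_per_1k_tokens: float   # hypothetical price in USD
    call: Callable[[str], str]  # sends the prompt to a model and returns its reply


@dataclass
class Gateway:
    providers: list[Provider]   # tried in order; later entries act as fallbacks
    budgets: dict[str, float]   # remaining USD budget per team
    log: list[dict] = field(default_factory=list)

    def complete(self, team: str, prompt: str) -> str:
        if self.budgets.get(team, 0.0) <= 0:
            raise BudgetExceeded(team)
        last_error = None
        for provider in self.providers:
            start = time.perf_counter()
            try:
                reply = provider.call(prompt)
            except Exception as exc:  # provider failed: record it and try the next one
                self.log.append({"team": team, "provider": provider.name, "error": repr(exc)})
                last_error = exc
                continue
            tokens = len(prompt.split()) + len(reply.split())  # crude stand-in for a tokenizer
            cost = tokens / 1000 * provider.cost_per_1k_tokens
            self.budgets[team] -= cost
            self.log.append({"team": team, "provider": provider.name, "tokens": tokens,
                             "cost": cost, "latency_s": time.perf_counter() - start})
            return reply
        raise RuntimeError("all providers failed") from last_error


def flaky(prompt: str) -> str:
    """Simulates an unavailable primary provider."""
    raise TimeoutError("primary unavailable")


def echo(prompt: str) -> str:
    """Simulates a working fallback model."""
    return "echo: " + prompt


gateway = Gateway(
    providers=[Provider("primary", 0.50, flaky), Provider("fallback", 0.10, echo)],
    budgets={"search-team": 1.00},
)
reply = gateway.complete("search-team", "hello world")
print(reply)
print(gateway.log)
```

Putting budget checks and logging in one place is what lets a platform team enforce spend limits without touching application code. A production gateway would also need real tokenization, concurrency safety, and persistent storage.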

Why TrueFoundry Is a Better Choice Than Databricks Mosaic AI

- Designed for GenAI-first workflows, not Spark-first analytics

- Cloud-agnostic and VPC-native, avoiding platform lock-in

- Lower learning curve for application and platform engineers

- Predictable, optimizable costs using autoscaling and spot instances

- Native support for agents, MCP, and modern RAG pipelines

Pricing Plans

TrueFoundry offers transparent, usage-based pricing, aligned with how teams consume infrastructure:

- Free Tier for experimentation

- Growth Tier for production GenAI workloads

- Enterprise Tier with advanced security, governance, and support

Since workloads run in your own cloud, infrastructure costs remain visible and controllable, unlike opaque consumption models.

What Customers Say About TrueFoundry

TrueFoundry is highly rated on G2 and Capterra, with customers highlighting:

- Ease of deploying GenAI in private cloud environments

- Strong cost visibility and operational control

- Faster path from prototype to production compared to analytics-heavy platforms

If you’re building LLM apps or agents and feel constrained by Spark-first platforms, you can sign up for free or book a demo with TrueFoundry to see how it compares in real-world GenAI deployments.

AWS SageMaker

AWS SageMaker is Amazon’s flagship machine learning platform, designed for training, deploying, and managing models at scale. It is a natural choice for teams that are already deeply embedded in the AWS ecosystem and want tight integration with services like S3, IAM, and CloudWatch.

While powerful, SageMaker is primarily an MLOps platform, and adapting it for modern GenAI workflows often requires significant configuration and AWS-specific expertise.

Key Features

- Managed training jobs and pipelines

- Hosted inference endpoints

- Integrated experiment tracking and model registry

- Native AWS security and IAM integration

Pricing Plans

- Usage-based pricing for training and inference

- Separate charges for compute, storage, and endpoints

- Costs vary by instance type and runtime

Pros

- Deep integration with AWS services

- Highly scalable and enterprise-ready

- Strong support for traditional ML workflows

Cons

- Steep learning curve for non-ML specialists

- AWS lock-in

- GenAI workflows require additional tooling and setup

- Pricing complexity at scale

How TrueFoundry Is Better Than AWS SageMaker

TrueFoundry offers a simpler, GenAI-native experience that works across AWS, GCP, and Azure. It removes much of the operational complexity associated with SageMaker while adding native support for agents, prompts, and modern LLM workflows.

Google Vertex AI

Google Vertex AI is Google Cloud’s unified platform for building and deploying machine learning and GenAI models. It provides access to Google’s Gemini models alongside managed training, pipelines, and endpoints.

Vertex AI is a strong option for GCP-first teams, but its managed-service approach can feel heavyweight and restrictive for teams seeking portability.

Key Features

- Managed training and inference pipelines

- Access to Gemini and third-party models

- Integrated MLOps and experiment tracking

- GCP-native security and IAM

Pricing Plans

- Usage-based pricing across services

- Separate charges for training, inference, and pipelines

- Premium pricing for managed features

Pros

- Comprehensive AI tooling

- Strong performance and scalability

- Tight integration with GCP ecosystem

Cons

- Locked into Google Cloud

- Complex pricing structure

- Less flexibility for custom infrastructure optimization

How TrueFoundry Is Better Than Google Vertex AI

TrueFoundry provides cloud-agnostic deployment and cost optimization while focusing specifically on GenAI and agent-based systems. Teams avoid hyperscaler lock-in and gain more control over infrastructure and long-term costs.

Azure Machine Learning

Azure Machine Learning is Microsoft’s end-to-end ML platform for training, deploying, and managing models on Azure. It is commonly adopted by enterprises already standardized on Azure and Microsoft tooling.

While robust for traditional ML, Azure ML often feels heavyweight and MLOps-centric when used for fast-moving GenAI and agent-based development.

Key Features

- Managed training and inference endpoints

- Model registry and experiment tracking

- Azure-native security, IAM, and compliance

- Integration with Azure services and DevOps

Pricing Plans

- Usage-based pricing for compute and storage

- Costs vary by VM/GPU type and runtime

- Additional charges for managed endpoints and pipelines

Pros

- Enterprise-grade security and compliance

- Deep integration with Azure ecosystem

- Scales well for large organizations

Cons

- Azure lock-in

- Steep learning curve for app developers

- GenAI workflows require extra configuration

- Slower iteration compared to GenAI-native platforms

How TrueFoundry Is Better Than Azure Machine Learning

TrueFoundry offers a lighter, GenAI-first developer experience without tying teams to a single cloud. It enables faster iteration on LLM apps and agents while maintaining enterprise-grade governance and cost control across AWS, GCP, and Azure.

Snowflake (Snowpark + Cortex AI)

Snowflake has expanded into AI through Snowpark and Cortex AI, enabling teams to build ML and GenAI workflows directly where their data lives. This is appealing for analytics-heavy teams that want to keep everything inside the Snowflake ecosystem.

However, Snowflake’s AI capabilities remain data-warehouse–centric, which can limit flexibility for application-centric GenAI systems.

Key Features

- SQL- and Python-based ML with Snowpark

- Cortex AI for LLM-powered data workflows

- Tight integration with Snowflake data

- Consumption-based execution model

Pricing Plans

- Consumption-based pricing (credits)

- Costs tied to compute usage and queries

- Difficult to forecast at scale

Pros

- Strong data integration

- Familiar workflows for analytics teams

- Minimal data movement

Cons

- Not designed for full AI lifecycle

- Limited support for agents and complex orchestration

- Opaque, consumption-based pricing

- Strong platform lock-in

How TrueFoundry Is Better Than Snowflake (Snowpark + Cortex AI)

TrueFoundry is built for application-level GenAI, not analytics-first workflows. It supports agents, RAG pipelines, and production inference outside the data warehouse while still integrating cleanly with Snowflake as a data source.

Cake.ai

Cake.ai is a simplified AI/ML platform focused on helping teams deploy and manage models without deep infrastructure expertise. It aims to abstract away operational complexity and provide a more guided experience for ML workflows.

While approachable, Cake.ai is generally better suited for simpler ML use cases rather than complex, large-scale GenAI systems.

Key Features

- Managed model training and deployment

- Simplified MLOps workflows

- Built-in monitoring

- Opinionated platform abstractions

Pricing Plans

- Subscription-based pricing

- Tiered plans based on usage and features

Pros

- Easy to get started

- Reduced operational overhead

- Suitable for small to mid-sized teams

Cons

- Limited flexibility for advanced GenAI workflows

- Less control over infrastructure

- Not optimized for agents or large RAG pipelines

How TrueFoundry Is Better Than Cake.ai

TrueFoundry offers far greater flexibility and scalability, supporting complex GenAI systems, agents, and custom infrastructure while still providing a strong developer experience.

ClearML

ClearML is an open-core MLOps platform focused on experiment tracking, orchestration, and model management. It is popular among teams that want visibility into ML experiments and pipelines without committing to a fully managed SaaS.

ClearML is strong for tracking and orchestration, but it is not GenAI-first by design.

Key Features

- Experiment tracking and visualization

- Pipeline orchestration

- Model registry

- Open-source core with hosted option

Pricing Plans

- Free open-source tier

- Paid hosted and enterprise plans

Pros

- Flexible, open-core approach

- Strong experiment tracking

- Self-hosting option available

Cons

- Limited native support for LLMs and agents

- Requires additional tooling for production GenAI

- More MLOps-focused than app-centric

How TrueFoundry Is Better Than ClearML

TrueFoundry is LLM- and agent-native, offering built-in support for prompts, RAG, inference, and cost controls—capabilities that ClearML does not provide out of the box.

Domino Data Lab

Domino Data Lab is an enterprise ML platform designed for collaboration, governance, and model lifecycle management in regulated industries. It is commonly used by large organizations with strict compliance requirements.

Domino excels at governance but can feel slow and heavyweight for fast-moving GenAI development teams.

Key Features

- Enterprise model governance

- Collaboration and reproducibility

- Audit trails and compliance controls

- Centralized model management

Pricing Plans

- Enterprise-only pricing

- Custom contracts based on scale and support

Pros

- Strong governance and compliance

- Well-suited for regulated environments

- Mature enterprise features

Cons

- High cost and long sales cycles

- Less flexible for rapid GenAI iteration

- Limited GenAI-native tooling

How TrueFoundry Is Better Than Domino Data Lab

TrueFoundry balances enterprise-grade governance with GenAI-native speed. It enables rapid development of LLM apps and agents while still providing the controls enterprises need, without the overhead of a heavyweight analytics-first platform.

Decision Framework: How To Choose a Databricks Mosaic AI Alternative

Choosing the right Databricks Mosaic AI alternative depends less on feature checklists and more on how your team actually builds and operates AI systems. The scenarios below can help guide that decision.

When to Stay with Databricks Mosaic AI

Staying with Databricks Mosaic AI makes sense if:

- Your organization is deeply invested in Spark, Delta Lake, and Databricks workflows

- Most AI workloads are tightly coupled to large-scale analytics and ETL pipelines

- Your team is primarily composed of data engineers and data scientists

- You value a single lakehouse-centric platform over flexibility

- Cost predictability is secondary to platform consolidation

For Spark-heavy analytics teams, Mosaic AI remains a strong, integrated choice.

When to Choose TrueFoundry

TrueFoundry is the best fit if:

- You are building LLM applications, RAG pipelines, or AI agents as core products

- You want to deploy AI workloads in your own cloud or VPC across AWS, GCP, or Azure

- You need GenAI-native primitives like AI gateways, agents, MCP, and prompt lifecycle management

- Your engineering teams prefer framework-native development (LangChain, LlamaIndex, Hugging Face) over Spark abstractions

- You want transparent, optimizable costs using autoscaling and spot instances

- You need to move fast without sacrificing governance, observability, or security

TrueFoundry is designed for teams moving beyond analytics-first platforms and treating AI as application infrastructure, not just data workloads.

When to Choose Other Alternatives

Other Databricks Mosaic AI competitors may be a better fit if:

- You are fully committed to a single cloud provider (SageMaker, Vertex AI, Azure ML)

- You need a data-warehouse–centric AI approach (Snowflake Cortex)

- Your primary focus is experiment tracking or traditional MLOps (ClearML, Domino Data Lab)

- You want low-level infrastructure control and are comfortable building your own platform layer (Runpod-style setups)

These platforms can work well in specific contexts but often require trade-offs in flexibility, GenAI-native capabilities, or operational simplicity.
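The three scenarios above can be condensed into a simple rule-based helper. This is a deliberately simplified sketch: the `TeamProfile` fields and the order of the rules are assumptions made for illustration, and a real decision would weigh more factors than six booleans.

```python
from dataclasses import dataclass


@dataclass
class TeamProfile:
    """Hypothetical summary of a team's situation; every field defaults to False."""
    spark_centric: bool = False           # workloads tightly coupled to Spark, Delta Lake, ETL
    builds_genai_apps: bool = False       # LLM apps, RAG pipelines, or agents are core products
    single_cloud_committed: bool = False  # fully committed to one cloud provider
    warehouse_centric: bool = False       # wants AI to run inside the data warehouse
    mlops_tracking_focus: bool = False    # main need is experiment tracking or traditional MLOps


def recommend(profile: TeamProfile) -> str:
    """Map a team profile to the category of platform suggested by the scenarios above."""
    if profile.spark_centric and not profile.builds_genai_apps:
        return "Databricks Mosaic AI"
    if profile.builds_genai_apps and not profile.single_cloud_committed:
        return "TrueFoundry"
    if profile.single_cloud_committed:
        return "SageMaker, Vertex AI, or Azure ML"
    if profile.warehouse_centric:
        return "Snowflake Cortex"
    if profile.mlops_tracking_focus:
        return "ClearML or Domino Data Lab"
    return "No clear match: compare platforms against the evaluation criteria"


print(recommend(TeamProfile(spark_centric=True)))
print(recommend(TeamProfile(builds_genai_apps=True)))
print(recommend(TeamProfile(warehouse_centric=True)))
```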

Databricks Mosaic AI works best for analytics-driven AI teams. As AI systems evolve toward application-centric, agent-based architectures, many teams find greater leverage in platforms built specifically for GenAI.

For organizations looking to escape Spark lock-in while gaining cloud flexibility, modern GenAI tooling, and predictable costs, TrueFoundry is the most balanced and future-ready alternative.

Ready to Break Free from Databricks Lock-in?

Databricks Mosaic AI is a strong platform for Spark-based analytics and data-heavy machine learning workflows. For teams whose AI strategy is tightly coupled to large-scale ETL and lakehouse architectures, it continues to deliver value.

However, modern GenAI development (LLM applications, RAG pipelines, and autonomous agents) introduces new requirements that analytics-first platforms were not designed for. Engineering teams today need cloud flexibility, framework freedom, transparent pricing, and GenAI-native tooling to move fast and scale responsibly.

This is where TrueFoundry stands apart. By enabling teams to build and operate AI systems in their own cloud or VPC, TrueFoundry removes platform lock-in while delivering the primitives required for production GenAI: AI gateways, agents, MCP, prompt lifecycle management, and deep observability.

👉 If Databricks Mosaic AI feels heavyweight or restrictive for your GenAI roadmap, book a demo with TrueFoundry to see how teams are building faster, more flexible AI platforms in 2026.

Frequently Asked Questions

What is the difference between Snowflake Cortex and Databricks Mosaic AI?

Snowflake Cortex is designed to bring AI capabilities directly into the data warehouse, enabling SQL- and Python-based AI workflows close to data. Databricks Mosaic AI, on the other hand, extends the lakehouse model to cover model training, deployment, and governance. Both are data-platform–centric, whereas GenAI-native platforms like TrueFoundry focus on application-level AI systems, agents, and inference outside the data warehouse.

What are the use cases of Databricks Mosaic AI?

Databricks Mosaic AI is well-suited for use cases such as large-scale feature engineering, model training on structured and unstructured data, and ML workflows tightly integrated with Spark-based analytics. It is commonly used by data science teams building predictive models and analytics-driven AI rather than application-centric GenAI systems.

What is the best AI agent builder platform?

The best AI agent builder platform depends on your deployment and governance needs. For teams building production agents that require cloud flexibility, secure tool execution, cost control, and observability, TrueFoundry stands out as a leading choice. It provides agent registries, MCP support, and centralized governance—capabilities that go beyond experimental agent frameworks.
