Top 9 Cloudflare AI Alternatives and Competitors For 2026

Cloudflare AI has quickly become a popular choice for developers who want to run lightweight AI inference at the edge. For use cases like low-latency text generation, basic image processing, or experimentation close to users, Cloudflare offers an excellent developer experience with minimal setup.

However, as AI workloads grow toward 2026-scale requirements (agentic workflows, multi-model routing, large RAG pipelines, and strict enterprise governance), many teams start to hit real limitations with Cloudflare’s approach.

The most common friction points include:

- Vendor lock-in: You are constrained to Cloudflare’s curated model catalog, with limited control over versions, fine-tuning, or custom models.

- Data privacy and control: Inference runs inside Cloudflare’s managed environment, creating a “black box” for teams that need full VPC-level isolation or regulatory guarantees.

- Cost at scale: Serverless, per-request pricing can become expensive and unpredictable compared to optimized GPU clusters or spot instances.

- Limited lifecycle support: Cloudflare focuses primarily on inference, leaving training, fine-tuning, and deeper orchestration to external systems.

As AI systems mature, teams increasingly look for Cloudflare AI alternatives that offer more control, better cost efficiency, and broader model flexibility without sacrificing developer velocity.

In this guide, we rank the top Cloudflare AI alternatives and competitors for 2026, with a special focus on platforms that balance ease of use with infrastructure ownership. We’ll also highlight why TrueFoundry is emerging as the top choice for enterprises that want the best of both worlds: Cloudflare’s simplicity, combined with the control and scalability of running AI in their own cloud.

How Did We Evaluate Cloudflare Alternatives?

Not every “Cloudflare competitor” solves the same problem. Some platforms optimize for experimentation, others for raw GPU access, and only a few are designed for production-grade AI systems at scale. To create a fair and practical comparison, we evaluated each alternative using the following criteria:

1. Infrastructure Control

Can the platform run in your own AWS, GCP, or Azure account, or are you locked into a vendor-managed environment? Infrastructure ownership is increasingly critical for data privacy, compliance, and long-term cost optimization.

2. Model Flexibility

Does the platform let you deploy any model, including fine-tuned Llama 3 or Mistral variants, custom embedding models, or proprietary models, or are you limited to a fixed catalog?

3. Cost Efficiency at Scale

We compared serverless pricing, which builds a provider markup into every request, against options that support:

- Raw GPU access

- Spot instances

- Autoscaling Kubernetes clusters

Platforms that offer predictable, transparent cost structures score higher for large-scale workloads.
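To make the serverless-versus-dedicated trade-off concrete, here is a minimal break-even sketch. It compares flat per-token serverless billing with always-on GPUs billed by the hour. Every price and throughput figure in it is a hypothetical placeholder, not a quote from Cloudflare or any other provider; substitute your own numbers.

```python
"""Break-even sketch: per-token serverless pricing vs. dedicated GPUs billed hourly.

All prices and throughput figures are HYPOTHETICAL placeholders.
"""
import math

HOURS_PER_MONTH = 730.0  # average hours in a month (8,760 / 12)


def serverless_monthly_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Every token is billed at a flat per-million-token rate; no idle cost."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def dedicated_monthly_cost(tokens_per_month: float,
                           tokens_per_gpu_hour: float,
                           gpu_hourly_rate: float,
                           hours_per_month: float = HOURS_PER_MONTH) -> float:
    """Always-on GPUs sized to the monthly volume, billed whether busy or idle.

    Assumes load is spread evenly across the month (no peak headroom).
    """
    capacity_per_gpu = tokens_per_gpu_hour * hours_per_month
    gpus = max(1, math.ceil(tokens_per_month / capacity_per_gpu))
    return gpus * gpu_hourly_rate * hours_per_month


def cheaper_option(tokens_per_month: float, price_per_million_tokens: float,
                   tokens_per_gpu_hour: float, gpu_hourly_rate: float) -> str:
    """Return which billing model is cheaper for the given monthly volume."""
    serverless = serverless_monthly_cost(tokens_per_month, price_per_million_tokens)
    dedicated = dedicated_monthly_cost(tokens_per_month, tokens_per_gpu_hour, gpu_hourly_rate)
    return "serverless" if serverless <= dedicated else "dedicated"


if __name__ == "__main__":
    # HYPOTHETICAL inputs: $0.50 per 1M tokens serverless; a $2.00/hour GPU
    # sustaining 10M tokens/hour with batching.
    for volume in (1e9, 5e9, 20e9):
        s = serverless_monthly_cost(volume, 0.50)
        d = dedicated_monthly_cost(volume, 10_000_000, 2.00)
        print(f"{volume:.0e} tokens/month: serverless ${s:,.0f} vs dedicated ${d:,.0f}")
```

With these made-up inputs, serverless wins at 1 billion tokens a month ($500 vs $1,460), while dedicated GPUs win at 5 billion ($2,500 vs $1,460) and 20 billion ($10,000 vs $4,380). The model ignores uneven traffic, peak headroom, and engineering time, so treat it as a first-order estimate rather than a pricing verdict.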

4. Support for Modern AI Workloads

Does the platform handle:

- Agentic workflows

- Large RAG pipelines

- Multi-model routing

- Tool and MCP-based execution

Edge-only inference is no longer sufficient for many teams.

5. Developer Experience

How quickly can developers go from code to production? We evaluated onboarding friction, APIs, SDKs, and day-2 operational complexity.

6. Production Readiness

We assessed observability, monitoring, governance, and operational controls, the areas that become critical once AI systems move beyond prototypes.

Using these criteria, we ranked the top 9 Cloudflare AI alternatives that are best positioned for teams building serious AI products in 2026.

Top 9 Cloudflare AI Alternatives for 2026

As AI systems move beyond simple edge inference into agentic workflows, large-scale RAG pipelines, and multi-model orchestration, teams need platforms that offer far more than just serverless inference APIs.

The alternatives below were selected based on their ability to deliver:

- Infrastructure ownership or flexibility

- Model freedom beyond curated catalogs

- Cost efficiency at scale

- Production readiness for modern AI workloads

We start with the strongest overall alternative for teams that want to scale AI seriously in 2026.

TrueFoundry (The Best Overall Alternative)

TrueFoundry is a full-stack AI platform designed for teams that want to run production-grade AI workloads in their own cloud or VPC without giving up developer velocity. Unlike Cloudflare Workers AI, which abstracts away infrastructure entirely, TrueFoundry gives teams control where it matters while still providing a high-level, PaaS-like experience.

TrueFoundry supports the entire AI lifecycle, from training and fine-tuning to deployment, inference, and observability. At its core, it lets teams deploy any model, open-source or proprietary, on Kubernetes across AWS, GCP, or Azure, with built-in scaling, cost controls, and governance. This makes it particularly well-suited for enterprises building long-lived AI systems rather than lightweight edge demos.

Key Features

- Deploy AI Workloads in Your Own Cloud or VPC

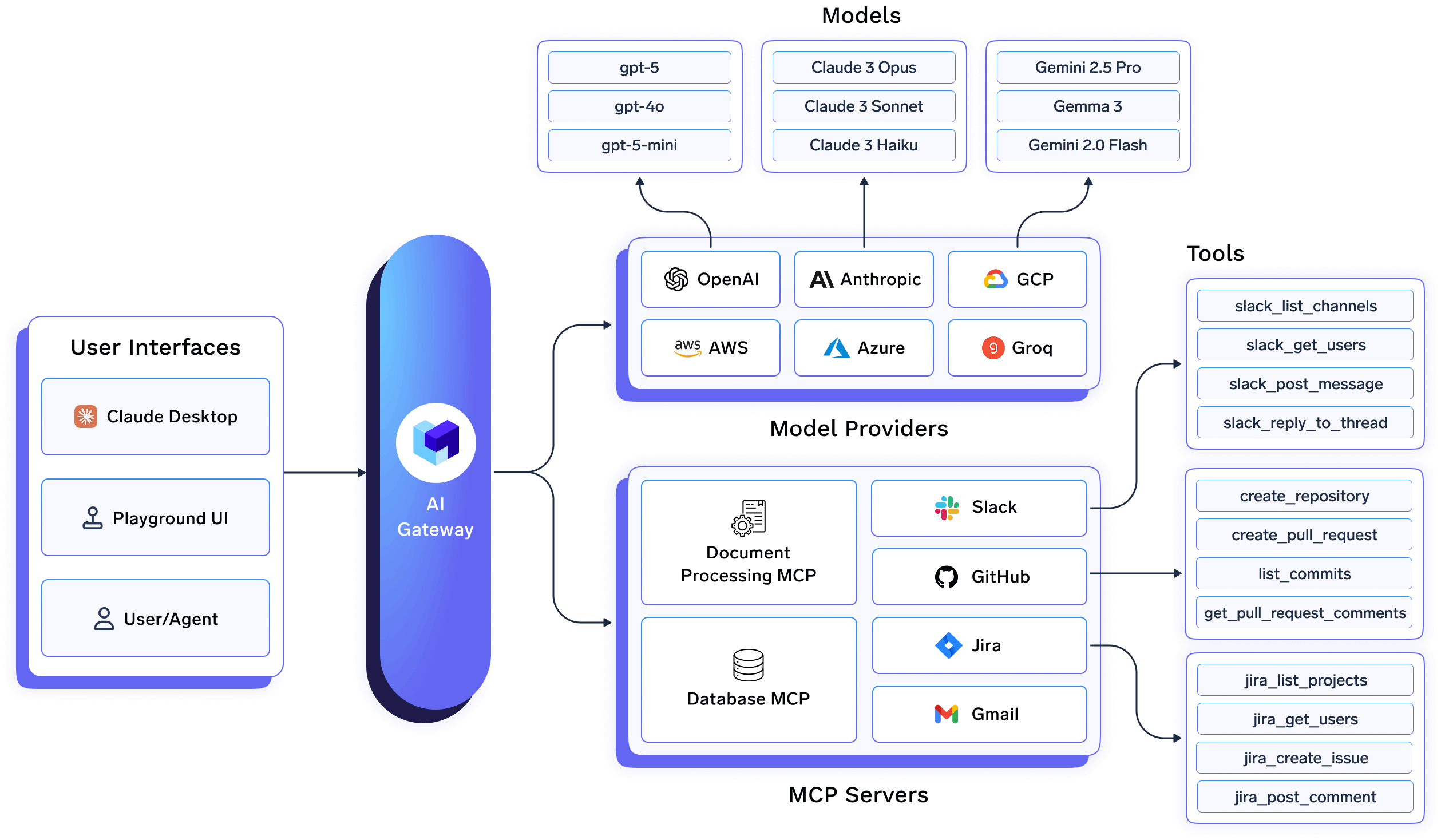

Run inference and training workloads directly in your AWS, GCP, or Azure account on Kubernetes, ensuring full data ownership, compliance, and network isolation.

- AI Gateway for Multi-Model Routing and Control

Route traffic across multiple LLM providers and self-hosted models, enforce budgets, rate limits, and policies, and avoid vendor lock-in.

- MCP & Agents Registry

Manage tools, MCP servers, and agent execution centrally, enabling safe and scalable agentic workflows beyond simple inference.

- Prompt Lifecycle Management

Version, test, and roll out prompts systematically instead of treating them as untracked application code.

- Built-in Observability and Cost Visibility

Track tokens, latency, errors, and spend at the request level, across models, teams, and environments.

- Production-Grade Autoscaling and GPU Optimization

Use autoscaling, spot instances, and optimized GPU scheduling to cut inference costs relative to per-request serverless pricing, especially for steady, high-volume traffic.
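The gateway idea above (map a logical model name to several backends, fall back when one fails, and stop spending once a team's budget runs out) can be sketched in a few dozen lines. This is a minimal, hypothetical illustration: `Backend`, `Gateway`, the backend names, and the flat per-request prices are invented for the example and are not TrueFoundry's API. Rate limiting and token-based metering are deliberately left out.

```python
"""Minimal multi-model routing sketch with fallback and per-team budgets.

HYPOTHETICAL: class names, backend names, and prices are illustrations only,
not TrueFoundry's (or any vendor's) gateway API.
"""
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Backend:
    name: str
    call: Callable[[str], str]   # takes a prompt, returns a completion
    price_per_request: float     # simplified flat cost per call


@dataclass
class Gateway:
    routes: Dict[str, List[Backend]]  # logical model name -> backends in preference order
    budgets: Dict[str, float]         # team -> remaining budget
    spend_log: List[Tuple[str, str, float]] = field(default_factory=list)

    def complete(self, team: str, model: str, prompt: str) -> str:
        reasons = []
        for backend in self.routes[model]:
            # Budget policy: skip any backend this team can no longer afford.
            if self.budgets.get(team, 0.0) < backend.price_per_request:
                reasons.append(f"{backend.name}: over budget")
                continue
            try:
                result = backend.call(prompt)
            except Exception as exc:  # provider error: fall back to the next backend
                reasons.append(f"{backend.name}: {exc}")
                continue
            # Charge only the call that succeeded and record it for cost reporting.
            self.budgets[team] -= backend.price_per_request
            self.spend_log.append((team, backend.name, backend.price_per_request))
            return result
        raise RuntimeError("no backend served the request: " + "; ".join(reasons))


if __name__ == "__main__":
    def flaky_hosted(prompt: str) -> str:
        raise TimeoutError("upstream timeout")

    def self_hosted(prompt: str) -> str:
        return f"[self-hosted] {prompt}"

    gateway = Gateway(
        routes={"chat": [Backend("hosted-llm", flaky_hosted, 0.01),
                         Backend("self-hosted-llama", self_hosted, 0.002)]},
        budgets={"search-team": 1.00},
    )
    print(gateway.complete("search-team", "chat", "hello"))  # falls back to self-hosted
    print(gateway.spend_log)
```

Keeping backends in preference order puts the fallback policy in one place. A production gateway would also enforce rate limits, meter spend by tokens rather than per call, and ship the spend log to an observability pipeline.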

Why TrueFoundry Is a Better Choice Than Cloudflare Workers AI

Cloudflare excels at edge-based inference, but TrueFoundry is built for ownership, scale, and flexibility:

- No model lock-in: deploy any open-source or custom model

- Full VPC-level data privacy

- Predictable costs using optimized compute instead of per-request pricing

- Support for agents, RAG pipelines, and complex workflows

- Covers the full AI lifecycle, not just inference

Pricing Plans

TrueFoundry follows a transparent, usage-based pricing model, aligned with how teams actually consume infrastructure.

- Free Tier: Ideal for experimentation and small teams

- Growth Tier: For production workloads with observability and scaling needs

- Enterprise Tier: Advanced governance, security, and custom deployments

Since workloads run in your own cloud, infrastructure costs remain visible and optimizable, unlike opaque serverless pricing.

What Customers Say About TrueFoundry

TrueFoundry is rated highly on platforms like G2 and Capterra, with consistent praise for:

- Ease of deploying AI in private cloud environments

- Strong cost visibility and control

- Reliable support for production AI systems

Many customers highlight how TrueFoundry helped them move from prototypes to scalable, compliant AI platforms without rebuilding their stack.

AWS Bedrock

Amazon Bedrock is AWS’s managed service for accessing foundation models such as Anthropic Claude, Amazon Titan, and a selection of other third-party models. It is designed for teams already deeply invested in the AWS ecosystem who want a native way to consume LLMs without managing infrastructure directly.

While Bedrock removes operational overhead, it still follows a managed, API-first model that limits flexibility as workloads grow more complex.

Key Features

- Managed access to foundation models (Claude, Titan, etc.)

- Native AWS IAM integration

- Serverless inference APIs

- Built-in guardrails and basic monitoring

Pricing Plans

- Pay-per-request or token-based pricing

- Separate pricing per model provider

- Additional AWS costs for logging, storage, and networking

Pros

- Tight integration with AWS services

- No infrastructure management required

- Enterprise-friendly security defaults

Cons

- Limited support for custom or fine-tuned open-source models

- Pricing becomes expensive at scale

- Primarily focused on inference, not full AI lifecycle

- Locked into AWS ecosystem

How TrueFoundry Is Better Than AWS Bedrock

TrueFoundry allows teams to deploy any model on their own infrastructure, including fine-tuned open-source models, while offering better cost predictability through spot instances and autoscaling. Unlike Bedrock, TrueFoundry is cloud-agnostic and supports the entire AI lifecycle beyond managed inference APIs.

Runpod

Runpod is a GPU cloud platform popular with developers who want low-cost access to GPUs for inference or experimentation. It is often used as a Cloudflare alternative when teams outgrow serverless pricing and want direct control over compute.

Runpod focuses on raw GPU access, leaving orchestration, scaling, and governance largely to the user.

Key Features

- On-demand and spot GPU instances

- Support for custom containers

- Lower-cost GPUs compared to hyperscalers

- Simple deployment workflows

Pricing Plans

- Hourly GPU pricing

- Lower costs via spot instances

- Pay only for compute used

Pros

- Cost-effective GPU access

- Good for experimentation and custom models

- Flexible container-based deployment

Cons

- Limited built-in observability and governance

- No native AI gateway or traffic management

- Requires significant DevOps effort for production

- Not designed for complex agentic systems

How TrueFoundry Is Better Than Runpod

TrueFoundry provides production-ready orchestration, observability, and governance on top of Kubernetes, while still enabling cost optimization through spot instances. Teams get the benefits of raw compute efficiency without having to build and maintain their own platform layer.

Replicate

Replicate is a popular API-based platform that makes it easy to run open-source models without managing infrastructure. Developers can deploy models with minimal setup and pay per second of execution, making Replicate attractive for prototyping and small-scale production.

However, Replicate’s convenience comes with trade-offs as workloads scale and requirements around privacy, cost predictability, and customization increase.

Key Features

- Hosted inference for popular open-source models

- Simple REST APIs for model invocation

- Automatic scaling and model hosting

- Community-driven model catalog

Pricing Plans

- Usage-based pricing (per second of execution)

- Different rates per model and hardware type

- No fixed monthly plans

Pros

- Extremely easy to get started

- No infrastructure or DevOps overhead

- Good selection of community models

Cons

- Limited control over infrastructure and networking

- Costs can become unpredictable at scale

- Minimal observability and governance

- SaaS-only deployment model

How TrueFoundry Is Better Than Replicate

TrueFoundry enables teams to run the same open-source models inside their own cloud, with full observability, governance, and cost optimization. Unlike Replicate’s black-box execution, TrueFoundry gives platform teams visibility and control over performance, data, and spend.

Google Vertex AI

Google Vertex AI is Google Cloud’s end-to-end platform for training, deploying, and serving ML models and LLMs. It supports Google’s Gemini models alongside custom training and managed pipelines, making it a strong option for teams standardized on GCP.

While powerful, Vertex AI remains tightly coupled to Google Cloud and follows a managed-service approach that limits flexibility for hybrid or multi-cloud strategies.

Key Features

- Managed training and inference pipelines

- Access to Gemini and third-party models

- Integrated MLOps and experiment tracking

- Native GCP security and IAM integration

Pricing Plans

- Usage-based pricing for training and inference

- Separate costs for compute, storage, and pipelines

- Premium pricing for managed services

Pros

- Comprehensive ML and AI tooling

- Strong integration with GCP ecosystem

- Enterprise-grade scalability

Cons

- Locked into Google Cloud

- Complex pricing model

- Less flexibility for custom infra optimization

- Heavyweight for teams focused primarily on inference

How TrueFoundry Is Better Than Google Vertex AI

TrueFoundry offers a cloud-agnostic, lighter-weight platform that focuses on deployment, inference, and governance without locking teams into a single hyperscaler. It provides more flexibility to optimize costs and run AI consistently across AWS, GCP, or Azure.

Modal

Modal is a developer-first serverless platform that makes it easy to run Python-based AI workloads without managing infrastructure. It is popular for fast experimentation, internal tools, and lightweight inference pipelines.

Modal prioritizes developer speed, but its abstraction layer can become limiting as AI systems grow in complexity and scale.

Key Features

- Serverless Python execution

- Automatic scaling for inference workloads

- GPU support without infrastructure management

- Simple developer APIs

Pricing Plans

- Usage-based pricing

- Charges based on compute time and resources

- No fixed enterprise pricing tiers publicly listed

Pros

- Excellent developer experience

- Very fast time to production

- Minimal DevOps overhead

Cons

- Limited infrastructure control

- Less suitable for complex, long-running agent workflows

- SaaS-only deployment

- Limited governance and cost predictability at scale

How TrueFoundry Is Better Than Modal

TrueFoundry provides full infrastructure ownership and lifecycle control while maintaining a strong developer experience. It is better suited for long-lived, production AI systems that require governance, predictable costs, and support for complex pipelines beyond simple serverless execution.

Hugging Face Inference Endpoints

Hugging Face Inference Endpoints allow teams to deploy Hugging Face models as managed APIs with minimal setup. It is widely used for serving open-source models quickly and integrating them into applications.

While convenient, the managed nature of the service limits flexibility for teams with strict cost, networking, or compliance requirements.

Key Features

- Managed hosting for Hugging Face models

- Support for popular open-source architectures

- Autoscaling inference endpoints

- Easy integration with Hugging Face ecosystem

Pricing Plans

- Hourly pricing based on instance type

- Separate costs for compute and autoscaling

- Higher costs for larger GPUs

Pros

- Easy access to open-source models

- Strong ecosystem and community

- Low setup friction

Cons

- Limited control over underlying infrastructure

- Costs increase quickly with scale

- Less suitable for multi-cloud or hybrid deployments

- Observability and governance are basic

How TrueFoundry Is Better Than Hugging Face Inference Endpoints

TrueFoundry enables teams to deploy the same Hugging Face models inside their own cloud, with deeper observability, cost controls, and support for advanced workflows like agents and RAG pipelines, all without being locked into a managed SaaS model.

Anyscale (Ray)

Anyscale is the commercial platform behind Ray, an open-source framework for distributed computing and AI workloads. It is often used by teams building large-scale, distributed inference, training, and agent systems that need fine-grained control over execution.

Anyscale is powerful, but it assumes a high level of platform and distributed systems expertise, which can slow down teams that want faster time-to-production.

Key Features

- Managed Ray clusters

- Distributed inference and training

- Native support for parallel and agent workloads

- Scales across large GPU clusters

Pricing Plans

- Usage-based pricing

- Costs tied to cluster size and runtime

- Enterprise pricing for managed services

Pros

- Extremely flexible and powerful

- Ideal for complex, distributed AI systems

- Strong open-source foundation

Cons

- Steep learning curve

- Requires Ray-specific expertise

- Less opinionated on governance and cost controls

- Slower onboarding for smaller teams

How TrueFoundry Is Better Than Anyscale

TrueFoundry delivers production-ready abstractions on top of Kubernetes without forcing teams to build everything using Ray primitives. It offers faster onboarding, built-in observability, and cost controls while still supporting complex workflows.

Lambda Labs

Lambda Labs provides GPU cloud infrastructure optimized for machine learning workloads. It is commonly used as a cost-effective alternative to hyperscalers for training and inference.

Lambda Labs focuses on raw infrastructure, leaving orchestration, scaling, and governance entirely up to the user.

Key Features

- On-demand GPU instances

- Competitive pricing for high-end GPUs

- Bare-metal and VM-based deployments

- Suitable for training and inference

Pricing Plans

- Hourly pricing by GPU type

- No bundled platform services

- Lower cost compared to major cloud providers

Pros

- Cost-effective GPU access

- Good performance for training workloads

- Simple infrastructure model

Cons

- No managed AI platform features

- Requires significant DevOps effort

- Limited observability and governance

- Not optimized for multi-team production environments

How TrueFoundry Is Better Than Lambda Labs

TrueFoundry provides a complete AI platform layer (orchestration, observability, scaling, and governance) on top of cloud infrastructure. Teams get the cost benefits of optimized compute without needing to assemble and maintain their own platform stack.

A Detailed Comparison of TrueFoundry vs Cloudflare

While both platforms help teams deploy AI models, TrueFoundry and Cloudflare Workers AI are designed for fundamentally different stages of AI maturity. The table below highlights how they compare across the dimensions that matter most for 2026-scale AI workloads.

Why TrueFoundry Is the Strategic Choice for 2026

As AI systems become more central to products and operations, teams are rethinking where and how inference runs. Three major trends explain why platforms like TrueFoundry are gaining ground over edge-only solutions.

The Hybrid Shift Toward Sovereign AI

By 2026, many organizations want to own their inference stack rather than rely exclusively on managed APIs. Running models in your own cloud provides stronger guarantees around data residency, compliance, and long-term flexibility. TrueFoundry is built for this hybrid reality, while Cloudflare remains optimized for running inference on its own managed edge network.

Cost Predictability at Scale

Serverless inference is convenient, but costs can grow unpredictably as traffic and model complexity increase. TrueFoundry’s FinOps and cost-visibility features, combined with spot instances and autoscaling, help prevent the “bill shock” that teams often encounter with serverless providers like Cloudflare or Replicate.
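Much of the saving from autoscaling comes from capacity following traffic instead of sitting at peak all day. As a hedged illustration, the sketch below implements the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current * currentMetric / targetMetric), with its default 10% tolerance, and then compares a fleet sized hour by hour against one held at peak. The traffic profile, per-replica target, and GPU price are hypothetical, and this is not TrueFoundry's autoscaler.

```python
"""HPA-style autoscaling rule plus a toy daily cost comparison.

The traffic profile, target, and GPU price used below are HYPOTHETICAL.
"""
import math
from typing import List, Tuple


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """ceil(current_replicas * current_metric / target_metric), clamped to bounds.

    current_metric is the average per replica (e.g. in-flight requests).
    If the ratio is within `tolerance` of 1.0, the replica count is left unchanged.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


def daily_costs(hourly_load: List[float], target_per_replica: float,
                gpu_hourly_rate: float, max_replicas: int = 10) -> Tuple[float, float]:
    """Return (autoscaled cost, fixed-at-peak cost) for one day.

    Assumes steady-state sizing each hour (total load / target per replica)
    and ignores scale-up lag and cold starts.
    """
    per_hour = [max(1, min(max_replicas, math.ceil(load / target_per_replica)))
                for load in hourly_load]
    autoscaled = sum(per_hour) * gpu_hourly_rate
    fixed = max(per_hour) * len(hourly_load) * gpu_hourly_rate
    return autoscaled, fixed


if __name__ == "__main__":
    # HYPOTHETICAL day: 12 quiet hours at 5 in-flight requests, 12 busy hours at 40.
    load = [5.0] * 12 + [40.0] * 12
    autoscaled, fixed = daily_costs(load, target_per_replica=10.0, gpu_hourly_rate=2.00)
    print(f"autoscaled ${autoscaled:.0f}/day vs fixed-at-peak ${fixed:.0f}/day")
```

With this made-up profile the autoscaled fleet costs $120 for the day versus $192 when held at peak. Real autoscalers add scale-up lag and cold starts, which this steady-state sketch ignores; spot instances would lower the hourly rate further at the cost of handling interruptions.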

Beyond Inference

Cloudflare Workers AI is primarily an inference layer. TrueFoundry, on the other hand, supports the entire AI lifecycle, from training and fine-tuning to deployment, inference, observability, and governance. This makes it a better long-term platform for teams building AI as a core capability, not just an add-on feature.

Ready to Scale? Pick the Right Infrastructure Partner

Cloudflare Workers AI is an excellent choice for edge-based applications, quick experimentation, and developer-friendly inference close to users. For hobby projects, prototypes, and latency-sensitive edge use cases, it delivers a fast and elegant experience.

However, as teams move toward production-grade AI systems with agentic workflows, large RAG pipelines, custom models, and strict data governance, many outgrow the constraints of a fully managed, serverless model. At that stage, infrastructure ownership, cost predictability, and model flexibility become decisive.

This is where TrueFoundry stands out. By enabling teams to run AI workloads in their own cloud or VPC while preserving a PaaS-like developer experience, TrueFoundry offers the flexibility required to scale AI responsibly in 2026.

👉 If you’re building serious AI products and want long-term control over cost, data, and models, book a free demo to see how TrueFoundry compares in real-world deployments.

FAQs

What are the alternatives to Cloudflare?

Popular Cloudflare AI alternatives include TrueFoundry, AWS Bedrock, Runpod, Replicate, Google Vertex AI, Modal, Hugging Face Inference Endpoints, Anyscale, and Lambda Labs. Each platform targets different stages of AI maturity, from rapid experimentation to full-scale production deployment.

Why is Cloudflare a bad gateway?

Cloudflare is not inherently “bad,” but it can become limiting for advanced AI workloads. Teams often face challenges around model lock-in, data privacy, cost predictability, and support for complex agentic systems when relying solely on Cloudflare as an AI gateway.

What makes TrueFoundry a better alternative to Cloudflare AI?

TrueFoundry provides full infrastructure ownership, support for any model, predictable cost optimization, and end-to-end lifecycle management. Unlike Cloudflare Workers AI, it enables teams to move beyond edge inference and build scalable, compliant AI systems in their own cloud.
