Prompt Management Tools for Production AI Systems

As teams move LLM applications from demos to production, prompts quickly become one of the most fragile parts of the system. What starts as a few hard-coded strings often grows into dozens of prompts spread across services, agents, and environments. Small prompt changes can significantly impact output quality, cost, and reliability, yet many teams still manage prompts informally.

This is where prompt management tools come in. They provide structured ways to create, version, test, and govern prompts as first-class production artifacts, rather than static text embedded in code.

For teams running multi-model systems, AI agents, or large-scale LLM workloads, prompt management is not just about organization. It directly affects debugging speed, rollout safety, cost control, and overall system reliability.

In this post, we’ll look at what prompt management tools are, why they become essential in production, and how teams typically integrate them into modern AI platforms.

What Are Prompt Management Tools?

Prompt management tools are systems that help teams store, version, and operate prompts centrally, instead of embedding them directly in application code.

At a basic level, they allow teams to:

- Define prompts as structured templates

- Track changes over time

- Reuse prompts across applications and agents

In production environments, however, prompt management goes further. Prompts are tied to specific models, tasks, agents, and environments. A single application may run multiple prompt versions simultaneously, depending on traffic, user segment, or deployment stage.

A production-grade prompt management setup typically treats prompts as:

- Versioned assets, similar to APIs or models

- Configurable at runtime, without redeploying code

- Observable, so teams can understand how changes affect outputs and costs

This shift is critical once multiple engineers, agents, or teams are working on the same AI system.

Why Prompt Management Breaks Down Without Proper Tooling

Many teams initially manage prompts directly in code repositories or configuration files. This approach works early on, but it does not scale as systems grow.

Some common failure modes include:

- Untracked prompt changes

Prompt updates are often merged quickly to fix quality issues, but without proper versioning, it becomes difficult to understand what changed and why outputs shifted.

- Tight coupling between prompts and deployments

When prompts live in code, even small text changes require full application redeployments. This slows iteration and increases the risk of unintended side effects.

- Inconsistent prompts across environments

Prompts used in development, staging, and production often diverge over time, making it hard to reproduce issues or validate improvements safely.

- Lack of ownership and governance

As more teams and agents rely on shared prompts, it becomes unclear who owns a prompt and who is allowed to modify it.

Prompt management tools are designed to address these problems by decoupling prompt operations from application logic and deployments.

Core Capabilities Teams Expect from Prompt Management Tools

While implementations vary, most production teams look for a common set of capabilities when evaluating prompt management tools.

Prompt versioning and rollback: Every prompt change should be versioned, with the ability to roll back quickly if output quality degrades. This is especially important when prompts are shared across multiple services or agents.

Parameterized prompt templates: Rather than static text, prompts are usually defined as templates with variables. This makes prompts reusable and easier to maintain across different use cases.

Environment-level separation: Teams often need different prompt versions for development, staging, and production. Prompt management tools help enforce these boundaries without duplicating logic.

Safe iteration and experimentation: Prompt changes should be testable in isolation before being rolled out broadly. This often ties into evaluation workflows and controlled rollouts.
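To make versioning, rollback, and parameterized templates concrete, here is a minimal in-memory sketch. The `PromptRegistry` class, its method names, and the `summarize` prompt are hypothetical and exist only for illustration; a real tool would add persistence, access control, and audit history.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store: prompt name -> ordered template versions."""
    _versions: dict = field(default_factory=dict)  # name -> [template text, ...]
    _active: dict = field(default_factory=dict)    # name -> active version (1-based)

    def publish(self, name: str, template: str) -> int:
        """Append a new version and make it the active one."""
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        self._active[name] = len(versions)
        return len(versions)

    def rollback(self, name: str, version: int) -> None:
        """Point the active version at an earlier one; history is kept."""
        if not 1 <= version <= len(self._versions[name]):
            raise ValueError(f"unknown version {version} for {name!r}")
        self._active[name] = version

    def render(self, name: str, **variables) -> tuple[int, str]:
        """Fill the active template and return (version, rendered text)."""
        version = self._active[name]
        template = Template(self._versions[name][version - 1])
        return version, template.substitute(**variables)


registry = PromptRegistry()
registry.publish("summarize", "Summarize the following text: $text")
registry.publish("summarize", "Summarize in $max_words words or fewer: $text")

# Suppose version 2 degrades output quality: roll back without touching callers.
registry.rollback("summarize", 1)
version, prompt = registry.render("summarize", text="LLM gateways route requests.")
```

Callers only ever ask for `summarize`, so a rollback changes what they receive without any change to application code.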

How Prompt Management Fits Into AI Gateways and Routing

In production AI systems, prompts do not operate independently. They influence which models are invoked, how requests are routed, and how costs and failures propagate through the system. Because of this, prompt management becomes most effective when it is integrated with an AI Gateway, rather than handled as a standalone layer.

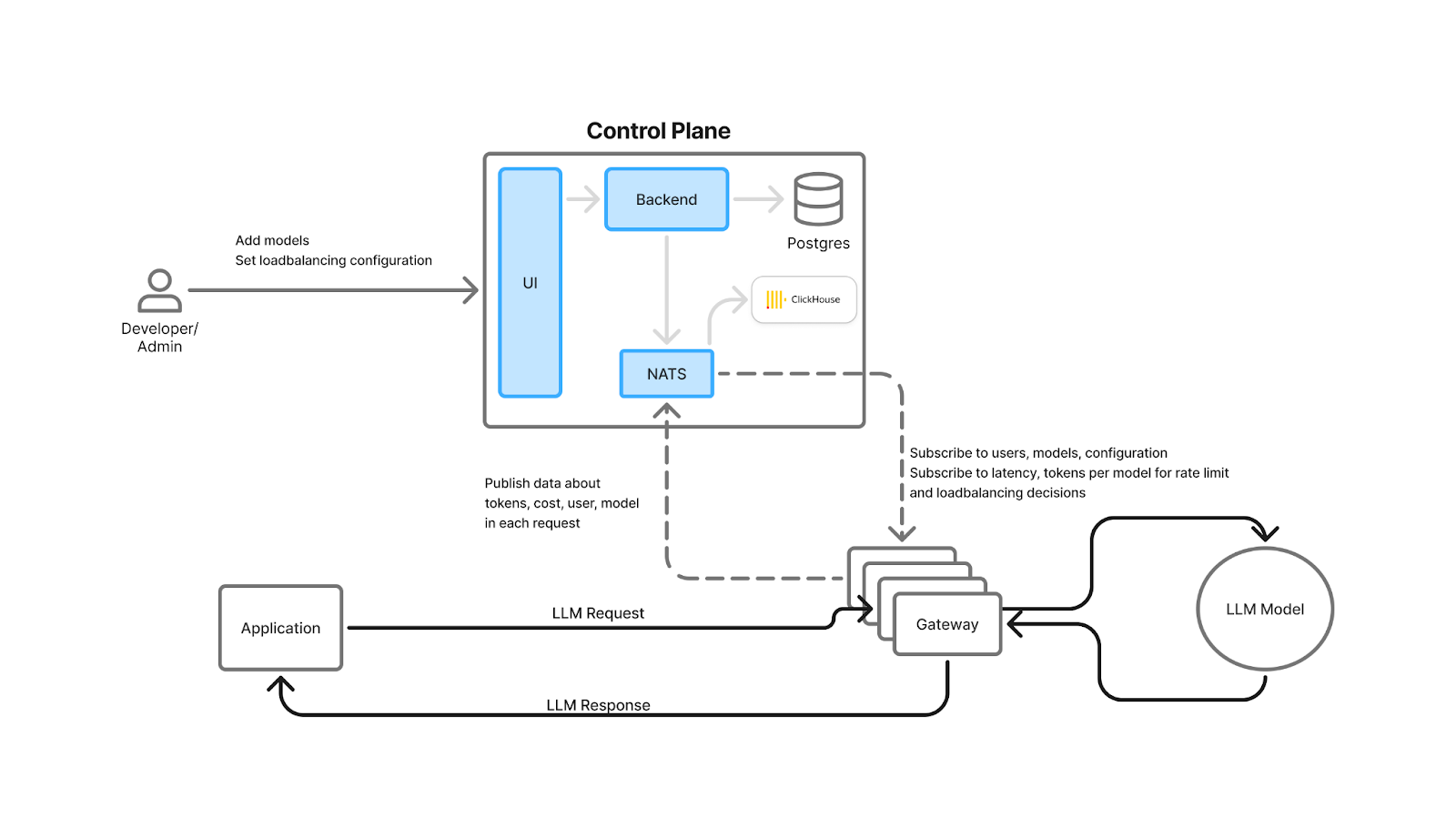

An AI Gateway typically sits between applications or agents and model providers. It is responsible for concerns like model routing, policy enforcement, observability, and cost controls. When prompt management is embedded into this layer, prompts become runtime-configurable inputs to routing decisions instead of static strings embedded in code.

Without a gateway, prompt changes are tightly coupled to application deployments. Updating a prompt often requires redeploying services or agents, even when the change is purely textual. Routing logic is usually hard-coded around those prompts, which makes experimentation slow and risky.

With prompt management integrated into an AI Gateway, the flow changes:

- Applications or agents reference prompts by identifier

- The AI Gateway resolves the prompt version at runtime

- Routing decisions are applied based on prompt metadata, task type, or environment

- Requests are forwarded to the appropriate model or provider

This setup enables several practical advantages for teams.

First, prompt updates no longer require redeployments. Teams can modify or roll back prompts independently of application code, which significantly speeds up iteration and reduces operational risk.

Second, routing becomes prompt-aware. The same logical prompt can be routed to different models depending on context, such as environment, traffic segment, or cost constraints. This is especially useful in multi-model setups where teams balance quality, latency, and cost.

Third, observability improves. Because prompts are resolved and executed at the gateway layer, teams can track which prompt version was used for each request, correlate it with latency and cost, and quickly identify regressions caused by prompt changes.

Finally, governance becomes enforceable. Access control, approval workflows, and usage limits can be applied at the prompt level through the gateway, ensuring that sensitive or high-cost prompts are not modified or misused unintentionally.

In practice, this integration turns prompt management into a core part of AI infrastructure. Prompts stop being fragile pieces of text and instead become controlled, observable, and routable assets that evolve safely alongside models and applications.
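As a rough illustration of prompt-aware routing, the sketch below picks a model from prompt metadata using a first-match rule table. The `PromptMeta` fields, the rules, and the model names are all placeholders we made up for this example; they are not tied to any specific gateway or provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptMeta:
    prompt_id: str
    task_type: str     # e.g. "classification", "generation"
    environment: str   # e.g. "dev", "staging", "prod"


# Hypothetical routing table: the first rule whose conditions all match wins.
# Model names are placeholders, not real provider identifiers.
ROUTING_RULES = [
    ({"environment": "dev"}, "small-cheap-model"),
    ({"task_type": "classification"}, "small-cheap-model"),
    ({"task_type": "generation", "environment": "prod"}, "large-quality-model"),
]
DEFAULT_MODEL = "medium-general-model"


def select_model(meta: PromptMeta) -> str:
    """Return the model for this prompt based on its metadata."""
    for conditions, model in ROUTING_RULES:
        if all(getattr(meta, key) == value for key, value in conditions.items()):
            return model
    return DEFAULT_MODEL
```

Because the rules live in configuration rather than in application code, the same logical prompt can be sent to a cheaper model in development and a higher-quality model in production.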

Prompt Management via an AI Gateway

In a gateway-based architecture, prompt resolution happens at runtime instead of being hard-coded into applications or agents.

The flow typically works as follows:

- Applications or agents reference prompts by identifier

Instead of embedding prompt text directly in code, applications or agents reference a prompt name or ID. This keeps application logic stable even as prompts evolve.

- The AI Gateway resolves the prompt version at runtime

When a request reaches the gateway, it determines which prompt version should be used based on environment, configuration, or rollout rules.

- Prompt context influences routing decisions

Prompt metadata, such as task type or expected response format, can be used to influence model selection, provider routing, or fallback behavior.

- Requests are forwarded to the selected model provider

The gateway sends the resolved prompt and input to the chosen model, while abstracting provider-specific details from the application.

- Observability and cost data are captured centrally

Because prompt resolution and execution pass through the gateway, teams can track which prompt version was used, how many tokens it consumed, and how it performed.

This setup allows teams to change prompts, adjust routing logic, and analyze impact without redeploying applications or agents. It also ensures that prompt behavior is consistent across environments and governed through a single control layer.
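The flow above can be sketched end to end in a few lines. Everything here is an assumption for illustration: the prompt store, the `ACTIVE_VERSION` rollout table, the `fake_provider` stub (which counts words, not real tokens), and the `handle_request` function are hypothetical and do not represent any particular gateway's API.

```python
import time

# Hypothetical prompt store: (prompt id, version) -> template text.
PROMPTS = {
    ("support-reply", 1): "Answer the customer politely: {question}",
    ("support-reply", 2): "Answer the customer in two sentences: {question}",
}
# Hypothetical rollout config: which version each environment resolves to.
ACTIVE_VERSION = {
    ("support-reply", "prod"): 1,
    ("support-reply", "staging"): 2,
}
REQUEST_LOG = []  # stands in for the gateway's central observability sink


def fake_provider(model: str, prompt: str) -> tuple[str, int]:
    """Stub for a real provider call. The 'token' count is only a word count."""
    return f"[{model}] ok", len(prompt.split())


def handle_request(prompt_id, environment, variables, model="placeholder-model"):
    # 1. The caller sent only an identifier; resolve the version at runtime.
    version = ACTIVE_VERSION[(prompt_id, environment)]
    prompt = PROMPTS[(prompt_id, version)].format(**variables)
    # 2. Forward to the selected model (model choice is fixed here for brevity).
    started = time.perf_counter()
    output, approx_tokens = fake_provider(model, prompt)
    # 3. Record which prompt version served the request, plus usage data.
    REQUEST_LOG.append({
        "prompt_id": prompt_id,
        "prompt_version": version,
        "environment": environment,
        "model": model,
        "approx_tokens": approx_tokens,
        "latency_s": time.perf_counter() - started,
    })
    return output
```

Calling `handle_request("support-reply", "prod", {"question": "Where is my order?"})` resolves version 1, while the same call with `"staging"` resolves version 2. Promoting version 2 to production is then a one-line change to `ACTIVE_VERSION`, with no application redeploy.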

Prompt Management in Agent-Based Systems

Prompt management becomes significantly more complex once teams start building AI agents. Unlike single-turn applications, agents rely on multiple prompts that evolve dynamically as the agent reasons, plans, and interacts with tools.

In practice, an agent may use:

- A system prompt that defines overall behavior and constraints

- Task prompts that change based on user intent or workflow state

- Tool-specific prompts that guide how tools are invoked and interpreted

- Memory or context prompts that grow over time

Without proper tooling, these prompts often end up scattered across agent definitions, configuration files, and application code. This makes agents difficult to debug and risky to modify.

Centralized prompt management addresses this by decoupling prompt logic from agent implementation.

Decoupling Agents from Prompt Text

In a production-ready setup, agents do not embed prompt text directly. Instead, they reference prompts by identifier, similar to how they reference tools or models.

This allows teams to:

- Update agent behavior without redeploying agents

- Reuse prompts across multiple agents

- Apply consistent changes across workflows

For example, if a system prompt needs refinement to reduce hallucinations or enforce stricter formatting, the change can be applied once, centrally, and every agent that references the prompt picks it up on its next run.

Managing Prompt Versions Across Agent Lifecycles

Agents often run continuously and may handle long-running workflows. Prompt management tools help ensure that:

- Existing agent runs continue using the prompt version they started with

- New runs pick up updated prompt versions

- Rollbacks can be performed safely if behavior degrades

This version control is critical when agents are used for customer-facing or business-critical tasks.
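One simple way to get this behavior is to pin prompt versions when a run starts. The sketch below assumes a hypothetical `ACTIVE` table of currently active versions and a hypothetical `AgentRun` record; real tools may implement pinning differently.

```python
from dataclasses import dataclass

# Hypothetical table of currently active versions: prompt id -> version.
ACTIVE = {"planner-system": 3}


@dataclass(frozen=True)
class AgentRun:
    run_id: str
    pinned: dict  # prompt id -> version captured when the run started


def start_run(run_id: str, prompt_ids: list[str]) -> AgentRun:
    """Snapshot the active version of every prompt the run will use."""
    return AgentRun(run_id, {pid: ACTIVE[pid] for pid in prompt_ids})


def version_for(run: AgentRun, prompt_id: str) -> int:
    """Always read from the run's snapshot, never from the live table."""
    return run.pinned[prompt_id]


run_a = start_run("run-a", ["planner-system"])  # starts while version 3 is active
ACTIVE["planner-system"] = 4                    # a new version is published
run_b = start_run("run-b", ["planner-system"])  # new runs pick up version 4
ACTIVE["planner-system"] = 3                    # rollback affects only future runs
run_c = start_run("run-c", ["planner-system"])
```

Here `run_a` keeps using version 3 for its whole lifetime, `run_b` stays on version 4 even after the rollback, and `run_c` starts on version 3 again.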

Improving Debuggability and Reliability

When prompts are centrally managed, teams gain visibility into how agents behave over time. It becomes possible to answer questions like:

- Which prompt version was used when an agent failed?

- Did a prompt update change tool invocation behavior?

- Are certain prompts causing higher costs or longer runtimes?

By tying agent executions to specific prompt versions, teams can debug issues systematically instead of relying on guesswork.

Overall, prompt management turns agent prompts from fragile, embedded text into controlled assets that evolve safely as agent systems grow in complexity.

Observability and Cost Implications of Prompt Management

In production systems, prompts have a direct impact on both system behavior and cost. Small changes in prompt structure, added context, or output constraints can significantly affect token usage, latency, and agent execution paths. Without proper visibility, teams often discover these issues only after costs spike or outputs degrade.

Prompt management tools become especially valuable when they are tightly coupled with observability.

A production-ready setup typically allows teams to track:

- Which prompt version was used for each request or agent run

- Token usage and cost per prompt

- Latency and error rates associated with specific prompts

- Downstream effects, such as tool usage or agent loops triggered by a prompt

This level of visibility enables teams to treat prompts as measurable system components rather than opaque text blobs.

For example, if a new prompt version increases context size, teams can immediately see higher token consumption and attribute the cost increase to that specific change. Similarly, if an agent starts looping or calling tools excessively after a prompt update, the issue can be traced back to the prompt version responsible.
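A minimal sketch of that attribution, assuming the gateway logs one record per request with a prompt identifier, version, and token counts. The record shape, the sample numbers, and the prices below are invented for illustration and should be replaced with real logs and your provider's actual rates.

```python
from collections import defaultdict

# Hypothetical per-request records, shaped like what a gateway might log.
RECORDS = [
    {"prompt_id": "support-reply", "version": 1, "input_tokens": 120, "output_tokens": 80},
    {"prompt_id": "support-reply", "version": 1, "input_tokens": 100, "output_tokens": 60},
    {"prompt_id": "support-reply", "version": 2, "input_tokens": 400, "output_tokens": 90},
    {"prompt_id": "support-reply", "version": 2, "input_tokens": 380, "output_tokens": 110},
]
# Made-up prices in dollars per 1,000 tokens; substitute your provider's rates.
PRICE_PER_1K = {"input": 0.50, "output": 1.50}


def cost_by_version(records):
    """Aggregate request count, tokens, and cost per (prompt_id, version)."""
    totals = defaultdict(lambda: {"requests": 0, "tokens": 0, "cost": 0.0})
    for r in records:
        row = totals[(r["prompt_id"], r["version"])]
        row["requests"] += 1
        row["tokens"] += r["input_tokens"] + r["output_tokens"]
        row["cost"] += (r["input_tokens"] * PRICE_PER_1K["input"]
                        + r["output_tokens"] * PRICE_PER_1K["output"]) / 1000
    return dict(totals)


summary = cost_by_version(RECORDS)
for (prompt_id, version), row in sorted(summary.items()):
    print(prompt_id, f"v{version}", row["requests"], row["tokens"], round(row["cost"], 4))
```

In this made-up data, version 2 carries much more input context per request, so its total tokens and cost are visibly higher than version 1 for the same number of requests.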

Without prompt-level observability, these problems are difficult to diagnose. Teams are left guessing whether issues originate from model behavior, routing logic, or agent code. Centralized prompt management, combined with observability, removes that ambiguity.

From a cost control perspective, this is critical. As systems scale, prompt inefficiencies such as oversized context or redundant instructions can become a significant and easily overlooked driver of LLM spend.

Prompt Management in TrueFoundry

In TrueFoundry, prompt management is designed to work as part of the broader AI infrastructure layer, not as a standalone feature.

Prompts are treated as production assets that integrate with:

- The AI Gateway for routing and policy enforcement

- Agent deployments and workflows

- Observability and cost tracking

- Access control and governance

Instead of embedding prompt text directly in applications or agents, teams can manage prompts centrally and resolve them at runtime. This allows prompt updates to be rolled out independently of application deployments, while still maintaining strict control over where and how prompts are used.

Because prompt resolution happens at the gateway layer, TrueFoundry can associate every request with:

- The prompt identifier and version used

- The model and provider selected

- Token usage, latency, and errors

This unified view makes it easier for platform teams to:

- Safely iterate on prompts

- Enforce consistency across environments

- Attribute cost and performance changes to specific prompt updates

- Govern who can modify or deploy prompts

For teams running multi-model systems or agent-based workflows, this approach helps ensure that prompt management scales alongside the rest of the AI platform, rather than becoming a bottleneck or source of hidden risk.

Conclusion

Prompt management is one of the first challenges teams encounter when moving LLM applications and agents into production. What begins as simple prompt strings quickly turns into a growing surface area that affects system behavior, reliability, and cost.

Prompt management tools help teams treat prompts as first-class production assets. By centralizing prompt versioning, enabling safe iteration, and integrating prompts with routing, observability, and access control, teams can evolve their AI systems without introducing unnecessary risk.

As systems scale to include multiple models, agents, and workflows, prompt management becomes less about convenience and more about operational discipline. Integrated approaches, where prompts are managed alongside the rest of the AI infrastructure, give teams the control and visibility needed to run production AI systems reliably.
