Cloudflare AI Gateway Pricing [A Complete Breakdown]

Cloudflare AI Gateway has quickly become a popular choice for teams looking to manage, monitor, and route LLM traffic through a centralized proxy. As LLM adoption accelerates across industries, teams are increasingly introducing AI gateways to add observability, security, and control between their applications and third-party models.

But as AI usage scales, pricing clarity becomes a critical factor. Teams need to understand not just the per-call costs, but the infrastructure and operational patterns that influence long-term spend.

At first glance, Cloudflare AI Gateway pricing appears simple, especially with free access to core features. However, real-world usage often reveals hidden costs tied to logging, data retention, and scaling limits.

In this blog, we break down:

- Exactly what you pay for when using Cloudflare AI Gateway

- The indirect costs that teams often overlook

- Why scaling companies eventually migrate to in-VPC alternatives like TrueFoundry for more control

What Is Cloudflare AI Gateway?

Before covering Cloudflare AI Gateway cost, it’s important to understand what it does and where it fits in the AI stack.

Cloudflare AI Gateway acts as a centralized layer for teams deploying AI applications that rely on third-party LLM providers. It enables teams to:

- Route AI traffic securely between applications and multiple foundation model providers like OpenAI, Anthropic, and Hugging Face.

- Gain visibility into prompts, responses, latency, and usage metrics for observability and monitoring.

- Integrate directly with Cloudflare’s broader AI stack, including Workers AI, caching, rate limiting, and global edge infrastructure.

Cloudflare AI Gateway Features That Impact Its Cost

While Cloudflare AI Gateway does not charge per token, several features indirectly affect the total cost of ownership (TCO) as usage scales:

- Request routing and rate limiting: Helps control traffic flow but increases the number of logged events as AI usage grows. It requires a Cloudflare Workers Paid plan for high-volume execution.

- Prompt and response caching: Reduces repeated calls to upstream models (saving money on token fees), but efficiency depends on traffic patterns and cache hit rate.

- Usage analytics and token tracking: Requires persistent logging, which can introduce additional storage and retention-related costs.

- Integration with multiple AI providers: Increases flexibility but makes cost attribution and forecasting across providers more complex.

- Unified Billing (New for 2026): Cloudflare now allows you to pay for third-party model usage (OpenAI, etc.) directly through your Cloudflare invoice, adding a small transaction convenience fee.

- Logpush Integration: Streaming logs to an external S3 bucket or SIEM tool is a paid feature that incurs additional costs ($0.05 per million records after 10 million/month in paid plans).
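To make the caching point above concrete, here is a minimal sketch of how cache hit rate changes upstream model spend. The per-request cost of $0.002 and the 25% hit rate are hypothetical values chosen for illustration, not Cloudflare or provider figures, and the function name is our own.

```python
def upstream_spend_with_cache(requests: int, cost_per_request: float,
                              cache_hit_rate: float) -> float:
    """Estimate upstream model spend when a share of requests is served from cache.

    cost_per_request is a hypothetical average token cost per call (USD);
    cache_hit_rate is the fraction of requests answered by the gateway cache.
    """
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    # Only cache misses reach the upstream provider and incur token fees.
    misses = requests * (1.0 - cache_hit_rate)
    return round(misses * cost_per_request, 2)


baseline = upstream_spend_with_cache(1_000_000, 0.002, cache_hit_rate=0.0)
cached = upstream_spend_with_cache(1_000_000, 0.002, cache_hit_rate=0.25)
print(baseline, cached)  # 2000.0 1500.0
```

The savings scale linearly with hit rate, so workloads with few repeated prompts will see little benefit from caching.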

Cloudflare AI Gateway Pricing Tiers

Cloudflare uses a "freemium" model where the gateway itself is available to all users, but scaling your application typically requires a transition from the Free tier to the Workers Paid tier.

Cloudflare’s AI Gateway is available on all Cloudflare pricing plans, and core features are free to use. There is no per-call gateway fee beyond your Cloudflare plan: you simply add the gateway and start sending traffic.

By default, Cloudflare includes a free analytics/dashboard, caching, rate limiting, and logging layer, so many teams can spin it up at no extra cost.

Under the free plan, however, some limits apply. For example, Cloudflare’s free (Workers Free) tier includes 100,000 total AI Gateway logs per month (across all gateways) and stops logging beyond that.

To increase limits, you must upgrade to a Workers Paid plan, which provides 1,000,000 logs total. Notably, Cloudflare does not charge per log on the free or paid tiers – you either stay within the included logs or upgrade.

Free vs. Paid Cloudflare AI Pricing Plans

Because Cloudflare AI Gateway is built on Workers, heavy usage of the gateway may trigger Workers billing.

Cloudflare’s Workers Paid plan starts with a $5 monthly subscription and includes a generous usage allowance. For example, Standard (Paid) Workers include 10 million requests and 30 million CPU-ms of execution per month.

Beyond that, Cloudflare charges $0.30 per additional million requests and $0.02 per additional million CPU-milliseconds.

In practice, a gateway serving 15 million requests in a month costs about $8 in total, assuming an average of roughly 7 ms of CPU time per request: the $5 subscription, $1.50 for the 5 million extra requests, and $1.50 for the 75 million extra CPU-milliseconds. In contrast, the Workers Free plan includes only 100k requests/day and minimal CPU time. Once you exceed those limits, your Workers simply stop running (no surprise charges, but a service outage).
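The arithmetic behind that estimate can be sketched as a small calculator. The rates are the ones quoted in this article; the 7 ms average CPU time per request is an assumption chosen so that the 15-million-request example lands at about $8, and the function name is our own.

```python
# Workers Paid rates as quoted in this article (USD).
BASE_FEE = 5.00                 # monthly subscription
INCLUDED_REQUESTS = 10_000_000  # requests included per month
INCLUDED_CPU_MS = 30_000_000    # CPU-milliseconds included per month
PER_MILLION_REQUESTS = 0.30     # overage per additional million requests
PER_MILLION_CPU_MS = 0.02       # overage per additional million CPU-ms


def workers_paid_monthly_cost(requests: int, avg_cpu_ms: float) -> float:
    """Estimate one month's Workers Paid bill for a given request volume."""
    extra_requests = max(0, requests - INCLUDED_REQUESTS)
    extra_cpu_ms = max(0.0, requests * avg_cpu_ms - INCLUDED_CPU_MS)
    cost = (
        BASE_FEE
        + extra_requests / 1_000_000 * PER_MILLION_REQUESTS
        + extra_cpu_ms / 1_000_000 * PER_MILLION_CPU_MS
    )
    return round(cost, 2)


# 15M requests at an assumed 7 ms CPU each: $5 + $1.50 + $1.50
print(workers_paid_monthly_cost(15_000_000, avg_cpu_ms=7))  # 8.0
```

Because CPU time is billed separately from request count, heavier per-request logic in a Worker raises the bill even when traffic stays flat.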

💡 In summary: Light-use AI apps may stay within Cloudflare’s free tier, but production workloads often need Workers Paid ($5+ usage) to scale reliably.

Request Volume and Logging Costs

Under the Workers Paid plan, Cloudflare AI Gateway still has usage guidelines. The Workers Paid plan includes up to 1,000,000 AI Gateway logs per month (across all gateways).

If you push logs beyond that, you cannot pay an overage—you must either delete old logs or upgrade your plan to Enterprise. Log storage is capped: by default, each gateway can hold ~10 million logs (you can set your own limit), and once full, no new logs are saved.

Thus, while logging is "included," there’s an effective limit on how much history you keep.
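A quick way to see how soon a cap bites is to divide it by daily logged traffic. The sketch below assumes every request produces exactly one log entry and that traffic is steady; the 20,000 requests/day figure is hypothetical.

```python
import math


def days_until_log_cap(log_cap: int, logged_requests_per_day: int) -> float:
    """Days of steady traffic before the log cap is reached and logging stops."""
    if logged_requests_per_day <= 0:
        return math.inf  # no logged traffic means the cap is never reached
    return log_cap / logged_requests_per_day


print(days_until_log_cap(100_000, 20_000))    # 5.0  (free-tier cap from this article)
print(days_until_log_cap(1_000_000, 20_000))  # 50.0 (Workers Paid cap from this article)
```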

Cloudflare also offers a Logpush integration (streaming logs to your storage), but only on the paid plan: you get 10 million requests’ worth of logpush per month free, then $0.05 per additional million.

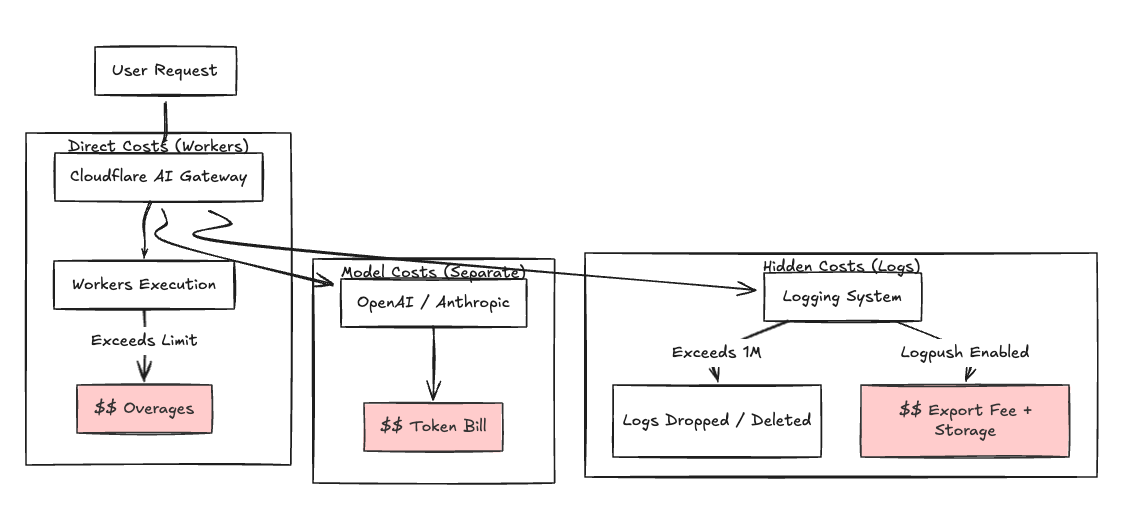
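The Logpush charge described above follows the same included-allowance-plus-overage pattern. This is a rough sketch using the figures quoted in this article; the 50 million records/month volume is a hypothetical example.

```python
LOGPUSH_INCLUDED = 10_000_000  # records per month included on paid plans (per this article)
LOGPUSH_PER_MILLION = 0.05     # USD per additional million records (per this article)


def logpush_monthly_cost(records: int) -> float:
    """Estimate the monthly Logpush overage charge for a given record volume."""
    extra = max(0, records - LOGPUSH_INCLUDED)
    return round(extra / 1_000_000 * LOGPUSH_PER_MILLION, 2)


print(logpush_monthly_cost(8_000_000))   # 0.0 (within the included allowance)
print(logpush_monthly_cost(50_000_000))  # 2.0 (40M extra records at $0.05 per million)
```

The Logpush fee itself is small at these volumes; the larger cost is usually the storage and tooling on the receiving end, which this sketch does not model.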

In essence, the Cloudflare AI Gateway itself has no per-request fee, but supporting high-volume logging incurs hidden costs. Keeping more logs (beyond the free allowance) may force storage on external systems or lead to more aggressive log deletion. And if you want automatic log shipping (for SIEM or compliance), that feature is only on paid plans with usage fees.

All of these factors mean Cloudflare’s gateway costs can ramp up indirectly with use—you pay for the Workers plan plus any logging/storage beyond the baseline.

Indirect Usage Costs

Beyond the AI Gateway service, remember that every request the gateway handles still calls an underlying model provider. Cloudflare does not replace the model: it proxies your calls to OpenAI, etc., so you still pay the model’s token fees separately.

💡 In other words: Cloudflare removes unpredictability from the network layer but does not eliminate per-token charges from OpenAI/Anthropic/etc.

Additionally, heavy use of Workers (for example, complex request logic or caching) incurs compute charges as noted above. Essentially, using Cloudflare AI Gateway has a base cost of the Workers plan and logging limits, plus whatever you spend calling the actual models.

The Hidden Costs: What is Not Included in Cloudflare AI Gateway Pricing

Beyond the published fees, there are several hidden costs and risks to consider with Cloudflare’s AI Gateway.

1. Log Retention Limits

While basic logging is free, Cloudflare enforces strict log caps. If your app needs to keep more than 100k (free) or 1M (paid) logs per month, you can’t pay for extra capacity; logging simply stops.

To keep long histories, you must manually delete old entries or upgrade (potentially to an enterprise plan). This can be a surprise budget issue if you rely on logs for debugging or auditing.

2. Privacy & Data Sovereignty

By default, Cloudflare’s gateway captures all request and response data (including prompts, model replies, tokens, etc.) in its own infrastructure. While Cloudflare allows you to disable logging for privacy compliance, opting out means losing visibility.

Otherwise, you are sending potentially sensitive data (user queries, outputs, possibly PII) into Cloudflare’s cloud. Many vendors refer to this as the "black-box" approach: logs and metrics live outside your control.

As one analysis notes, this can "force the customer’s data—including PII or proprietary information—to leave their secure environment." In short, if you need strict data governance or air-gapped compliance, handing off raw prompts/responses to Cloudflare may be unacceptable.

3. Black-Box Routing

Relatedly, Cloudflare’s dynamic routing and fallback logic are opaque. Internally, the gateway decides which provider endpoint or cached response to use. Customers cannot see the detailed routing or performance heuristics.

This "closed" control plane means you must trust Cloudflare’s algorithms for model selection, caching, and failover, without granular insight. For some organizations (like those under strict audit), this lack of transparency is a hidden cost: you can’t fully certify what happened inside the gateway.

4. Resource Limits & Scaling

The free plan’s hard limits (daily request caps, log caps) can cause sudden throttling or failures for growing apps. Unlike pay-as-you-go clouds, Cloudflare’s free tier stops serving requests rather than billing for overage.

Moving to paid plans can require architectural changes. For example, hitting the free tier’s 100k log cap without realizing it will silently stop logging. These operational constraints translate into "hidden" productivity costs; you may need extra DevOps work to handle deleted logs or upgrade mid-stream.

💡 Summary: Cloudflare’s AI Gateway pricing can appear "free," but any non-trivial deployment must account for Workers usage fees, log/storage needs, and data governance issues. Teams should budget for monitoring Workers usage, possible log shipping or storage, and the potential engineering effort of staying within Cloudflare’s limits.

When Cloudflare AI Gateway Pricing Makes Sense

Cloudflare AI Gateway shines in specific scenarios. For edge-centric or lightweight AI features, it offers a quick on-ramp. If you already use Cloudflare’s CDN/Workers, you can add AI calls with minimal changes (just swap the API endpoint).
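As an illustration of the endpoint swap, the sketch below builds the same chat request twice: once against the provider directly and once through a gateway URL. It only constructs the request objects and sends nothing. `ACCOUNT_ID`, `GATEWAY_ID`, the API key, and the model name are placeholders, and the helper functions are our own, not part of any SDK.

```python
import json
import urllib.request

GATEWAY_HOST = "https://gateway.ai.cloudflare.com/v1"


def gateway_base_url(account_id: str, gateway_id: str, provider: str = "openai") -> str:
    """Build the provider-specific base URL for an AI Gateway."""
    return f"{GATEWAY_HOST}/{account_id}/{gateway_id}/{provider}"


def build_chat_request(base_url: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = json.dumps({
        "model": "gpt-4o-mini",  # placeholder model name
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same request, different base URL: direct to the provider vs. through the gateway.
direct = build_chat_request("https://api.openai.com/v1", "sk-PLACEHOLDER", "Hello")
via_gateway = build_chat_request(
    gateway_base_url("ACCOUNT_ID", "GATEWAY_ID"), "sk-PLACEHOLDER", "Hello"
)
print(direct.full_url)
print(via_gateway.full_url)
```

Only the base URL differs between the two requests; the payload and provider API key stay the same, which is why the integration cost is low.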

This is ideal for small teams or startups that want an instant edge-deployed gateway without DevOps overhead. Early-stage projects can leverage Cloudflare’s free pricing plan to prototype global AI-powered experiences, caching popular responses for speed.

It also fits use cases where global distribution matters. For example, chatbots or inference running directly on Cloudflare’s network can benefit from Cloudflare’s 250+ PoPs and built-in DDoS protection. Simple rate limiting and retries via Cloudflare are also attractive for apps that need basic resilience.

In essence, Cloudflare AI Gateway pricing makes sense when you value speed of integration and breadth of Cloudflare’s network, and when your usage is modest enough to stay within (or only moderately exceed) the free plan.

However, for large organizations with high-volume or highly regulated workloads, these advantages may be outweighed by the hidden costs of Cloudflare AI. The lack of fine-grained control and fixed usage allocations can hinder budgeting and compliance.

Why Some Teams Look Beyond Cloudflare AI Gateway

As AI systems mature, the priorities shift from fast setup to cost predictability, security, and ownership. Teams begin to outgrow Cloudflare AI Gateway due to:

- Growing AI usage: As model usage scales, token volume increases and the need for infrastructure-aligned pricing becomes urgent. Cloudflare’s abstractions can make forecasting difficult.

- Compliance and data residency requirements: Regulated industries often need full control over where prompts and completions are processed. Cloudflare’s SaaS model introduces legal and audit complications around data residency compliance.

- Agentic workflows and RAG pipelines: Multi-step reasoning and retrieval-augmented generation workloads demand tight control, deeper logs, and sometimes local model hosting, all of which are limited under Cloudflare’s black-box infrastructure.

How TrueFoundry Approaches AI Gateway Pricing Differently

If you need the benefits of an AI Gateway but cannot compromise on security, observability, or infrastructure control, TrueFoundry offers a fundamentally different approach.

TrueFoundry deploys the AI Gateway directly inside your own cloud account (AWS, GCP, Azure) or server. The control plane (which manages configuration and settings) is operated by TrueFoundry, but the data plane, where actual prompts and responses are processed, stays entirely in your VPC.

Your data never leaves your infrastructure unless you explicitly choose to move it. In practice, this means:

- You host the gateway service on your infrastructure, directing all LLM traffic through your own network.

- Logs, requests, and responses never leave your cloud account unless you configure external exports.

- Observability is natively integrated – logs go to your S3 bucket, database, or internal analytics tools, preserving full data sovereignty.

- You control backups, resource allocation, encryption policies, and scaling using your own infrastructure and security protocols.

This eliminates the "black box" compromise seen in SaaS-first platforms like Cloudflare. You get transparent performance, cost visibility, and full ownership with TrueFoundry AI Gateway.

TrueFoundry Pricing

If self-hosted under an Enterprise plan, the only marginal cost is infrastructure (typically ~$600–$1,000/month depending on scale). Even in the SaaS version, TrueFoundry charges no hosting fees beyond your selected storage or cloud usage.

This results in a highly predictable cost structure — teams can forecast growth, upgrade tiers gradually, and retain infrastructure-level control throughout.

With TrueFoundry, you can:

- Ensure granular budgeting: Assign usage caps per team, e.g., "Engineering gets $500, Marketing gets $200" and monitor usage live.

- Implement open routing: Connect to commercial APIs (OpenAI, Anthropic) or route traffic to your own fine-tuned models running on EC2, GKE, or spot instances.

- Enable enterprise-grade isolation: Maintain full compliance with IAM, private networking, and data locality mandates.
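To illustrate the idea of per-team usage caps, here is a generic sketch of a budget guard that rejects a request once a team's cap would be exceeded. This is not TrueFoundry's API or configuration format; the class, method names, and dollar amounts are hypothetical and exist only to show the concept.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class TeamBudgets:
    """Hypothetical in-memory tracker for per-team spend caps (USD)."""
    caps: Dict[str, float]
    spent: Dict[str, float] = field(default_factory=dict)

    def record(self, team: str, cost: float) -> bool:
        """Record a request's cost; return False and record nothing if it would exceed the cap."""
        used = self.spent.get(team, 0.0)
        if used + cost > self.caps.get(team, 0.0):
            return False
        self.spent[team] = used + cost
        return True

    def remaining(self, team: str) -> float:
        """Budget left for a team; teams without a cap have zero budget."""
        return self.caps.get(team, 0.0) - self.spent.get(team, 0.0)


budgets = TeamBudgets(caps={"engineering": 500.0, "marketing": 200.0})
print(budgets.record("marketing", 150.0))  # True
print(budgets.record("marketing", 75.0))   # False: would exceed the 200 cap
print(budgets.remaining("marketing"))      # 50.0
```

A production gateway would persist spend and enforce caps at the routing layer; the sketch only shows the check itself.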

Cloudflare AI Gateway vs TrueFoundry: Detailed Comparison

Enterprises evaluating AI gateways often weigh a managed service like Cloudflare against a self-hosted platform such as TrueFoundry. Below is a comparison of key factors:

Ready to Build AI Without Pricing Surprises?

Selecting an AI gateway is a long-term infrastructure decision, not just a question of upfront cost. Cloudflare AI Gateway works well for lightweight, edge-focused AI workloads and early experimentation.

But as systems move toward production scale, priorities shift to cost control, observability, compliance, and flexibility.

Platforms like TrueFoundry are built for teams scaling AI in production, where infrastructure ownership, data privacy, and usage-based budgeting matter deeply.

One scaling startup migrated from Cloudflare to TrueFoundry after unpredictable logging costs began exceeding compute budgets. By switching to a self-hosted gateway in their AWS VPC, they achieved 35% cost reduction, unified logging to S3, and routed traffic to a mix of OpenAI and private Mistral models — all with clear per-team budget controls.

You can get similar results for your organization as well. Book a demo to see how TrueFoundry can bring AI cost predictability and control to your team.

Frequently Asked Questions

Is Cloudflare AI Gateway free?

Yes, Cloudflare offers free access to core AI Gateway features on all plans, including Workers Free and Workers Paid. However, usage limits, log retention policies, and underlying compute (e.g., Workers CPU time) may introduce hidden costs as scale increases.

How much will Cloudflare AI cost?

Cloudflare AI Gateway itself has no per-request fee. Costs emerge based on:

- Log volume and retention (e.g., 100,000 logs on free tier, 1M on Workers Paid).

- Worker usage for request processing and routing.

- Cloudflare plan level (e.g., Standard vs Enterprise).

Pricing can become unpredictable at high volume without custom plans or external log management.

How is TrueFoundry more cost-effective than Cloudflare AI?

TrueFoundry runs entirely inside your own cloud (AWS, GCP, Azure), eliminating data transfer costs and SaaS markups. You pay only for the compute and storage you allocate — with full routing flexibility, transparent logs, and no vendor lock-in. Teams can also route to private models or use spot instances to reduce cost by 60–70% versus managed APIs.
