Architecting TrueFoundry on Azure: Control Plane and Compute Integration

Wiring up a Generative AI platform on Microsoft Azure means stitching together distinct compute, identity, and AI primitives. You provision raw capacity via Azure Kubernetes Service (AKS) and Spot VMs, handle identity via Entra ID, and route requests to Azure OpenAI. Friction hits when your infrastructure teams have to orchestrate these connections manually for every new model deployment.

TrueFoundry deploys as an infrastructure overlay inside your Azure subscription. We handle the deployment lifecycle, identity federation, and autoscaling. This post breaks down the exact integration patterns we use to connect TrueFoundry with Azure, covering the split-plane deployment, network boundaries, and workload identity mechanics.

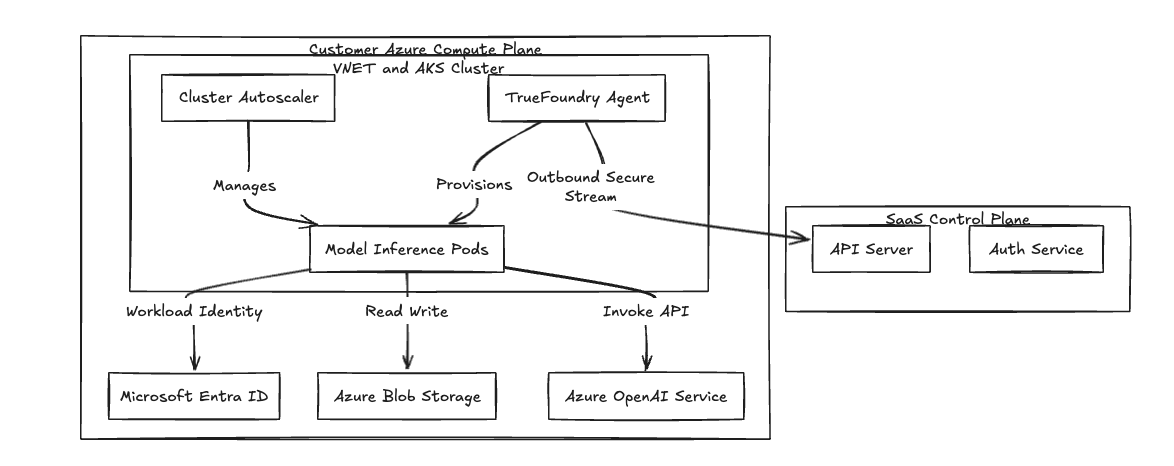

Deployment Model: Split-Plane Architecture

We use a split-plane architecture to isolate workload execution from platform management. If you build platforms on Amazon EKS, this model will look familiar: the control plane is kept separate from the data plane (called the compute plane here), where your workloads actually run.

- The Control Plane: Acts as the API server and metadata store. It holds deployment manifests, RBAC configurations, and telemetry data.

- The Compute Plane: Runs inside your AKS cluster. It consists of the TrueFoundry agent, local controllers, and your actual model weights and GPUs.

We connect the two planes over a secure, outbound-only gRPC stream or WebSocket. The cluster-side agent initiates the connection to the Control Plane to pull manifests and push logs, so you do not need to open any inbound ports in your VNET's Network Security Groups. Your VNET keeps denying inbound traffic from the internet, which is the NSG default.
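To make the "agent dials out" pattern concrete, here is a minimal sketch of a pull-based agent loop. It simplifies the persistent gRPC/WebSocket stream into a request/response polling loop, and `ControlPlaneClient`, `pull_manifests`, `push_logs`, and `Agent` are hypothetical names, not TrueFoundry's actual agent API. What it shows is the property that matters for the NSG rules: every connection originates inside the cluster, and a dropped link is handled by reconnecting with capped exponential backoff rather than by listening on a port.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Protocol


class ControlPlaneClient(Protocol):
    """Hypothetical interface for the agent's outbound channel (not a TrueFoundry API)."""

    def pull_manifests(self) -> List[dict]: ...

    def push_logs(self, lines: List[str]) -> None: ...


@dataclass
class Agent:
    client: ControlPlaneClient
    apply: Callable[[dict], None]  # hands a manifest to the in-cluster controllers
    poll_interval_s: float = 5.0
    max_backoff_s: float = 30.0
    pending_logs: List[str] = field(default_factory=list)

    def sync_once(self) -> int:
        """One agent-initiated round trip: pull desired state, apply it, ship buffered logs."""
        manifests = self.client.pull_manifests()
        for manifest in manifests:
            self.apply(manifest)
        if self.pending_logs:
            self.client.push_logs(self.pending_logs)
            self.pending_logs = []
        return len(manifests)

    def run(self, iterations: int, sleep: Callable[[float], None] = time.sleep) -> None:
        """Reconnect with capped exponential backoff; no inbound listener is ever opened."""
        backoff = 1.0
        for _ in range(iterations):
            try:
                self.sync_once()
                backoff = 1.0  # healthy round trip: reset the backoff
                sleep(self.poll_interval_s)
            except ConnectionError:
                sleep(backoff)  # the agent retries outbound; nothing dials into the VNET
                backoff = min(backoff * 2, self.max_backoff_s)
```

A real streaming connection would replace the polling interval, but the security argument is the same: the Control Plane never needs a route into your network.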

Fig 1: The Split-Plane Architecture isolates data processing within the customer VNET.

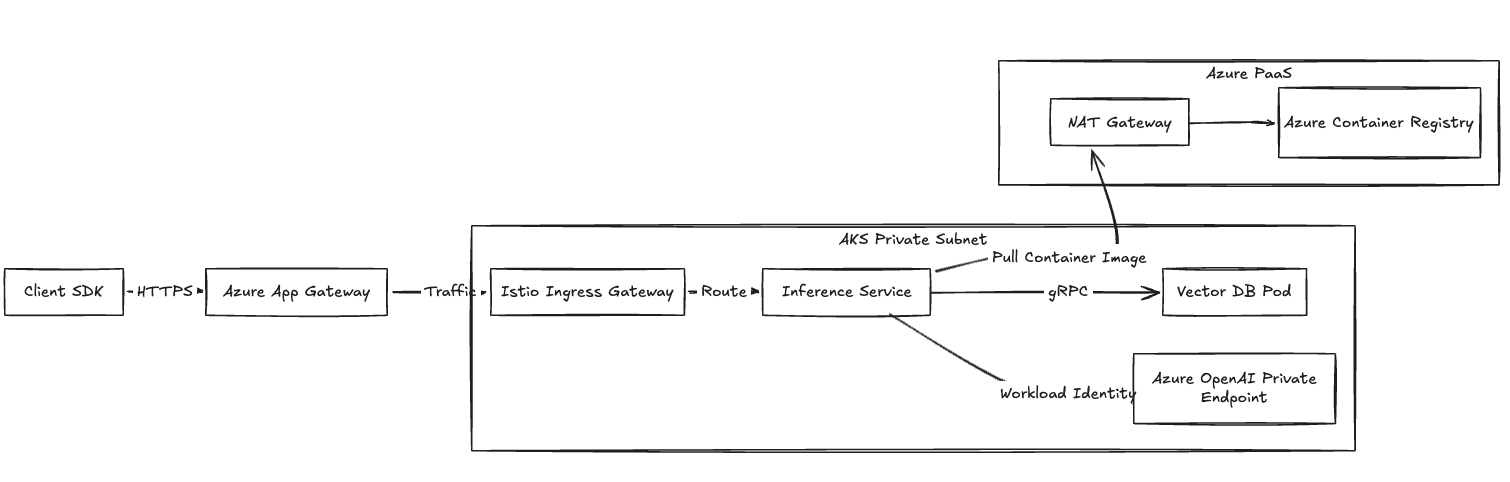

Networking Topology and Traffic Flow

We configure the compute plane networking using Azure CNI for direct pod-level IP assignment. Your compute resources stay in private subnets.
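Because Azure CNI (in its traditional, non-overlay mode) gives every pod an IP from the node subnet, subnet size has to be planned up front. The sketch below applies Azure's published sizing rule of thumb, assuming one extra node of upgrade surge and the five addresses Azure reserves in every subnet; `required_ips` and `smallest_prefix` are illustrative helper names, and a larger surge setting would need a larger budget.

```python
AZURE_RESERVED_IPS_PER_SUBNET = 5  # Azure reserves 5 addresses in every subnet


def required_ips(nodes: int, max_pods: int) -> int:
    """Subnet IPs needed when every pod gets a VNET IP (traditional Azure CNI).

    Budgets one extra node for upgrade surge; each node consumes one IP for
    itself plus one IP per pod slot.
    """
    surge_nodes = nodes + 1
    return surge_nodes + surge_nodes * max_pods


def smallest_prefix(nodes: int, max_pods: int) -> int:
    """Longest IPv4 prefix length (i.e. smallest subnet) that still fits the cluster."""
    total = required_ips(nodes, max_pods) + AZURE_RESERVED_IPS_PER_SUBNET
    return 32 - (total - 1).bit_length()
```

For example, 10 nodes at 30 pods each need 341 addresses (346 including the reserved five), so the smallest subnet that fits is a /23; a /24 holds only 256.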

Ingress and Egress

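- Inbound Traffic: Application traffic enters through an Azure Application Gateway or an internal Standard Load Balancer. Application Gateway can terminate TLS before passing traffic to the Istio Ingress Gateway running inside AKS; the Load Balancer operates at Layer 4, so with that option TLS passes through and terminates at the Istio Ingress Gateway.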

- Outbound Traffic: AKS worker nodes route outbound calls through an Azure NAT Gateway. They use this path to pull images from Azure Container Registry and poll the Control Plane.

Private Endpoint Integration

For strict compliance boundaries, we route traffic over Azure Private Link. Connections from your inference pods to Azure OpenAI, Key Vault, and Blob Storage route entirely over the Microsoft backbone.
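With Private Link, the service's public hostname resolves (through the matching `privatelink.*` private DNS zone) to the private endpoint's IP inside your VNET. A quick sanity check from inside an inference pod is to resolve the hostname and confirm every address is private. This is a minimal stdlib sketch; the function names are illustrative, and the hostname in the comment is a placeholder.

```python
import ipaddress
import socket
from typing import Set


def resolved_addresses(host: str, port: int = 443) -> Set[str]:
    """Resolve a hostname the same way the pod's resolver would."""
    return {info[4][0] for info in socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)}


def uses_private_endpoint(addresses: Set[str]) -> bool:
    """True only if resolution succeeded and every address is in a private range."""
    return bool(addresses) and all(ipaddress.ip_address(a).is_private for a in addresses)


# Run from inside a pod, e.g. (placeholder hostname):
# uses_private_endpoint(resolved_addresses("<storage-account>.blob.core.windows.net"))
```

If any address comes back public, the pod is still reaching the service over its public endpoint, which usually means the private DNS zone is not linked to the VNET.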

Fig 2: Network traffic flow detailing ingress and private connectivity to Azure PaaS.

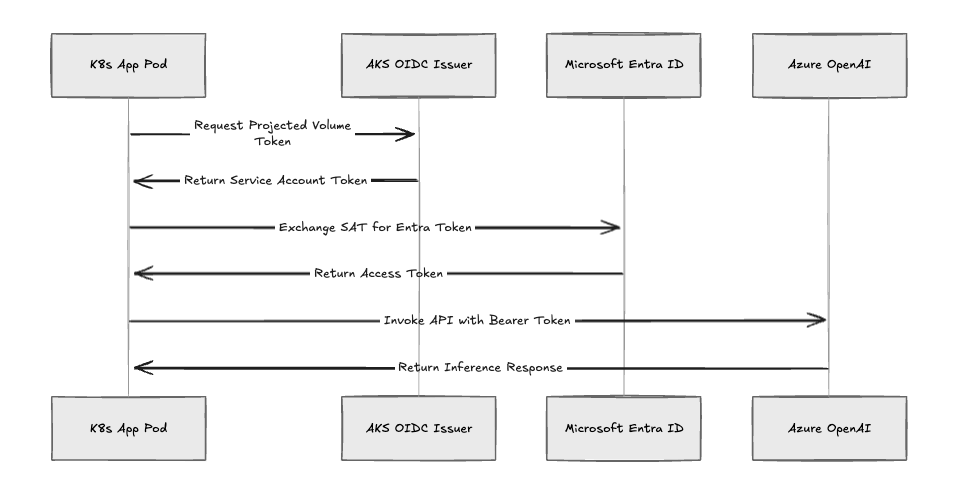

Identity Federation: Entra Workload ID

Hardcoded static secrets, such as Service Principal client secrets, introduce severe rotation overhead. We authenticate workloads dynamically using Microsoft Entra Workload ID. If you manage AWS environments, this is the Azure equivalent of AWS IAM Roles for Service Accounts (IRSA).

When you deploy a pipeline, we execute this sequence:

- Service Account Creation: We provision a Kubernetes Service Account in the workload's namespace.

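- Federation: We link this Service Account to a user-assigned Managed Identity in Entra ID by creating a federated identity credential that trusts the cluster's OIDC issuer and the Service Account's subject (system:serviceaccount:<namespace>:<name>).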

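- Token Exchange: Kubernetes projects a signed Service Account token into the pod; the token's issuer is the cluster's AKS OIDC Issuer. The Azure SDK exchanges this token for an Entra access token at the Entra ID token endpoint, and Entra validates its signature against the keys the issuer publishes through OpenID Connect discovery.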

- Resource Access: The pod uses this token to pull models from Blob Storage or hit Azure OpenAI.
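When federation fails, the cause is usually a mismatch between the projected token and the federated identity credential. Entra compares the token's issuer, subject, and audience (`api://AzureADTokenExchange` for workload identity) against the credential. The sketch below decodes the token payload and mirrors that comparison as a debugging aid; it deliberately does not verify the signature (Entra does that), and the helper names are hypothetical.

```python
import base64
import json

TOKEN_EXCHANGE_AUDIENCE = "api://AzureADTokenExchange"


def decode_claims(jwt: str) -> dict:
    """Decode a JWT payload WITHOUT verifying the signature (debugging only)."""
    payload = jwt.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped base64url padding
    return json.loads(base64.urlsafe_b64decode(payload))


def matches_federation(claims: dict, issuer: str, namespace: str, service_account: str) -> bool:
    """Mirror the issuer/subject/audience match Entra applies to a federated credential."""
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    return (
        claims.get("iss") == issuer
        and claims.get("sub") == f"system:serviceaccount:{namespace}:{service_account}"
        and TOKEN_EXCHANGE_AUDIENCE in audiences
    )


# Inside a pod, the projected token path is in AZURE_FEDERATED_TOKEN_FILE:
# claims = decode_claims(open(os.environ["AZURE_FEDERATED_TOKEN_FILE"]).read())
```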

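Application code uses DefaultAzureCredential, which detects the workload identity configuration automatically, so no secrets live in code or configuration. Because each workload authenticates as its own Managed Identity, the blast radius is scoped strictly to the RBAC permissions granted to that identity.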
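DefaultAzureCredential performs the token exchange for you, and production code should keep using the SDK. Purely to illustrate what that exchange roughly involves, the stdlib sketch below builds the OAuth 2.0 client-credentials request with a JWT client assertion. It assumes the environment variables the Azure Workload Identity webhook injects into the pod (`AZURE_CLIENT_ID`, `AZURE_TENANT_ID`, `AZURE_FEDERATED_TOKEN_FILE`, and optionally `AZURE_AUTHORITY_HOST`); the function names are hypothetical.

```python
import json
import os
import urllib.parse
import urllib.request
from typing import Mapping, Tuple

JWT_BEARER = "urn:ietf:params:oauth:client-assertion-type:jwt-bearer"


def build_token_request(scope: str, env: Mapping[str, str] = os.environ) -> Tuple[str, bytes]:
    """Build the form-encoded request that trades the projected token for an Entra token."""
    authority = env.get("AZURE_AUTHORITY_HOST", "https://login.microsoftonline.com/").rstrip("/")
    url = f"{authority}/{env['AZURE_TENANT_ID']}/oauth2/v2.0/token"
    with open(env["AZURE_FEDERATED_TOKEN_FILE"]) as f:
        assertion = f.read().strip()  # the Kubernetes-signed Service Account token
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": env["AZURE_CLIENT_ID"],
        "scope": scope,  # e.g. "https://cognitiveservices.azure.com/.default"
        "client_assertion_type": JWT_BEARER,
        "client_assertion": assertion,
    }).encode()
    return url, body


def fetch_access_token(scope: str) -> str:
    """POST the request (urllib sends it as application/x-www-form-urlencoded)."""
    url, body = build_token_request(scope)
    req = urllib.request.Request(url, data=body, method="POST")
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)["access_token"]
```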

Fig 3: The Entra Workload ID authentication flow.

Compute Orchestration: Spot VM Integration

Running steady-state inference entirely on regular-priority (On-Demand) VMs keeps baseline costs high. We integrate directly with AKS node pools to orchestrate Azure Spot Virtual Machines (similar to using Amazon EC2 Spot Instances).

We manage Spot capacity using the following logic:

- Provisioning: We build secondary node pools with priority=Spot and eviction-policy=Delete.

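- Eviction Handling: Our controller polls the Scheduled Events endpoint of the Azure Instance Metadata Service (IMDS). When it detects a Preempt event (Azure gives at least a 30-second warning), we cordon and drain the node. The Kubernetes scheduler reschedules the evicted pods onto an On-Demand fallback node, and the Cluster Autoscaler scales up the fallback pool if no capacity is free.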
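The detection half of that loop can be sketched with the standard library. Scheduled Events are served at a link-local IMDS address, require the `Metadata: true` header, and should be fetched without an HTTP proxy. This is a minimal sketch, not our controller's code: `vm_name` is assumed to be the instance name as IMDS reports it, and the cordon-and-drain step is left out.

```python
import json
import urllib.request
from typing import Optional

SCHEDULED_EVENTS_URL = "http://169.254.169.254/metadata/scheduledevents?api-version=2020-07-01"

# IMDS must be called directly, never through an HTTP proxy.
_NO_PROXY = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def fetch_scheduled_events(timeout: float = 2.0) -> dict:
    """Query IMDS Scheduled Events; only reachable from inside an Azure VM."""
    req = urllib.request.Request(SCHEDULED_EVENTS_URL, headers={"Metadata": "true"})
    with _NO_PROXY.open(req, timeout=timeout) as resp:
        return json.load(resp)


def preemption_for(doc: dict, vm_name: str) -> Optional[dict]:
    """Return the scheduled Preempt (Spot eviction) event targeting this VM, if any."""
    for event in doc.get("Events", []):
        if event.get("EventType") == "Preempt" and vm_name in event.get("Resources", []):
            return event
    return None
```

Polling every few seconds leaves most of the 30-second window for draining, which is why the check itself stays this small.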

For teams running batch inference or fault-tolerant API serving, this setup (comparable to running Karpenter with Spot capacity on AWS) can reduce compute instance costs by up to 80%, depending on workload flexibility.
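For a fault-tolerant workload to actually use the Spot pool, its pods must tolerate the `kubernetes.azure.com/scalesetpriority=spot:NoSchedule` taint that AKS places on Spot nodes. The sketch below, with a hypothetical helper name, emits a pod spec fragment that allows Spot placement and prefers it without requiring it, which is what lets evicted pods fall back to regular nodes.

```python
SPOT_LABEL = "kubernetes.azure.com/scalesetpriority"  # label and taint key AKS puts on Spot nodes


def spot_preferred_scheduling(weight: int = 100) -> dict:
    """Pod spec fragment: may run on Spot nodes and prefers them, but does not require them."""
    if not 1 <= weight <= 100:
        raise ValueError("preferred node affinity weight must be in 1..100")
    spot_term = {"key": SPOT_LABEL, "operator": "In", "values": ["spot"]}
    return {
        # Tolerate the NoSchedule taint AKS applies to Spot node pools.
        "tolerations": [
            {"key": SPOT_LABEL, "operator": "Equal", "value": "spot", "effect": "NoSchedule"}
        ],
        # Soft preference: after an eviction, the scheduler can still place
        # the pod on regular (On-Demand) nodes.
        "affinity": {
            "nodeAffinity": {
                "preferredDuringSchedulingIgnoredDuringExecution": [
                    {"weight": weight, "preference": {"matchExpressions": [spot_term]}}
                ]
            }
        },
    }
```

Using `requiredDuringScheduling...` instead would pin the pod to Spot and leave it Pending after an eviction, defeating the fallback.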

The AI Gateway: Unifying Models

Managing distinct API keys and Tokens-Per-Minute (TPM) limits across multiple Azure regions creates operational drag. The TrueFoundry AI Gateway abstracts this away: similar to routing requests through Amazon Bedrock, developers call a single internal API endpoint.

- Smart Routing: We load balance requests across Azure regions. If East US rate-limits your request, the Gateway retries against West Europe.

- Failover: If an Azure PaaS outage occurs, the Gateway can fail traffic over to a Llama 3 or Mistral instance hosted directly on your AKS compute plane.
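The Gateway's internal routing logic isn't described here, so the following is only a minimal sketch of priority-ordered failover under simplifying assumptions: each backend is modeled as a callable returning `(status, body)`, and `Backend`, `route`, and the backend names are hypothetical. Rate limits (HTTP 429), server errors, and connection failures move on to the next backend; other client errors are surfaced immediately, since retrying them elsewhere would fail the same way. Real load balancing across regions would add weights or health tracking on top.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

RETRYABLE = {429, 500, 502, 503, 504}  # rate limits and server-side failures


@dataclass
class Backend:
    name: str  # hypothetical labels, e.g. "azure-eastus" or "aks-llama3"
    send: Callable[[Dict], Tuple[int, Dict]]  # returns (HTTP status, JSON body)


def route(request: Dict, backends: List[Backend]) -> Tuple[str, Dict]:
    """Try backends in priority order, failing over on 429s, 5xx, and connection errors."""
    last_error: Optional[str] = None
    for backend in backends:
        try:
            status, body = backend.send(request)
        except OSError as exc:  # connection refused, timeouts, DNS failures
            last_error = f"{backend.name}: {exc}"
            continue
        if 200 <= status < 300:
            return backend.name, body
        if status not in RETRYABLE:
            # Client errors would fail everywhere, so surface them instead of retrying.
            raise RuntimeError(f"{backend.name} rejected the request with HTTP {status}")
        last_error = f"{backend.name}: HTTP {status}"
    raise RuntimeError(f"all backends failed (last error: {last_error})")
```

Ordering the list as East US, then West Europe, then a self-hosted model on the AKS compute plane reproduces both behaviors described above.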

Infrastructure as Code Compatibility

We align with standard GitOps and IaC practices. You provision the underlying Azure environment using our maintained Terraform modules.

Your Terraform state manages the VNETs, the AKS cluster, the OIDC issuers, and the underlying PostgreSQL databases. The TrueFoundry overlay simply maps to these native resources, keeping your infrastructure auditable and compliant.

Operational Comparison

Summary

Deploying TrueFoundry on Azure isolates your compute and data execution while we manage the application lifecycle. You maintain direct authority over your VNETs, NSGs, and data residency perimeters. We handle the orchestration. By abstracting the complex wiring between AKS, Entra ID, and Azure OpenAI, we let your engineering teams focus on shipping models instead of fighting infrastructure.
