TrueFoundry MCP Gateway: Critical Infrastructure for Productive and Secure Enterprise AI in 2026

The era of the "Context Window Wars"—the race to offer ever-larger token limits with the promise of perfect reasoning—has concluded. As enterprise AI matures, a clear strategic truth has emerged from deployment and research: Maximum context does not equate to maximum intelligence.

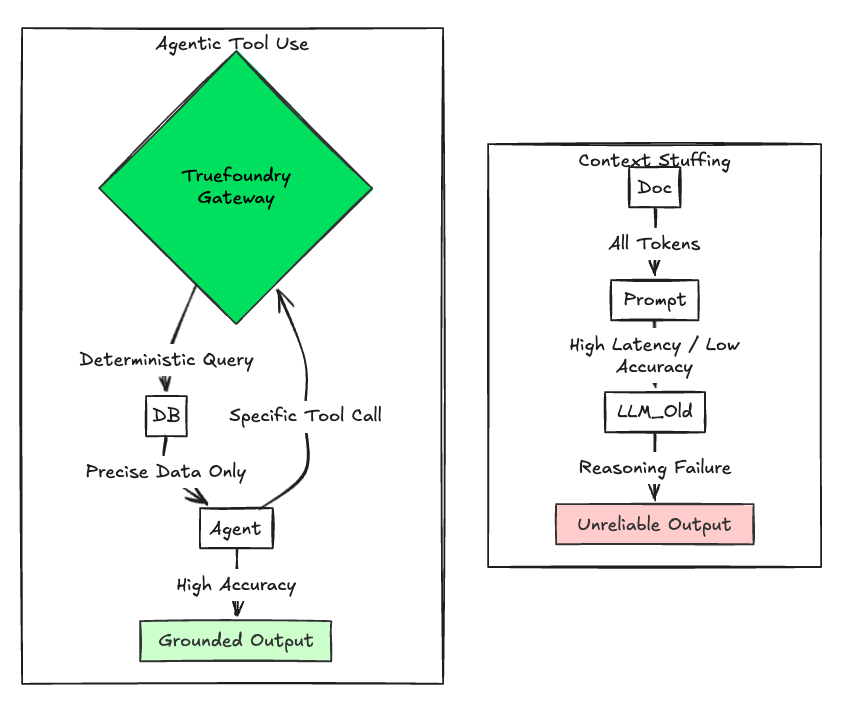

Relying on Large Language Models (LLMs) as omniscient databases by "stuffing" them with vast amounts of raw data has proven inefficient, leading to reasoning fragility, unpredictable hallucinations, and exorbitant inference costs.

The winning architectural standard for 2026 is the pivot from Passive Context to Active Tool Use. We are evolving systems from those that merely read to autonomous agents that act. This fundamental shift, however, necessitates a dedicated infrastructure to manage the resulting N×M Integration Nightmare of connecting agents to critical internal systems. The TrueFoundry MCP Gateway is engineered to be this central control plane, delivering measurable ROI, security, and scalability to the agentic enterprise.

1. The Performance Imperative: Shifting from Context Volume to Reasoning Velocity

For a brief moment last year, it seemed like vector databases were obsolete. Why index data when you can just dump your entire Jira history into the prompt of Gemini 1.5 or Claude 3.5?

Intuitively this sounds reasonable, yet careful recent research shows the opposite. The paper Context Length Alone Hurts LLM Performance Despite Perfect Retrieval (https://aclanthology.org/2025.findings-emnlp.1264.pdf), published in Findings of EMNLP 2025, demonstrates a sobering reality: while models can retrieve a specific needle from a 1M-token haystack, their ability to reason over that data collapses. When an agent is forced to process 500 pages of logs to find an error, the noise overwhelms the signal. The model hallucinates relationships that don't exist or misses the causal link buried on page 203.

To build reliable agents, we had to pivot. Instead of giving the model the data, we give the model Tools to query the data itself.

- Old Way (Context Stuffing): "Here are the last 10,000 Jira tickets. Which ones are related to the payment bug?" (High latency, low reasoning accuracy)

- New Way (enabled by TrueFoundry MCP Gateway): "Here is a tool called search_jira. Use it to find tickets related to 'payment bugs'." (Low latency, high reasoning accuracy)

Fig 1: New Way vs Old Way
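The contrast between the two prompts can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Jira's or TrueFoundry's API. A hypothetical in-memory list of 10,000 tickets stands in for a real Jira project. The `search_jira` descriptor uses the MCP tool shape (`name`, `description`, `inputSchema`). The ticket data, the handler, and the prompt builders are all illustrative. The point is simply how much text each approach pushes into the model's context.

```python
import json
from dataclasses import dataclass


@dataclass
class Ticket:
    key: str
    summary: str


# Hypothetical stand-in for a Jira project: 10,000 tickets, 20 of them payment bugs.
TICKETS = [
    Ticket(f"PAY-{i}", "Checkout payment bug" if i % 500 == 0 else f"Unrelated task {i}")
    for i in range(10_000)
]

# MCP-style tool descriptor: in the new way, this is all the model sees up front.
SEARCH_JIRA_TOOL = {
    "name": "search_jira",
    "description": "Search Jira tickets whose summary contains a keyword phrase.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string"},
            "limit": {"type": "integer", "default": 20},
        },
        "required": ["query"],
    },
}


def stuffed_prompt(question: str) -> str:
    """Old way: serialize every ticket into the prompt."""
    body = "\n".join(f"{t.key}: {t.summary}" for t in TICKETS)
    return f"{body}\n\n{question}"


def tool_prompt(question: str) -> str:
    """New way: describe the tool and let the model decide when to call it."""
    return f"Available tools: {json.dumps([SEARCH_JIRA_TOOL])}\n\n{question}"


def search_jira(query: str, limit: int = 20) -> list[dict]:
    """Hypothetical tool handler: only matching tickets re-enter the context."""
    q = query.lower()
    hits = [t for t in TICKETS if q in t.summary.lower()]
    return [{"key": t.key, "summary": t.summary} for t in hits[:limit]]


if __name__ == "__main__":
    question = "Which tickets are related to the payment bug?"
    print("stuffed prompt chars:", len(stuffed_prompt(question)))
    print("tool prompt chars:   ", len(tool_prompt(question)))
    print("tool result count:   ", len(search_jira("payment bug")))
```

In this toy setup, the stuffed prompt is hundreds of times longer than the tool prompt. The tool call then returns only the 20 relevant tickets.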

This shift keeps the context window clean, the reasoning sharp, and the costs predictable. But it forces IT to manage thousands of secure tool connections, and the sections below walk through how TrueFoundry helps.

2. Addressing the Complexity of Tool Integration at Scale (The N×M Integration Problem)

In a typical enterprise today, you likely have 50 different AI agents (DevOps bot, HR assistant, SQL Analyst) needing access to 50 different internal systems (GitHub, BigQuery, Slack, Salesforce).

Without a standardized protocol and a central gateway, every agent team builds its own connector for every tool. You end up with N×M brittle integrations. If the GitHub API changes, the ten different agents that each maintain their own GitHub connector break simultaneously.
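The N×M arithmetic is easy to check. The sketch below compares two models:

- Point-to-point, with one connector per agent-tool pair.
- Hub, where each agent and each system integrates once with a shared gateway.

Counting the hub model as N+M is the usual simplification and an assumption here, not a TrueFoundry figure. The function names are illustrative.

```python
def point_to_point(agents: int, systems: int) -> int:
    """Every agent team writes its own connector for every system: N x M."""
    return agents * systems


def via_gateway(agents: int, systems: int) -> int:
    """Each agent connects to the gateway once; each system is registered once: N + M."""
    return agents + systems


def connectors_to_patch(agents_using_system: int, use_gateway: bool) -> int:
    """Connectors to fix when one backend API changes (assumes one shared server behind a gateway)."""
    return 1 if use_gateway else agents_using_system


if __name__ == "__main__":
    n, m = 50, 50
    print(point_to_point(n, m))  # 2500
    print(via_gateway(n, m))     # 100
    print(connectors_to_patch(10, use_gateway=False))  # 10
    print(connectors_to_patch(10, use_gateway=True))   # 1
```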

The Solution: The Virtual MCP Server

TrueFoundry solves this with the Virtual MCP Server abstraction. Instead of connecting agents directly to physical APIs, you aggregate tools into logical, managed endpoints.

You can create a "Finance Agent Virtual Server" that exposes:

- The query_table tool from the BigQuery MCP server.

- The get_exchange_rate tool from the Stripe MCP server.

- The send_alert tool from the Slack MCP server.

The agent sees one endpoint; the Gateway handles the routing. This lets Platform Engineers swap out backend implementations (e.g., migrating from Stripe to Adyen) without changing a single line of the agent's code.
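The routing idea behind a virtual server can be modeled as a table from tool name to backend. This is a minimal sketch assuming a simple in-process registry. `VirtualMCPServer`, `expose`, `call_tool`, and the stub backends are hypothetical names for illustration, not TrueFoundry's API. It shows one property: re-pointing `get_exchange_rate` from a Stripe stub to an Adyen stub leaves the agent-side function untouched.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

ToolHandler = Callable[..., Any]


@dataclass
class VirtualMCPServer:
    """Hypothetical virtual server: tool name -> (backend label, handler)."""

    name: str
    routes: dict[str, tuple[str, ToolHandler]] = field(default_factory=dict)

    def expose(self, tool: str, backend: str, handler: ToolHandler) -> None:
        # Re-registering an existing tool name re-points it at a new backend.
        self.routes[tool] = (backend, handler)

    def list_tools(self) -> list[str]:
        return sorted(self.routes)

    def call_tool(self, tool: str, **arguments: Any) -> dict[str, Any]:
        backend, handler = self.routes[tool]
        return {"backend": backend, "result": handler(**arguments)}


# Stub backends standing in for separate MCP servers; return values are placeholders.
def bigquery_query_table(sql: str) -> list[dict]:
    return []


def stripe_exchange_rate(base: str, quote: str) -> dict:
    return {"pair": f"{base}/{quote}", "source": "stripe-stub"}


def adyen_exchange_rate(base: str, quote: str) -> dict:
    return {"pair": f"{base}/{quote}", "source": "adyen-stub"}


def slack_send_alert(channel: str, text: str) -> bool:
    return True


def agent_step(server: VirtualMCPServer) -> dict[str, Any]:
    """Agent code: it knows only the tool name, never which backend serves it."""
    return server.call_tool("get_exchange_rate", base="EUR", quote="USD")


if __name__ == "__main__":
    finance = VirtualMCPServer("finance-agent-virtual-server")
    finance.expose("query_table", "bigquery", bigquery_query_table)
    finance.expose("get_exchange_rate", "stripe", stripe_exchange_rate)
    finance.expose("send_alert", "slack", slack_send_alert)
    print(finance.list_tools())
    print(agent_step(finance)["backend"])  # stripe

    # The platform team migrates the backend; agent_step is unchanged.
    finance.expose("get_exchange_rate", "adyen", adyen_exchange_rate)
    print(agent_step(finance)["backend"])  # adyen
```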

3. ROI of TrueFoundry MCP Gateway

Why buy a gateway instead of building direct connections? The math is simple and brutal. Let's look at the operational reality for a mid-sized enterprise running 10 active agents.

Scenario: 10 Agents × 5 Tools each = 50 Integrations.

The Bottom Line: For an enterprise running 100k agent invocations a month, the shift from Context Stuffing to TrueFoundry MCP Gateway-managed Tool Use can save over $50,000/month in pure token costs, excluding the massive savings in engineering hours.
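The token side of this estimate reduces to one formula: invocations × tokens saved per call × price per token. The sketch below makes that explicit. The inputs in the usage block are assumptions chosen for illustration, not the figures behind the estimate above: 200,000 input tokens per stuffed call, 5,000 per tool-based call, and $3 per million input tokens. Output tokens and engineering time are excluded.

```python
def monthly_token_savings(
    invocations_per_month: int,
    stuffed_tokens_per_call: int,
    tool_tokens_per_call: int,
    usd_per_million_input_tokens: float,
) -> float:
    """Input-token spend avoided per month (output tokens and engineering time excluded)."""
    tokens_saved = invocations_per_month * (stuffed_tokens_per_call - tool_tokens_per_call)
    return tokens_saved / 1_000_000 * usd_per_million_input_tokens


def breakeven_tokens_per_call(
    target_usd_per_month: float,
    invocations_per_month: int,
    usd_per_million_input_tokens: float,
) -> float:
    """Input tokens each invocation must shed to reach a target monthly saving."""
    usd_per_call = target_usd_per_month / invocations_per_month
    return usd_per_call / usd_per_million_input_tokens * 1_000_000


if __name__ == "__main__":
    # Assumed inputs for illustration only.
    savings = monthly_token_savings(100_000, 200_000, 5_000, 3.0)
    print(f"${savings:,.0f} per month")  # $58,500 per month
    print(round(breakeven_tokens_per_call(50_000, 100_000, 3.0)))  # 166667
```

With these assumed inputs, the estimate comes to $58,500 per month. The break-even helper shows that, at $3 per million input tokens, each of 100k invocations would need to shed about 167,000 input tokens to clear $50,000.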

4. Identity is the New Perimeter: Mitigating Enterprise Security Risk

Perhaps the biggest risk in Agentic AI is giving an agent "Superuser" (or Root) status—a service account with broad admin privileges. If an autonomous coding agent is compromised, you don't want it to have DROP TABLE access to your entire production database.

TrueFoundry solves this with OAuth 2.0 Identity Injection.

- The Scenario: A human user (Alice) prompts an agent to "create a ticket in Jira."

- The Interception: The Gateway intercepts the tool call.

- The Injection: It checks if Alice has a valid OAuth token for Jira. If she does, the Gateway injects her token into the request.

The Result: The agent acts On-Behalf-Of (OBO) Alice. It can only touch what Alice can touch: no shared keys, and no standing superuser account for a compromised agent to abuse.

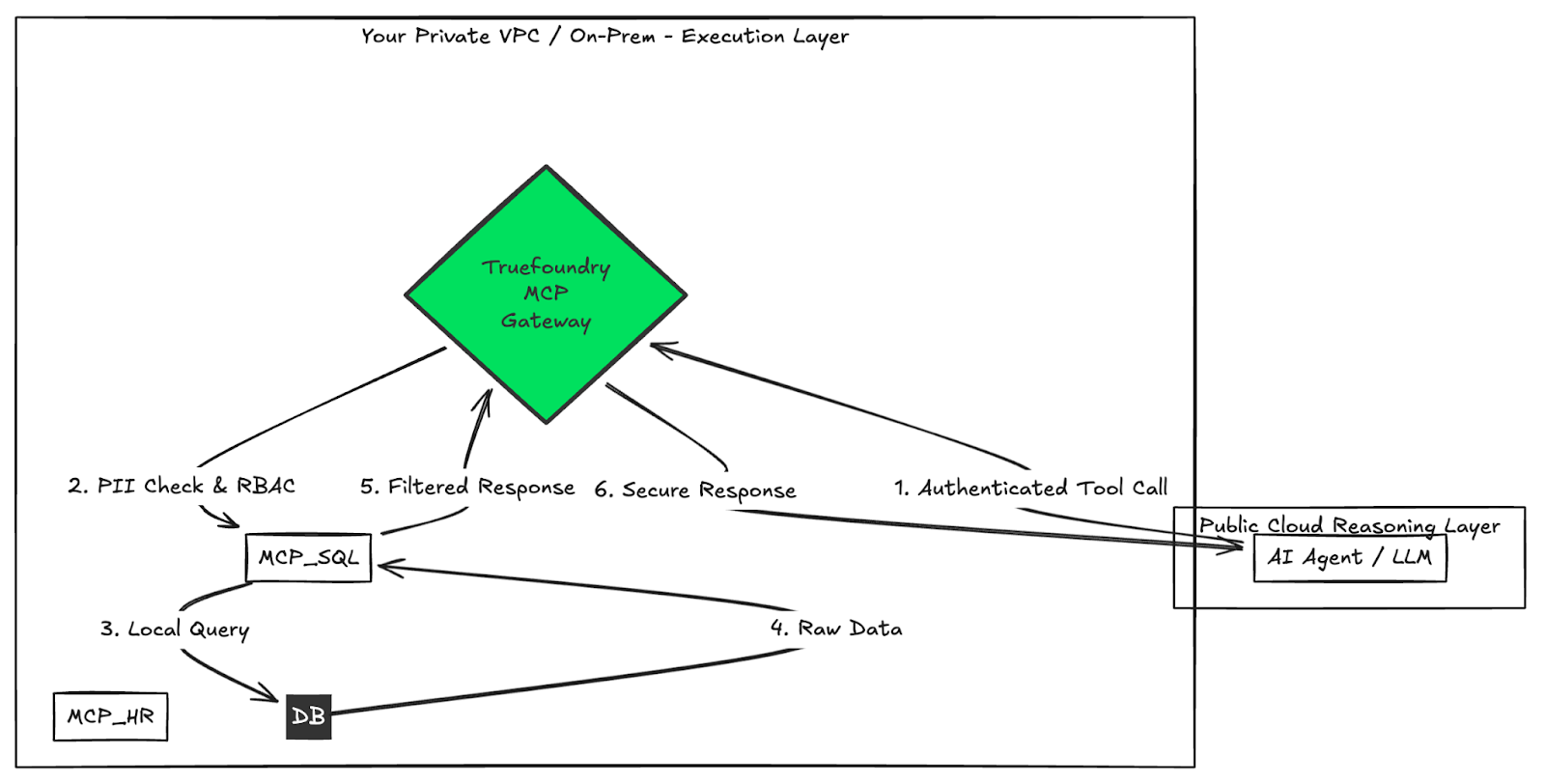
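The interception and injection steps can be sketched as a function that rewrites a tool call before it is forwarded. This is a minimal sketch, assuming a per-user token vault keyed by (user, provider). The `OAuthToken` fields, `TokenStore`, `ConsentRequired`, and the tool-call dict shape are hypothetical, not TrueFoundry's implementation. Only the `Authorization: Bearer` header format comes from the OAuth 2.0 bearer-token convention.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class OAuthToken:
    access_token: str
    expires_at: float  # Unix timestamp in seconds

    def is_valid(self, now: Optional[float] = None) -> bool:
        current = time.time() if now is None else now
        return current < self.expires_at


class ConsentRequired(Exception):
    """No valid token for this user; a gateway would start an OAuth consent flow here."""


# Hypothetical per-user token vault keyed by (user, provider).
TokenStore = dict[tuple[str, str], OAuthToken]


def inject_identity(tool_call: dict, user: str, store: TokenStore) -> dict:
    """Return a copy of the tool call that carries the user's own bearer token (OBO)."""
    provider = tool_call["provider"]
    token = store.get((user, provider))
    if token is None or not token.is_valid():
        raise ConsentRequired(f"{user} has no valid {provider} token")
    headers = dict(tool_call.get("headers", {}))
    # Any credential the agent tried to supply is overwritten by the user's token.
    headers["Authorization"] = f"Bearer {token.access_token}"
    return {**tool_call, "headers": headers}


if __name__ == "__main__":
    vault: TokenStore = {("alice", "jira"): OAuthToken("alice-jira-token", time.time() + 3600)}
    call = {"provider": "jira", "tool": "create_issue", "arguments": {"summary": "Fix login bug"}}
    print(inject_identity(call, "alice", vault)["headers"])
    try:
        inject_identity(call, "bob", vault)
    except ConsentRequired as err:
        print("blocked:", err)
```

Keying the vault by user and provider is what ties the agent's reach to the user's. There is no shared service-account entry to fall back on when a user has not authorized the tool.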

5. Achieving Data Sovereignty with Hybrid MCP Architecture

While public model providers are powerful, your most valuable data—proprietary code, customer PII, financial records—often lives on-premise or in private VPCs. You cannot simply pipe this data to a public cloud agent.

TrueFoundry offers a unique Hybrid MCP Architecture. You can deploy the MCP Gateway inside your private VPC or on-premise data center.

- Local Execution: Run sensitive MCP servers (e.g., "Production DB Query") right next to your data.

- Secure Tunneling: The Gateway exposes a secure, encrypted WebSocket or HTTP tunnel only to authorized agents.

- No Data Egress: Database credentials never leave your controlled environment. Raw data leaves only when an authenticated agent explicitly requests it, and even then it is filtered by the Gateway's PII redaction guardrails.

This architecture allows you to use powerful cloud reasoning models (like Claude Opus 4.5 or Google Gemini 2.5 Pro) to orchestrate tasks, while the actual execution happens securely on your own metal.

Fig 2: An example workflow
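A PII redaction guardrail on the egress path can be pictured as a filter applied to every tool result before it leaves the private network. This is a deliberately small sketch: regex matching for two illustrative patterns (email addresses and US SSN-style numbers). It is not TrueFoundry's guardrail, which this article does not describe. The pattern names and the `redact` helper are hypothetical, and real PII detection needs much broader coverage.

```python
import re
from typing import Any

# Illustrative patterns only; production PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(value: Any) -> Any:
    """Recursively replace PII matches in strings nested inside dicts and lists."""
    if isinstance(value, str):
        for label, pattern in PII_PATTERNS.items():
            value = pattern.sub(f"[REDACTED_{label}]", value)
        return value
    if isinstance(value, dict):
        return {key: redact(item) for key, item in value.items()}
    if isinstance(value, list):
        return [redact(item) for item in value]
    return value  # numbers, booleans, None pass through unchanged


if __name__ == "__main__":
    rows = [{"name": "Alice", "email": "alice@example.com", "ssn": "123-45-6789", "balance": 42}]
    print(redact(rows))
```

Running the filter on the structured result, rather than on a serialized string, keeps non-text fields such as balances intact. The agent still receives the same shape of data it asked for.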

The Verdict: Infrastructure is the Moat

In 2026, the "magic" of AI agents requires the "concrete" of robust infrastructure. You cannot run a mission-critical autonomous agent on a laptop with a local tunnel. You need governance, observability, and stability.

The TrueFoundry MCP Gateway provides the nervous system for the Agentic Enterprise. It allows you to move fast with the latest models, while keeping your data grounded and your security team happy.

