LLM Cost Tracking Solution: Observability, Governance & Optimization

Why Every Organization Needs a Robust LLM Cost Tracking Solution

As enterprises push generative AI and large language models (LLMs) into production, managing costs becomes mission-critical. Token-based pricing, common with LLM providers, brings unique complexity:

- Multiple LLMs with distinct pricing: OpenAI, Claude, and Mistral each charge different per-token rates, while self-hosted models replace per-token fees with infrastructure costs.

- Variable usage by workflow, user, or team: each product feature or user session may consume tokens at vastly different rates.

- Layered context and dynamic pipelines: features such as Retrieval-Augmented Generation (RAG), toolchains, and agents add context to each request, causing unpredictable token expansion.

Without a dedicated LLM cost tracking solution, teams lack visibility until costs balloon unexpectedly. This threatens budgets and impedes scaling efforts.

Here’s how to approach end-to-end tracking, governance, and optimization, with pointers to the relevant TrueFoundry documentation for each core element.

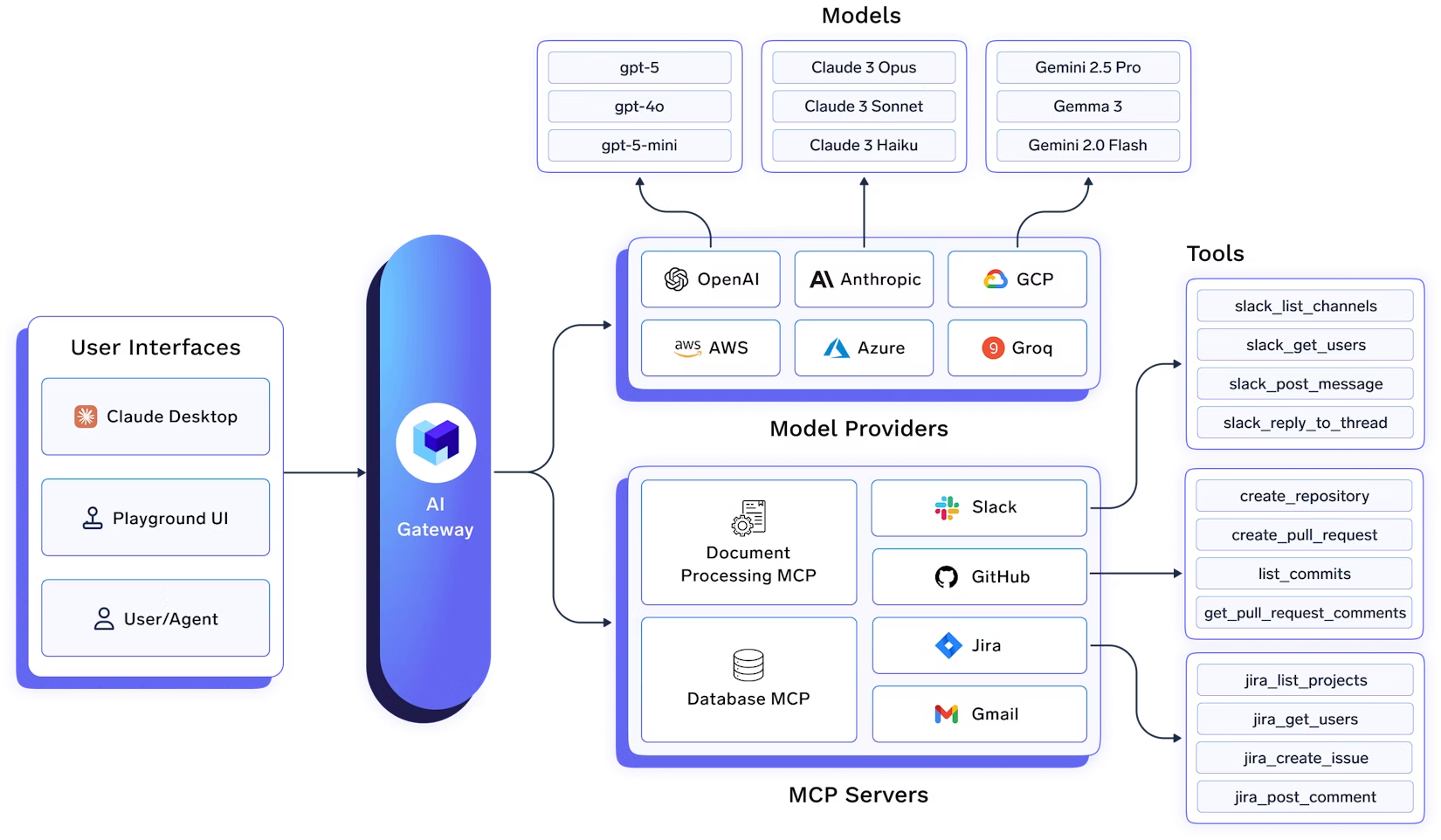

1. Unified Observability

Building robust cost tracking starts by capturing comprehensive, structured data for every LLM request. Using the TrueFoundry AI Gateway, you can route all inference traffic, whether it’s to an API model (like OpenAI, Claude, or Mistral) or to a self-hosted model you operate. This gateway acts as your “single pane of glass” for observability and cost attribution.

With every request, you should:

- Tag metadata such as user, team, environment, and feature for precise cost attribution (How to add metadata tags).

- Capture and analyze token counts, request latency, and which model was used—giving you the basis for real-time chargeback, showback, and spend management (Analytics and monitoring).

- Integrate OpenTelemetry to plug these metrics into your existing observability stack, correlating LLM spend with broader system behavior.
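As a rough illustration of what per-request attribution involves, the sketch below models one gateway log record and rolls spend up by any metadata tag. The record fields, the model names, and the prices in `PRICE_PER_MILLION` are hypothetical placeholders, not TrueFoundry's actual schema or any provider's real rates. The `gen_ai.*` attribute names follow the experimental OpenTelemetry GenAI semantic conventions; the `app.*` keys are an invented namespace.

```python
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1M tokens as (input, output). Placeholders only:
# substitute the rates your providers currently publish.
PRICE_PER_MILLION = {
    "large-api-model": (5.00, 15.00),
    "small-api-model": (0.20, 0.60),
}


@dataclass
class UsageRecord:
    """One LLM request as captured at the gateway (field names are illustrative)."""
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float
    tags: dict[str, str] = field(default_factory=dict)  # user, team, environment, feature

    def cost_usd(self) -> float:
        in_price, out_price = PRICE_PER_MILLION[self.model]
        return (self.input_tokens * in_price + self.output_tokens * out_price) / 1_000_000

    def to_otel_attributes(self) -> dict[str, object]:
        # gen_ai.* keys follow the experimental OpenTelemetry GenAI conventions;
        # app.* is a hypothetical namespace for our own cost-attribution tags.
        attrs: dict[str, object] = {
            "gen_ai.request.model": self.model,
            "gen_ai.usage.input_tokens": self.input_tokens,
            "gen_ai.usage.output_tokens": self.output_tokens,
        }
        attrs.update({f"app.{key}": value for key, value in self.tags.items()})
        attrs["app.cost_usd"] = self.cost_usd()
        return attrs


def cost_by(records: list[UsageRecord], tag: str) -> dict[str, float]:
    """Aggregate spend by one metadata tag (e.g. "team") for showback or chargeback."""
    totals: dict[str, float] = {}
    for record in records:
        key = record.tags.get(tag, "untagged")  # surface requests that missed tagging
        totals[key] = totals.get(key, 0.0) + record.cost_usd()
    return totals
```

Bucketing untagged requests explicitly, rather than dropping them, makes gaps in tagging visible in the same report that shows spend.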

2. Governance

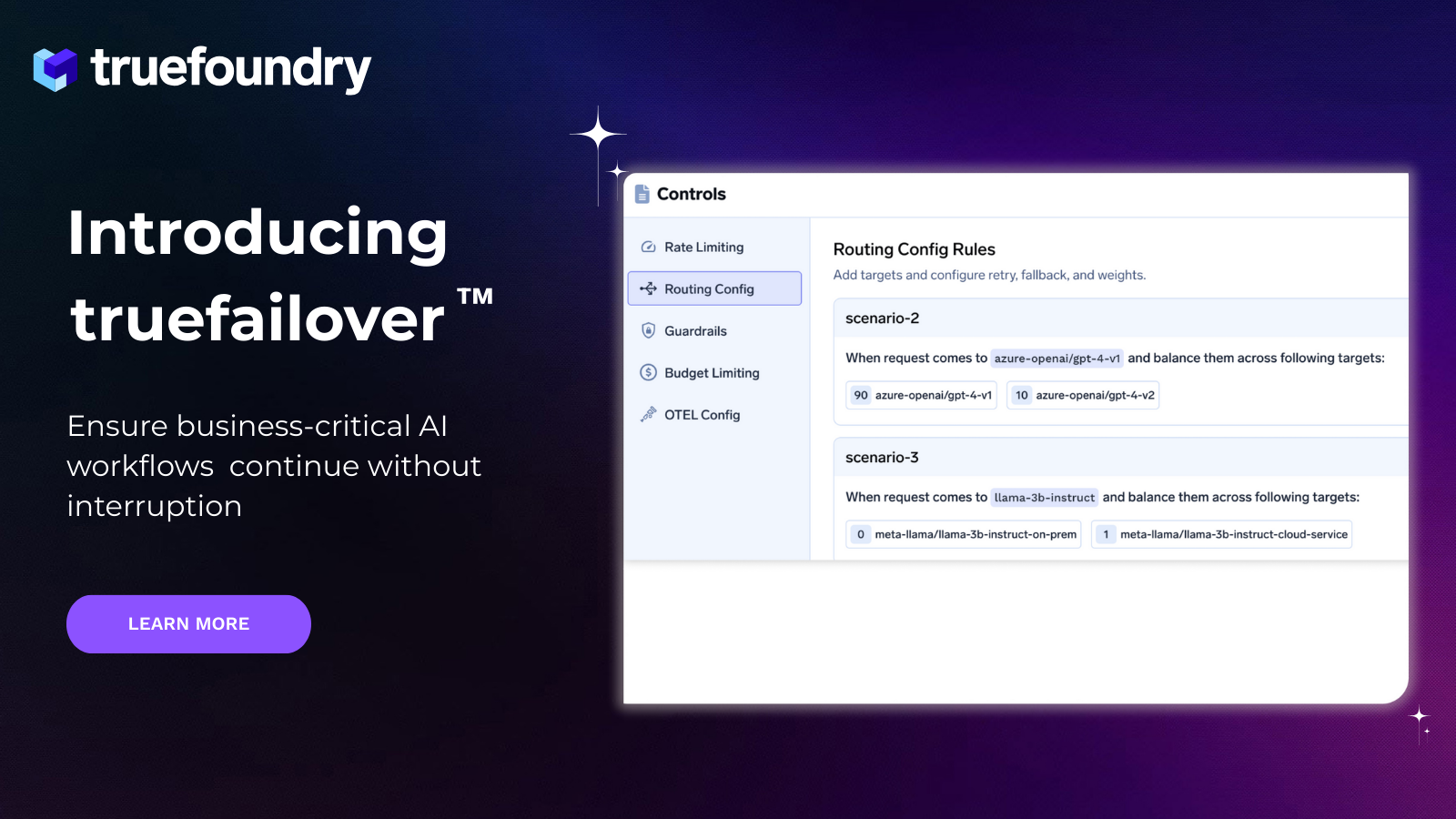

A comprehensive LLM cost tracking solution must let you enforce boundaries before budgets are exceeded.

- Rate limits: Set daily/monthly quotas by user, team, environment, model, or even custom metadata (Rate Limiting Guide). This helps prevent “runaway” workloads that spike spend.

- Budget caps & automated enforcement: Configure rules so that when a team or feature exceeds its budget, requests are automatically blocked or managers are alerted (Budget Enforcement).

- Access control: Restrict high-cost or experimental models to only those teams and workflows that truly require them (Access policies).

- Guardrails: Block unsafe or cost-inefficient prompts and prevent accidental prompt expansion (Guardrails Overview).

Together, these governance capabilities turn logging into a live, enforceable cost tracking solution that prevents overruns by design—not just by retroactive reporting.
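The sketch below shows how access control, a rate limit, and a budget cap might compose into a single pre-request check. It is a minimal in-memory illustration, not TrueFoundry's enforcement engine: `GovernancePolicy`, `PolicyError`, and all limits are hypothetical, and resetting the daily and monthly windows is left out for brevity.

```python
from collections import defaultdict
from dataclasses import dataclass, field


class PolicyError(Exception):
    """Raised when a request would violate a governance rule."""


@dataclass
class GovernancePolicy:
    # All limits are hypothetical example values, keyed by team name.
    monthly_budget_usd: dict[str, float]
    daily_request_quota: dict[str, int]
    allowed_models: dict[str, set[str]]
    alert_fraction: float = 0.8  # alert managers once 80% of the budget is spent
    # Running counters. Window resets (daily/monthly) are omitted in this sketch.
    spend: dict[str, float] = field(default_factory=lambda: defaultdict(float))
    requests_today: dict[str, int] = field(default_factory=lambda: defaultdict(int))
    alerts: list[str] = field(default_factory=list)

    def check(self, team: str, model: str, estimated_cost: float) -> None:
        """Pre-request gate: access control, then rate limit, then budget cap."""
        if model not in self.allowed_models.get(team, set()):
            raise PolicyError(f"{team} is not allowed to call {model}")
        if self.requests_today[team] >= self.daily_request_quota.get(team, 0):
            raise PolicyError(f"{team} has used its daily request quota")
        if self.spend[team] + estimated_cost > self.monthly_budget_usd.get(team, 0.0):
            raise PolicyError(f"{team} would exceed its monthly budget")

    def record(self, team: str, actual_cost: float) -> None:
        """Post-request bookkeeping; raises one alert when spend crosses the threshold."""
        before = self.spend[team]
        self.spend[team] += actual_cost
        self.requests_today[team] += 1
        threshold = self.alert_fraction * self.monthly_budget_usd.get(team, 0.0)
        if before < threshold <= self.spend[team]:
            self.alerts.append(f"{team} has spent {self.spend[team]:.2f} USD of its budget")
```

Checking with an estimated cost before the call and recording the actual cost afterwards is what lets the cap block a request up front instead of reporting the overrun later.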

3. Continuous Optimization: Making Your LLM Cost Tracking Solution Dynamic

After observability and governance, optimization is the ongoing process of reducing spend without sacrificing performance or quality.

- Load balancing and smart routing: Use TrueFoundry’s load balancing to send each request to the most cost-effective model that can handle it. For example, simple queries can go to Mistral or a fine-tuned small model, while complex ones route to GPT-4.

- Semantic caching: This technique stores LLM responses and reuses them for new queries that are semantically similar to earlier ones. However, it is not yet widely adopted, because prompts that look alike can differ in context that matters, so a reused answer may not fit the new request.

- Caching and batching: Cache responses to exact-repeat queries so they are not paid for twice, and use the batch prediction API to aggregate similar, non-urgent requests, cutting token costs.

- Prompt engineering and structured outputs: Use the structured schema tooling to limit verbose/unpredictable LLM outputs and stabilize costs.

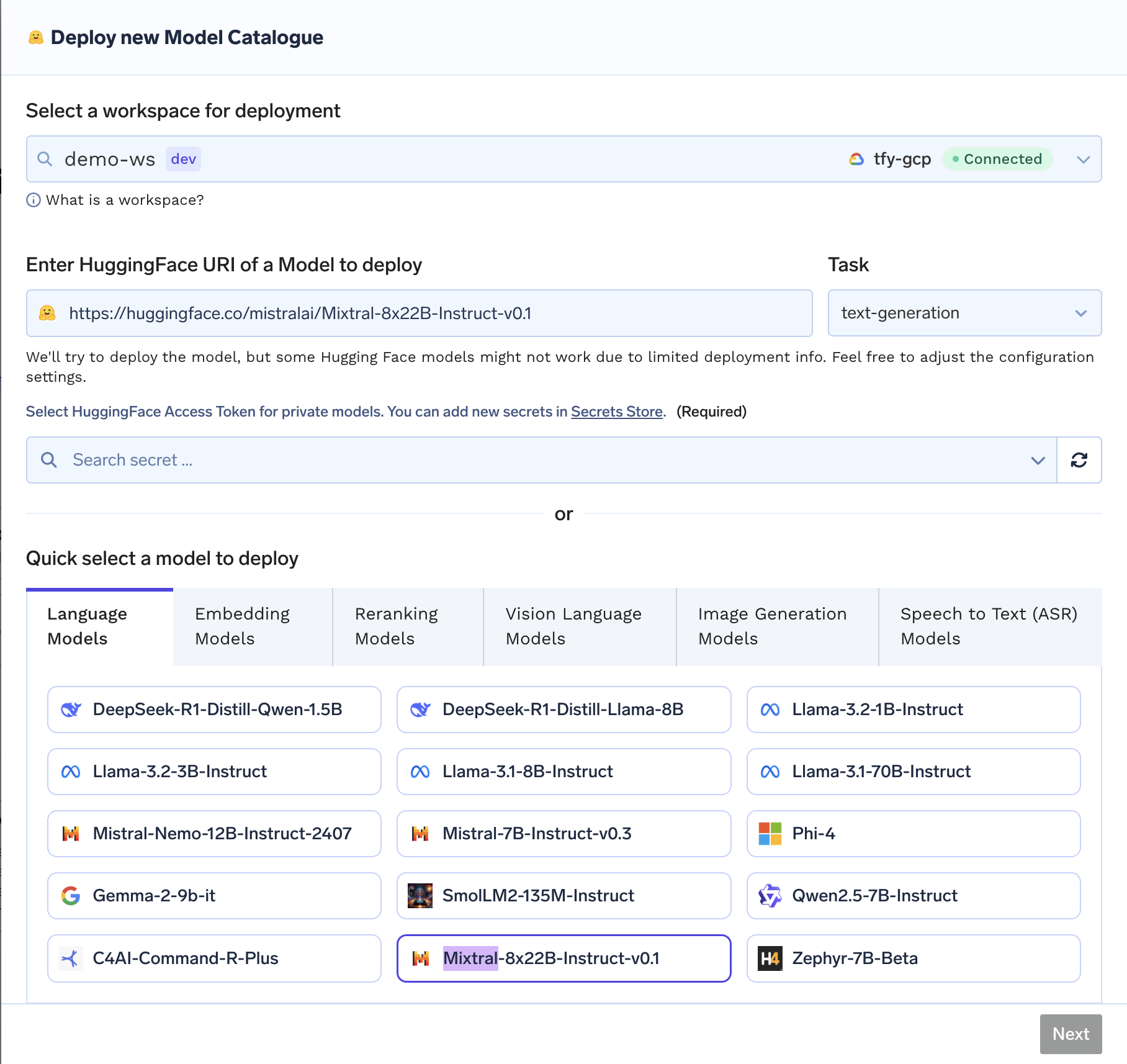

- Model fine-tuning: For repetitive, domain-specific workloads, utilize TrueFoundry's fine-tuning workflows to shorten prompts and compress requests for your business context.

- Self-hosting: When workloads stabilize and volume grows, running open-source LLMs (like Mistral or Llama) via self-hosted deployment can drastically undercut API per-token rates, all while using the same observability and policy tools.
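A minimal sketch of two of these levers, assuming a hypothetical pair of models: a rule-based router that sends short prompts to the cheaper model, and an exact-match response cache that reports its own hit ratio. The word-count threshold and the `needs_reasoning` flag are illustrative stand-ins for whatever routing rules you actually configure, and exact matching is used deliberately to sidestep the semantic-caching risk noted in the list.

```python
import hashlib
from typing import Callable

# Hypothetical model names; substitute the models behind your gateway.
CHEAP_MODEL = "small-api-model"
STRONG_MODEL = "large-api-model"


def choose_model(prompt: str, needs_reasoning: bool = False, max_simple_words: int = 200) -> str:
    """Route short, simple prompts to the cheap model and everything else to the strong one."""
    if needs_reasoning or len(prompt.split()) > max_simple_words:
        return STRONG_MODEL
    return CHEAP_MODEL


class ExactMatchCache:
    """Reuses a response only for an identical (model, prompt) pair.

    Exact matching gives a lower hit ratio than semantic caching but never
    returns an answer that was generated for a different prompt.
    """

    def __init__(self) -> None:
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        return hashlib.sha256(f"{model}\x00{prompt}".encode()).hexdigest()

    def get_or_call(self, model: str, prompt: str, call: Callable[[str, str], str]) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = call(model, prompt)  # only cache misses reach the (billed) model
        self._store[key] = response
        return response

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

For example, sending the same short FAQ question three times through `get_or_call(choose_model(q), q, call)` invokes the cheap model once and serves the other two calls from the cache, for a hit ratio of 2/3.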

4. Key Metrics: What to Track in Your LLM Cost Tracking Solution

Successful cost optimization relies on vigilant measurement. The following are vital to track across your stack:

- Tokens per request: Establishes a normalized baseline for benchmarking usage patterns.

- Cost per user/team/feature: Enables showback and chargeback reporting for internal accountability.

- Cache hit ratio: Reveals how much spend is saved through smart caching.

- Requests routed to expensive models: Helps you shift non-essential traffic to cheaper options.

- Cost spikes/anomalies: Allows you to detect regressions, misconfigurations, or possible abuse.

All of these can be collected and visualized automatically with TrueFoundry Analytics.
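To make a few of these definitions concrete, the sketch below computes tokens per request, the share of traffic sent to expensive models, and a simple spend-anomaly flag. The trailing-window rule and its defaults (7 days, 3 standard deviations, a 5% floor on the deviation) are assumptions chosen for illustration, not how TrueFoundry Analytics detects anomalies.

```python
from statistics import mean, pstdev


def tokens_per_request(token_counts: list[int]) -> float:
    """Average total tokens per request."""
    return mean(token_counts) if token_counts else 0.0


def expensive_share(models: list[str], expensive: set[str]) -> float:
    """Fraction of requests routed to models in the `expensive` set."""
    return sum(model in expensive for model in models) / len(models) if models else 0.0


def daily_spend_anomalies(daily_cost: list[float], window: int = 7,
                          z: float = 3.0, min_rel_sigma: float = 0.05) -> list[int]:
    """Return indices of days whose spend exceeds the trailing-window mean
    by more than `z` standard deviations."""
    flagged = []
    for i in range(window, len(daily_cost)):
        history = daily_cost[i - window:i]
        mu = mean(history)
        # Floor the deviation so a perfectly flat history does not flag tiny changes.
        sigma = max(pstdev(history), min_rel_sigma * mu)
        if daily_cost[i] > mu + z * sigma:
            flagged.append(i)
    return flagged
```

One known weakness of a plain trailing window is that a spike inflates the baseline for the following days, which can mask a second spike; excluding flagged days from the history is a common refinement.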

5. When to Self-Host LLMs as Part of Your Cost Tracking Solution

- If your organization has predictable, high-volume LLM usage, the savings from self-hosted open-source models can be significant.

- TrueFoundry’s multi-cloud LLM gateway and self-hosted deployment guides ensure monitoring, governance, and routing logic work identically for both external APIs and your internal clusters.
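Whether self-hosting pays off comes down to break-even arithmetic: a self-hosted deployment has a roughly fixed monthly cost, while API spend scales with tokens. The sketch assumes a single fixed infrastructure figure (GPUs plus operations effort) that covers the whole volume and a blended per-token API price; every number in it is hypothetical.

```python
def blended_price(in_price: float, out_price: float, input_share: float) -> float:
    """API price per 1M tokens, weighted by the fraction of tokens that are input."""
    return in_price * input_share + out_price * (1 - input_share)


def monthly_api_cost(tokens_per_month: float, price_per_million: float) -> float:
    """API bill for a given monthly token volume."""
    return tokens_per_month / 1_000_000 * price_per_million


def break_even_tokens(monthly_infra_cost: float, price_per_million: float) -> float:
    """Monthly token volume at which a fixed-cost self-hosted deployment costs
    the same as the API; above this volume, self-hosting is cheaper."""
    return monthly_infra_cost / price_per_million * 1_000_000


# Hypothetical inputs: 75% of tokens are input, $1.00 / $3.00 per 1M tokens,
# and a $3,000/month self-hosted deployment.
price = blended_price(1.00, 3.00, input_share=0.75)  # 1.50 USD per 1M tokens
volume = break_even_tokens(3_000, price)             # 2.0e9 tokens per month
```

Under these assumptions, the API is cheaper below about 2 billion tokens a month. The comparison also ignores capacity, so confirm the self-hosted cluster can actually serve the volume before acting on it.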

6. Best Practices for LLM Cost Tracking Solutions

- Centralize all inference traffic through an observability-enabled gateway.

- Automate tagging and budget alerts for line-item cost breakdown by feature, team, or workflow.

- Periodically review and adjust rate limits and access policies as your model, team, and feature mix evolves.

- Monitor and address security risks and unchecked consumption, especially with self-hosted or high-privilege models.

- Use batch prediction and prompt validation to ensure efficient resource use and avoid wasted tokens.
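Prompt validation and automated tagging can be combined into one gate that runs before a request leaves your service. This sketch assumes a hypothetical required-tag schema and hypothetical limits, and it estimates prompt size with the common rule of thumb of about four characters per token for English text; use the target model's tokenizer when accuracy matters.

```python
# Hypothetical tag schema; align it with the metadata your gateway expects.
REQUIRED_TAGS = ("user", "team", "environment", "feature")


def estimate_tokens(text: str) -> int:
    # Rough heuristic (~4 characters per token for English), not a real tokenizer.
    return max(1, len(text) // 4)


def validate_request(prompt: str, tags: dict[str, str], max_output_tokens: int,
                     max_prompt_tokens: int = 4_000, max_output_cap: int = 1_000) -> list[str]:
    """Return a list of problems; an empty list means the request may proceed."""
    problems = []
    missing = [tag for tag in REQUIRED_TAGS if not tags.get(tag)]
    if missing:
        problems.append(f"missing cost tags: {', '.join(missing)}")
    if estimate_tokens(prompt) > max_prompt_tokens:
        problems.append("prompt exceeds token budget")
    if max_output_tokens > max_output_cap:
        problems.append("requested output length exceeds cap")
    return problems
```

Returning every problem at once, rather than failing on the first, gives callers a single actionable error and keeps untagged traffic out of the cost reports.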

Conclusion

A modern LLM cost tracking solution is more than just after-the-fact reporting—it’s a strategic control plane for every phase of AI deployment, from daily governance to ongoing optimization. By leveraging the comprehensive features offered by TrueFoundry’s AI Gateway, teams unlock granular visibility, proactive spend controls, and cost-conscious routing for every LLM they use, whether via API or self-hosted clusters.

