Top 5 AWS MCP Gateway Alternatives

The Model Context Protocol (MCP) has emerged as a game-changing standard for connecting AI applications to external data sources and tools. As organizations seek to build more sophisticated agentic AI systems, the choice of MCP gateway becomes critical for ensuring security, scalability, and operational efficiency.

While AWS has introduced its own MCP gateway solution as part of its Bedrock ecosystem, many enterprises are discovering that alternatives like TrueFoundry offer superior features, flexibility, and enterprise-grade capabilities.

In this comprehensive guide, we'll explore the AWS MCP Gateway landscape and examine five leading alternatives that are transforming how organizations deploy and manage their AI infrastructure. Whether you're dealing with multi-cloud requirements, seeking better cost control, or needing enhanced observability features, understanding these alternatives will help you make an informed decision for your enterprise AI strategy.

What is AWS MCP Gateway?

The AWS Model Context Protocol Gateway represents Amazon's approach to standardizing how AI applications interact with external data sources and tools within the AWS ecosystem. Built on top of the open-source MCP specification developed by Anthropic, AWS MCP Gateway serves as a bridge between Amazon Bedrock language models and various AWS services, enabling seamless integration of enterprise data with AI applications.

Key features of AWS MCP Gateway include native integration with Amazon Bedrock's Converse API, support for tool use capabilities that allow models to request information from external systems, and seamless connectivity to AWS services such as Amazon S3, DynamoDB, RDS databases, CloudWatch logs, and Bedrock Knowledge Bases. The platform leverages AWS's existing security mechanisms, including IAM for consistent access control, making it an attractive option for organizations already heavily invested in the AWS ecosystem.

How does AWS MCP Gateway work?

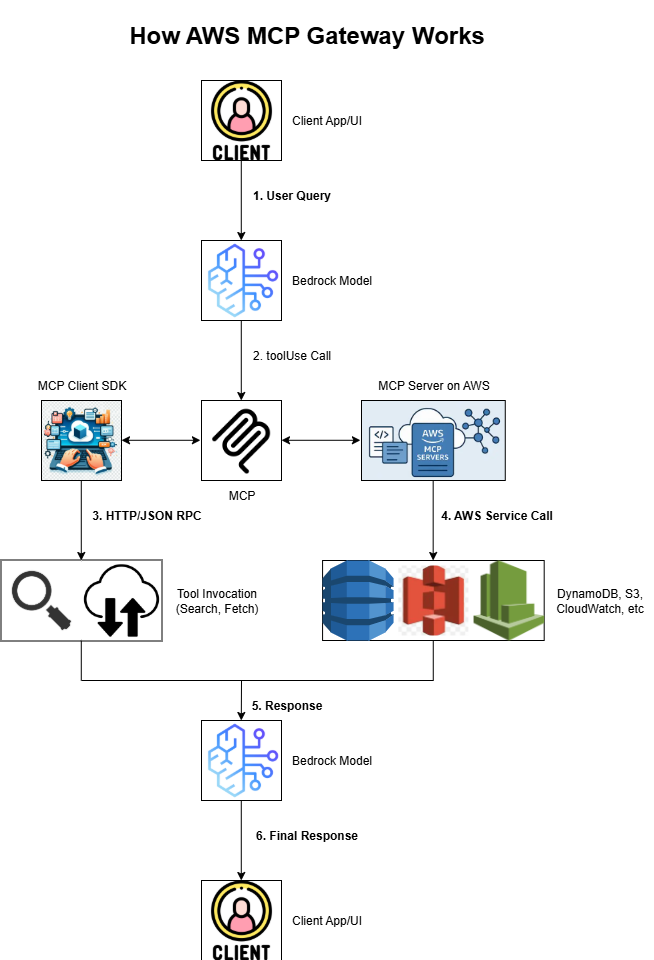

AWS MCP Gateway implements a client-server architecture that follows the standardized Model Context Protocol to enable secure, two-way communication between AI applications and AWS services. The system consists of three primary components: MCP clients embedded in AI applications like Amazon Bedrock, MCP servers that provide standardized access to specific AWS data sources, and the communication flow that follows well-defined protocol specifications.

The operational flow begins when an AI application hosted on Amazon Bedrock processes a user query and determines it needs additional information not available in its training data. The system then generates a toolUse message requesting access to specific tools, which the MCP client application receives and translates into an MCP protocol tool call. This request is routed to the appropriate MCP server connected to AWS services, where the server executes the tool and retrieves the requested data from systems like Amazon S3, DynamoDB, or CloudWatch.
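The translation step in this flow can be sketched in a few lines of Python. This is a minimal illustration, not AWS's implementation: it assumes the toolUse block carries `toolUseId`, `name`, and `input` fields as in the Bedrock Converse API, and that the MCP server speaks JSON-RPC 2.0 with the `tools/call` method. The helper names `tool_use_to_mcp_request` and `mcp_result_to_tool_result` are hypothetical.

```python
from itertools import count

# JSON-RPC request ids only need to be unique within a session.
_request_ids = count(1)


def tool_use_to_mcp_request(tool_use: dict) -> dict:
    """Translate a Converse-style toolUse block into an MCP tools/call request."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {
            "name": tool_use["name"],
            "arguments": tool_use.get("input", {}),
        },
    }


def mcp_result_to_tool_result(tool_use_id: str, mcp_response: dict) -> dict:
    """Wrap an MCP tools/call response as a Converse-style toolResult block."""
    if "error" in mcp_response:
        # Protocol-level failure (unknown tool, invalid params, ...).
        texts = [mcp_response["error"].get("message", "MCP error")]
        failed = True
    else:
        result = mcp_response.get("result", {})
        # Keep only text content items; other content types are ignored here.
        texts = [c["text"] for c in result.get("content", []) if c.get("type") == "text"]
        failed = bool(result.get("isError", False))
    return {
        "toolResult": {
            "toolUseId": tool_use_id,
            "content": [{"text": t} for t in texts],
            "status": "error" if failed else "success",
        }
    }
```

In a real client, the request would be sent over the MCP transport and the resulting toolResult block appended to the next Converse call so the model can continue its answer.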

The architecture supports three essential primitives that form the foundation of MCP interactions: Tools (functions that models can call to retrieve information or perform actions), Resources (data that can be included in the model's context such as database records or file contents), and Prompts (templates that guide how models interact with specific tools or resources). This design enables AWS customers to establish a standardized protocol for AI-data connections while reducing development overhead and maintenance costs through the elimination of custom integrations for each AWS service.
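To make the three primitives concrete, here is a toy in-memory server that answers one request per primitive. It is a sketch under stated assumptions: the class `MiniMcpServer` and its fields are hypothetical, and only the method names (`tools/call`, `resources/read`, `prompts/get`) and the rough result shapes follow the MCP specification. A production server would normally be built with an MCP SDK rather than written by hand.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class MiniMcpServer:
    """Toy server exposing the three MCP primitives from in-memory dictionaries."""

    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)  # name -> function
    resources: Dict[str, str] = field(default_factory=dict)             # uri -> text
    prompts: Dict[str, str] = field(default_factory=dict)               # name -> template

    def handle(self, method: str, params: dict) -> dict:
        if method == "tools/call":
            # Tools: functions the model can invoke with named arguments.
            text = self.tools[params["name"]](**params.get("arguments", {}))
            return {"content": [{"type": "text", "text": text}], "isError": False}
        if method == "resources/read":
            # Resources: data that can be placed into the model's context.
            uri = params["uri"]
            return {"contents": [{"uri": uri, "text": self.resources[uri]}]}
        if method == "prompts/get":
            # Prompts: templates filled in with caller-supplied arguments.
            text = self.prompts[params["name"]].format(**params.get("arguments", {}))
            return {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
        raise ValueError(f"unsupported method: {method}")


# Illustrative usage with made-up tool, resource, and prompt names.
server = MiniMcpServer(
    tools={"shout": lambda text: text.upper()},
    resources={"memo://greeting": "hello"},
    prompts={"summarize": "Summarize the table {table}."},
)
```

Calling `server.handle("tools/call", {"name": "shout", "arguments": {"text": "hi"}})` exercises the Tools path; the other two methods work the same way.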

Why Explore AWS MCP Gateway Alternatives?

While AWS MCP Gateway offers solid integration within the AWS ecosystem, there are several compelling reasons organizations evaluate alternatives.

1. Avoiding Vendor Lock-In

AWS MCP Gateway tightly couples your AI infrastructure to Amazon services, making multi-cloud strategies or migrations challenging. Organizations seeking flexibility across providers may find this limiting.

2. Cost Considerations

AWS pricing can become complex and unpredictable, especially for high-volume AI workloads. Multi-dimensional pricing across gateway services, API requests, and premium features often results in unexpected charges. Alternatives often provide more transparent and predictable pricing models.

3. Flexibility and Customization

AWS MCP Gateway focuses primarily on AWS service integration, lacking comprehensive LLMOps capabilities, advanced routing strategies, and extensive provider support. Purpose-built AI gateway solutions enable custom routing, multi-LLM support, and enhanced workflow management.

4. Performance and Observability

Specialized AI gateways often deliver lower latency, better cost tracking, and richer monitoring compared to AWS’s service-specific dashboards. Developers benefit from unified interfaces, advanced tracing, and more intuitive management tools.

5. Enterprise Governance

For enterprises, governance is critical. Dedicated AI gateways provide guardrails, content filtering, PII protection, and role-based access control across multiple LLM providers, capabilities that AWS MCP Gateway handles only partially out of the box.

Top 5 AWS MCP Gateway Alternatives

1. TrueFoundry MCP Gateway

TrueFoundry MCP Gateway stands as the premier enterprise-grade alternative to AWS MCP Gateway, offering a comprehensive solution that combines performance, security, and extensive functionality in a single platform. Built specifically for production AI workloads, TrueFoundry delivers sub-3ms internal latency while handling over 350 requests per second on just 1 vCPU, significantly outperforming both AWS and other alternatives in benchmark tests.

Key Features:

- Unified API Access: Connect to 1000+ LLMs from OpenAI, Anthropic, Google, AWS Bedrock, Azure, and custom models through a single OpenAI-compatible endpoint

- Native MCP Support: Comprehensive Model Context Protocol integration with secure server management, authentication, and observability

- Enterprise Security: SOC 2 Type 2, HIPAA, and GDPR compliance with advanced guardrails, PII redaction, and role-based access control

- Advanced Observability: Full request/response logging, OpenTelemetry-compliant tracing, and granular cost tracking with custom retention policies

- Flexible Deployment: Cloud-native, on-premises, air-gapped, or hybrid deployments with complete data sovereignty

- Granular Authentication & Access Control: Full support for OAuth2 and JWT; detailed configuration documented in the authentication and security section.

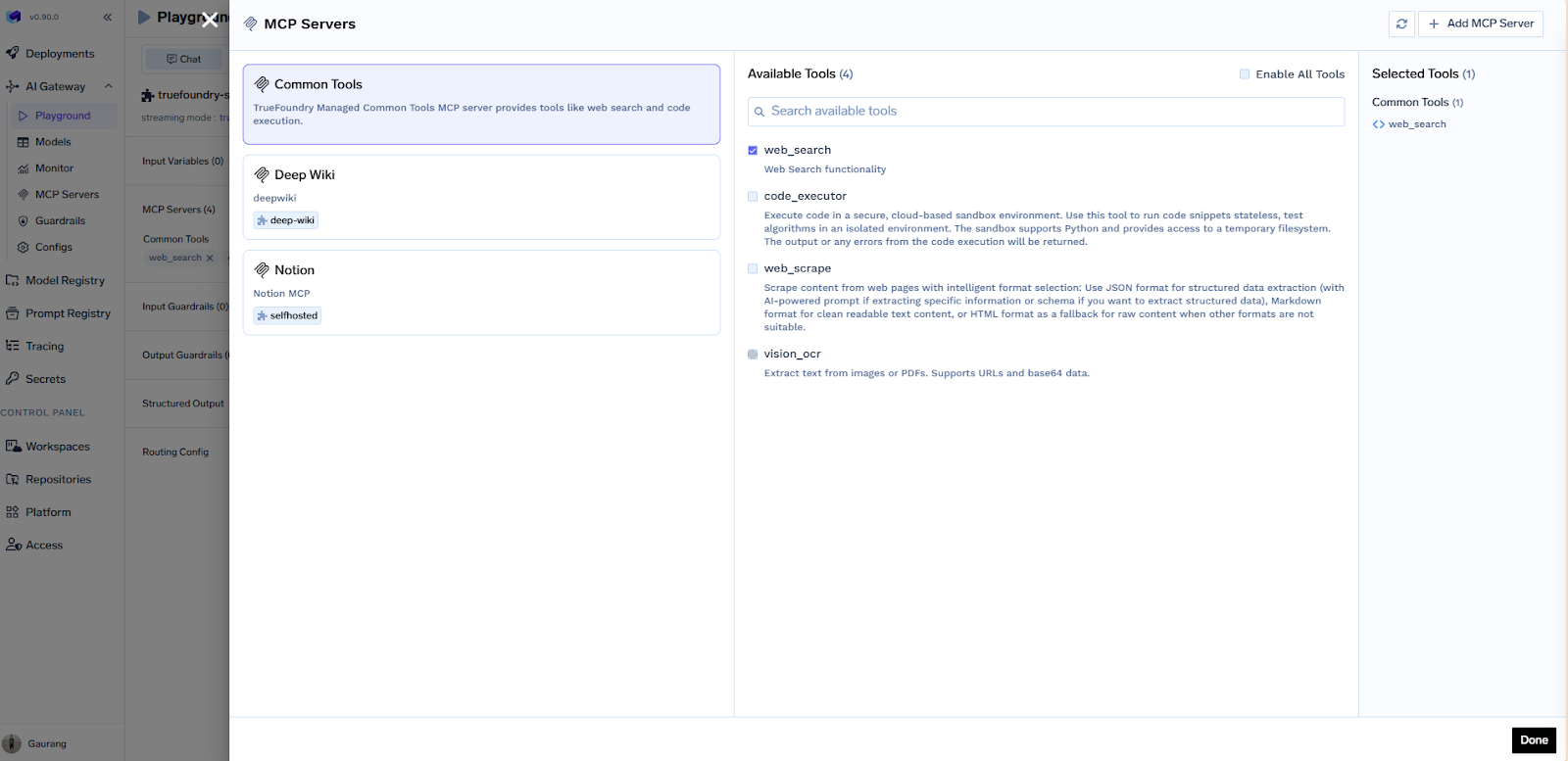

TrueFoundry's MCP Gateway capabilities enable organizations to securely manage integrated MCP servers while providing developers with seamless access to tools and data sources. The platform offers OAuth2 authentication for MCP servers, fine-grained authorization controls, and comprehensive monitoring of tool usage metrics. Unlike AWS MCP Gateway's ecosystem limitations, TrueFoundry supports any MCP server regardless of the underlying infrastructure.
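The practical effect of a single OpenAI-compatible endpoint is that switching providers means changing only the model string. The sketch below builds such a request with the Python standard library without sending it. The base URL, API key, and model identifiers are placeholders rather than real TrueFoundry values; only the `/chat/completions` path and the `model`/`messages` payload shape follow the OpenAI chat completions convention.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical gateway URL and model names; only the model string differs per provider.
GATEWAY_URL = "https://gateway.example.com/v1"
requests_by_model = {
    model: build_chat_request(GATEWAY_URL, "PLACEHOLDER_KEY", model, "Hello")
    for model in ("provider-a/model-x", "provider-b/model-y")
}
```

Passing one of these objects to `urllib.request.urlopen` would send it; that step is omitted because the endpoint here is fictional.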

Why Choose TrueFoundry:

Enterprises searching for an MCP gateway with enterprise-grade reliability and no vendor lock-in will find TrueFoundry well suited to managing multiple LLM providers with granular cost and access control. The platform particularly appeals to teams requiring comprehensive observability, predictable costs, and integration with existing enterprise infrastructure while maintaining the flexibility to deploy across any cloud or on-premises environment.

2. Composio

Composio represents an emerging approach in the MCP ecosystem that focuses on standardized tool abstraction and developer-centric MCP gateway workflows. Rather than acting as a traditional proxy or platform, it emphasizes discoverable, protocol-driven access to external services and tools via the Model Context Protocol.

Key Characteristics:

- MCP-First Abstraction: Designed around the MCP standard to centralize tool access and connectivity for AI agents.

- Tool Discoverability: Provides a structured way for clients to discover and invoke MCP-compatible tools.

- Developer-Centric: Helpful for teams looking to align their integrations with standardized MCP semantics.

- Flexible Integrations: Supports wrapping a variety of backends, from APIs to internal business services.

Composio fits into the broader MCP Gateway landscape by offering a gateway-aligned architectural pattern that prioritizes consistency and tool standardization. It complements more comprehensive enterprise-grade solutions by highlighting how MCP can be used as a core building block in modular AI stacks.

3. Kong

Kong AI Gateway extends the battle-tested Kong platform with AI-specific capabilities, making it an attractive option for organizations already using Kong for traditional API management. Built on Kong's mature infrastructure, it provides comprehensive API governance with specialized features for LLM traffic management.

Key Features:

- Mature Plugin Ecosystem: 100+ enterprise-grade plugins spanning security, observability, traffic control, and AI-specific functionality

- Universal LLM API: Route across multiple providers including OpenAI, Anthropic, GCP Gemini, AWS Bedrock, Azure AI, Databricks, and Mistral

- Advanced Traffic Management: Six routing strategies with semantic routing, intelligent load balancing, and automated fallbacks

- MCP Traffic Governance: Complete MCP server security, observability, and automated generation from RESTful APIs

- Enterprise Integration: OAuth 2.0, JWT, mTLS support with existing enterprise identity providers

Kong's AI Gateway offers sophisticated semantic processing capabilities, including semantic caching and routing powered by Redis for vector similarity search. The platform provides semantic prompt guard functionality and AI-specific rate limiting based on tokens rather than just requests.
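Rate limiting on tokens rather than requests can be illustrated with a token bucket whose unit is LLM tokens. The following is a generic sketch of the idea, not Kong's plugin or its configuration; the class name `TokenBudgetLimiter` and its parameters are assumptions made for illustration.

```python
import time


class TokenBudgetLimiter:
    """Token bucket whose unit is LLM tokens rather than requests."""

    def __init__(self, tokens_per_minute: int, clock=time.monotonic):
        self.capacity = float(tokens_per_minute)
        self.refill_per_sec = tokens_per_minute / 60.0
        self.available = self.capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.last = clock()

    def _refill(self) -> None:
        # Add back budget for the elapsed time, capped at the bucket capacity.
        now = self.clock()
        self.available = min(self.capacity, self.available + (now - self.last) * self.refill_per_sec)
        self.last = now

    def try_consume(self, llm_tokens: int) -> bool:
        """Return True and deduct the budget if the request fits, else False."""
        self._refill()
        if llm_tokens <= self.available:
            self.available -= llm_tokens
            return True
        return False
```

With this scheme, one 4,000-token prompt costs as much budget as forty 100-token prompts, which a request-count limiter would treat very differently.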

Considerations: Kong's pricing complexity is well-documented, with costs often exceeding $30 per million requests and multi-dimensional pricing models that create cost unpredictability. The enterprise pricing requires sales consultation, making cost planning difficult for high-volume AI workloads.

4. LiteLLM

LiteLLM serves as an open-source Python library focused on providing a unified interface across 100+ LLM providers with complete flexibility and community-driven development. It excels at advanced routing algorithms and comprehensive team management through highly customizable configurations.

Key Features:

- Complete Open Source: Free access to all core functionality without licensing fees

- Advanced Routing: Latency-based, usage-based, cost-based routing with customizable algorithms

- Comprehensive Load Balancing: Multiple algorithms including least-busy and usage-based with Kubernetes scaling

- Production Features: Pre-call checks, cooldowns for failed deployments, and 15+ observability integrations

LiteLLM provides robust team management capabilities with virtual keys, budget controls, tag-based routing, and team-level spend tracking. The platform supports comprehensive retry logic and fallback mechanisms for production reliability.
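The combination of latency-based routing, cooldowns for failed deployments, and fallbacks can be sketched as follows. This is a generic illustration of the technique, not LiteLLM's Router API; the class `LatencyRouter`, the smoothing factor, and the default cooldown are all assumptions.

```python
import time


class LatencyRouter:
    """Pick the fastest healthy deployment; cool down deployments that fail."""

    def __init__(self, deployments, cooldown_seconds=30.0, clock=time.monotonic):
        self.latency = {name: None for name in deployments}       # smoothed latency in seconds
        self.cooldown_until = {name: 0.0 for name in deployments}
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock

    def pick(self) -> str:
        now = self.clock()
        healthy = [d for d in self.latency if self.cooldown_until[d] <= now]
        if not healthy:
            raise RuntimeError("no healthy deployments")
        # Deployments with no measurements yet sort first so they get tried.
        return min(healthy, key=lambda d: self.latency[d] if self.latency[d] is not None else 0.0)

    def record_success(self, name: str, seconds: float) -> None:
        prev = self.latency[name]
        # Exponentially weighted moving average smooths out one-off spikes.
        self.latency[name] = seconds if prev is None else 0.8 * prev + 0.2 * seconds

    def record_failure(self, name: str) -> None:
        self.cooldown_until[name] = self.clock() + self.cooldown_seconds

    def call(self, send):
        """Try deployments in latency order, falling back until one succeeds."""
        last_error = None
        for _ in range(len(self.latency)):
            try:
                name = self.pick()
            except RuntimeError:
                break
            start = self.clock()
            try:
                result = send(name)
            except Exception as exc:
                self.record_failure(name)
                last_error = exc
                continue
            self.record_success(name, self.clock() - start)
            return name, result
        raise RuntimeError("all deployments failed or are cooling down") from last_error
```

Here `send` is any callable that takes a deployment name and performs the actual LLM request, so the routing logic stays independent of the provider client.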

Considerations: Requires 15-30 minutes of technical setup with Python expertise and YAML configuration. All features require manual configuration, creating a steep learning curve and additional maintenance overhead compared to managed solutions.

5. Anthropic MCP Connector

Anthropic's MCP Connector is a protocol-driven interface that lets Claude models connect to external tools, databases, and services via the Model Context Protocol (MCP). It focuses on interoperability and tool integration for AI workflows.

Key Features:

- Standardized Connectivity: MCP provides a uniform interface to connect models to remote tools and data sources.

- Multi-Tool Integration: Easily integrates with services like Jira, GitHub, Slack, Postgres, and other MCP-compliant servers.

- Open Protocol: Enables an ecosystem of connectors and servers, promoting reusability and interoperability.

- Remote Server Support: Allows models to query MCP servers over HTTP/SSE without local infrastructure.

- Security & Analytics: Includes authorization tokens, server whitelisting, and logging for observability.

Considerations: Currently limited to MCP-compliant tools; full enterprise gateway features (like multi-LLM fallback, routing, caching) are minimal. Requires technical setup and trust in remote servers; potential security concerns if using unverified MCP servers.
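One simple mitigation for the unverified-server risk is to check every remote MCP server URL against an allowlist before connecting. The sketch below shows the idea with the Python standard library; the host names and the function `is_trusted_mcp_server` are hypothetical, and this is not part of Anthropic's API.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; in practice this would come from reviewed configuration.
ALLOWED_MCP_HOSTS = frozenset({"mcp.internal.example.com", "tools.example.com"})


def is_trusted_mcp_server(url: str, allowed_hosts=ALLOWED_MCP_HOSTS) -> bool:
    """Accept only HTTPS URLs whose exact host name is on the allowlist."""
    parts = urlsplit(url)
    host = parts.hostname  # already lowercased, with any port and userinfo stripped
    return parts.scheme == "https" and host is not None and host in allowed_hosts
```

Matching on the exact parsed host name, rather than a substring of the URL, avoids being fooled by look-alike addresses such as `https://tools.example.com.evil.test/`.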

Conclusion

The landscape of Model Context Protocol gateways extends far beyond AWS's offering, with specialized solutions providing superior capabilities for enterprise AI deployments. While AWS MCP Gateway serves organizations deeply embedded in the AWS ecosystem, alternatives like TrueFoundry MCP Gateway deliver enhanced performance, flexibility, and comprehensive enterprise features without vendor lock-in constraints.


TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready. LiteLLM, by comparison, shows higher latency, struggles beyond moderate RPS, lacks built-in scaling, and is best suited to light or prototype workloads.