Top 5 Kong AI Alternatives

Artificial intelligence is transforming how organizations develop, deploy, and scale applications. With the rapid rise of large language models (LLMs) and generative AI, developers increasingly need platforms that provide reliable model access, observability, and cost-efficient operations.

Kong AI has emerged as a prominent solution, offering AI-powered API management, monitoring, and workflow optimization to streamline AI application performance. However, no single platform fits every organization’s needs. Deployment flexibility, enterprise-grade security, observability, and integration with existing infrastructure are critical factors that influence platform choice.

Finding a reliable alternative to Kong helps developers discover solutions that better align with their operational requirements, tech stack, and growth ambitions. From open-source gateways to full-stack enterprise AI platforms like TrueFoundry, a variety of Kong gateway alternatives exist to optimize model routing, governance, and scalability.

In this article, we will dive into how Kong AI works, its core features, and the top alternatives available in 2026.

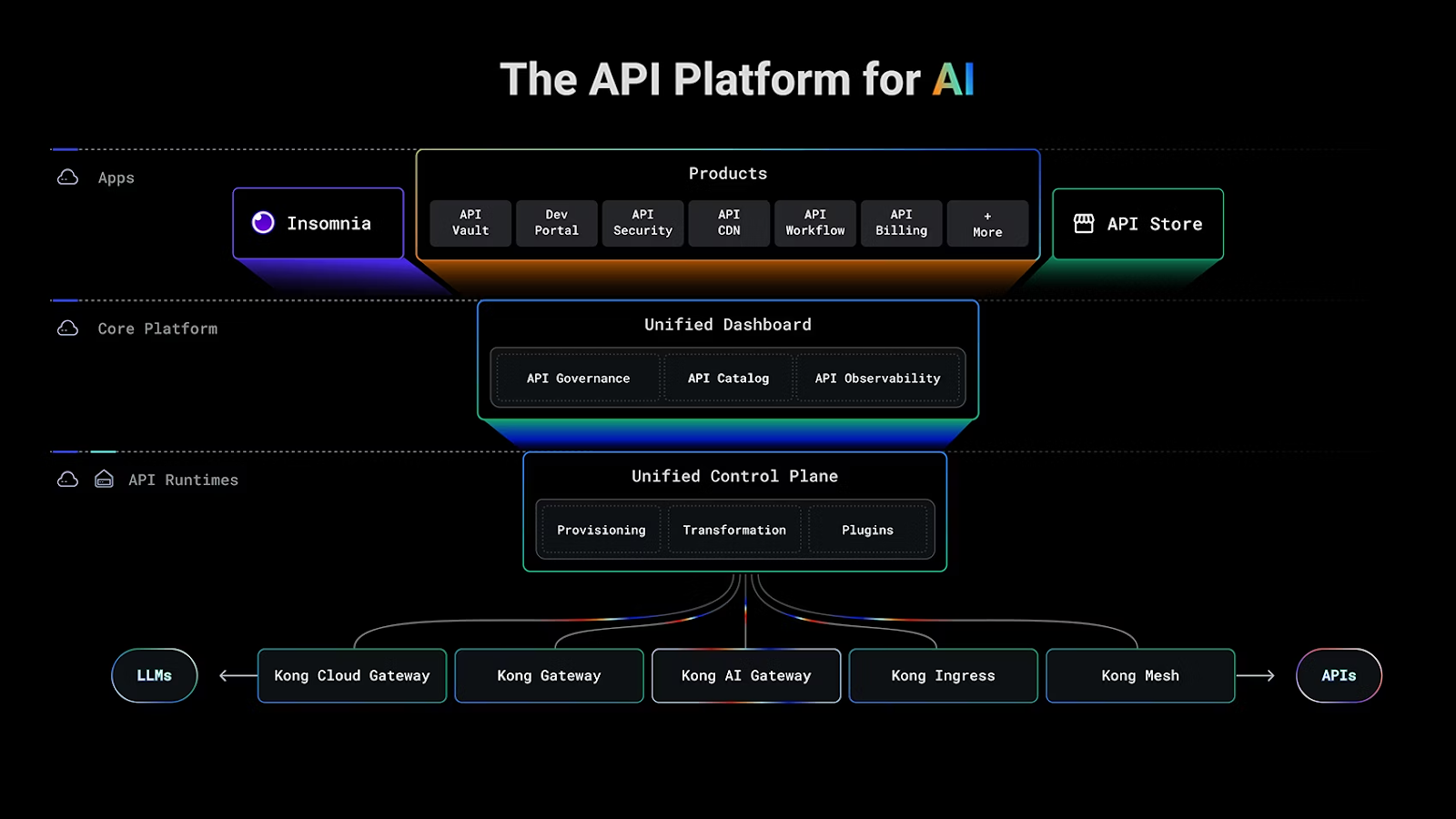

What is Kong AI?

Kong AI is an enterprise-grade AI gateway built on top of the Kong Gateway, designed to simplify the integration, management, and governance of AI models within organizations. It provides a unified API platform that allows businesses to securely expose, route, and monitor AI services from multiple providers, including OpenAI, Azure AI, AWS Bedrock, and GCP Vertex.

By centralizing AI traffic management, Kong AI helps organizations accelerate AI initiatives while maintaining control over security, compliance, and operational performance.

The platform is particularly suited for enterprises that need to implement advanced AI workflows, manage multiple language models, and enforce governance policies at scale. Its features are tailored to streamline AI development, reduce operational overhead, and improve cost efficiency, all while ensuring reliable and secure access to AI resources.

Key Features:

Multi-LLM Support: Kong AI enables seamless integration with multiple AI providers, allowing organizations to switch between models efficiently to meet different use cases or maintain high availability.

Automated RAG Pipelines: The platform can automatically build Retrieval-Augmented Generation pipelines, improving the accuracy of AI responses and reducing hallucinations in generated outputs.

PII Sanitization and Prompt Security: Kong AI enforces content safety and compliance by sanitizing sensitive data and implementing prompt-level security rules across all AI interactions.

No-Code AI Integrations: Developers can enrich, transform, or augment API traffic using supported LLMs without writing any code, allowing rapid deployment of AI capabilities across applications.

Advanced Traffic Management: Semantic caching, routing, and load balancing optimize AI traffic, reduce redundant API calls, and control costs, while observability features provide actionable insights on performance and usage.
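
To make the semantic caching idea concrete, here is a minimal sketch of how a gateway might reuse a response for a sufficiently similar prompt. It is not Kong's implementation: the `SemanticCache` class, the `embed` helper, and the 0.8 threshold are hypothetical, and a bag-of-words vector stands in for the embedding model a real gateway would use.

```python
import math
import re
from collections import Counter
from typing import List, Optional, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase bag-of-words counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    """Hypothetical cache that returns a stored response for similar prompts."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))

    def get(self, prompt: str) -> Optional[str]:
        """Return the best-matching cached response, or None below the threshold."""
        query = embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None
```

The threshold is the main design choice: set too low, the cache returns answers to different questions; set too high, it behaves like an exact-match cache and saves little.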

Kong AI also supports the Model Context Protocol (MCP), enabling secure, reliable, and scalable deployment of MCP servers. Its analytics and observability tools allow teams to track AI consumption, optimize costs, and monitor performance across all AI workloads.

How Does Kong AI Work?

Kong AI builds on the core Kong Gateway architecture, adding AI-specific layers and plugins to manage, secure, and optimize traffic to large language models (LLMs). It acts as a smart gateway between your applications and model providers: instead of sending requests directly to a provider, applications send them to Kong AI, which intercepts them, applies rules, and routes traffic efficiently while capturing metrics and tracking usage.

It leverages a plugin-based framework that can:

- Validate and sanitize prompts to ensure compliance and data safety.

- Inject relevant context from vector databases dynamically, improving model reliability.

- Route requests across multiple models based on latency, cost, or semantic similarity.

- Cache repeated prompts to reduce redundant calls and save costs.

Additionally, Kong AI provides observability and analytics, including token usage, latency, error tracking, and performance dashboards. This combination of routing, governance, caching, and monitoring allows developers to deploy AI applications reliably while keeping costs under control.
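
The request flow described above can be sketched in a few lines. This is an illustrative model, not Kong's plugin API: the `Gateway` and `Model` classes, the regex patterns, and the cheapest-model routing rule are all assumptions. The sketch uses an exact-match cache, counts tokens by whitespace, and leaves out context injection from a vector database.

```python
import re
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical PII patterns; a real deployment would use a vetted detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def sanitize(prompt: str) -> str:
    """Mask emails and US-style SSNs before the prompt leaves the gateway."""
    return SSN.sub("[SSN]", EMAIL.sub("[EMAIL]", prompt))


@dataclass
class Model:
    name: str
    cost_per_1k_tokens: float
    handler: Callable[[str], str]  # stand-in for a provider call


@dataclass
class Gateway:
    models: List[Model]
    cache: Dict[str, str] = field(default_factory=dict)
    metrics: List[dict] = field(default_factory=list)

    def handle(self, prompt: str) -> str:
        start = time.perf_counter()
        clean = sanitize(prompt)
        cache_hit = clean in self.cache
        if cache_hit:
            response, served_by = self.cache[clean], "cache"
        else:
            # Route to the cheapest model (one possible routing policy).
            model = min(self.models, key=lambda m: m.cost_per_1k_tokens)
            response = model.handler(clean)
            self.cache[clean] = response
            served_by = model.name
        self.metrics.append({
            "served_by": served_by,
            "cache_hit": cache_hit,
            "prompt_tokens": len(clean.split()),  # crude whitespace count
            "latency_ms": (time.perf_counter() - start) * 1000,
        })
        return response
```

Sanitizing before the cache lookup means the cache never stores raw PII, and two prompts that differ only in masked values share one cache entry.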

Why explore Kong AI Alternatives?

While Kong AI Gateway delivers robust features for managing and securing AI traffic, it may not suit every organization’s specific needs. Some teams require deeper integration with agentic frameworks, more flexible observability, or finer control over model orchestration beyond API-level management. Others may prefer open-source platforms that allow greater customization or self-hosting freedom without enterprise licensing constraints.

Additionally, Kong AI’s strength lies in its API governance and enterprise compliance, which can feel complex or excessive for smaller teams focused solely on LLM experimentation or lightweight workloads.

Cost considerations and deployment overhead may also drive teams to evaluate purpose-built LLMOps or AI infrastructure tools that provide faster setup and broader ecosystem compatibility.

Exploring alternatives ensures that organizations choose the platform best aligned with their AI stack, whether the priority is scalability, developer agility, cost efficiency, or end-to-end model observability.

Top 5 Kong AI Alternatives

While Kong AI offers robust governance and observability, several platforms now provide more advanced AI orchestration and gateway features. These alternatives offer greater flexibility, multi-model routing, and more comprehensive control over AI workloads. Below are the top five platforms leading this evolution.

1. TrueFoundry

TrueFoundry empowers enterprises to govern, deploy, scale, and observe agentic AI using a unified, end-to-end platform. Unlike point solutions that handle only orchestration or model hosting, TrueFoundry builds a full stack that supports secure, compliant, and high-performance AI at scale. It is Kubernetes-native and designed to serve enterprise needs in hybrid, VPC, on-premises, or air-gapped environments.

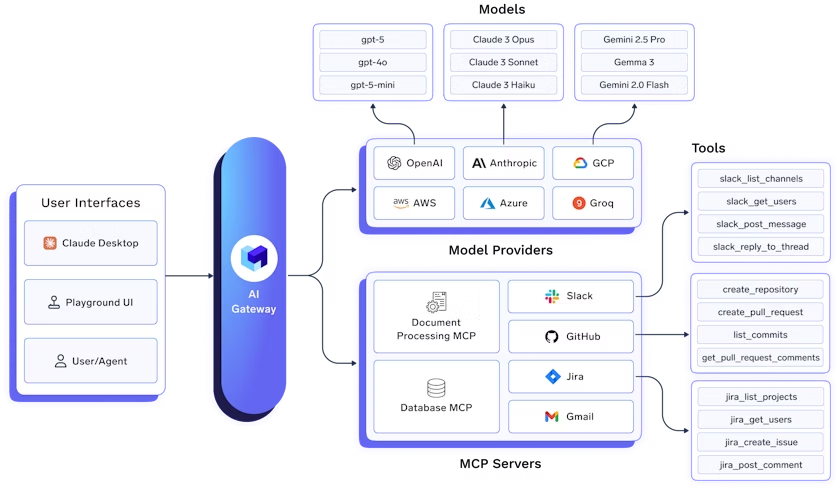

Orchestrate with AI Gateway

TrueFoundry’s AI Gateway provides a centralized protocol for agent workflows. It manages memory, tool orchestration, and multi-step reasoning, allowing agents to plan actions, call external tools, and maintain contextual state with full visibility and control.

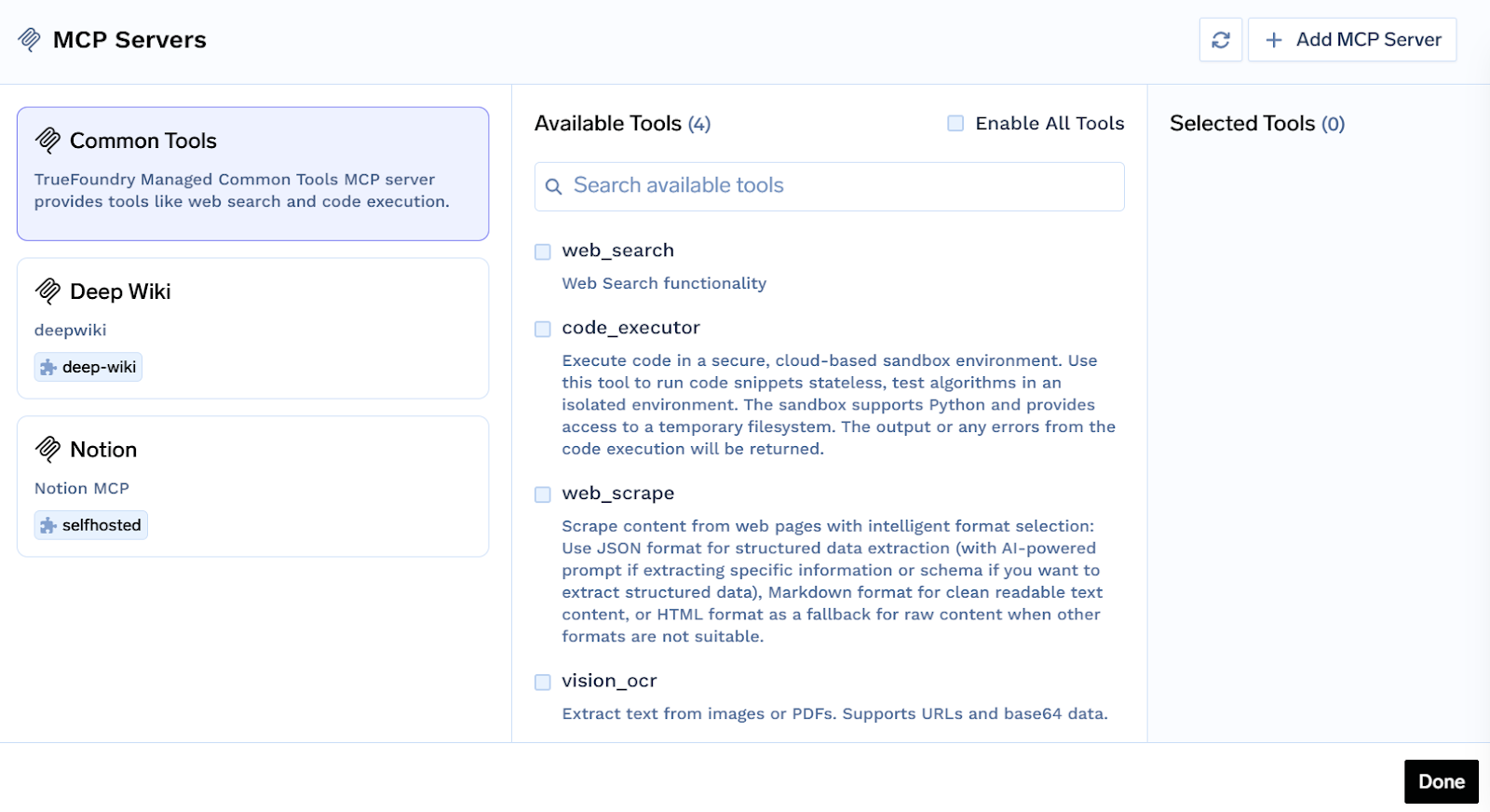

MCP and Prompt Lifecycle Management

The MCP and Agents Registry maintains a structured library of tools and APIs with schema validation and fine-grained access controls. Combined with Prompt Lifecycle Management, teams can version, test, and monitor prompts to ensure consistent and auditable agent behavior.
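
As a rough illustration of what schema validation and fine-grained access control mean for a tool registry, consider the sketch below. It does not reflect TrueFoundry's actual API: `ToolRegistry`, `Tool`, and the role names are hypothetical, and the "schema" is reduced to a map of required argument names to Python types.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Set


@dataclass
class Tool:
    name: str
    required_args: Dict[str, type]  # argument name -> expected Python type
    allowed_roles: Set[str]
    func: Callable[..., Any]


class ToolRegistry:
    """Hypothetical registry that checks role and arguments before each call."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def call(self, name: str, role: str, args: Dict[str, Any]) -> Any:
        tool = self._tools.get(name)
        if tool is None:
            raise KeyError(f"unknown tool: {name}")
        # Access control: the caller's role must be on the tool's allow list.
        if role not in tool.allowed_roles:
            raise PermissionError(f"role {role!r} may not call {name!r}")
        # Schema validation: every required argument present with the right type.
        for arg, expected in tool.required_args.items():
            if arg not in args:
                raise ValueError(f"missing argument: {arg}")
            if not isinstance(args[arg], expected):
                raise TypeError(f"{arg} must be {expected.__name__}")
        return tool.func(**args)
```

Rejecting a call before the tool runs gives each denied request a single, auditable failure point.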

To learn how an AI agent registry works, read our detailed guide on what an AI agent registry is.

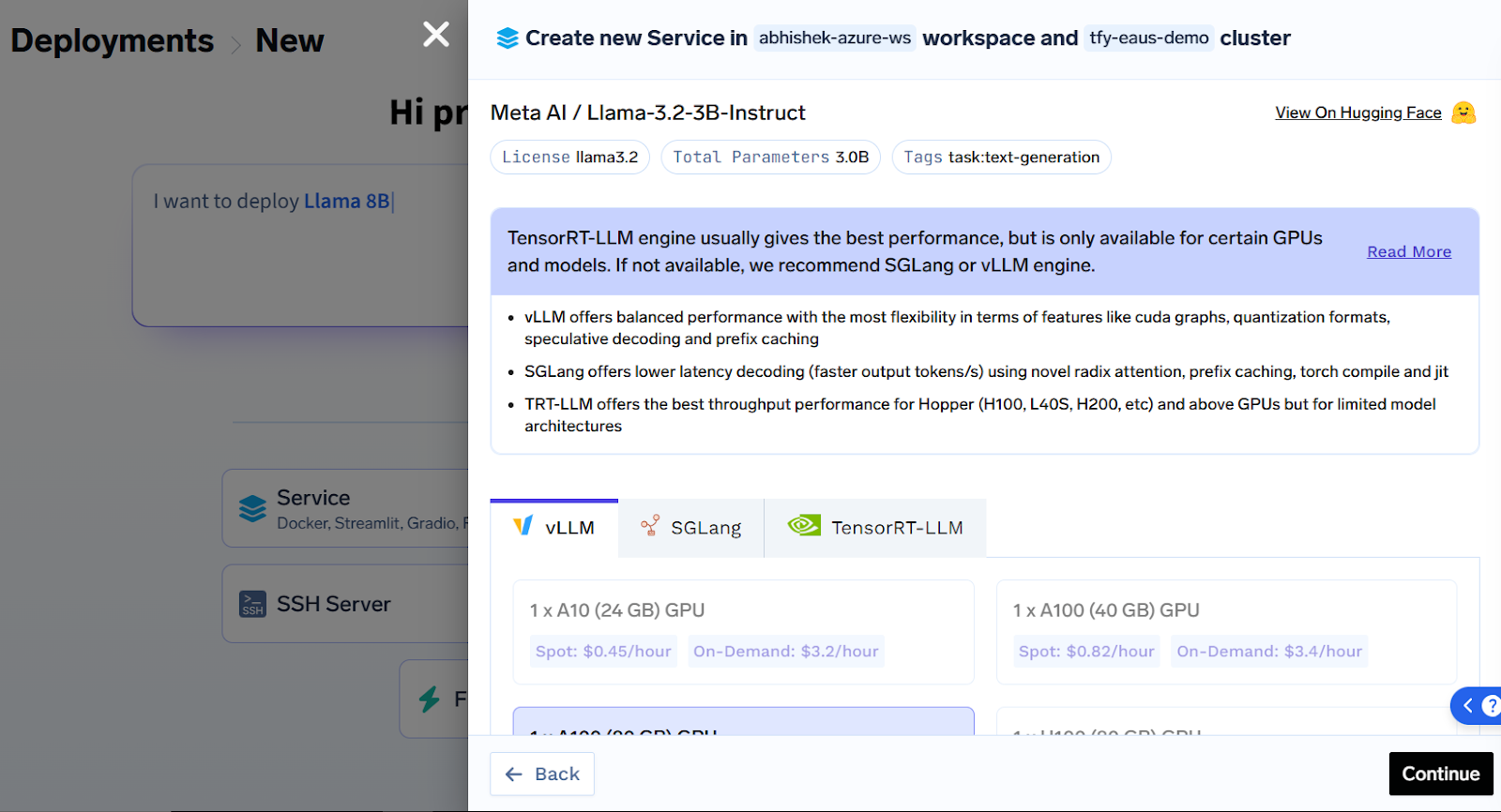

Deploy Any Model, Any Framework

Enterprises can host any LLM or embedding model using optimized backends such as vLLM, TGI, or Triton. Fine-tuning is integrated in the workflow to train on proprietary data and deploy updated checkpoints. Agents built on LangGraph, CrewAI, AutoGen, or custom frameworks are fully supported and containerized for production.

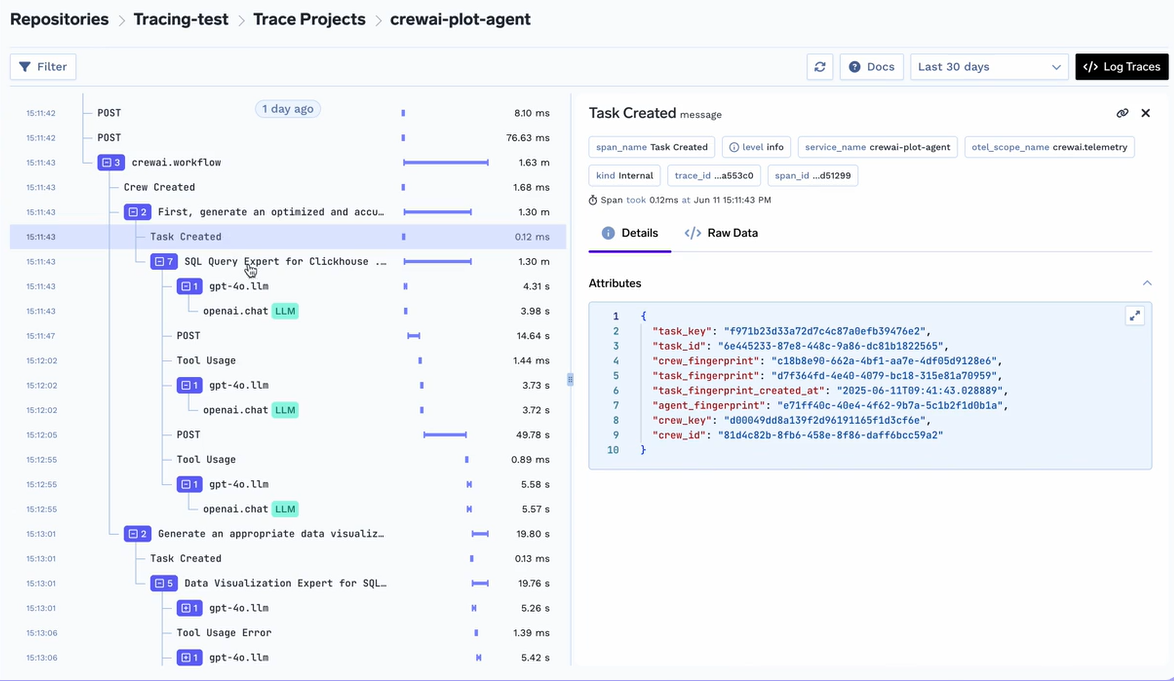

Enterprise-Grade Compliance and Observability

TrueFoundry can run in VPC, on-premises, hybrid, or air-gapped environments, ensuring full control over data. It is SOC 2, HIPAA, and GDPR compliant and supports SSO, RBAC, and immutable audit logging. Observability includes full tracing for agents, monitoring GPU and CPU usage, node health, and scaling behavior. Metrics can be integrated with Grafana, Prometheus, or Datadog.

Optimized for Scale and Cost

The platform provides GPU orchestration, fractional GPU support, and real-time autoscaling to maximize utilization and minimize costs. Enterprises like NVIDIA report up to 80 percent improvement in GPU cluster efficiency using TrueFoundry’s automation and agentic workflows.

By unifying orchestration, deployment, compliance, and observability, TrueFoundry delivers a complete enterprise platform for building and scaling agentic AI with confidence.

While Kong AI governs API traffic, TrueFoundry governs the entire AI lifecycle, from model serving to agent monitoring, making it ideal for enterprise-grade deployments.

2. LiteLLM

LiteLLM is a lightweight platform that enables developers to manage, monitor, and optimize LLM requests in production environments. It focuses on semantic caching and efficient token usage to reduce operational costs. LiteLLM provides detailed observability, including request logging, latency tracking, and performance metrics.

It supports multiple LLM providers such as OpenAI, Anthropic, and Google Gemini, offering flexibility for multi-model applications. Its container-friendly architecture allows rapid deployment and scaling without heavy infrastructure management. The platform is ideal for teams seeking a simple yet effective way to standardize AI operations.

Key Features:

- Unified API for multiple LLM providers

- Semantic caching to reuse responses and reduce costs

- Request logging and performance metrics tracking

- Easy deployment in containerized environments

- Supports OpenAI, Anthropic, Google Gemini, and others
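
The unified-API idea can be sketched as a thin adapter layer: one call signature, with the provider chosen from a prefix on the model string. This is a conceptual illustration and not LiteLLM's code. The `completion` function, the `PROVIDERS` table, and the stub adapters are hypothetical, and the stubs return canned text instead of calling a real provider.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def _openai_stub(model: str, messages: List[Message]) -> str:
    # Stand-in for a real OpenAI request.
    return f"[openai:{model}] {messages[-1]['content']}"


def _anthropic_stub(model: str, messages: List[Message]) -> str:
    # Stand-in for a real Anthropic request.
    return f"[anthropic:{model}] {messages[-1]['content']}"


# Hypothetical provider table: prefix -> adapter function.
PROVIDERS: Dict[str, Callable[[str, List[Message]], str]] = {
    "openai": _openai_stub,
    "anthropic": _anthropic_stub,
}


def completion(model: str, messages: List[Message]) -> dict:
    """Dispatch a 'provider/model' string to the matching adapter."""
    provider, _, model_name = model.partition("/")
    if provider not in PROVIDERS or not model_name:
        raise ValueError(f"expected 'provider/model', got {model!r}")
    text = PROVIDERS[provider](model_name, messages)
    # Every provider's output is normalized into the same response shape.
    return {"provider": provider, "model": model_name, "content": text}
```

Because callers depend only on `completion`, switching providers becomes a change to one string instead of a change to application code.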

If you need different features or scale, you can explore some alternatives to LiteLLM.

3. AWS Bedrock

AWS Bedrock (officially Amazon Bedrock) is a fully managed AI service designed to help enterprises build applications using foundation models without managing underlying infrastructure. It provides access to models from multiple providers, including Anthropic, AI21 Labs, and Amazon's own Titan family. The platform ensures enterprise-grade security and compliance, integrating seamlessly with AWS identity and monitoring tools.

Bedrock simplifies multi-model experimentation by providing centralized routing, logging, and cost monitoring. It is optimized for large-scale workloads and production deployments, allowing developers to focus on AI application development rather than infrastructure management.

Key Features:

- Access to foundation models from Anthropic, AI21, Amazon Titan, and more

- Fully managed infrastructure for AI workloads

- Integrated security, compliance, and identity management

- Multi-model experimentation with cost and performance insights

- Easy integration with the AWS ecosystem and analytics tools

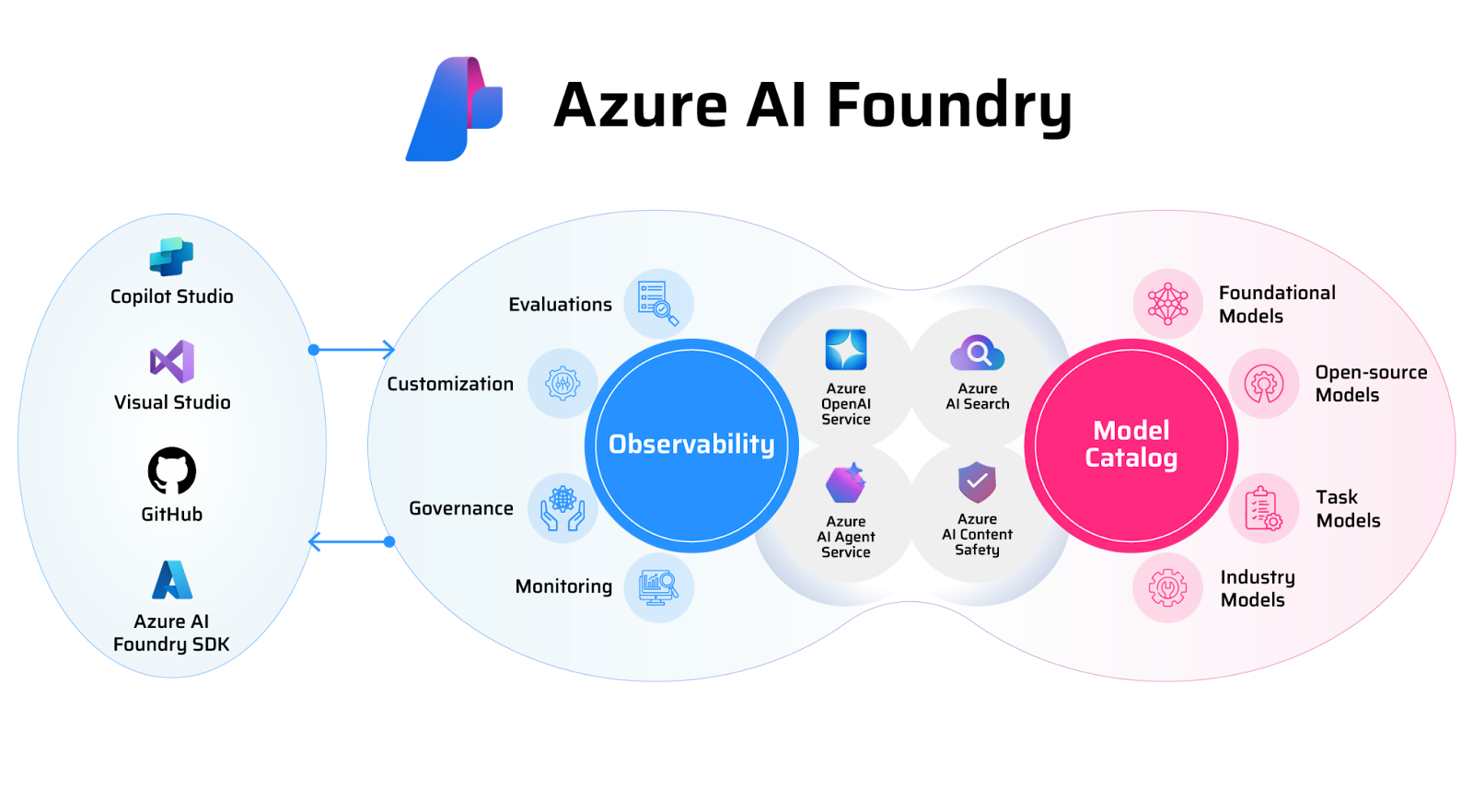

4. Azure AI Foundry

Azure AI Foundry is Microsoft’s cloud-native platform for deploying, monitoring, and governing AI applications. It provides secure access to Azure OpenAI Service and custom models while offering centralized control over AI workloads. The platform ensures enterprise compliance with SOC 2, HIPAA, and GDPR standards.

Azure AI Foundry includes observability features like prompt and token tracking, latency monitoring, and usage analytics. Hybrid deployment options allow organizations to run workloads on-premises, in the cloud, or across multiple regions. It is ideal for enterprises that need a secure, scalable, and governance-ready AI infrastructure.

Key Features:

- Supports Azure OpenAI Service and custom LLMs

- Centralized governance and access control for AI workloads

- Observability and telemetry for models and prompts

- Hybrid and multi-cloud deployment support

- Enterprise compliance with SOC 2, HIPAA, and GDPR

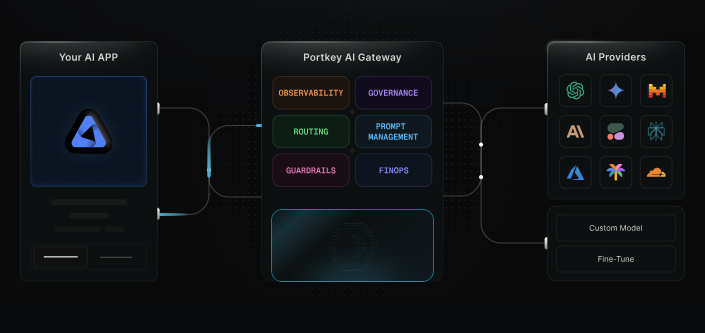

5. Portkey

Portkey is a dedicated AI gateway designed to make Large Language Models (LLMs) production-ready by focusing on "smart" routing and deep observability. While Kong AI approaches the problem from a traditional API management perspective, Portkey is built natively for the application layer, giving developers granular control over prompts, tokens, and model behavior.

For teams that need to optimize costs without sacrificing performance, Portkey's semantic caching and request load balancing offer a lightweight but powerful alternative to heavier enterprise gateways.

Key Features:

- Routes requests dynamically based on latency, cost, user tier, or prompt complexity

- Reduces costs and latency by caching responses for semantically similar queries

- Ensures high availability with automated retries and failovers for failing providers

- Provides detailed observability into token usage, costs, and latency for every request
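
Automated retries and failover, as listed above, follow a simple pattern: retry the current provider a bounded number of times, then move to the next one. The sketch below is a generic illustration and not Portkey's API. `call_with_failover`, `ProviderError`, and the backoff parameters are hypothetical names.

```python
import time
from typing import Callable, List, Tuple


class ProviderError(Exception):
    """Raised by a provider call that failed (timeout, 5xx, rate limit, ...)."""


def call_with_failover(
    providers: List[Tuple[str, Callable[[str], str]]],
    prompt: str,
    retries: int = 2,
    backoff_s: float = 0.0,
) -> Tuple[str, str]:
    """Try providers in priority order; return (provider_name, response)."""
    errors = []
    for name, call in providers:
        for attempt in range(retries + 1):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt < retries:
                    # Exponential backoff between retries on the same provider.
                    time.sleep(backoff_s * (2 ** attempt))
    raise ProviderError("all providers failed: " + "; ".join(errors))
```

With `retries=2`, each provider is tried up to three times before the request fails over, so the worst-case latency grows with both the retry count and the number of providers.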

How to Choose the Right AI Gateway?

Choosing an alternative to Kong AI depends on your specific infrastructure and how complex your AI applications are. Use these three questions to frame your decision:

1. Where must your data live?

- Strict Compliance (VPC/On-Prem): If you handle sensitive data (like healthcare or finance) and require GDPR/HIPAA compliance, prioritize platforms that offer self-hosted or "bring your own cloud" deployment.

- Speed & Ease (SaaS/Cloud-Native): If your priority is rapid development and you don't have strict data residency constraints, managed cloud solutions or lightweight SaaS gateways will let you move faster with less maintenance.

2. Are you managing APIs or Agents?

- Basic API Routing: If you only need to route text prompts to models like GPT-4, look for gateways focused on latency, caching, and cost control.

- Agentic Workflows: If you are building autonomous agents that use tools, memory, and multi-step reasoning, you need a platform that offers deep "agent tracing" and observability, not just simple API logging.

3. How important is vendor neutrality?

- Multi-Model Flexibility: If you want to switch between OpenAI, Anthropic, and open-source models to find the best price, choose an independent gateway that abstracts these providers into a single API.

- Ecosystem Integration: If your entire stack is already on a major cloud provider (like AWS or Azure), using their native AI gateway may reduce integration headaches, even if it limits your model choices.

Conclusion

Kong AI Gateway is a powerful solution for managing AI traffic, ensuring security, and providing observability across multi-LLM environments. However, organizations have diverse needs, from lightweight multi-model routing to enterprise-grade orchestration and compliance.

Platforms like TrueFoundry, LiteLLM, AWS Bedrock, Azure AI Foundry, and Portkey offer alternatives that address specific gaps, including agent tracing, prompt lifecycle management, and GPU optimization. Choosing the right platform depends on scale, deployment flexibility, and governance requirements. Exploring these alternatives ensures teams can build reliable, efficient, and secure AI applications tailored to their operational and business objectives.

Ready to Move Beyond Basic API Gateways? Switch to TrueFoundry for a unified platform that governs your entire AI lifecycle, from efficient model deployment and GPU orchestration to comprehensive agentic observability. Book a demo today to scale your enterprise AI operations with confidence.

Frequently Asked Questions About Kong AI Alternatives

What is the best alternative to Kong AI?

If you are searching for a robust alternative to Kong, TrueFoundry offers a unified platform for large enterprises. Unlike a standard API management tool, it handles machine learning models and traffic routing with flexible deployment options, covering more of the AI lifecycle than a simple gateway does.

Is Kong a good AI gateway?

The Kong API gateway provides solid traffic control, rate limiting, and security policies, but many teams still seek a KongHQ alternative for AI workloads. Kong AI governs API traffic, whereas TrueFoundry governs the entire AI lifecycle, from model serving to agent monitoring, making it ideal for enterprise-grade deployments.

What is similar to Kong Gateway?

When evaluating Kong gateway alternatives, you will find open-source options like Apache APISIX and Gloo Edge. For cloud users, Amazon API Gateway (on Amazon Web Services) and Azure API Management (on Microsoft Azure) offer API security and access management. These are general-purpose API gateways, while TrueFoundry focuses on simplifying the entire AI lifecycle.

Who are the competitors of Kong AI gateway?

Major Kong competitors in API management include Red Hat (3scale) and Google Cloud (Apigee). Platforms like MuleSoft’s Anypoint Platform focus on API monetization and API analytics. However, for complex integration needs in AI lifecycle management, TrueFoundry provides an enterprise-grade platform built for that purpose.

Is Gloo Gateway equivalent to Kong Gateway?

Gloo is a strong KongHQ competitor offering security features and policy enforcement for API keys. While Gloo boasts unique features, Kong prioritizes ease of use. TrueFoundry balances both, avoiding a steep learning curve while supporting best practices for enterprise-grade usage.
