Kong Gateway Pricing & Architecture: An Analysis for AI Teams (2026 Edition)

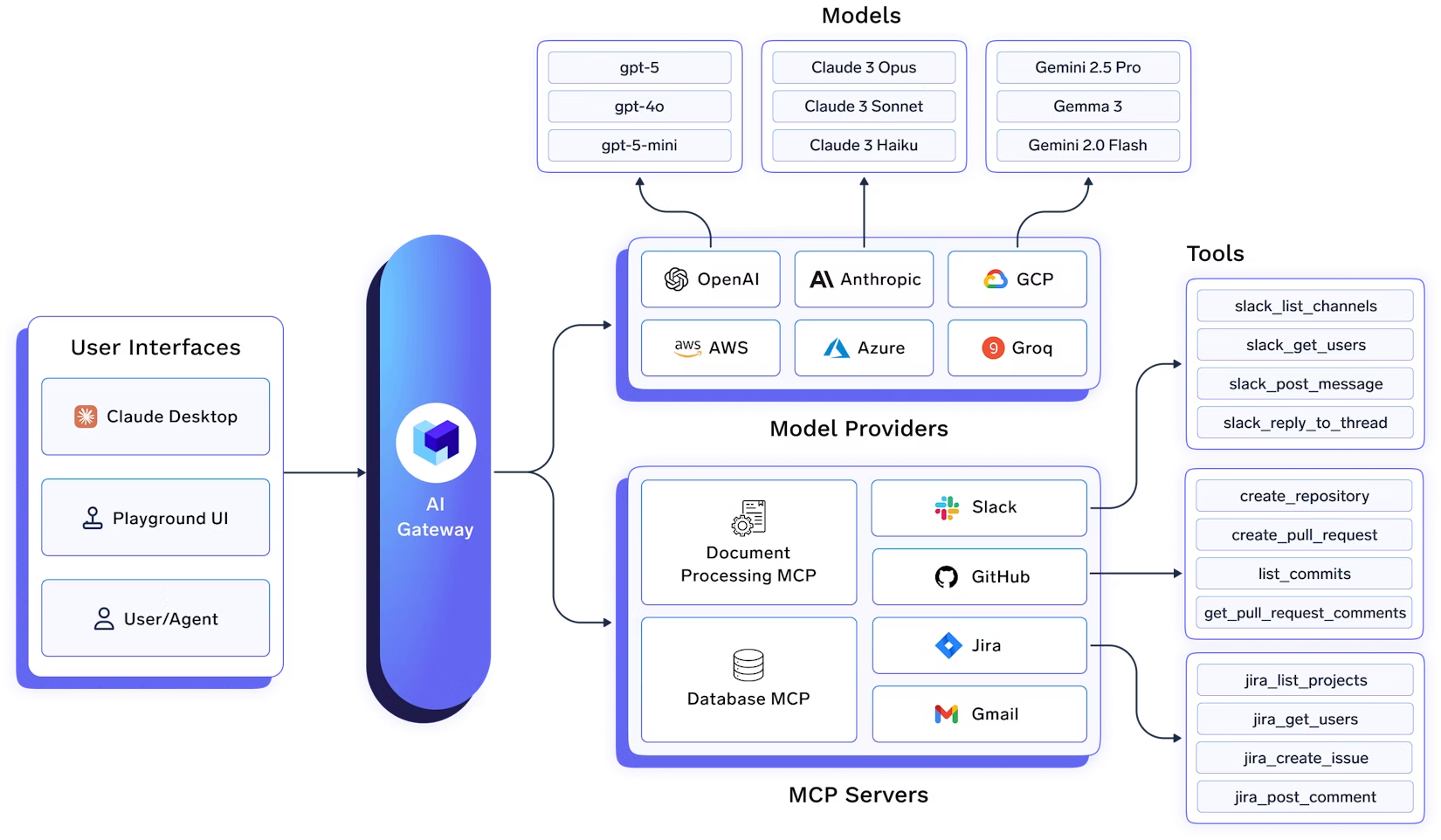

Kong has long been a widely adopted platform for API management, particularly for microservices and REST‑based architectures. With the introduction of its AI Gateway capabilities, Kong is extending its platform to support routing and governance for LLM traffic.

However, Kong’s pricing and operational model were originally designed for large‑scale API management, not lightweight AI inference workflows. This raises an important question: Does it make financial and operational sense to use a full‑scale enterprise API platform for AI routing use cases?

This guide breaks down Kong's costs and limits, and examines how using a general-purpose gateway for AI routing may affect cost efficiency compared to purpose-built alternatives like TrueFoundry.

What Is Kong Gateway?

Kong is an open-source, cloud-native API gateway built on top of Nginx and the OpenResty framework. It sits in front of RESTful microservices and handles traffic flow and policy enforcement before requests reach the backend.

Key features include:

- Plugin Architecture: Extensible system using Lua to add features like auth, logging, and transformations.

- Load Balancing: Layer 4 and Layer 7 traffic routing across upstream services.

- AI Proxy: Recent additions allow standardizing API signatures for LLM providers (OpenAI, Anthropic).

- Protocol Support: Broad support for gRPC, SOAP, GraphQL, and WebSocket.

- Deployment Options: Available as a managed SaaS (Konnect) or self-hosted Enterprise binary.

How is Kong Gateway Priced?

Kong's pricing depends on how you deploy it: the managed Konnect service is billed on consumption, while the self-hosted Enterprise edition is sold as an annual license. Unlike AI-native tools that charge by "token" or "model unit," Kong sticks to traditional API management metrics. This section covers both models.

Kong Konnect Pricing (SaaS Control Plane)

Kong Konnect is the managed SaaS version. While it removes the headache of managing the control plane, the pricing structure is aggressive for high-volume AI applications.

Pricing is consumption-based, typically charging per API request processed through the managed gateway. According to current Kong Pricing tiers, costs scale as follows:

- Service Fee: ~$105 per month per "Gateway Service" (each LLM provider or model you route to counts as a service).

- Request Fee: ~$34.25 per 1 million API requests.

- Network Infrastructure: ~$720/month base cost for dedicated cloud gateway instances (often billed as hourly network/compute fees, e.g., $1.00/hour).
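
As a rough illustration, the three line items above can be combined into a back-of-the-envelope monthly estimate. This is a minimal sketch that assumes the approximate figures quoted in this article and a simple additive model; the function name and the sample workload are hypothetical, and an actual Konnect invoice may be structured differently.

```python
# Approximate figures quoted in this article; treat them as assumptions, not a quote.
SERVICE_FEE_USD = 105.00              # per Gateway Service per month
REQUEST_FEE_USD_PER_MILLION = 34.25   # per 1 million API requests
NETWORK_BASE_USD = 720.00             # dedicated cloud gateway, about $1.00/hour


def estimate_konnect_monthly_cost(services: int, requests_per_month: int) -> float:
    """Rough monthly estimate: service fees + request fees + network base cost."""
    service_cost = services * SERVICE_FEE_USD
    request_cost = requests_per_month / 1_000_000 * REQUEST_FEE_USD_PER_MILLION
    return round(service_cost + request_cost + NETWORK_BASE_USD, 2)


if __name__ == "__main__":
    # Hypothetical workload: 4 model endpoints, 10 million requests per month.
    print(estimate_konnect_monthly_cost(services=4, requests_per_month=10_000_000))
```

Under these assumptions, the fixed service and network fees make up most of the bill at low volume, while the request fee grows linearly with traffic.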

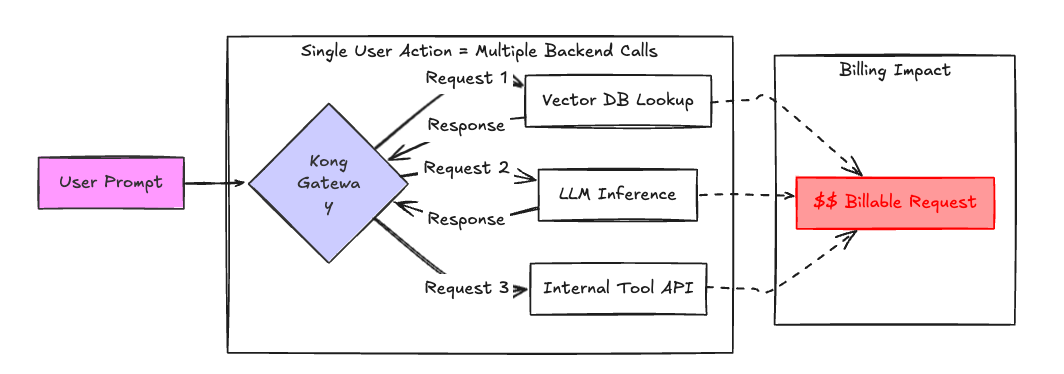

Why this hurts AI workloads:

AI applications generate many internal gateway calls. A single "Agent" workflow might trigger a chain of 20+ internal API calls (RAG lookup, vector DB search, prompting, re-prompting). In a standard API world, 1 million requests is a lot. In an agentic workflow where every one of those calls passes through the gateway, 1 million billable requests covers only about 50,000 agent runs, so usage-based tiers are consumed far faster than with standard REST traffic.

Fig 1: The Multiplier Effect of Agentic AI on Request-Based Billing
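
The multiplier effect can be sketched in a few lines. The example assumes that every internal call in an agent run is routed through the gateway and billed at the ~$34.25 per million figure quoted above; the function name and the workload numbers are illustrative only.

```python
REQUEST_FEE_USD_PER_MILLION = 34.25  # approximate figure quoted in this article


def request_fee(user_interactions: int, gateway_calls_per_interaction: int) -> float:
    """Request-based fee when each interaction fans out into several gateway calls."""
    billable_requests = user_interactions * gateway_calls_per_interaction
    return billable_requests / 1_000_000 * REQUEST_FEE_USD_PER_MILLION


if __name__ == "__main__":
    # Hypothetical month with 500,000 user interactions.
    rest_style = request_fee(500_000, gateway_calls_per_interaction=1)
    agentic = request_fee(500_000, gateway_calls_per_interaction=20)
    print(f"one call per interaction:  ${rest_style:,.2f}")
    print(f"20 calls per interaction: ${agentic:,.2f}")
```

With the same number of user interactions, the request fee scales directly with the fan-out factor: 20 calls per interaction means a 20x larger request bill.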

Kong Enterprise Pricing (Self-Hosted)

For teams that want to self-host to keep data private (common in AI), pricing shifts to annual licenses. Prices are typically not published and require sales negotiation, but the structural cost drivers are well known.

Pricing is based on "Services" or "Nodes":

- Per-Service Licensing: You pay for every backend service the gateway sits in front of. If you are routing to OpenAI, Azure, Anthropic, and a local Llama 3 instance, that counts as 4 distinct services.

- Add-On Modules: Enterprise features like the "AI Rate Limiting Advanced" plugin or specialized analytics often require higher-tier "Plus" or "Enterprise" licenses, frequently pushing contracts above $50,000 annually even for mid-sized deployments.

- Experimentation Tax: AI teams test new open-source models weekly. Adding a new model endpoint in Kong Enterprise can trigger a license upgrade event if you exceed your "Service" quota.

Comparative Overview: Kong vs. AI-Native Models

Why Kong’s API‑First Design Creates Extra Cost for AI Teams

Kong’s pricing reflects its origins as a general-purpose API management platform (APIM). This section explains why AI teams often pay for capabilities they never use.

Paying for Legacy API Features

Kong is a comprehensive enterprise platform designed to handle the complexity of banking and telco legacy systems. It includes extensive support for SOAP, gRPC transcoding, XML validation, and monolithic architectures by default.

For a Generative AI application, you are processing JSON-based REST payloads. You do not need XML-to-JSON transformation or SOAP services. However, because Kong is a bundled "Platform," a significant portion of your licensing fee goes toward maintaining and supporting these legacy protocol capabilities. You are investing in a broad 'Universal' gateway platform, whereas AI workloads may only utilize a fraction of those capabilities.

The Plugin-Based Cost Structure

Kong’s core is lean, but functionality is extended through plugins found in the Kong Plugin Hub. In the open-source version, you get basics. In the paid tiers, you get the "Advanced" versions required for production.

- AI Rate Limiting: The standard rate limiter limits requests. The "AI Rate Limiting Advanced" plugin (required to limit by tokens) is a premium enterprise feature.

- Authentication: Enterprise-grade OIDC/SSO integrations are often locked behind paid tiers.

This can create a tiered cost structure: while the base price covers core API management, advanced AI features often require additional modules.
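
To make the difference between limiting by requests and limiting by tokens concrete, here is a minimal sketch of a fixed-window limiter that counts LLM tokens per consumer. It is not Kong's plugin implementation; the class name, parameters, and the injectable `clock` are assumptions made for illustration.

```python
import time


class TokenBudgetLimiter:
    """Fixed-window limiter that counts LLM tokens instead of HTTP requests."""

    def __init__(self, max_tokens_per_window: int, window_seconds: float = 60.0,
                 clock=time.monotonic):
        self.max_tokens = max_tokens_per_window
        self.window = window_seconds
        self.clock = clock   # injectable so the limiter can be tested without sleeping
        self._usage = {}     # consumer -> (window_start, tokens_used)

    def allow(self, consumer: str, tokens: int) -> bool:
        """Return True and record usage if the consumer still has token budget."""
        now = self.clock()
        start, used = self._usage.get(consumer, (now, 0))
        if now - start >= self.window:
            start, used = now, 0  # window expired, start a fresh one
        allowed = used + tokens <= self.max_tokens
        if allowed:
            used += tokens
        self._usage[consumer] = (start, used)
        return allowed


if __name__ == "__main__":
    limiter = TokenBudgetLimiter(max_tokens_per_window=1000)
    print(limiter.allow("team-a", 600))  # fits within the 1000-token budget
    print(limiter.allow("team-a", 500))  # would exceed the budget in this window
```

A request-count limiter would treat a 50-token call and a 50,000-token call identically, which is why token-aware limiting matters for LLM cost control.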

Additional Costs When Using Kong AI Gateway

Beyond licensing, Kong introduces operational costs that translate directly into spend. This section highlights friction points that impact total cost of ownership.

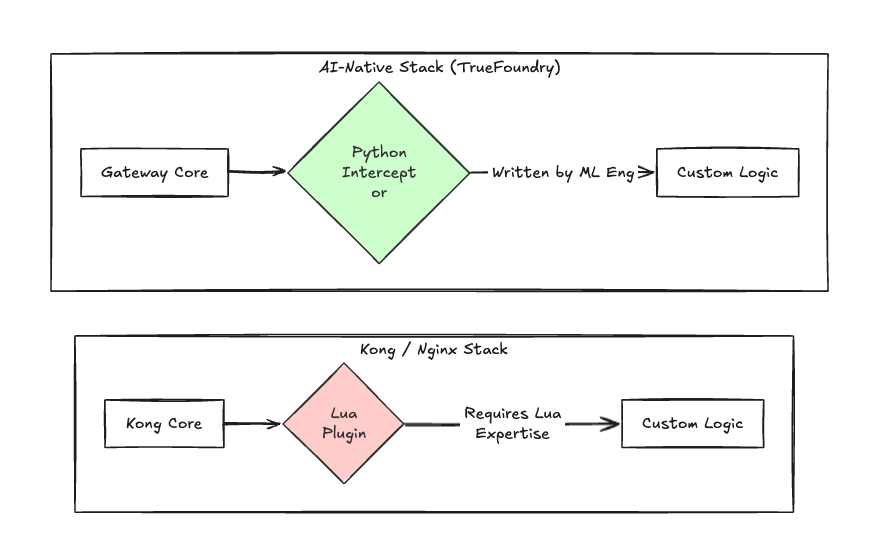

Lua vs Python Customization

Kong is built on Nginx and OpenResty, so its native extension language is Lua.

- The Skills Gap: Most AI engineers and ML Ops teams live in Python. They do not know Lua.

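- The Cost: If you need a custom guardrail (e.g., "Check this prompt against our internal PII database"), you cannot simply drop a Python script into the request path. Kong does offer plugin development kits for other languages, including Python, but those plugins run in a separate plugin server process, and most of the plugin ecosystem assumes Lua. In practice, teams often end up hiring a Lua specialist or spending valuable engineering time learning a niche language to write a custom Kong plugin.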

- Performance: While Lua is fast, the development velocity is slow for AI teams accustomed to the rich ecosystem of Python libraries (LangChain, LlamaIndex).

Fig 2: The Customization Barrier
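
For context, this is roughly what the guardrail described above looks like when written as plain Python. It is a minimal sketch: the regular expressions stand in for the "internal PII database" lookup, the function names are hypothetical, and nothing here is tied to a specific gateway's plugin API.

```python
import re

# Illustrative patterns only; a real deployment would query its own PII source.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(prompt: str) -> list[str]:
    """Return the names of the PII patterns that match the prompt."""
    return [name for name, pattern in PII_PATTERNS.items() if pattern.search(prompt)]


def guard_prompt(prompt: str) -> str:
    """Pass the prompt through unchanged, or raise if it appears to contain PII."""
    hits = find_pii(prompt)
    if hits:
        raise ValueError(f"Prompt blocked, possible PII detected: {', '.join(hits)}")
    return prompt


if __name__ == "__main__":
    print(find_pii("Summarize the report for jane@example.com"))
```

The logic itself is a handful of lines; the cost discussed in this section comes from having to express and deploy the same check inside the gateway's plugin model.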

Data Plane Resource Overhead

Kong’s data plane is powerful but resource-intensive. It is designed to handle tens of thousands of requests per second for web traffic.

- Memory Footprint: Running a full Kong instance (often backed by a Postgres database, or a complex Kubernetes controller setup) consumes significant CPU and RAM. Older deployments may also run on Cassandra, which recent Kong Gateway releases no longer support.

- Over-provisioning: For AI traffic, which is low-RPS (Requests Per Second) but high-latency (streaming tokens), Kong’s architecture is often overkill. You are paying for Nginx-level throughput capacity when your bottleneck is actually the LLM provider's latency.
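
One way to reason about this mismatch is Little's Law, which says the average number of in-flight requests equals the arrival rate multiplied by the average request duration. The sketch below applies it to two hypothetical workloads; the traffic numbers are made up for illustration and are not measurements of Kong.

```python
def concurrent_requests(requests_per_second: float, avg_duration_ms: float) -> float:
    """Little's Law: average in-flight requests = arrival rate x average duration."""
    return requests_per_second * avg_duration_ms / 1000


if __name__ == "__main__":
    # Hypothetical workloads, chosen only for illustration.
    web_api = concurrent_requests(requests_per_second=10_000, avg_duration_ms=50)
    llm_stream = concurrent_requests(requests_per_second=50, avg_duration_ms=20_000)
    print(f"web API:       {web_api:.0f} requests in flight at 10,000 RPS")
    print(f"LLM streaming: {llm_stream:.0f} requests in flight at 50 RPS")
```

In this made-up comparison the LLM workload holds twice as many open connections while serving 200 times fewer requests per second, which is why raw RPS capacity says little about how to size a gateway for streaming AI traffic.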

When Kong Gateway Pricing Makes Sense

Despite its drawbacks for AI workloads, Kong remains the right choice in specific scenarios. If your organization fits these criteria, the cost is justified:

- Unified Control Plane: Organizations managing hundreds of existing microservices benefit from having AI traffic visible on the same dashboard as their payment and auth APIs.

- Hybrid Deployments: If you are an existing Kong Enterprise customer, adding AI endpoints to your current contract is operationally easier than standing up a new tool, even if it is more expensive per unit.

- Massive Ingress: If your AI app serves millions of users (e.g., a ChatGPT wrapper) and needs Nginx-level caching and DDoS protection at the edge, Kong’s mature ingress capabilities are superior to younger AI gateways.

Why AI-First Teams Look Beyond Kong

As AI becomes central to products, teams reassess whether legacy API platforms fit their needs.

- Python-Native Workflows: AI engineers prefer tools that integrate with their stack. They want to write custom routing logic in Python, not configure YAML or write Lua.

- Iteration Speed: Config-heavy platforms like Kong slow down experimentation. AI teams need to swap models, change prompt templates, and update guardrails hourly.

- Cost Efficiency: As shown in the Konnect pricing, paying per-request can be economically inefficient for high-volume agentic AI. Teams need pricing that aligns with value (tokens processed) rather than volume (HTTP hits).

TrueFoundry: The AI-Native Alternative

TrueFoundry is built specifically for the LLM era, not retrofitted from API management. This section explains how its pricing and architecture differ.

Built for Tokens, Not Requests

TrueFoundry understands the unit economics of AI.

- Token Visibility: Metrics track input/output tokens and cost-per-query natively. You don't need a plugin to see how much GPT-4 is costing you versus Llama 3.

- Fair Pricing: Costs are typically tied to compute or managed throughput, avoiding the "Request Trap" of agentic workflows.

- Granularity: Observability aligns with AI goals (e.g., "Time to First Token", "Tokens per Second") rather than generic HTTP status codes.
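
The metrics named above are straightforward to define. The following sketch shows one way to compute time to first token, tokens per second, and cost per query from a single streamed completion. It is not TrueFoundry's implementation; the record fields and the per-million-token prices are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class StreamedCompletion:
    request_sent_at: float   # timestamps in seconds
    first_token_at: float
    last_token_at: float
    input_tokens: int
    output_tokens: int


def time_to_first_token(c: StreamedCompletion) -> float:
    """Seconds between sending the request and receiving the first token."""
    return c.first_token_at - c.request_sent_at


def tokens_per_second(c: StreamedCompletion) -> float:
    """Output tokens divided by the streaming duration."""
    duration = c.last_token_at - c.first_token_at
    return c.output_tokens / duration if duration > 0 else 0.0


def cost_usd(c: StreamedCompletion, input_price_per_million: float,
             output_price_per_million: float) -> float:
    """Cost of one query given per-million-token prices (placeholder values)."""
    total = (c.input_tokens * input_price_per_million
             + c.output_tokens * output_price_per_million)
    return total / 1_000_000


if __name__ == "__main__":
    sample = StreamedCompletion(0.0, 0.5, 4.5, input_tokens=1000, output_tokens=200)
    print(time_to_first_token(sample), tokens_per_second(sample))
    print(cost_usd(sample, input_price_per_million=2.0, output_price_per_million=8.0))
```

None of these values can be derived from an HTTP status code or a request count alone, which is the gap that token-level observability is meant to close.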

Python-First Customization

Custom guardrails and policies are written in Python.

- Zero Learning Curve: Your AI engineers can write a PII filter or a custom routing strategy in Python and deploy it to the gateway immediately.

- No DevOps Bottleneck: AI teams own their gateway logic without relying on a centralized Platform team to update Lua scripts or Nginx configs.
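
As an illustration of the kind of routing logic an AI team might want to own, here is a generic Python sketch that picks the first healthy model whose context window fits the prompt. It does not use or represent TrueFoundry's API; the `ModelTarget` type, the `choose_target` function, and the model names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTarget:
    name: str
    max_context_tokens: int
    healthy: bool = True


def choose_target(targets: list[ModelTarget], prompt_tokens: int) -> ModelTarget:
    """Return the first healthy target, in priority order, that can fit the prompt."""
    for target in targets:
        if target.healthy and prompt_tokens <= target.max_context_tokens:
            return target
    raise LookupError("no healthy target can accept this prompt")


if __name__ == "__main__":
    # Hypothetical priority list: cheaper model first, larger model as fallback.
    targets = [
        ModelTarget("small-model", max_context_tokens=8_000),
        ModelTarget("large-model", max_context_tokens=128_000),
    ]
    print(choose_target(targets, prompt_tokens=2_000).name)
    print(choose_target(targets, prompt_tokens=50_000).name)
```

Because the strategy is ordinary Python, changing the priority order or adding a new condition is a small code change rather than a gateway reconfiguration.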

No Enterprise Complexity

TrueFoundry is lightweight and purpose-built for AI workloads.

- Efficient: It doesn't carry the weight of SOAP, XML, or unrelated legacy protocols.

- Cost-Effective: You pay for AI features. There is no premium for decades of accumulated enterprise API functionality that your LLM app will never touch.

Don’t Buy a Battleship to Catch a Fish

Kong remains a powerful enterprise platform, but power comes with cost and complexity. If your goal is to manage microservices for a bank, Kong is excellent. If your goal is to route, govern, and observe LLM traffic, Kong imposes a "Legacy Tax" via request-based pricing, Lua obscurity, and service-based licensing.

Next Steps for Engineering Leaders:

- Audit your traffic: If you use Agents or high-chatter AI workflows, calculate your potential Kong Konnect bill using the $34.25/1M requests figure.

- Evaluate alternatives: Look for gateways that offer Token-based visibility and Python extensibility.

- Book a demo: See how TrueFoundry simplifies AI gateway management and lowers costs by stripping away the architectural overhead.

FAQs

Is Kong Gateway free?

The open-source version of Kong Gateway is free to download and self-host. However, it lacks the GUI (Kong Manager), advanced analytics, and enterprise plugins (like OIDC, advanced AI Rate Limiting) required for production AI workloads. The managed version, Kong Konnect, has a free tier that is limited by requests and services, typically suitable only for very small proofs of concept.

How much is Kong AI?

Kong does not sell "Kong AI" as a separate standalone product; it is a feature set within its Enterprise and Konnect plans. In Konnect, you pay standard rates (~$105/service/mo + usage fees). In Enterprise, AI capabilities are part of the license, but specific AI plugins may require upgrading to a higher tier or purchasing add-on packs, pushing costs into the tens of thousands per year.

What makes TrueFoundry a better Kong AI alternative?

TrueFoundry is "AI-Native," meaning its entire architecture is designed for tokens and models, not generic HTTP requests. It offers Python-based customization (vs. Kong's Lua), token-level cost tracking (vs. Kong's request counting), and a lighter operational footprint. This makes it significantly cheaper and faster for teams building LLM applications to iterate compared to configuring a heavyweight enterprise API gateway.
