Helicone vs Portkey – Key Features, Pros & Cons

Modern AI applications demand both speed and comprehensive functionality from their gateway infrastructure. As conversational interfaces and real-time agents become the norm, every millisecond of latency impacts user experience, while enterprise deployments require sophisticated governance, security, and cost management capabilities.

This fundamental tension has created distinct architectural approaches in the gateway market. Helicone, originally focused on observability, has recently expanded into evaluations and launched a new Rust-based AI Gateway in beta. Portkey represents a mature, feature-rich enterprise platform processing over 2.5 trillion tokens across 650+ organizations.

Understanding these architectural differences becomes critical as organizations move from experimental prototypes to production-scale deployments where both performance and enterprise capabilities are non-negotiable.

What is Helicone?

Helicone is an open-source tool that sits between your app and LLM providers to give you visibility into how models are used. It automatically logs requests and responses so you can track cost, latency, and usage. In short, it helps you understand and improve how your AI app runs.

Beyond its observability roots, Helicone now offers evaluations and a beta AI Gateway. Its core proposition is minimal latency overhead without operational complexity: the company, founded by Y Combinator alumni, reports roughly 8ms of latency overhead while targeting enterprise-grade reliability.

Performance Engineering Excellence

Helicone's new AI Gateway achieves ~8ms of P50 latency overhead through its Rust implementation and edge deployment on Cloudflare Workers. Requests are processed at edge locations rather than routed through centralized infrastructure, which provides geographic distribution and eliminates cold-start penalties.
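A figure like ~8ms P50 describes the median extra time a gateway adds to each request, not the end-to-end response time. As a minimal sketch, assuming you replay the same requests both directly against the provider and through the gateway, the overhead could be computed like this (the timings are invented for illustration):

```python
import statistics

def p50_overhead_ms(direct_ms, via_gateway_ms):
    """Median (P50) of per-request overhead: gateway latency minus direct latency.

    The lists must be paired: element i of each list is the same request,
    once sent directly to the provider and once through the gateway.
    """
    if len(direct_ms) != len(via_gateway_ms) or not direct_ms:
        raise ValueError("need two non-empty lists of equal length")
    deltas = [g - d for d, g in zip(direct_ms, via_gateway_ms)]
    return statistics.median(deltas)

# Hypothetical timings in milliseconds, for illustration only
direct = [410.0, 395.0, 520.0, 388.0, 450.0]
gateway = [418.0, 402.0, 531.0, 395.0, 457.0]
print(p50_overhead_ms(direct, gateway))  # 7.0
```

Using the median rather than the mean keeps a few slow outliers from dominating the reported overhead.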

The gateway supports 100+ models with features like smart routing, load balancing, caching, and automatic fallbacks. Built-in observability integration provides real-time insights without requiring additional tooling setup.
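Load balancing across providers is often implemented as a weighted random choice. The sketch below is a generic illustration, not Helicone's implementation; the provider names and weights are hypothetical:

```python
import random

def pick_provider(weights, rng=random):
    """Weighted random choice: weight 3 receives about three times the traffic of weight 1."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]

# Hypothetical providers and weights
weights = {"provider-a": 3, "provider-b": 1}
rng = random.Random(42)  # seeded so the run is reproducible
counts = {name: 0 for name in weights}
for _ in range(10_000):
    counts[pick_provider(weights, rng)] += 1
print(counts)  # expect roughly a 3:1 split
```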

Gateway Development and Capabilities

The AI Gateway, rebuilt in Rust, represents Helicone's evolution from pure observability into comprehensive routing capabilities. The team acknowledged that "every team has been building their own AI gateway or settling for subpar solutions because no one had built the definitive one yet," positioning their new gateway as addressing this market gap.

Current Limitations

Limited Enterprise Features: The platform lacks comprehensive audit trails, advanced role-based access controls, and sophisticated policy enforcement that regulated industries require.

Basic Integration Support: While the gateway supports multiple providers, it lacks advanced guardrails integration, comprehensive failover strategies, and the extensive model ecosystem that enterprise deployments need.

Narrow Operational Scope: The platform focuses primarily on routing and basic observability, without the broader MLOps/LLMOps capabilities that modern AI operations demand.

Teams looking for a Helicone alternative with broader enterprise capabilities often consider platforms such as Portkey or TrueFoundry.

What is Portkey?

Portkey is a production-grade AI gateway that helps you manage and scale LLM-powered applications. It gives you one unified API to connect with 250+ models, along with features like routing, caching, retries, and observability. In short, Portkey makes your AI apps more reliable, cost-efficient, and easier to run at scale.

Portkey has established itself as a comprehensive enterprise platform, processing 2.5+ trillion tokens across 650+ organizations. The platform provides extensive functionality but faces challenges around integration complexity, latency overhead, and coverage of the broader AI lifecycle.

Comprehensive Enterprise Capabilities

Portkey's feature breadth differentiates it from performance-focused alternatives. The platform provides functionality that would typically require multiple tools.

Advanced Routing and Orchestration: The platform handles complex scenarios like cascading fallbacks, cost-optimized model selection, and intelligent load balancing across multiple providers. This sophistication enables resilient deployments but requires processing overhead.
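Cascading fallbacks and cost-optimized selection can be combined by trying the cheapest eligible model first and falling through to the next one on error. A minimal sketch, assuming hypothetical model names, made-up prices, and plain Python callables standing in for provider calls (this is not Portkey's API):

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # hypothetical price
    call: Callable[[str], str]  # stand-in for a real provider call

def cascade(prompt, options):
    """Try the cheapest model first; on failure, fall through to the next cheapest."""
    errors = []
    for opt in sorted(options, key=lambda o: o.cost_per_1k_tokens):
        try:
            return opt.name, opt.call(prompt)
        except Exception as exc:  # a real gateway would separate retryable from fatal errors
            errors.append(f"{opt.name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))

def flaky(prompt):
    raise TimeoutError("upstream timeout")

options = [
    ModelOption("large-model", 0.03, lambda p: f"large: {p}"),
    ModelOption("small-model", 0.001, flaky),
    ModelOption("medium-model", 0.01, lambda p: f"medium: {p}"),
]
print(cascade("hello", options))  # ('medium-model', 'medium: hello')
```

The cheapest model fails here, so the request cascades to the next-cheapest one instead of the most expensive.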

Comprehensive Governance: Portkey provides enterprise-grade audit trails, granular role-based access control, policy enforcement, and compliance reporting. These features address regulatory requirements but add computational complexity to every request.
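To see why governance touches every request, consider a role-based check that runs before each call. This is a hypothetical sketch: the roles, permissions, and model-blocking policy are illustrative and do not reflect Portkey's actual policy model:

```python
# Hypothetical roles and permissions, for illustration only
ROLE_PERMISSIONS = {
    "viewer": {"read_logs"},
    "developer": {"read_logs", "call_model"},
    "admin": {"read_logs", "call_model", "edit_config"},
}
# Hypothetical policy: developers may not call the most expensive model
BLOCKED_MODELS = {"developer": {"expensive-model"}}

def authorize(role, action, model=None):
    """Return (allowed, reason); a gateway would run a check like this before every request."""
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return False, f"role '{role}' lacks permission '{action}'"
    if model is not None and model in BLOCKED_MODELS.get(role, set()):
        return False, f"policy blocks '{model}' for role '{role}'"
    return True, "ok"

print(authorize("developer", "call_model", "expensive-model"))
```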

Prompt Management and Versioning: Teams can manage prompt templates, A/B test variations, and roll back changes through the platform interface. This functionality requires additional database queries and processing logic.

Enterprise Integration Depth

Portkey's enterprise focus extends beyond feature breadth to integration sophistication. The platform supports complex deployment scenarios that most simpler gateways can't handle:

Multi-Environment Configuration: Different environments can have distinct model configurations, access controls, and routing policies managed through centralized configuration. Development teams can experiment safely while production maintains strict governance.
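Per-environment configuration usually means shared defaults plus environment-specific overrides. A minimal sketch, assuming hypothetical setting names; it is not Portkey's configuration format:

```python
BASE_CONFIG = {  # hypothetical settings shared by every environment
    "default_model": "small-model",
    "allowed_models": ["small-model", "medium-model"],
    "require_approval_for_changes": False,
}

ENV_OVERRIDES = {
    # developers may try an experimental model outside production
    "development": {"allowed_models": ["small-model", "medium-model", "experimental-model"]},
    # production is locked down: fewer models and reviewed changes
    "production": {
        "default_model": "medium-model",
        "allowed_models": ["medium-model"],
        "require_approval_for_changes": True,
    },
}

def resolve_config(env):
    """Shallow-merge one environment's overrides onto the shared defaults."""
    if env not in ENV_OVERRIDES:
        raise KeyError(f"unknown environment: {env}")
    return {**BASE_CONFIG, **ENV_OVERRIDES[env]}
```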

Identity Provider Integration: OAuth 2.0, SAML, and enterprise SSO integration allows organizations to leverage existing identity infrastructure. Teams don't need separate credentials or access management systems.

Audit and Compliance: Detailed logging captures every request, configuration change, and policy decision for regulatory compliance. The audit trails meet requirements for SOC 2, HIPAA, and GDPR compliance.
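One common way to make audit trails tamper-evident is a hash chain, where each entry records the hash of the one before it. The sketch below illustrates that technique; it is an assumption chosen for illustration, not a description of how Portkey stores its logs:

```python
import hashlib
import json
import time

class AuditLog:
    """Append-only log; each entry stores the hash of the previous entry (a hash chain)."""

    def __init__(self):
        self.entries = []

    def record(self, event_type, actor, details):
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {
            "ts": time.time(),
            "type": event_type,  # e.g. "request", "config_change", "policy_decision"
            "actor": actor,
            "details": details,
            "prev_hash": prev_hash,
        }
        payload = json.dumps(entry, sort_keys=True).encode()
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; editing any past entry breaks the chain."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            expected = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
            if entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True
```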

These capabilities matter enormously for large organizations but come with performance costs that affect every request.

Integration and Performance Challenges

Despite its maturity, Portkey faces significant limitations that affect enterprise adoption and drive interest in Portkey alternatives:

Integration Complexity: Teams report complex setup processes and ongoing maintenance overhead that slow development velocity.

Latency Overhead: While Portkey advertises <1ms latency, real-world deployments often see 20-40ms or more of overhead, particularly when using advanced features such as comprehensive guardrails and complex routing logic. A benchmark published by Kong reports Kong AI Gateway running 228% faster than Portkey, with 65% lower latency.

Limited MLOps Integration: Portkey focuses primarily on API routing without providing comprehensive model deployment, training, or MLOps platform capabilities. Organizations need separate tools for complete AI lifecycle management.

Incomplete Gateway Features: Portkey still lacks certain gateway features that enterprise deployments require, such as comprehensive guardrails integration with third-party providers and advanced failover mechanisms.

Helicone vs Portkey: Key Feature Differences

Helicone and Portkey both help teams build better LLM applications, but they focus on different needs. Helicone is an open-source observability proxy that tracks requests, costs, and performance, making it great for debugging and monitoring.

Portkey, on the other hand, is a comprehensive AI gateway designed for production workloads, featuring multi-model routing, caching, and guardrails. While Helicone is lightweight and developer-friendly, Portkey is designed to handle scale, reliability, and enterprise governance.

In short, Helicone is ideal for deep observability, prompt testing, and self-hosted control, while Portkey is better suited for scaling, multi-model routing, caching, and enterprise-grade reliability.

Helicone vs Portkey: When to Choose Helicone?

Helicone is an open-source LLM observability platform designed for developers seeking deep insights into their AI applications. It provides a comprehensive suite of tools to monitor, debug, and optimize LLM-powered systems.

Simplified Integration: Helicone stands out for its ease of integration. With just a single line of code, developers can log all requests to various LLM providers. This minimal setup allows teams to implement observability quickly without major changes to existing code.
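In practice, the single-line change usually means pointing an existing OpenAI-compatible client at Helicone's proxy and adding an authentication header. The sketch below builds (without sending) such a request using only the standard library; the `https://oai.helicone.ai/v1` base URL and `Helicone-Auth` header follow Helicone's OpenAI proxy documentation at the time of writing and should be checked against current docs, and the keys are placeholders:

```python
import json
import urllib.request

# Verify this base URL against Helicone's current documentation
HELICONE_OPENAI_BASE = "https://oai.helicone.ai/v1"

def build_chat_request(openai_key, helicone_key, body):
    """Build (but do not send) an OpenAI-style chat request routed through Helicone."""
    return urllib.request.Request(
        url=f"{HELICONE_OPENAI_BASE}/chat/completions",
        data=json.dumps(body).encode(),
        headers={
            "Authorization": f"Bearer {openai_key}",  # provider key, unchanged
            "Helicone-Auth": f"Bearer {helicone_key}",  # the one added header
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_chat_request(
    "OPENAI_KEY_PLACEHOLDER",
    "HELICONE_KEY_PLACEHOLDER",
    {"model": "gpt-4o-mini", "messages": [{"role": "user", "content": "Hi"}]},
)
```

With the official OpenAI Python SDK, the equivalent change is passing `base_url` and `default_headers` when constructing the client; the rest of the application code stays the same.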

Advanced Observability Features: The platform tracks detailed metrics such as cost, latency, and Time to First Token (TTFT). Session tracking lets developers monitor multi-step workflows and conversations, helping identify bottlenecks, optimize performance, and ensure a smooth user experience.
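Per-session metrics like these come from grouping individual request logs. A hypothetical sketch: the record fields below are illustrative and are not Helicone's log schema:

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log records; field names are illustrative only
records = [
    {"session": "s1", "latency_ms": 900, "ttft_ms": 210, "cost_usd": 0.0021},
    {"session": "s1", "latency_ms": 1500, "ttft_ms": 340, "cost_usd": 0.0040},
    {"session": "s2", "latency_ms": 700, "ttft_ms": 150, "cost_usd": 0.0012},
]

def summarize_sessions(rows):
    """Group requests by session and compute per-session totals and averages."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["session"]].append(row)
    return {
        sid: {
            "requests": len(rs),
            "total_cost_usd": round(sum(r["cost_usd"] for r in rs), 6),
            "total_latency_ms": sum(r["latency_ms"] for r in rs),
            "avg_ttft_ms": mean(r["ttft_ms"] for r in rs),
        }
        for sid, rs in groups.items()
    }
```

Comparing each step's latency against its session total is one way to spot which step of a multi-step workflow is the bottleneck.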

Prompt Management and Experimentation: Helicone offers prompt versioning, A/B testing, and side-by-side prompt experiments. This makes it easy for teams to refine prompts, iterate quickly, and improve outcomes effectively.

Cost and Performance Optimization: Built-in caching reduces redundant requests, lowering inference costs and improving response times. This is especially useful for high-traffic applications or repeated queries.
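Exact-match caching hashes a normalized copy of the request and returns the stored response on a repeat. A minimal sketch with a caller-supplied model function (not Helicone's cache implementation):

```python
import hashlib
import json

class ExactCache:
    """Exact-match response cache keyed by a hash of the normalized request."""

    def __init__(self):
        self.store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(request):
        # sort_keys makes requests with the same fields in a different order hash identically
        canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode()).hexdigest()

    def get_or_call(self, request, call_model):
        k = self.key(request)
        if k in self.store:
            self.hits += 1
            return self.store[k]
        self.misses += 1
        response = call_model(request)  # only reached on a cache miss
        self.store[k] = response
        return response
```

A production cache would also need expiry and should generally serve only requests whose outputs are expected to be repeatable, for example low-temperature calls.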

Open-Source Flexibility: Being fully open-source, Helicone provides transparency and control over data handling. Teams can self-host the platform to comply with internal policies and regulatory requirements.

Real-Time Alerts and Monitoring: Helicone supports real-time alerts via email or messaging platforms, allowing teams to respond immediately to critical issues and maintain application reliability.
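Threshold alerts of this kind are typically evaluated over a sliding window of recent requests. A hypothetical sketch: the window size, threshold, and `notify` hook (which could post to email or a chat webhook) are assumptions, not Helicone settings:

```python
from collections import deque

class ErrorRateAlert:
    """Fire once when the error rate over the last `window` requests exceeds `threshold`."""

    def __init__(self, window=100, threshold=0.05, notify=print):
        self.outcomes = deque(maxlen=window)  # 1 = error, 0 = success
        self.threshold = threshold
        self.notify = notify  # hypothetical hook: email, chat webhook, etc.
        self.firing = False

    def observe(self, ok):
        self.outcomes.append(0 if ok else 1)
        if len(self.outcomes) < self.outcomes.maxlen:
            return  # wait for a full window before judging
        rate = sum(self.outcomes) / len(self.outcomes)
        if rate > self.threshold and not self.firing:
            self.firing = True  # suppress duplicate alerts until the rate recovers
            self.notify(f"error rate {rate:.1%} exceeds {self.threshold:.1%}")
        elif rate <= self.threshold:
            self.firing = False
```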

Ideal Use Cases

- Enhance the performance and reliability of LLM applications

- Implement observability with minimal setup

- Experiment with and refine prompts for better results

- Monitor and control AI inference costs

You can choose Helicone when you need a developer-friendly, open-source platform for comprehensive observability, prompt management, and optimization of LLM applications.

Helicone vs Portkey: When to Choose Portkey?

Portkey is a production-grade AI gateway designed to help teams scale and manage LLM-powered applications reliably. It combines observability, multi-model routing, caching, and governance into a single platform, making it ideal for production workloads.

Multi-Model and Multi-Provider Access: Portkey provides a unified API to connect with over 250 models across different providers. This eliminates provider lock-in and allows applications to switch models or route requests dynamically without rewriting code.
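A unified API means the gateway translates one request shape into each provider's format. The sketch below is a simplified, hypothetical adapter, not Portkey's implementation; the two payloads loosely follow the OpenAI Chat Completions and Anthropic Messages request bodies, and the model names are placeholders:

```python
def to_provider_payload(provider, model, system, user, max_tokens=256):
    """Translate one unified request into a provider-specific body (simplified)."""
    if provider == "openai":
        # Chat Completions style: the system prompt is just another message
        return {
            "model": model,
            "max_tokens": max_tokens,
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": user},
            ],
        }
    if provider == "anthropic":
        # Messages API style: the system prompt is a top-level field and max_tokens is required
        return {
            "model": model,
            "max_tokens": max_tokens,
            "system": system,
            "messages": [{"role": "user", "content": user}],
        }
    raise ValueError(f"unsupported provider: {provider}")
```

Because callers only ever build the unified request, switching providers becomes a routing decision rather than a code change.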

Reliability and Performance: For production systems, Portkey offers conditional routing, load balancing, automatic retries, and circuit breakers. These features ensure consistent performance and high uptime, even under heavy traffic or when providers experience latency spikes.
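Retries and circuit breakers work together: retries absorb transient errors, while the breaker stops sending traffic to a provider that keeps failing. A minimal sketch with an injectable clock for testing (a generic pattern, not Portkey's code; production retries would also add exponential backoff between attempts):

```python
import time

class CircuitBreaker:
    """Open after `max_failures` consecutive errors; allow a trial call after `reset_after` seconds."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def allow(self):
        if self.opened_at is None:
            return True
        # half-open: permit a trial request once the cool-down has elapsed
        return self.clock() - self.opened_at >= self.reset_after

    def record(self, ok):
        if ok:
            self.failures, self.opened_at = 0, None
        else:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit

def call_with_retries(fn, breaker, retries=2):
    """Retry failed calls, but fail fast while the breaker is open."""
    last_exc = None
    for _ in range(retries + 1):
        if not breaker.allow():
            raise RuntimeError("circuit open: skipping provider")
        try:
            result = fn()
        except Exception as exc:
            breaker.record(ok=False)
            last_exc = exc
            continue
        breaker.record(ok=True)
        return result
    raise last_exc
```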

Cost and Latency Optimization: Built-in simple and semantic caching reduces redundant requests, lowering inference costs and speeding up responses. This is especially valuable for high-traffic applications or those that require repeated queries.
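Semantic caching differs from exact caching by matching prompts that are similar rather than identical. In the sketch below, a toy bag-of-words vector stands in for a real embedding model (an assumption for illustration), and the 0.8 similarity threshold is arbitrary:

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words vector; a real semantic cache would call an embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class SemanticCache:
    """Return a cached answer when a new prompt is similar enough to a stored one."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []  # list of (vector, response)

    def lookup(self, prompt):
        query = embed(prompt)
        best = max(self.entries, key=lambda e: cosine(query, e[0]), default=None)
        if best is not None and cosine(query, best[0]) >= self.threshold:
            return best[1]
        return None

    def store(self, prompt, response):
        self.entries.append((embed(prompt), response))

cache = SemanticCache(threshold=0.8)
cache.store("what is the capital of france", "Paris")
print(cache.lookup("What is the capital of France?"))  # Paris (similarity 5/6)
print(cache.lookup("how do I bake bread"))  # None
```

The threshold is the key design choice: set it too low and the cache returns answers to questions that were not actually asked.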

Security and Governance: Portkey securely stores API keys in a virtual key vault and applies rate limits, budget controls, and real-time guardrails. This makes it easier to enforce enterprise compliance and maintain safe AI outputs.
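Rate limits and budget controls are commonly built from a token bucket and a running spend counter. A hypothetical sketch of both, with an injectable clock so the limiter can be tested deterministically; the numbers are illustrative, not Portkey defaults:

```python
import time

class TokenBucket:
    """Rate limiter: refills at `rate` requests per second, bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = capacity
        self.last = clock()

    def try_acquire(self):
        now = self.clock()
        # refill based on elapsed time, never above capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

class Budget:
    """Hard spend cap: reject a request if its cost would push spend past the limit."""

    def __init__(self, limit_usd):
        self.limit_usd, self.spent_usd = limit_usd, 0.0

    def charge(self, cost_usd):
        if self.spent_usd + cost_usd > self.limit_usd:
            return False
        self.spent_usd += cost_usd
        return True
```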

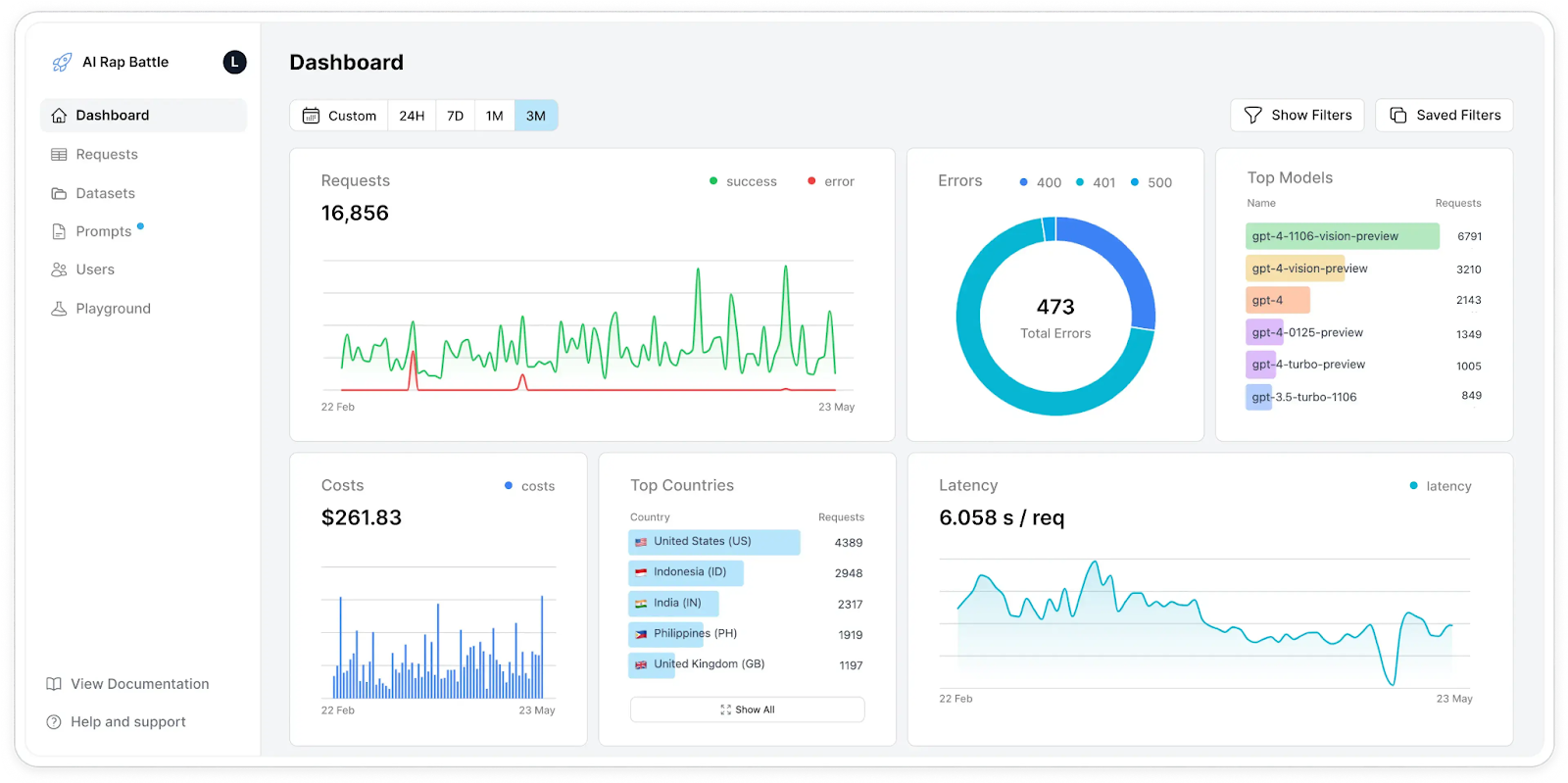

Observability and Monitoring: Portkey tracks requests, responses, costs, and latencies across all integrated models, providing insights into system performance and usage patterns. Real-time dashboards help teams monitor workloads efficiently.

Ideal Use Cases

- Scale LLM applications reliably in production

- Manage multiple models and providers through a single API

- Optimize costs and latency with built-in caching

- Enforce enterprise-grade security, compliance, and guardrails

TrueFoundry is best when you need both performance and enterprise capabilities without compromise:

- Mission-critical applications requiring enterprise governance with minimal latency (even a few milliseconds matter)

- High-growth organizations looking for platforms that scale from development to enterprise without architectural changes, with ease of development and a range of integrations

- Hybrid deployments combining cloud APIs with self-hosted models through unified interfaces

- Performance-sensitive enterprise applications where speed, features, and compliance are non-negotiable (for example, <3ms latency, SOC 2/HIPAA compliance, and extensive features including observability, access control, monitoring, and MCP integrations)

TrueFoundry delivers the unified architecture that eliminates traditional trade-offs between performance and functionality.

Conclusion

The choice between Helicone and Portkey reflects a fundamental architectural decision that extends far beyond immediate technical requirements. Platform selection impacts long-term strategic flexibility in ways that compound over time.

Technology Evolution: AI capabilities advance rapidly. Platforms that support both cloud APIs and self-hosted deployment provide flexibility as model capabilities and deployment preferences change. Organizations locked into cloud-only solutions may find themselves constrained when data sovereignty or cost optimization requires on-premises deployment.
