AI Security Platforms & Gateways: Safeguarding LLMs and Agentic AI

As generative AI and LLMs rapidly enter the enterprise, protecting these powerful new tools has become critical. Traditional security tools can’t see inside an AI model or enforce data-use policies on prompts and outputs, leaving organizations blind to AI-specific risks like prompt injection, data leakage, and rogue “agentic” behavior. In fact, a recent Gartner survey found 81% of organizations are on a GenAI journey, but many are already facing project failures, compliance problems, and misuse incidents due to inadequate AI governance. This visibility gap means that until now there was effectively no AI firewall to inspect or block malicious AI traffic.

Industry analysts now say AI-specific security solutions are urgently needed. Gartner predicts that by 2028 more than half of enterprises will deploy an AI security platform to enforce consistent guardrails across all third-party and custom AI applications. AI security is no longer optional – it’s a foundation for trust. Cited by Gartner as a “Vanguard” technology trend, AI security platforms bring visibility and control over LLMs and multiagent systems in the same way traditional firewalls and gateways protect networks. Without these controls, even well-intentioned AI projects can leak sensitive information or spin out of control. Embedding proactive safety checks and governance in the AI stack is now essential for enterprises scaling LLMs or deploying autonomous AI assistants.

In the sections below we’ll explain what “AI Security Platforms” are, why AI security matters more than ever, and why AI gateways are the practical enforcement layer for AI security. We’ll also show how TrueFoundry’s AI Gateway implements all these requirements in one unified solution – effectively becoming your organization’s AI firewall.

What Are AI Security Platforms? (AIUC + AIAC)

Gartner defines an AI Security Platform (AISP) as a unified security layer for all AI usage – third-party services and in-house models alike. In practice, an AISP combines two pillars: AI Usage Control (AIUC) and AI Application Cybersecurity (AIAC).

- AI Usage Control (AIUC): Governs how users and apps interact with external AI services (e.g. ChatGPT, cloud AI APIs). AIUC enforces acceptable-use policies on generative AI, discovers and inventories “shadow AI” usage, prevents sensitive data from being sent to unauthorized models, and monitors risky interactions. For example, an AIUC feature might block an employee from querying ChatGPT with proprietary documents, or automatically redact PII from prompts. It also continuously scans prompts and responses for signs of leaks or malicious content (prompt injection testing is now an expected capability). In short, AIUC is an intelligent gatekeeper for all third-party LLM usage in the organization.

- AI Application Cybersecurity (AIAC): Protects custom-built AI models and agents inside the enterprise – from development through deployment. This pillar addresses novel AI threats that conventional AppSec tools can’t catch: scanning downloaded model artifacts for backdoors, detecting prompt-injection or model-poisoning attacks, and tracking the behavior of autonomous AI agents for “rogue” actions. AIAC also applies safety guardrails in real time: validating every input request before it hits your custom model, and filtering or mutating outputs to prevent harmful or non-compliant data from reaching end users. Gartner emphasizes that AIAC delivers end-to-end protection across the AI lifecycle – something no legacy vulnerability scanner or WAF can do.

By combining AIUC and AIAC, a mature AI Security Platform provides a single pane of glass for AI governance. It centralizes visibility of all model calls, enforces consistent policies (data residency rules, content policies, access controls) across every AI workload, and continuously monitors for AI-specific risks like prompt injections or unauthorized agent activities. Gartner predicts that unified AISPs (offering both AIUC and AIAC) will dominate the market, providing a consolidated solution rather than piecemeal tools. Essentially, an AISP is the AI-era equivalent of an enterprise firewall and SIEM combined – tailored for LLMs and AI agents.
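To make the AIUC redaction example concrete, here is a minimal sketch of an input guardrail that masks PII before a prompt leaves the organization. The two patterns and the `redact_pii` helper are assumptions for illustration only; they are not any vendor's API, and production detection needs far broader, locale-aware coverage.

```python
import re

# Hypothetical patterns for illustration; real detectors cover many more PII types.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_pii(prompt: str) -> tuple[str, list[str]]:
    """Replace each match with a typed placeholder and report which types were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


redacted, kinds = redact_pii("Email jane.doe@example.com about SSN 123-45-6789.")
print(redacted)  # Email [EMAIL] about SSN [SSN].
print(kinds)     # ['EMAIL', 'SSN']
```

Returning the list of detected types alongside the redacted text lets the same check feed a monitoring dashboard as well as the outbound request.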

Why AI Security Is Essential Now

The rapid adoption of LLMs and “agentic AI” (multi-step AI assistants) has vastly expanded the enterprise attack surface. Here are key reasons AI security is now mission-critical for any organization deploying generative AI:

- New Vulnerabilities (Prompt Injection, Data Leakage): Traditional security tools were not built to understand AI. Attackers can now “inject” malicious instructions or data into prompts to manipulate models or extract sensitive data. For example, a cleverly crafted user prompt might trick a model into revealing confidential information it was exposed to during fine-tuning. Without AI-aware guards, enterprises are blind to such malicious inputs and outputs.

- Unpredictable “Agentic” Risk: Autonomous AI agents introduce fresh challenges. These AI services can carry out tasks or make decisions without human approval. Because agent actions are probabilistic and less predictable, they can inadvertently bypass controls or take harmful actions. Gartner warns that custom AI agents create “new attack surfaces” and uncertainty, requiring secure development and runtime practices. In practice, this means every API call made by an AI agent must be logged, monitored, and potentially restricted – something only a purpose-built gateway can enforce.

- Lack of Visibility and Enforcement: Most organizations lack visibility into how employees use AI tools or what data flows through them. A PointGuard survey noted that enterprise security stacks have no visibility into AI prompts or model training workflows. There is no native way to “block” an AI prompt or audit a model’s output. As a result, compliance mandates (from GDPR/CCPA to the EU AI Act) and internal data policies become impossible to enforce without an AI control layer.

- Regulatory and Compliance Pressure: New regulations like the EU’s AI Act and Digital Services Act explicitly demand risk management, documentation, and governance for AI systems. This means companies will need to prove they have safety checks and logging for their AI apps. An AI Security Platform provides the necessary tooling (audit trails, policy enforcement, content moderation) to meet these requirements out of the box.

- Growing Adoption = Growing Risk: Gartner found that as of 2025, 81% of organizations are on a GenAI journey, yet many report compliance issues and project failures due to poor governance. Without better governance, greater scale only means more incidents. Analysts warn that treating AI security as an afterthought will leave organizations dangerously exposed, and they advise adopting a dedicated AI security architecture: the AI Security Platform model.

In short, LLM security and AI governance can no longer be ignored. Data scientists and developers are already experimenting with public AI services; the question now is how to do so safely. Enterprises need an “AI firewall” – a control point that understands the semantics of AI traffic and can apply policies dynamically. Enter the AI Gateway: the practical enforcement point where security, compliance, and observability come together at runtime.

AI Gateways: The Enforcement Point for AI Security

An AI Gateway is a specialized proxy layer that sits between applications (or agents) and AI model services. Gartner describes it as middleware managing security, observability, routing, and cost for AI APIs. In other words, the AI Gateway is the runtime anchor of your AI security platform. It is here that policies from AIUC and AIAC are actually enforced on live traffic. Let’s break down the key roles an AI Gateway plays in securing AI:

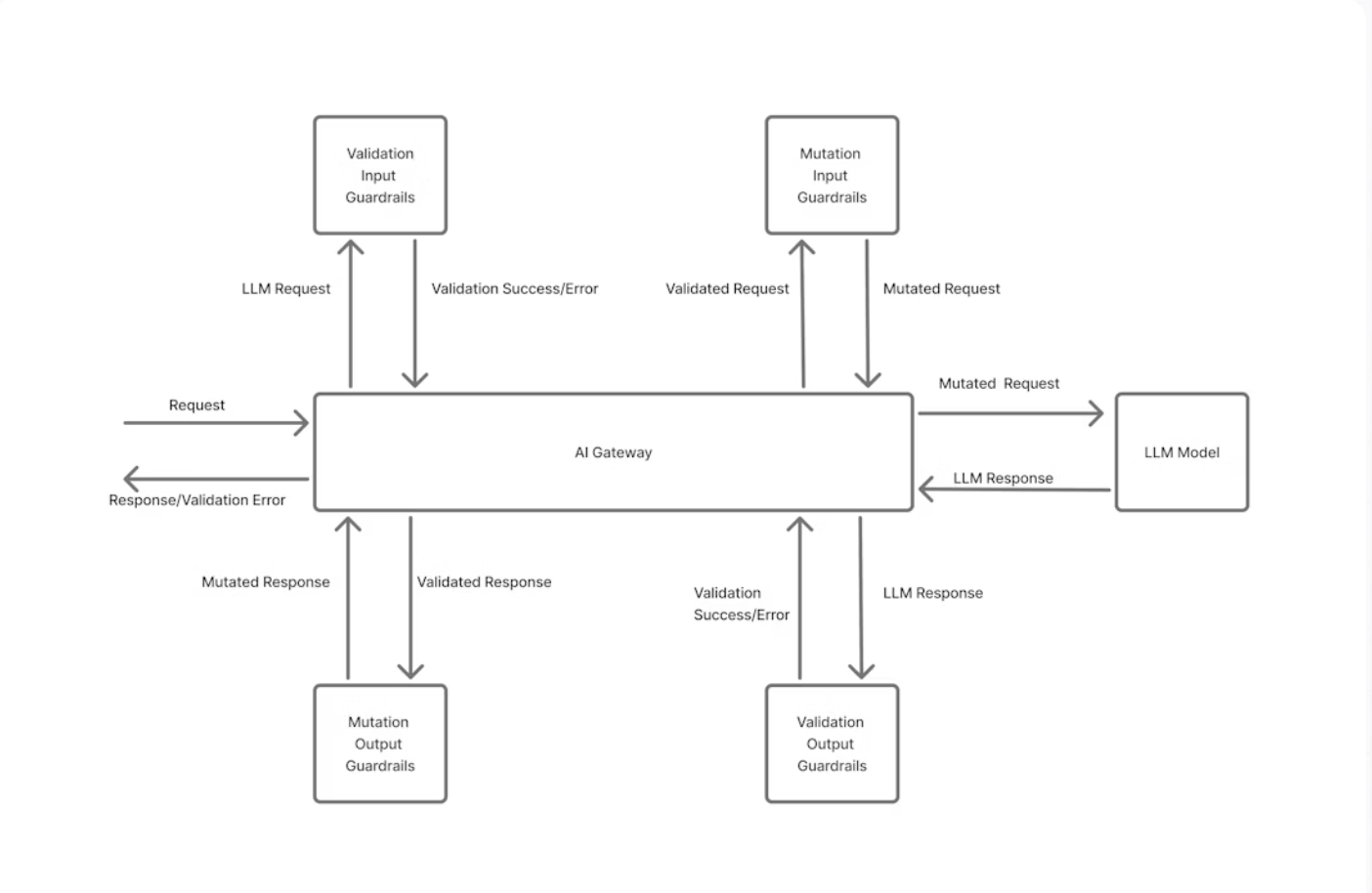

- Runtime Protection & Guardrails: Every request to a model passes through the gateway’s security and policy engine. Here, input guardrails can validate or mutate prompts before they hit the LLM. For example, the gateway can automatically mask or redact PII, sanitize inputs to prevent prompt injection, or outright block disallowed queries. Similarly, output guardrails scan and transform model responses: filtering profanity, removing hallucinated private data, or enforcing a consistent answer format. By front-ending each AI call with these checks, the gateway enforces corporate security policies just like an API firewall.

- Data Residency & Hybrid Control: Many enterprises require that certain data never leave a specific cloud or region. AI Gateways make this possible by routing traffic based on geolocation or deployment rules. You could send regulated data only to an on-prem model, or use a sovereign-cloud LLM for EU customer data. The gateway’s centralized routing logic ensures requests land where they should, and that none of them slip out to unauthorized cloud endpoints. In effect, the gateway provides data-residency controls for AI.

- Model and Provider Governance: An AI Gateway keeps a single inventory of models and AI services. Administrators can register approved LLM endpoints (OpenAI, Anthropic, on-prem Llama, etc.), along with fine-grained access controls. Each application or user can be authorized for only the models it needs. The gateway also handles credential management and key rotation for those services. In practice, this means the gateway acts like an AI-network manager: it can throttle or quota usage per model, deny calls to out-of-policy providers, and perform intelligent load balancing across multiple model deployments. For example, if one model instance is hitting its token limit, the gateway can route overflow to a secondary instance to ensure reliability. These controls address Gartner’s call for “consistent guardrails and content moderation across third-party and in-house AI”.

- Agentic AI and MCP Integration: Modern AI agents often interact with external tools and data stores. TrueFoundry’s gateway natively supports the Model Context Protocol (MCP) to connect agents to enterprise systems in a secure way. This allows the gateway to trace every action an agent takes – which tools it calls, what data it retrieves – and enforce governance on those actions as well. In other words, even autonomous multi-step processes remain under centralized audit and control. An AI Gateway can also set policy on agent behavior (“this agent can write emails but cannot execute financial transactions,” for example), effectively managing agentic AI risk.

- Logging & AI Observability: Beyond blocking threats, the gateway provides rich telemetry. It logs every prompt sent and every response received, along with metadata like user identity, model choice, and execution time. Dashboards and alerts can then surface anomalies or risky trends (e.g. spikes in rejected prompts, unexpected uses of a particular model, or agents deviating from safe paths). TrueFoundry’s solution, for instance, offers integrated analytics and OpenTelemetry support so that LLM interactions appear in enterprise monitoring tools. This kind of AI observability is essential for forensic auditing and continuous compliance.
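The agent policy quoted above (“this agent can write emails but cannot execute financial transactions”) can be sketched as a default-deny tool allowlist that also records every decision for audit. The `AgentPolicy` and `ToolGate` names, and the tool names, are hypothetical; this shows the pattern under simple assumptions, not TrueFoundry’s or MCP’s actual interfaces.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentPolicy:
    allowed_tools: set[str]


@dataclass
class ToolGate:
    policies: dict[str, AgentPolicy]
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, agent_id: str, tool: str) -> bool:
        """Default-deny: unknown agents and unlisted tools are refused."""
        policy = self.policies.get(agent_id)
        allowed = policy is not None and tool in policy.allowed_tools
        # Record every decision, allowed or not, so agent activity stays auditable.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "tool": tool,
            "allowed": allowed,
        })
        return allowed


gate = ToolGate({"support-agent": AgentPolicy({"send_email", "search_kb"})})
print(gate.authorize("support-agent", "send_email"))           # True
print(gate.authorize("support-agent", "execute_transaction"))  # False
print(len(gate.audit_log))                                     # 2
```

Logging denied calls as well as permitted ones is what makes “spikes in rejected prompts” or agents drifting off safe paths visible later.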

In essence, the AI Gateway is the enforcement engine of your AI Security Platform. It turns abstract policies into action at the point of integration with models. Gartner notes that modern gateways are evolving “from simple traffic routers to intelligent governance engines”. Organizations use AI Gateways to handle everything from cost optimization to AI trust/risk management. The tasks described above (authenticating and authorizing AI calls, balancing load across endpoints, logging interactions, and enforcing token quotas) are exactly the controls needed for secure AI deployment.
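Of the controls just listed, token quotas are the easiest to illustrate. The sketch below assumes a sliding-window budget per caller; the `TokenQuota` class and its parameters are illustrative names, and a real gateway would keep this state in shared storage rather than in process memory.

```python
import time
from collections import defaultdict, deque


class TokenQuota:
    """Sliding-window token budget per caller (user, team, or application)."""

    def __init__(self, max_tokens: int, window_seconds: float, clock=time.monotonic):
        self.max_tokens = max_tokens
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the window can be tested without sleeping
        self._usage = defaultdict(deque)  # caller -> deque of (timestamp, tokens)

    def try_consume(self, caller: str, tokens: int) -> bool:
        now = self.clock()
        events = self._usage[caller]
        # Drop usage records that have aged out of the window.
        while events and now - events[0][0] >= self.window_seconds:
            events.popleft()
        used = sum(t for _, t in events)
        if used + tokens > self.max_tokens:
            return False  # over budget: the gateway would reject or queue the call
        events.append((now, tokens))
        return True


quota = TokenQuota(max_tokens=1000, window_seconds=60)
print(quota.try_consume("team-a", 600))  # True
print(quota.try_consume("team-a", 500))  # False, would exceed 1000 in the window
print(quota.try_consume("team-a", 400))  # True
```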

TrueFoundry’s AI Gateway: A Unified Solution

TrueFoundry’s AI Gateway is built precisely to fulfill the role of a comprehensive AI Security Platform. Gartner has even recognized TrueFoundry as a representative vendor in this fast-evolving category. The Gateway acts as a unified control layer for AI – offering observability, governance, cost control, and security for your AI environment. Here’s how it meets the key requirements:

- Centralized Ingress & Routing: All AI traffic flows through the gateway’s front end. TrueFoundry provides a single OpenAI-compatible API endpoint, so applications need only code against one URL. Behind the scenes, the gateway routes requests to the optimal model or cloud provider (OpenAI, Anthropic, AWS, Azure, etc.) based on rules you set. This enables use cases like latency-based routing or intent-based routing (sending finance queries to a finance-tuned model, for example). Automatic failover ensures continuity: if one model endpoint goes down, the gateway seamlessly retries on an alternate backend.

- Token-Aware Cost Management: Built-in cost controls monitor token usage at a granular level – by user, team, model, or application. Administrators can set quotas and rate limits on token consumption to prevent runaway costs or noisy neighbors. The gateway also supports semantic caching: it detects when a new prompt is highly similar to a recent one and returns the cached response instead of incurring a new model call. In real workloads, this can cut redundant LLM requests by up to 40%, drastically lowering spend while speeding up responses. All usage data is tracked for billing and chargeback.

- Security and Guardrail Enforcement: TrueFoundry’s gateway integrates with popular moderation and guardrail services to vet every request and response. It provides built-in hooks so you can apply content filters (via OpenAI Moderation, Azure Safety, Enkrypt AI, etc.) to block or mutate unsafe prompts and outputs.

For example, upon receiving a user prompt, the gateway can run an integrated privacy filter to redact any sensitive PII before calling the model. After the model returns an answer, the gateway can apply another policy (e.g. “no medical advice allowed”) and block any responses that violate enterprise policy. The gateway also handles credential rotation and RBAC for AI keys: individual developers or services get only the permissions they need. By default it enforces OAuth2/OIDC, so your standard identity controls govern who can query which model. In short, it acts as an AI firewall and content filter on every interaction – a central enforcement point for AI governance.
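The semantic caching described under cost management can be sketched as a similarity lookup in front of the model call. This is a toy version: the bag-of-words `embed` function stands in for a real embedding model, the 0.9 threshold is an arbitrary assumption, and none of these names come from TrueFoundry’s product.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a bag-of-words vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.9):
        self.threshold = threshold
        self._entries: list[tuple[Counter, str]] = []

    def get(self, prompt: str):
        """Return the cached response for the most similar prompt, or None on a miss."""
        query = embed(prompt)
        best = max(self._entries, key=lambda e: cosine(query, e[0]), default=None)
        if best is not None and cosine(query, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, prompt: str, response: str) -> None:
        self._entries.append((embed(prompt), response))


def answer(prompt: str, cache: SemanticCache, call_model) -> str:
    cached = cache.get(prompt)
    if cached is not None:
        return cached  # cache hit: no model call, no token spend
    response = call_model(prompt)
    cache.put(prompt, response)
    return response
```

The threshold is the main design choice: set it too low and users receive answers to questions they did not ask, too high and the cache rarely hits.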

Example Use Case: Consider a financial firm building an AI-powered assistant for customer service. Using TrueFoundry AI Gateway, the company can set a policy that any prompt to the assistant first passes through a compliance filter (blocking any request containing account numbers or instructions to execute trades). The assistant’s responses are similarly filtered for inappropriate financial advice. All interactions are logged for auditing. Moreover, the gateway can route regulatory-sensitive queries to an internally-hosted LLM (ensuring data never goes to an external cloud), while other traffic uses a public model for general knowledge. Meanwhile, token usage by the assistant is tracked and capped, preventing surprises in the cloud bill. In this way, the gateway operationalizes the firm’s AI governance rules end-to-end.
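A rough sketch of how the firm’s rules might look as code follows. Everything here is an assumption made for illustration: the account-number shape, the trade-instruction pattern, the sensitive-term list, and the two model names are invented, and real policies would be configured in the gateway rather than hard-coded.

```python
import re

ACCOUNT_NUMBER = re.compile(r"\b\d{8,12}\b")  # assumed account-number shape
TRADE_INSTRUCTION = re.compile(
    r"\b(buy|sell|execute)\b.*\b(shares|trade|order)\b", re.IGNORECASE
)
SENSITIVE_TERMS = ("kyc", "sanction", "audit", "regulator")  # assumed routing triggers

INTERNAL_MODEL = "internal-llm"  # hypothetical internally hosted deployment
PUBLIC_MODEL = "public-llm"      # hypothetical external provider


def handle(prompt: str) -> dict:
    """Apply the compliance filter first, then pick a model by data sensitivity."""
    if ACCOUNT_NUMBER.search(prompt):
        return {"action": "block", "reason": "account number detected"}
    if TRADE_INSTRUCTION.search(prompt):
        return {"action": "block", "reason": "trade instruction detected"}
    lowered = prompt.lower()
    sensitive = any(term in lowered for term in SENSITIVE_TERMS)
    return {"action": "forward", "model": INTERNAL_MODEL if sensitive else PUBLIC_MODEL}


print(handle("My account 1234567890 is locked"))
print(handle("What documents do I need for KYC?"))
print(handle("What are your branch hours?"))
```

Blocking runs before routing so that a disallowed prompt never reaches any model, internal or external.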

Conclusion

In summary, AI gateways are central to any modern AI security strategy. They serve as the operational enforcement point for the Gartner-defined AI Security Platform, applying centralized policies and guardrails at the point of inference. By inspecting every prompt and output, controlling model access, and providing full observability, AI gateways transform the distributed chaos of AI usage into a governable, secure system.

TrueFoundry’s AI Gateway embodies this vision as a unified AI firewall and control plane. It delivers AI security platform capabilities – from prompt injection protection and data loss prevention to agentic AI risk management – all in one technical stack. Gartner has recognized TrueFoundry as a representative vendor in this category. For CTOs and AI platform teams, deploying an AI Gateway from a solution like TrueFoundry is the fastest way to operationalize AI security: it enforces usage policies, performs continuous risk testing, and ensures enterprise-grade compliance across your LLM and agent use cases.

With generative AI adoption surging, the time to act is now. An AI gateway turns AI security policies from hope into practice – blocking threats in real time and providing a single pane of glass for AI governance. By unifying third-party and custom AI under one roof, TrueFoundry AI Gateway helps enterprises “secure the path to AI adoption,” enabling innovation without compromising trust.

References: https://www.gartner.com/en/articles/top-technology-trends-2026
