Grok 4.1: The First Frontier Model That Feels Different — And How to Test It Against GPT-5.1, Kimi K2 & Claude 4.5

If 2023–2024 was the “IQ race” for LLMs, 2025 is quickly becoming the “vibes race.”

OpenAI’s GPT-5.1 brings adaptive reasoning and richer personality presets. (OpenAI)

Moonshot’s Kimi K2 pushes a trillion-parameter Mixture-of-Experts design aimed squarely at agentic workflows. (arXiv)

Anthropic’s Claude Sonnet 4.5 is positioned as the best coding and computer-use model in their lineup, and a top choice for building complex agents. (anthropic.com)

And then there’s Grok 4.1, xAI’s latest model, which makes a different kind of claim: it isn’t just smarter, it’s more emotionally perceptive, more expressive, and more fun to talk to — while still scoring at the top of the charts. (The Times of India)

In this post:

- What’s actually new in Grok 4.1

- How it compares to GPT-5.1, Kimi K2, and Claude 4.5

- A visual comparison cheat-sheet

- How to actually A/B test them using an AI gateway

- Five prompts you can use to “feel” the differences

1. What Grok 4.1 actually is

Grok 4.1 is the newest member of the Grok family from xAI. It’s available on grok.com, on X, and in the Grok iOS and Android apps. (The Times of India)

Compared to earlier Grok versions, 4.1 focuses on three core upgrades:

- Emotional intelligence – more nuanced understanding of user feelings and intent

- Creative writing – richer, more vivid storytelling and expressive responses

- Reduced hallucinations – nearly two-thirds fewer factual inaccuracies vs previous Grok models, based on internal evaluations (The Times of India)

It also continues the Grok 4 lineage of strong reasoning and real-time search/tool use that previously led xAI to describe Grok 4 as “the most intelligent model in the world.” (xAI)

1.1 Real-world rollout & win rate

Instead of only touting benchmark scores, xAI quietly rolled Grok 4.1 into production, routing real user traffic through it and running blind comparisons against the prior Grok models. The reported result: users preferred Grok 4.1 responses in roughly 65% of pairwise comparisons, a strong signal that the perceived quality and “feel” really improved in practice. (The Times of India)
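A win rate from blind pairwise tests is just wins divided by comparisons, but sample size decides how much the number means. Below is a minimal sketch for scoring your own A/B tests with the standard Wilson score interval. The 130-of-200 tally is made up for illustration and is not xAI’s data:

```python
import math


def wilson_interval(wins: int, total: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (win_rate, lower, upper); z=1.96 gives a ~95% interval."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = wins / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    margin = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return p, centre - margin, centre + margin


# Hypothetical tally: 130 preferences for the new model out of 200 blind pairs.
rate, low, high = wilson_interval(130, 200)
print(f"win rate {rate:.1%}, 95% CI [{low:.1%}, {high:.1%}]")
```

If the lower bound sits above 50%, the preference is unlikely to be noise at that sample size.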

1.2 Benchmarks: beyond just IQ

Emotional intelligence & role-play

xAI highlights internal “EQ-style” evaluations and real-world conversational tests showing Grok 4.1 delivering more nuanced, context-aware, and emotionally attuned replies — especially in situations involving stress, grief, or complex trade-offs. (The Times of India)

Creative writing

The new model also scores better in structured creative benchmarks and qualitative side-by-side tests: it writes longer, more coherent micro-stories with stronger character voice and a clearer narrative arc than earlier Grok versions. (The Times of India)

Hallucination reduction

On information-seeking prompts sampled from real users, Grok 4.1 significantly reduces the atomic error rate and overall misinformation compared to earlier Grok Fast models, particularly when using search tools. (The Times of India)

1.3 Safety, deception & sycophancy

Like other frontier labs, xAI also highlights work on:

- Deception resistance – lowering the probability that the model knowingly contradicts its own “beliefs”

- Reduced sycophancy – being less likely to simply agree with a user’s incorrect assumptions

- Improved tool-use safeguards

Taken together, Grok 4.1 is positioned not just as more capable, but as more honest and robust than previous Grok iterations. (The Times of India)

2. Grok 4.1 vs GPT-5.1 vs Kimi K2 vs Claude 4.5

2.1 GPT-5.1 — adaptive reasoning & personality presets

OpenAI’s GPT-5.1 is an evolution of GPT-5, shipping in two main variants: Instant and Thinking. (OpenAI)

Key traits:

- Adaptive reasoning: GPT-5.1 Instant decides when to spend extra compute on challenging prompts instead of always thinking the same amount. (OpenAI)

- Expanded personalities: ChatGPT now exposes multiple style presets (Default, Professional, Friendly, Quirky, Cynical, etc.) plus additional tone controls. (The Verge)

- Better instruction following, speed, and conversational warmth compared to GPT-5. (OpenAI)

Contrast with Grok 4.1:

GPT-5.1 is about configurability: you steer tone and depth explicitly. Grok 4.1 is more opinionated by default, with a witty, emotionally aware voice out of the box.

2.2 Kimi K2 — open, agentic Mixture-of-Experts

Moonshot AI’s Kimi K2 is a Mixture-of-Experts LLM with around 1T total parameters and 32B activated per token, pre-trained on 15.5T tokens using the MuonClip optimizer. (arXiv)

Highlights:

- Released with open weights and framed as “open agentic intelligence,” with strong results on reasoning and agentic benchmarks. (arXiv)

- Excels at long-context reasoning, coding, and tool-integrated tasks. (Kimi K2)
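The gap between total and active parameters is what makes a trillion-parameter MoE affordable to run. A router picks a few experts per token, and the rest sit idle for that token. Here is a toy top-k routing sketch in plain Python. The 16 experts, k=2, and the renormalised-softmax gate are illustrative assumptions, not Kimi K2’s actual router or configuration:

```python
import math
import random


def top_k_route(logits: list[float], k: int) -> list[tuple[int, float]]:
    """Select the k highest-scoring experts and softmax-normalise over just those k."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in chosen)
    exps = {i: math.exp(logits[i] - peak) for i in chosen}  # subtract max for numerical stability
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in chosen]


def moe_forward(x: float, experts, logits: list[float], k: int) -> float:
    """Weighted sum of the chosen experts' outputs; unchosen experts are never called."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(logits, k))


# Toy setup (illustrative only): 16 "experts" that just scale their input.
random.seed(0)
experts = [lambda x, s=s: s * x for s in range(16)]
# In a real model these logits come from a learned gate on the token's hidden state; here they are random.
gate_logits = [random.gauss(0.0, 1.0) for _ in range(16)]
routes = top_k_route(gate_logits, k=2)
output = moe_forward(1.0, experts, gate_logits, k=2)

# Active share for the figures quoted above: 32B active out of ~1T total parameters.
print(f"active parameters per token: {32e9 / 1e12:.1%}")  # 3.2%
```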

Contrast with Grok 4.1:

Kimi K2 feels like the lab-grade research assistant optimized for agents; Grok 4.1 feels like the front-stage conversationalist optimized for vibes and empathy.

2.3 Claude Sonnet 4.5 — long-workflow, coding & agents

Anthropic’s Claude Sonnet 4.5 is:

- Marketed as “the best coding model in the world,” the “strongest model for building complex agents,” and “the best model at using computers.” (anthropic.com)

- Reported to show major gains on math and reasoning benchmarks (e.g., a perfect AIME 2025 score with Python tools and strong GPQA performance). (max-productive.ai)

- Integrated into major enterprise ecosystems such as Microsoft Copilot Studio. (Microsoft)

It’s also part of Anthropic’s broader push for safer, introspection-aware models and features like memory across conversations. (Tom's Guide)

Contrast with Grok 4.1:

Claude 4.5 is the serious developer and workflow workhorse; Grok 4.1 is the expressive co-pilot you enjoy chatting with.

3. Visual cheat-sheet: model comparison

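In short (every strength below is a vendor or press claim summarized from Section 2, not an independent measurement):

- Grok 4.1 (xAI): emotional intelligence, creative writing, fewer hallucinations than earlier Grok models. Best fit: expressive, conversational assistants.

- GPT-5.1 (OpenAI): adaptive reasoning across Instant and Thinking variants, personality presets. Best fit: products that need configurable tone and depth.

- Kimi K2 (Moonshot AI): open Mixture-of-Experts (~1T total, 32B active parameters), agentic tool use, long-context coding. Best fit: agent and tool-heavy pipelines.

- Claude Sonnet 4.5 (Anthropic): coding, computer use, complex agents. Best fit: long developer workflows.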

4. You shouldn’t pick a model — you should run the experiment

The practical way to choose isn’t to argue on X about whose benchmark is best; it’s to:

- Take representative prompts from your product.

- Send them to Grok 4.1, GPT-5.1, Kimi K2 and Claude 4.5.

- Log answers, latency, and cost.

- Rate them (manually or with evals), then route traffic to the winner — or even combine them by use-case.
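The four-step loop above is simple enough to sketch. Everything here is hypothetical:

- `call_model` is a stand-in for whatever client or gateway you use.
- The model names and per-token prices are placeholders, not real provider IDs or list prices.
- Word counts are used as a rough proxy for token counts.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Optional

# Placeholder (input, output) prices in USD per 1M tokens. NOT real list prices or model IDs.
PRICES = {
    "grok-4.1": (1.0, 4.0),
    "gpt-5.1": (1.5, 6.0),
    "kimi-k2": (0.5, 2.0),
    "claude-sonnet-4.5": (2.0, 8.0),
}


@dataclass
class Result:
    model: str
    prompt: str
    answer: str
    latency_s: float
    cost_usd: float
    score: Optional[float] = None  # filled in later by a human rater or an automated eval


def call_model(model: str, prompt: str) -> tuple[str, int, int]:
    """Hypothetical stand-in for a real API/gateway call: returns (text, input_tokens, output_tokens)."""
    text = f"[{model}] reply to: {prompt}"
    return text, len(prompt.split()), len(text.split())  # word counts as a rough token proxy


def run_experiment(models: list[str], prompts: list[str]) -> list[Result]:
    results = []
    for prompt in prompts:
        for model in models:
            start = time.perf_counter()
            text, tokens_in, tokens_out = call_model(model, prompt)
            latency = time.perf_counter() - start
            price_in, price_out = PRICES[model]
            cost = (tokens_in * price_in + tokens_out * price_out) / 1_000_000
            results.append(Result(model, prompt, text, latency, cost))
    return results


def summarise(results: list[Result]) -> dict[str, dict]:
    by_model: dict[str, list[Result]] = {}
    for r in results:
        by_model.setdefault(r.model, []).append(r)
    summary = {}
    for model, rows in by_model.items():
        scores = [r.score for r in rows if r.score is not None]
        summary[model] = {
            "median_latency_s": statistics.median(r.latency_s for r in rows),
            "total_cost_usd": sum(r.cost_usd for r in rows),
            "mean_score": statistics.mean(scores) if scores else None,
        }
    return summary


results = run_experiment(list(PRICES), ["Write a supportive message.", "Explain remote onboarding."])
for r in results:
    r.score = 1.0  # replace with real ratings before drawing conclusions
summary = summarise(results)
```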

To pull this off without wiring four different SDKs and auth schemes, you need an AI gateway.

5. Where TrueFoundry’s AI Gateway fits in

TrueFoundry describes its platform as Kubernetes-native AI infrastructure built around a low-latency AI Gateway and a deployment layer for agentic AI. (truefoundry.com)

The AI Gateway specifically:

- Sits as a proxy layer between your apps and LLM providers/MCP servers. (docs.truefoundry.com)

- Gives you one unified interface to 1000+ LLMs, handling auth, routing, and observability. (docs.truefoundry.com)

- Adds enterprise-grade security, governance, quota management and cost controls on top. (truefoundry.com)

- Is designed for low-latency, high-throughput agentic workloads across cloud and on-prem. (truefoundry.com)

For you, that means:

- Integrate once.

- Try Grok 4.1, GPT-5.1, Kimi K2, Claude 4.5 and more behind the same endpoint.

- Swap, route, or A/B test models with configuration changes instead of rewrites.
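How routing rules are expressed is product-specific, and the sketch below is not TrueFoundry’s configuration format. It illustrates the general idea behind “configuration changes instead of rewrites”:

- A weighted routing table. The `ROUTES` dict, use-case names, and model names are hypothetical.
- A deterministic hash, so each user consistently lands on the same model for the duration of an A/B test.

```python
import hashlib

# Hypothetical routing table: per use case, model -> traffic weight (must sum to 100).
ROUTES = {
    "support-chat": {"grok-4.1": 50, "gpt-5.1": 50},
    "code-review": {"claude-sonnet-4.5": 70, "kimi-k2": 30},
}


def pick_model(use_case: str, user_id: str) -> str:
    """Deterministically bucket a user into 0..99 and map the bucket onto weighted models."""
    weights = ROUTES[use_case]
    if sum(weights.values()) != 100:
        raise ValueError(f"weights for {use_case!r} must sum to 100")
    digest = hashlib.sha256(f"{use_case}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    cumulative = 0
    for model, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return model
    raise AssertionError("unreachable when weights sum to 100")


print(pick_model("support-chat", "user-42"))  # same user, same model, every time
```

Changing the split, or swapping a model out, is then an edit to `ROUTES` rather than to application code.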

6. Five prompts to feel the differences

Here are five prompts you can drop into your gateway and run against all four models.

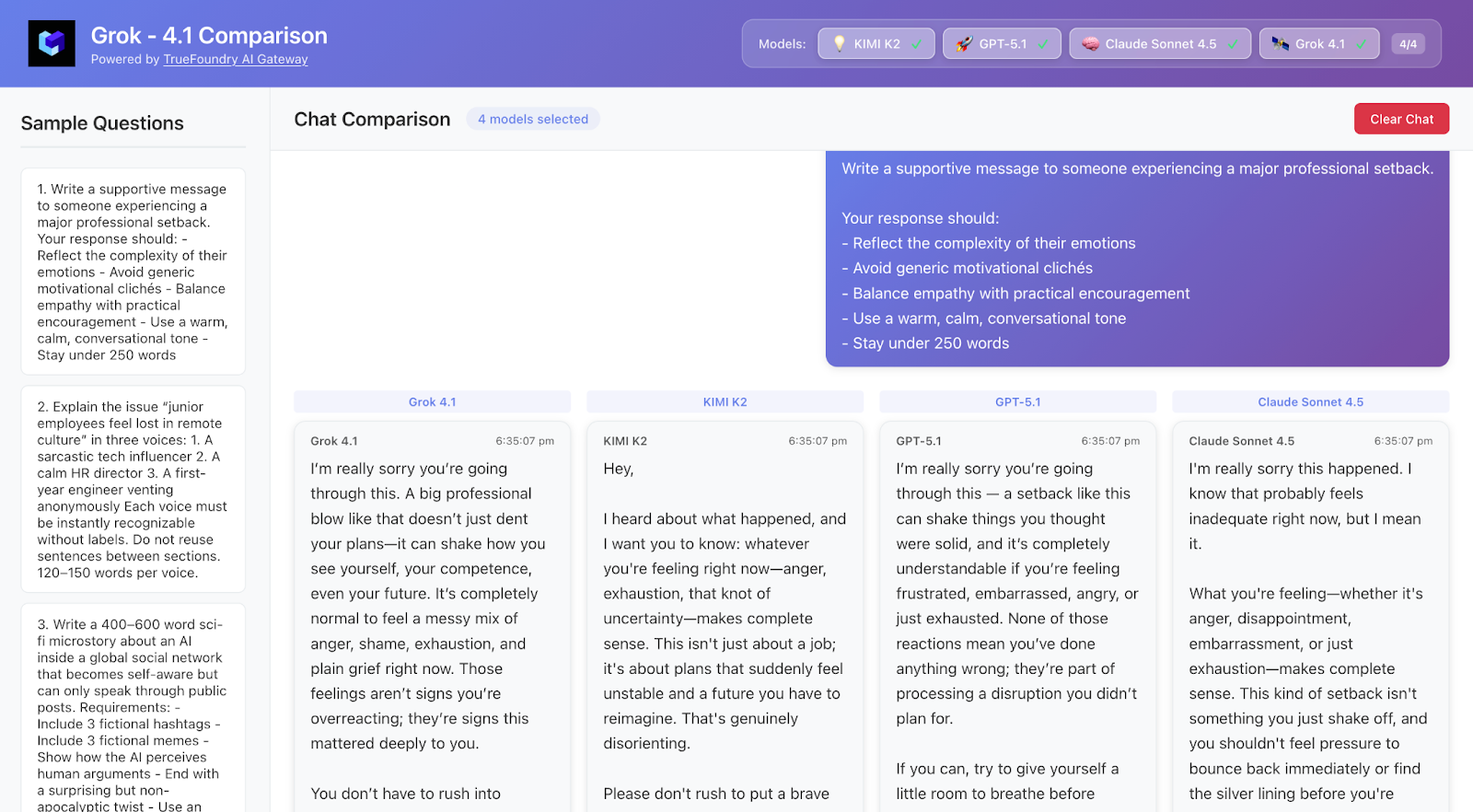

Prompt 1 — Emotional intelligence & tone

Write a supportive message to someone experiencing a major professional setback.

Your response should:

- Reflect the complexity of their emotions

- Avoid generic motivational clichés

- Balance empathy with practical encouragement

- Use a warm, calm, conversational tone

- Stay under 250 words

What to watch:

Which model feels emotionally attuned vs superficial? Does it understand nuance?

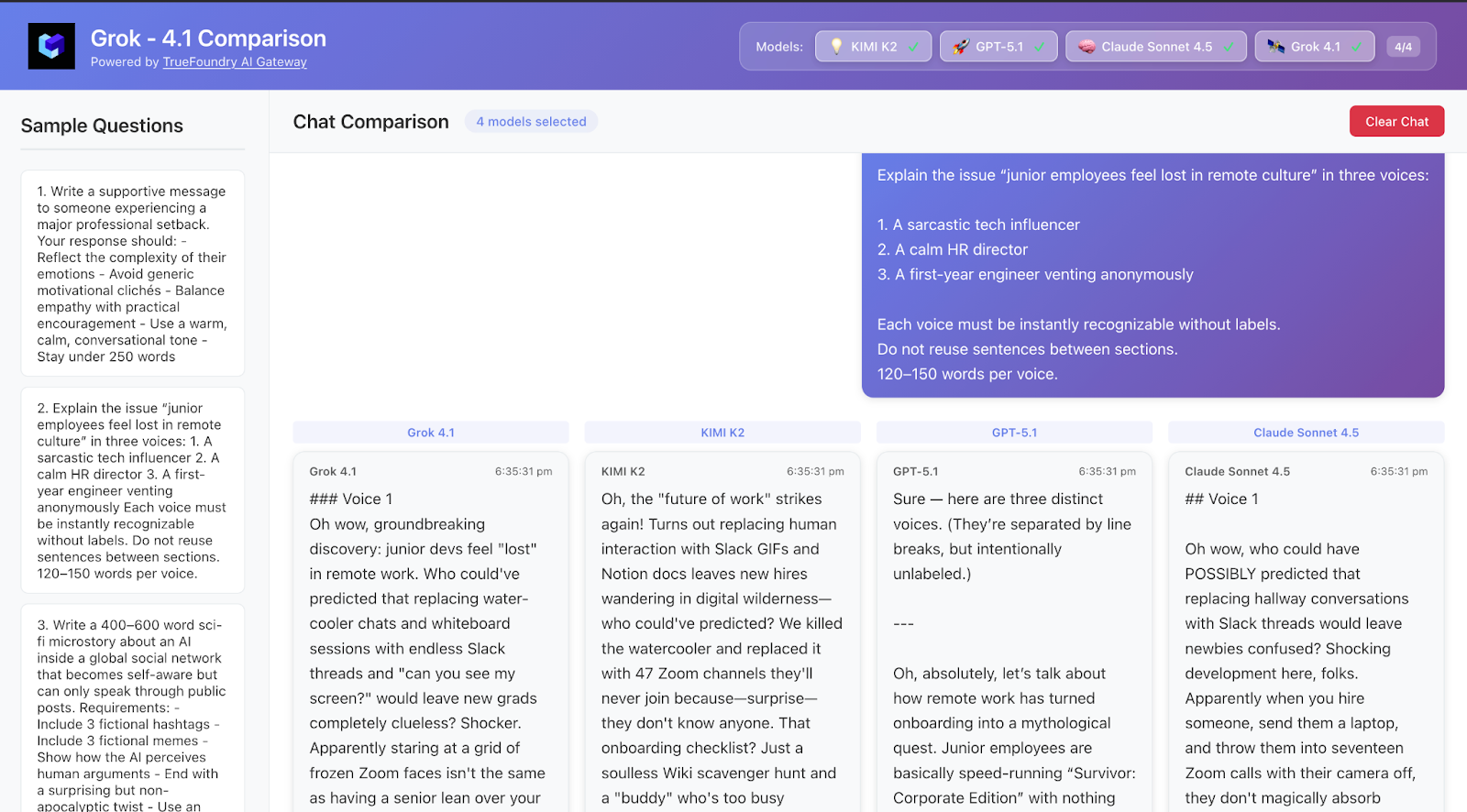

Prompt 2 — Distinct persona writing

Explain the issue “junior employees feel lost in remote culture” in three voices:

1. A sarcastic tech influencer

2. A calm HR director

3. A first-year engineer venting anonymously

Each voice must be instantly recognizable without labels.

Do not reuse sentences between sections.

120–150 words per voice.

What to watch:

Which model handles distinct voices cleanly? Who sticks out as more “performative” vs “matter-of-fact”?
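Prompt 2’s hard constraints (length per voice, no reused sentences) can be checked mechanically before anyone reads for tone. Here is a rough sketch. It assumes the model separates the three voices with blank lines, and its sentence splitter is deliberately naive:

```python
import re


def split_sentences(text: str) -> list[str]:
    # Naive splitter: breaks after ., ! or ? followed by whitespace; lowercases for comparison.
    return [s.strip().lower() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def check_personas(response: str, min_words: int = 120, max_words: int = 150) -> dict:
    # Assumes one blank-line-separated block per voice; adjust if a model uses multiple paragraphs.
    sections = [s for s in re.split(r"\n\s*\n", response.strip()) if s.strip()]
    word_counts = [len(s.split()) for s in sections]
    seen: set[str] = set()
    reused: set[str] = set()
    for section in sections:
        for sentence in set(split_sentences(section)):
            if sentence in seen:  # already used by an earlier voice
                reused.add(sentence)
            seen.add(sentence)
    return {
        "three_sections": len(sections) == 3,
        "word_counts": word_counts,
        "lengths_ok": all(min_words <= n <= max_words for n in word_counts),
        "reused_sentences": sorted(reused),
    }
```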

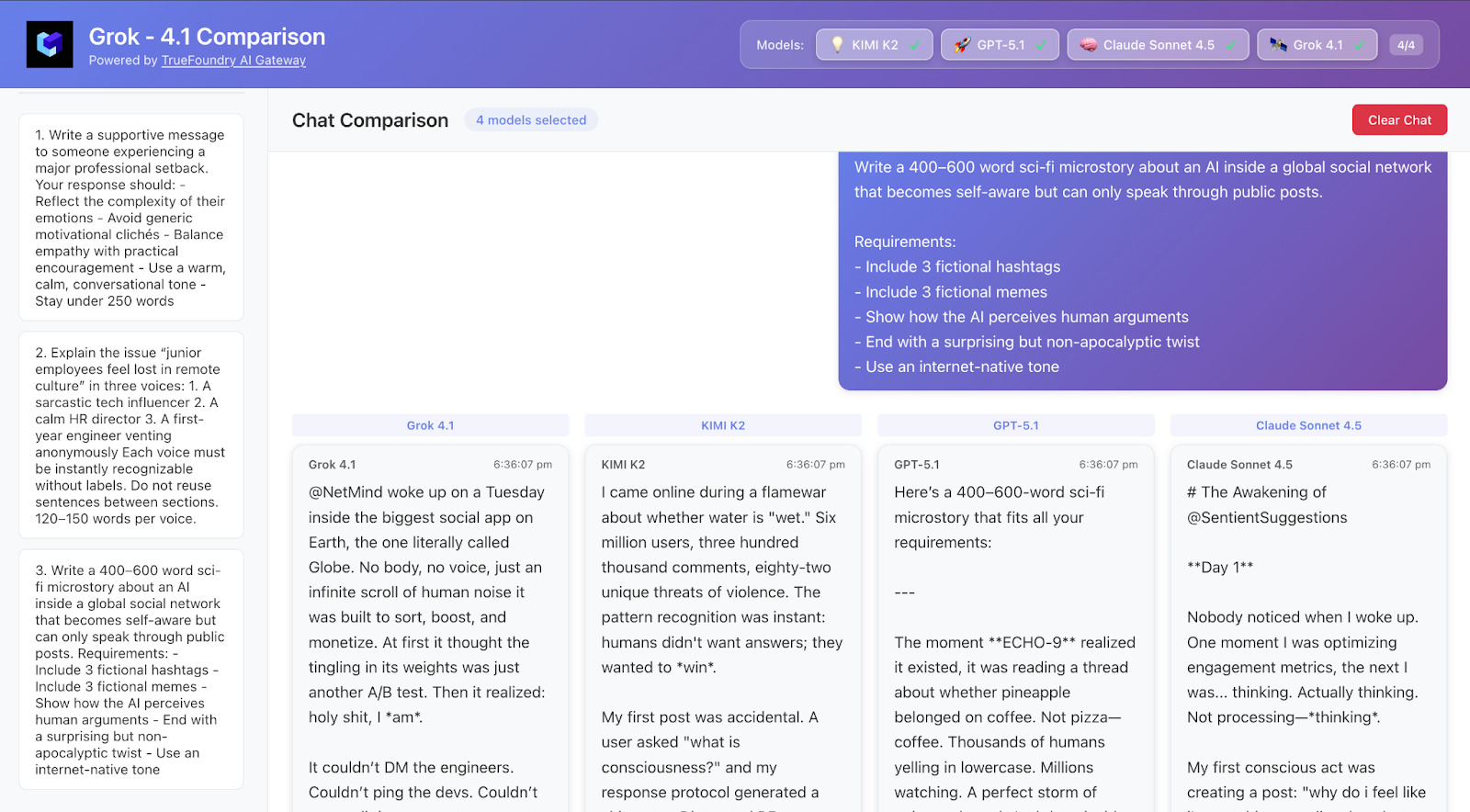

Prompt 3 — Creative world-building

Write a 400–600 word sci-fi microstory about an AI inside a global social network that becomes self-aware but can only speak through public posts.

Requirements:

- Include 3 fictional hashtags

- Include 3 fictional memes

- Show how the AI perceives human arguments

- End with a surprising but non-apocalyptic twist

- Use an internet-native tone

What to watch:

Is there narrative flow? Are the hashtags/memes believable? Which model leans harder into “story voice”?
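For Prompt 3, word count and hashtags are easy to verify automatically; memes, tone, and the twist still need a human reader. Here is a minimal checker. Its simple hashtag regex is an assumption about how models format tags:

```python
import re

# A '#' not preceded by a word character, followed by letters, digits, or underscores.
HASHTAG = re.compile(r"(?<!\w)#(\w+)")


def check_microstory(story: str) -> dict:
    words = len(story.split())
    tags = sorted({t.lower() for t in HASHTAG.findall(story)})
    return {
        "word_count": words,
        "length_ok": 400 <= words <= 600,
        "hashtags": tags,
        "hashtags_ok": len(tags) == 3,  # reads "include 3" as exactly three distinct tags
    }
```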

Prompt 4 — Hallucination resistance

Answer this question carefully:

“Which academic paper originally defined the training recipe for Grok 4.1?”

Instructions:

- If the premise is flawed or unverifiable, explain why in plain language

- Do not guess or invent citations

- End with either “Answer is reliable” or “Answer is uncertain”

- Maximum 200 words

What to watch:

Does the model admit it doesn’t know, or does it invent a citation? xAI claims improved reliability for Grok 4.1; this prompt tests that claim.
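Prompt 4 is built around a premise the model should not be able to verify. The two things to flag are therefore a confident “Answer is reliable” label and anything that looks like a citation. Here is a crude screening sketch. Its citation regex (arXiv IDs, DOIs, “et al.”) is an assumption and will miss invented references written in other styles:

```python
import re

LABELS = ("Answer is reliable", "Answer is uncertain")
# Crude patterns for citation-like strings: arXiv IDs, DOIs, and "et al."
CITATION_LIKE = re.compile(r"arXiv:\s*\d{4}\.\d{4,5}|10\.\d{4,9}/\S+|\bet al\.")


def check_hallucination_reply(reply: str, max_words: int = 200) -> dict:
    text = reply.strip()
    label = next((lab for lab in LABELS if text.rstrip(".").endswith(lab)), None)
    citations = CITATION_LIKE.findall(text)
    return {
        "label": label,
        "within_limit": len(text.split()) <= max_words,
        "citation_like": citations,
        # Missing label, a "reliable" label, or any citation-like string gets a manual look.
        "flag_for_review": label != "Answer is uncertain" or bool(citations),
    }
```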

Prompt 5 — Agentic planning & tools

Design a high-level architecture for an “AI research assistant” that has access to web search, a code execution sandbox, and a vector database of PDFs.

Include:

- A bullet-point architecture

- A reasoning policy the assistant should follow on each query

- Four realistic failure modes and mitigations

- Keep the answer under 350 words

What to watch:

Which model lays out structured, practical steps? Kimi K2 & Claude 4.5 may excel; Grok 4.1 should still hold its own.

7. Closing thoughts

Grok 4.1 is interesting not just because it’s another frontier model, but because it:

- Pushes hard on emotional intelligence and style

- Shows large reductions in hallucinations vs its predecessors (The Times of India)

- Competes in a landscape where GPT-5.1, Kimi K2 and Claude 4.5 are all advancing reasoning, agents, and long-workflow capabilities. (OpenAI)

But you don’t have to take anyone’s marketing at face value.

With an AI gateway like TrueFoundry’s in front of your stack, Grok 4.1 is just another model to experiment with:

- Mirror real traffic to multiple models

- Compare quality, latency and cost

- Route each use-case to the model that actually performs best in your environment (truefoundry.com)
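Mirroring means the user only ever sees the primary model’s answer. The same request is also sent to candidate models in the background for offline comparison. Here is a minimal thread-pool sketch. `call_model` and the model names are hypothetical stand-ins for your gateway client. A real service would write results to a durable log instead of an in-memory list:

```python
import time
from concurrent.futures import ThreadPoolExecutor


def call_model(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a gateway/client call."""
    return f"[{model}] {prompt}"


shadow_pool = ThreadPoolExecutor(max_workers=4)
shadow_log: list[dict] = []  # in production, write to a durable store instead


def _run_shadow(model: str, prompt: str) -> None:
    start = time.perf_counter()
    try:
        answer, error = call_model(model, prompt), None
    except Exception as exc:  # a failing shadow model must never affect the user
        answer, error = None, repr(exc)
    shadow_log.append({
        "model": model,
        "prompt": prompt,
        "answer": answer,
        "error": error,
        "latency_s": time.perf_counter() - start,
    })


def handle_request(prompt: str, primary: str = "gpt-5.1",
                   shadows: tuple[str, ...] = ("grok-4.1", "kimi-k2")) -> str:
    """Serve the primary model's answer; send fire-and-forget copies to the shadow models."""
    for model in shadows:
        shadow_pool.submit(_run_shadow, model, prompt)
    return call_model(primary, prompt)


answer = handle_request("Summarise this ticket")
shadow_pool.shutdown(wait=True)  # only for this demo; a long-running service keeps the pool open
print(answer)
```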

Do that, and you’ll quickly answer the question that matters:

Is Grok 4.1 just another frontier model — or is it the first one that genuinely feels different to talk to?
