10 Best AI Observability Platforms for LLMs in 2026

Deploying an LLM is easy. Understanding what it is actually doing in production is terrifyingly hard. When costs spike, teams struggle to determine whether traffic increased or an agent entered a recursive loop. When quality drops, it is unclear whether prompts regressed, retrieval failed, or a new model version introduced subtle behavior changes. And when compliance questions arise, many teams realize they lack a complete audit trail of what their AI systems actually did.

In 2026, AI observability is no longer just about debugging prompts. It has become a foundational capability for running LLM systems safely and efficiently in production. Teams now rely on observability to control cost, monitor latency, detect hallucinations, enforce governance, and understand agent behavior across increasingly complex workflows.

This guide ranks the 10 best AI observability platforms that help teams shine light into the black box of Generative AI. We compare tools across cost visibility, tracing depth, production readiness, and enterprise fit, so you can choose the right platform for your LLM workloads.

Quick Comparison of Top AI Observability Platforms

Before diving into individual tools, the table below provides a high-level comparison to help teams quickly evaluate which AI observability platforms best match their needs.

1. TrueFoundry: Best Overall AI Observability Platform

TrueFoundry stands out as the most complete AI observability platform in 2026 because it goes beyond visibility and enables direct control over cost, performance, and execution. While most AI observability tools focus on surfacing metrics, TrueFoundry allows teams to act on observability signals in real time.

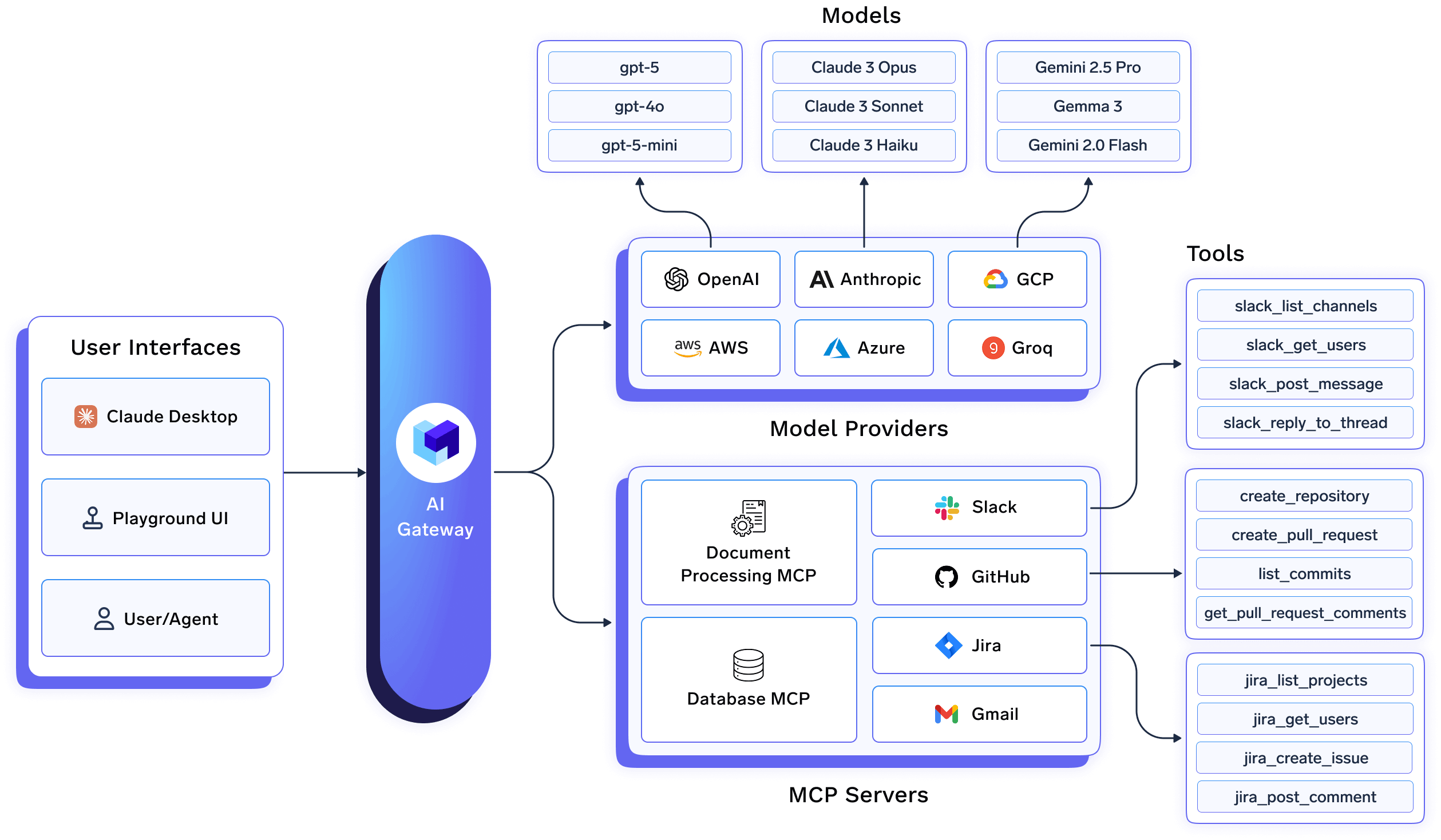

TrueFoundry combines LLM observability with an AI Gateway and infrastructure-level controls. This means teams can not only see where costs, latency, or failures are coming from but also route traffic, enforce budgets, and apply governance policies centrally. Importantly, TrueFoundry deploys directly inside your AWS, GCP, or Azure account, ensuring full data ownership and compliance for enterprise workloads.

This tight coupling of observability and control makes TrueFoundry particularly well-suited for production LLM systems with multiple models, agents, and environments.
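To make "acting on observability signals" concrete, here is a minimal sketch of routing around a model whose recent error rate is too high. It is an illustration only: `ModelHealth`, `choose_model`, the window size, and the 20% threshold are hypothetical names and values, not TrueFoundry's API.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ModelHealth:
    """Rolling window of recent call outcomes for one model (True = success)."""
    window: int = 20
    outcomes: deque = field(default_factory=deque)

    def record(self, ok: bool) -> None:
        self.outcomes.append(ok)
        if len(self.outcomes) > self.window:
            self.outcomes.popleft()  # keep only the most recent calls

    def error_rate(self) -> float:
        if not self.outcomes:
            return 0.0  # no data yet: treat the model as healthy
        return self.outcomes.count(False) / len(self.outcomes)


def choose_model(health: dict, preference: list, max_error_rate: float = 0.2) -> str:
    """Return the first preferred model whose recent error rate is acceptable."""
    for name in preference:
        if health[name].error_rate() <= max_error_rate:
            return name
    # Every model looks unhealthy: fall back to the least-bad one.
    return min(preference, key=lambda n: health[n].error_rate())
```

The design choice worth noting is that the routing decision reads the same signal a dashboard would display, so visibility and control stay consistent with each other.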

Key Features

- Unified LLM Observability Across Models and Agents: Track prompts, completions, token usage, latency, and errors across all LLM providers and agent workflows from a single dashboard.

- Token-Level Cost Tracking and FinOps Guardrails: Attribute LLM spend by team, application, environment, or agent, and enforce budgets, rate limits, and spend caps in real time.

- AI Gateway–Native Observability: Because observability is built into the AI Gateway, every request is captured by default, with no SDK sprawl or inconsistent instrumentation.

- Deep Agent and Tool Tracing: Visualize multi-step agent executions, tool calls, retries, and failures to understand where latency, hallucinations, or loops occur.

- Enterprise-Grade Data Ownership and Compliance: Logs, metrics, and traces are stored in the customer’s own cloud, avoiding black-box SaaS data pipelines and simplifying compliance.

- Hybrid, Private Cloud, and On-Prem Deployment: Run observability close to your workloads while maintaining centralized visibility across regions and environments.
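Token-level cost attribution with a spend cap can be sketched in a few lines. This is a generic illustration, not TrueFoundry's implementation: the model names, the per-1,000-token prices, and the `Usage`, `spend_by_team`, and `over_budget` helpers are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider.
PRICE_PER_1K = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 0.10, "output": 0.40},
}


@dataclass(frozen=True)
class Usage:
    """Token usage for one request, tagged with the team that made it."""
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(u: Usage) -> float:
    price = PRICE_PER_1K[u.model]
    return (u.input_tokens * price["input"] + u.output_tokens * price["output"]) / 1000


def spend_by_team(records) -> dict:
    """Sum per-request cost into a total per team."""
    totals = defaultdict(float)
    for u in records:
        totals[u.team] += request_cost(u)
    return dict(totals)


def over_budget(totals: dict, budgets: dict) -> list:
    """Teams whose spend has reached their cap; teams without a cap are never listed."""
    return sorted(t for t, spent in totals.items() if spent >= budgets.get(t, float("inf")))
```

A gateway that evaluates something like `over_budget` before forwarding each request could throttle or reject calls from the listed teams, which is the difference between reporting spend and enforcing it.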

Pricing

TrueFoundry follows a usage-based pricing model aligned with production AI workloads. Pricing typically depends on:

- Number of LLM requests routed through the platform

- Token volume processed

- Enabled observability and governance features

Because TrueFoundry is deployed in your own cloud, infrastructure costs remain transparent and predictable. Teams can start small and scale observability alongside LLM adoption without upfront lock-in. Exact pricing is available on request and varies based on deployment model and usage patterns.

Best For

TrueFoundry is best suited for:

- Enterprises running multiple LLMs and agents in production

- Platform teams responsible for cost control, reliability, and governance

- Organizations with strict data privacy or residency requirements

- Teams that want to optimize LLM spend, not just observe it

It is especially valuable when AI observability needs to integrate tightly with infrastructure and execution controls.

Customer Reviews

Customers consistently highlight TrueFoundry’s ability to combine observability with real operational control. Common themes from reviews include:

- Clear visibility into LLM costs and usage at scale

- Faster debugging of agent failures and latency issues

- Confidence running AI workloads in regulated environments

TrueFoundry is rated 4.6 / 5 on G2, with strong feedback from platform and ML engineering teams operating production AI systems.

2. Arize AI

Arize AI is a well-known ML observability platform that has expanded into LLM observability. It focuses on tracing, evaluation, and performance monitoring for models in production, making it popular among ML-heavy teams.

Key Features

- LLM tracing and prompt logging

- Offline and online evaluations

- Drift and performance monitoring

- Dataset-based analysis for LLM outputs

Pros

- Strong ML observability foundation

- Good evaluation tooling for model quality

- Suitable for data science–led teams

Cons

- Limited infrastructure-level cost control

- Observability without execution or routing control

- SaaS-first model can be limiting for regulated environments

How TrueFoundry Is Better Than Arize AI

TrueFoundry goes beyond metrics by coupling observability with an AI Gateway. Teams can act on insights (routing traffic, enforcing budgets, and controlling execution) rather than only analyzing traces after the fact.

3. LangSmith

LangSmith, from the team behind LangChain, is built for debugging and tracing LLM applications and integrates most tightly with LangChain-based ones. It is widely used during development to understand prompt flows and agent behavior.

Key Features

- Prompt and chain tracing

- Agent graph visualization

- Experimentation and prompt comparison

- Tight integration with LangChain

Pros

- Excellent developer experience

- Very strong for agent debugging

- Easy to get started

Cons

- Primarily a dev-time tool

- Limited cost governance and infra visibility

- Tied closely to the LangChain ecosystem

How TrueFoundry Is Better Than LangSmith

TrueFoundry is built for production observability. It supports multiple frameworks, providers, and agents while adding cost controls, governance, and deployment flexibility that LangSmith does not target.

4. Weights & Biases

Weights & Biases is a leading platform for ML experiment tracking and model training observability, with growing support for LLM workflows.

Key Features

- Experiment tracking and dashboards

- Model versioning

- Training and evaluation metrics

- Collaboration for ML teams

Pros

- Best-in-class ML experiment tracking

- Mature ecosystem and integrations

- Strong visualization tools

Cons

- LLM observability is secondary

- Limited real-time production tracing for agents

- No native AI cost or traffic control

How TrueFoundry Is Better Than Weights & Biases

TrueFoundry focuses on runtime LLM observability and control, not just experiments. It is designed for production inference, cost governance, and agent execution rather than training workflows.

5. Helicone

Helicone is an API-level observability tool designed primarily for OpenAI and similar providers, offering lightweight logging and cost tracking.

Key Features

- Request and response logging

- Token and cost tracking

- Simple dashboards

- API proxy model

Pros

- Easy to set up

- Good visibility for OpenAI usage

- Developer-friendly

Cons

- Limited multi-provider depth

- No governance or policy enforcement

- Not built for complex agent workflows

How TrueFoundry Is Better Than Helicone

TrueFoundry supports multi-model, multi-agent, enterprise-scale observability with governance and deployment control, whereas Helicone is best suited for lightweight API monitoring.

6. HoneyHive

HoneyHive focuses on prompt management and evaluation workflows for LLM applications, especially during iteration and testing.

Key Features

- Prompt versioning

- Dataset-based evaluation

- Feedback loops for quality

- Experimentation workflows

Pros

- Good for prompt iteration

- Evaluation-centric design

- Simple workflows

Cons

- Limited real-time observability

- Weak cost and infra visibility

- Not designed for large-scale production systems

How TrueFoundry Is Better Than HoneyHive

TrueFoundry covers end-to-end production observability, including cost, latency, agents, and infrastructure, areas HoneyHive intentionally does not address.

7. Fiddler AI

Fiddler AI is an enterprise-focused ML monitoring platform with strong explainability and compliance capabilities.

Key Features

- Model explainability

- Performance monitoring

- Bias and fairness metrics

- Governance reporting

Pros

- Strong compliance story

- Explainability for regulated industries

- Enterprise-grade tooling

Cons

- Primarily designed for traditional ML

- Limited LLM and agent-native workflows

- Slower iteration for GenAI teams

How TrueFoundry Is Better Than Fiddler AI

TrueFoundry is LLM- and agent-native, offering real-time tracing, cost control, and execution governance that better match modern generative AI workloads.

8. Arthur AI

Arthur AI provides monitoring and governance tools focused on risk, bias, and model performance in enterprise AI systems.

Key Features

- Model monitoring and drift detection

- Bias and fairness checks

- Compliance dashboards

- Alerting

Pros

- Strong governance capabilities

- Good for regulated environments

- Risk-focused design

Cons

- Limited LLM-specific observability depth

- Minimal agent-level tracing

- No infrastructure or cost controls

How TrueFoundry Is Better Than Arthur AI

TrueFoundry combines governance with operational control, enabling teams to manage cost, routing, and execution, not just monitor risk after deployment.

9. WhyLabs

WhyLabs specializes in data and model health monitoring, helping teams detect anomalies and drift in production ML systems.

Key Features

- Data drift detection

- Anomaly monitoring

- Model health metrics

- Alerts

Pros

- Strong data monitoring

- Lightweight integration

- Useful for ML pipelines

Cons

- Limited LLM-specific insights

- No agent or prompt tracing

- Not designed for AI cost observability

How TrueFoundry Is Better Than WhyLabs

TrueFoundry is purpose-built for LLM and agent observability, including prompt flows, token usage, and runtime execution, areas where WhyLabs is not focused.

10. DeepEval

DeepEval is an open-source evaluation framework designed to test and score LLM outputs programmatically.

Key Features

- Automated LLM evaluations

- Custom test cases

- Quality scoring

- CI-friendly design

Pros

- Great for testing and benchmarking

- Flexible evaluation logic

- Developer-centric

Cons

- Not a full observability platform

- No real-time monitoring

- No cost, infra, or governance features

How TrueFoundry Is Better Than DeepEval

TrueFoundry provides continuous, production-grade observability, whereas DeepEval focuses on testing correctness rather than operating LLM systems at scale.

How to Choose the Right AI Observability Platform

Use the checklist below to evaluate whether an AI observability platform can support both your current LLM workloads and the complexity you will face as you scale.

- LLM-Native Visibility: Does the platform natively understand prompts, completions, token usage, and agent workflows, rather than treating them as generic logs?

- Token-Level Cost Attribution: Can you track and attribute cost by model, team, application, agent, and environment?

- End-to-End Tracing: Does it provide full request traces across multi-step agents, tool calls, retries, and fallbacks?

- Real-Time Monitoring and Alerting: Can you detect cost spikes, latency regressions, or failures as they happen, not hours later?

- Actionability, Not Just Dashboards: Can teams act on observability signals (rate limits, budgets, routing), or is the platform read-only?

- Multi-Model and Multi-Provider Support: Does it work seamlessly across commercial and open-source LLMs without vendor lock-in?

- Governance and Compliance Readiness: Does it support audit logs, access controls, and policy enforcement for regulated environments?

- Support for Agents and Automation: Can it handle long-running agents, background jobs, CI pipelines, and recursive workflows?

- Deployment Flexibility: Can the platform run in your cloud, VPC, or on-prem, or is it limited to SaaS-only deployments?

- Long-Term Platform Fit: Is this a point tool for debugging or a foundational platform you can rely on as AI becomes mission-critical?

Platforms that meet only a subset of these criteria may work during experimentation. Teams operating LLMs in production should prioritize observability platforms that combine deep visibility with operational control and scale alongside their AI systems.
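When evaluating the real-time alerting criterion, it helps to know what a simple detector looks like. The sketch below flags a latency or cost value that sits well above a rolling baseline. It is one possible approach among many, and the `SpikeDetector` name, window size, threshold, and 5% spread floor are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class SpikeDetector:
    """Flags a value that sits far above the recent rolling baseline."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_samples: int = 5):
        self.history = deque(maxlen=window)  # oldest values drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        is_spike = False
        if len(self.history) >= self.min_samples:
            baseline = mean(self.history)
            # Floor the spread so a very flat baseline does not flag tiny jitter.
            spread = max(pstdev(self.history), 0.05 * baseline)
            is_spike = value > baseline + self.threshold * spread
        # Only fold normal values into the baseline so one spike does not mask the next.
        if not is_spike:
            self.history.append(value)
        return is_spike
```

Feeding the detector per-minute spend or p95 latency would surface a runaway agent within a few samples, rather than at the end of a billing cycle.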

Observability Is the Backbone of Production AI

In 2026, running LLMs without observability is operationally reckless.

Without observability, teams cannot:

- Control runaway costs

- Diagnose latency and failures

- Understand agent behavior

- Enforce governance or compliance

Point tools solve narrow problems such as prompt debugging, evaluations, or metrics, but they break down as systems grow more complex. Enterprise AI systems require end-to-end visibility, attribution, and control, not isolated dashboards.

This is where platforms like TrueFoundry differentiate themselves. By combining AI observability with an AI Gateway and infrastructure-level controls, TrueFoundry enables teams to not only see what’s happening in production, but to govern, optimize, and operate LLM systems confidently at scale.

If you’re running LLMs in production and need observability that extends beyond metrics into real operational control, booking a demo with TrueFoundry is a practical next step.

FAQs

What is an AI observability platform?

An AI observability platform provides visibility into how AI and LLM systems behave in production. This includes tracking prompts, responses, tokens, latency, errors, agent workflows, and cost, helping teams debug issues, control spend, and ensure reliability and compliance.

What is the best AI observability platform?

The best AI observability platform depends on your use case. For production LLM systems, the strongest platforms combine deep LLM-native observability with cost controls, governance, and infrastructure integration, rather than focusing only on prompt debugging or evaluations.

What are the top 5 AI observability platforms?

While rankings vary by use case, commonly adopted AI observability platforms in 2026 include TrueFoundry, Arize AI, LangSmith, Weights & Biases, and Helicone. Each serves different needs, from enterprise-scale operations to developer-focused debugging.

What are the 4 pillars of observability?

A common formulation names four pillars: metrics, events, logs, and traces (often abbreviated MELT). The older "three pillars" framing omits events. In AI systems, these extend to include prompts, completions, token usage, agent steps, and tool executions, making AI observability more complex than traditional software observability.
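To show how a trace extends to agent steps and tool executions, here is a minimal sketch of a span tree with LLM-specific fields, plus a check for one common loop symptom. The `Span` fields and the helper names are hypothetical and do not follow any particular vendor's or standard's schema.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Span:
    """One step in an agent run: an LLM call, a tool call, or a nested agent."""
    name: str
    kind: str                      # e.g. "llm", "tool", "agent"
    latency_ms: float = 0.0
    input_tokens: int = 0
    output_tokens: int = 0
    tool_args: str = ""
    children: list = field(default_factory=list)


def walk(span):
    """Yield the span and all of its descendants, depth first."""
    yield span
    for child in span.children:
        yield from walk(child)


def total_tokens(root) -> int:
    return sum(s.input_tokens + s.output_tokens for s in walk(root))


def repeated_tool_calls(root, limit: int = 3) -> dict:
    """Tool calls repeated with identical arguments at least `limit` times."""
    counts = Counter((s.name, s.tool_args) for s in walk(root) if s.kind == "tool")
    return {key: n for key, n in counts.items() if n >= limit}
```

Identical tool calls repeated within one run are only a heuristic for a looping agent, but they are cheap to compute from traces and hard to spot in flat logs.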
