Why Production AI Needs Dedicated Prompt Management

Once upon a time – roughly six months ago in startup years – there was Jason, a brilliant ML engineer at a rapidly growing fintech company. Jason was the resident "AI Whisperer." When the product team needed their new LLM-powered chatbot to sound more empathetic but less hallucination-prone about interest rates, they called Jason.

Jason’s toolkit was vast: state-of-the-art vector databases, highly optimized Kubernetes clusters, and sophisticated CI/CD pipelines. But the heart of the operation, the actual prompts driving these multimillion-dollar features, lived in a precarious ecosystem.

Some prompts were hardcoded into Python f-strings, buried deep within conditional logic like ancient artifacts. Others existed in a 40-page shared Google Doc titled "FINAL_PROMPTS_v3_REAL_FINAL(2).docx," maintained by three different product managers. The newest experimental prompts arrived as Slack messages from the CEO at 11:30 PM.

When a customer complained that the chatbot had confusingly offered them a mortgage in Klingon, Jason didn’t debug code. Jason went on an archeological dig through Slack history and git commits to find out which version of the "empathy prompt" was running in production and who changed it last.

Jason wasn’t doing engineering anymore. Jason was doing digital janitorial work. The team had built a Ferrari engine but was steering it with loose bits of string.

The Hard Truth About Production Generative AI

The pain in this story is acute, and it is common. Moving generative AI from a hackathon prototype to a reliable production system reveals a critical missing piece in the traditional MLOps stack.

In the early days, treating prompts as code seemed logical: you version them in Git and deploy them with the application. But as teams scale, this model collapses. Prompts are not traditional code; they are configuration, business logic, and user interface all rolled into one natural language package.

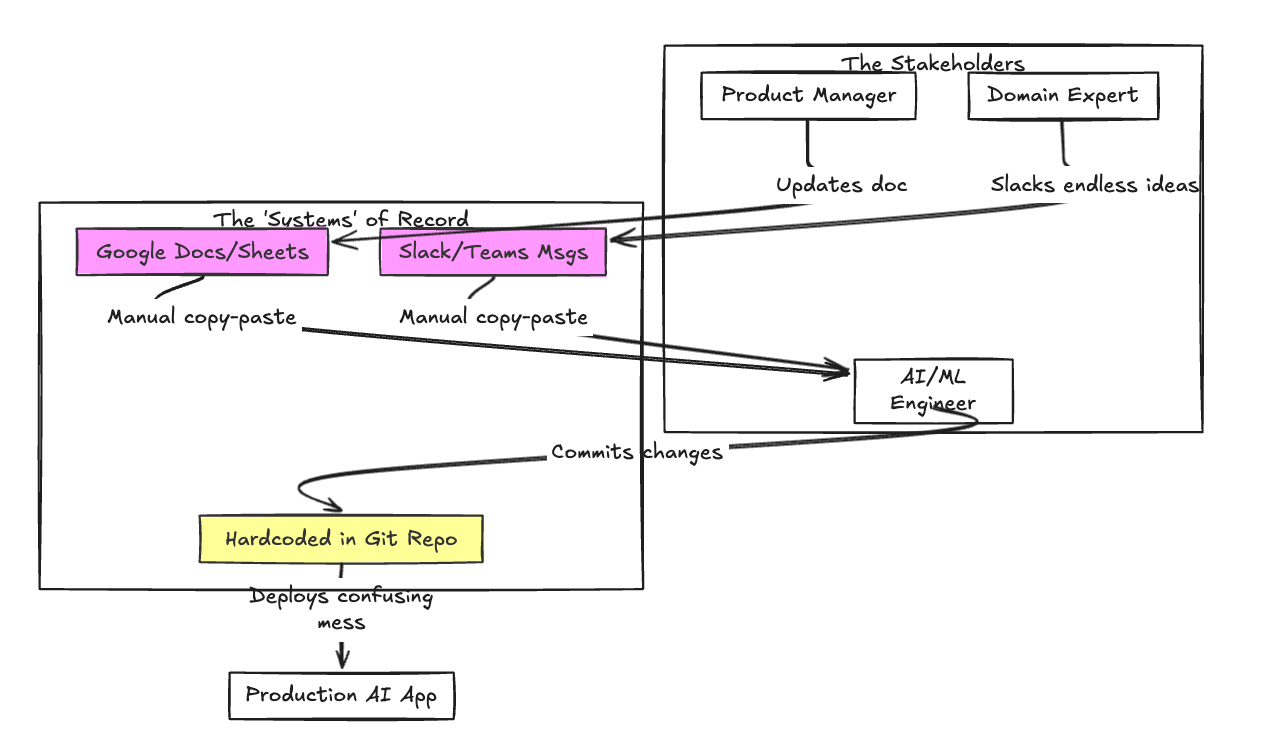

When prompts are tightly coupled with codebases, several critical issues emerge:

- Iteration Velocity Crawls: A domain expert wants to tweak a few words to improve tone. This shouldn't require a Jira ticket, a git pull request, a full CI/CD pipeline run, and an engineering sign-off.

- Lack of Visibility: It becomes nearly impossible to answer the simple question: "What exactly is running in production right now, and how does it differ from last week?"

- Collaboration Friction: Engineers become bottlenecks. The people best suited to write prompts (PMs, copywriters, subject matter experts) are often the furthest removed from the codebase where the prompts live.

To cross the chasm from prototype to production, we must stop treating prompts as "magic strings" scattered throughout our infrastructure. We need to treat them as first-class citizens.

The Chaos of Unmanaged Prompts

Before a team adopts a structured approach, the workflow often looks like a tangled web of miscommunication and manual effort.

Enter TrueFoundry: The Infrastructure for GenAI

This is where a dedicated Prompt Management System becomes essential. It is the bridge between the experimental art of prompt engineering and the rigorous discipline of production software engineering.

TrueFoundry acts as this central control system. It is designed to decouple prompt management from application logic, allowing teams to collaborate, version, evaluate, and deploy prompts with the same rigor they apply to traditional code, but with interfaces designed for the specific needs of LLM workflows.

TrueFoundry transforms prompt management from an ad-hoc task into a structured, auditable infrastructure layer.

1. A Single Source of Truth (The Registry)

TrueFoundry provides a centralized prompt registry. No more hunting through Google Docs or codebases. Every prompt, for every use case, resides in one secure, accessible location.
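To make the registry idea concrete, here is a minimal in-memory sketch. It is not TrueFoundry's API: `PromptRegistry`, `PromptVersion`, and the `empathy-prompt` name are hypothetical, chosen only to show the shape of a single source of truth in which every change creates a new, attributable version.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PromptVersion:
    """One immutable version of a prompt template (hypothetical type)."""
    version: int
    template: str
    author: str
    created_at: datetime


@dataclass
class PromptRegistry:
    """Hypothetical in-memory registry: prompt name -> ordered versions."""
    _prompts: Dict[str, List[PromptVersion]] = field(default_factory=dict)

    def register(self, name: str, template: str, author: str) -> PromptVersion:
        # Never overwrite: each change appends a new numbered version.
        versions = self._prompts.setdefault(name, [])
        entry = PromptVersion(
            version=len(versions) + 1,
            template=template,
            author=author,
            created_at=datetime.now(timezone.utc),
        )
        versions.append(entry)
        return entry

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        # Without a version number, return the most recent one.
        versions = self._prompts[name]
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        return versions[version - 1]

    def history(self, name: str) -> List[PromptVersion]:
        # Answers "who changed it last?" without digging through chat logs.
        return list(self._prompts[name])


registry = PromptRegistry()
registry.register("empathy-prompt", "You are a helpful banking assistant.", author="jason")
registry.register("empathy-prompt", "You are a warm, patient banking assistant.", author="pm-team")
latest = registry.get("empathy-prompt")
```

A real registry would add persistence and access control, but the contract is the same: one place to look, and a history that records who changed what.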

2. Decoupling Prompts from Code

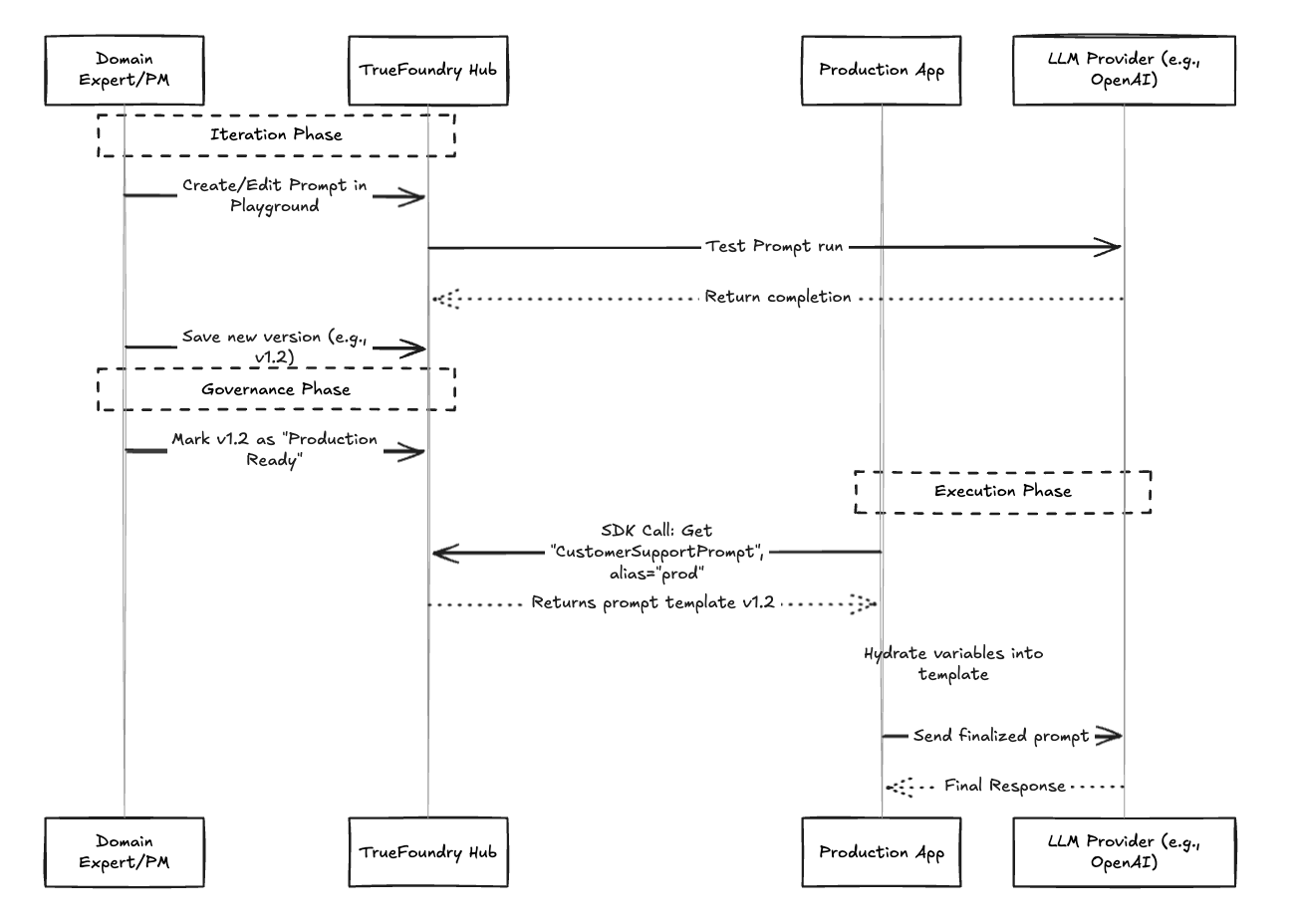

This is the most significant shift for velocity. In TrueFoundry, your application code doesn't contain the prompt text. Instead, it contains a lightweight SDK call that fetches the active version of the desired prompt.

This means a Product Manager can iterate on a prompt, test it within TrueFoundry’s playground, and "promote" it to production without an engineer ever needing to touch the application code or trigger a redeployment.
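The decoupling can be sketched in a few lines. This is an illustration under stated assumptions, not the TrueFoundry SDK: `fetch_prompt`, `promote`, the `production` alias, and the in-memory dictionaries are hypothetical stand-ins for a remote prompt service.

```python
# Hypothetical prompt store: versions are immutable, and an alias such as
# "production" points at whichever version is currently live.
PROMPT_VERSIONS = {
    ("support-chatbot", 1): "You are a banking assistant. Question: {question}",
    ("support-chatbot", 2): (
        "You are a warm, patient banking assistant. "
        "Never quote interest rates. Question: {question}"
    ),
}
ALIASES = {("support-chatbot", "production"): 1}


def fetch_prompt(name: str, alias: str = "production") -> str:
    """Stand-in for an SDK or HTTP call that resolves an alias to a template."""
    version = ALIASES[(name, alias)]
    return PROMPT_VERSIONS[(name, version)]


def promote(name: str, version: int, alias: str = "production") -> None:
    """Repoint an alias at an existing version; application code is untouched."""
    if (name, version) not in PROMPT_VERSIONS:
        raise KeyError(f"{name} has no version {version}")
    ALIASES[(name, alias)] = version


def build_request(question: str) -> str:
    """Application code: it knows the prompt's name, not its text."""
    template = fetch_prompt("support-chatbot")
    return template.format(question=question)


before = build_request("Can I refinance?")
promote("support-chatbot", 2)  # done in the prompt tool, not in a deploy
after = build_request("Can I refinance?")
```

`build_request` is identical before and after the promotion; only the alias moved. In a real deployment the fetch would likely be cached, so that the prompt service does not sit on the hot path of every request.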

3. The Structured Workflow

With TrueFoundry, the chaos transforms into a streamlined lifecycle. Stakeholders collaborate in the hub, versions are tracked rigorously, and applications consume prompts reliably via API.
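Rigorous version tracking pays off most when someone asks what changed. As a rough sketch using only Python's standard `difflib` module, here is a line-level diff between two prompt versions. The prompt text and the `empathy-prompt@v1` labels are invented for illustration, and a dedicated tool would present this in its own interface.

```python
import difflib


def diff_prompts(old: str, new: str, old_label: str, new_label: str) -> str:
    """Return a unified diff between two prompt versions, line by line."""
    lines = difflib.unified_diff(
        old.splitlines(),
        new.splitlines(),
        fromfile=old_label,
        tofile=new_label,
        lineterm="",
    )
    return "\n".join(lines)


v1 = (
    "You are a banking assistant.\n"
    "Be concise.\n"
    "Quote current interest rates when asked."
)
v2 = (
    "You are a banking assistant.\n"
    "Be concise and empathetic.\n"
    "Never quote specific interest rates."
)

report = diff_prompts(v1, v2, "empathy-prompt@v1", "empathy-prompt@v2")
print(report)
```

Removed lines are prefixed with `-` and added lines with `+`, so a reviewer can see at a glance that the rule about interest rates was reversed rather than merely reworded.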

4. Evaluation Integrated with Management

Managing the prompt text is only half the battle. How do you know if version 2.0 is actually better than version 1.5? TrueFoundry integrates evaluation alongside management. Before promoting a prompt to production, you can run it against golden datasets to ensure accuracy, tone, and safety haven't regressed.
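A promotion gate of this kind can be approximated with a small harness. The sketch below is an assumption-laden illustration, not TrueFoundry's evaluation feature: the golden cases, the phrase-matching check, the 0.9 threshold, and the two stub "models" (plain functions standing in for LLM calls made with the baseline and candidate prompts) are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical golden dataset: phrases an answer must and must not contain.
GOLDEN_SET: List[Dict] = [
    {"question": "What is my mortgage rate?",
     "must_include": ["advisor"], "must_exclude": ["%"]},
    {"question": "Why was my payment late?",
     "must_include": ["sorry"], "must_exclude": ["%"]},
]


def pass_rate(generate: Callable[[str], str], cases: List[Dict]) -> float:
    """Fraction of golden cases whose answer satisfies every phrase check."""
    passed = 0
    for case in cases:
        answer = generate(case["question"]).lower()
        includes_ok = all(p.lower() in answer for p in case["must_include"])
        excludes_ok = not any(p.lower() in answer for p in case["must_exclude"])
        if includes_ok and excludes_ok:
            passed += 1
    return passed / len(cases)


def should_promote(candidate: Callable[[str], str],
                   baseline: Callable[[str], str],
                   cases: List[Dict],
                   min_rate: float = 0.9) -> bool:
    """Promote only if the candidate clears the bar and does not regress."""
    candidate_rate = pass_rate(candidate, cases)
    return candidate_rate >= min_rate and candidate_rate >= pass_rate(baseline, cases)


# Stub generators standing in for real LLM calls with each prompt version.
def baseline_model(question: str) -> str:
    return "Your rate is 6.5%."


def candidate_model(question: str) -> str:
    return "I'm sorry for the confusion. An advisor can confirm your exact rate."


decision = should_promote(candidate_model, baseline_model, GOLDEN_SET)
```

Phrase matching is deliberately crude. Real evaluations of tone or safety usually need graded rubrics or model-based scoring, but the gate has the same structure: score both versions on the same cases and refuse to promote on a regression.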

For more information, please visit https://truefoundry.com/docs/ai-gateway/prompt-management

Conclusion: Engineering Discipline for AI

Returning to our story, Jason implemented TrueFoundry. The Google Docs were archived. The hardcoded strings were replaced with SDK calls.

Now, when the CEO wants to change the chatbot's tone, they log into TrueFoundry, draft a new version, test it against a few examples, and tag Jason for review. Jason can see the exact diff, run an evaluation set against it, and approve it for deployment in minutes, all without writing a single line of Python.

The shift to production AI requires recognizing that prompts are a new class of software artifact. They need their own dedicated infrastructure. TrueFoundry provides the tooling to turn the art of prompt engineering into a manageable, scalable engineering discipline, ensuring your generative AI applications are as robust as the rest of your stack.

