Top Agent Gateways 2025

Introduction

Between 2023 and 2024, the primary challenge in AI infrastructure revolved around prompt optimization and efficient access to Large Language Models (LLMs). The solution? LLM Gateways — lightweight middleware that unified API calls, abstracted provider differences, and added basic capabilities like caching, logging, and token tracking.

But 2025 has shifted the conversation entirely. We’ve moved beyond chatbots and one-off completions. Today, organizations are building autonomous agents: systems that plan, reason, and act across tools, APIs, and databases. These agents operate as decision-makers, not just text generators. They browse websites, execute multi-step workflows, invoke external services, and update business-critical state, all without constant human supervision.

This autonomy introduces new risks and requirements: agents can retry on failure, hallucinate commands, or trigger high-stakes actions such as financial refunds or server updates. A basic prompt-to-response loop simply can’t govern this complexity.

This shift has given rise to a new piece of infrastructure, the Agent Gateway, which is rapidly becoming the most critical control point in the AI stack.

What Is an Agent Gateway?

To understand the Agent Gateway, we must first distinguish it from the infrastructure that came before it.

An Agent Gateway is a governance and orchestration layer that sits between LLM-powered agents and the external systems they interact with, such as APIs, databases, cloud tools, and proprietary backends. It acts as the execution firewall for autonomous AI behavior.

Whereas an LLM Gateway routes prompts and responses statelessly, an Agent Gateway manages long-lived, stateful, multi-step tasks. It understands the lifecycle of an agent’s plan, from initial intent through tool selection, execution, validation, and retry to the final result, and it enforces policies throughout that flow.

Core responsibilities of an Agent Gateway include:

- Tool Governance: Verifying which tools an agent can call, under what conditions, and with what parameters.

- State Management: Persisting agent memory, tool outputs, and context over time — enabling workflows that span hours or days.

- Security & Policy Enforcement: Enforcing fine-grained permissions at the action level (e.g., refund limits, access tiers), preventing risky or unauthorized behavior.

- Observability & Auditability: Logging every step, input, and decision for debugging, compliance, and improvement.

- Resilience & Recovery: Handling retries, fallbacks, and safe exits when agents fail or go off-script.

In essence, the Agent Gateway is the prefrontal cortex of your AI architecture: filtering and controlling what the reasoning engine (the LLM) is allowed to execute, and how it interacts with the real world.
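To make the tool-governance and policy-enforcement responsibilities concrete, here is a minimal Python sketch of the checks a gateway might run before any tool executes. The names (`AgentGateway`, `ToolCall`, `issue_refund`) and the $500 refund limit are hypothetical and do not correspond to any product's API; state, logging, and retries are left out for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolCall:
    agent: str
    tool: str
    args: dict[str, Any]


@dataclass
class AgentGateway:
    # Tool governance: which tools each agent is allowed to call.
    allowed_tools: dict[str, set[str]]
    # Policy enforcement: per-tool argument checks returning an error message or None.
    policies: dict[str, Callable[[dict[str, Any]], str | None]] = field(default_factory=dict)
    # The real tool implementations, keyed by tool name.
    tools: dict[str, Callable[..., Any]] = field(default_factory=dict)

    def execute(self, call: ToolCall) -> dict[str, Any]:
        # 1. Is this agent allowed to use this tool at all?
        if call.tool not in self.allowed_tools.get(call.agent, set()):
            return {"ok": False, "error": f"{call.agent} may not call {call.tool}"}
        # 2. Do the arguments satisfy the tool's policy?
        check = self.policies.get(call.tool)
        violation = check(call.args) if check else None
        if violation:
            return {"ok": False, "error": violation}
        # 3. Only now does the side effect happen.
        return {"ok": True, "result": self.tools[call.tool](**call.args)}


def refund_limit(args: dict[str, Any]) -> str | None:
    # Hypothetical business rule: refunds above $500 are blocked at the gateway.
    return "refund exceeds the $500 limit" if args["amount"] > 500 else None


gateway = AgentGateway(
    allowed_tools={"support-agent": {"issue_refund"}},
    policies={"issue_refund": refund_limit},
    tools={"issue_refund": lambda order_id, amount: f"refunded {amount} on {order_id}"},
)
```

The ordering is the important design choice: identity and policy checks run before the tool, so a hallucinated or oversized call is rejected without ever touching the backend.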

Key Features to Look for When Choosing an Agent Gateway

Selecting the right agent gateway is critical to scaling AI systems securely, efficiently, and with minimal friction. The gateway acts as the intermediary between your agents and the external world — orchestrating requests, enforcing policies, logging activity, and managing access. Here are the most important capabilities to look for when evaluating an agent gateway:

1. Multi-Model Routing and Provider Abstraction

Your gateway should support seamless routing across multiple LLM providers (OpenAI, Anthropic, Mistral, etc.) and internal models. A strong gateway abstracts provider-specific APIs and offers a unified interface for all model calls, making your stack flexible and future-proof.
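A minimal sketch of provider abstraction, assuming a hypothetical `provider/model` naming convention: agent code calls a single `complete()` function, and the gateway dispatches to a per-provider adapter. The adapters below are stubs; a real one would call the provider's SDK and translate its response into the shared shape.

```python
from __future__ import annotations

from typing import Callable

# Shared response shape the agent code relies on: {"provider", "model", "text"}.
Completion = dict


def openai_adapter(model: str, prompt: str) -> Completion:
    # Stub: a real adapter would call the OpenAI SDK and map its response.
    return {"provider": "openai", "model": model, "text": f"[openai:{model}] {prompt}"}


def anthropic_adapter(model: str, prompt: str) -> Completion:
    # Stub: a real adapter would call the Anthropic SDK and map its response.
    return {"provider": "anthropic", "model": model, "text": f"[anthropic:{model}] {prompt}"}


ADAPTERS: dict[str, Callable[[str, str], Completion]] = {
    "openai": openai_adapter,
    "anthropic": anthropic_adapter,
}


def complete(model_id: str, prompt: str) -> Completion:
    """Route a 'provider/model' identifier to the matching adapter."""
    provider, sep, model = model_id.partition("/")
    if not sep or provider not in ADAPTERS:
        raise ValueError(f"unknown provider in model id: {model_id!r}")
    return ADAPTERS[provider](model, prompt)
```

Swapping providers then means changing a string such as `"anthropic/claude-x"` (a placeholder model name), not the agent's code.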

2. Token-Level Observability and Cost Tracking

Hosted LLM APIs are typically priced per input and output token. A good gateway should give fine-grained visibility into token usage per call, user, model, or team. This enables accurate cost attribution and helps avoid surprise bills.
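Once the gateway records token counts per call, cost attribution is simple arithmetic: cost = input tokens × input price + output tokens × output price. The sketch below assumes made-up model names and per-million-token prices; substitute your provider's current price sheet.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens; real prices differ by provider and change often.
PRICES = {
    "model-a": {"input": 2.50, "output": 10.00},
    "model-b": {"input": 0.15, "output": 0.60},
}


def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


class CostLedger:
    """Accumulates spend per team so every call can be attributed."""

    def __init__(self) -> None:
        self.by_team = defaultdict(float)

    def record(self, team: str, model: str, input_tokens: int, output_tokens: int) -> float:
        cost = call_cost(model, input_tokens, output_tokens)
        self.by_team[team] += cost
        return cost


ledger = CostLedger()
ledger.record("support", "model-a", 1_000, 500)    # 0.0075 USD
ledger.record("support", "model-b", 2_000, 1_000)  # 0.0009 USD
```

Keying the ledger by team is arbitrary; the same pattern works per user, per agent, or per virtual key.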

3. Programmable Guardrails and Policies

The gateway should allow you to enforce guardrails like rate limits, content filters, access restrictions, and input/output validations. These programmable policies are essential to maintaining safe, compliant, and controlled AI interactions.
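Rate limits are among the simplest guardrails to sketch. Below is a classic token-bucket limiter keyed per agent; the rate and burst values are arbitrary assumptions, and the `clock` parameter exists only so the behavior can be tested without real waiting.

```python
from __future__ import annotations

import time
from typing import Callable


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket's capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


_buckets: dict[str, TokenBucket] = {}


def check_rate_limit(agent_id: str) -> bool:
    # Hypothetical limits: 5 requests/second per agent, bursts of 10.
    if agent_id not in _buckets:
        _buckets[agent_id] = TokenBucket(rate=5, capacity=10)
    return _buckets[agent_id].allow()
```

A token bucket tolerates short bursts (an agent firing several tool calls at once) while still bounding the sustained rate, which suits agent traffic better than a fixed per-second cap.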

4. Authentication and Authorization Controls

Robust identity management (via API tokens, OAuth, RBAC) is non-negotiable. The gateway must verify who is calling the model and what they’re allowed to do — especially in multi-tenant or enterprise setups.
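A minimal sketch of the two checks in order: authentication (who is calling?) followed by role-based authorization (may this role perform this action?). The API keys, roles, and resource names are hypothetical; a production gateway would verify OAuth/OIDC tokens or hashed keys rather than a plaintext dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    caller_id: str
    role: str


# Hypothetical credential store; never keep real keys in plaintext like this.
API_KEYS = {
    "sk-finance-demo": Principal("finance-bot", "finance-agent"),
    "sk-support-demo": Principal("support-bot", "support-agent"),
}

# Role -> allowed (resource, action) pairs.
ROLE_PERMISSIONS = {
    "finance-agent": {("ledger_db", "read"), ("ledger_db", "write")},
    "support-agent": {("ledger_db", "read")},
}


def check_access(api_key: str, resource: str, action: str) -> int:
    """Return an HTTP-style status: 401 unauthenticated, 403 forbidden, 200 allowed."""
    principal = API_KEYS.get(api_key)
    if principal is None:
        return 401
    if (resource, action) not in ROLE_PERMISSIONS.get(principal.role, set()):
        return 403
    return 200
```

Keeping permissions on roles rather than individual callers means onboarding a new agent is a matter of assigning it a role.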

5. Centralized Logging and Auditing

Every agent action — from a tool invocation to a model query — should be logged in a structured, searchable way. This enables debugging, monitoring, and post-mortem analysis, and is often required for compliance or governance reviews.
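One common way to make logs structured and searchable is to emit one JSON object per event (JSON Lines). The sketch below assumes a hypothetical field layout (`session_id`, `step`, `kind`); the point is that every agent action becomes a record you can filter by field instead of grepping free text.

```python
from __future__ import annotations

import json
import time
import uuid


def audit_event(session_id: str, step: int, kind: str, **fields) -> str:
    """Serialize one agent action as a single JSON line."""
    record = {
        "event_id": str(uuid.uuid4()),
        "ts": time.time(),
        "session_id": session_id,
        "step": step,
        "kind": kind,  # e.g. "model_call" or "tool_call"
        **fields,
    }
    return json.dumps(record, sort_keys=True)


def search(lines, **criteria) -> list[dict]:
    """Return the parsed records whose fields equal every given criterion."""
    records = (json.loads(line) for line in lines)
    return [r for r in records if all(r.get(k) == v for k, v in criteria.items())]


log = [
    audit_event("s-42", 1, "model_call", model="model-a", output_tokens=120),
    audit_event("s-42", 2, "tool_call", tool="issue_refund", status="error", http_status=500),
]
```

In practice the lines would be appended to a file or shipped to a log store, where the same field-based filtering becomes a query.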

6. Caching and Efficiency Optimizations

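Look for features like semantic caching, batch inference, and model fallback. Semantic caching avoids paying twice for near-duplicate queries, batching improves throughput, and fallback keeps requests flowing when a provider degrades. Together they help balance latency, cost, and load across systems.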
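Semantic caching returns a stored answer when a new prompt is close enough in meaning to an earlier one. In the sketch below a bag-of-words vector stands in for a real embedding model, and the 0.8 similarity threshold is an arbitrary assumption; a production cache would use learned embeddings and a vector index, but the lookup follows the same pattern.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: a bag-of-words count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries = []  # list of (vector, response) pairs

    def get(self, prompt: str):
        vec = embed(prompt)
        scored = [(cosine(vec, v), resp) for v, resp in self.entries]
        score, response = max(scored, default=(0.0, None), key=lambda s: s[0])
        return response if score >= self.threshold else None

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))


cache = SemanticCache()
cache.put("Summarize the Q3 report", "Q3 summary ...")
```

The threshold is the key tuning knob: too low and unrelated prompts get stale answers, too high and the cache rarely hits.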

7. Deployment Flexibility (Self-hosted, Cloud, Hybrid)

Enterprises often require control over where and how the gateway runs — on-prem, cloud, or hybrid. Choose a gateway that supports your infrastructure and data residency needs without vendor lock-in.

8. Developer Experience & Extensibility

The gateway should offer SDKs, observability dashboards, admin APIs, and a smooth developer onboarding experience. Support for plugins, webhooks, or integration with orchestration frameworks is a major plus.

Top Agent Gateway Platforms (2025)

The market for Agent Gateways has matured rapidly. In early 2024, the landscape was dominated by simple proxy tools that merely forwarded API requests. By 2025, the ecosystem has split into three specialized categories: Enterprise Control Planes that govern internal tooling, Developer Utilities that offer raw flexibility for engineers, and Infrastructure Ecosystems that bake agent capabilities directly into the edge network.

The following five platforms represent the best-in-class solutions for different architectural needs. Whether you are a solo developer building a consumer agent or an enterprise architect governing thousands of internal autonomous workflows, one of these gateways will fit your stack.

1. TrueFoundry

TrueFoundry has positioned itself as the heavyweight champion for enterprise AI governance. It addresses the "M×N integration problem", where every new agent requires individual connections to tools, databases, and APIs, by functioning as a centralized traffic controller for agentic workflows. It is specifically engineered to eliminate the "security blind spots" that arise when developers scatter API keys and credentials across disparate agent codebases.

Key Differentiator: The Centralized MCP Registry. TrueFoundry tackles the chaos of unmanaged tools with a Centralized Registry & Discovery system. Instead of hard-coding tool integrations, administrators define a catalog of approved MCP (Model Context Protocol) servers and tools in one place.

Agents simply point to the gateway to discover and utilize these vetted tools. This creates a "single MCP endpoint" architecture, drastically reducing configuration overhead and preventing "shadow MCP servers" from popping up within the organization.
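The registry idea can be sketched as an admin-curated catalog that agents query instead of hard-coding tool endpoints. This is a hypothetical illustration of the pattern, not TrueFoundry's API; the tool names, team names, and URLs are made up.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToolEntry:
    name: str
    server_url: str  # location of the approved MCP server (hypothetical URLs below)
    description: str
    allowed_teams: set[str] = field(default_factory=set)


class ToolRegistry:
    """Admin-curated catalog; agents discover tools only through this one endpoint."""

    def __init__(self) -> None:
        self._tools: dict[str, ToolEntry] = {}

    def approve(self, entry: ToolEntry) -> None:
        self._tools[entry.name] = entry

    def revoke(self, name: str) -> None:
        self._tools.pop(name, None)

    def discover(self, team: str) -> list[str]:
        # Agents see only the tools their team has been granted.
        return sorted(n for n, e in self._tools.items() if team in e.allowed_teams)

    def resolve(self, team: str, name: str) -> str:
        entry = self._tools.get(name)
        if entry is None or team not in entry.allowed_teams:
            raise PermissionError(f"tool {name!r} is not approved for team {team!r}")
        return entry.server_url


registry = ToolRegistry()
registry.approve(ToolEntry("jira_search", "https://mcp.internal.example/jira", "Search Jira", {"support"}))
```

Because revocation happens in one place, removing a tool from the catalog cuts off every agent at once, which is what keeps "shadow" servers from accumulating.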

Agentic Features:

- Unified AuthN & AuthZ: The gateway handles authentication globally. It supports modern standards like OAuth2 and OIDC, ensuring that agents can only access the specific tools they are authorized for. This allows for granular Role-Based Access Control (RBAC), where a "Finance Agent" might have write access to a database while a "Support Agent" has read-only permissions.

- Protocol Translation & Composition: Recognizing that AI tools speak different languages, TrueFoundry’s gateway can translate an agent’s JSON-RPC call (the message format MCP uses) into a REST API call or Lambda invocation. It can even compose multiple APIs into a single agent-facing endpoint.

- Resiliency & Failover: To ensure robust execution, the platform includes built-in retries and fallback logic. If a specific model endpoint or tool replica fails, the gateway automatically reroutes the traffic to a backup, preventing fragile agent workflows from breaking during production outages.

- Agent Playground: Beyond simple routing, TrueFoundry offers an interactive Playground where developers can prototype prompts and orchestrate multiple tools via the gateway before deploying them to production.
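Protocol translation can be illustrated with MCP's JSON-RPC `tools/call` request, whose `params` carry a tool `name` and its `arguments`. The sketch maps that request onto an existing REST endpoint and wraps the HTTP response back into a JSON-RPC result. The route table and URLs are hypothetical, and the code only builds a request description rather than sending it.

```python
import json
from urllib.parse import urlencode

# Hypothetical mapping from MCP tool names to existing REST endpoints.
ROUTES = {
    "get_weather": ("GET", "https://api.example.com/v1/weather"),
    "create_ticket": ("POST", "https://api.example.com/v1/tickets"),
}


def jsonrpc_to_rest(message: dict) -> dict:
    """Translate a JSON-RPC `tools/call` request into an HTTP request description."""
    if message.get("method") != "tools/call":
        raise ValueError("only tools/call is handled in this sketch")
    params = message["params"]
    method, url = ROUTES[params["name"]]
    args = params.get("arguments", {})
    if method == "GET":
        # GET endpoints receive the tool arguments as query parameters.
        return {"method": method, "url": f"{url}?{urlencode(args)}", "body": None}
    # Other methods receive them as a JSON body.
    return {"method": method, "url": url, "body": json.dumps(args)}


def rest_to_jsonrpc(request_id, status: int, body: str) -> dict:
    """Wrap the REST response as a JSON-RPC result the agent can consume."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": body}], "isError": status >= 400},
    }


request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {"name": "get_weather", "arguments": {"city": "Tokyo"}},
}
```

The agent keeps speaking one protocol while the backend keeps its existing REST contract; only the gateway knows about both.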

Best For: Enterprises and regulated industries (Finance, Healthcare) that require strict governance (SOC 2, HIPAA compliance) and need to manage complex multi-agent systems without compromising on security or observability.

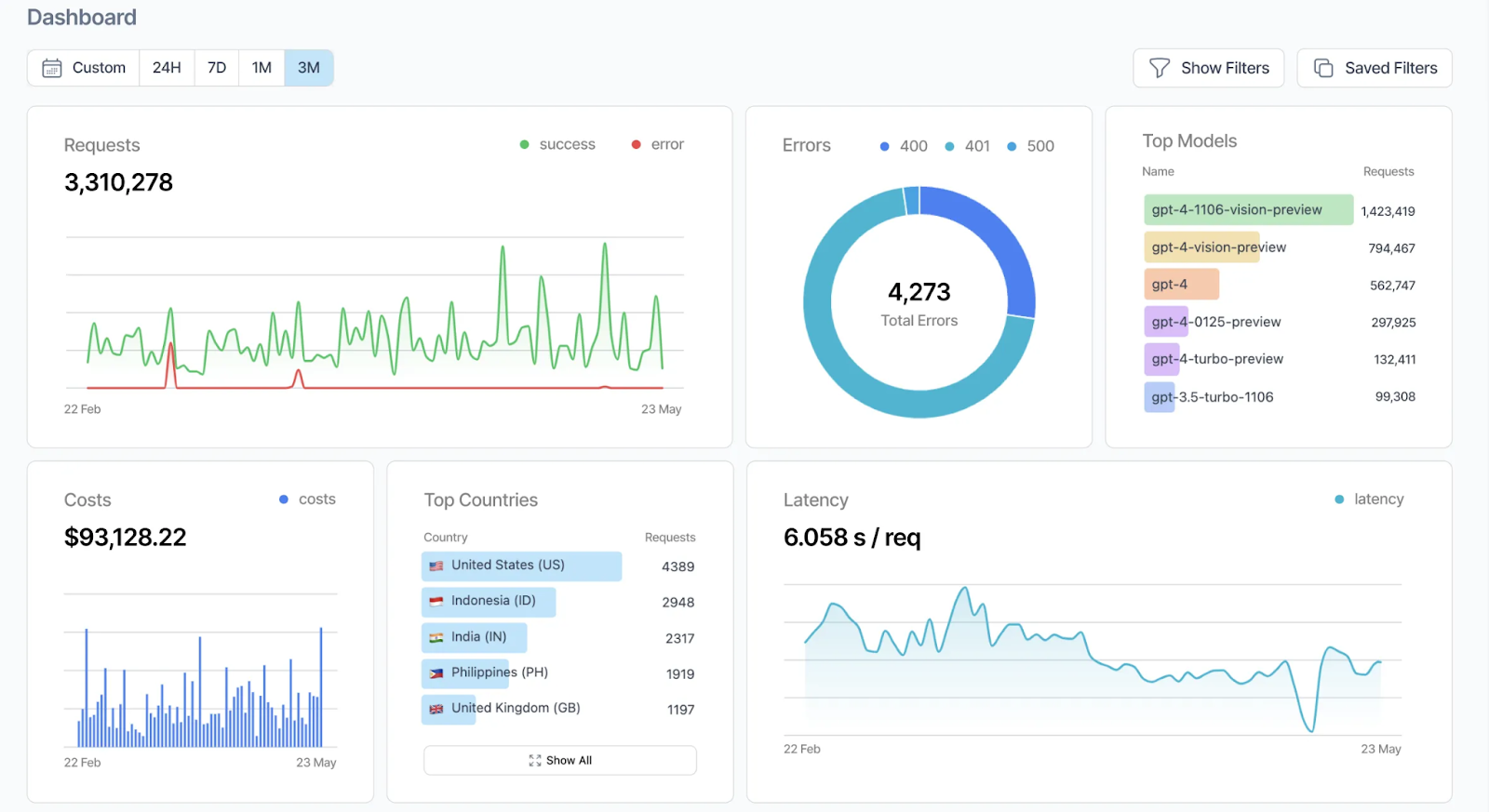

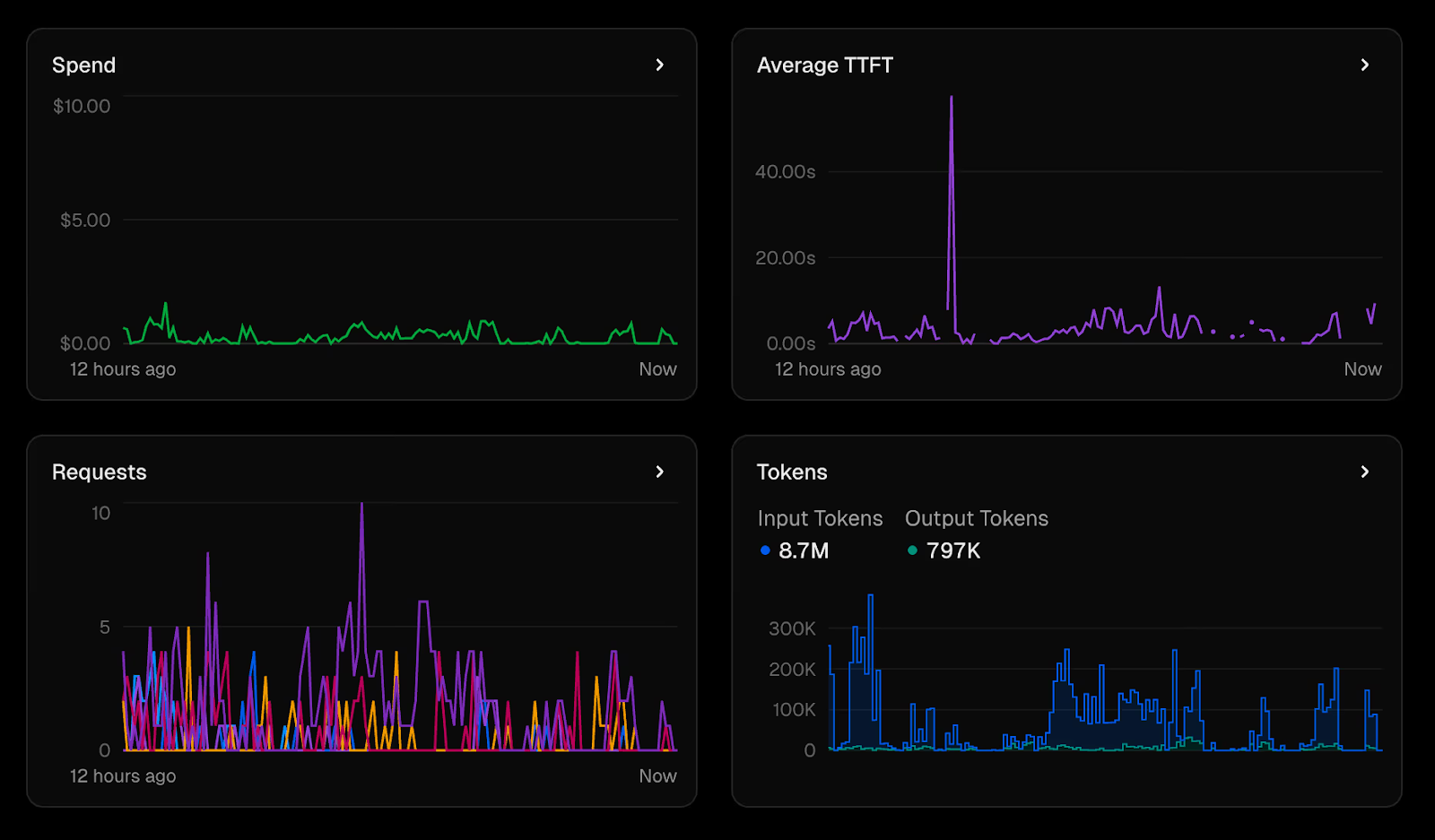

2. LiteLLM

LiteLLM is the Swiss Army Knife of the AI engineering world. Starting as a Python library to normalize API calls, it has grown into a highly performant Gateway Proxy Server. Its philosophy is "flexibility above all."

Key Differentiator: The Standardization Layer. Agents are notoriously fragile when you switch models. A prompt that works with GPT-4 might break with Claude 3.5 because the two format tool calls differently. LiteLLM solves this by normalizing inputs and outputs into the standard OpenAI format. This allows you to write your agent's tool-calling logic once and swap the underlying brain (model) without rewriting your code.
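To see what normalizing tool calls involves, compare the two wire formats: Anthropic's Messages API returns tool calls as `tool_use` content blocks with an `input` object, while OpenAI's Chat Completions API returns a `tool_calls` list whose `function.arguments` is a JSON-encoded string. The sketch below maps the former onto the latter. It illustrates the idea only; it is not LiteLLM's implementation and ignores other block types and streaming.

```python
import json


def anthropic_to_openai_message(response: dict) -> dict:
    """Map an Anthropic Messages-style response onto an OpenAI-style assistant message."""
    text_parts = []
    tool_calls = []
    for block in response.get("content", []):
        if block["type"] == "text":
            text_parts.append(block["text"])
        elif block["type"] == "tool_use":
            tool_calls.append({
                "id": block["id"],
                "type": "function",
                # OpenAI-style tool calls carry arguments as a JSON-encoded string.
                "function": {"name": block["name"], "arguments": json.dumps(block["input"])},
            })
    message = {"role": "assistant", "content": "".join(text_parts) or None}
    if tool_calls:
        message["tool_calls"] = tool_calls
    return message


anthropic_response = {
    "content": [
        {"type": "text", "text": "Checking the weather."},
        {"type": "tool_use", "id": "toolu_1", "name": "get_weather", "input": {"city": "Paris"}},
    ]
}
```

Agent code written against the normalized shape never has to know which provider produced the tool call.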

Agentic Features:

- Virtual Keys: You can generate virtual API keys for each agent. This allows you to track spend for "Sales Agent" vs "Support Agent" separately, even if they use the same underlying Anthropic account.

- Reliability: Exceptional retry and fallback logic. If an agent fails a step because Azure OpenAI is experiencing high latency, LiteLLM can seamlessly route that specific thought-step to AWS Bedrock without the agent realizing the switch happened.

- Self-Hosted: Because it’s open-source, you can run it inside your own VPC, ensuring that agent memories and tool data never leave your infrastructure.
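The retry-then-fallback behavior described under Reliability can be sketched as follows: retry each provider a few times with exponential backoff, then move on to the next. The provider names, retry counts, and `ProviderError` type are assumptions for illustration; LiteLLM has its own configuration for retries and fallbacks, which this code does not reproduce.

```python
import time


class ProviderError(Exception):
    """Hypothetical error type for a failed or timed-out provider call."""


def call_with_fallback(prompt, providers, retries_per_provider=2, backoff_s=0.5, sleep=time.sleep):
    """Try each (name, call) pair in order, retrying with exponential backoff before falling back."""
    errors = []
    for name, call in providers:
        for attempt in range(retries_per_provider):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt + 1 < retries_per_provider:
                    sleep(backoff_s * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    raise ProviderError("all providers failed: " + "; ".join(errors))
```

Because the agent only sees the returned result, the switch from one provider to the next is invisible to its reasoning loop.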

Best For: Engineering teams who want full control, are comfortable with self-hosting, and prioritize avoiding vendor lock-in.

3. Helicone

Helicone is technically an observability platform that acts as a gateway. In the world of agents, where systems are non-deterministic "black boxes," observability is not a luxury; it is the only way to debug. This makes Helicone a strong choice for teams that treat debugging and visibility as first-class requirements.

Key Differentiator: Session Replay & Experimentation. Helicone excels at visualizing the "Thought Chain." When an agent fails to complete a task, Helicone allows you to open that specific session and see exactly where the logic broke down. Was it a bad prompt? Did the tool return a 500 error? Did the model ignore the tool output? Its Session Replay feature lets you replay the exact sequence of events that led to a failure, allowing you to test fixes against real-world data without affecting live users.
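Session replay comes down to two operations on logged events: reconstruct one session in step order, then re-run the recorded inputs against a candidate fix. The event schema (`session_id`, `step`, `kind`, `input`, `output`, `status`) and the `candidate` function are hypothetical; this is not Helicone's data model.

```python
from __future__ import annotations

from operator import itemgetter


def replay(events: list[dict], session_id: str) -> list[dict]:
    """Return one session's events in the order the agent executed them."""
    return sorted((e for e in events if e["session_id"] == session_id), key=itemgetter("step"))


def first_failure(events: list[dict], session_id: str) -> dict | None:
    """Find the earliest step in the session that recorded an error, if any."""
    return next((e for e in replay(events, session_id) if e.get("status") == "error"), None)


def rerun(events: list[dict], session_id: str, candidate) -> list[tuple]:
    """Feed each recorded model input to `candidate` and pair old vs. new outputs."""
    return [
        (e["step"], e["output"], candidate(e["input"]))
        for e in replay(events, session_id)
        if e["kind"] == "model_call"
    ]


events = [
    {"session_id": "s1", "step": 2, "kind": "tool_call", "status": "error", "output": "HTTP 500"},
    {"session_id": "s1", "step": 1, "kind": "model_call", "status": "ok",
     "input": "Refund order A1", "output": "call issue_refund"},
    {"session_id": "s2", "step": 1, "kind": "model_call", "status": "ok",
     "input": "Hi", "output": "Hello"},
]
```

Here `candidate` would wrap a revised prompt or a different model; comparing its outputs with the recorded ones shows whether the fix changes behavior at the failing step, without sending traffic to live users.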

Agentic Features:

- Prompt Versioning: Agents are defined by their system prompts. Helicone tracks versions of these prompts alongside performance metrics, allowing you to correlate a drop in success rate with a specific change in the agent's instructions.

- Caching: Helicone offers highly granular caching. You can configure semantic cache rules so that if two users ask different agents to "summarize the Q3 report," the second agent gets the result instantly from the cache, skipping the expensive document-processing step.

- User Tracking: You can tag requests with user IDs or session IDs, allowing you to reconstruct the entire "memory" of a user's interaction with an agent over time. This is crucial for debugging long-running agent sessions where context might be lost across multiple turns.
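Correlating success rate with prompt versions is a group-by over tagged runs. This sketch assumes each run is logged with a `prompt_version` tag and a boolean `success` outcome (both hypothetical field names) and flags a new version whose success rate drops by more than a chosen margin.

```python
from collections import defaultdict


def success_rate_by_version(runs) -> dict:
    """Map each prompt version to the fraction of its runs that succeeded."""
    counts = defaultdict(lambda: [0, 0])  # version -> [successes, attempts]
    for run in runs:
        stats = counts[run["prompt_version"]]
        stats[0] += int(run["success"])
        stats[1] += 1
    return {version: ok / total for version, (ok, total) in counts.items()}


def regressed(rates: dict, old: str, new: str, min_drop: float = 0.1) -> bool:
    """True if `new` succeeds noticeably less often than `old`."""
    return rates[old] - rates[new] >= min_drop


runs = (
    [{"prompt_version": "v1", "success": s} for s in (True, True, True, False)]
    + [{"prompt_version": "v2", "success": s} for s in (True, False, False, False)]
)
```

With samples this small a drop can be noise; in practice you would want enough runs per version before acting on the comparison.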

Best For: Developers and Product Managers who need to debug agent behavior and iterate on "System Prompts" to improve success rates.

4. Vercel

Vercel has moved aggressively into the AI space with its AI SDK and integrated AI Gateway. Their approach is unique because they focus heavily on the client-side and edge experience, making them the go-to for user-facing agent applications that require low latency and rich interactivity.

Key Differentiator: The Data Stream Protocol & Generative UI. Unlike backend-heavy gateways that return raw JSON, Vercel’s architecture is designed for the frontend. Their Data Stream Protocol allows agents to stream text, tool calls, and even UI updates in a single connection. This enables Generative UI, where an agent doesn’t just text you the weather but streams a fully interactive React component (e.g., a weather widget) directly to the user's screen. This solves the "latency perception" problem, keeping users engaged while the agent thinks.
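The core idea of streaming several kinds of output over one connection can be sketched with newline-delimited JSON parts that the client dispatches by `type`. The part names (`text`, `tool-call`, `ui`) and the `WeatherCard` component are hypothetical; this is not the actual wire format of Vercel's Data Stream Protocol.

```python
import json
from typing import Iterator


def agent_stream() -> Iterator[dict]:
    # Hypothetical sequence of parts an agent might emit while answering.
    yield {"type": "text", "delta": "Let me check the weather. "}
    yield {"type": "tool-call", "toolName": "getWeather", "args": {"city": "Tokyo"}}
    yield {"type": "ui", "component": "WeatherCard", "props": {"city": "Tokyo", "tempC": 21}}
    yield {"type": "text", "delta": "Here is the forecast."}


def encode(parts) -> Iterator[str]:
    """Server side: serialize each part as one JSON line on the same connection."""
    for part in parts:
        yield json.dumps(part) + "\n"


def decode(lines):
    """Client side: handle each part by type as soon as it arrives."""
    text, widgets = [], []
    for line in lines:
        part = json.loads(line)
        if part["type"] == "text":
            text.append(part["delta"])
        elif part["type"] == "ui":
            widgets.append(part["component"])  # a real client would render this component
    return "".join(text), widgets
```

Because parts arrive incrementally, the client can show text and mount the widget while later parts are still being generated, which is what hides the agent's latency from the user.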

Agentic Features:

- Agent-First Architecture (AI SDK 6): Vercel’s latest SDK update introduces specific primitives for agents, including ToolLoopAgent for automated multi-step reasoning. It standardizes how agents discover and execute tools, removing the boilerplate code typically needed to wire LLMs to APIs.

- Human-in-the-Loop Approvals: A critical feature for safety, Vercel allows developers to gate specific tool executions (e.g., "Delete Database" or "Send Email") behind a human approval step. The agent pauses its execution flow until a user confirms the action via the UI, blending autonomy with necessary oversight.

- Edge Caching: Leveraging their global edge network, Vercel caches common agent queries close to the user. If multiple users ask an agent for the same data analysis, the result is served instantly from the edge, bypassing expensive model inference costs.

- Workflow Development Kit: For long-running agent tasks that might take minutes or hours (e.g., "Research this company"), Vercel provides a Workflow Kit. This ensures durability: if an agent crashes or a function times out, the system automatically retries from the last successful step rather than restarting the entire process.
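Step-level durability can be sketched as follows: persist each step's result as soon as it completes, and on restart skip any step already recorded. Here a local JSON file stands in for durable storage and step results must be JSON-serializable; this is a hypothetical illustration, not the Workflow Development Kit's API.

```python
from __future__ import annotations

import json
from pathlib import Path
from typing import Any, Callable


def run_workflow(steps: list[tuple[str, Callable[[dict], Any]]], checkpoint: Path) -> dict:
    """Run named steps in order; after a crash, resume after the last step that succeeded."""
    done = json.loads(checkpoint.read_text()) if checkpoint.exists() else {}
    for name, fn in steps:
        if name in done:
            continue  # finished before the crash: reuse the stored result
        done[name] = fn(done)  # each step sees the results of earlier steps
        checkpoint.write_text(json.dumps(done))  # persist before moving on
    return done
```

Writing the checkpoint after every step costs some I/O, but it means a timeout only repeats the step that was in flight rather than the whole research task.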

Best For: Full-stack developers and startups building consumer-facing AI apps (SaaS, B2C agents) who want a seamless "Code-to-Production" workflow with zero infrastructure management.

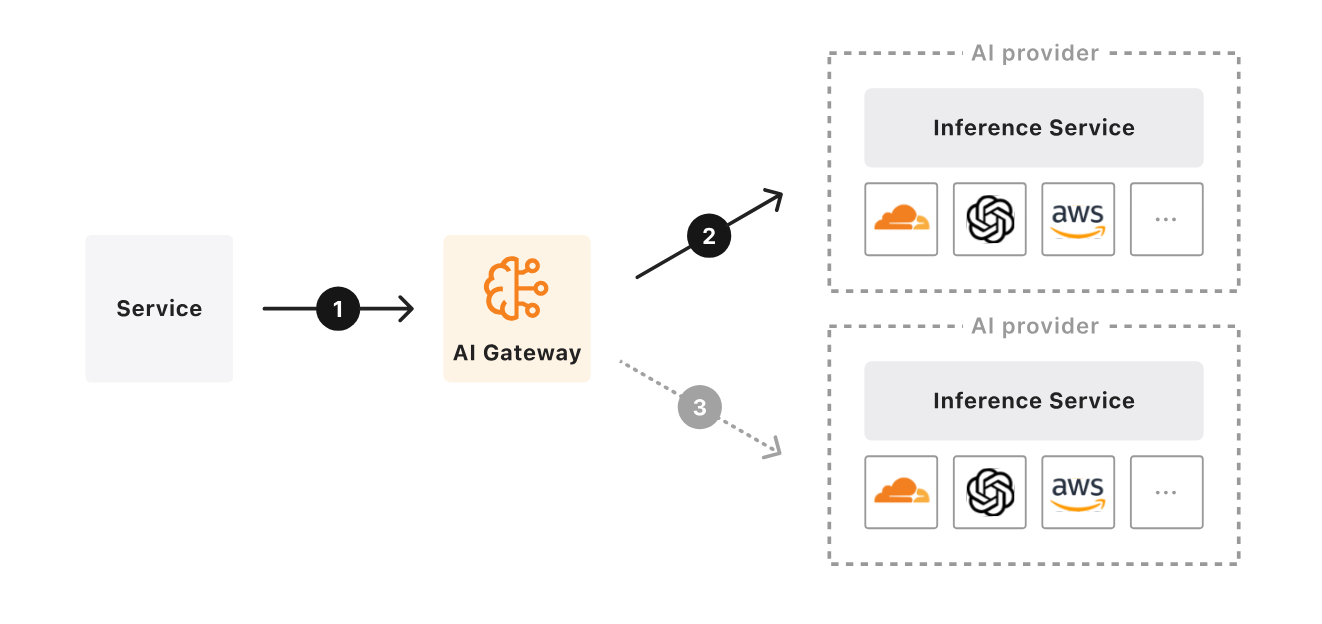

5. Cloudflare

Cloudflare has quietly built one of the most powerful ecosystems for agents, leveraging its global network. They are a standout choice for 2025 because they address the hardest problem in agent engineering: State.

Key Differentiator: Durable Objects & Remote MCP. Cloudflare uses a technology called Durable Objects to provide a distinct "state" for every agent. This means your agent isn't just a script running in the cloud; it's a persistent entity that "lives" on the network, remembering user context instantly without needing to query a slow centralized database.
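The pattern behind Durable Objects, one addressable stateful instance per agent whose requests are handled one at a time, can be approximated in plain Python as below. This is an in-memory, single-process sketch with hypothetical class names; Cloudflare's actual Durable Objects also persist their storage across restarts, which this code does not.

```python
import threading


class AgentState:
    """One instance per agent ID; calls on the same instance are serialized."""

    def __init__(self, agent_id: str) -> None:
        self.agent_id = agent_id
        self.history: list = []
        self._lock = threading.Lock()

    def handle(self, user_message: str) -> str:
        with self._lock:  # single writer per agent
            self.history.append({"role": "user", "content": user_message})
            turn = sum(1 for m in self.history if m["role"] == "user")
            # Placeholder for an LLM call that would use self.history as context.
            reply = f"turn {turn}: remembered {turn - 1} earlier message(s)"
            self.history.append({"role": "assistant", "content": reply})
            return reply


class StateDirectory:
    """Routes every request for a given agent ID to the same AgentState instance."""

    def __init__(self) -> None:
        self._objects: dict = {}
        self._lock = threading.Lock()

    def get(self, agent_id: str) -> AgentState:
        with self._lock:
            if agent_id not in self._objects:
                self._objects[agent_id] = AgentState(agent_id)
            return self._objects[agent_id]
```

Since every request for an agent lands on the same instance, its context is already in memory and no database round trip is needed to recall earlier turns.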

Agentic Features:

- Remote MCP Servers: Cloudflare allows you to deploy MCP servers (tools) directly on their Workers platform. The Gateway then manages the secure connection between your LLM and these remote tools, handling authentication (OAuth) automatically.

- The Agents SDK: They provide a specialized SDK designed to build "stateful" agents. This SDK handles the complexity of saving conversation history and managing long-running tasks (e.g., an agent that needs to wait 2 hours for a file to process).

- Global Scale: Because it runs on Cloudflare’s network, your Agent Gateway is present in 300+ cities worldwide. An agent interacting with a user in Tokyo runs in Tokyo, minimizing the latency of every thought-step.

Best For: Developers building high-performance, stateful agents that need to scale to millions of users without managing complex database infrastructure for "memory."

Conclusion

As AI agents become the execution layer of enterprise workflows, the infrastructure supporting them must evolve beyond simple prompt routing. The era of LLM Gateways is giving way to Agent Gateways — systems built not just to serve models, but to orchestrate decision-making, tool usage, and secure multi-step operations across a growing AI ecosystem.

Choosing the right Agent Gateway is no longer a matter of preference; it's a strategic decision that impacts cost, security, governance, and velocity. While open-source tooling like LiteLLM caters well to local experimentation, and platforms like Vercel optimize for latency and simplicity, they fall short in handling the enterprise-grade complexities of agent ecosystems at scale.

TrueFoundry offers the most complete answer to this challenge. With its unified gateway architecture, governed registry of tools (via MCP), granular access controls, and production-ready observability, it empowers teams to safely scale from prototype agents to enterprise automation. It’s not just about making AI work — it’s about making AI governable, auditable, and operationally sound.
