Multi-Cloud GPU Orchestration: Integrating Specialized Clouds with TrueFoundry

Compute availability is the primary bottleneck for training LLMs and scaling high-throughput inference. If you have tried to provision Amazon EC2 P5 instances or Azure ND H100 v5 VMs lately, you have likely hit InsufficientInstanceCapacity errors or been told you need a multi-year private pricing agreement.

This scarcity makes specialized GPU providers such as CoreWeave, Lambda Labs, and FluidStack viable alternatives. These "Neo-Clouds" offer NVIDIA H100s and A100s, often at lower on-demand rates than the three major hyperscalers (AWS, Azure, and Google Cloud).

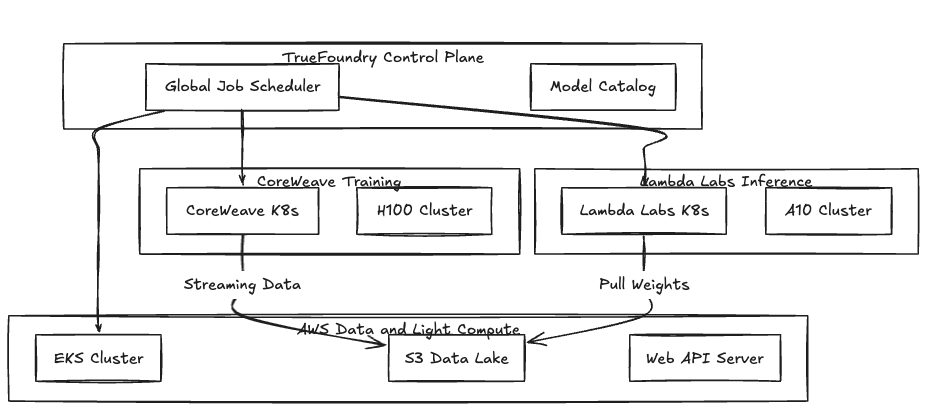

The problem? Keeping your data lake in Amazon S3 while manually spinning up bare-metal nodes at Lambda Labs creates fragmented workflows. We solve this by treating specialized clouds as standard Kubernetes clusters within a unified control plane.

The Architecture: Bring Your Own Cluster (BYOC)

TrueFoundry uses a split-plane architecture. The control plane handles job scheduling and experiment tracking, while the compute plane stays in your environment. Since most specialized clouds provide a managed Kubernetes service or allow you to deploy K3s, we attach them via a standard agent.

- The Compute Plane: Provision a cluster on the provider (e.g., a CoreWeave namespace or Lambda GPU instance).

- The Agent: You install the TrueFoundry Agent via Helm.

- The Integration: The cluster joins your dashboard alongside Amazon EKS or Azure AKS.

We abstract the storage and ingress. Whether the provider uses Vast Data or local NVMe RAID, we map it to a PersistentVolumeClaim. This keeps your Docker containers portable across providers.
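As a rough illustration of that mapping, the sketch below builds the same PersistentVolumeClaim for two clusters and varies only the StorageClass. The manifest fields are standard Kubernetes; the cluster names, the StorageClass names, and the `pvc_manifest` helper are assumptions made for this example, not TrueFoundry's actual implementation.

```python
import json

# Hypothetical mapping from cluster name to a StorageClass available on it.
# Real names depend on what each provider installs.
STORAGE_CLASS_BY_CLUSTER = {
    "aws-eks-prod": "gp3",
    "coreweave-h100": "shared-nvme",
    "lambda-h100-pool": "local-nvme",
}


def pvc_manifest(name: str, cluster: str, size: str = "500Gi") -> dict:
    """Return a PersistentVolumeClaim manifest for the given cluster."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Only this field changes between providers.
            "storageClassName": STORAGE_CLASS_BY_CLUSTER[cluster],
            "resources": {"requests": {"storage": size}},
        },
    }


aws_pvc = pvc_manifest("training-data", "aws-eks-prod")
lambda_pvc = pvc_manifest("training-data", "lambda-h100-pool")
print(json.dumps(lambda_pvc, indent=2))
```

Because the container only ever sees the claim name (`training-data` here), the image and its mount paths stay identical on every provider.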

Fig 1: Hybrid topology utilizing AWS for data persistence and specialized clouds for GPU-intensive workloads.

Technical Advantages of the Hybrid Model

1. Cost Management and Failover

On-demand H100 prices vary significantly across providers. We use TrueFoundry to set up prioritized queues. You can target cheap, interruptible capacity on specialized clouds first. If the provider preempts the instance or capacity disappears, the scheduler can automatically fail over to a reserved Amazon EC2 instance.
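The priority-then-failover behavior can be sketched in a few lines. This is a simplified model of the idea, not the TrueFoundry scheduler: `Pool`, `CapacityError`, `submit_with_failover`, and the pool names are all hypothetical, and the `submit` callback stands in for whatever actually launches the job.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


class CapacityError(Exception):
    """Raised by the (hypothetical) submit callback when a pool has no GPUs."""


@dataclass(frozen=True)
class Pool:
    name: str      # cluster name as registered in the control plane
    priority: int  # lower value is tried first


def submit_with_failover(
    job: str,
    pools: Sequence[Pool],
    submit: Callable[[str, Pool], None],
) -> Optional[Pool]:
    """Try pools in priority order; return the one that accepted the job."""
    for pool in sorted(pools, key=lambda p: p.priority):
        try:
            submit(job, pool)
            return pool
        except CapacityError:
            continue  # preempted or out of capacity: try the next pool
    return None  # every pool refused the job


def fake_submit(job: str, pool: Pool) -> None:
    # Simulate the interruptible pool having no capacity right now.
    if pool.name == "lambda-h100-pool":
        raise CapacityError("no interruptible H100s available")


pools = [Pool("ec2-p5-reserved", priority=2), Pool("lambda-h100-pool", priority=1)]
chosen = submit_with_failover("llm-finetune", pools, fake_submit)
print(chosen.name)  # prints: ec2-p5-reserved
```

A production scheduler would also need to handle preemption after the job has started, typically by resuming from the latest checkpoint on the fallback pool.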

2. Mitigating Infrastructure Lock-in

Relying on proprietary AI platforms often binds you to a specific cloud’s storage and IAM ecosystem. We package training jobs as standard containers. TrueFoundry handles the Kubernetes CSI drivers for S3 mounting and configures the NVIDIA Container Toolkit environment variables automatically. You move a job from AWS to CoreWeave by updating the cluster_name in your deployment spec.
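To make the retargeting step concrete, here is a minimal sketch that treats a deployment spec as a plain dictionary. Only the `cluster_name` field comes from the text above; every other field, the cluster names, and the `retarget` helper are illustrative assumptions rather than the real TrueFoundry spec schema.

```python
from copy import deepcopy

# Hypothetical deployment spec; the real schema will have more fields.
base_spec = {
    "name": "llm-finetune",
    "image": "registry.example.com/train:latest",
    "cluster_name": "aws-eks-prod",
    "resources": {"gpu_type": "H100", "gpu_count": 8},
}


def retarget(spec: dict, cluster_name: str) -> dict:
    """Return a copy of the spec that points at a different cluster."""
    new_spec = deepcopy(spec)  # leave the original spec untouched
    new_spec["cluster_name"] = cluster_name
    return new_spec


coreweave_spec = retarget(base_spec, "coreweave-h100")

# Everything except the target cluster is unchanged.
changed = {k for k in base_spec if base_spec[k] != coreweave_spec[k]}
print(changed)  # prints: {'cluster_name'}
```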

3. Centralized Observability

Multi-cloud setups usually break logging. We aggregate Prometheus metrics and Grafana dashboards across all clusters. If a training job OOMs on a Lambda Labs node, you see the GPU utilization and system logs in the same UI you use for your production EKS environment.

Workflow: Adding Lambda Labs Capacity

To add specialized capacity, follow these steps:

- Provision: Create your GPU nodes in the provider console.

- Connect: In TrueFoundry, select "Connect Existing Cluster."

- Deploy Agent: Run the following Bash commands:

      helm repo add truefoundry https://truefoundry.github.io/infra-charts/
      helm install tfy-agent truefoundry/tfy-agent \
        --set tenantName=my-org \
        --set clusterName=lambda-h100-pool \
        --set apiKey=<YOUR_API_KEY>

- Tolerations: Specialized providers often taint GPU nodes. You configure the TrueFoundry workspace to apply the required tolerations to all jobs targeted at that cluster.
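The tolerations step amounts to merging a fixed list into every pod spec bound for that cluster. The sketch below shows that merge on a plain dictionary that follows the Kubernetes pod spec layout. The taint key `nvidia.com/gpu` is a common convention but still an assumption here, so check the actual taints with `kubectl describe node`; the `with_tolerations` helper is hypothetical.

```python
# Assumed taint on the provider's GPU nodes; verify against the real cluster.
GPU_TOLERATION = {
    "key": "nvidia.com/gpu",
    "operator": "Exists",
    "effect": "NoSchedule",
}


def with_tolerations(pod_spec: dict, tolerations: list) -> dict:
    """Return a copy of pod_spec with the tolerations added, without duplicates."""
    merged = list(pod_spec.get("tolerations", []))
    for toleration in tolerations:
        if toleration not in merged:
            merged.append(toleration)
    return {**pod_spec, "tolerations": merged}


pod_spec = {"containers": [{"name": "train", "image": "registry.example.com/train:latest"}]}
patched = with_tolerations(pod_spec, [GPU_TOLERATION])
patched_twice = with_tolerations(patched, [GPU_TOLERATION])  # idempotent

print(len(patched_twice["tolerations"]))  # prints: 1
```

Setting this once at the workspace level keeps individual job definitions free of provider-specific scheduling details.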

Comparing Infrastructure Models

Bottom Line

Relying on a single cloud for LLM compute is no longer a viable strategy for high-growth engineering teams. By decoupling the workload definition from the execution venue, you can treat GPUs as a commodity. Route your heavy training to specialized clouds for efficiency while keeping your core data and services in your primary hyperscale region.

